Overview of strong human intelligence amplification methods

How can we make many humans who are very good at solving difficult problems?

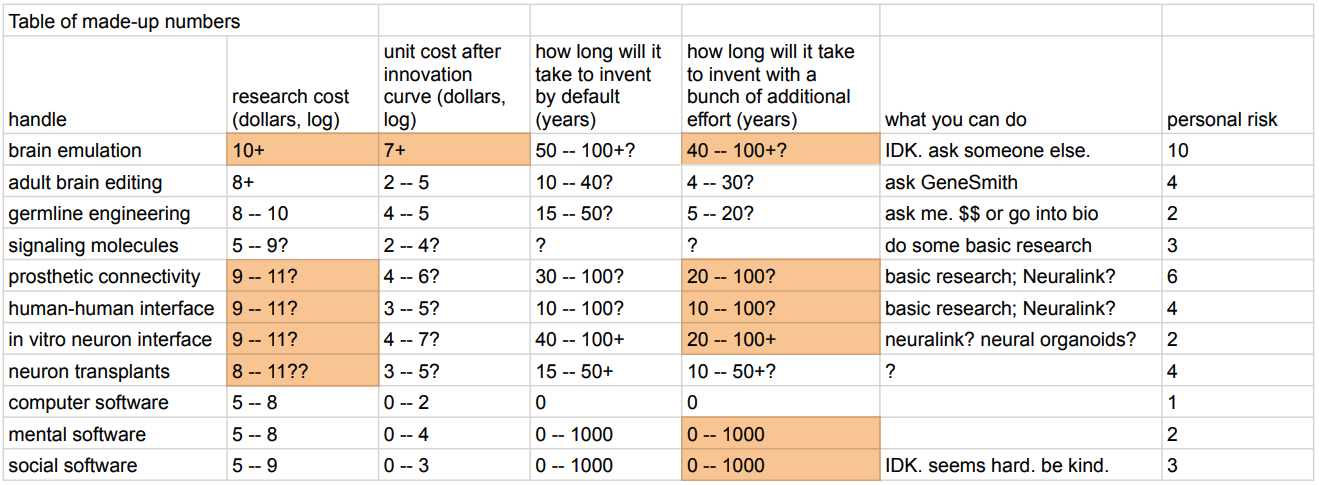

Summary (table of made-up numbers)

I made up the made-up numbers in this table of made-up numbers; therefore, the numbers in this table of made-up numbers are made-up numbers.

Call to action

If you have a shitload of money, there are some projects you can give money to that would make supergenius humans on demand happen faster. If you have a fuckton of money, there are projects whose creation you could fund that would greatly accelerate this technology.

If you’re young and smart, or are already an expert in stem cell / reproductive biology, biotech, or anything related to brain-computer interfaces, there are some projects you could work on.

If neither, think hard, maybe I missed something.

You can DM me or gmail me at tsvibtcontact.

Context

The goal

What empowers humanity is the ability of humans to notice, recognize, remember, correlate, ideate, tinker, explain, test, judge, communicate, interrogate, and design. To increase human empowerment, improve those abilities by improving their source: human brains.

AGI is going to destroy the future’s promise of massive humane value. To prevent that, create humans who can navigate the creation of AGI. Humans alive now can’t figure out how to make AGI that leads to a humane universe.

These are desirable virtues: philosophical problem-solving ability, creativity, wisdom, taste, memory, speed, cleverness, understanding, judgement. These virtues depend on mental and social software, but can also be enhanced by enhancing human brains.

How much? To navigate the creation of AGI will likely require solving philosophical problems that are beyond the capabilities of the current population of humans, given the available time (some decades). Six standard deviations is 1 in 10^9, seven standard deviations is 1 in 10^12. So the goal is to create many people who are 7 SDs above the mean in cognitive capabilities. That’s “strong human intelligence amplification”. (Why not more SDs? There are many downside risks to changing the process that creates humans, so going further is an unnecessary risk.)
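As a rough check on those rarity figures, here is a minimal sketch that computes the upper-tail probability of a standard normal distribution. It assumes cognitive capability is exactly normally distributed, which is an idealization (real tails may be fatter or thinner); the function name `upper_tail` is just illustrative.

```python
import math

def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal variable Z (normality is an assumption)."""
    return 0.5 * math.erfc(z / math.sqrt(2))

# Rarity of being at least z standard deviations above the mean.
for z in (6, 7):
    p = upper_tail(z)
    print(f"{z} SDs: about 1 in {1 / p:.2e}")
```

Under that assumption, 6 SDs comes out at roughly 1 in 10^9 and 7 SDs at a bit under 1 in 10^12, so the round numbers above are order-of-magnitude figures.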

It is my conviction that this is the only way forward for humanity.

Constraint: Algernon’s law

Algernon’s law: If there’s a change to human brains that human-evolution could have made, but didn’t, then it is net-neutral or net-negative for inclusive relative genetic fitness. If intelligence is ceteris paribus a fitness advantage, then a change to human brains that increases intelligence must either come with other disadvantages or else be inaccessible to evolution.

Ways around Algernon’s law, increasing intelligence anyway:

We could apply a stronger selection pressure than human-evolution applied. The selection pressure that human-evolution applied to humans is capped (somehow) by the variation of IGF among all germline cells. So it can only push down mutational load to some point.

Maybe (recent, perhaps) human-evolution selected against intelligence beyond some point.

We could come up with better design ideas for mind-hardware.

We could use resources that evolution didn’t have. We have metal, wires, radios, basically unlimited electric and metabolic power, reliable high-quality nutrition, mechanical cooling devices, etc.

Given our resources, some properties that would have been disadvantages are no longer major disadvantages. E.g. a higher metabolic cost is barely a meaningful cost.

We have different values from evolution; we might want to trade away IGF to gain intelligence.

How to know what makes a smart brain

Figure it out ourselves

We can test interventions and see what works.

We can think about what, mechanically, the brain needs in order to function well.

We can think about thinking and then think of ways to think better.

Copy nature’s work

There are seven billion natural experiments, juz runnin aroun doin stuff. We can observe the behaviors of the humans and learn what circumstances of their creation lead to fewer or more cognitive capabilities.

We can see what human-evolution invested in, aimed at cognitive capabilities, and add more of that.

Brain emulation

The approach

Method: figure out how neurons work, scan human brains, make a simulation of a scanned brain, and then use software improvements to make the brain think better.

The idea is to have a human brain, but with the advantages of being in a computer: faster processing, more scalable hardware, more introspectable (e.g. read access to all internals, even if they are obscured; computation traces), reproducible computations, A/B testing components or other tweaks, low-level optimizable, process forking. This is a “figure it out ourselves” method: we’d have to figure out what makes the emulated brain smarter.

Problems

- While we have some handle on the fast (<1 second) processes that happen in a neuron, no one knows much about the slow (>5 second) processes. The slow processes are necessary for what we care about in thinking. People working on brain emulation mostly aren’t working on this problem because they have enough problems as it is.

- Experiments here, the sort that would give 0-to-1 end-to-end feedback about whether the whole thing is working, would be extremely expensive; and unit tests are much harder to calibrate (what reference to use?).

- Partial success could constitute a major AGI advance, which would be extremely dangerous. Unlike most of the other approaches listed here, brain emulations wouldn’t be hardware-bound (skull-size bound).

- The potential for value drift (making a human-like mind with altered / distorted / alien values) is much higher here than with the other approaches. This might be especially selected for: subcortical brain structures, which are especially value-laden, are more physiologically heterogeneous than cortical structures, and therefore would require substantially more scientific work to model accurately. Further: because the emulation approach is based on copying as much as possible and then filling in details by seeing what works, many details will be filled in by non-humane processes (as opposed to the shaping processes in normal human childhood).

Fundamentally, brain emulations are a 0-to-1 move, whereas the other approaches take a normal human brain as the basic engine and then modify it in some way. The 0-to-1 approach is more difficult, more speculative, and riskier.

Genomic approaches

These approaches look at the 7 billion natural experiments and see which genetic variants correlate with intelligence. IQ is a very imperfect but measurable and sufficient proxy for problem-solving ability. Since, by common estimates, genetic variation explains >70% of the variance in adult IQ, we can extract a lot of what nature knows about what makes brains have many capabilities. We can’t get that knowledge about capable brains in a form usable as engineering (to build a brain from scratch), but we can at least get it in a form usable as scores (which genomes make brains with fewer or more capabilities). These are “copy nature’s work” approaches.

Adult brain gene editing

The approach

Method: edit IQ-positive variants into the brain cells of adult humans.

See “Significantly Enhancing …”.

Problems

- Delivery is difficult.

- Editors damage DNA.

- The effect is greatly attenuated, compared to germline genetics. In adulthood, learning windows have been passed by; many genes are no longer active; damage that accumulates has already been accumulated; many cells don’t receive the edits. This adds up to an optimistic ceiling somewhere around +2 or +3 SDs.

Germline engineering

This is the way that will work. (Note that there are many downside risks to germline engineering, though AFAICT they can be alleviated to such an extent that the tradeoff is worth it by far.)

The approach

Method: make a baby from a cell that has a genome that has many IQ-positive genetic variants.

Subtasks:

- Know what genome would produce geniuses. This is already solved well enough. Because there are already polygenic scores for IQ that explain >12% of the observed variance in IQ (pgscatalog.org/score/PGS003724/), 10 SDs of raw selection power would translate into trait selection power at a rate greater than √(1/9) = 1⁄3, giving >3.3 SDs of IQ trait selection power, i.e. +50 IQ points.

- Make a cell with such a genome. This is probably not that hard: via CRISPR editing stem cells, via iterated meiotic selection (IMS), or via chromosome selection. My math and simulations show that several methods would achieve strong intelligence amplification. If induced meiosis into culturable cells is developed, IMS can provide >10 SDs of raw selection power given very roughly $10^5 and a few months.

- Know what epigenomic state (in sperm / egg / zygote) leads to healthy development. This is not fully understood; it’s an open problem that can be worked on.

- Given a cell, make a derived cell (diploid mitotic or haploid meiotic offspring cell) with that epigenomic state. This is not fully understood; it’s an open problem that can be worked on. This is the main bottleneck.

These tasks don’t necessarily completely factor out. For example, some approaches might try to “piggyback” off the natural epigenomic reset by using chromosomes from natural gametes or zygotes, which will have the correct epigenomic state already.
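The arithmetic in the first subtask can be sketched as follows. This is a back-of-the-envelope illustration, not the author’s actual math or simulations. It assumes a simple linear model in which a polygenic score explaining a fraction R² of trait variance correlates with the trait at r = √R², so that shifting the score by k SDs shifts the expected trait by r·k SDs; it also assumes the conventional 15 IQ points per SD. The function name `trait_gain_sd` is hypothetical.

```python
import math

IQ_POINTS_PER_SD = 15  # conventional IQ scaling (assumption)

def trait_gain_sd(variance_explained: float, raw_selection_sd: float) -> float:
    """Expected trait shift in SDs under a linear model: sqrt(R^2) * k."""
    return math.sqrt(variance_explained) * raw_selection_sd

# Figures from the text: a score explaining 12% of variance, 10 SDs of raw selection.
gain_sd = trait_gain_sd(0.12, 10.0)
gain_iq = gain_sd * IQ_POINTS_PER_SD
print(f"trait gain: {gain_sd:.2f} SDs, about {gain_iq:.0f} IQ points")
```

Because 0.12 is slightly more than 1/9, the conversion rate is slightly more than 1⁄3, which is where the “>3.3 SDs” and “+50 IQ points” lower bounds come from.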

See also Branwen, “Embryo Selection …”.

More information on request. Some of the important research is happening, but there’s always room for more funding and talent.

Problems

- It takes a long time; the baby has to grow up. (But we probably have time, and delaying AGI only helps if you have an out.)

- Correcting the epigenomic state of a cell to be developmentally competent is unsolved.

- The baby can’t consent, unlike with other approaches, which work with adults. (But the baby can also be made genomically disposed to be exceptionally healthy and sane.)

- It’s the most politically contentious approach.

Signaling molecules for creative brains

The approach

Method: identify master signaling molecules that control brain areas or brain developmental stages that are associated with problem-solving ability; treat adult brains with those signaling molecules.

Due to evolved modularity, organic systems are governed by genomic regulatory networks. Maybe we can isolate and artificially activate GRNs that generate physiological states that produce cognitive capabilities not otherwise available in a default adult’s brain. The hope is that there’s a very small set of master regulators that can turn on larger circuits with strong orchestrated effects, as is the case with hormones, so that treatments are relatively simple, high-leverage, and discoverable. For example, maybe we could replicate the signaling context that activates childish learning capabilities, or maybe we could replicate the signaling context that activates parietal problem-solving in more brain tissue.

I haven’t looked into this enough to know whether or not it makes sense. This is a “copy nature’s work” approach: nature knows more about how to make brains that are good at thinking, than what is expressed in a normal adult human.

Problems

- Who knows what negative effects might result.

- Learning windows might be irreversibly lost after childhood, e.g. by long-range connections being irrecoverably pruned.

Brain-brain electrical interface approaches

Brain-computer interfaces don’t obviously give an opportunity for large increases in creative philosophical problem-solving ability. See the discussion in “Prosthetic connectivity”. The fundamental problem is that we, programming the computer part, don’t know how to write code that does transformations that will be useful for neural minds.

But brain-brain interfaces (adding connections between brain tissues that normally aren’t connected) might increase those abilities. These approaches use electrodes to read electrical signals from neurons, then transmit those signals (perhaps compressed/filtered/transformed) through wires / fiber optic cables / EM waves, then write them to other neurons through other electrodes. These are “copy nature’s work” approaches, in the sense that we think nature made neurons that know how to arrange themselves usefully when connected with other neurons.

Problems with all electrical brain interface approaches

The butcher number. Current electrodes kill more neurons than they record. That doesn’t scale safely to millions of connections.

Bad feedback. Neural synapses are not strictly feedforward; there is often reciprocal signaling and regulation. Electrodes wouldn’t communicate that sort of feedback, which might be important for learning.

Massive cerebral prosthetic connectivity

Source: https://www.neuromedia.ca/white-matter/

Half of the human brain is white matter, i.e. neuronal axons with fatty sheaths around them to make them transmit signals faster. White matter is ~1/10 the volume of rodent brains, but ~1/2 the volume of human brains. Wiring is expensive and gets minimized; see “Principles of Neural Design” by Sterling and Laughlin. All these long-range axons are a huge metabolic expense. That means fast, long-range, high bandwidth (so to speak——there are many different points involved) communication is important to cognitive capabilities. See here.

A better-researched comparison would be helpful. But vaguely, my guess is that if we compare long-range neuronal axons to metal wires, fiber optic cables, or EM transmissions, we’d see (amortized over millions of connections): axons are in the same ballpark in terms of energy efficiency, but slower, lower bandwidth, and more voluminous. This leads to:

Method: add many millions of read-write electrodes to several brain areas, and then connect them to each other.

See “Prosthetic connectivity” for discussion of variants and problems. The main problem is that current brain implants furnish <10^4 connections, but >10^6 would probably be needed to have a major effect on problem-solving ability, and electrodes tend to kill neurons at the insertion site. I don’t know how to accelerate this, assuming that Neuralink is already on the ball well enough.

Human / human interface

Method: add many thousands of read-write electrodes to several brain areas in two different brains, and then connect them to each other.

If one person could think with two brains, they’d be much smarter. Two people connected is not the same thing, but could get some of the benefits. The advantages of an electric interface over spoken language are higher bandwidth, lower latency, less cost (producing and decoding spoken words), and potentially more extrospective access (direct neural access to inexplicit neural events). But it’s not clear that there should be much qualitative increase in philosophical problem-solving ability.

A key advantage over prosthetic connectivity is that the benefits might require a couple of orders of magnitude fewer connections. That alone makes this method worth trying, as it will probably be feasible soon.

Interface with brain tissue in a vat

Method: grow neurons in vitro, and then connect them to a human brain.

The advantage of this approach is that it would in principle be scalable. The main additional obstacle, beyond any neural-neural interface approaches, is growing cognitively useful tissue in vitro. This is not completely out of the question (see “DishBrain”), but who knows if it would be feasible.

Massive neural transplantation

The approach

Method: grow >10^8 neurons (or appropriate stem cells) in vitro, and then put them into a human brain.

There have been some experiments along these lines, at a smaller scale, aimed at treating brain damage.

The idea is simply to scale up the brain’s computing wetware.

Problems

It would be a complex and risky surgery.

We don’t know how to make high-quality neurons in vitro.

The arrangement of the neurons might be important, and would be harder to replicate. Using donor tissue might fix this, but becomes more gruesome and potentially risky.

It might be difficult to get transplanted tissue to integrate. There’s at least some evidence that human cerebral organoids can integrate into mouse brains.

Problem-solving might be bottlenecked on long-range communication rather than neuron count.

Support for thinking

Generally, these approaches try to improve human thinking by modifying the algorithm-like elements involved in thinking. They are “figure it out ourselves” approaches.

The approaches

There is external support:

Method: create artifacts that offload some elements of thinking to a computer or other external device.

E.g. the printing press, the text editor, the search engine, the typechecker.

There is mental software:

Method: create methods of thinking that improve thinking.

E.g. the practice of mathematical proof, the practice of noticing rationalization, the practice of investigating boundaries.

There is social software:

Method: create methods of social organization that support and motivate thinking.

E.g. a shared narrative in which such-and-such cognitive tasks are worth doing, the culture of a productive research group.

Method: create methods of social organization that constitute multi-person thinking systems.

E.g. git.

Problems

The basic problem is that the core activity, human thinking, is not visible or understood. As a consequence, problems and solutions can’t be shared / reproduced / analysed / refactored / debugged. Philosophers couldn’t even keep paying attention to the question. There are major persistent blind spots around important cognitive tasks that have bad feedback.

Solutions are highly context dependent: they depend on variables that aren’t controlled by the technology being developed. This adds to the unscalability of these solutions.

The context contains strong adversarial memes, which limits these properties of solutions: speed (onboarding time), scope (how many people), mental energy budget (fraction of each person’s energy), and robustness (stability over time and context).

FAQ

What about weak amplification

Getting rid of lead poisoning should absolutely be a priority. It won’t greatly increase humanity’s maximum intelligence level though.

What about …

BCIs? weaksauce

Nootropics? weaksauce

Brain training? weaksauce

Transplanting bird neurons? Seems risky and unlikely to work.

Something something bloodflow? weaksauce

Transcranial magnetic stimulation? IDK, probably weaksauce. This is a “counting up from negative to zero” thing; might remove inhibitions or trauma responses, or add useful noise that breaks anti-helpful states, or something. But it won’t raise the cap on insight, probably; people sometimes get to have their peak problem solving anyway.

Ultrasound? ditto

Neurofeedback? Possibly… seems like a better bet than other stuff like this, but probably weaksauce.

Getting good sleep? weaksauce——good but doesn’t make supergeniuses

Gut microbiome? weaksauce

Mnemonic systems? weaksauce

Software exobrain? weaksauce

LLMs? no

Psychedelics? stop

Buddhism? Aahhh, I don’t think you get what this is about

Embracing evil? go away

Rotating armodafinil, dextromethorphan, caffeine, nicotine, and lisdexamfetamine? AAHHH NOOO

[redacted]? Absolutely not. Go sit in the corner and think about what you were even thinking of doing.

The real intelligence enhancement is …

Look, I’m all for healing society, healing trauma, increasing collective consciousness, creating a shared vision of the future, ridding ourselves of malign egregores, blah blah. I’m all for it. But it’s a difficult, thinky problem. …So difficult that you might need some good thinking help with that thinky problem...

Is this good to do?

Yeah, probably. There are many downside risks, but the upside is large and the downsides can be greatly alleviated.

Curated. Augmenting human intelligence seems like one of the most important things-to-think-about this century. I appreciated this post’s taxonomy.

I appreciate that the made-up graph of made-up numbers that Tsvi made up is clearly labeled as such.

I have a feeling that this post could be somewhat more thorough, maybe with more links to the places where someone could follow up on the technical bits of each thread.

.

The point of made-up numbers is that they are a helpful tool for teasing out some implicit information from your intuitions, which is often better than not doing that at all. But it’s important that they are useful in a pretty different way from numbers-you-empirically-got-from-somewhere, and thus it’s important that they be clearly labeled as made-up numbers that Tsvi made up.

See: If it’s worth doing, it’s worth doing with Made Up Statistics

.

Why do you think the table is the most important thing in the article?

A different thing Tsvi could have done was say “here’s my best guess of which of these are most important, and my reasoning why”, but this would have been essentially the same thing as the table + surrounding essay, with somewhat less fidelity about what his guesses were for the ranking.

Meanwhile I think the most important thing was laying out all the different potential areas of investigation, which I can now reason about on my own.

.

First, reiterating, the most important bit here is the schema, and drawing attention to this as an important area of further work.

Second, I think calling it “baseless speculation” is just wrong. Given that you’re jumping to a kinda pejorative framing, it looks like your mind is kinda made up and I don’t feel like arguing with you more. I don’t think you actually read the Scott article in a way that was really listening to it and considering the implications.

But, since I think the underlying question of “what is LessWrong curated for” is nuanced and not clearly spelled out, I’ll go spell that out for the benefit of everyone just tuning in.

Model 1: LessWrong as “full intellectual pipeline, from ‘research office watercooler’ to ‘published’”

The purpose of LW curated is not to be a peer reviewed journal, and the purpose of LW is not to have quite the same standards as published academic work. Instead, I think of LW as tackling “the problem that academia is solving” through a somewhat different lens, which includes many of the same pieces but organizes them differently.

What you see in a finished, published journal article is the very end of a process, and it’s not where most of the generativity happens. Most progress is happening in conversations around watercoolers at work, slack channels, conference chit-chat, etc.

LW curation is not “published peer review.” The LessWrong Review is more aspiring to be that (I also think The Review fails at achieving all my goals with “the good parts of peer review,” although it achieves other goals, and I have thoughts on how to improve it on that axis).

But the bar for curated is something like “we’ve been talking about this around the watercooler for weeks, the people involved in the overall conversation have found this a useful concept and they are probably going to continue further research that builds on this and eventually you will see some more concrete output.”

In this case, the conversation has already been ongoing awhile, with posts like Significantly Enhancing Adult Intelligence With Gene Editing May Be Possible (another curated post, which I think is more “rigorous” in the classical sense).

I don’t know if there’s a good existing reference post for “here in detail is the motivation for why we want to do human intelligence enhancement and make it a major priority.” Tsvi sort of briefly discusses that here but mostly focuses on “where might we want to focus, given this goal.”

Model 2: “Review” is an ongoing process.

One way you can do science is to do all of the important work in private, and then publish at the end. That is basically just not how LW is arranged. The whole idea here is to move the watercooler to the public area, and handle the part where “ideas we talk about at the watercooler are imprecise and maybe wrong” with continuous comment-driven review and improving our conversational epistemics.

I do think the bar for curated is “it’s been at least a few days, the arguments in the post make sense to me (the curator), and nobody has raised major red flags about the overall thrust of the post.” (I think this post meets that bar.)

I want people to argue about both the fiddly details of the post and the overall frame of the post. The way you argue that is by making specific claims about why the post’s details are wrong, or incomplete, or why the post’s framing is pointed in the wrong direction.

The fact that this post’s framing seems important is more reason to curate it, if we haven’t found first-pass major flaws and I want more opportunity for people to discuss major flaws.

Saying “this post is vague and its made-up numbers aren’t very precise” isn’t adding anything to the conversation (except for providing some scaffold for a meta-discussion on LW site philosophy, which is maybe useful to do periodically since it’s not obvious at a glance).

Revisiting the guesswork / “baseless speculation” bit

If a group of researchers have a vein they have been discussing at the watercooler, and it has survived a few rounds of discussion and internal criticism, and it’ll be awhile before a major legible rigorous output is published:

I absolutely want those researchers’ intuitions and best guesses about which bits are important. Those researchers have some expertise and worldmodels. They could spend another 10-100 hours articulating those intuitions with more precision and backing them up with more evidence. Sometimes it’s correct to do that. But if I want other researchers to be able to pick up the work and run with it, I don’t want them bottlenecked on the first researchers privately iterating another 10-100 hours before sharing it.

I don’t want us to overanchor on those initial intuitions and best guesses. And if you don’t trust those researchers’ intuitions, I want you to have an easy time throwing them out and thinking about them from scratch.

Basically what Raemon said. I wanted to summarize my opinions, give people something to disagree with (both the numbers and the rubric), highlight what considerations seem important to me (colored fields); but the numbers are made up (because they are predictions, which are difficult; and they are far from fully operationalized; and they are about a huge variety of complex things, so would be difficult to evaluate; and I’ve thought hard about some of the numbers, but not about most of them). It’s better than giving no numbers, no?

.

FYI I do think the downside of “people may anchor off the numbers” is reasonable to weigh in the calculus of epistemic-community-norm-setting.

I would frame the question: “is the downside of people anchoring off potentially-very-off-base numbers worse than the upside of having intuitions somewhat more quantified, with more gears exposed?”. I can imagine that question resolving as “actually yeah it’s net negative”, but if you’re treating the upside as “zero” I think you’re missing some important stuff.

As someone who spent a few years researching this direction intensely before deciding to go work on AI alignment directly (the opposite direction you’ve gone!), I can’t resist throwing in my two cents.

I think germline engineering could do a lot, if we had multiple generations to work with. As I’ve told you, I don’t think we have anything near enough time for a single generation (much less five or ten).

I think direct brain tissue implantation is harder even than you imagine. Getting the neurons wired up right in an adult brain is pretty tricky. Even when people do grow new axons and dendrites after an injury to replace a fraction of their lost tissue, this sometimes goes wrong and makes things worse. Misconnected neurons are more of a problem than too few neurons.

I think there’s a lot more potential in brain-computer-interfaces than you are giving them credit for, and an application you haven’t mentioned.

Some things to consider here:

The experiments that have been tried in humans have been extremely conservative, aiming to fix problems in the most well-understood but least-relevant-to-intelligence areas of the brain (sensory input, motor output). In other words, weaksauce by design. We really don’t have any experiments that tell us how much intelligence amplification we could get from having someone’s math/imagination/science/grokking/motivation areas hooked up to a feedback loop with a computer running some sort of efficient brain tissue simulator. I’m guessing that this could actually do some impressive augmentation using current tech (e.g. neuralink). The weak boring non-intelligence-relevant results you see in the literature are very much by design of the scientists, acting in socially reasonable ways to pursue cautious incremental science or timid therapeutics. This is not evidence that the tech itself is actually this limited.

BCI implants have also usually been targeted at having detailed i/o for a very specific area, not the properly distributed i/o you’d want for networking brains together. This is the case both for therapeutic human implants, and for scientific implants where scientists are trying to measure detailed things about a very specific spot in the brain.

For a proper readout, a good example is the webbing of many sensors laid over a large area of cortex in human patients who are being treated for epilepsy. The doctors need to find the specific source of the epileptic cascade so they can treat that particular root cause. Thus, they need to simultaneously monitor many brain regions. What you need is an implant designed more at this multi-area scale.

Growing brain tissue in a vat is relatively hard and expensive compared to growing brain tissue in an animal. Also, it’s going to be less well-ordered neural nets, which matters a lot. Well organized cortical microcolumns work well, disordered brain tissue works much less well.

Given this, I think it makes a lot more sense to focus on animal brains. Pigs have reasonably sized brains for a domestic animal, and are relatively long-lived (compared to mice for example) and easy to produce lots of in a controlled environment. It’s also not that hard to swap in a variety of genes related to neural development to get an animal to grow neurons that are much more similar to human neurons. This has been done a bunch in mice, for various different sets of genes. So, create a breeding line of pigs with humanized neurons, raise up a bunch of them in a secure facility, and give a whole bunch of juveniles BCI implants all at once. (Juvenile is important, since brain circuit flexibility is much higher in young animals.) You probably get something like 10000x as much brain tissue per dollar using pigs as you do using neurons in a petri dish. Also, we already have pig farms that operate at the appropriate scale, but definitely don’t have brain-vat-factories at scale.

Once you have a bunch of test animals with BCI implants into various areas of their brains, you can try many different experiments with the same animals and hardware. You can try making networks where the animals are given boosts from computer models, where many animals are networked together, where there is a ‘controller’ model on the computer which tries to use the animals as compute resources (either separately or networked together). If you also have a human volunteer with BCI implants in the correct parts of their brain, you can have them try to use the pigs.

Whether or not a target subject is controlling or being controlled is dependent on a variety of factors like which areas the signals are being input into versus read from, and the timing thereof. You can have balanced networks, or controller/subject networks.

4. My studies of the compute graph of the brain based on recent human connectome research data (for AI alignment purposes), show that actually you can do everything you need with current 10^4 scale connection implant tech. You don’t need 10^6 scale tech. Why? Because the regions of the brain are themselves connected at 10^2 − 10^4 scale (fewer connections for more distant areas, the most connections for immediately adjacent and highly related areas like V1 to V2). The long range axons that communicate between brain regions are shockingly few. The brain seems to work fine with this level of information compression into and out of all its regions. Thus, if you had say, 6 different implants each with 10^3 − 10^4 connectivity, and had those 6 implants placed in key areas for intelligence (rather than weaksauce sensory or motor areas), that’s probably all you need. It’s common practice to insert 10-15 implants (sometimes even more if needed) into the brain of an epilepsy patient in order to figure out where the surgeons need to cut. The tech is there, and works plenty well.

That’s creative. But:

It seems immoral, maybe depending on details. Depending on how humanized the neurons are, and what you do with the pigs (especially the part where human thinking could get trained into them!), you might be creating moral patients and then maiming and torturing them.

It has a very high ick factor. I mean, I’m icked out by it; you’re creating monstrosities.

I assume it has a high taboo factor.

It doesn’t seem that practical. I don’t immediately see an on-ramp for the innovation; in other words, I don’t see intermediate results that would be interesting or useful, e.g. in an academic or commercial context. That’s in contrast to germline engineering or brain-brain interfaces, which have lots of component technologies and partial successes that would be useful and interesting. Do you see such things here?

Further, it seems far far less scalable than other methods. That means you get way less adoption, which means you get way fewer geniuses. Also, importantly, it means that complaints about inequality become true. With, say, germline engineering, anyone who can lease-to-own a car can also have genetically super healthy, sane, smart kids. With networked-modified-pig-brain-implant-farm-monster, it’s a very niche thing only accessible to the rich and/or well-connected. Or is there a way this eventually results in a scalable strong intelligence boost?

That’s compelling though, for sure.

On the other hand, the quality is going to be much lower compared to human brains. (Though presumably higher quality compared to in vitro brain tissue.) My guess is that quality is way more important in our context. I wouldn’t think so as strongly if connection bandwidth were free; in that case, plausibly you can get good work out of the additional tissue. Like, on one end of the spectrum of “what might work”, with low-quality high-bandwidth, you’re doing something like giving each of your brain’s microcolumns an army of 100 additional, shitty microcolumns for exploration / invention / acceleration / robustness / fan-out / whatever. On the other end, you have high-quality low-bandwidth: multiple humans connected together, and it’s maybe fine that bandwidth is low because both humans are capable of good thinking on their own. But low-quality low-bandwidth seems like it might not help much; it might be similar to trying to build a computer by training pigs to run around in certain patterns.

How important is it to humanize the neurons, if the connections to humans will be remote by implant anyway? Why use pigs rather than cows? (I know people say pigs are smarter, but that’s also more of a moral cost; and I’m wondering if that actually matters in this context. Plausibly the question is really just, can you get useful work out of an animal’s brain, at all; and if so, a normal cow is already “enough”.)

We see pretty significant changes in ability of humans when their brain volume changes only a bit. I think if you can 10x the effective brain volume, even if the additional ‘regions’ are of lower quality, you should expect some dramatic effects. My guess is that if it works at all, you get at least 7 SDs of sudden improvement over a month or so of adaptation, maybe more.

As I explained, I think evidence from the human connectome shows that bandwidth is not an issue. We should be able to supply plenty of bandwidth.

I continue to find it strange that you are so convinced that computer simulations of neurons would be insufficient to provide benefit. I’d definitely recommend that before trying to network animal brains to a human. In that case, you can do quite a lot of experimentation with a lot of different neuronal models and machine learning models as possible boosters for just a single human. It’s so easy to change what program the computer is running, and how much compute you have hooked up. Seems to me you should prove that this doesn’t work before even considering going the animal brain route. I’m confident that no existing research has attempted anything like this, so we have no empirical evidence to show that it wouldn’t work. Again, even if each simulated cortical column is only 1% as effective (which seems like a substantial underestimate to me), we’d be able to use enough compute that we could easily simulate 1000x extra.

Have you watched videos of the first neuralink patient using a computer? He has great cursor control, substantially better than previous implants have been able to deliver. I think this is strong evidence that the implant tech is at acceptable performance level.

I don’t think the moral cost is relevant if the thing you are comparing it to is saving the world, and making lots of human and animal lives much better. It seems less problematic to me than a single ordinary pig farm, since you’d be treating these pigs unusually well. Weird that you’d feel good about letting the world get destroyed in order to have one fewer pig farm in it. Are you reasoning from Copenhagen ethics? That approach doesn’t resonate with me, so maybe that’s why I’m confused.

It is quite impractical. A weird last ditch effort to save the world. It wouldn’t be scalable, you’d be enhancing just a handful of volunteers who would then hopefully make rapid progress on alignment.

To get a large population of people smarter, polygenic selection seems much better. But slow.

The humanization isn’t critical, and it isn’t for the purposes of immune-signature matching. It’s human genes related to neural development, so that the neurons behave more like human neurons (e.g. forming 10x more synapses in the cortex).

Pigs are a better cost-to-brain-matter ratio.

I wasn’t worrying about animal suffering here, like I said above.

Gotcha. Yeah, I think these strategies probably just don’t work.

The moral differences are:

Humanized neurons.

Animals with parts of their brains being exogenously driven; this could cause large amounts of suffering.

Animals with humanized thinking patterns (which is part of how the scheme would be helpful in the first place).

Where did you get the impression that I’d feel good about, or choose, that? My list of considerations is a list of considerations.

That said, I think morality matters, and ignoring morality is a big red flag.

Separately, even if you’re pretending to be a ruthless consequentialist, you still want to track morality and ethics and ickyness, because it’s a very strong determiner of whether or not other people will want to work on something, which is a very strong determiner of success or failure.

Yes, fair enough. I’m not saying that clearly immoral things should be on the table. It just seems weird to me that this is something that seems approximately equivalent to a common human activity (raising and killing pigs) that isn’t widely considered immoral.

FWIW, I wouldn’t expect the exogenous driving of a fraction of cortical tissue to result in suffering of the subjects.

I do agree that having humanized neurons being driven in human thought patterns makes it weird from an ethical standpoint.

My reason is that suffering in general seems related to [intentions pushing hard, but with no traction or hope]. A subspecies of that is [multiple drives pushing hard against each other, with nobody pulling the rope sideways]. A new subspecies would be “I’m trying to get my brain tissue to do something, but it’s being externally driven, so I’m just scrabbling my hands futilely against a sheer blank cliff wall.” and “Bits of my mind are being shredded because I create them successfully by living and demanding stuff of my brain, but the bits are exogenously driven / retrained and forget to do what I made them to do.”

Really hard to know without more research on the subject.

My subjective impression from working with mice and rats is that there isn’t a strong negative reaction to having bits of their cortex stimulated in various ways (electrodes, optogenetics).

Unlike, say, experiments where we test their startle reaction by placing them in a small cage with a motion sensor and then playing a loud startling sound. They hate that!

This is interesting, but I don’t understand what you’re trying to say and I’m skeptical of the conclusion. How does this square with half the brain being myelinated axons? Are you talking about adult brains or child brains? If you’re up for it, maybe let’s have a call at some point.

Half the brain by >>volume<< being myelinated axons. Myelinated axons are extremely volume-wasteful due to their large width over relatively large distances.

I’m talking about adult brains. Child brains have slightly more axons (less pruning and aging loss has occurred), but much less myelination.

Happy to chat at some point.

Yep, I agree. I vaguely alluded to this by saying “The main additional obstacle [...] is growing cognitively useful tissue in vitro.”; what I have in mind is stuff like:

Well-organized connectivity, as you say.

Actually emulating 5-minute and 5-day behavior of neurons—which I would guess relies on being pretty neuron-like, including at the epigenetic level. IIUC current in vitro neural organoids are kind of shitty—epigenetically speaking they’re definitely more like neurons than like hepatocytes, but they’re not very close to being neurons.

Appropriate distribution of cell types (related to well-organized connectivity). This adds a whole additional wrinkle. Not only do you have to produce a variety of epigenetic states, but also you have to have them be assorted correctly (different regions, layers, connections, densities...). E.g. the right amount of glial cells...

Your characterization of the current state of research matches my impressions (though it’s good to hear from someone who knows more). My reasons for thinking BCIs are weaksauce have never been about that, though. The reasons are that:

I don’t see any compelling case for anything you can do on a computer which, when you hook it up to a human brain, makes the human brain very substantially better at solving philosophical problems. I can think of lots of cool things you can do with a good BCI, and I’m sure you and others can think of lots of other cool things, but that’s not answering the question. Do you see a compelling case? What is it? (To be more precise, I do see compelling cases for the few areas I mentioned: prosthetic intrabrain connectivity and networking humans. But those both seem quite difficult technically, and would plausibly be capped in their success by connection bandwidth, which is technically difficult to increase.)

It doesn’t seem like we understand nearly as much about intelligence compared to evolution (in a weak sense of “understand”, that includes stuff encoded in the human genome cloud). So stuff that we’ll program in a computer will be qualitatively much less helpful for real human thinking, compared to just copying evolution’s work. (If you can’t see that LLMs don’t think, I don’t expect to make progress debating that here.)

I think that cortical microcolumns are fairly close to acting in a pretty well stereotyped way that we can simulate pretty accurately on a computer. And I don’t think their precise behavior is all that critical. I think actually you could get 80-90% of the effective capacity by simply having a small (10k? 100k? parameter) transformer standing in for each simulated cortical column, rather than a less compute efficient but more biologically accurate simulation.

The tricky part is just setting up the rules for intercolumn connection (excitatory and inhibitory) properly. I’ve been making progress on this in my research, as I’ve mentioned to you in the past.

Interregional connections (e.g. parietal lobe to prefrontal lobe, or V1 to V2) are fewer, and consistent enough between different people, and involve many fewer total connections, so they’ve all been pretty well described by modern neuroscience. The full weighted directed graph is known, along with a good estimate of the variability on the weights seen between individuals.

It’s not the case that the whole brain is involved in each specific ability that a person has. The human brain has a lot of functional localization. For a specific skill, like math or language, there is some distributed contribution from various areas but the bulk of the computation for that skill is done by a very specific area. This means that if you want to increase someone’s math skill, you probably need to just increase that specific known 5% or so of their brain most relevant to math skill by 10x. This is a lot easier than needing to 10x the entire brain.

I don’t know enough to evaluate your claims, but more importantly, I can’t even just take your word for everything because I don’t actually know what you’re saying without asking a whole bunch of followup questions. So hopefully we can hash some of this out on the phone.

Sorry that my attempts to communicate technical concepts don’t always go smoothly!

I keep trying to answer your questions about ‘what I think I know and how I think I know it’ with dumps of lists of papers. Not ideal!

But sometimes I’m not sure what else to do, so.… here’s a paper!

https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3001575

An estimation of the absolute number of axons indicates that human cortical areas are sparsely connected

Burke Q. Rosen, Eric Halgren

Abstract

The tracts between cortical areas are conceived as playing a central role in cortical information processing, but their actual numbers have never been determined in humans. Here, we estimate the absolute number of axons linking cortical areas from a whole-cortex diffusion MRI (dMRI) connectome, calibrated using the histologically measured callosal fiber density. Median connectivity is estimated as approximately 6,200 axons between cortical areas within hemisphere and approximately 1,300 axons interhemispherically, with axons connecting functionally related areas surprisingly sparse. For example, we estimate that <5% of the axons in the trunk of the arcuate and superior longitudinal fasciculi connect Wernicke’s and Broca’s areas. These results suggest that detailed information is transmitted between cortical areas either via linkage of the dense local connections or via rare, extraordinarily privileged long-range connections.

Wait, are you saying that not only is there quite low long-distance bandwidth, but also relatively low bandwidth between neighboring areas? Numbers would be very helpful.

And if there’s much higher bandwidth between neighboring regions, might there not be a lot more information that’s propagating long-range but only slowly through intermediate areas (or would that be too slow or sth?)?

(Relatedly, how crisply does the neocortex factor into different (specialized) regions? (Like I’d have thought it’s maybe sorta continuous?))

I’m glad you’re curious to learn more! The cortex factors quite crisply into specialized regions. These regions have different cell types and groupings, so were first noticed by early microscope users like Cajal. In a cortical region, neurons are organized first into microcolumns of 80-100 neurons, and then into macrocolumns of many microcolumns. Each microcolumn works together as a group to calculate a function. Neighboring microcolumns inhibit each other. So each macrocolumn is sort of a mixture of experts. The question then is how many microcolumns from one region send an output to a different region. For the example of V1 to V2, basically every microcolumn in V1 sends a connection to V2 (and vice versa). This is why the connection percentage is about 1%: 100 neurons per microcolumn, 1 of which has a long distance axon to V2. The total number of neurons is roughly 10 million, organized into about 100,000 microcolumns.

For areas that are further apart, they send fewer axons. Which doesn’t mean their signal is unimportant, just lower resolution. In that case you’d ask something like “how many microcolumns per macrocolumn send out a long distance axon from region A to region B?” This might be 1, just a summary report of the macrocolumn. So for roughly 10 million neurons, and 100,000 microcolumns organized into around 1000 macrocolumns… You get around 1000 neurons send axons from region A to region B.
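To make the arithmetic in the two paragraphs above explicit, here is a minimal sketch in Python. It only restates the round numbers quoted there for a single cortical region; the variable names are made up for illustration, and real regions will not match these figures exactly.

```python
# Round numbers from the comment above, for one cortical region (illustrative only).
neurons = 10_000_000
neurons_per_microcolumn = 100   # the comment gives a range of 80-100
macrocolumns = 1_000

microcolumns = neurons // neurons_per_microcolumn
microcolumns_per_macrocolumn = microcolumns // macrocolumns

# Adjacent regions (e.g. V1 to V2): about one long-range axon per microcolumn.
adjacent_axons = microcolumns
adjacent_fraction = adjacent_axons / neurons

# Distant regions: perhaps one "summary" axon per macrocolumn.
distant_axons = macrocolumns
distant_fraction = distant_axons / neurons

print(f"microcolumns:                 {microcolumns:,}")
print(f"microcolumns per macrocolumn: {microcolumns_per_macrocolumn:,}")
print(f"adjacent-region axons:        {adjacent_axons:,} ({adjacent_fraction:.2%} of neurons)")
print(f"distant-region axons:         {distant_axons:,} ({distant_fraction:.2%} of neurons)")
```

Under these assumptions the two cases bracket the 10^2 to 10^4 range mentioned earlier in the thread from above: roughly 100,000 axons for tightly coupled neighbors and roughly 1,000 for distant pairs.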

More details are in the papers I linked elsewhere in this comment thread.

Thanks!

Yeah, I believe what you say about there not being that many long-distance connections.

I meant that there might be more non-long-distance connections between neighboring areas. (E.g. boundaries of areas are a bit fuzzy iirc, so macrocolumns towards the “edge” of a region are sorta intertwined with macrocolumns of the other side of the “edge”.)

(I thought when you mean V1 to V2 you include those too, but I guess you didn’t?)

Do you think those inter-area non-long-distance connections are relatively unimportant, and if so why?

Here’s a paper about describing the portion of the connectome which is invariant between individual people (basal component), versus that which is highly variant (superstructure):

https://arxiv.org/abs/2012.15854

Uncovering the invariant structural organization of the human connectome

Anand Pathak, Shakti N. Menon and Sitabhra Sinha

(Dated: January 1, 2021)

In order to understand the complex cognitive functions of the human brain, it is essential to study the structural macro-connectome, i.e., the wiring of different brain regions to each other through axonal pathways, that has been revealed by imaging techniques. However, the high degree of plasticity and cross-population variability in human brains makes it difficult to relate structure to function, motivating a search for invariant patterns in the connectivity. At the same time, variability within a population can provide information about the generative mechanisms.

In this paper we analyze the connection topology and link-weight distribution of human structural connectomes obtained from a database comprising 196 subjects. By demonstrating a correspondence between the occurrence frequency of individual links and their average weight across the population, we show that the process by which the human brain is wired is not independent of the process by which the link weights of the connectome are determined. Furthermore, using the specific distribution of the weights associated with each link over the entire population, we show that a single parameter that is specific to a link can account for its frequency of occurrence, as well as, the variation in its weight across different subjects. This parameter provides a basis for “rescaling” the link weights in each connectome, allowing us to obtain a generic network representative of the human brain, distinct from a simple average over the connectomes. We obtain the functional connectomes by implementing a neural mass model on each of the vertices of the corresponding structural connectomes.

By comparing these with the empirical functional brain networks, we demonstrate that the rescaling procedure yields a closer structure-function correspondence. Finally, we show that the representative network can be decomposed into a basal component that is stable across the population and a highly variable superstructure.

Brain emulation looks closer than your summary table indicates.

Manifold estimates a 48% chance by 2039.

Eon Systems is hiring for work on brain emulation.

Manifold is pretty weak evidence for anything >=1 year away because there are strong incentives to bet on short term markets.

I’m not sure how to integrate such long-term markets from Manifold. But anyway, that market seems to have a very vague notion of emulation. For example, it doesn’t mention anything about the emulation doing any useful cognitive work!

Once we get superintelligence, we might get every other technology that the laws of physics allow, even if we aren’t that “close” to these other technologies.

Maybe they believe in a ≈38% chance of superintelligence by 2039.

PS: Your comment may have caused it to drop to 38%. :)

Manifold estimates an 81% chance of ASI by 2036, using a definition that looks fairly weak and subjective to me.

I’ve bid the brain emulation market back up a bit.

This is great! Everybody loves human intelligence augmentation, but I’ve never seen a taxonomy of it before, offering handholds for getting started.

I’d say “software exobrain” is less “weaksauce,” and more “80% of the peak benefits are already tapped out, for conscientious people who have heard of OneNote or Obsidian.” I also am still holding out for bird neurons with portia spider architectural efficiency and human cranial volume; but I recognize that may not be as practical as it is cool.

You’re assuming a steady state. Firstly, evolution takes time. Secondly, if humans were, for example, in an intelligence arms-race with other humans (for example, if smarter people can reliably con dumber people out of resources often enough to get a selective advantage out of it), then the relative genetic fitness of a specific intelligence level can vary over time, depending on how it compares to the rest of the population. Similarly, if much of the advantage of an IQ of 150 requires being able to find enough IQ 150 coworkers to collaborate with, then the relative genetic fitness of IQ 150 depends on the IQ profile of the rest of the population.

An example I love of a helpful brain adaptation with few downsides that I know of, which hasn’t spread far throughout mammals, is one in seal brains. Seals, unlike whales and dolphins, had an evolutionary niche which caused them to not get as good at holding their breath as would be optimal for them. They had many years of occasionally diving too deep and dying from brain damage related to oxygen deprivation (ROS in neurons). So, some ancient seal had a lucky mutation that gave them a cool trick. The glial cells which support neurons can easily grow back even if their population gets mostly wiped out. Seals have extra mitochondria in their glial cells and none in their neurons, and export the ATP made in the glial cells to the neurons. This means that the reactive oxygen species from oxygen deprivation of the mitochondria all occur in the glia. So, when a seal stays under too long, their glial cells die instead of their neurons. The result is that they suffer some mental deficiencies while the glia grow back over a few days or a couple weeks (depending on the severity), but then they have no lasting damage. Unlike in other mammals, where we lose neurons that can’t grow back.

Given enough time, would humans evolve the same adaptation (if it does turn out to have no downsides)? Maybe, but probably not. There just isn’t enough reproductive loss due to stroke/oxygen-deprivation to give a huge advantage to the rare mutant who lucked into it.

But since we have genetic engineering now… we could just give the ability to someone. People die occasionally competing in deep freediving competitions, and definitely get brain damage. I bet they’d love to have this mod if it were offered.

Also, sometimes there are ‘valleys of failure’ which block off otherwise fruitful directions in evolution. If there’s a later state that would be much better, but to get there would require too many negative mutations before the positive stuff showed up, the species may simply never get lucky enough to make it through the valley of failure.

This means that evolution is heavily limited to things which have mostly clear paths to them. That’s a pretty significant limitation!

Short note: We don’t need 7SDs to get 7SDs.

If we could increase the average IQ by 2SDs, then we’d have lots of intelligent people looking into intelligence enhancement. In short, intelligence feeds into itself, it might be possible to start the AGI explosion in humans.

(Just acknowledging that my response is kinda disorganized. Take it or leave it, feel free to ask followups.)

Most easy interventions work on a generational scale. There’s pretty easy big wins like eliminating lead poisoning (and, IDK, feeding everyone, basic medicine, internet access, less cannibalistic schooling) which we should absolutely do, regardless of any X-risk concerns. But for X-risk concerns, generational is pretty slow.

This is both in terms of increasing general intelligence, and also in terms of specific capabilities. Even if you bop an adult on the head and make zer +2SDs smarter, ze still would have to spend a bunch of time and effort to train up on some new field that’s needed for the next approach to further increasing intelligence. That’s not a generational scale exactly, maybe more like 10 years, but still.

We’re leaking survival probability mass to an AI intelligence explosion year by year. I think we have something like 0-2 or 0-3 generations before dying to AGI.

To be clear, I’m assuming that when you say “we don’t need 7SDs”, you mean “we don’t need to find an approach that could give 7SDs”. (Though to be clear, I agree with that in a literal sense, because you can start with someone who’s already +3SDs or whatever.) A problem with this is that approaches don’t necessarily stack or scale, just because they can give a +2SD boost to a random person. If you take a starving person and feed zer well, ze’ll be able to think better, for sure. Maybe even +2SDs? I really don’t know, sounds plausible. But you can’t then feed them much more and get them another +2SDs—maybe you can get like +.5SD with some clever fine-tuning or something. And you can’t then also get a big boost from good sleep, because you probably already increased their sleep quality by a lot; you’re double counting. Most (though not all!) people in, say, the US, probably can’t get very meaningfully less lead poisoned.

Further, these interventions I think would generally tend to bring people up to some fixed “healthy Gaussian distribution”, rather than shift the whole healthy distribution’s mean upward. In other words, the easy interventions that move the global average are more like “make things look like the developed world”. Again, that’s obviously good to do morally and practically, but in terms of X-risk specifically, it doesn’t help that much. Getting 3x samples from the same distribution (the healthy distribution) barely increases the max intelligence. Much more important (for X-risk) is to shift the distribution you’re drawing from. Stronger interventions that aren’t generational, such as prosthetic connectivity or adult brain gene editing, would tend to come with much more personal risks, so it’s not so scalable—and I don’t think in terms of trying to get vast numbers of people to do something, but rather just in terms of making it possible for people to do something if they really want to.
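The claim that more samples from the same distribution barely raise the maximum, while shifting the distribution does, can be checked with a rough sketch. This assumes a normal distribution and uses "the level that about one person in n exceeds" as a stand-in for the maximum; the function name and the population sizes are illustrative assumptions, not figures from the discussion.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # mean 0, SD 1

def top_level(n_people: float, mean_shift: float = 0.0) -> float:
    """SD level that roughly one person in n_people exceeds (a proxy for the max)."""
    # By symmetry, the upper 1/n quantile is minus the lower 1/n quantile.
    return mean_shift - STANDARD_NORMAL.inv_cdf(1.0 / n_people)

base = top_level(1e9)                      # one billion draws
tripled = top_level(3e9)                   # three times as many draws
shifted = top_level(1e9, mean_shift=1.0)   # same draws, mean moved up by 1 SD

print(f"base:         +{base:.2f} SD")
print(f"3x samples:   +{tripled:.2f} SD (gain {tripled - base:.2f})")
print(f"+1 SD shift:  +{shifted:.2f} SD (gain {shifted - base:.2f})")
```

On this proxy, tripling the number of draws buys well under a quarter of an SD at the top, whereas a 1 SD shift of the mean moves the top by a full SD.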

So what this implies is that either

your approach can scale up (maybe with more clever technology, but still riding on the same basic principle), or

you’re so capable that you can keep coming up with different effective, feasible approaches that stack.

So I think it matters to look for approaches that can scale up to large intelligence gains.

To put things in perspective, there’s lots of people who say that {nootropics, note taking, meditation, TCMS, blood flow optimization, …} give them +1SD boost or something on thinking ability. And yet, if they’re so smart, why ain’t they exploding?

All that said, I take your point. It does make it seem slightly more appealing to work on e.g. prosthetic connectivity, because that’s a non-generational intervention and could plausibly be scaled up by putting more effort into it, given an initial boost.

I think brain editing is maybe somewhat less scalable, though I’m not confident (plausibly it’s more scalable; it might depend for example on the floor of necessary damage from editing rounds, and might depend on a ceiling of how much you can get given that you’ve passed developmental windows). Support for thinking (i.e. mental / social / computer tech) seems like it ought to be scalable, but again, why ain’t you exploding? (Or in other words, we already see the sort of explosion that gets you; improving on it would take some major uncommon insights, or an alternative approach.) Massive neural transplantation might be scalable, but is very icky. If master regulator signaling molecules worked shockingly well, my wild guess is that they would be a little scalable (by finding more of them), but not much (there probably isn’t that much room to overclock neurons? IDK); they’d be somewhat all-or-nothing, I guess?

You’re correct that the average IQ could be increased in various ways, and that increasing the minimum IQ of the population wouldn’t help us here. I was imagining shifting the entire normal distribution two SDs to the right, so that those who are already +4-5SDs would become +5-7SDs.

As far as I’m concerned, the progress of humanity stands on the shoulders of giants, and the bottom 99.999% aren’t making much of a difference.

The threshold for recursive self-improvement in humans, if one exists, is quite high. Perhaps if somebody like von Neumann lived today it would be possible. By the way, most of the people who look into nootropics, meditations and other such things do so because they’re not functional, so in a way it’s a bit like asking “Why are there so many sick people in hospitals if it’s a place for recovery?” Though you could make the argument that geniuses would be doing these things if they worked.

My score on IQ tests has increased about 15 points since I was 18, but it’s hard to say if I succeeded in increasing my intelligence or if it’s just a result of improving my mental health and actually putting a bit of effort into my life. I still think that very high levels of concentration and effort can force the brain to reconstruct itself, but that this process is so unpleasant that people stop doing it once they’re good enough (for instance, most people can’t read all that fast, despite reading texts for 1000s of hours. But if they spend just a few weeks practicing, they can improve their reading speed by a lot, so this kind of shows how improvement stops once you stop applying pressure)

By the way, I don’t know much about neurons. It could be that 4-5SD people are much harder to improve since the ratio of better states to worse states is much lower

Right, but those interventions are harder (shifting the right tail further right is especially hard).

Also, shifting the distribution is just way different numerically from being able to make anyone who wants be +7SD. If you shift +1SD, you go from 0 people at +7SD to ~8 people.
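The "~8 people" figure can be reproduced with a short sketch, assuming a normal distribution and a world population of about 8 billion (the population figure and the function names are assumptions added here for illustration).

```python
import math

def tail_fraction(z: float) -> float:
    """Fraction of a standard normal distribution lying above z SDs."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def expected_count(threshold_sd: float, mean_shift_sd: float = 0.0,
                   population: float = 8e9) -> float:
    """Expected number of people above threshold_sd after shifting the mean."""
    return population * tail_fraction(threshold_sd - mean_shift_sd)

before = expected_count(7.0)                     # unshifted distribution
after = expected_count(7.0, mean_shift_sd=1.0)   # whole distribution moved +1 SD

print(f"expected people at +7SD, no shift:  {before:.3f}")
print(f"expected people at +7SD, +1SD shift: {after:.1f}")
```

With no shift the expected count is far below one person; with a +1SD shift, +7SD on the old scale is +6SD on the new one, which gives a single-digit count.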

(And note that the shift is, in some ways, more unequal compared to “anyone who wants, for the price of a new car, can reach the effective ceiling”.)

Right, I agree with that.

A right shift by 2SDs would make people like Hawking, Einstein, Tesla, etc. about 100 times more common, and make it so that a few people who are 1-2SDs above these people are likely to appear soon. I think this is sufficient, but I don’t know enough about human intelligence to guarantee it.
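How much more common a given level becomes after a shift depends on where that level sits. A rough sketch, assuming a normal distribution (the thresholds below are illustrative assumptions, not estimates of any named person's ability):

```python
import math

def tail_fraction(z: float) -> float:
    """Fraction of a standard normal distribution lying above z SDs."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def frequency_multiplier(threshold_sd: float, shift_sd: float) -> float:
    """How many times more common people above threshold_sd become after the shift."""
    return tail_fraction(threshold_sd - shift_sd) / tail_fraction(threshold_sd)

for threshold in (3.0, 4.0, 5.0):
    ratio = frequency_multiplier(threshold, shift_sd=2.0)
    print(f"+{threshold:.0f}SD threshold: about {ratio:,.0f}x more common")
```

Under this model, "about 100 times" corresponds to a threshold near +3SD; for higher thresholds the same 2SD shift gives a larger multiplier.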

I think it depends on how the SD is increased. If you “merely” create a 150-IQ person with a 20-item working memory, or with an 8SD processing speed, this may not be enough to understand the problem and to solve it. Of course, you can substitute with verbal intelligence, which I think a lot of mathematicians do. I can’t rotate 5D objects in my head, but I can write equations on paper which can rotate 5D objects and get the right answer. I think this is how mathematics is progressing past what we can intuitively understand. Of course, if your non-verbal intelligence can keep up, you’re much better off, since you can combine any insights from any area of life and get something new out of it.

we have really not fully explored ultrasound and afaik there is no reason to believe it’s inherently weaker than administering signaling molecules.

Signaling molecules can potentially take advantage of nature’s GRNs. Are you saying that ultrasound might too?

Neuronal activity could certainly affect gene regulation! So yeah, I think it’s possible (which is not a strong claim... lots of things “regulate” other things; that doesn’t necessarily make them effective intervention points).

Yeah, of course it affects gene regulation. I’m saying that—maayybe—nature has specific broad patterns of gene expression associated with powerful cognition (mainly, creativity and learning in childhood); and since these are implemented as GRNs, they’ll have small, discoverable on-off switches. You’re copying nature’s work about how to tune a brain to think/learn/create. With ultrasound, my impression is that you’re kind of like “ok, I want to activate GABA neurons in this vague area of the temporal cortex” or “just turn off the amygdala for a day lol”. You’re trying to figure out yourself what blobs being on and off is good for thinking; and more importantly you have a smaller action space compared to signaling molecules—you can only activate / deactivate whatever patterns of gene expression happen to be bundled together in “whatever is downstream of nuking the amygdala for a day”.

I think you’re underestimating meditation.

Since I started meditating, I’ve realised that I’ve become much more sensitive to vibes.

There are a lot of folks who would be scarily capable if they were strong in system 1, in addition to being strong in system 2.

Then there are all the other benefits that meditation can provide if done properly: additional motivation, and being better able to break out of narratives and notice patterns.

Then again, this is dependent on there being viable social interventions, rather than just aiming for 6 or 7 standard deviations of increase in intelligence.

Meditation has been practiced for many centuries and millions practice it currently.

Please list 3 people who got deeply into meditation, then went on to change the world in some way, not counting people like Alan Watts who changed the world by promoting or teaching meditation.

I think there are many cases of reasonably successful people who often cite either some variety of meditation, or other self-improvement regimes / habits, as having a big impact on their success. This random article I googled cites the billionaires Ray Dalio, Marc Benioff, and Bill Gates, among others. (https://trytwello.com/ceos-that-meditate/)

Similarly you could find people (like Arnold Schwarzenegger, if I recall?) citing that adopting a more mature, stoic mindset about life was helpful to them—Ray Dalio has this whole series of videos on “life principles” that he likes. And you could find others endorsing the importance of exercise and good sleep, or of using note-taking apps to stay organized.

I think the problem is not that meditation is ineffective, but that it’s not usually a multiple-standard-deviations gamechanger (and when it is, it’s probably usually a case of “counting up to zero from negative”, as TsviBT calls it), and it’s already a known technique. If nobody else in the world meditated or took notes or got enough sleep, you could probably stack those techniques and have a big advantage. But alas, a lot of CEOs and other top performers already know to do this stuff.

(Separately from the mundane life-improvement aspects, some meditators claim that the right kind of deep meditation can give you insight into deep philosophical problems, or the fundamental nature of conscious experience, and that this is so valuable that achieving this goal is basically the most important thing you could do in life. This might possibly even be true! But that’s different from saying that meditation will give you +50 IQ points, which it won’t. Kinda like how having an experience of sublime beauty while contemplating a work of art, might be life-changing, but won’t give you +50 IQ points.)

To compare to the obvious alternative, is the evidence for meditation stronger than the evidence for prayer? I assume there are also some religious billionaires and other successful people who would attribute their success to praying every day or something like that.

Maybe other people have a very different image of meditation than I do, such that they imagine it as something much more delusional and hyperreligious? Eg, some religious people do stuff like chanting mantras, or visualizing specific images of Buddhist deities, which indeed seems pretty crazy to me.

But the kind of meditation taught by popular secular sources like Sam Harris’s Waking Up app (or that I talk about in my “Examining The Witness” youtube series about the videogame The Witness) seems to me obviously much closer to basic psychology or rationality techniques than to religious practices. Compare Sam Harris’s instructions about paying attention to the contents of one’s experiences, to Gendlin’s idea of “Focusing”, or Yudkowsky’s concept of “sit down and actually try to think of solutions for five minutes”, or the art of “noticing confusion”, or the original Feynman essay where he describes holding off on proposing solutions. So it’s weird to me when people seem really skeptical of meditation and set a very high burden of proof that they wouldn’t apply for other mental habits like, say, CFAR techniques.

I’m not like a meditation fanatic; personally I don’t even meditate these days, although I feel bad about not doing it since it does make my life better. (Just like how I don’t exercise much anymore despite exercise making my day go better, and I feel bad about that too...) But once upon a time I just tried it for a few weeks, learned a lot of interesting stuff, etc. I would say I got some mundane life benefits out of it: some, like exercise or good sleep, only lasted as long as I kept up the habit, and other benefits were more like mental skills that I’ve retained to today. I also got some very worthwhile philosophical insights, which I talk about, albeit in a rambly way mixed in with lots of other stuff, in my aforementioned video series. I certainly wouldn’t say the philosophical insights were the most important thing in my whole life, or anything like that! But maybe more skilled deeper meditation = bigger insights, hence my agnosticism on whether the more bombastic meditation-related claims are true.

So I think people should just download the Waking Up app and try meditating for like 10 mins a day for 2-3 weeks or whatever—way less of a time commitment than watching a TV show or playing most videogames—and see for themselves if it’s useful or not, instead of debating.

Anyways. For what it’s worth, I googled “billionaires who pray”. I found this article (https://www.beliefnet.com/entertainment/5-christian-billionaires-you-didnt-know-about.aspx), which ironically also cites Bill Gates, plus the Walton family and some other conservative CEOs. But IMO, if you read the article you’ll notice that only one of them actually mentions a daily practice of prayer. The one that does, Do Won Chang, doesn’t credit it for their business success… seems like they’re successful and then they just also pray a lot. For the rest, it’s all vaguer stuff about how their religion gives them a general moral foundation of knowing what’s right and wrong, or how God inspires them to give back to their local community, or whatever.

So, personally I’d consider this duel of first-page-google-results to be a win for meditation versus prayer, since the meditators are describing a more direct relationship between scheduling time to regularly meditate and the assorted benefits they say it brings, while the prayer people are more describing how they think it’s valuable to be Christian in an overall cultural sense. Although I’m sure with more effort you could find lots of assorted conservatives claiming that prayer specifically helps them with their business in some concrete way. (I’m sure there are many people who “pray” in ways that resemble meditation, or resemble Yudkowsky’s sitting-down-and-trying-to-think-of-solutions-for-five-minutes-by-the-clock, and find these techniques helpful!)

IMO, probably more convincing than dueling dubious claims of business titans, is testimony from rationalist-community members who write in detail about their experiences and reasoning. Alexey Guzey’s post here is interesting, as he’s swung from being vocally anti-meditation, to being way more into it than I ever was. He seems to still generally have his head on straight (ie hasn’t become a religious fanatic or something), and says that meditation seems to have been helpful for him in terms of getting more things done: https://guzey.com/2022-lessons/

Thanks for answering my question directly in the second half.

I find the testimonies of rationalists who experimented with meditation less convincing than perhaps I should, simply because of selection bias. People who have a pre-existing affinity towards “woo” will presumably be more likely to try meditation. And they will be more likely to report that it works, whether it does or not. I am not sure how much I should discount for this; perhaps I overdo it. I don’t know.

A proper experiment would require a control group—some people who were originally skeptical about meditation and Buddhism in general, and only agreed to do some exactly defined exercises, and preferably the reported differences should be measurable somehow. Otherwise, we have another selection bias, that if there are people for whom meditation does nothing, or is even harmful, they will stop trying. So at the end, 100% of people who tried will report success (whether real or imaginary), because those who didn’t see any success have selected themselves out.
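The dropout argument above can be made concrete with a toy simulation. This is a minimal sketch with made-up parameters: the practice has zero true effect, each person perceives a noisy benefit, and anyone who perceives no benefit quits and never writes a testimonial. All names and numbers here are hypothetical.

```python
import random


def simulate_testimonials(n_people: int = 10_000, true_effect: float = 0.0,
                          noise_sd: float = 1.0, seed: int = 0) -> dict:
    """Toy model of survivorship bias in self-reported benefits."""
    rng = random.Random(seed)
    # Each person's perceived benefit is the true effect plus personal noise.
    perceived = [rng.gauss(true_effect, noise_sd) for _ in range(n_people)]
    # People who perceive no benefit stop practicing and are never heard from.
    continuers = [p for p in perceived if p > 0]
    return {
        "mean_perceived_all": sum(perceived) / n_people,
        "share_continuing": len(continuers) / n_people,
        "share_of_continuers_reporting_benefit":
            sum(p > 0 for p in continuers) / len(continuers),
        "mean_perceived_continuers": sum(continuers) / len(continuers),
    }


if __name__ == "__main__":
    for key, value in simulate_testimonials().items():
        print(f"{key}: {value:.3f}")
```

Even with no true effect, every remaining practitioner reports a benefit, and their average reported benefit is clearly positive. A control group and pre-defined measurements are what would break this loop.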

I approve of making the “secular version of Buddhism”, but in a similar way, we could make a “secular version of Christianity”. (For example, how is gratitude journaling significantly different from thanking God for all his blessings before you go to sleep?) And yet, I assume that the objection against “secular Christianity” on Less Wrong would be much greater than against “secular Buddhism”. Maybe I am wrong, but the fact that no one is currently promoting “secular Christianity” on LW sounds like weak evidence. I suspect the relevant difference is that for an American atheist, Christianity is outgroup, and Buddhism is fargroup. Meditation is culturally acceptable among contrarians, because our neighbors don’t do it. But that is unrelated to whether it works or not.

Also, I am not sure how secular the “secular Buddhism” actually is, given that people still go to retreats organized by religious people, etc. It feels too much for me to trust that someone is getting lots of important information from religious people, without unknowingly also getting some of their biases.

Re: successful people who meditate, IIRC in Tim Ferriss’ book Tools of Titans, meditation was one of the most commonly mentioned habits of the interviewees.

Are these generally CEO-ish-types? Obviously “sustainably coping with very high pressure contexts” is an important and useful skill, and plausibly meditation can help a lot with that. But it seems pretty different from and not that related to increasing philosophical problem solving ability.

This random article I found repeats the Tim Ferriss claim re: successful people who meditate, but I haven’t checked where it appears in the book Tools of Titans:

Other than that, I don’t see why you’d relate meditation just to high-pressure contexts, rather than also conscientiousness, goal-directedness, etc. To me, it does also seem directly related to increasing philosophical problem-solving ability, particularly when it comes to reasoning about consciousness and other stuff where improved introspection helps most. Sam Harris would be kind of a poster child for this, right?

What I can’t see meditation doing is to provide the kind of multiple SD intelligence amplification you’re interested in, plus it has other issues like taking a lot of time (though a “meditation pill” would resolve that) and potential value drift.

Got any evidence?

Not really.

How about TMS/tFUS/tACS ⇒ “meditation”/reducing neural noise?

Drastic improvements in mental health (reducing neural noise and rumination) are way more feasible than increasing human intelligence, still have huge potential for very high impact when applied on a population-wide scale [1], and are possible to do at mass scale. There are some experimental TMS protocols like SAINT/accelerated TMS which aim to capture the benefits of TMS on a 1-2 week timeline. There’s also Wave Neuroscience, which uses mERT and works in conjunction with qEEG, but I’m not sure if it’s “ready enough” yet: it seems to involve some sort of guesswork and there are a few negative reviews on reddit. There are a few accelerated TMS centers and they’re not FDA-approved for much more than depression, but if we have fast AGI timelines, the money matters less.