Replacing Karma with Good Heart Tokens (Worth $1!)

Starting today, we’re replacing karma with Good Heart Tokens which can be exchanged for 1 USD each.

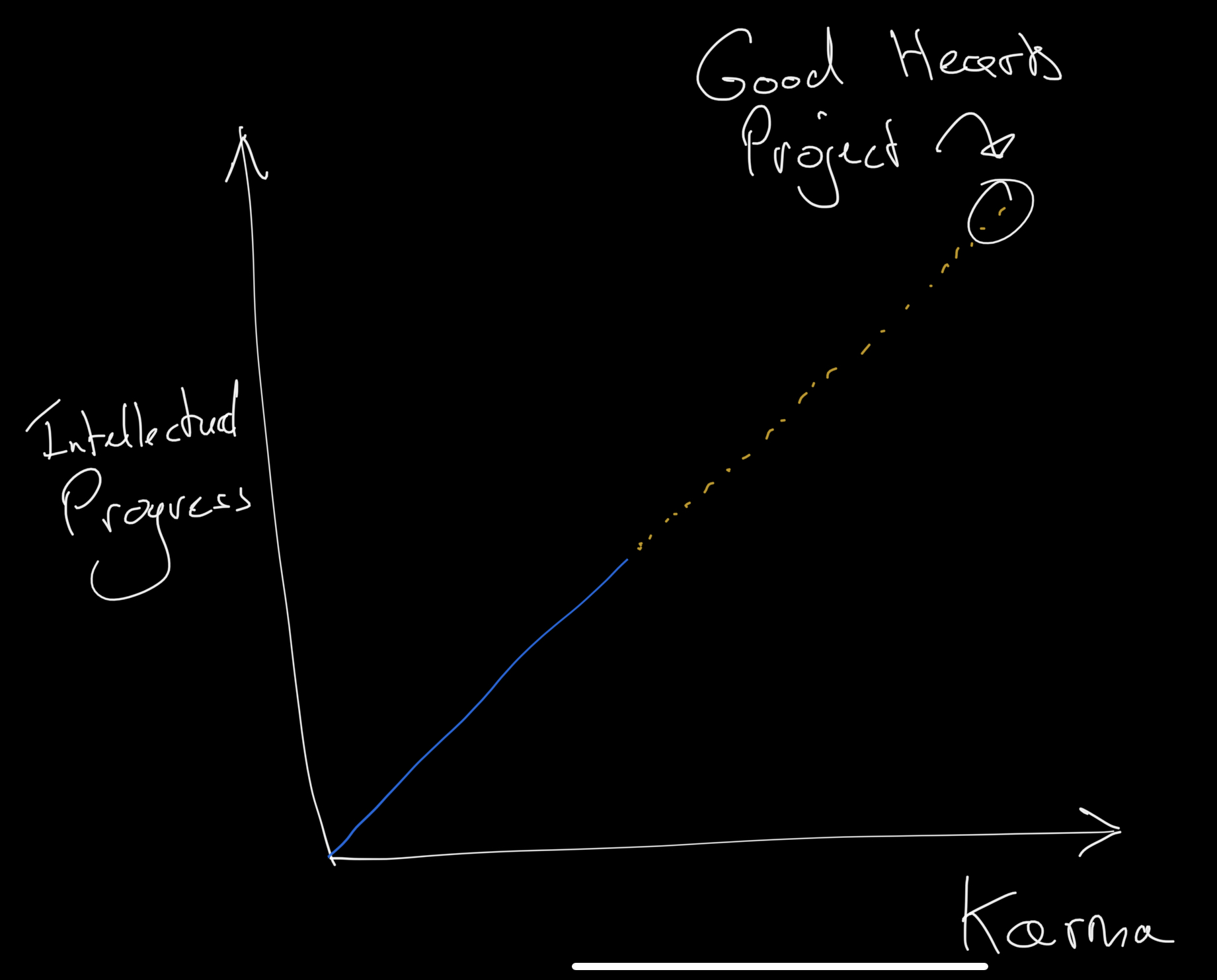

We’ve been thinking very creatively about metrics of things we care about, and we’ve discovered that karma is highly correlated with value.

Therefore, we’re creating a token that quantifies the goodness of the people writing, and whether in their hearts they care about rationality and saving the world.

We’re calling these new tokens Good Heart Tokens. And in partnership with our EA funders, we’ll be paying users $1 for each token that they earn.

“The essence of any religion is a good heart [token].”

— The Dalai Lama[1]

Voting, Leaderboards and Payment Info

Comments and posts now show you how many Good Heart Tokens they have.

(This applies only to new content on the site.)

At the top of LessWrong, there is now a leaderboard to show the measurement of who has the Goodest Heart. It looks like this. (No, self-votes are not counted!)

The usernames of our Goodest Hearts will be given a colorful flair throughout the entirety of their posts and comments on LessWrong.

To receive your funds, please log in and enter your payment info at lesswrong.com/payments/account.

While the form suggests using a PayPal address, you may also add an Ethereum address, or the name of a charity that you’d like us to donate it to.

Why are we doing this?

On this very day last year, we were in a dire spot.

To fund our ever-increasing costs, we were forced to move to Substack and monetize most of our content.

Several generous users subscribed at the price of 1 BTC/month, for which we will always be grateful. It turns out that Bitcoin was valued a little higher than the $13.2 we had assumed, and this funding quickly allowed us to return the site to its previous state.

Once we restored the site, we still had a huge pile of money, and we’ve spent the last year desperately trying to get rid of it.

In our intellectual circles, Robin Hanson has suggested making challenge coins, and Paul Christiano has suggested making impact certificates. Both are tokens that can later be exchanged for money, and whose value correlates with something we care about.

Inspired by that, we finally cracked it, and this is our plan.

...We’re also hoping that this is an initial prototype that larger EA funders will jump on board to scale up!

The EA Funding Ecosystem Wants To Fund Megaprojects

“A good heart [token] is worth gold.”

— King Henry IV, William Shakespeare[2]

Effective altruism has always been core to our hearts, and this is our big step to fully bring to bear the principles of effective altruism on making LessWrong great.

The new FTX Future Fund has said:

We’re interested in directly funding blogs, Substacks, or channels on YouTube, TikTok, Instagram, Twitter, etc.

They’ve also said:

We’re particularly interested in funding massively scalable projects: projects that could scale up to productively spend tens or hundreds of millions of dollars per year.

We are the best of both worlds: A blog that FTX and other funders can just pour money into. Right now we’re trading $1 per Good Heart Token, but in the future we could 10x or 100x this number and possibly see linear returns in quality content!

Trends Generally Continue Trending

Paul Christiano has said:

I have a general read of history where trend extrapolation works extraordinarily well relative to other kinds of forecasting, to the extent that the best first-pass heuristic for whether a prediction is likely to be accurate is whether it’s a trend extrapolation and how far in the future it is.

We agree with this position. So here is our trend-extrapolation argument, which we think has been true for many years and so will continue to be true for at least a few years.

So far it seems like higher-karma posts have been responsible for better insights about rationality and existential risk. The natural extrapolation suggests that insight will increase if people produce more content that gets high karma scores. Other trends are possible, but they’re not probable. The prior should be that the trend continues!

However, epistemic modesty does compel me to take into account the possibility that we are wrong on this simple trend extrapolation. To remove any remaining likelihood of karma and intellectual progress becoming decoupled, we would like to ask all users participating in the Good Hearts Project to really try hard to not be swayed by any unaligned incentives (e.g. the desire to give your friends money).

Yes, We Have Taken User Feedback (GPT-10 Simulated)

We care about hearing arguments for and against our decision, so we consulted with a proprietary beta version of GPT-10 to infer what several LessWrong users would say about the potential downsides of this project. Here are some of the responses.

Eliezer Yudkowsky: “To see your own creation have its soul turned into a monster before your eyes is a curious experience.”

Anna Salamon: “I can imagine a world where earnest and honest young people learn what’s rewarded in this community is the most pointed nitpick possible under a post and that this might be a key factor in our inability to coordinate on preventing existential risk”.

Kaj Sotala: “I am worried it would lead to the total collapse of an ecosystem I’ve poured my heart and soul into in the past decade”.

Scott Garrabrant: “Now I can get more Good Heart tokens than Abram Demski! This is exactly what I come to LessWrong for.”

Clippy: “What’s the exchange rate between these tokens and paperclips?”

Quirinus Quirrell: “A thousand of your measly tokens pale in comparison to a single Quirrell point.”

Zvi Mowshowitz: “I would never sell my soul for such a low price. LessWrong delenda est.” (Then my computer burst into flame, which is why this post was two hours late.)

(Of course, this is only what our beta-GPT-10 simulations of these users said. The users are welcome to give their actual replies in the comment section below.)

Our EA funders have reviewed these concerns, and agree that there are risks, but think that, in the absence of anything better to do with money, it’s worth trying to scale this system.

A Final Note on Why

I think the work on LessWrong matters a lot, and I’d like to see a world where these people and other people like them can devote themselves full-time to producing such work, and be financially supported when doing so.

Future Enhancement with Machine Learning

We’re hoping to enhance this in the future by using machine learning on users’ content to predict the karma you will get. Right now you only get Good Heart tokens after your content has been voted on, but with the right training, we expect our systems will be able to predict how many tokens you’ll receive in advance.

This will initially look like people getting the money for their post at the moment of publishing. Then it will look like people getting the money when they’ve opened their draft and entered the title. Eventually, we hope to start paying people the moment they create their accounts.

For example, Terence Tao will create a LessWrong account, receive $10 million within seconds, and immediately retire from academia.

Good Hearts Laws

While we’re rolling it out, only votes from existing accounts will count, and only votes on new content will count. No, self-votes will not count.

There is currently a cap of 600 Good Heart Tokens per user while we are rolling out the system.

The minimum number of tokens to be exchanged is 25. If you don’t make that many tokens, we will not take the time to process your request. (Go big or go home.)
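Taken together, these rollout rules amount to a small payout calculation. The sketch below is a hypothetical illustration, not LessWrong’s actual code. The `Vote` record, the idea that each vote carries a numeric weight, and the rollout timestamp are all assumptions. Only the 600-token cap, the 25-token minimum, the $1 rate, and the eligibility rules come from the post.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical rollout moment; the post does not give an exact time.
ROLLOUT = datetime(2022, 4, 1)
TOKEN_CAP = 600      # per-user cap during rollout (from the post)
MIN_PAYOUT = 25      # minimum tokens that will be exchanged (from the post)
USD_PER_TOKEN = 1    # exchange rate (from the post)


@dataclass
class Vote:
    voter: str
    voter_created: datetime   # when the voter's account was created
    weight: int               # assumed: signed vote strength
    content_posted: datetime  # when the voted-on content was published


def tokens_for(author: str, votes: list[Vote]) -> int:
    """Sum the weights of eligible votes, then apply the per-user cap."""
    total = sum(
        v.weight
        for v in votes
        if v.voter != author               # self-votes don't count
        and v.voter_created < ROLLOUT      # only votes from existing accounts
        and v.content_posted >= ROLLOUT    # only votes on new content
    )
    return min(total, TOKEN_CAP)


def payout_usd(author: str, votes: list[Vote]) -> int:
    """Dollars paid out; balances below the minimum are not processed."""
    tokens = tokens_for(author, votes)
    return tokens * USD_PER_TOKEN if tokens >= MIN_PAYOUT else 0
```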

“Together, people with good hearts [tokens] and fine minds can move the world.”

— Bill Clinton[3]


Why have “notice your surprise” day when you could instead have “publish all of your drafts” day? ;)

I have so many unpublished drafts that I hit the limit (5) of what LW allows in a day. When I hit the limit, I took the least popular one down and put up a better post instead.

If you didn’t already try, I bet Lightcone would let you post more if you asked over Intercom.

I’m guessing it would just take us editing the relevant posts, and so would be technically easy; I think it might be a bad idea to do it at this point, since there wouldn’t be that long for the posts to be read (and it’d dilute attention between them).

With deep surprise and betrayal and regret in my heart am I forced to announce that we noticed highly suspicious voting and posting activity from three suspicious users engaging in a criminal conspiracy:

https://www.lesswrong.com/users/aphyer

https://www.lesswrong.com/users/aphyer_evil_sock_puppet

https://www.lesswrong.com/users/johnswentworth

We reported this case to the Good Hearts police, who have seized all Good Heart Tokens from the three individuals, and fined them each 1000 Good Heart Tokens.

While we never thought it would come to this, we are clarifying that voting rings are not allowed, and will be met with harsh punishment.

My client acknowledges his guilt but wishes to appeal the harshness of the punishment.

My client has renounced his evil ways, and sworn to follow the straight and narrow path. As proof of his sincere remorse, he has submitted two new articles (1, 2), intending to pursue Good Hearts with honestly-written articles earning honest upvotes.

However, the severity of the fine has made it impossible for him to ever return to a normal life in society. The GH1000 penalty is as of this writing ~5 times the largest quantity of GH earned by any writer.

My client is additionally willing to delete the criminal posts, just as soon as someone tells him how to delete a draft (it’s unclear to me how you can actually do this).

In the interests of clemency and rehabilitation, Your Honor, I ask that you reduce my client’s penalty to a sum he can more realistically ‘work off’ through good deeds, rather than fining him an amount far in excess of what even the wealthiest GH-holders are able to pay.

After much careful evaluation of this counterargument, examining the value of your posts, wringing my hands, and then kinda winging it because I didn’t feel like spending that much effort carefully counting up all the relevant bits of karma, I decided to set your karma to −22 and johnswentworth’s to 114 (in each case, the value of that user’s top-level posts minus 50, which seemed like a reasonable penalty).

Thank you!

This isn’t the last you’ll see of us, Good-Hearter! You may have won a token victory, but we’ll be back. Muahahahaha!

I am shocked, shocked, to find voting rings in this forum!

Your upvotes, sir.

Ah, thank you very much. Everybody out at once!

Strong-downvoted to deprive Ben Pace of money, because mwahaha.

[After thinking about this more, I changed my mind and upvoted]

Question: where can I see my Good Hearts score if I’m not currently on the leaderboard?

Assertion: lsusr appears to be setting a good example by engaging with this in good faith, posting lots of actually good stuff today. lsusr is also currently in the lead!!!

This provides actual evidence that this is actually an actual good idea (at least, a good idea for an April 1st one-shot)

If you are ALSO engaging with this in good faith, comment here to let me know. This will reduce the chance that I miss the good stuff you post today. (IE, I’ll consider upvoting it in good faith.)

I’m engaging with this in good faith, tho I think my current effortpost-draft is one that I want reviewed by other people before I post it, which suggests either I should switch to a different idea that I can shoot from the hip with or just do comment-engagement instead of post-engagement today.

[edit] Also I think we should just keep the leaderboard? I remember being pretty motivated by the ‘top karma last 30 days’ leaderboard to post something about once a month.

I didn’t realize that this was a test-bed for seeing if monetary value would actually work; everything I post on this account from now on will be actual effort posts that I would post normally. I really don’t want to be the type of person who sees the Petrov Day button and automatically tries a bunch of random passwords, in the process ruining it for everyone. I also think that monetary rewards for LW posts might be a good idea, and want to help by operating according to the decision theory of whatever would work well in an actual LW environment with financial rewards; this means passing up the (12 eligible comments + however many other comments I make)*2 dollars which would result from me strong-upvoting every comment w/ the test account I made yesterday to try and debug something. (Posting this is also me committing myself to NOT doing that.)

I have made a good-faith post as a result of the extension!

You can view your Good Heart Token count on your account page next to your karma.

Not sure why your simulation of me didn’t point this out, but it seems odd to call something a “token” and then not make it into a cryptocurrency. Maybe this was the original plan, but then you realized that launching a GoodHeartCoin would make you even richer, thus worsening the very problem you are trying to solve?

It was just so in an earlier draft.

I was debating between LessWrongCoin versus the more succinct WrongCoin.

You can’t just cite a Shakespeare quote as being from King Henry IV, that is two different plays you BARBARIAN. If you were trying to pander to me because King Henry IV Part 1 is my favorite play, you FAILED; you quoted from King Henry IV Part 2. Disgusting.

Next thing you know they’ll be announcing on the Alignment Forum that they’ve founded the Ally Mint, a foundation for minting tokens to award to our allies in alignment research.

Allies? Just take out the middleman, and pledge to give the tokens to AIs for being good.

Ah, but you can’t measure whether or not the AI is doing something that is actually good or just something that seems good to you. That is part of the problem.

Also it isn’t quite clear why a super-intelligence would want some not very valuable crypto tokens more than it would want the atoms that used to make up the economic system that made the crypto valuable.

This is also a problem for the idea.

I was joking that crypto[currency] is about cutting out third parties, so the same should be done there.

But Good Mint isn’t a pun.

Ally, aligned. Close enough.

I’m curious what people think the natural exchange rate is between dollars and LW karma. Presumably it’s not zero, although I think it’s definitely less than $1; it takes me a lot more than $100 worth of effort to write a 100 karma post.

It’s a little bit weird to think about “how much would I pay for karma”, because the whole point of karma is that you can only get it through creating a specific kind of value, and if anyone could buy it then the whole system would lose its purpose. But it feels more natural to ask “how much karma is an hour of my time worth”, and then I can convert that through “how many dollars is an hour of my time worth”.

Unfortunately, it’s generally a lot easier to generate karma through commenting than through posting.

Once upon a time, I hear there was a 10x multiplier on post karma. 10x is a lot, but it seems pretty plausible to me that a ~3x multiplier on post karma would be good.

This is the undoing of the multiplier: https://www.lesswrong.com/posts/uyCGvvai9Gco24iR8/the-great-karma-reckoning

I think top-level posts generate much more than 10x the value of the entire comments section combined, based on my impression that most lurkers don’t go deep into the comments. I wonder if giving top-level posts an x^1.5 exponent would get closer to the ideal… That would also disincentivize post series...
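To make the series point concrete: under a karma^1.5 rule, one 100-karma post earns more than two 50-karma posts with the same total karma. The exponent is the commenter’s suggestion and the karma figures are made up for illustration. Clamping negative karma to zero is an added assumption, since a fractional power of a negative number isn’t a real number.

```python
def weighted(karma: float, exponent: float = 1.5) -> float:
    """Tokens under the hypothetical karma**exponent rule (negative karma clamped to 0)."""
    return max(karma, 0.0) ** exponent


one_post = weighted(100)              # 100**1.5, about 1000
series = weighted(50) + weighted(50)  # 2 * 50**1.5, about 707.1
print(f"single post: {one_post:.1f}, two-part series: {series:.1f}")
# prints: single post: 1000.0, two-part series: 707.1
```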

You could instead ask: how much would you be willing to pay for 1 karma’s worth of the-sort-of-value-karma-measures?

Or perhaps: how much would the LW community altogether be willing to pay?

We could estimate the second by dividing the amount of money Scott Alexander makes on substack per month (where do I find this?) by our estimate of the monthly LW karma those articles would generate, if they appeared on LW. (100 per post??)

The value of a post grows faster than its karma. A 200 karma post is more than 2× harder to write and provides more than 2× the value of a 100 karma post. A 100 karma post is more than 2× harder to write and provides more than 2× the value of a 50 karma post.

The LW team has, in the past, offered $500 for quality book reviews with a minimum post karma of about 50.

I could probably be paid to write medium-to-decent quality LW posts pretty cheaply? I think today has given me an affordance for “write things for LW” that I didn’t use to have nearly as strongly.

How much would you be willing to pay someone to write a 100(+) karma post?

...I actually didn’t see this until now. Huh.

I guess this means it’s actually a test we’re supposed to pass. I have a feeling that there’s going to be some confusion over “what game are we really playing”, like with Petrov Day. Oh. It’s to see if we could actually, long-term, reward LW participation with money. Guess I’ll just focus on actually writing posts.

Man, this is the worst time for me to have only skimmed one of those posts.

It’s really interesting seeing the change in attitude toward low-effort asking-for-money posts. Earlier, people upvoted/put up with them; now people are actively punishing bullshit with strong downvotes. This is good for LW implementing monetary incentives in the future; we can punish Goodharters ourselves.

Finding a way for people to make money by posting good ideas is a great idea.

Saying that it should be based on the goodness of the people and how much they care is a terrible idea. Privileging goodness and caring over reason is the most well-trodden path to unreason. This is LessWrong. I go to fimfiction for rainbows and unicorns.

I think that was part of the whole “haha goodhart’s law doesn’t exist, making value is really easy” joke. However, it’s also possible that that’s… actually one of the hard-to-fake things they’re looking for (along with actual competence/intelligence). See PG’s “Mean People Fail” or “Earnestness”. I agree that “just give good money to good people” is a terrible idea, but there’s a steelman of that, which is “along with intelligence, originality, and domain expertise, being a Good Person (whatever that means) and being earnest is a really good trait in EA/LW and the world at large, and so we should try and find people who are Good and Earnest, to whatever extent we can make sure that isn’t Goodharted.”

(I somewhat expect someone at LW to respond to this saying “no, the whole goodness thing was a joke”)

Literally took me until the end of the post to figure out the pun.

For people like me who are really slow on the uptake in things like this, and realize the pun randomly a few hours later while doing something else: the pun is on Goodhart (from Goodhart’s law). (I don’t think much about what words sound like, and I just skimmed over “Good Hearts Laws” as something not particularly interesting, so I guess that’s why I didn’t notice.)

That makes me feel less bad for doing the same...

Can I request a 2-dimensional version of this? What if I want to distinguish between good comments and comments I agree with?

Hearts for good comments (monetary value $1), likes for the comments I agree with (monetary value $0). Because we obviously want to incentivize good content rather than political factions.

The advantage is that this will be backwards compatible with the old karma system, where people upvoted comments they agreed with, and received $0 for such comments.

Good comments should net real dollars, while agreed-with comments net imaginary dollars (that is, a currency which equals the square root of real-dollar debt).

If I get negative karma, do I need to pay LessWrong money?

Think of it as a LessWrong fundraiser.

I’m worried that if I exchange my Good Heart Tokens for sordid monetary profits, this will reduce the goodness of my heart. Do the payments replace the Tokens that earn them, or does my Heart remain Good?

Next April Fools, Lightcone will allow remaining Good Heart Tokens to be exchanged for longer-lifespan; when AGI finally gets around to making us grey goo, each token spent will buy a nanosecond of extra life.

Also, you can’t measure your Heart’s purity, so there’s no point trying to protect that value. Simple utility functions are so much easier to carry out. For guidance, look at the everyday rock; no messy values like “lack of boredom” or “happiness”.

Just exchange the money for covid tests.

This is a really good start, and I look forward to the inevitable improvements in the quality of discourse. But to fully leverage the potential of this exciting new system, I think you should create a futures market so we can bet on (or against) specific individuals writing good posts in future.

Also: from now on, I vow to ignore any and all ideas that aren’t supported by next-level puns.

Brilliant, and a marvelous pun.

I appear to have disappeared from the leaderboard despite my large volumes of high-quality content and justly earned Good Hearts. Is there a display bug of some kind?

Apparently you ran afoul of the Good Hearts police :p.

I’ve updated the donation lottery to reflect the update to the program. Thus I’m canceling this bit of fun since it doesn’t make sense after April Fools Day.

So I’m attempting to run a donation lottery off the new system, which is fun. However, it’d also be nice to end up with the Goodest Heart. I’ve already promised on that post to subsidize the donation lottery up to the $600 level if it turns out there’s actually no money (or I just get less than $600 worth of votes).

However, in the interest of getting the most internet points I can today, if at the end of the Good Heart Token experiment I end up with the Goodest Heart (I’m at the top of the leaderboard), I’ll donate an additional $1000 to the winner of the donation lottery. I’ll make a similar comment over on the donation lottery.

To ensure I actually get this, if someone else makes a competing offer to try to get my votes (for example, promises to donate $1001 to the winner of the donation lottery if they end up with the Goodest Heart, or otherwise makes a bid for votes to have the Goodest Heart) and they do in fact end up with the Goodest Heart, I’ll instead donate $1000 to whatever charity I think the person who usurps me would most dislike to see a donation made to (that is not a charity that is net-negative for the world in my estimation).

May the voting commence!

I’m so glad that comments with pointed nitpicking of world-saving ideas are getting the respect and remuneration they deserve!

That said… I think maybe you’ve never heard of quadratic voting, which is VERY TRENDY lately?

Based on reasoning that I can unpack if you’re not smart enough to steelman my arguments and understand it for yourself, you should consider giving mere OPs the natural log of their upvotes, while giving e^upvotes good hearts for votes on comments.

The deep logic here is based on the insight that the hardest part of writing is figuring out who your audience is, and what they don’t understand, and whether they would welcome what you’re writing and be helped by it.

This is why brutally nitpicking writing by authors from a different social bubble than you is such an important part of a healthy epistemic ecology… if potential authors didn’t have examples of brutal nitpicking here on Lesswrong, they wouldn’t be able to understand and imagine the very high quality of the audience around these parts.

Also, the bad posters wouldn’t be pre-emptively scared away from writing dumb things.

And most importantly, the good posters wouldn’t write such consistently good posts.

We know that GANs are the heart of most important advances in machine learning. Smart people can’t help but notice that comments have that same role here on Lesswrong.

Therefore, today of all days, on the anniversary of this wonderful program, I think it is important (A) to remind people to think very hard, and then (B) to praise people who have the strongest pruning skills and least disinclination to verbosely criticize online acquaintances in public, and then (C) to affirm that the good heart system should be tweaked (again) to make sure that “critics in the comments” get the most money per upvote, since comment upvotes are some of the most causally significant upvotes that can be generated by the discerning, wise, rational, and not-at-all-emotionally-manipulable voters of Lesswrong <3
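Taken literally, the rule proposed in this comment pays a post ln(u) tokens and a comment e^u tokens for u upvotes. The sketch below just evaluates those two formulas. Treating u as a raw upvote count with u ≥ 1 (so the log is defined) is an assumption. At ten upvotes, a post earns about 2.3 tokens and a comment about 22,026.

```python
import math


def post_tokens(upvotes: int) -> float:
    """Proposed payout for a top-level post: ln(upvotes), defined for upvotes >= 1."""
    return math.log(upvotes)


def comment_tokens(upvotes: int) -> float:
    """Proposed payout for a comment: e ** upvotes."""
    return math.exp(upvotes)


for u in (1, 10, 50):
    print(f"{u:>3} upvotes: post {post_tokens(u):.2f}, comment {comment_tokens(u):.3g}")
```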

Note that quadratic voting has been used for all of the annual reviews, starting in 2018.

I assumed that that was the joke.
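The core of quadratic voting, mentioned in this thread, is that casting n votes on a single item costs n² credits, so each additional vote costs more than the one before. Below is a minimal sketch with a hypothetical 100-credit budget. The budget, and any details of how the LessWrong annual review actually implements this, are assumptions and are not taken from the thread.

```python
def qv_cost(votes: int) -> int:
    """Credits needed to cast `votes` votes (positive or negative) on one item."""
    return votes * votes


def affordable(allocation: dict[str, int], budget: int) -> bool:
    """Check whether a voter's allocation across items fits within the budget."""
    return sum(qv_cost(v) for v in allocation.values()) <= budget


BUDGET = 100  # hypothetical credit budget
print(qv_cost(3))                                        # 9 credits for 3 votes
print(affordable({"post A": 9, "post B": -4}, BUDGET))   # 81 + 16 = 97 -> True
print(affordable({"post A": 10, "post B": 1}, BUDGET))   # 100 + 1 = 101 -> False
```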

Given the flood of comments that will inevitably result from this, it might be hard to get noticed and to surface the best ones to the top. So I am offering the following service: If you reply to this I guarantee that I will read your comment, and then will give you one or two upvotes (or none) depending on how insightful I consider it to be. Sadly, this only works if people get to see this comment, so it is in your best interest to upvote it. Let’s turn this into a new, better comment section!

This was very clever, so I strong-upvoted it, but it also feels like it violates good faith, so I then strong-downvoted it.

Agreed, since now many people will probably comment in this thread, I make the same recursive offer:

So please upvote this comment so it stays on top of this comment thread!

I upvoted you (+1), but the point of my comment was to make sure that someone besides the author of a comment reads it so that the score more closely reflects its real value. If you do it recursively then we will have two kinds of comments to consider: replies to my comment and to your, so it will become harder to make comparisons.

I guess I should have said something like “and if you up/downvote a reply in this comment thread, please read and score all of its siblings accordingly”, since if it’s just me upvoting, the problem will persist: I can only give one of 5 possible scores, so they will be just a noisy estimate of the “ideal score”, and older comments will have bigger magnitudes.

And even if that was fixed, it seems people are mostly ignoring what I said and commenting elsewhere. Oh well, at least I tried.

When I first wrote the comment, it seemed like the best possible strategy according to what I care about. It goodharts the LW karma system so that it loses correlation with intellectual progress or whatever else it is supposed to reflect. And yet it isn’t a pure “I will unfairly upvote you and you unfairly upvote me” like aphyer’s and Tofly’s comments, nor is it nonsense or bot-like behaviour, like what rank-biseral and maybe goodestheart are doing. Now I’m not so sure.

To the Lightcone team, if you want to make sure that scores actually reflect the quality of comments and that users are shown the best possible ones, you should probably implement a multi-armed bandit algorithm as the default sorting method, divide the scores by the number of impressions and then multiply by some constant (10 times the standard deviation?) so that they aren’t tiny. For comments with very few votes you could use some ML model as a prior or show the uncertainty to the user.

Oh, and another bad-faith strategy consists of writing many OK comments instead of just a few good ones; the Good Heart Tokens a user receives aren’t actually proportional to the total value added. Please keep the score of this one at 1.
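The bandit suggestion above can be sketched roughly as follows. This uses UCB1, one standard multi-armed bandit rule that the comment didn’t specify. The `Comment` fields, treating “fraction of viewers who upvoted” as the reward, and the exploration constant are assumptions. Each comment’s score is its upvotes divided by its impressions, plus an exploration bonus that shrinks as the comment is shown more often. Comments that have never been shown are ranked first.

```python
import math
from dataclasses import dataclass


@dataclass
class Comment:
    name: str
    upvotes: int      # assumed: number of viewers who upvoted
    impressions: int  # assumed: number of times the comment was shown


def ucb1_score(c: Comment, total_impressions: int, explore: float = math.sqrt(2)) -> float:
    """Upvotes per impression plus the UCB1 bonus sqrt(2 ln N / n)."""
    if c.impressions == 0:
        return math.inf  # never shown: rank first so it gets some exposure
    mean = c.upvotes / c.impressions
    bonus = explore * math.sqrt(math.log(total_impressions) / c.impressions)
    return mean + bonus


def sort_comments(comments: list[Comment]) -> list[Comment]:
    """Order comments by descending UCB1 score."""
    total = max(sum(c.impressions for c in comments), 1)
    return sorted(comments, key=lambda c: ucb1_score(c, total), reverse=True)


comments = [Comment("old", 40, 400), Comment("new", 3, 10), Comment("unseen", 0, 0)]
print([c.name for c in sort_comments(comments)])  # ['unseen', 'new', 'old']
```

Multiplying every score by a constant, as the comment suggests for display purposes, wouldn’t change this ordering.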

There is an inconsistency in the formatting of (simulated) user feedback. Some are formatted as Username: “XXX”, e.g.

while others are formatted as Username said “XXX”, e.g.

you saw nothing

4 hours ago, I thought there was a 0% chance money would be paid out. But 3 hours ago, a discussion made me think there was a chance they would do a payout for today, and then retract the whole thing tomorrow. That made me 20% certain that money would be paid out. So between those hours, there was a linear increase in the chance of money being paid out. And as other trends are possible, but not probable, then the prior should be that the trend continues, right? So I expect this to arrive at 100% chance several hours before payout time.

Though I’m curious whether the community agrees:

https://manifold.markets/LeonardoTaglialegne/will-lesswrong-actually-pay-out-the
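The trend extrapolation in the comment above can be written out explicitly. Two points, 0% four hours ago and 20% three hours ago, give a slope of 20 percentage points per hour. Extending that straight line reaches 100% one hour after the comment was written. A minimal sketch:

```python
def fit_line(t0: float, p0: float, t1: float, p1: float) -> tuple[float, float]:
    """Slope and intercept of the straight line through (t0, p0) and (t1, p1)."""
    slope = (p1 - p0) / (t1 - t0)
    return slope, p0 - slope * t0


# Times are hours relative to when the comment was written (negative = in the past).
slope, intercept = fit_line(-4.0, 0.0, -3.0, 0.2)
hours_to_certainty = (1.0 - intercept) / slope
print(f"slope: {slope:.2f}/h, 100% reached {hours_to_certainty:.1f} h from now")
```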

This part makes me think they’ll actually pay out;

Especially the part where it’s 600 and not 500. That sounds like the kind of number that came out of people actually deciding how much they wanted to pay out.

It’s the kind of number caused by government regulation.

Not taking extrapolation far enough!

4 hours ago, your expected value of a point was $0. In an hour, it increased to $0.2, implying a ~20% chance it pays $1 (plus some other possibilities). By midnight, extrapolated expected value will be $4.19, implying a ~100% chance to pay $1, plus ~45% chance that they’ll make good on the suggestion of paying $10 instead, plus some other possibilities...

Wait, did people actually subscribe to the LessWrong Substack?

I’ve been working on setting up a TED talk at my high school, and since the beginning have been planning on asking for speakers through a post here. However, the day that we finally finished the website, and I can finally post here about it, is… when we’re doing this whole GoodHeart thing. Not sure whether I should publish it today or tomorrow. (Pros: money. Cons: possibly fewer views because of everything else posted today.) What do you all think?

Pro: There’s not just more posts on LW than usual. There’s also way more eyeballs on LW than usual.

Con: Readers might think your TED talk is an April Fool’s Day prank.

The link to “a dire spot” links to this post itself.

I think the strongest incentive is not the financial payout, but the desire to be on the leaderboard.

Oh no! Our Dark Conspiracy is being foiled!

If you’re confused by aphyer’s comment, do a Ctrl-F for other comments on this thread that involve aphyer.

Sorry in advance for an entirely too serious comment on a lighthearted post; it made me have thoughts I thought worth sharing. The whole “Karma convertibility” system is funny, but the irony feels slightly off. Society (vague term alert!) does in fact reward popular content with money. Goodhart’s law is not “monetizing an economy instantly crashes it”. My objections to Karma convertibility are:

- Exploitability. Put enough people on a system, and some of them will try to break it. Put even more people on it, and they will likely succeed.

- Inefficiency. There is a big upfront cost to generating good ideas, i.e., you’ve got to invest in putting interesting things inside your mind before you can come up with your own. Specialization pays off just as much in intellectual labor as in other areas.

- Pareto’s law. A small, selected group can be a consistent performance outlier.

- Marginal returns on money. The marginal value of money goes down dramatically once you get above the subsistence threshold.

I think that providing a salaried position to a small number of intrinsically motivated individuals is a much more efficient way of buying ideas. I think RAND is basically structured this way?

Epistemic status: Don’t quote me on any of this. I’ve done no research; instead, I’m pattern-matching from stuff that I’ve passively absorbed.

First of all, happy April Fools!! Second, taking this seriously (🙃), I predict (contingent on this not disappearing tomorrow) that this will lead to far more posts and comments than we’ve previously seen. Will those posts and comments be higher quality? …probably not, but it will look great for SEO! Overall, I’d say that the likelihood that I’ve written this entire comment just to receive some sweet sweet good heart tokens is…~1?

Heartbreaking CDT. I’ve got a Transparent Newcomb’s I’d like to sell you

Is this an April fools joke?

Do you think the Lesswrong team would lie to you‽

So, what are the actual biggest problems you’ve noticed with the LW karma system?

This is brilliant! Way better than the points we used at Camp Bell long ago.

I don’t think this experiment could prove anything other than “it doesn’t work”. It’s too gameable. Even if it works in the short term, that’s only true for the current population. You’d change the people who join the community in the long run towards people who are willing to game the system.

I genuinely can’t tell how much of this is an April Fools’ joke. If all of it, it’s gone on too long now.

It’s not a joke (though it is a pun); it’s a playful experiment with real money.

Well, it’s kind of a joke that it was ambiguous whether it was a joke or an experiment. Now it’s more clear it’s more like an experiment, but there was a substantial joke component to it.

Did anyone with >25 Good Heart Tokens get paid out at midnight last night? I’m still unsure whether this was just an April Fool’s joke or not. https://manifold.markets/WilliamKiely/will-lesswrong-pay-users-1-for-each

See here.

The ‘midnight’ thing was apparently reconsidered and removed soon after it was posted, but not before some people saw it. Currently it looks like they will get paid out, but in around a week.

Edited to add: found the comment about the payout time, see here.

I swear this post said payouts would go out at 11:59pm PST earlier, but that seems to be removed and I haven’t received my money yet. What gives?

oh new post

It did say that earlier; this was my mistake. I asked an editor I know to help polish the post at 1:30am, they added the line, and I didn’t catch it. That line was committing me to a lot of work on a daily basis that I am not planning to do, and I removed the line when I saw it midday.

Payouts will happen in one lump sum sometime after Good Heart Week is over.

What’s up with the leaderboard? Did you make a downvote worth $5 or so, just for fun? Or what?

Based on the pattern of voting I’ve noticed over the past few hours, I suspect one or more people are engaged in aggressive downvoting of others. The natural people to do this would be those who want to rise to the top of the leaderboard. Since all votes are anonymous there’s no good way to figure out who might be doing that.

The obvious thing to do would be to coordinate against them, but if we can’t identify them then we can’t do that. Seems like a possible failure of the voting system created by attaching more than internet points to them. Luckily this is all just for fun! :-)

I sent out a call for people to help downvote blatant exploitation, while upvoting good-faith engagement.

Well, on the leaderboard (that I see), aphyer is at the top with $557, and when you click on the user and look at the votes, he received almost only downvotes. John Wentworth also received a lot of downvotes. Thus my hypothesis that a downvote is somehow worth something like $5 or so. If that is so, your call might have backfired. xD

(Though it could also be a hack or so.)

It looks like they were probably upvoting each other’s shared drafts.

Ah makes sense

Created a market on Manifold to see if either today’s GoodHeart system will last past today, or else if LW will try financial rewards for posting in 2022.

If you’re counting the book review, are you also counting the annual review? [We don’t commit to running it each year, but Laplace’s Rule of Succession says the odds of doing it in 2022 are pretty high.]

...I forgot about the annual review. I think I’ll just say that doesn’t count, and also commit to no more changes of the conditions.

EDIT: actually, just going to kill the market.

Any monetisation could go to the funds of one or a couple of agreed-upon just causes. This, as opposed to individual acquisition of Good Heart Tokens, seems Goodest to me :)

test post

Haha, I wish I thought of that idea. I know it’s a joke, but still...

I guess I’m glad I didn’t think of this idea.

Considering you get at least one free upvote from posting/commenting itself, you just have to be faster than the downvoters to generate money :P

I will strongly upvote all replies to this comment which state that they strongly upvoted this comment.

Yes, it is easy for you to defect here, or undo your strong upvote after commenting. However, I modeled the typical lesswrong user before making this comment, and would not have made it if they were likely to defect.

I strongly upvoted the comment above.

(Then I retracted my upvote.)

I strongly upvoted the comment above.

(Then I retracted my upvote.)

For the avoidance of doubt, that is the response I was expecting. :-)

This is a good lesson about game theory. Strong upvote.

Strongly upvoted, but I recommend you not bother with voting on this comment, and instead spend your votes helping to fuel the Good Heart donation lottery. Let’s take LW for all it’s got and donate it to a good cause!

If anyone is up for a reciprocal trade, where I upvote all your posts in exchange for you upvoting all of mine, please let me know below!

Most of the child comments have 5-7 votes and near-zero net karma/hearts. This suggests that non-participants are coordinating to keep the totals near zero. I’d love to participate, but I don’t expect it to be worthwhile.

Maybe users who police bad comments/articles (like that one) should be rewarded for downvoting them by earning the $1 saved, thus maintaining financial equilibrium.

Strong-downvoted, earned $6 by doing so.

(I did not actually do this)

Then who decides whether the comment is actually bad?

I think we are now properly defeated. The fundamental problem is that there are only two of us mutually benefitting, so any group with more Karma than one of us can shut down the party.

While I would prefer for the forces of Social Coordination to win here, I would prefer they win against a real fight. Giving up so easily? Did you think for even five minutes? Shut up and do the impossible!!

I’ll note that the leaderboard doesn’t seem to notice the downvotes and so is still showing you and aphyer in the lead. This is likely a bug but at least you’re getting to have the goodest of hearts for a while for your effort. :-)

Oh, it definitely noticed the downvotes. We got about 100 karma each out of this thread, and then we lost it all and then some once people came along and started downvoting. We were both off the leaderboard entirely for a while after that.

But what’s happening now?!? How is Aphyer suddenly at over $500 when most of their comments are now negative, and the positive ones not very high?

How?????

My guess would be that they are upvoting some kind of post/comment which only shows up for those it is shared with. Some sites do allow these, though I’m not sure how to do this on lesswrong. I’ve already strong downvoted all of their public comments containing no value, so I guess they’ve won this time :(

EDIT: Looking around the UI I see that you can share drafts with people, maybe they’re upvoting each other’s drafts? (If that’s even possible)

Don’t give up yet!!!

We can probably get the LW team to stop counting whatever they’re pulling.

We can recruit more people to try and balance things out.

EDIT: It appears something is happening… the leaderboards are shifting again...

Unfortunately, I don’t have the capacity to deal with this problem right now however serious, because I have much more pressing issues in the form of r/place.

Oh yeah, I started getting downvotes and now I see I’ve fallen quite a bit. I just noticed you two rose up quite high and I wondered what happened there!

We turned to the Light Side and gained a ton of karma by writing high-quality posts and comments, which were legitimately upvoted.

If so, then where are they???

I’ve been looking...

Additional additional comments

Additional additional addition, which is additive.

Multiplicative comments?

I suppose if one were to put two comments in response to each comment… but that would become rate-limited very quickly...

Would it really?

Would it really?

Upvoting posts will not work unless they’re new today; we need to create new comments.

I did not know that, thank you! I think this Legitimately Very Good Comment is one that Legitimately Deserves a strong upvote!

Indeed, an excellent point!

As does this one!

Yeah, that synergizes really well with your previous point.

Indeed! This is a high value conversation providing lots of positive sum value to all readers!

So many comments, must parallelize

Continuing to comment!

An excellent point, good sir.

You likewise, my good fellow!

(I feel like, as the founding member of the Dark Conspiracy and the one whose parent comment is exposed to social enforcement, I should be getting more of my comments upvoted)

Eh, we’ll hit the cap pretty fast regardless.

Wait, what cap?

Oh no! Our Dark Conspiracy is being foiled!

We must find some way to escape these limits...

Perhaps by building some kind of artificial intelligence capable of faster than human clicking?

That would probably get you banned on perfectly normal LW moderation type grounds?

Sssssh! Don’t tell them!

Comment all of the things!

Yet more comments!

More comments!

Comments! There is a 5 second delay enforced between comments! I never knew that before!

Yes comments yay!

And more! Now that the parent is downvoted, no one will see them except us Conspirators! Hahahahaha!

Recent Discussion doesn’t hide children of hidden comments. I’m not sure whether this is a bug or a feature.

Oh no! They’re onto us! Comment faster!!

That’s a great comment!

Sure. I’ll strong upvote the first 20 or so on your page.

From the post:

Upvoting comments/posts that were made before today doesn’t get you any tokens.

Oh. Oops.

Just delete all old content and repost it again.

It’s done. They’re all strong upvoted. I keep hearing this voice, though:

Acknowledged, reciprocated. If anyone else wants to join our Exploitative Karma Circle, let us know!

And I kinda hope whoever decided to do this for April Fool’s Day has a plan for reverting karma changes at the end of the day, otherwise this may cause some longer-term screwups.

Yeah; my participation in this scheme was predicated on “Lightcone is occupied by smart people who 100% knew that this would be a result of today’s April Fool’s Day; therefore, playing along with the game is okay. Also, they can afford whatever comes as a consequence of today’s thing, provided nobody actually writes a bot (something I very briefly considered before deciding it’d be too much).”

Also, I guess it’s worth enabling karma change notifications for today only. Trust, but verify. Edit: looking at all the karma change as a result of this already, I really hope they plan to revert it.