Fun with +12 OOMs of Compute

Or: Big Timelines Crux Operationalized

What fun things could one build with +12 orders of magnitude of compute? By ‘fun’ I mean ‘powerful.’ This hypothetical is highly relevant to AI timelines, for reasons I’ll explain later.

Summary (Spoilers):

I describe a hypothetical scenario that concretizes the question “what could be built with 2020’s algorithms/ideas/etc. but a trillion times more compute?” Then I give some answers to that question. Then I ask: How likely is it that some sort of TAI would happen in this scenario? This second question is a useful operationalization of the (IMO) most important, most-commonly-discussed timelines crux: “Can we get TAI just by throwing more compute at the problem?” I consider this operationalization to be the main contribution of this post; it directly plugs into Ajeya’s timelines model and is quantitatively more cruxy than anything else I know of. The secondary contribution of this post is my set of answers to the first question: They serve as intuition pumps for my answer to the second, which strongly supports my views on timelines.

The hypothetical

In 2016 the Compute Fairy visits Earth and bestows a blessing: Computers are magically 12 orders of magnitude faster! Over the next five years, what happens? The Deep Learning AI Boom still happens, only much crazier: Instead of making AlphaStar for 10^23 floating point operations, DeepMind makes something for 10^35. Instead of making GPT-3 for 10^23 FLOPs, OpenAI makes something for 10^35. Instead of industry and academia making a cornucopia of things for 10^20 FLOPs or so, they make a cornucopia of things for 10^32 FLOPs or so. When random grad students and hackers spin up neural nets on their laptops, they have a trillion times more compute to work with. [EDIT: Also assume magic +12 OOMs of memory, bandwidth, etc. All the ingredients of compute.]

For context on how big a deal +12 OOMs is, consider the graph below, from ARK. It’s measuring petaflop-days, which are about 10^20 FLOP each. So 10^35 FLOP is 1e+15 on this graph. GPT-3 and AlphaStar are not on this graph, but if they were they would be in the very top-right corner.
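The unit conversion above is easy to check. Here is a minimal Python sketch, assuming a petaflop-day means a machine sustaining 10^15 FLOP per second for 24 hours; the helper name `to_petaflop_days` is made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60      # 86,400 seconds
PETAFLOP_PER_SECOND = 1e15          # assumption: 1 petaFLOP/s = 1e15 FLOP per second

# One petaflop-day: a petaFLOP/s machine running for a full day (about 1e20 FLOP).
FLOP_PER_PETAFLOP_DAY = PETAFLOP_PER_SECOND * SECONDS_PER_DAY


def to_petaflop_days(flop: float) -> float:
    """Convert a raw FLOP count into petaflop-days (hypothetical helper)."""
    return flop / FLOP_PER_PETAFLOP_DAY


print(f"{FLOP_PER_PETAFLOP_DAY:.2e} FLOP per petaflop-day")
print(f"{to_petaflop_days(1e35):.2e} petaflop-days for the 1e35 FLOP budget")
print(f"{to_petaflop_days(1e23):.2e} petaflop-days for a 1e23 FLOP training run")
```

The 10^35 budget comes out a little above 10^15 petaflop-days, which is where the 1e+15 figure comes from.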

Question One: In this hypothetical, what sorts of things could AI projects build?

I encourage you to stop reading, set a five-minute timer, and think about fun things that could be built in this scenario. I’d love it if you wrote up your answers in the comments!

My tentative answers:

Below are my answers, listed in rough order of how ‘fun’ they seem to me. I’m not an AI scientist so I expect my answers to overestimate what could be done in some ways, and underestimate in other ways. Imagine that each entry is the best version of itself, since it is built by experts (who have experience with smaller-scale versions) rather than by me.

OmegaStar:

In our timeline, it cost about 10^23 FLOP to train AlphaStar. (OpenAI Five, which is in some ways more impressive, took less!) Let’s make OmegaStar like AlphaStar only +7 OOMs bigger: the size of a human brain.[1] [EDIT: You may be surprised to learn, as I was, that AlphaStar has about 10% as many parameters as a honeybee has synapses! Playing against it is like playing against a tiny game-playing insect.]

Larger models seem to take less data to reach the same level of performance, so it would probably take at most 10^30 FLOP to reach the same level of Starcraft performance as AlphaStar, and indeed we should expect it to be qualitatively better.[2] So let’s do that, but also train it on lots of other games.[3] There are 30,000 games in the Steam Library. We train OmegaStar long enough that it has as much time on each game as AlphaStar had on Starcraft. With a brain so big, maybe it’ll start to do some transfer learning, acquiring generalizable skills that work across many of the games instead of learning a separate policy for each game.

OK, that uses up 10^34 FLOP—a mere 10% of our budget. With the remainder, let’s add some more stuff to its training regime. For example, maybe we also make it read the entire internet and play the “Predict the next word you are about to read!” game. Also the “Predict the covered-up word” and “predict the covered-up piece of an image” and “predict later bits of the video” games.

OK, that probably still wouldn’t be enough to use up our compute budget. A Transformer that was the size of the human brain would only need 10^30 FLOP to get to human level at the predict-the-next-word game according to Gwern, and while OmegaStar isn’t a transformer, we have 10^34 FLOP available.[4] (What a curious coincidence, that human-level performance is reached right when the AI is human-brain-sized! Not according to Shorty.)

Let’s also hook up OmegaStar to an online chatbot interface, so that billions of people can talk to it and play games with it. We can have it play the game “Maximize user engagement!”

...we probably still haven’t used up our whole budget, but I’m out of ideas for now.

Amp(GPT-7):

Let’s start by training GPT-7, a transformer with 10^17 parameters and 10^17 data points, on the entire world’s library of video, audio, and text. This is almost 6 OOMs more params and almost 6 OOMs more training time than GPT-3. Note that a mere +4 OOMs of params and training time is predicted to reach near-optimal performance at text prediction and all the tasks thrown at GPT-3 in the original paper; so this GPT-7 would be superhuman at all those things, and also at the analogous video and audio and mixed-modality tasks.[5] Quantitatively, the gap between GPT-7 and GPT-3 is about twice as large as the gap between GPT-3 and GPT-1, (about 25% the loss GPT-3 had, which was about 50% the loss GPT-1 had) so try to imagine a qualitative improvement twice as big also. And that’s not to mention the possible benefits of multimodal data representations.[6]

We aren’t finished! This only uses up 10^34 of our compute. Next, we let the public use prompt programming to make a giant library of GPT-7 functions, like the stuff demoed here and like the stuff being built here, only much better because it’s GPT-7 instead of GPT-3. Some examples:

- Decompose a vague question into concrete subquestions

- Generate a plan to achieve a goal given a context

- Given a list of options, pick the one that seems most plausible / likely to work / likely to be the sort of thing Jesus would say / [insert your own evaluation criteria here]

- Given some text, give a score from 0 to 10 for how accurate / offensive / likely-to-be-written-by-a-dissident / [insert your own evaluation criteria here] the text is.

And of course the library also contains functions like “google search” and “Given webpage, click on X” (remember, GPT-7 is multimodal, it can input and output video, parsing webpages is easy). It also has functions like “Spin off a new version of GPT-7 and fine-tune it on the following data.” Then we fine-tune GPT-7 on the library so that it knows how to use those functions, and even write new ones. (Even GPT-3 can do basic programming, remember. GPT-7 is much better.)

We still aren’t finished! Next, we embed GPT-7 in an amplification scheme — a “chinese-room bureaucracy” of calls to GPT-7. The basic idea is to have functions that break down tasks into sub-tasks, functions that do those sub-tasks, and functions that combine the results of the sub-tasks into a result for the task. For example, a fact-checking function might start by dividing up the text into paragraphs, and then extract factual claims from each paragraph, and then generate google queries designed to fact-check each claim, and then compare the search results with the claim to see whether it is contradicted or confirmed, etc. And an article-writing function might call the fact-checking function as one of the intermediary steps. By combining more and more functions into larger and larger bureaucracies, more and more sophisticated behaviors can be achieved. And by fine-tuning GPT-7 on examples of this sort of thing, we can get it to understand how it works, so that we can write GPT-7 functions in which GPT-7 chooses which other functions to call. Heck, we could even have GPT-7 try writing its own functions! [7]
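To make the decompose / solve / combine pattern concrete, here is a toy Python sketch. Everything in it is hypothetical: `call_model` is a canned stand-in for a real call to the language model, and the instruction strings and the "water is wet" rule are invented purely so the example runs on its own.

```python
from typing import Dict, List


def call_model(instruction: str, text: str) -> List[str]:
    """Hypothetical stand-in for one model call; a real system would prompt the model."""
    if instruction == "split into paragraphs":
        return [p.strip() for p in text.split("\n\n") if p.strip()]
    if instruction == "extract factual claims":
        return [s.strip() for s in text.split(".") if s.strip()]
    if instruction == "check claim":
        # Toy rule standing in for "search, then compare the results with the claim".
        return ["confirmed" if "water is wet" in text.lower() else "unverified"]
    raise ValueError(f"unknown instruction: {instruction}")


def fact_check(text: str) -> Dict[str, str]:
    """Break the task into sub-tasks, solve each one, and combine the results."""
    verdicts: Dict[str, str] = {}
    for paragraph in call_model("split into paragraphs", text):        # decompose
        for claim in call_model("extract factual claims", paragraph):  # decompose further
            verdicts[claim] = call_model("check claim", claim)[0]      # solve sub-task
    return verdicts                                                    # combined result


def write_article(draft: str) -> str:
    """A larger function that uses fact_check as an intermediate step."""
    flagged = [c for c, v in fact_check(draft).items() if v != "confirmed"]
    return f"{draft}\n\n[{len(flagged)} claim(s) need a source]"


print(fact_check("Water is wet. The moon is cheese.\n\nWater is wet in winter."))
```

The point of the sketch is only the shape: small functions built from model calls, composed into bigger functions, with `write_article` treating `fact_check` as just another callable.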

The ultimate chinese-room bureaucracy would be an agent in its own right, running a continual OODA loop of taking in new data, distilling it into notes-to-future-self and new-data-to-fine-tune-on, making plans and sub-plans, and executing them. Perhaps it has a text file describing its goal/values that it passes along as a note-to-self — a “bureaucracy mission statement.”

Are we done yet? No! Since it “only” has 10^17 parameters, and uses about six FLOP per parameter per token, we have almost 18 orders of magnitude of compute left to work with.[8] So let’s give our GPT-7 uber-bureaucracy an internet connection and run it for 100,000,000 function-calls (if we think of each call as a subjective second, that’s about 3 subjective years). Actually, let’s generate 50,000 different uber-bureaucracies and run them all for that long. And then let’s evaluate their performance and reproduce the ones that did best, and repeat. We could do 50,000 generations of this sort of artificial evolution, for a total of about 10^35 FLOP.[9]
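The evolution budget can be sanity-checked in a few lines. This is a rough sketch using the figures from the paragraph above, with the added assumption (mine, for illustration) that each function-call costs about as much as processing one token.

```python
PARAMS = 1e17                  # GPT-7 parameter count, from the text
FLOP_PER_PARAM_PER_CALL = 6    # "about six FLOP per parameter per token"
CALLS_PER_RUN = 100_000_000    # function-calls per uber-bureaucracy
POPULATION = 50_000            # bureaucracies per generation
GENERATIONS = 50_000
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Assumption: one function-call is treated as one token's worth of compute.
flop_per_call = PARAMS * FLOP_PER_PARAM_PER_CALL

# If each call is one subjective second, how long does one run feel?
subjective_years = CALLS_PER_RUN / SECONDS_PER_YEAR

total_flop = flop_per_call * CALLS_PER_RUN * POPULATION * GENERATIONS

print(f"{subjective_years:.2f} subjective years per run")
print(f"{total_flop:.2e} FLOP for the whole evolutionary search")
```

Under these assumptions the total lands within a small factor of 10^35, matching the "about 10^35 FLOP" figure.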

Note that we could do all this amplification-and-evolution stuff with OmegaStar in place of GPT-7.

Crystal Nights:

(The name comes from an excellent short story.)

Maybe we think we are missing something fundamental, some unknown unknown, some special sauce that is necessary for true intelligence that humans have and our current artificial neural net designs won’t have even if scaled up +12 OOMs. OK, so let’s search for it. We set out to recapitulate evolution.

We make a planet-sized virtual world with detailed and realistic physics and graphics. OK, not perfectly realistic, but much better than any video game currently on the market! Then, we seed it with a bunch of primitive life-forms, with a massive variety of initial mental and physical architectures. Perhaps they have a sort of virtual genome, a library of code used to construct their bodies and minds, with modular pieces that get exchanged via sexual reproduction (for those who are into that sort of thing). Then we let it run, for a billion in-game years if necessary!

Alas, Ajeya estimates it would take about 10^41 FLOP to do this, whereas we only have 10^35.[10] So we probably need to be a million times more compute-efficient than evolution. But maybe that’s doable. Evolution is pretty dumb, after all.

Instead of starting from scratch, we can start off with “advanced” creatures, e.g. sexually-reproducing large-brained land creatures. It’s unclear how much this would save but plausibly could be at least one or two orders of magnitude, since Ajeya’s estimate assumes the average creature has a brain about the size of a nematode worm’s brain.[11]

We can grant “magic traits” to the species that encourage intelligence and culture; for example, perhaps they can respawn a number of times after dying, or transfer bits of their trained-neural-net brains to their offspring. At the very least, we should make it metabolically cheap to have big brains; no birth-canal or skull should restrict the number of neurons a species can have! Also maybe it should be easy for species to have neurons that don’t get cancer or break randomly.

We can force things that are bad for the individual but good for the species, e.g. identify that the antler size arms race is silly and nip it in the bud before it gets going. In general, more experimentation/higher mutation rate is probably better for the species than for the individual, and so we could speed up evolution by increasing the mutation rate. We can also identify when a species is trapped in a local optimum and take action to get the ball rolling again, whereas evolution would just wait until some climatic event or something shakes things up.

We can optimise for intelligence instead of ability to reproduce, by crafting environments in which intelligence is much more useful than it was at any time in Earth’s history. (For example, the environment can be littered with monoliths that dispense food upon completion of various reasoning puzzles. Perhaps some of these monoliths can teach English too, that’ll probably come in handy later!) Think about how much faster dog breeding is compared to wolves evolving in the wild. Breeding for intelligence should be correspondingly faster than waiting for it to evolve.

There are probably additional things I haven’t thought of that would totally be thought of, if we had a team of experts building this evolutionary simulation with 2020’s knowledge. I’m a philosopher, not an evolutionary biologist!

Skunkworks:

What about STEM AI? Let’s do some STEM. You may have seen this now-classic image:

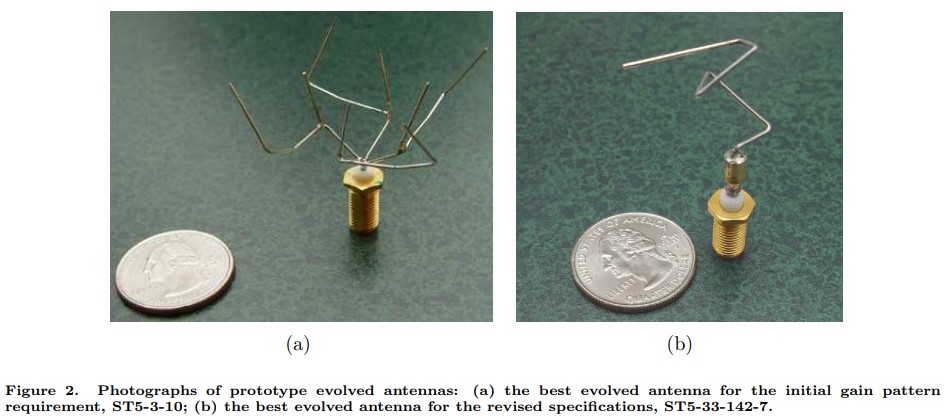

These antennas were designed by an evolutionary search algorithm. Generate a design, simulate it to evaluate predicted performance, tweak & repeat. They flew on a NASA spacecraft fifteen years ago, and were massively more efficient and high-performing than the contractor-designed antennas they replaced. Took less human effort to make, too.[12]
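The generate / simulate / tweak / repeat loop is simple enough to sketch. Below is a toy elitist evolutionary search in Python; it is not the algorithm used for the antennas. The `simulate` function is a made-up stand-in for a physics simulation, and the population size, mutation scale, and target value of 0.5 are all arbitrary assumptions.

```python
import random
from typing import List, Tuple


def simulate(design: List[float]) -> float:
    """Toy stand-in for a simulator: higher is better, best when every value is 0.5."""
    return -sum((x - 0.5) ** 2 for x in design)


def tweak(design: List[float], rng: random.Random, scale: float = 0.05) -> List[float]:
    """Return a randomly perturbed copy of a design."""
    return [x + rng.gauss(0.0, scale) for x in design]


def evolve(n_params: int = 4, population: int = 50,
           generations: int = 100, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    best = [rng.random() for _ in range(n_params)]                   # generate a design
    best_score = simulate(best)                                      # simulate it
    for _ in range(generations):                                     # repeat
        candidates = [tweak(best, rng) for _ in range(population)]   # tweak
        challenger = max(candidates, key=simulate)
        challenger_score = simulate(challenger)
        if challenger_score > best_score:                            # keep only improvements
            best, best_score = challenger, challenger_score
    return best, best_score


design, score = evolve()
print([round(x, 3) for x in design], score)
```

With more compute, the same loop can afford a more expensive `simulate`, a bigger population, and more generations, which is the scaling knob the next paragraph turns.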

This sort of thing gets a lot more powerful with +12 OOMs. Engineers often use simulations to test designs more cheaply than by building an actual prototype. SpaceX, for example, did this for their Raptor rocket engine. Now imagine that their simulations are significantly more detailed, spending 1,000,000x more compute, and also that they have an evolutionary search component that auto-generates 1,000 variations of each design and iterates for 1,000 generations to find the optimal version of each design for the problem (or even invents new designs from scratch.) And perhaps all of this automated design and tweaking (and even the in-simulation testing) is done more intelligently by a copy of OmegaStar trained on this “game.”

Why would this be a big deal? I’m not sure it would be. But take a look at this list of strategically relevant technologies and events and think about whether Skunkworks being widely available would quickly lead to some of them. For example, given how successful AlphaFold 2 has been, maybe Skunkworks could be useful for designing nanomachines. It could certainly make it a lot easier for various minor nations and non-state entities to build weapons of mass destruction, perhaps resulting in a vulnerable world.

Neuromorph:

According to page 69 of this report, the Hodgkin-Huxley model of the neuron is the most detailed and realistic (and therefore the most computationally expensive) as of 2008. [EDIT: Joe Carlsmith, author of a more recent report, tells me there are more detailed+realistic models available now] It costs 1,200,000 FLOP per second per neuron to run. So a human brain (along with relevant parts of the body, in a realistic-physics virtual environment, etc.) could be simulated for about 10^17 FLOP per second.

Now, presumably (a) we don’t have good enough brain scanners as of 2020 to actually reconstruct any particular person’s brain, and (b) even if we did, the Hodgkin-Huxley model might not be detailed enough to fully capture that person’s personality and cognition.[13]

But maybe we can do something ‘fun’ nonetheless: We scan someone’s brain and then create a simulated brain that looks like the scan as much as possible, and then fills in the details in a random but biologically plausible way. Then we run the simulated brain and see what happens. Probably gibberish, but we run it for a simulated year to see whether it gets its act together and learns any interesting behaviors. After all, human children start off with randomly connected neurons too, but they learn.[14]

All of this costs a mere 10^25 FLOP. So we do it repeatedly, using stochastic gradient descent to search through the space of possible variations on this basic setup, tweaking parameters of the simulation, the dynamical rules used to evolve neurons, the initial conditions, etc. We can do 100,000 generations of 100,000 brains-running-for-a-year this way. Maybe we’ll eventually find something intelligent, even if it lacks the memories and personality of the original scanned human.
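Here is a quick check of the Neuromorph arithmetic, as a Python sketch. The per-neuron cost is the figure quoted above; the neuron count of roughly 86 billion is an assumption I am adding, and the 10^25 figure is treated as a rounded-up cost that also covers the body and environment.

```python
FLOP_PER_SEC_PER_NEURON = 1.2e6   # Hodgkin-Huxley cost quoted in the text
NEURONS = 8.6e10                  # assumption: roughly 86 billion neurons in a human brain
SECONDS_PER_YEAR = 365.25 * 24 * 3600

brain_flop_per_sec = FLOP_PER_SEC_PER_NEURON * NEURONS       # about 1e17 FLOP per second
brain_year_flop = brain_flop_per_sec * SECONDS_PER_YEAR      # a few times 1e24 FLOP

ROUNDED_BRAIN_YEAR_FLOP = 1e25    # the text's rounded cost of one simulated year
GENERATIONS = 100_000
BRAINS_PER_GENERATION = 100_000
search_flop = GENERATIONS * BRAINS_PER_GENERATION * ROUNDED_BRAIN_YEAR_FLOP

print(f"{brain_flop_per_sec:.2e} FLOP/s to run one brain")
print(f"{brain_year_flop:.2e} FLOP for one simulated year")
print(f"{search_flop:.2e} FLOP for the full search")
```

So the search over 100,000 generations of 100,000 brain-years uses essentially the whole 10^35 budget.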

Question Two: In this hypothetical, what’s the probability that TAI appears by end of 2020?

The first question was my way of operationalizing “what could be built with 2020’s algorithms/ideas/etc. but a trillion times more compute?”

This second question is my way of operationalizing “what’s the probability that the amount of computation it would take to train a transformative model using 2020’s algorithms/ideas/etc. is 10^35 FLOP or less?”

(Please ignore thoughts like “But maybe all this extra compute will make people take AI safety more seriously” and “But they wouldn’t have incentives to develop modern parallelization algorithms if they had computers so fast” and “but maybe the presence of the Compute Fairy will make them believe the simulation hypothesis?” since they run counter to the spirit of the thought experiment.)

Remember, the definition of Transformative AI is “AI that precipitates a transition comparable to (or more significant than) the agricultural or industrial revolution.”

Did you read those answers to Question One, visualize them and other similarly crazy things that would be going on in this hypothetical scenario, and think “Eh, IDK if that would be enough, I’m 50-50 on this. Seems plausible TAI will be achieved in this scenario but seems equally plausible it wouldn’t be”?

No! … Well, maybe you do, but speaking for myself, I don’t have that reaction.

When I visualize this scenario, I’m like “Holy shit, all five of these distinct research programs seem like they would probably produce something transformative within five years and perhaps even immediately, and there are probably more research programs I haven’t thought of!”

My answer is 90%. The reason it isn’t higher is that I’m trying to be epistemically humble and cautious, account for unknown unknowns, defer to the judgment of others, etc. If I just went with my inside view, the number would be 99%. This is because I can’t articulate any not-totally-implausible possibility in which OmegaStar, Amp(GPT-7), Crystal Nights, Skunkworks, and Neuromorph and more don’t lead to transformative AI within five years. All I can think of is things like “Maybe transformative AI requires some super-special mental structure which can only be found by massive blind search, so massive that the Crystal Nights program can’t find it…” I’m very interested to hear what people whose inside-view answer to Question Two is <90% have in mind for the remaining 10%+. I expect I’m just not modelling their views well and that after hearing more I’ll be able to imagine some not-totally-implausible no-TAI possibilities. My inside view is obviously overconfident. Hence my answer of 90%.

Poll: What is your inside-view answer to Question Two, i.e. your answer without taking into account meta-level concerns like peer disagreement, unknown unknowns, biases, etc.?

Bonus: I’ve argued elsewhere that what we really care about, when thinking about AI timelines, is AI-induced points of no return. I think this is likely to be within a few years of TAI, and my answer to this question is basically the same as my answer to the TAI version, but just in case:

OK, here’s why all this matters

Ajeya Cotra’s excellent timelines forecasting model is built around a probability distribution over “the amount of computation it would take to train a transformative model if we had to do it using only current knowledge.”[15] (pt1p25) Most of the work goes into constructing that probability distribution; once that’s done, she models how compute costs decrease, willingness-to-spend increases, and new ideas/insights/algorithms are added over time, to get her final forecast.

One of the great things about the model is that it’s interactive; you can input your own probability distribution and see what the implications are for timelines. This is good because there’s a lot of room for subjective judgment and intuition when it comes to making the probability distribution.

What I’ve done in this post is present an intuition pump, a thought experiment that might elicit in the reader (as it does in me) the sense that the probability distribution should have the bulk of its mass by the 10^35 mark.

Ajeya’s best-guess distribution has the 10^35 mark as its median, roughly. As far as I can tell, this corresponds to answering “50%” to Question Two.[16]

If that’s also your reaction, fair enough. But insofar as your reaction is closer to mine, you should have shorter timelines than Ajeya did when she wrote the report.

There are lots of minor nitpicks I have with Ajeya’s report, but I’m not talking about them; instead, I wrote this, which is a lot more subjective and hand-wavy. I made this choice because the minor nitpicks don’t ultimately influence the answer very much, whereas this more subjective disagreement is a pretty big crux.[17] Suppose your answer to Question 2 is 80%. Well, that means your distribution should have 80% by the 10^35 mark compared to Ajeya’s 50%, and that means that your median should be roughly 10 years earlier than hers, all else equal: 2040-ish rather than 2050-ish.[18]

I hope this post helps focus the general discussion about timelines. As far as I can tell, the biggest crux for most people is something like “Can we get TAI just by throwing more compute at the problem?” Now, obviously we can get TAI just by throwing more compute at the problem, there are theorems about how neural nets are universal function approximators etc., and we can always do architecture search to find the right architectures. So the crux is really about whether we can get TAI just by throwing a large but not too large amount of compute at the problem… and I propose we operationalize “large but not too large” as “10^35 FLOP or less.”[19] I’d like to hear people with long timelines explain why OmegaStar, Amp(GPT-7), Crystal Nights, Skunkworks, and Neuromorph wouldn’t be transformative (or more generally, wouldn’t cause an AI-induced PONR). I’d rest easier at night if I had some hope along those lines.

This is part of my larger investigation into timelines commissioned by CLR. Many thanks to Tegan McCaslin, Lukas Finnveden, Anthony DiGiovanni, Connor Leahy, and Carl Shulman for comments on drafts. Kudos to Connor for pointing out the Skunkworks and Neuromorph ideas. Thanks to the LW team (esp. Raemon) for helping me with the formatting.

This post provides a valuable reframing of a common question in futurology: “here’s an effect I’m interested in—what sorts of things could cause it?”

That style of reasoning ends by postulating causes. But causes have a life of their own: they don’t just cause the one effect you’re interested in, through the one causal pathway you were thinking about. They do all kinds of things.

In the case of AI and compute, it’s common to ask

Here’s a hypothetical AI technology. How much compute would it require?

But once we have an answer to this question, we can always ask

Here’s how much compute you have. What kind of AI could you build with it?

If you’ve asked the first question, you ought to ask the second one, too.

The first question includes a hidden assumption: that the imagined technology is a reasonable use of the resources it would take to build. This isn’t always true: given those resources, there may be easier ways to accomplish the same thing, or better versions of that thing that are equally feasible. These facts are much easier to see when you fix a given resource level, and ask yourself what kinds of things you could do with it.

This high-level point seems like an important contribution to the AI forecasting conversation. The impetus to ask “what does future compute enable?” rather than “how much compute might TAI require?” influenced my own view of Bio Anchors, an influence that’s visible in the contrarian summary at the start of this post.

I find the specific examples much less convincing than the higher-level point.

For the most part, the examples don’t demonstrate that you could accomplish any particular outcome by applying more compute. Instead, they simply restate the idea that more compute is being used.

They describe inputs, not outcomes. The reader is expected to supply the missing inference: “wow, I guess if we put those big numbers in, we’d probably get magical results out.” But this inference is exactly what the examples ought to be illustrating. We already know we’re putting in +12 OOMs; the question is what we get out, in return.

This is easiest to see with Skunkworks, which amounts to: “using 12 OOMs more compute in engineering simulations, with 6 OOMs allocated to the simulations themselves, and the other 6 to evolutionary search.” Okay—and then what? What outcomes does this unlock?

We could replace the entire Skunkworks example with the sentence “+12 OOMs would be useful for engineering simulations, presumably?” We don’t even need to mention that evolutionary search might be involved, since (as the text notes) evolutionary search is one of the tools subsumed under the category “engineering simulations.”

Amp suffers from the same problem. It includes two sequential phases:

1. Training a scaled-up, instruction-tuned GPT-3.

2. Doing an evolutionary search over “prompt programs” for the resulting model.

Each of the two steps takes about 1e34 FLOP, so we don’t get the second step “for free” by spending extra compute that went unused in the first. We’re simply training a big model, and then doing a second big project that takes the same amount of compute as training the model.

We could also do the same evolutionary search project in our world, with GPT-3. Why haven’t we? It would be smaller-scale, of course, just as GPT-3 is smaller scale than “GPT-7” (but GPT-3 was worth doing!).

With GPT-3’s budget of 3.14e23 FLOP, we could do a GPT-3 variant of Amp with, for example,

- 100000 evaluations or “1 subjective day” per run (vs “3 subjective years”)

- population and step count ~1600 (vs ~50000), or two different values for population and step count whose product is 1600^2

100,000,000 evaluations per run (Amp) sure sounds like a lot, but then, so does 100000 (above). Is 1600 steps “not enough”? Not enough for what? (For that matter, is 50000 steps even “enough” for whatever outcome we are interested in?)

The numbers sound intuitively big, but they have no sense of scale, because we don’t know how they relate to outcomes. What do we get in return for doing 50000 steps instead of 1600, or 1e8 function evaluations instead of 1e5? What capabilities do we expect out of Amp? How does the compute investment cause those capabilities?
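The GPT-3-scale version of Amp can be costed the same way as the GPT-7 one. This is a rough Python sketch, assuming GPT-3's 175 billion parameters, the original post's rule of six FLOP per parameter per call, and 1e5 function evaluations (about one subjective day of one-second calls) per run.

```python
GPT3_PARAMS = 1.75e11          # GPT-3's 175 billion parameters
FLOP_PER_PARAM_PER_CALL = 6    # assumption: same per-call cost rule the post uses for GPT-7
GPT3_BUDGET = 3.14e23          # GPT-3 training budget quoted above
CALLS_PER_RUN = 1e5            # function evaluations per run, about one subjective day
POPULATION = 1600
STEPS = 1600

amp_flop = GPT3_PARAMS * FLOP_PER_PARAM_PER_CALL * CALLS_PER_RUN * POPULATION * STEPS
ratio = amp_flop / GPT3_BUDGET

print(f"{amp_flop:.2e} FLOP for the GPT-3-scale search")
print(f"{ratio:.2f} of the GPT-3 training budget")
```

Under these assumptions the scaled-down search costs roughly as much as training GPT-3 itself, mirroring the relationship between the two phases of Amp(GPT-7).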

The question “What could you do with +12 OOMs of Compute?” is an important one, and this post deserves credit for raising it.

The concrete examples of “fun” are too fun for their own good. They’re focused on sounding cool and big, not on accomplishing anything. Little would be lost if they were replaced with the sentence “we could dramatically scale up LMs, game-playing RL, artificial life, engineering simulations, and brain simulations.”

Answering the question in a less “fun,” more outcomes-focused manner sounds like a valuable exercise, and I’d love to read a post like that.

Thanks for this thoughtful review! Below are my thoughts:

--I agree that this post contributes to the forecasting discussion in the way you mention. However, that’s not the main way I think it contributes. I think the main way it contributes is that it operationalizes a big timelines crux & forcefully draws people’s attention to it. I wrote this post after reading Ajeya’s Bio Anchors report carefully many times, annotating it, etc. and starting several gdocs with various disagreements. I found in doing so that some disagreements didn’t change the bottom line much, while others were huge cruxes. This one was the biggest crux of all, so I discarded the rest and focused on getting this out there. And I didn’t even have the energy/time to really properly argue for my side of the crux--there’s so much more I could say!--so I contented myself with having the conclusion be “here’s the crux, y’all should think and argue about this instead of the other stuff.”

--I agree that there’s a lot more I could have done to argue that OmegaStar, Amp(GPT-7), etc. would be transformative. I could have talked about scaling laws, about how AlphaStar is superhuman at Starcraft and therefore OmegaStar should be superhuman at all games, etc. I could have talked about how Amp(GPT-7) combines the strengths of neural nets and language models with the strengths of traditional software. Instead I just described how they were trained, and left it up to the reader to draw conclusions. This was mainly because of space/time constraints (it’s a long post already; I figured I could always follow up later, or in the comments). I had hoped that people would reply with objections to specific designs, e.g. “OmegaStar won’t work because X” and then I could have a conversation in the comments about it. A secondary reason was infohazard stuff: it was already a bit iffy for me to be sketching AGI designs on the internet, even though I was careful to target +12 OOMs instead of +6; it would have been worse if I had also forcefully argued that the designs would succeed in creating something super powerful. (This is also a partial response to your critique about the numbers being too big, too fun: I could have made much the same point with +6 OOMs instead of +12 (though not with, say, +3 OOMs, those numbers would be too small) but I wanted to put an extra smidgen of distance between the post and ‘here’s a bunch of ideas for how to build AGI soon.’)

Anyhow, so the post you say you would love to read, I too would love to read. I’d love to write it as well. It could be a followup to this one. That said, to be honest I probably don’t have time to devote to making it, so I hope someone else does instead! (Or, equally good IMO, would be someone writing a post explaining why none of the 5 designs I sketched would work. Heck I think I’d like that even more, since it would tell me something I don’t already think I know.)

The ideas in this post greatly influence how I think about AI timelines, and I believe they comprise the current single best way to forecast timelines.

A +12-OOMs-style forecast, like a bioanchors-style forecast, has two components:

1. an estimate of (effective) compute over time (including factors like compute getting cheaper and algorithms/ideas getting better in addition to spending increasing), and

2. a probability distribution on the (effective) training compute requirements for TAI (or equivalently the probability that TAI is achievable as a function of training compute).

Unlike bioanchors, a +12-OOMs-style forecast answers #2 by considering various kinds of possible transformative AI systems and using some combination of existing-system performance, scaling laws, principles, miscellaneous arguments, and inside-view intuition to estimate how much compute they would require. Considering the “fun things” that could be built with more compute lets us use more inside-view knowledge than bioanchors-style analysis, while not committing to a particular path to TAI like roadmap-style analysis would.
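To show how the two components fit together mechanically, here is a toy Python sketch. It is not Ajeya's model and not a forecast: the starting compute level (10^24 FLOP in 2020), the growth rate (0.5 OOMs per year), and the endpoints of both distributions are hypothetical placeholders. Only the probabilities assigned to the 10^35 mark (50% and 80%) echo numbers discussed in the post.

```python
from typing import List, Tuple

Points = List[Tuple[float, float]]  # sorted (log10 FLOP, cumulative probability) pairs


def cdf(log10_flop: float, points: Points) -> float:
    """Piecewise-linear P(TAI is achievable with at most 10**log10_flop FLOP)."""
    if log10_flop <= points[0][0]:
        return points[0][1]
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        if log10_flop <= x1:
            return p0 + (p1 - p0) * (log10_flop - x0) / (x1 - x0)
    return points[-1][1]


def prob_by_year(year: float, points: Points, start_year: float = 2020,
                 start_log10: float = 24.0, ooms_per_year: float = 0.5) -> float:
    """Component 1 (effective compute over time) fed into component 2 (the CDF)."""
    available = start_log10 + ooms_per_year * (year - start_year)  # hypothetical trajectory
    return cdf(available, points)


# Hypothetical distributions; only the value at 10^35 comes from the discussion above.
median_at_35: Points = [(24.0, 0.0), (35.0, 0.5), (46.0, 1.0)]
eighty_at_35: Points = [(24.0, 0.0), (35.0, 0.8), (46.0, 1.0)]

for year in (2030, 2040, 2050):
    print(year, round(prob_by_year(year, median_at_35), 2),
          round(prob_by_year(year, eighty_at_35), 2))
```

Moving probability mass below the 10^35 mark raises the curve at every year before the trajectory reaches that mark, which is the sense in which the answer to Question Two drives the timeline.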

In addition to introducing this forecasting method, this post has excellent analysis of some possible paths to TAI.