What DALL-E 2 can and cannot do

I got access to DALL-E 2 earlier this week, and have spent the last few days (probably adding up to dozens of hours) playing with it, with the goal of mapping out its performance in various areas – and, of course, ending up with some epic art.

Below, I’ve compiled a list of observations made about DALL-E, along with examples. If you want to request art of a particular scene, or to see what a particular prompt does, feel free to comment with your requests.

DALL-E’s strengths

Stock photography content

It’s stunning at creating photorealistic content for anything that (this is my guess, at least) has a broad repertoire of online stock images – which is perhaps less interesting because if I wanted a stock photo of (rolls dice) a polar bear, Google Images already has me covered. DALL-E performs somewhat better at discrete objects and close-up photographs than at larger scenes, but it can do photographs of city skylines, or National Geographic-style nature scenes, tolerably well (just don’t look too closely at the textures or detailing.) Some highlights:

Clothing design: DALL-E has a reasonable if not perfect understanding of clothing styles, and especially for women’s clothes and with the stylistic guidance of “displayed on a store mannequin” or “modeling photoshoot” etc, it can produce some gorgeous and creative outfits. It does especially plausible-looking wedding dresses – maybe because wedding dresses are especially consistent in aesthetic, and online photos of them are likely to be high quality?

Close-ups of cute animals. DALL-E can pull off scenes with several elements, and often produce something that I would buy was a real photo if I scrolled past it on Tumblr.

Close-ups of food. These can be a little more uncanny valley – and I don’t know what’s up with the apparent boiled eggs in there – but DALL-E absolutely has the plating style for high-end restaurants down.

Jewelry. DALL-E doesn’t always follow the instructions of the prompt exactly (it seems to be randomizing whether the big pendant is amber or amethyst) but the details are generally convincing and the results are almost always really pretty.

Pop culture and media

DALL-E “recognizes” a wide range of pop culture references, particularly for visual media (it’s very solid on Disney princesses) or for literary works with film adaptations like Tolkien’s LOTR. For almost all media that it recognizes at all, it can convert it into almost-arbitrary art styles.

[Tip: I find I get more reliably high-quality images from the prompt “X, screenshots from the Miyazaki anime movie” than just “in the style of anime”, I suspect because Miyazaki has a consistent style, whereas anime more broadly is probably pulling in a lot of poorer-quality anime art.]

Art style transfer

Some of the most impressively high-quality output involves specific artistic styles. DALL-E can do charcoal or pencil sketches, paintings in the style of various famous artists, and some weirder stuff like “medieval illuminated manuscripts”.

IMO it performs especially well with art styles like “impressionist watercolor painting” or “pencil sketch”, that are a little more forgiving around imperfections in the details.

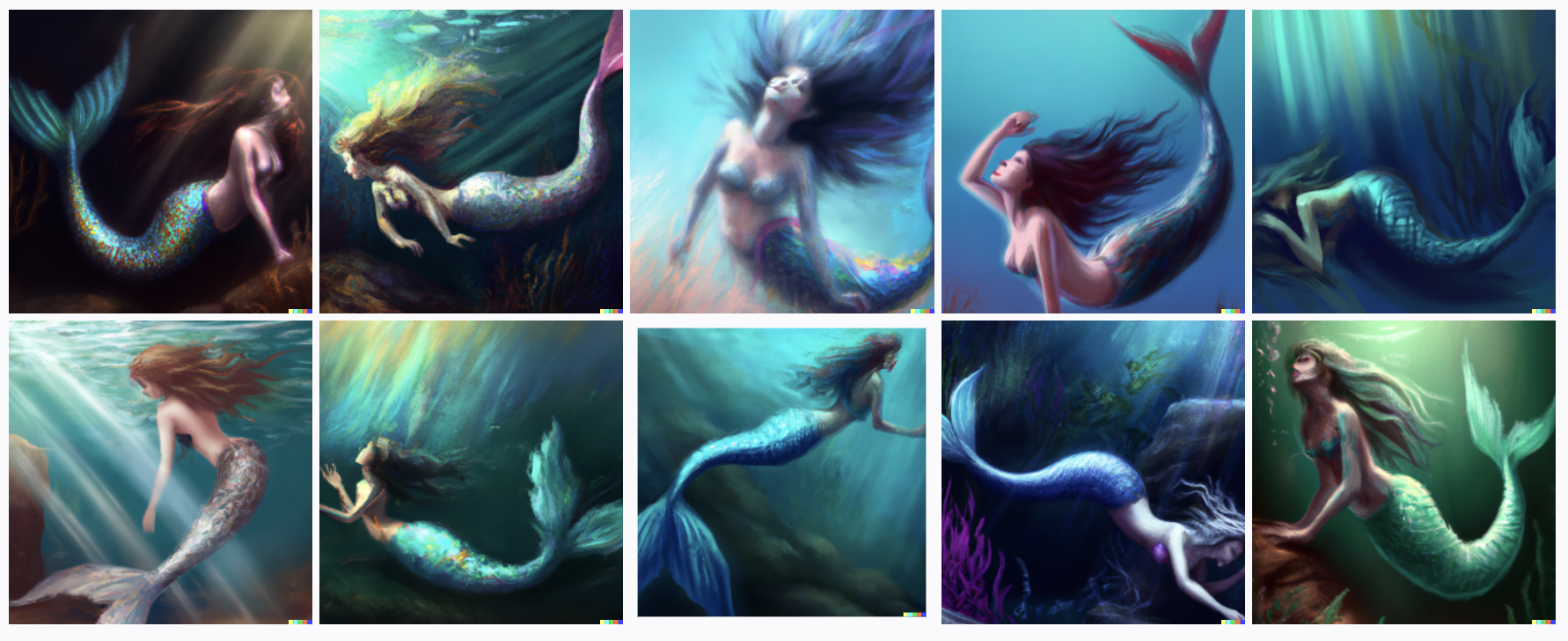

Creative digital art

DALL-E can (with the right prompts and some cherrypicking) pull off some absolutely gorgeous fantasy-esque art pieces. Some examples:

The output when putting in more abstract prompts (I’ve run a lot of “[song lyric or poetry line], digital art” requests) is hit-or-miss, but with patience and some trial and error, it can pull out some absolutely stunning – or deeply hilarious – artistic depictions of poetry or abstract concepts. I kind of like using it in this way because of the sheer variety; I never know where it’s going to go with a prompt.

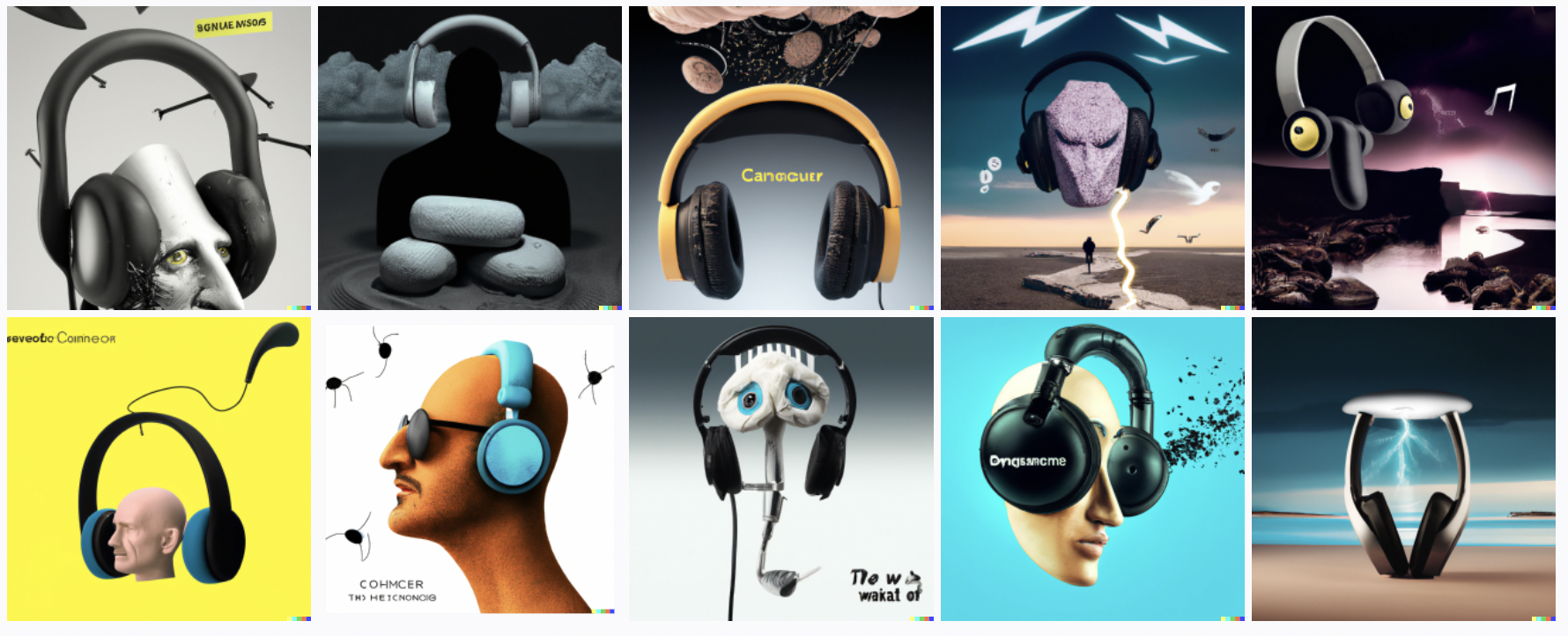

The future of commercials

This might be just a me thing, but I love almost everything DALL-E does with the prompt “in the style of surrealism” – in particular, its surreal attempt at commercials or advertisements. If my online ads were 100% replaced by DALL-E art, I would probably click on at least 50% more of them.

DALL-E’s weaknesses

I had been really excited about using DALL-E to make fan art of fiction that I or other people have written, and so I was somewhat disappointed at how much it struggles to do complex scenes according to spec. In particular, it still has a long way to go with:

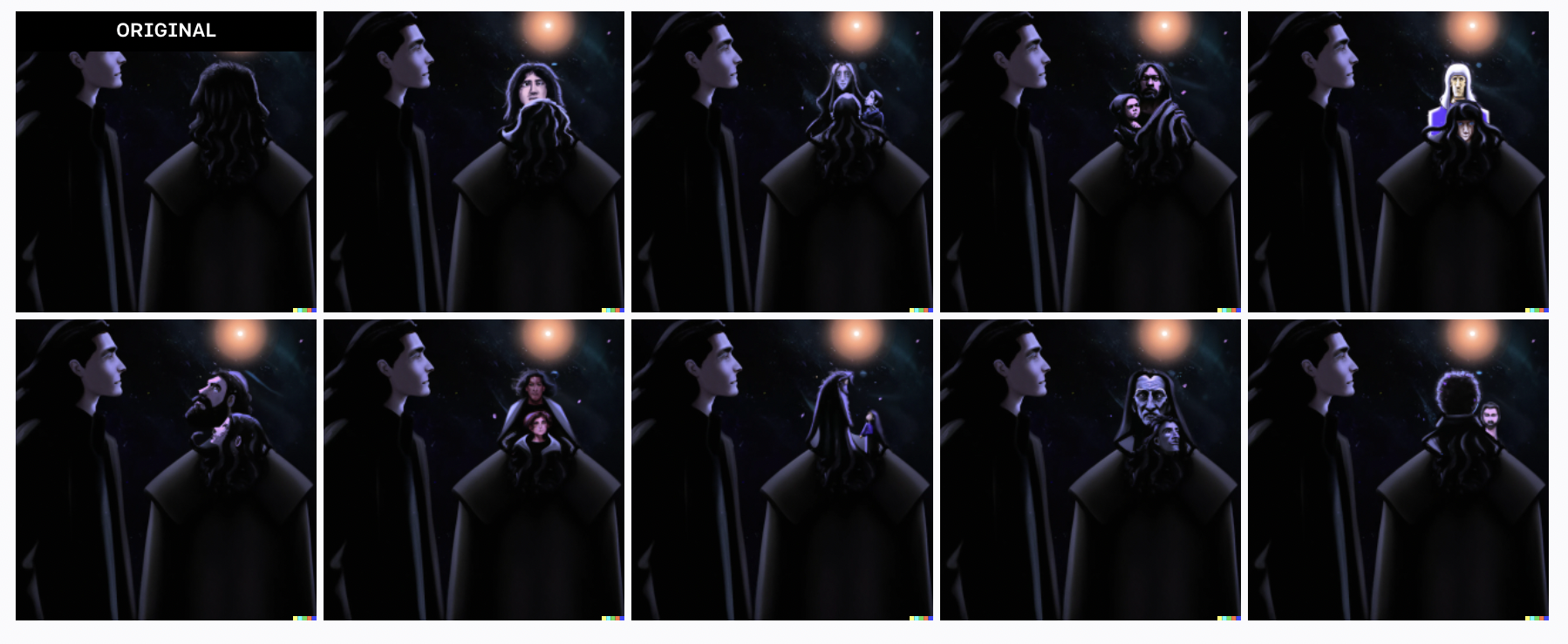

Scenes with two characters

I’m not kidding. DALL-E does fine at giving one character a list of specific traits (though if you want pink hair, watch out, DALL-E might start spamming the entire image with pink objects). It can sometimes handle multiple generic people in a crowd scene, though it quickly forgets how faces work. However, it finds it very challenging to keep track of which traits ought to belong to a specific Character A versus a different specific Character B, beyond a very basic minimum like “a man and a woman.”

The above is one iteration of a scene I was very motivated to figure out how to depict, as a fan art of my Valdemar rationalfic. DALL-E can handle two people, check, and a room with a window and at least one of a bed or chair, but it’s lost when it comes to remembering which combination of age/gender/hair color is in what location.

Even in cases where the two characters are pop culture references that I’ve already been able to confirm the model “knows” separately – for example, Captain America and Iron Man – it can’t seem to help blending them together. It’s as though the model has “two characters” and then separately “a list of traits” (user-specified or just implicit in the training data), and reassigns the traits mostly at random.

Foreground and background

A good example of this: someone on Twitter had commented that they couldn’t get DALL-E to provide them with “Two dogs dressed like roman soldiers on a pirate ship looking at New York City through a spyglass”. I took this as a CHALLENGE and spent half an hour trying; I, too, could not get DALL-E to output this, and ended up needing to choose between “NYC and a pirate ship” or “dogs in Roman soldier uniforms with spyglasses”.

DALL-E can do scenes with generic backgrounds (a city, bookshelves in a library, a landscape) but even then, if that’s not the main focus of the image then the fine details tend to get pretty scrambled.

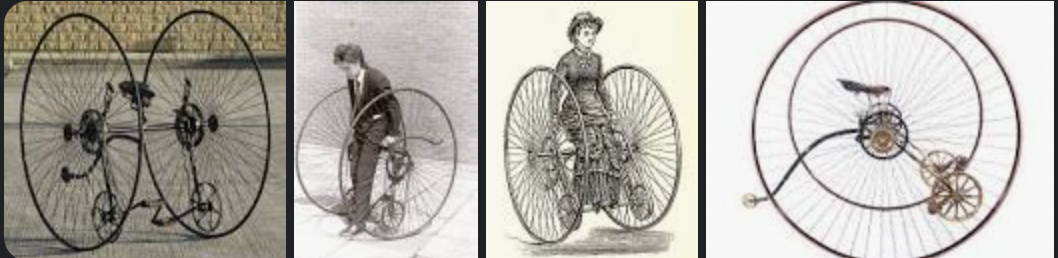

Novel objects, or nonstandard usages

Objects that are not something it already “recognizes.” DALL-E knows what a chair is. It can give you something that is recognizably a chair in several dozen different art mediums. It could not with any amount of coaxing produce an “Otto bicycle”, which my friend specifically wanted for her book cover. Its failed attempts were both hilarious and concerning.

Objects used in nonstandard ways. It seems to slide back toward some kind of ~prior; when I asked it for a dress made of Kermit plushies displayed on a store mannequin, it repeatedly gave me a Kermit plushie wearing a dress.

DALL-E generally seems to have extremely strong priors in a few areas, which end up being almost impossible to shift. I spent at least half an hour trying to convince it to give me digital art of a woman whose eyes were full of stars (no, not the rest of her, not the background scenery either, just her eyes...) and the closest DALL-E ever got was this.

I got: the goddess-eyed goddess of recursion

Spelling

DALL-E can’t spell. It really really cannot spell. It will occasionally spell a word correctly by utter coincidence. (Okay, fine, it can consistently spell “STOP” as long as it’s written on a stop sign.)

It does mostly produce recognizable English letters (and recognizable attempts at Chinese calligraphy in other instances), and letter order that is closer to English spelling than to a random draw from a bag of Scrabble letters, so I would guess that even given the new model structure that makes DALL-E 2 worse at spelling than the first DALL-E, just scaling it up some would eventually let it crack spelling.

At least sometimes its inability to spell results in unintentionally hilarious memes?

Realistic human faces

My understanding is that the limitation on realistic faces may have been deliberate, to avoid deepfakes of celebrities, etc. Interestingly, DALL-E can nonetheless at least sometimes do perfectly reasonable faces, either as photographs or in various art styles, if they’re the central element of a scene. (And it keeps giving me photorealistic faces as a component of images where I wasn’t even asking for that, meaning that per the terms and conditions I can’t share those images publicly.)

Even more interestingly, it seems to specifically alter the appearance of actors even when it clearly “knows” a particular movie or TV show. I asked it for “screenshots from the second season of Firefly”, and they were very recognizably screenshots from Firefly in terms of lighting, ambiance, scenery etc, with an actor who looked almost like Nathan Fillion – as though cast in a remake that was trying to get it fairly similar – and who looked consistently the same across all 10 images, but was definitely a different person.

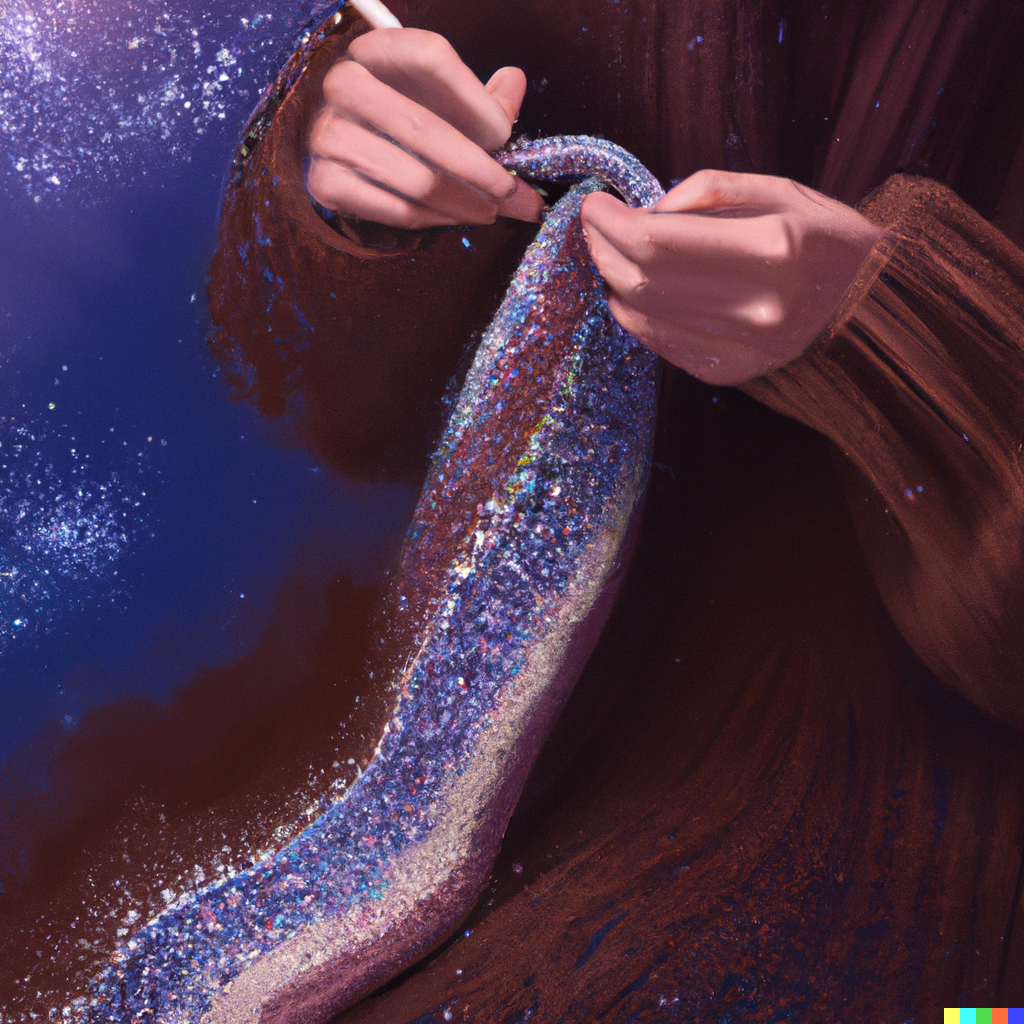

There are a couple of specific cases where DALL-E seems to “remember” how human hands work. The ones I’ve found so far mostly involve a character doing some standard activity using their hands, like “playing a musical instrument.” Below, I was trying to depict a character from A Song For Two Voices who’s a Bard; this round came out shockingly good in a number of ways, but the hands particularly surprised me.

Limitations of the “edit” functionality

DALL-E 2 offers an edit functionality – if you mostly like an image except for one detail, you can highlight an area of it with a cursor, and change the full description as applicable in order to tell it how to modify the selected region.
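The edit feature behaves like inpainting: the highlighted region is regenerated while everything outside it is held fixed. A minimal sketch of just that final compositing step, assuming a binary mask over a tiny grayscale grid; the function and variable names are hypothetical, and a real inpainting model also conditions its generation on the unmasked context rather than simply pasting pixels, which is not shown here.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask == 0; take generated pixels where mask == 1.

    Hypothetical illustration of the "hold everything else constant" step only;
    not OpenAI's implementation.
    """
    return [
        [g if m else o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]


original = [[10, 10, 10],
            [10, 10, 10]]
generated = [[99, 99, 99],
             [99, 99, 99]]
mask = [[0, 1, 0],   # 1 marks the user-highlighted region
        [0, 1, 1]]

print(composite(original, generated, mask))  # [[10, 99, 10], [10, 99, 99]]
```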

It sometimes works—this gorgeous dress (didn’t save the prompt, sorry) originally had no top, and the edit function successfully added one without changing the rest too much.

It often appears to do nothing. It occasionally full-on panics and does... whatever this is.

There’s also a “variations” functionality that lets you select the best image given by a prompt and generate near neighbors of it, but my experience so far is that the variations are almost invariably less of a good fit for the original prompt, and very rarely better on specific details (like faces) that I might want to fix.

Some art style observations

DALL-E doesn’t seem to hold a sharp delineation between style and content; in other words, adding stylistic prompts actively changes some of what I would consider to be content.

For example, asking for a coffeeshop scene as painted by Alphonse Mucha puts the woman in a long flowing period-style dress, like in this reference painting, and gives us a “coffeeshop” that looks a lot to me like a lady’s parlor; in comparison, the Miyazaki anime version mostly has the character in a casual sweatshirt. This makes sense given the way the model was trained; background details are going to be systematically different between Art Nouveau paintings and anime movies.

DALL-E is often sensitive to exact wording, and in particular it’s fascinating how “in the style of x” often gets very different results from “screenshot from an x movie”. I’m guessing that in the Pixar case, generic “Pixar style” might capture training data from Pixar shorts or illustrations that aren’t in their standard recognizable movie style. (Also, sometimes if asked for “anime” it gives me content that either looks like 3D rendered video game cutscenes, or occasionally what I assume is meant to be people at an anime con in cosplay.)

Conclusions

How smart is DALL-E?

I would give it an excellent grade in recognizing objects, and most of the time it has a pretty good sense of their purpose and expected context. If I give it just the prompt “a box, a chair, a computer, a ceiling fan, a lamp, a rug, a window, a desk” with no other specification, it consistently includes at least 7 of the 8 requested objects, and places them in reasonable relation to each other – and in a room with walls and a floor, which I did not explicitly ask for. This “understanding” of objects is a lot of what makes DALL-E so easy to work with, and in some sense seems more impressive than a perfect art style.

The biggest thing I’ve noticed that looks like a ~conceptual limitation in the model is its inability to consistently track two different characters, unless they differ on exactly one trait (male and female, adult and child, red hair and blue hair, etc) – in which case the model could be getting this right if all it’s doing is randomizing the traits in its bucket between the characters. It seems to have a similar issue with two non-person objects of the same type, like chairs, though I’ve explored this less.
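The "randomizing the traits in its bucket" hypothesis can be made quantitative with a toy model. Assume (purely for illustration) that each of k two-valued traits is handed to one of the two characters by an independent coin flip. Because the characters are otherwise unnamed, a layout counts as correct if it matches the target up to swapping the two characters, so one differing trait is always "right" and k traits come out right with probability 2^(1-k). This is a sketch of the hypothesis, not a measurement of DALL-E.

```python
from itertools import product


def p_correct_binding(k):
    """Probability that k randomly assigned two-valued traits land in the
    intended combination, counting a full character swap as correct."""
    # 0 = trait assigned as intended, 1 = trait swapped between the characters.
    layouts = list(product((0, 1), repeat=k))
    # Correct if every trait is unswapped, or every trait is swapped
    # (which is the same picture with the characters relabeled).
    correct = sum(1 for layout in layouts if len(set(layout)) == 1)
    return correct / len(layouts)


for k in range(1, 5):
    print(k, p_correct_binding(k))
# 1 1.0
# 2 0.5
# 3 0.25
# 4 0.125
```

Under this toy model, "a man and a woman" always looks right, while adding hair color and location already drops the success rate to one in four.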

It often applies color and texture styling to parts of the image other than the ones specified in the prompt; if you ask for a girl with pink hair, it’s likely to make the walls or her clothes pink, and it’s given me several Rapunzels wearing a gown apparently made of hair. (Not to mention the time it was confused about whether, in “Goldilocks and the three bears”, Goldilocks was also supposed to be a bear.)

The deficits with the “edit” mode and “variations” mode also seem to me like they reflect the model failing to neatly track a set of objects-with-assigned-traits. It reliably holds the non-highlighted areas of the image constant and only modifies the selected part, but the modifications often seem like they’re pulling in context from the entire prompt – for example, when I took one of my room-with-objects images and tried to select the computer and change it to “a computer levitating in midair”, DALL-E gave me a levitating fan and a levitating box instead.

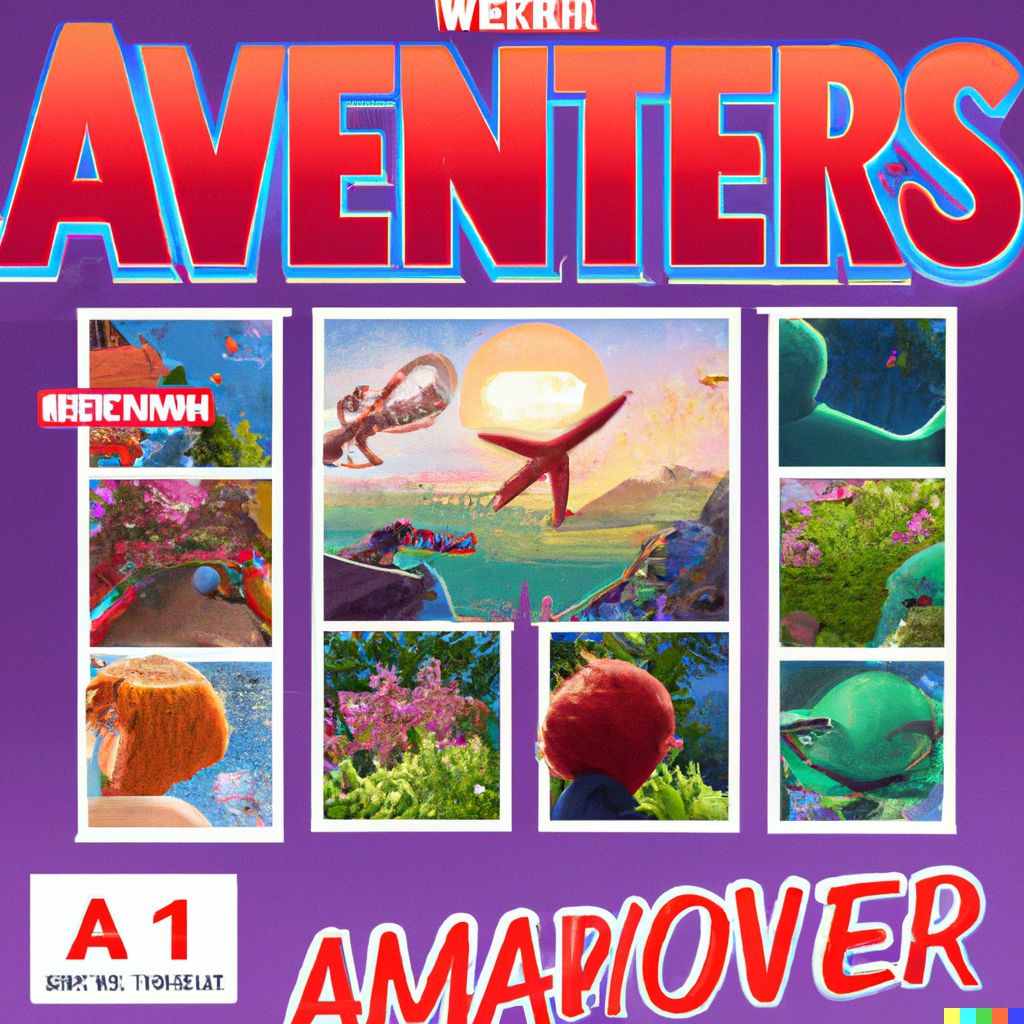

Working with DALL-E definitely still feels like attempting to communicate with some kind of alien entity that doesn’t quite reason in the same ontology as humans, even if it theoretically understands the English language. There are concepts it appears to “understand” in natural language without difficulty – including prompts like “advertising poster for the new Marvel’s Avengers movie, as a Miyazaki anime, in the style of an Instagram inspirational moodboard”, which would take so long to explain to aliens, or even just to a human from 1900. And yet, you try to explain what an Otto bicycle is – something which I’m pretty sure a human six-year-old could draw if given a verbal description – and the conceptual gulf is impossible to cross.


Swimmer963 highlights DALL-E 2 struggling with anime, realistic faces, text in images, multiple characters/objects arranged in complex ways, and editing. (Of course, many of these are still extremely good by the standards of just months ago, and the glass is definitely more than half full.) itsnotatumor asks:

In general, we have not topped out on pretty much any scaling curve. Whether it’s language modeling, image generation, DRL, or whathaveyou, AFAIK, not a single modality can be truly said to have been ‘solved’ with the scaling curve broken. Either the scaling curve is flat, or we’re still far away. (There are some sound-related ones which seem to be close, but nothing all that important.) Diffusion models’ only scaling law I know of is an older one which bends a little but probably reflects poor hyperparameters, and no one has tried eg. Chinchilla on them yet.

So yes, we definitely can just make all the compute-budgets 10x larger without wasting it.
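As a back-of-the-envelope illustration of what "Chinchilla on them" would mean for a 10x budget: the Chinchilla heuristics from the language-model literature are training compute C ≈ 6·N·D (N parameters, D training tokens) and roughly 20 tokens per parameter at the compute-optimum. Whether those constants transfer to diffusion models is exactly the untested part, so treat this as a sketch under that assumption.

```python
import math


def chinchilla_allocation(compute_flops, tokens_per_param=20.0):
    """Rough compute-optimal split, assuming C ~= 6*N*D and D ~= tokens_per_param * N."""
    n_params = math.sqrt(compute_flops / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


n1, d1 = chinchilla_allocation(1e23)
n2, d2 = chinchilla_allocation(1e24)  # a 10x larger budget
print(f"params grow {n2 / n1:.2f}x, data grows {d2 / d1:.2f}x")
# params grow 3.16x, data grows 3.16x
```

Under these assumptions a 10x budget is split evenly, about sqrt(10) ≈ 3.16x more parameters and 3.16x more data, rather than going entirely into a bigger model.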

To go through the specific issues (caveat: we don’t know if Make-A-Scene solves any of these because no one can use it; and I have not read the Cogview2 paper*):

anime & realistic faces are purely self-imposed problems by OA. DALL-E 2 will do them fine just as soon as OA wants it to, and other models by other orgs do just fine on those domains. So no real problem there.

text in images: this is an odd one. This is especially odd because it destroys the commercial application of any image with text in it (because it’s garbage: who’d pay for these?), and if you go back to DALL-E 1, one of the demos was it putting text into images like onto generated teapots or storefronts. It was imperfect, but DALL-E 2 is way worse at it, it looks like. I mean, DALL-E 1 would’ve at least spelled ‘Avengers’ correctly. Nostalgebraist has also shown you can get excellent text generation with a specialized small model, and people using CompVis (also much smaller than DALL-E 2) get good text results. So text in images is not intrinsically hard; this is a DALL-E 2-specific problem, whatever it is.

Why? As Nostalgebraist discusses at length in his earlier post, the unCLIP approach to using GLIDE to create the DALL-E 2 system seems to have a lot of weird drawbacks and tradeoffs. Just as CLIP’s contrastive view of the world (rather than discriminative or generative) leads to strange artifacts like images tessellating a pattern, unCLIP seems to cripple DALL-E 2 in some ways, such as worsened compositionality. I don’t really get the unCLIP approach, so I’m not completely sure why it’d screw up text. The paper speculates that

Damn you BPEs! Is there nothing you won’t blight?!
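BPE (byte-pair encoding) splits text into subword chunks, so the text encoder sees a couple of opaque token IDs for "avengers" and never the individual letters; spelling has to be inferred indirectly. The toy below uses greedy longest-match segmentation (a simplification closer to WordPiece than to BPE's learned merge rules) over a small hypothetical vocabulary, just to show letters disappearing inside chunks.

```python
# HYPOTHETICAL vocabulary; real subword vocabularies are learned from data
# and contain tens of thousands of entries.
VOCAB = {"aven", "gers", "a", "v", "e", "n", "g", "r", "s"}


def tokenize(word, vocab=VOCAB):
    """Greedy longest-match segmentation of a word into vocabulary entries."""
    tokens, i = [], 0
    while i < len(word):
        # Try the longest substring starting at i first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no token covers {word[i]!r}")
    return tokens


print(tokenize("avengers"))  # ['aven', 'gers']
```

A model fed `['aven', 'gers']` only learns that those two chunks spell A-V-E-N-G-E-R-S if the training data happens to show it.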

It may also be partially a dataset issue: OA’s licensing of commercial datasets may have greatly underemphasized images which have text in them, which tends to be more of a dirty Internet or downstream user thing to have. If it’s unCLIP, raw GLIDE should be able to do text much better. If it’s the training data, it probably won’t be much different.

If it’s the training data, it’ll be easy to fix if OA wants to fix it (like anime/faces); OA can find text-heavy datasets, or simply synthesize the necessary data by splatting random text in random fonts on top of random images & training etc. If it’s unCLIP, it can be hacked around by letting the users bypass unCLIP to use raw GLIDE, which as far as I know, they have no ability to do at the moment. (Seems like a very reasonable option to offer, if only for other purposes like research.) A longer-term solution would be to figure out a better unCLIP which avoids these contrastive pathologies, and a longer-term still solution would be to simply scale up enough that you no longer need this weird unCLIP thing to get diverse but high-quality samples, the base models are just good enough.
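The "splat random text in random fonts on top of random images" fix can be sketched as a data-recipe generator: each example pairs a random string, font, size, and position with a caption that states the exact text, so the model is forced to tie caption letters to pixels. This is a hypothetical recipe, not anything OA is known to use; the font names are placeholders, and actual rendering would be left to an imaging library.

```python
import random
import string

# Placeholder font names; any set of installed fonts would do.
FONTS = ["serif", "sans", "mono", "script"]


def make_text_overlay_example(image_id, width, height, rng):
    """Specification for one synthetic (image, caption) training pair."""
    text = "".join(rng.choice(string.ascii_uppercase)
                   for _ in range(rng.randint(3, 10)))
    return {
        "image_id": image_id,  # the random background image to draw on
        "text": text,
        "font": rng.choice(FONTS),
        "font_size": rng.randint(12, 64),
        "xy": (rng.randrange(width), rng.randrange(height)),
        "caption": f'an image with the text "{text}" written on it',
    }


rng = random.Random(0)
examples = [make_text_overlay_example(f"img_{i}", 512, 512, rng) for i in range(3)]
for ex in examples:
    print(ex["caption"], ex["font"], ex["font_size"], ex["xy"])
```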

So this might be relatively easy to fix, or it might have an obvious fix that won’t be implemented for a long time.

complex scenes: this one is easy—unCLIP is screwing things up.

The problem with these samples generally doesn’t seem to be that the objects are rendered badly by GLIDE or the upscalers; the problem seems to be that the objects are just organized wrong because the DALL-E 2 system as a whole didn’t understand the text input. That is, CLIP gave GLIDE the wrong blueprint, and that is irreversible. And we know that GLIDE can do these things better because the paper shows how much better it does on one pair of prompts (no extensive or quantitative evaluation, however):

And it’s pretty obvious that it almost has to screw up like this if you want to take the approach of a contrastively-learned fixed-size embedding (Nostalgebraist again): a fixed-size embedding is going to struggle if you want to stack on arbitrarily many details, especially without any recurrency or iteration (like DALL-E 1 had in being a Transformer on text inputs + VAE-token outputs). And a contrastive model like CLIP isn’t going to do relationships or scenes as well as it does other things because it just doesn’t encounter all that many pairs of images where the objects are all the same but their relationship is different as specified by the text caption, which is the sort of data which would force it to learn how “the red box is on top of the blue box” looks different from “the blue box is on top of the red box”.
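A deliberately extreme toy makes the fixed-size-embedding point concrete: if a prompt is squeezed into one vector by averaging word vectors, "the red box is on top of the blue box" and its reversal become literally identical. CLIP's text encoder is a Transformer and is not order-blind like this, so this is an illustration of the failure direction, not a model of CLIP; the word vectors are hypothetical.

```python
import math

# HYPOTHETICAL 3-d word vectors, for illustration only.
WORD_VECS = {
    "the": (0.1, 0.1, 0.1), "red": (1.0, 0.0, 0.0), "blue": (0.0, 0.0, 1.0),
    "box": (0.5, 0.5, 0.0), "is": (0.1, 0.0, 0.1), "on": (0.0, 0.3, 0.0),
    "top": (0.0, 0.6, 0.2), "of": (0.1, 0.1, 0.0),
}


def embed(prompt):
    """Mean-pool word vectors into one fixed-size vector (a bag of words)."""
    vecs = [WORD_VECS[w] for w in prompt.lower().split()]
    dim = len(vecs[0])
    # math.fsum is exactly rounded, so the result cannot depend on word order.
    return tuple(math.fsum(v[d] for v in vecs) / len(vecs) for d in range(dim))


a = embed("the red box is on top of the blue box")
b = embed("the blue box is on top of the red box")
print(a == b)  # True: two different scenes, one blueprint
```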

Like before, just offering GLIDE as an option would fix a lot of the problems here. unCLIP screws up your complex scene? Do it in GLIDE. GLIDE is hard to guide or lower-quality? Maybe seed it in GLIDE and then jazz it up in the full DALL-E 2.

Longer-term, a better text encoder would go a long way toward resolving all sorts of problems. Just existing text models would be enough, no need for hypothetical new archs. People are accusing DALL-E 2 of lacking good causal understanding or not being able to solve problems of various sorts; fine, but CLIP is a very bad way to understand language, being a very small text encoder (base model: 0.06b) trained contrastively from scratch on short image captions rather than initialized from a real autoregressive language model. (Remember, OA started the CLIP research with autoregressive generation (Figure 2 in the CLIP paper); it just found that approach more expensive, not worse, and switched to CLIP.) A real language model, like Chinchilla-70b, would do much better when fused to an image model, like in Flamingo.
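"Trained contrastively" means roughly this: within a batch, each image embedding must score higher with its own caption than with the other captions, and vice versa (a symmetric cross-entropy over cosine similarities). Nothing in the objective rewards distinguishing captions that never compete in the same batch, which is why relational prompts get little pressure. A pure-Python sketch of that style of loss, assuming a fixed temperature (CLIP learns it) and tiny 2-d embeddings:

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: pair i is the positive, every other pair is a negative."""
    n = len(image_embs)
    logits = [[cosine(image_embs[i], text_embs[j]) / temperature for j in range(n)]
              for i in range(n)]

    def cross_entropy_rows(mat):
        # Mean over rows of -log softmax(row)[i], using a stable log-sum-exp.
        total = 0.0
        for i, row in enumerate(mat):
            m = max(row)
            log_z = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_z - row[i]
        return total / len(mat)

    transposed = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (cross_entropy_rows(logits) + cross_entropy_rows(transposed))


images = [(1.0, 0.0), (0.0, 1.0)]
matched = [(0.9, 0.1), (0.1, 0.9)]
swapped = [(0.1, 0.9), (0.9, 0.1)]
print(clip_style_loss(images, matched) < clip_style_loss(images, swapped))  # True
```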

So, these DALL-E 2 problems all look soluble to me by pursuing just known techniques. They stem from either deliberate choices, removing the user’s ability to choose a different tradeoff, or lack of simple-minded scaling.

* On skimming, CogView2 looks like it’d avoid most of the DALL-E 2 pathologies, but looks like it’s noticeably lower-quality in addition to lower-resolution.

EDIT: between Imagen, Parti, DALL-E 3, and the miracle-of-spelling paper, I think that my claims that text in images is simply a matter of scale, and that tokenization screws up text in images, are now fairly consensus in DL as of late 2023.

Google Brain just announced Imagen (Twitter), which on skimming appears to be not just as good as DALL-E 2 but convincingly better. The main change appears to be reducing the CLIP reliance in favor of a much larger and more powerful text encoder before doing the image diffusion stuff. They make a point of noting superiority on “compositionality, cardinality, spatial relations, long-form text, rare words, and challenging prompts.” The samples also show text rendering fine inside the images as well.

I take this as strong support (already) for my claims 2-3: the problems with DALL-E 2 were not major or deep ones, do not require any paradigm shift to fix, or even any fix, really, beyond just scaling the components almost as-is. (In Kuhnian terms, the differences between DALL-E 2 and Imagen or Make-A-Scene are so far down in the weeds of normal science/engineering that even people working on image generation will forget many of the details and have to double-check the papers.)

EDIT: Google also has a more traditional autoregressive DALL-E-1-style 1024px model, “Parti”, competing with the diffusion model Imagen; it is slightly better than Imagen in COCO FID. It likewise does well on all those issues, again with no special fancy engineering aimed specifically at them, mostly just scaling up to 20b.

Will future generative models choke to death on their own excreta? No.

Now that goalposts have moved from “these neural nets will never work and that’s why they’re bad” to “they are working and that’s why they’re bad”, a repeated criticism of DALL·E 2 etc is that their deployment will ‘pollute the Internet’ by democratizing high-quality media, which may (given all the advantages of machine intelligence) quickly come to exceed ‘regular’ (artisanally-crafted?) media, and that ironically this will make it difficult or impossible to train better models. I don’t find this plausible at all but lots of people seem to and no one is correcting all these wrong people on the Internet, so here’s a quick rundown why:

It Hasn’t Happened Yet: there is no such thing as ‘natural’ media on the Internet, and never has been. Even a smartphone photograph is heavily massaged by a pipeline of algorithms (increasingly DL-based) before it is encoded into a codec designed to throw away human-perceptually-unimportant data such as JPEG. We are saturated in all sorts of Photoshopped, CGIed, video-game-rendered, Instagram-filtered, airbrushed, posed, lighted, (extremely heavily) curated media. If these models are as uncreative as claimed by critics and merely memorizing… what’s the big deal?

Spread will happen slowly: see earlier comment. Consider GPT-3: you can sign up for the OA API easily, there’s GPT-NeoX-20b, FB is releasing OPT, etc. It’s still ‘underused’ compared to how it could be used, and most of the text on the Internet continues to be written by humans or by non-GPT-3-quality software.

There’s Enough Data Already: what if we have ‘used up’ the existing data and are ‘polluting’ new data unfixably such that the old pre-generative datasets are inadequate and we can’t cheaply get clean new data?

sample efficiency: critics love to harp on the sample-inefficiency. How can these possibly be good if it takes hundreds of millions of images? Surely the best algorithm would be far more sample-efficient, and you should be able to match DALL·E 2 with a million well-selected images, max. Sure: the first is always the worst, the experience curves will kick in, we are trading data for compute because photos are cheap & GPUs dear—I agree, I just don’t think those are all that bad or avoidable.

But the flip side of this is that if existing quantities are already enough to train near-photorealistic models, when these models are admittedly so grossly sample-inefficient, then when those more sample-efficient models become possible, we will definitely have enough data to train much better quality models in the future simply by using the same amount of data.

diversity: Sometimes people think that 400m or 1b images are ‘not sufficiently diverse’, and must be missing something. This might be due to a lack of appreciation for the tail and Littlewood’s Law: if you spend time going through datasets like LAION-400m or YFCC100M, there is a lot of strange stuff there. All it takes is one person out of 7 billion doing it for any crazy old reason (including being crazy).
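The Littlewood's Law point is simple arithmetic: even a behavior with a one-in-a-hundred-million chance per person is expected from dozens of people out of 7 billion, and is almost certain to occur at least once. The per-person rates below are hypothetical, chosen only to show the scale.

```python
import math


def expected_count(p, population):
    """Expected number of people who do something with per-person probability p."""
    return p * population


def prob_at_least_one(p, population):
    """P(at least one) = 1 - (1 - p)^population, computed stably via log1p/expm1."""
    return -math.expm1(population * math.log1p(-p))


population = 7e9
for p in (1e-8, 1e-9, 1e-10):  # hypothetical per-person rates
    print(f"p={p:g}: expect {expected_count(p, population):.1f}, "
          f"P(at least one) = {prob_at_least_one(p, population):.3f}")
```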

This raises another ironic reversal of criticisms: to deprecate some remarkable sample, a critic will often try to show “it’s just copying” something that looks vaguely similar in Google Images. Obviously, this is a dilemma for the future-choke argument: if the remarkable sample is not already in the corpus, then the present models already have learned it adequately and ‘generalized’ (despite all of the noise from point #1), and future data is not necessary for future models; if the remarkable sample is in the corpus, then future models can just learn from that!

all the other data: current models only scratch the surface of available media. There is a lot of image data out there. A lot.

For example, Twitter blocks Common Crawl, which is the base for many scrapes; how many tens of billions of artworks alone is that? Twitter has tons of artists who post art on a regular basis (including many series of sketches to finished works which would be particularly valuable). Or DALL·E 2 is strikingly bad at anime and appears to have clearly filtered out or omitted all anime sources—so, that’s 5 million images from Danbooru2021 not included (>9.9 million on Big Booru), an overlapping >100 million images from Pixiv (powered in part by commissions through things like Skeb.jp, >100k commissions thus far), >2.8 million furry images on e621, 2 million My Little Pony images on Derpibooru… What about BAM!, n = 65 million? DeviantArt seems to be represented, but I doubt most of it is (>400 million). There’s something like >2 million books published every year; many have no illustrations but also many are things like comic books (>1500 series by major publishers?) or manga books (>8000/year?) which have hundreds or thousands of discrete illustrations—so that’s got to be millions of images piling up every year since. How about gig markets? Fiverr, just one of many, reports 3.4 million buyers spending >$200/each; at a nominal rate of $50/image, that would be 4 per buyer or 13 million images. So all together, there’s billions of high-quality images out there for the collecting, and something like hundreds of millions being added each year.
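The Fiverr estimate above, spelled out with the same inputs (3.4 million buyers, >$200 each as a lower bound, a nominal $50 per image). The exact product is 13.6 million, which the text rounds to 13 million.

```python
buyers = 3.4e6          # Fiverr buyers, per the figure above
spend_per_buyer = 200   # dollars per buyer (lower bound quoted above)
price_per_image = 50    # dollars, the nominal rate assumed above

images_per_buyer = spend_per_buyer / price_per_image
total_images = buyers * images_per_buyer
print(images_per_buyer, f"{total_images / 1e6:.1f} million")  # 4.0 13.6 million
```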

(These numbers may seem high but it makes sense if you think about the exponential growth of the Internet or smartphones or digital art: the majority of it must have been created recently, so if there are billions of images, then the current annual rate is probably going to be something like ‘hundreds of millions’. They can’t all be scans of ancient Victorian erotica.)
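The "most of it must be recent" argument follows from geometric growth: if a stock has grown at rate g per year for a long time, the fraction added in the last y years is 1 - (1 + g)^(-y). The 3-billion stock and the growth rates below are hypothetical inputs, not estimates.

```python
def recent_share(growth_rate, years=1):
    """Fraction of a geometrically growing stock that was added in the last `years`."""
    return 1 - (1 + growth_rate) ** -years


stock = 3e9  # hypothetical: 3 billion images in existence
for g in (0.1, 0.2, 0.3):
    print(f"growth {g:.0%}: ~{stock * recent_share(g) / 1e6:.0f} million added last year")

# At 20%/year growth, the last 4 years account for about 52% of everything.
print(round(recent_share(0.2, years=4), 2))
```

So with billions of images and any plausible double-digit growth rate, the annual addition lands in the hundreds of millions, as the parenthetical says.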

Also: multimodal data. How about video? A high-quality Hollywood movie or TV series probably provides a good non-redundant still every couple of seconds; TV production the past few years has been, due to streaming money, at all time highs like >500 series per year...
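A rough count of what "a still every couple of seconds" implies for TV alone. Only the 500-series figure comes from the text; episodes per series and episode length are hypothetical round numbers.

```python
series_per_year = 500      # from the text: >500 series per year
episodes_per_series = 10   # hypothetical
minutes_per_episode = 45   # hypothetical
seconds_per_still = 2      # "a good non-redundant still every couple of seconds"

stills = (series_per_year * episodes_per_series * minutes_per_episode * 60
          // seconds_per_still)
print(f"{stills:,} stills per year")  # 6,750,000 stills per year
```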

I mean, this is all a ludicrously narrow slice of the world, so I hope it adequately makes the point that we have not, do not now, and very much will not in the future, suffer from any hard limit on data availability. Chinchilla may make future models more data-hungry, and you may have to do some real engineering work to get as much as you want, or pay for it, but it’s there if you want it enough. (We will have a ‘data shortage’ in the same way we have a $5/pound filet mignon shortage.)

The Student Becomes The Master: imitation learning can lead to better performance than the original demonstrations or ‘experts’.

There are a bunch of ways to do bootstraps, but to continue the irony theme here, the most obvious one is the ‘cherry-picking’ accusation: “these samples are good but they’re just cherry-picked!” This is silly because all samples, human or past models, are all cherrypicked; the human samples are typically filtered much harder than the machine samples; there are machine ways to do the cherrypicking automatically; and you couldn’t get current samples out of old models no matter how hard you feasibly selected.

But for the choking argument, this is a problem: it is easier to recognize good samples than to create them. If humans are filtering model outputs, then the more heavily they filter, the more they are teaching future models what good and bad samples look like, by populating the dataset with good samples and attaching criticism to bad ones. (Every time someone posts a tweet with a snarky “#dalle-fail”, they are labeling that sample as bad and teaching future DALL·Es what a mistake looks like and how not to draw a hand.) Good samples will get spread and edited and copied online, and will elicit more samples like that, as people imitate them and step up their game and learn how to use DALL·E right.
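Why recognition alone is enough to raise quality: a filter that can only rank samples, never create them, still shifts what gets posted. In a toy model where each sample's quality is independently Uniform(0, 1) and a human keeps the best of n, the kept sample's expected quality is n/(n + 1). Both the quality distribution and the perfect ranking are simplifying assumptions.

```python
import random


def best_of_n_expected(n):
    """E[max of n iid Uniform(0, 1)] = n / (n + 1)."""
    return n / (n + 1)


def best_of_n_simulated(n, trials=20_000, seed=0):
    """Monte Carlo check of the same quantity."""
    rng = random.Random(seed)
    return sum(max(rng.random() for _ in range(n)) for _ in range(trials)) / trials


for n in (1, 4, 16):
    print(n, round(best_of_n_expected(n), 3), round(best_of_n_simulated(n), 3))
```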

Mistakes Are Just Another Style: we can divide the supposed pernicious influence of the generated samples into 2: random vs systematic error.

random error: is not a problem.

NNs are notoriously robust to random error like label errors. This is already the case if you look at the data these models are trained on. (On the DALL·E 2 subreddit, people will sometimes exclaim that the model understood their “gamboy made of crystal” prompt: “it knew I meant ‘Gameboy’ despite my typo, amazing!” You sweet, sweet summer child.) You can do wacky things like scramble 99% of ImageNet labels, and as long as there is a seed of correctly-classified images reflecting the truth, a CNN classifier will… mostly work? Yeah. (Or the observation with training GPT-style models that as long as there is a seed of high-quality text like Wikipedia to work with, you can throw in pretty crummy Internet text and it’ll still help.) To the extent that the models are churning out random errors (similar to how GPT-3 stochastic sampling often leads to just dumb errors), they aren’t really going to interfere with themselves.

You pay a price in compute, of course, and GPUs aren’t free, but it’s certainly no fatal problem.
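Why random label noise washes out: as long as the corrupted labels are spread uniformly over the classes, the true class keeps a plurality of the labels in its region, so class averages still point the right way. A toy Python sketch (not the ImageNet experiment itself) using two 1-D Gaussian classes and a nearest-centroid classifier, with 90% of the training labels replaced by coin flips; the data, the noise rate, and the classifier are all illustrative assumptions:

```python
import random
from statistics import mean

def make_data(n, rng):
    """Two 1-D Gaussian classes centred at -2 and +2, unit variance."""
    xs, ys = [], []
    for _ in range(n):
        y = rng.randrange(2)
        xs.append(rng.gauss(4.0 * y - 2.0, 1.0))
        ys.append(y)
    return xs, ys

def scramble(ys, noise_rate, rng):
    """With probability noise_rate, replace a label by a uniformly random class."""
    return [rng.randrange(2) if rng.random() < noise_rate else y for y in ys]

def fit_centroids(xs, ys):
    """Nearest-centroid 'classifier': the mean input for each observed label."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in (0, 1)}

def accuracy(centroids, xs, ys):
    """Fraction of points whose nearest centroid matches their true label."""
    preds = [min(centroids, key=lambda c: abs(x - centroids[c])) for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)

rng = random.Random(0)
train_x, train_y = make_data(20_000, rng)
test_x, test_y = make_data(5_000, rng)
noisy_y = scramble(train_y, noise_rate=0.9, rng=rng)

frac = sum(a == b for a, b in zip(noisy_y, train_y)) / len(train_y)
print(f"training labels still correct: {frac:.2f}")
print(f"clean labels -> test accuracy:  {accuracy(fit_centroids(train_x, train_y), test_x, test_y):.3f}")
print(f"90% scrambled -> test accuracy: {accuracy(fit_centroids(train_x, noisy_y), test_x, test_y):.3f}")
```

With 90% of labels randomized only about 55% of the training labels are right, yet both centroids stay on the correct side of zero and clean-test accuracy stays close to the noise-free classifier's; the price is that you need more data to pin the centroids down. With many classes the arithmetic is even more forgiving: at 99% noise over 1,000 classes, the true label still appears more than ten times as often as any particular wrong one.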

Systematic error: what people generally seem to have in mind is systematic error in generated samples. DALL·E 2 can’t generate hands, and so all future models are doomed to look like missives from the fish-people beneath the sea.

But the thing is, if an error is consistent enough for the critic to notice, then it’s also obvious enough for the model to notice. If you repeat a joke enough times, it can become funny; if you repeat an error enough, then it’s just your style. Errors will be detected, learned, and conditioned on for generation. Past work in deepfake detection has shown that even when models like StyleGAN2 are doing photorealistic faces, they are still detectable with high confidence by other models because of subtle issues like reflections in the eye or just invisible artifacts in the high-frequency domain where humans are blind. We all know the ‘Artstation’ prompt by now, and it goes the other way too: you can ask DALL·E 2 for “DeepDream” (remember the psychedelic dog-slugs?) (or ‘grainy 1940s photo’ or ‘1980s VHS tape’ or ‘out of focus shot’ or ‘drawn by a child’ or...). You can’t really ask it for ‘DALL·E’ images, and that’s a testament to the success.
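The point about artifacts “in the high-frequency domain where humans are blind” can be made concrete with a toy spectral check. This Python sketch is an illustration, not any published detector: it computes what fraction of a 1-D signal's non-DC energy lies above a cutoff frequency, using a naive DFT. A smooth row of pixel intensities scores essentially zero, while the same row with a faint checkerboard ripple (the kind of pattern upsampling layers can leave) does not. The cutoff and both signals are assumptions chosen for illustration:

```python
import cmath
import math
from typing import Sequence

def high_freq_energy_fraction(row: Sequence[float], cutoff: float = 0.25) -> float:
    """Share of non-DC spectral energy at frequencies above `cutoff` (cycles/sample).

    Naive O(n^2) DFT over every bin, so both halves of a real signal's
    symmetric spectrum are counted, consistent with Parseval's theorem.
    """
    n = len(row)
    mu = sum(row) / n
    centered = [x - mu for x in row]  # drop DC so overall brightness doesn't dominate
    total = high = 0.0
    for k in range(1, n):
        coef = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered))
        energy = abs(coef) ** 2
        freq = min(k, n - k) / n  # bins k and n-k share one physical frequency
        total += energy
        if freq > cutoff:
            high += energy
    return high / total if total > 0 else 0.0

n = 64
smooth = [0.5 + 0.4 * math.sin(2 * math.pi * t / n) for t in range(n)]
ripple = [x + 0.02 * (-1) ** t for t, x in enumerate(smooth)]  # faint checkerboard
print(f"smooth: {high_freq_energy_fraction(smooth):.6f}")
print(f"ripple: {high_freq_energy_fraction(ripple):.6f}")
```

Real detectors learn such cues rather than hard-coding them, but the principle is the same: a consistent artifact is a signal, and anything that can detect it can also condition on it.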

DALL·E 2 errors are just the DALL·E 2 style, and it will be promptable like anything else by future models, which will detect the errors (and/or the OA watermark in the lower right corner), and it will no more ‘break’ them than some melting watches in a Salvador Dali painting destroy their ability to depict a pocket-watch. Dali paintings have melty dairy products and timekeeping devices, and DALL·E 2 paintings have melty faces and hands, and that’s just the way those artistic genres are, but it doesn’t make you or future models think that’s how everything is.

(And if you can’t see a style, well then: Mission. Accomplished.)

If I were to worry about something, it would be something I have not seen mentioned: the effect of generative-model excellence on cultural evolution, not through being incapable of high-quality or diverse styles, but through being too capable, too good, too cheap, too fast. Ted Gioia in a recent grumpy article about sequelitis has a line:

Generative models may help push us towards a world in which alternative voices are everywhere and it has never been cheaper or easier for anyone in the world to create a unique new style which can complement their other skills and produce a vast corpus of high-quality works in that style, but also in which it has never been harder to be heard or to develop that style into any kind of ‘cultural impact’.

When content is infinite, what becomes scarce? Attention, i.e. selection. Communities rely on multilevel selection to incubate new styles and gradually percolate them up from niches to broader audiences. For this to work, those small communities need to be somewhat insulated from fashions, because a new style will never enter the world as fully optimized as old styles; they need investment, perhaps exploiting sunk costs, perhaps exploiting parasociality, so they can deeply explore it. Unfortunately, by rendering entry into a niche trivial, producing floods of excellent content, and accelerating cultural turnover & amnesia, generative models make it harder & harder to make any dent: by the time you show up to the latest thing with your long-form illustrated novel (which you could never have done by yourself), that’s soooo last week and your link is already buried 100 pages deep into the submission queue. Your 1000 followers on Twitter will think you’re a genius, and you will be, but that will be all it ever amounts to. I am already struck by just how many quite beautiful or interesting images I see posted from DALL-E 2 or Stable Diffusion that immediately disappear in the infinite scroll under the flood of further selections, never to be seen again, archived, or curated anywhere.

Can I get a link to someone who actually believes this? I’m honestly a little skeptical this is a common opinion, but wouldn’t put it past people I guess.

I’ve seen it several times on Twitter, Reddit, and HN, and that’s excluding people like Jack Clark, who has pondered it repeatedly in his Import.ai newsletter & used it as a theme in some of his short stories (but much more playfully & thoughtfully in his case, so he’s not the target here). I think probably the one that annoyed me enough to write this was when Imagen hit HN and the second lengthy thread was all about ‘poisoning the well’, with most commenters accepting the premise. It has also been asked here on LW at least twice in different places. (I’ve also since linked this writeup at least 4 times to various people asking this exact question about generative models choking on their own exhaust, and the rise of ChatGPT has led to it coming up even more often.)

Wow, this is going to explode picture books and book covers.

Hiring an illustrator for a picture book costs a lot, as it should given it’s bespoke art.

Now publishers will have an editor type in page descriptions, curate the best, and off they go. I can easily imagine a model improvement that remembers the boy or steampunk bear already drawn, etc.

Book cover designers are in trouble too. A wizard with lightning in his hands while a mountain explodes behind him: this can generate multiple options.

It’s going to get really wild when A/B split testing is involved. As you mention regarding ads you’d give the system the power to make whatever images it wanted and then split test. Letting it write headlines would work too.

Perhaps a full animated movie down the line. There are already programs that fill in gaps for animation poses. Boy running across field chased by robot penguins—animated, eight seconds. And so on. At that point it’s like Pixar in a box. We’ll see an explosion of directors who work alone, typing descriptions, testing camera angles, altering scenes on the fly. Do that again but more violent. Do that again but with more blood splatter.

Animation in the style of Family Guy seems a natural first step there. Solid colours, less variation, not messing with light rippling etc.

There’s a service authors use for illustrated chapter breaks: a black and white dragon snoozing, roses around knives, that sort of thing. No need to hire an illustrator now.

Conversion of all fiction novels to graphic novel format. At first it’ll be laborious, typing in scene descriptions, but graphic novel art is really expensive now. I can see a publisher hiring a freelancer to produce fifty graphic novels from existing titles.

With a bit of memory, so that once I choose the image I want for each character the model keeps using it, this is an amazing game changer for publishing.

Storyboarding requires no drawing skill now. Couple sprinting down dark alley chased by robots.

Game companies can use it to rapid prototype looks and styles. They can do all that background art by typing descriptions and saving the best.

We’re going to end up with famous illustrators who can’t draw but have created amazing styles using this and then made books.

Thanks so much for this post. This is wild astonishing stuff. As an author who is about to throw large sums of money at cover design, it’s incredible to think a commercial version of this could do it for a fraction of the price.

edit: just going to add some more

App design that requires art. For example, the many multiple-choice story apps that are costly to make because of art costs.

Split-tested cover designs for pretty much anything: books, music, albums, posters. Generate, run an ad campaign, test clicks. An ad business will be able to throw up 1,000 completely different variations in a day.

All catalogs/brochures that currently use stock art. Choosing stock art works, but it also sucks and is annoying because of the limited range. I’m imagining a stock art company could radically expand its selection to keep people buying from them. All those searches people have typed in are now prompts.

Illustrating Wikipedia. Many articles need images to demonstrate a point and rely on contributors making them. This could improve both the volume and the quality of images.

Graphic novels/comic books: essentially, writers who don’t need artists. To start it will mean describing single panels and manually adding speech text, but that’s still faster and cheaper than hiring an artist. For publishers: why pick and choose what becomes a graphic novel when you can just make every title into a graphic novel?

YouTube/video interstitial art. No more stock photos.

Licensed characters (think Paw Patrol, Disney, DreamWorks): creation of endless poses and scenes. No more waiting for DreamWorks to produce 64 pieces of black and white line art when a model may be able to take the movie frames and create images from them.

Adaptations—the 24-page storybook of Finding Nemo. The 24-page storybook of Pinocchio. The picture book of Fast and The Furious.

Looking further ahead, we might even see a drop-down option for existing comics and graphic novels in a different art style: reading the same Spider-Man story, but illustrated by someone else.

Character design—for games, licensing, children’s animation. This radically expands the volume of characters that can be designed, selected and then chosen for future scenes.

With some sort of “keep this style”, “save that character” method, it really would be possible to generate a 24-page picture book in an incredibly short amount of time.

Quite frankly, knowing how it works, I’d write a picture book of a kid going through different art styles in their adventure. Chasing their puppy through the art museum and the dog runs into a painting. First Van Gogh, then Da Vinci and so on. The kid changes appearance due to the model but that works for the story.

As a commercial product, this system would be incredible. I expect we’ll see an explosion in the number of picture books, graphic novels, posters, art designs, Etsy prints, downloadable files and so on. Publishers with huge backlists would be a prime customer.

Video is on the horizon (see the video generation bibliography, e.g. FDM), in the 1-3 year range. I would say that video is solved conceptually, in the sense that if you had 100x the compute budget, you could do DALL-E-2-but-for-video right now. After all, if you can do a single image which is sensible and logical, then a video is simply doing that repeatedly. Nor is there any shortage of video footage to work with. The problem is that a video is a lot of images: at least 24 images per second, so for the same compute you could have 192 different samples or one 8-second clip. Most people will prefer the former: decorating, say, a hundred blog posts with illustrations is more useful than a single OK short video clip of someone dancing.
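The budget arithmetic, as a minimal Python sketch that assumes, as the naive approach does, that every video frame costs as much as one independent still image, with no frame reuse:

```python
import math

def frames_needed(seconds: float, fps: int = 24) -> int:
    """Frames to generate for a clip when every frame is sampled independently."""
    return math.ceil(seconds * fps)

def clip_seconds_for_budget(image_budget: int, fps: int = 24) -> float:
    """Seconds of video that a budget of still-image samples would buy instead."""
    return image_budget / fps

print(frames_needed(8))                          # 192: one 8 s clip costs 192 stills
print(f"{clip_seconds_for_budget(100):.2f}")     # 4.17: 100 blog illustrations' worth
```

Under this assumption, the frame-reuse tricks discussed next are ways of driving the effective per-frame cost far below that of one full image.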

So video’s game is mostly about whether you can come up with an approach which can somehow economize on that, like clever tricks in reusing frames to update only a little while updating a latent vector, as a way to take a shortcut to that point in the future where you had so much compute that the obvious Transformer & Diffusion models can be run in reasonable compute-budgets & video ‘just worked’.

And either way, it may be the revolution that robotics requires (video is a great way to plan).

Following up on your logic here, the one thing that DALLE-2 hasn’t done, to my knowledge, is generate entirely new styles of art, the way that art deco or pointillism were truly different from their predecessors.

Perhaps that’ll be the new role of human illustrators? Artists, instead of producing their own works to sell, would create their own styles, generating libraries of content for future DALLEs to be trained on. They would then get a percentage of whatever DALLE makes from image sales if the style used was their own.

Can DALL·E Create New Styles?

Most DALL·E questions can be answered by just reading its paper or its competitors’, or are dumb. This is probably the most interesting question that can’t be, and also one of the most common: can DALL·E (which we’ll use just as a generic representative of image generative models, since no one argues that one arch or model can and the others cannot AFAIK) invent a new style? DALL·E is, like GPT-3 in text, admittedly an incredible mimic of many styles, and appears to have gone well beyond any mere ‘memorization’ of the images depicting styles because it can so seamlessly insert random objects into arbitrary styles (hence all the “Kermit Through The Ages” or “Mughal space rocket” variants); but simply being a gifted epigone of most existing styles is no guarantee that you can create a new one.

If we asked a Martian what ‘style’ was, it would probably conclude that “‘style’ is what you call it when some especially mentally-ill humans output the same mistakes for so long that other humans wearing nooses try to hide the defective output by throwing small pieces of green paper at the outputs, and a third group of humans wearing dresses try to exchange large white pieces of paper with black marks on them for the smaller green papers”.

Not the best definition, but it does provide one answer: since DALL·E is just a blob of binary which gets run on a GPU, it is incapable of inventing a style because it can’t take credit for it or get paid for it or ally with gallerists and journalists to create a new fashion, so the pragmatic answer is just ‘no’, no more than your visual cortex could. So, no. This is unsatisfactory, however, because it just punts to, ‘could humans create a new style with DALL·E?’ and then the answer to that is simply, ‘yes, why not? Art has no rules these days: if you can get someone to pay millions for a rotting shark or half a mill for a blurry DCGAN portrait, we sure as heck can’t rule out someone taking some DALL·E output and getting paid for it.’ After all, DALL·E won’t complain (again, no more than your visual cortex would). Also unsatisfactory, but at least testable: has anyone gotten paid yet? (Of course artists will usually try to minimize or lie about it to protect their trade secrets, but at some point someone will ’fess up or it will become obvious.) So, yes.

Let’s take ‘style’ to be some principled, real, systematic visual system of esthetics. Regular use of DALL·E, of course, would not produce a new style: what would be the name of this style in the prompt? “Unnamed new style”? Obviously, if you prompt DALL·E for “a night full of stars, Impressionism”, you will get what you ask for. What are the Internet-scraped image/text caption pairs which would correspond to the creation of a new style, exactly? “A dazzling image of an unnamed new style being born | Artstation | digital painting”? There may well be actual image captions out there which do say something like that, but surely far too few to induce some sort of zero-shot new-style creation ability. Humans too would struggle with such an instruction. (Although it’s fun to imagine trying to commission that from a human artist on Fiverr for $50, say: “an image of a cute cat, in a totally brand-new never before seen style.” “A what?” “A new style.” “I’m really best at anime-style illustrations, you know.” “I know. Still, I’d like ‘a brand new style’. Also, I’d like to commission a second one after that too, same prompt.” “...would you like a refund?”)

Still, perhaps DALL·E might invent a new style anyway just as part of normal random sampling? Surely if you generated enough images it’d eventually output something novel? However, DALL·E isn’t trying to do so; it is ‘trying’ to do something closer to generating the single most plausible image for a given text input, or to some minor degree, sampling from the posterior distribution of the Internet images + commercial licensed image dataset it was trained on. To the extent that a new style is possible at all, generating one ought to be extremely rare, because it is not, in fact, in the training data distribution (by definition, it’s novel); and even if DALL·E 2 ‘mistakenly’ did so, that newborn style would be vanishingly unlikely compared to all the popular styles: 1 in millions or billions.

Let’s say it defied the odds and did anyway, since OA has generated millions of DALL·E 2 samples already according to their PR. ‘Style’ is something of a unicorn: if DALL·E could (or had already) invented a new style… how would we know? If Impressionism had never existed and Van Gogh’s Starry Night flashed up on the screen of a DALL·E 2 user late one night, they would probably go ‘huh, weird blobby effect, not sure I like it’ and then generate new completions—rather than herald it as the ultimate exemplar of a major style and destined to be one of the most popular (to the point of kitsch).

Finally, if someone did seize on a sample from a style-less prompt because it looked new to them and wanted to generate more, they would be out of luck: DALL·E 2 can generate variations on an image, yes, but this unavoidably is a mashup of all of the content and style and details in an image. There is not really any native way to say ‘take the cool new style of this image and apply it to another’. You are stuck with hacks: you can try shrinking the image to uncrop, or halve it and paste in a target image to infill, or you can go outside DALL·E 2 entirely and use it in a standard style-transfer NN as the original style image… But there is no way to extract the ‘style’ as an easily reused keyword or tool the way you can apply ‘Impressionism’ to any prompt.

This is a bad situation. You can’t ask for a new style by name because it has none; you can’t talk about it without naming it because no one does that for new real-world styles, they name it; and if you don’t talk about it, a new style has vanishingly low odds of being generated, and you wouldn’t recognize it, nor could you make any good use of it if you did. So, no.

DALL·E might be perfectly capable of creating a new style in some sense, but the interface renders this utterly opaque, hidden dark knowledge. We can be pretty sure that DALL·E knows styles as styles rather than some mashup of physical objects/colors/shapes: just like large language models imitate or can be prompted to be more or less rude, more or less accurate, more or less calibrated, generate more or less buggy or insecure code, etc., large image models disentangle and learn pretty cool generic capabilities: not just individual styles, but ‘award-winning’ or ‘trending on Artstation’ or ‘drawn by an amateur’. Further, we can point to things like style transfer: you can use a VGG CNN trained solely on ImageNet, with near-zero artwork in it (and definitely not a lot of Impressionist paintings), to fairly convincingly stylize images in the style of “Starry Night”—VGG has never seen “Starry Night”, and may never have seen a painting, period, so how does it do this?

Where DALL·E knows about styles is in its latent space (or VGG’s Gram matrix embedding): the latent space is an incredibly powerful way to boil down images, and manipulation of the latent space can go beyond ordinary samples to make, say, a face StyleGAN generate cars or cats instead—there’s a latent for that. Even things which seem to require ‘extrapolation’ are still ‘in’ the capacious latent space somewhere, and probably not even that far away: in very high dimensional spaces, everything is ‘interpolation’ because everything is an ‘outlier’; why should a ‘new style’ be all that far away from the latent points corresponding to well-known styles?

All text prompts and variations are just hamfisted ways of manipulating the latent space. The text prompt is just there to be encoded by CLIP into a latent space. The latent space is what encodes the knowledge of the model, and if we can manipulate the latent space, we can unlock all sorts of capabilities like in face GANs, where you can find latent variables which correspond to, say, wearing eyeglasses or smiling vs frowning—no need to mess around with trying to use CLIP to guide a ‘smile’ prompt if you can just tweak the knob directly.
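One simple way to find such a knob, assuming you can read out latent vectors for examples with and without an attribute, is to take the difference of their means; turning the knob is then just vector addition. A pure-Python sketch with made-up 3-D latents (published face-GAN editing methods such as InterFaceGAN fit a linear boundary instead of taking a mean difference, but they edit by the same kind of addition along a direction):

```python
from typing import List, Sequence

Vector = List[float]

def mean_vector(vs: Sequence[Sequence[float]]) -> Vector:
    """Coordinate-wise mean of a list of equal-length vectors."""
    n = len(vs)
    return [sum(col) / n for col in zip(*vs)]

def attribute_direction(with_attr, without_attr) -> Vector:
    """Difference of means: a crude but common estimate of an attribute 'knob'."""
    a, b = mean_vector(with_attr), mean_vector(without_attr)
    return [x - y for x, y in zip(a, b)]

def turn_knob(latent: Sequence[float], direction: Sequence[float], strength: float) -> Vector:
    """Move a latent along the attribute direction by `strength`."""
    return [z + strength * d for z, d in zip(latent, direction)]

# Hypothetical latents in which coordinate 0 happens to encode "smiling".
smiling = [[2.0, 0.5, 1.0], [2.0, -0.5, -1.0]]
neutral = [[0.0, 0.5, 1.0], [0.0, -0.5, -1.0]]
smile = attribute_direction(smiling, neutral)   # [2.0, 0.0, 0.0]
print(turn_knob([0.1, 0.3, -0.2], smile, 0.5))  # coordinate 0 rises by 1.0
```

The decoded image would then be the same face, smiling more: no prompt engineering involved, only direct access to the latent.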

Unless, of course, you can’t tweak the knob directly, because it’s behind an API and you have no way of getting or setting the embedding, much less doing gradient ascent. Yeah, then you’re boned. So the answer here becomes, ‘no, for now: DALL·E 2 can’t in practice because you can’t use it in the necessary way, but when some equivalent model gets released, then it becomes possible (growth mindset!).’

Let’s say we have that model, because it surely won’t be too long before one gets released publicly, maybe a year or two at the most. And public models like DALL·E Mini might be good enough already. How would we go about it concretely?

‘Copying style embedding’ features alone would be a big boost: if you could at least cut out and save the style part of an embedding and use it for future prompts/editing, then when you found something you liked, you could keep it.
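If the style part of an embedding could be isolated as a linear subspace (a strong assumption: here, a hypothetical orthonormal `style_basis`, e.g. directions estimated from labeled examples), then ‘saving’ a style is projecting onto that subspace, and reusing it is swapping that component into another latent while leaving the rest alone. A pure-Python sketch:

```python
from typing import List, Sequence

Vector = List[float]

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def project(z: Sequence[float], basis: Sequence[Sequence[float]]) -> Vector:
    """Component of z inside span(basis); the basis vectors must be orthonormal."""
    out = [0.0] * len(z)
    for u in basis:
        c = dot(z, u)
        out = [o + c * ui for o, ui in zip(out, u)]
    return out

def save_style(z: Sequence[float], style_basis) -> Vector:
    """Keep only the style part of an embedding, for later reuse."""
    return project(z, style_basis)

def apply_style(saved_style: Sequence[float], target: Sequence[float], style_basis) -> Vector:
    """Replace target's style component with a previously saved one."""
    current = project(target, style_basis)
    return [t - c + s for t, c, s in zip(target, current, saved_style)]

# Toy: pretend the last coordinate of a 3-D latent is pure "style".
style_basis = [[0.0, 0.0, 1.0]]
liked = save_style([5.0, 5.0, 9.0], style_basis)        # [0.0, 0.0, 9.0]
print(apply_style(liked, [1.0, 2.0, 3.0], style_basis))  # [1.0, 2.0, 9.0]
```

In a real model the style directions would be entangled with content to some degree, so the transfer would be approximate rather than clean.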

‘Novelty search’ has a long history in evolutionary computation, and offers a lot of different approaches. Defining ‘fitness’ or ‘novelty’ is a big problem here, but the models themselves can be used for that: novelty as compared against the data embeddings, optimizing the score of a large ensemble of randomly-initialized NNs (see also my recent essay on constrained optimization as esthetics) or NNs trained on subsets (such as specific art movements, to see what ‘hyper Impressionism’ looks like) or...

Preference-learning reinforcement learning is a standard approach: try to train novelty generation directly. DRL is always hard though.

One approach worth looking at is “CAN: Creative Adversarial Networks, Generating ‘Art’ by Learning About Styles and Deviating from Style Norms”, Elgammal et al 2017. It’s a bit AI-GA in that it takes an inverted U-curve theory of novelty/art: a good new style is essentially any new style which you don’t like but your kids will in 15 years, because it’s a lot like, but not too much like, an existing style. CAN can probably be adapted to this setting.
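CAN's key extra signal is a “style ambiguity” penalty: the generator is rewarded when a style classifier cannot tell which known style its output belongs to, while the ordinary GAN discriminator still insists that the output look like art. A simplified Python sketch of that penalty, written as cross-entropy against a uniform distribution over K styles (the paper's exact loss term differs in detail, so treat this as an approximation):

```python
import math
from typing import Sequence

def style_ambiguity_loss(style_probs: Sequence[float], eps: float = 1e-12) -> float:
    """Cross-entropy between the uniform distribution and a classifier's style posterior.

    Smallest (value log K) when the classifier is maximally unsure which known
    style an image belongs to; grows as any single style dominates.
    """
    k = len(style_probs)
    if k == 0 or not math.isclose(sum(style_probs), 1.0, abs_tol=1e-6):
        raise ValueError("style_probs must be a non-empty probability distribution")
    return -sum(math.log(max(p, eps)) for p in style_probs) / k

uniform = [0.25, 0.25, 0.25, 0.25]    # "could be any of four styles"
confident = [0.97, 0.01, 0.01, 0.01]  # "clearly Impressionism"
print(f"{style_ambiguity_loss(uniform):.3f}")    # 1.386 (= log 4)
print(f"{style_ambiguity_loss(confident):.3f}")
```

In training, this term would be added with some weight to the usual adversarial loss, which is where the new-but-not-too-new pressure comes from: stray too far and the discriminator rejects the image as not art; stay too close and the style classifier recognizes it.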

CAN is a multi-agent approach in trying to create novelty, but I think you can probably do something much simpler by directly targeting this idea of new-but-not-too-new, by exploiting embeddings of real data.

If you embed & cluster your training data using the style-specific latents (which you’ve found by one of many existing approaches, like embedding the names of stylistic movements to see which latents they average out to, or by training a classifier, or just rating manually by eye), styles will form island-chains of works in each style, surrounded by darkness. One can look for suspicious holes, areas of darkness which get a high likelihood from the model, but are anomalously underrepresented in terms of how many embedded datapoints are nearby; these are ‘missing’ styles. The missing styles around a popular style are valuable directions to explore, something like alternative futures: ‘Impressionism wound up going thattaway but it could also have gone off this other way’. These could seed CAN approaches, or they could be used to bias regular generation: what if, when a user prompts ‘Impressionist’ and gets back a dozen samples, each one were deliberately diversified to sample from a different missing style immediately adjacent to the ‘Impressionist’ point?
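The hole-hunting idea can be sketched in a few lines: flag candidate points that the model scores as plausible but that have few or no training embeddings nearby. In this Python sketch everything is assumed: `log_likelihood` is a hypothetical stand-in for the model's density, the 2-D points stand in for style-specific latents, and `radius`, `min_loglik`, and `max_count` are arbitrary thresholds:

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, ...]

def local_count(p: Point, data: Sequence[Point], radius: float) -> int:
    """Number of embedded training points within `radius` of p."""
    return sum(1 for d in data if math.dist(p, d) <= radius)

def find_holes(candidates: Sequence[Point], data: Sequence[Point],
               log_likelihood: Callable[[Point], float],
               radius: float = 1.0, min_loglik: float = -2.0,
               max_count: int = 0) -> List[Point]:
    """Points the model finds plausible but the data leaves underrepresented."""
    return [p for p in candidates
            if log_likelihood(p) >= min_loglik
            and local_count(p, data, radius) <= max_count]

# Two "island" styles on the x-axis, with darkness between them.
data = [(0.0, 0.0), (0.5, 0.0), (3.5, 0.0), (4.0, 0.0)]
# Hypothetical model density: plausible anywhere near the x-axis.
toy_loglik = lambda p: -p[1] ** 2
candidates = [(0.0, 0.0), (2.0, 0.0), (2.0, 3.0)]
print(find_holes(candidates, data, toy_loglik))  # [(2.0, 0.0)]
```

A real version would evaluate many candidates, e.g. by sampling around each known style's cluster, and could hand the flagged points to the generator as starting latents.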

So, maybe.

An interesting example of what might be a ‘name-less style’ in a generative image model, Stable Diffusion in this case (DALL-E 2 doesn’t give you the necessary access so users can’t experiment with this sort of thing): what the discoverer calls the “Loab” (mirror) image (for lack of a better name—what text prompt, if any, this image corresponds to is unknown, as it’s found by negation of a text prompt & search).

‘Loab’ is an image of a creepy old desaturated woman with ruddy cheeks in a wide face, which when hybridized with other images, reliably induces more images of her, or recognizably in the ‘Loab style’ (extreme levels of horror, gore, and old women). This is a little reminiscent of the discovered ‘Crungus’ monster, but ‘Loab style’ can happen, they say, even several generations of image breeding later when any obvious part of Loab is gone—which suggests to me there may be some subtle global property of descendant images which pulls them back to Loab-space and makes it ‘viral’, if you will. (Some sort of high-frequency non-robust or adversarial or steganographic phenomenon?) Very SCP.

Apropos of my other comments on weird self-fulfilling prophecies and QAnon and stand-alone-complexes, it’s also worth noting that since Loab is going viral right now, Loab may be a name-less style now, but in future image generator models feeding on the updating corpus, because of all the discussion & sharing, it (like Crungus) may come to have a name - ‘Loab’.

I wonder what happens when you ask it to generate

> “in the style of a popular modern artist <unknown name>”

or

> “in the style of <random word stem>ism”.

You could generate both types of prompts with GPT-3 if you wanted, making it a complete pipeline.

“Generate conditioned on the new style description” may be ready to be used even if “generate conditioned on an instruction to generate something new” is not. This is why a decomposition into new style description + image conditioned on it seems useful.

If this is successful, then more of the high-level idea generation involved can be shifted onto a language model by letting it output a style description. Leave blanks in it and run it for each blank, while ensuring generations form a coherent story.

> “<new style name>, sometimes referred to as <shortened version>, is a style of design, visual arts, <another area>, <another area> that first appeared in <country> after <event>. It influenced the design of <objects>, <objects>, <more objects>. <new style name> combined <combinatorial style characteristic> and <another style characteristic>. During its heyday, it represented <area of human life>, <emotion>, <emotion> and <attitude> towards <event>.”

DALL-E can already model the distribution of possible contexts (image backgrounds, other objects, states of the object) + possible prompt meanings. And it can go from the description 1) to high-level concepts, 2) to ideas for implementing these concepts (relative placement of objects, ideas for how to merge concepts), 3) to low-level details. All within 1 forward pass, for all prompts! This is what astonished me most about DALL-E 1.

Importantly, placing, implementing, and combining concepts in a picture is done in a novel way without a provided specification. For style generation, it would need to model a distribution over all possible styles and use each style, all without a style specification. This doesn’t seem much harder to me and could probably be achieved with slightly different training. The procedure I described is just supposed to introduce helpful stochasticity in the prompt and use an established generation conduit.
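The prompt-generation half of that pipeline is easy to sketch. In place of GPT-3, this Python example fills the “<random word stem>ism” template with random consonant-vowel syllables; the syllable inventory and the function names are illustrative assumptions. Seeding the generator makes each invented style name reproducible, so the same nonsense keyword can be sent to an image model repeatedly to check whether it yields a consistent style:

```python
import random
from typing import List

CONSONANTS = "bdfgklmnprstvz"
VOWELS = "aeiou"

def random_stem(rng: random.Random, syllables: int = 2) -> str:
    """A pronounceable nonsense stem built from consonant-vowel syllables."""
    return "".join(rng.choice(CONSONANTS) + rng.choice(VOWELS) for _ in range(syllables))

def style_prompts(subject: str, n: int = 3, seed: int = 0) -> List[str]:
    """Prompts asking for `subject` in invented, never-before-named styles."""
    rng = random.Random(seed)
    return [f"{subject}, in the style of {random_stem(rng).capitalize()}ism" for _ in range(n)]

for prompt in style_prompts("a woman riding a horse"):
    print(prompt)
```

Swapping `random_stem` for a language model that fills in the longer style-description template would add the “coherent story” part; the image side stays the same.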

...Hmm, now I’m wondering if feeding DALL-E an “in the style of [ ]” request with random keywords in the blank might cause it to produce replicable weird styles, or if it would just get confused and do something different every time.

I’d love to see it tried. Maybe even ask for “in the style of DALLE-2”?

“A woman riding a horse, in the style of DALLE-2”

I have no idea how to interpret this. Any ideas?

It seems like we got a variety of different styles, with red, blue, black, and white as the dominant colors.

Can we say that DALLE-2 has a style of its own?

I think DALL-E has been nerfed (as a sort of low-grade “alignment” effort) and some of what you’re talking about as “limitations” are actually bugs that were explicitly introduced with the goal of avoiding bad press.

It wouldn’t surprise me if they just used intelligibility tools to find the part of the vector space that represents “the face of any famous real person” and then applied some sort of noise blur to the model itself, as deployed?

Except! Maybe not a “blur” but some sort of rotation of a subspace or something? This hint is weirdly evocative:

The alternative of having it ever ever ever produce a picture of “Obama wearing <embarrassing_thing>” or “Trump sleeping in a box splashed with bright red syrup” or some such… that stuff might go viral… badly...

...so any single thing anyone manages to make has to be pre-emptively and comprehensively nerfed in general?

By comparison, it costs almost nothing to have people complain about how it did some totally bizarre other thing while refusing to shorten the hair of someone who might look too much like “Alan Rickman playing Snape” such that you might see a distinctive earlobe.

Sort of interestingly: in a sense, this damage-to-the-model is a (super low tech) “alignment” strategy!

The thing they might have wanted was to just say “while avoiding any possible embarrassing image (that could be attributed to the company that made the model making the image) in the circa-2022 political/PR meta… <user provided prompt content>”.

But the system isn’t a genie that can understand the spirit and intent of wishes (yet?) so instead… just reach into the numbers and scramble them in certain ways?

In this sense, I think we aren’t seeing “DALL-E 2 as trained” but rather “DALL-E 2 with some sort of interesting alignment-related lobotomy to make it less able to accidentally stir up trouble”.

Yes, I thought their ‘horse in ketchup’ example made the point well that it’s an ‘artificial stupidity’ Harrison-Bergeron sort of approach rather than a genuine solution. (And then, like BPEs, there seems to be unpredictable fallout which would be hard to benchmark and which no one apparently even thought to benchmark—despite whatever they did on May 1st to upgrade quality, the anime examples still struggle to portray specific characters like Kyuubey, where Swimmer’s examples are all very Kyuubey-esque but never actually Kyuubey. I am told the CLIP used is less degraded, and so we’re probably seeing the output of ‘CLIP models which know about characters like Kyuubey combined with other models which have no idea’.)

Thread of all known anime examples.

That’s how you know it’s not a problem of pulling in lots of poorer-quality anime art. First, poorer-quality doesn’t impede learning that much; remember, you just prompt for high-quality. Compute allowing, more n is always better. And second, if it was a master of poorer-quality anime drawings, it wouldn’t be desperately ‘sliding away’, if you will, like squeezing a balloon, from rendering true anime, as opposed to CGI of anime or Western fanart of anime or photographs of physical objects related to anime. It would just do it (perhaps generating poorer-quality anime), not generate high-quality samples of everything but anime. (See my comment there for more examples.)

The problem is it’s somehow not trained on anime. Everything it knows about anime seems to come primarily from adjacent images and the CLIP guidance (which does know plenty about anime, but we also know that pixel generation from CLIP guidance never works as well).

A prompt I’d love to see: “Anomalocaris Canadensis flying through space.” I’m really curious how well it does with an extinct species which has very few existing artistic depictions. No text->image model I’ve played with so far has managed to create a convincing anomalocaris, but one interestingly did know it was an aquatic creature and kept outputting lobsters.

Going by the Wikipedia page reference, I think it got it somewhat closer than “lobsters” at least?

I’d rate these highly: there are many forms of anomalocarids (https://en.m.wikipedia.org/wiki/Radiodonta#/media/File%3A20191201_Radiodonta_Amplectobelua_Anomalocaris_Aegirocassis_Lyrarapax_Peytoia_Laggania_Hurdia.png) and it seems to have picked a wide variety beyond just canadensis, but I’m thoroughly impressed that it got the form right in nearly all 10.

Challenging prompt ideas to try:

A row of five squares, in which the rightmost four squares each have twice the area of the square to their immediate left.

Screenshots from a novel game comparable in complexity to tic-tac-toe, sufficient to demonstrate the rules of the game.

Elon Musk signing his own name in ASL.

The hands of a pianist as they play the first chord from Chopin’s Polonaise in A-flat major, Op. 53

Pages from a flip book of a water glass spilling.

First one: ...yeah, no. DALL-E 2 can’t count to five, so it definitely doesn’t have the abstract reasoning to double areas. The image below is literally just “a horizontal row of five squares”.
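For comparison, the geometry the first prompt asks for is simple to state: if each square has twice the area of its left neighbor, each side is √2 times longer, so across five squares the side grows by a factor of (√2)⁴ = 4 and the area by 2⁴ = 16. A minimal Python sketch, assuming an arbitrary starting side length of 1 (the function name is purely illustrative):

```python
import math


def doubling_squares(n=5, first_side=1.0):
    """Side lengths and areas for a row of n squares in which each square
    has twice the area of the square to its immediate left.

    Doubling the area multiplies the side length by sqrt(2).
    """
    sides = [first_side * math.sqrt(2) ** i for i in range(n)]
    areas = [s * s for s in sides]
    return sides, areas


sides, areas = doubling_squares()
for i, (s, a) in enumerate(zip(sides, areas), start=1):
    print(f"square {i}: side {s:.3f}, area {a:.3f}")
```

The rightmost square ends up with four times the side length of the leftmost one, a proportion that is easy to check by eye in any generated image.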

Very interesting that it can’t manage to count to five. That to me is strong evidence that DALL-E’s not “constructing” the scenes it depicts. I guess it has more of a sense of relationships among scene element components? Like, “coffee shop” means there’s a window-like element, and if there’s a window element, then there’s some sort of scene through the window, and that’s probably some sort of rectangular building shape. Plausible guesses all the way down to the texture and color of skin or fur. Filling in the blanks on steroids, but with a complete lack of design or forethought.

Yeah, this matches with my sense. It has a really extensive knowledge of the expected relationships between elements, extending over a huge number of kinds of objects, and so it can (in one of the areas that are easy for it) successfully fill in the blanks in a way that looks very believable, but the extent to which it has a gears-y model of the scene seems very minimal. I think this also explains its difficulty with non-stereotypical scenes that don’t have a single focal element – if it’s filling in the blanks for both “pirate ship scene” and “dogs in Roman uniforms scene” it gets more confused.

You’re making my dreams come true. I really want to see the Elon Musk one :)

Edit: or the water glass spilling. That’s the one whose performance I’m most uncertain about.

The Elon Musk one has realistic faces, so I can’t share it; I have, however, confirmed that DALL-E does not speak ASL with “The ASL word for ‘thank you’”:

We’ve got some funky fingers here: six fingers, a sort of double-tipped finger, and an extra joint on the index finger in picture (1, 4). Fascinating.

It seems to be mostly trying to go for the “I love you” sign, perhaps because that’s one of the most commonly represented ones.

I’m curious why this prompt resulted in overwhelmingly black-looking hands, especially considering that all the other prompts I see result in white subjects being represented. Any theories?

It’s unnatural, yes: ASL is predominantly white, and people involved in ASL are even more so (I went to NTID and the national convention, so can speak first-hand, but you can also check Google Images for that query and it’ll look like what you expect, which is amusing because ‘Deaf’ culture is so university & liberal-centric). So it’s not that ASL diagrams or photographs in the wild really do look like that; they don’t.

Overrepresentation of DEI material in the supersekrit licensed databases would be my guess. Stock photography sources are rapidly updated for fashions, particularly recent ones, and you can see this occasionally surfacing in weird queries. (An example going around Twitter which you can check for yourself: “happy white woman” in Google will turn up a lot of strange photos for what seems like a very easy, straightforward query.) Which parts are causing it is a better question: I wouldn’t expect there to be much Deaf stock photo material which had been updated, or much ASL material at all, so maybe there’s bleedthrough from all of the hand-centric iconography (e.g. the ‘Black Power salute’, upraised Marxist fists, protests)? Perhaps there is so much of the latter and so little of the former that the latter becomes the default kind of hand imagery.

It must be something like that, but it still feels like there’s a hole there. The query is for “ASL”, not “Hands”, and these images don’t look like something from a protest. The top left might be vaguely similar to some kind of street gesture.

I’m curious what the role of the query writer is. Can you ask DALL-E for “this scene, but with black skin colour”? I got a sense that updating areas was possible but inconsistent. Could DALL-E learn to return more of X to a given person by receiving feedback? I really don’t know how complicated the process gets.

ASL will always be depicted by a model like DALL-E as hands; I am sure that there are non-pictorial ways to write down ASL but I can’t recall them, and I actually took ASL classes. So that query should always produce hands in it. Then because actual ASL diagrams will be rare and overwhelmed by leakage from more popular classes (keep in mind that deafness is well under 1% of the US population, even including people like me who are otherwise completely uninvolved and invisible, and basically any political fad whatsoever will rapidly produce vastly more material than even core deaf topics), and maybe some more unCLIP looseness...

OA announced its new ‘reducing bias’ DALL-E 2 today. Interestingly, it appears to do so by secretly editing your prompt to inject words like ‘black’ or ‘female’.

“Pages from a flip book of a water glass spilling” I...think DALL-E 2 does not know what a flip book is.

I...think it just does not understand the physics of water spilling, period.

Relatedly, DALL-E is a little confused about how Olympic swimming is supposed to work.

This is interesting, because you’d think it would at least understand that the cup should be tipping over. It makes me think it is treating the cup and the water as two distinct objects, and doesn’t really understand that the cup tipping over would be what causes the water to spill. It does understand that the water should be located “inside” the cup, but probably purely in an “it looks like the water is inside the cup” sense. DALL-E doesn’t seem to understand the idea of “inside” as an actual location.

I wonder if its understanding of the world is just 2D or semi-3D. Perhaps training it on photogrammetry datasets (photos of the same objects but from multiple points of view) would improve that?

Slightly reworded to “a game as complex tic-tac-toe, screenshots showing the rules of the game”, I am pretty sure DALL-E is not able to generate and model consistent game rules though.

At least it seems to have figured out we wanted a game that was not tic-tac-toe.

Depends on if it generates stuff like this if you ask it for tic-tac-toe :P

What about the combo: a tic-tac-toe board position, a tic-tac-toe board position with X winning, and a tic-tac-toe board position with O winning. Would it give realistic positions matching the descriptions?
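To make “realistic positions” concrete, here is a minimal sketch of the standard reachability check for tic-tac-toe, assuming X moves first and a board written as a 9-character string of `X`, `O`, and `.` read row by row (that encoding and the function names are illustrative choices, not anything DALL-E uses). A position is reachable only if X has as many marks as O or one more, at most one player has won, and the mark counts match whoever moved last.

```python
# All eight winning lines on a 3x3 board, cells indexed 0..8 row by row.
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def wins(board: str, player: str) -> bool:
    """True if `player` occupies all three cells of some line."""
    return any(all(board[i] == player for i in line) for line in LINES)


def classify(board: str) -> str:
    """Return 'invalid', 'X wins', 'O wins', or 'in progress or draw'."""
    if len(board) != 9 or set(board) - set("XO."):
        return "invalid"
    x, o = board.count("X"), board.count("O")
    x_won, o_won = wins(board, "X"), wins(board, "O")
    # X moves first, so X has either as many marks as O or exactly one more.
    if x not in (o, o + 1):
        return "invalid"
    # Play stops at the first win, so both players cannot have won.
    if x_won and o_won:
        return "invalid"
    # X's winning move leaves X one mark ahead; O's leaves the counts equal.
    if x_won:
        return "X wins" if x == o + 1 else "invalid"
    if o_won:
        return "O wins" if x == o else "invalid"
    return "in progress or draw"


print(classify("XXXOO...."))  # X completed the top row
print(classify("XXXOOO..."))  # both rows complete: unreachable
```

Checking generated boards this way would separate images that merely look like tic-tac-toe from ones that depict a position a real game could reach.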

I really doubt it, but I’ll give it a try once I’m caught up on all the requested prompts here!

Thanks for this thorough account. The bit where you tried to shorten the hair really made me laugh.

I’ve seen this prompt programming bug noted on Twitter by DALL-E 2 users as well. With earlier models, there didn’t seem to be that much difference between ‘by X’ vs ‘in the style of X’, but with the new high-end models, perhaps there is now?

The speculation why is that ‘in the style of X’ is generally inferior because you are now tapping into epigones, imitations, and loosely related images rather than the masters themselves. So it’s become a version of ‘trending on Artstation’: if you ask for X, you ask for the best; if you ask for in the style of X, you ask for broader (and regressed-to-the-mean?) things.

Thanks for that awesome summary.

I tried to generate characters (Dark Elf / Drow), magic items, and scenes in a Dungeons & Dragons or Magic: The Gathering style, like so many cool images on Pinterest:

https://www.pinterest.fr/rbarlow177/dd-character-art/

It was very, very difficult!

- The character style is very crappy, like old Google Search clip art

- Some “technical terms” like Dark Elf or Drow match nothing

The idea was to generate a medieval fantasy style for a card game like Magic, but it’s very hard to get something good. I failed after 30+ attempts.

This is great! I’m generally most interested to see people finding weaknesses of new DL tools, which in and of itself is a sign of how far the technology has progressed.

I’m having real trouble finding out about DALL-E and copyright infringement. There are several comments about how DALL-E can “copy a style” without it being a violation of the artist’s rights, but seriously, I’m appalled. I’m even having trouble looking at some of the images without feeling “the death of artists.” It satisfies the envy of anyone who ever wanted to do art without making the effort, but on whose backs? Back in the day, we thought that open source would be good advertising, but there is NO reference to any sources. I’m already finding it nearly impossible to find who authored any work on the web, and this kind of program, for all its genius, makes that completely impossible. Yes, it’s a great tool, but where is the responsibility? Where is the bread crumb trail back to the talented human? This is a full example of evil AI, because the human who was the originator of the art is never acknowledged, so everyone on the user side thinks that these images were just “created” by a computer out of thin air. I AM NOT THIN AIR!!! And I know, for a fact, that my work will show up in this program, without anyone ever knowing it was the work of 10,000 hours. Is that not evil? I know, no one ever got to sign the cathedral wall, either. Slaves to the machine.

Sorry that automation is taking your craft. You’re neither the first nor the last this will happen to. Orators, book illuminators, weavers, portrait artists, puppeteers, cartoon animators, etc. Even just in the artistic world, you’re in fine company. Generally speaking, it’s been good for society to free up labor for different pursuits while preserving production. The art can even be elevated as people incorporate the automata into their craft. It’s a shame the original skill is lost, but if that kept us from innovating, there would be no way to get common people multiple books or multiple pictures of themselves or CGI movies. It seems fair to demand society have a way to support people whose jobs have been automated, at least until they can find something new to do. But don’t get mad at the engine of progress and try to stop it—people will just cheer as it runs you over.

It’s not just a question of automation eliminating skilled work. Deep learning uses the work of artists in a significant sense. There is a patchwork of law and social norms in place to protect artists, e.g. the practice of explicitly naming major inspirations for a work. This has worked OK up to now, because all creative reworking of other art has either gone through relatively simple manipulation like copy/paste/caption/filter, or through the specific route of the human mind taking media in and then producing new media output which takes greater or smaller amounts of inspiration from media consumed.

AI which learns from large amounts of human-generated content is legitimately a new category here. It’s not obvious what should be legal vs illegal, or accepted vs frowned upon by the artistic community.

Is it more like applying a filter to someone else’s artwork and calling it your own? Or is it more like taking artistic inspiration from someone else’s work? What kinds of credit are due?

It seems to me that the only workable approach is to treat it like a human who took inspiration from many sources. In the vast majority of cases, the sources of the artwork are not obvious to any viewer (and the algorithm cannot tell you one). Moreover, any given created piece is really the combination of the millions of pieces of art that the AI has seen, just as a human takes inspiration from all of the pieces they have seen. So it seems most similar to the human category, not the simple manipulations (because it isn’t a simple manipulation of any given image or set of images).

I believe that you can get the AI to output an image that is similar to an existing one, but a human being can also create artwork that is similar to existing art. Ultimately, I think the only solution to rights protection must be handling it at that same individual level.

Another element that needs to be considered is that AI generated art will likely be entirely anonymous before long. Right now, anyone can go to http://notarealhuman.com/ and share the generated face to Reddit. Once that’s freely available with DALL-E 2 level art and better (and I don’t think that’s avoidable at this point), I don’t think any social norms can hinder it.

The other option to social norms is to outlaw it. I don’t think that a limited regulation would be possible, so the only possibility would be a complete ban. However, I don’t think all the relevant governments will have the willpower to do that. Even if the USA bans creating image generation AIs like this (and they’d need to do so in the next year or two to stop it from already being widely spread), people in China and Russia will surely develop them within a decade.

Determining that the provenance of an artwork is a human rather than an AI seems impossible. Even if we added tracing to all digital art tools, it would still be possible to create an image with an AI, print and scan it, and then claim that you made it yourself. In some cases, you actually could trace the AI-generated art, which still involves some effort but not nearly as much.

I agree that this is a plausible outcome, but I don’t think society should treat it as a settled question right now. It seems to me like the sort of technology question which a society should sit down and think about.

It is most similar to the human category, yes absolutely, but it enables different things than the human category. The consequences are dramatically different. So it’s not obvious a priori that it should be treated legally the same.

You argue against a complete ban by pointing out that not all relevant governments would cooperate. I don’t think all governments have to come to the same decision here. Copyright enforcement is already not equal across countries. I’m not saying I think there should be a complete ban, but again, I don’t think it’s totally obvious either, and I think artists calling for a ban should have a voice in the decision process.