(i.)

On April 6, OpenAI announced “DALLE-2” to great fanfare.

There are two different ways that OpenAI talks to the public about its models: as research, and as marketable products.

These two ways of speaking are utterly distinct, and seemingly immiscible. The OpenAI blog contains various posts written in each of the two voices, the “research” voice and the “product” voice, but each post is wholly one or the other.

Here’s OpenAI introducing a model (the original DALLE) in the research voice:

DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs. […]

Like GPT-3, DALL·E is a transformer language model. It receives both the text and the image as a single stream of data containing up to 1280 tokens, and is trained using maximum likelihood to generate all of the tokens, one after another. This training procedure allows DALL·E to not only generate an image from scratch, but also to regenerate any rectangular region of an existing image that extends to the bottom-right corner, in a way that is consistent with the text prompt.

And here they are, introducing a model (Codex) in the product voice:

OpenAI Codex is a descendant of GPT-3; its training data contains both natural language and billions of lines of source code from publicly available sources, including code in public GitHub repositories. […]

GPT-3’s main skill is generating natural language in response to a natural language prompt, meaning the only way it affects the world is through the mind of the reader. OpenAI Codex has much of the natural language understanding of GPT-3, but it produces working code—meaning you can issue commands in English to any piece of software with an API. OpenAI Codex empowers computers to better understand people’s intent, which can empower everyone to do more with computers.

Interestingly, when OpenAI is planning to announce a model as a product, they tend not to publicize the research leading up to it.

They don’t write research posts as they go along, and then write a product post once they’re done. They do publish the research, in PDFs on the Arxiv. The researchers who were directly involved might tweet a link to it. And of course these papers are immediately noticed by ML geeks with RSS feeds, and then by their entire social networks. It’s not like OpenAI is trying to hide these publications. It’s just not making a big deal out of them.

Remember when the GPT-3 paper came out? It didn’t get a splashy announcement. It didn’t get noted in the blog at all. It was just dumped unceremoniously onto the Arxiv.

And then, a few weeks later, they donned the product voice and announced the “OpenAI API,” GPT-3 as a service. Their post on the API was full of enthusiasm, but contained almost no technical details. It mentioned the term “GPT-3″ only in passing:

Today the API runs models with weights from the GPT-3 family with many speed and throughput improvements. Machine learning is moving very fast, and we’re constantly upgrading our technology so that our users stay up to date.

It didn’t even mention how many parameters the model had!

(My tone might sound negative. Just to be clear, I’m not trying to criticize OpenAI for the above. I’m just pointing out recurring patterns in their PR.)

(ii.)

DALLE-2 was announced in the product voice.

In fact, their blog post on it is the most over-the-top product-voice-y thing imaginable. It goes so far in the direction of “aiming for a non-technical audience” that it ends up seemingly addressed to small children. As in a picture book, it offers simple sentences in gigantic letters, one or two per page, each one nestled between huge comforting expanses of blank space. And sentences themselves are … well, stuff like this:

DALL·E 2 can create original, realistic images and art from a text description. It can combine concepts, attributes, and styles. […]

DALL·E 2 has learned the relationship between images and the text used to describe them. […]

Our hope is that DALL·E 2 will empower people to express themselves creatively.

It’s honestly one of the weirdest, most offputting, least appealing web pages I’ve seen in a long time. But then, I’m clearly not in its target demographic. I have no idea who the post is intended for, but whoever they are, I guess maybe this is the kind of thing they like? IDK.

Anyway, weird blog post aside, what is this thing?

The examples we’ve seen from it are pretty impressive. OpenAI clearly believes it’s good enough to be a viable product, the pictorial equivalent of GPT-3. So it must be doing something new and different, right – behind the scenes, in the research? Otherwise, how could it be better than anything else out there?

There’s also an associated research paper on the Arxiv.

I asked myself, “how is DALLE-2 so good?”, and then read the research paper, naively imagining it would contain a clear answer. But the paper left me confused. It did introduce a new idea, but it was kind of a weird idea, and I didn’t quite believe that it, of all things, could be the answer.

It turns out that DALLE-2 is a combination of 3 things:

GLIDE, a model from an earlier (Jan 2022) OpenAI paper.

The GLIDE paper was released around the same time as the (GLIDE-like) image models I made for @nostalgebraist-autoresponder, and I talked about it in my post on those.

An additional upsampling model that converts GLIDE’s 256x256 images into larger 1024x1024 images.

This one is pretty trivial to do (if compute-intensive), but the bigger images are probably responsible for some of DALLE-2′s “wow effect.”

“unCLIP”: a novel, outside-the-box, and (to me) counterintuitive architecture for conditioning an image model on text.

The only part that’s really new is unCLIP. Otherwise, it’s basically just GLIDE, which they published a few months ago. So, is unCLIP the magical part? Should I interpret DALLE-2 as tour de force demo of unCLIP’s power?

Well, maybe not.

Remember, when OpenAI is building up to a “product voice” release, they don’t make much noise about the research results leading up to it. Things started to make a lot more sense when I realized that “GLIDE” and “DALLE-2″ are not two different things. They’re clearly products of the same research project. Inside OpenAI, there’d be no reason to draw a distinction between them.

So maybe the magic was already there in GLIDE, the whole time.

Indeed, this seems very plausible. GLIDE did two new things: it added text conditioning, and it scaled up the model.

The text conditioning was presented as the main contribution of the paper, but the scaling part was not a small change: GLIDE’s base image model is 2.3B parameters, around 8 times bigger than its analogue in their mid-2021 diffusion paper, which was state-of-the-art at the time.

(Sidenote: the base model in the cascaded diffusion paper was pretty big too, though – around half the size of GLIDE, if I’m doing the math right.)

I’d guess that much of the reason DALLE-2 is so visually impressive is just that it’s bigger. This wasn’t initially obvious, because it’s “only” as big as GLIDE, and size wasn’t “supposed to be the point” of GLIDE. But the scale-up did happen, in any case.

(Sidenote: OA made the unusual choice to release a dramatically weakened version of GLIDE, rather than releasing the full model, or releasing nothing. After training a big model, they released a structurally similar but tiny one, smaller than my own bot’s base model by half. As if that wasn’t enough, they also applied a broad-brush policy of content moderation to its training data, e.g. ensuring it can’t generate porn by preventing it from ever seeing any picture of any human being.

So you can “download GLIDE,” sort of, and generate your own pictures with it … but they kind of suck. It’s almost like they went out of their way to make GLIDE feel boring, since we weren’t supposed to be excited until the big planned release day.)

But what fraction of the magic is due to scale, then, and what fraction is due to unCLIP? Well, what exactly is unCLIP?

(iii.)

unCLIP is a new way of communicating a textual description to an image-generating model.

I went into more detail on this in that post about the bot, but in short, there are multiple ways to do this. In some of them (like my bot’s), the model can actually “see the text” as a sequence of individual words/tokens. In other ones, you try to compress all the relevant meaning in the text down into a single fixed-length vector, and that’s what the model sees.

GLIDE actually did both of these at once. It had a part that processed the text, and it produced two representations of it: a single fixed-length vector summary, and another token-by-token sequence of vectors. These two things were fed into different parts of the image model.

All of that was learned from scratch during the process of training GLIDE. So, at the end of its training, GLIDE contained a model that was pretty good at reading text and distilling the relevant meaning into a single fixed-length vector (among other things).

But as it happens, there was already an existing OpenAI model that was extremely good at reading text, and distilling the relevant meaning into a single fixed-length vector. Namely, CLIP.

CLIP doesn’t generate any pictures or text on its own. Only vectors. It has two parts: one of them reads text and spits out a vector, and the other one looks at an image and spits out another vector.

During training, CLIP was encouraged to make these vectors close to one another (in cosine similarity) if the text “matched” the image, and far from one another if they didn’t “match.” CLIP is very good at this task.

And to be good at it, it has to be able to pack a lot of detailed meaning into its vectors. This isn’t easy! CLIP’s task is to invent a notation system that can express the essence of (1) any possible picture, and (2) any possible description of a picture, in only a brief list of maybe 512 or 1024 floating-point numbers. Somehow it does pretty well. A thousand numbers are enough to say a whole lot about a picture, apparently.

If CLIP’s vectors are so great, can’t we just use them to let our image models understand text? Yes, of course we can.

The most famous way is “CLIP guidance,” which actually still doesn’t let the image model itself glimpse anything about the text. Instead, it tells the text-vector side of CLIP to distill the text into a vector, and then repeatedly shows the gestating image to the image-vector side of CLIP, while it’s still being generated.

The image-vector side of CLIP makes a vector from what it sees, checks it against the text vector, and says “hmm, these would match more if the picture were a little more like this.” And you let these signals steer the generation process around.

If you want to let the image model actually know about the text, and learn to use it directly, rather than relying on CLIP’s backseat driving … well, CLIP gives you an easy way to do that, too.

You just take the text-vector side of CLIP (ignoring the other half), use it to turn text into vectors, and feed the vectors into your image generator somewhere. Then train the model with this setup in place.

This is “CLIP conditioning.” It’s the same thing as the fixed-vector part of GLIDE’s conditioning mechanism, except instead of training a model from scratch to make the vectors, we just use the already trained CLIP.

And like, that’s the natural way to use CLIP to do this, right? CLIP’s text-vector part can distill everything relevant about a caption into a vector, or it sure feels that way, anyway. If your image model can see these vectors, what more could it need?

But if you’re OpenAI, at this point, you don’t stop there. You ask a weird question.

What if we trained a model to turn CLIP’s “text vectors” into CLIP’s “image vectors”?

And then, instead of feeding in the text vectors directly, we added an intermediate step where we “turn them into” image vectors, and feed those in, instead?

That is, suppose you have some caption like “vibrant portrait painting of Salvador Dalí with a robotic half face.” OK, feed that caption through CLIP’s text-vector part. Now you have a “CLIP text vector.”

Your new model reads this vector, and tries to generate a “CLIP image vector”: the sort of vector that CLIP’s image-vector would create, if you showed it an actual vibrant portrait painting of Salvador Dalí with a robotic half face.

And then this vector is what your image generator gets to see.

At first, this struck me as almost nonsensical. “CLIP text vectors” and “CLIP image vectors” aren’t two different species. They’re vectors in the same space – you can take cosine similarities between them!

And anyway, the entire thing CLIP is trained to do is make its “text vectors” as close as possible to its “image vectors.” So, given a text vector, surely the “corresponding image vector” is just … the same vector, right? That’s the best possible match! But if a perfect score is that easy to get, then exactly what task is this new neural net supposed to be doing?

It made more sense after I thought about it some more, though I still don’t really get why it works.

CLIP’s task isn’t just to make the text and image vectors as close as possible. Getting a perfect score on that part is easy, too: just spit out the same vector for all texts and all images.

However, CLIP also has to make the vectors dissimilar when there isn’t a match. This forces it to actually use the vectors as a medium of communication, expressing features that can distinct images and texts from one another. Also, the amount of vector similarity has to capture its probabilistic credences about different conceivable matches.

For these reasons, CLIP generally won’t try for a perfect match between the two vectors. Among other things, that behavior would make it unable to express different levels of confidence.

The image vectors aren’t supposed to encode exactly one “ideal” caption, but a bunch of information that could affect the relevance of many different captions, to different extents. Likewise, the text vectors aren’t encoding a single “ideal” image, they’re encoding a bunch of information (…etc). Merely-partial matches are a feature, not a bug; they encode rational uncertainty about alternatives.

So we shouldn’t be thinking about identical vectors. We should be thinking, roughly, about sets of vectors that are roughly some specific level of closeness to another vector (whatever suits the model’s purposes). These look like spherical caps or segments.

These are the regions in which CLIP vectors can vary, without impacting the model’s decisions at all. Its indifference curves, if you will. To CLIP, all the vectors in such a region “look” identical. They all match their counterparts to an equal extent, and that’s all CLIP cares about.

In high dimensions, there’s a lot of room everywhere, so these regions are big. Given a caption, there are many different image vectors CLIP would consider equally consistent with it.

This means that something is missing from CLIP! There’s information it doesn’t know. The information is implicit in CLIP’s training data, but never made it from there into CLIP’s weights.

Because not all images (or captions) are equally plausible, just considered on their own. CLIP knows nothing about plausibility. It doesn’t know whether an image “looks real” or not, just on its own.

CLIP’s regions of indifference contain many image vectors corresponding to real-looking images, but they could well contain many other image vectors whose images look like garbage, or like nothing. It would have no idea anything was wrong with these, and would happily go along matching them (nonsensically) with captions. Why should it care? In training, it only saw real images.

So the meaning of a CLIP text vector is not, “any image that matches me deserves my caption.” The meaning is, “an image that matches me deserves my caption, provided it is a real image you’d find somewhere online.”

But when you’re trying to generate images, you don’t get to make that assumption.

I mean, you sort of do, though? That’s what your image generator is being trained to do, apart from the conditioning aspect: it’s learning to make plausible images.

So you’d hope that “CLIP text vector + general prior for plausible images” would be enough. The CLIP vector encodes a bunch of plausible matching images, and a bunch of other implausible ones, but your image model will naturally go for the plausible ones, right? I’m still confused about this, actually.

But anyway, OpenAI decided to deal with this by training a model to take in text vectors, and spit out image vectors. Obviously, there’s more than one possible right answer, so your model will be able to generate many different possibilities psuedo-randomly, like GPT.

But it will specifically try to pick only plausible image vectors. Its outputs will not uniformly fill up those spherical caps and sections. They’ll cluster up in patches of plausibility, like real images do.

OA calls this new model “unCLIP,” because it sort of inverts CLIP, in a sense. (They also call it a “prior,” which confused me until I realized it was expressing a prior probability over images, and that this was its value-add.)

And then your image generator sees these vectors, each of which refers to some specific picture (up to CLIP’s own ability to tell two pictures apart, which has its limits). Whereas, the text vectors actually refer to entire indifference sets of images, some of which happen to be garbage.

(iv.)

What does this get us, practically speaking? Apparently – for some reason – it makes the diversity-fidelity tradeoff disappear!

What’s the diversity-fidelity tradeoff? OK, a little more exposition.

These days, with this type of model, everyone uses a technique called “classifier-free guidance.” Without going into detail, this basically takes a model that’s already conditional, and makes it like … more conditional.

Your model is trying do two things: generate some plausible picture, and generate a picture that specifically matches the text (or category, or whatever) that you fed in. Classifier-free guidance tells it, “you think that’s a match? come on, make them match even more.” And you can do more or less of it. It’s a dial you can turn.

Usually, when you turn the dial way up, the model has a better chance of generating the thing you asked for (“fidelity”) – but at the cost of diversity. That is, with guidance turned way up, the images start to look less and less different, eventually converging on slight variants of a single picture. Which probably will be a version of what you requested. But if you want another one instead, you’re out of luck.

But when you have unCLIP, you can ask it to psuedo-randomly generate many different image vectors from the same text vector. These encode diverse images, and then you’re free to turn the guidance up as much as you want. (It’s just the guidance for that one vector, and the vectors are all different.) That solves the problem, doesn’t it?

Weirdly (?), that is not what OpenAI does! No, they have unCLIP generate a single fixed vector, and then turn guidance up, and generate many images from this same vector. And somehow … these images are still diverse??

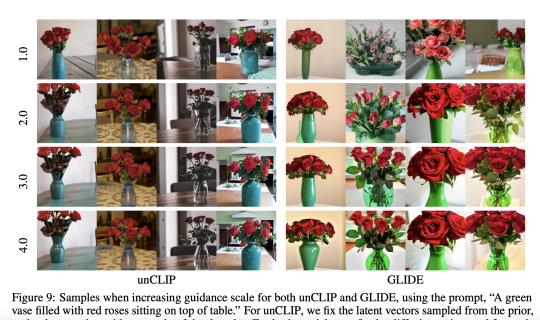

Here’s a picture from the paper showing this:

The GLIDE panels display the classic diversity-fidelity tradeoff, with the POV and background becoming nearly identical at high guidance. But even if you guide hard on a single unCLIP vector, you still get vases in different-looking rooms and stuff. (In fairness, it still doesn’t make them green, which GLIDE does.)

This rides on the fact that CLIP cannot always fully tell two pictures apart, I guess. There are many different image that have the same CLIP image vector, so a single image vector can be “interpreted” in a variety of ways.

And indeed, the paper uses unCLIP to visualize this more directly, with fun galleries of images that all look the same to CLIP. (Or close to the same; the generator might not do this perfectly.)

Exactly what information is written in these CLIP image vectors? What do they say, if we could read them? Well, there’s one vector that expresses … whatever all these pictures have in common:

If there’s a concept there, it’s an alien kind of concept. But you can see the family resemblance.

If I understand things correctly, the different apple/Apple-ish images above are precisely like the different vases from the earlier figure. They reflect the extent and nature of the diversity available given a single image vector.

But … I still don’t get why this is any better than conditioning on a single text vector. Like, if an image vector is ambiguous, surely a text vector is even more ambiguous? (It could map to many image vectors, each of which could map to many images.)

So why do models conditioned on a text vector (like GLIDE) collapse to a single image under conditioning, while a model conditioned on an image vector can still be diverse? Surely the text vectors aren’t encoding the contents of one specific image more precisely than the image vectors do? The reverse should be true.

But the different is real, and they measure it quantitatively, too.

(v.)

Whence DALLE-2′s magic?

TBH I think most of it is just in the scale. We’re not used to seeing AI images this crisply detailed, this pretty, with this few glitches. I’d expect a bigger model to be superior in those ways.

The unCLIP thing … well, I guess it lets them feel comfortable turning the guidance scale way up. And that raises the probability that you get what you asked for.

It’s not that the model now has a deeper understanding of what you asked for, necessarily. (GLIDE could do the “green vase” prompt perfectly, it just couldn’t do many variations of it.) What has changed is that you can now push the model really hard in the direction of using that understanding, while still getting varied results.

I think the single-vector style of conditioning is going to have some fundamental limits.

Along with the unCLIP vectors, OpenAI let their model see the other things GLIDE usually sees, from its own text-conditioning mechanism. But they talk about this as if it’s sort of vestigial, and unCLIP is clearly sufficient/better.

But there are important distinctions that are not written into CLIP vectors. Is this a green apple, or an Apple device, or both? The CLIP vector can’t tell you (see above).

In general, fixed-length vectors will struggle to express arbitrary layers of composition.

This is an issue for CLIP, which has trouble keeping track of which attributes go with which objects. Or how big anything is. Here’s another batch of “identical” pictures:

In NLP, this kind of thing is what made attention + RNN models work so much better than pure RNNs, even before the transformer came along and dispensed with the RNN part.

If your model’s inner representation of a text grows with the length of the text (GLIDE, etc), then when you feed it a longer string of composed clauses, it has correspondingly more “room” to record and process information about what’s happening in them.

While a fixed-length vector (RNNs, CLIP) can only fit a fixed quantity of information, no matter how long the input.

But having GLIDE’s sequential representation doesn’t actually solve the problem. The model that produced these picture was looking at a prompt, with both the unCLIP and GLIDE lenses, and still it produced the pictures we see.

But I would be very surprised if it turned out that fixed-length vectors were just as good, in this context, as variable-length sequences. NLP is just not like that.

CLIP is very good, but we shouldn’t resign ourselves to never besting it. There are things it struggles to represent which used to be hard for all NLP models, but no longer need to be hard.

It’s odd that the GLIDE mechanism was so ineffective. But OpenAI (and everyone else) should keep trying things like that. CLIP is too limiting.

How good is DALL-E-2? As a reversed CLIP, it has all of CLIP’s limitations.

Here is the paper’s Figure 19, Random samples from unCLIP for prompt “A close up of a handpalm with leaves growing from it.”

They are all very pretty, and hands and leaves are generally involved, but, well:

A co-creator of DALL-E 2 wrote this blog post: http://adityaramesh.com/posts/dalle2/dalle2.html .

From that blog post:

“One might ask why this prior model is necessary: since the CLIP text encoder is trained to match the output of the image encoder, why not use the output of the text encoder as the “gist” of the image? The answer is that an infinite number of images could be consistent with a given caption, so the outputs of the two encoders will not perfectly coincide. Hence, a separate prior model is needed to “translate” the text embedding into an image embedding that could plausibly match it.”

The idea that conditioning on unCLIP-produced image vectors instead of text vectors would improve diversity seems very bewildering. And I really have a hard time swallowing the explanation “maybe this happens because for a given CLIP image vector v, there’s a large equivalence class of images that all approximately encode to v.” After all, this explanation doesn’t actually have anything to do with conditioning on image vs. text vectors; in other words, whether we condition on image or text vectors, the final resulting image should still have a large equivalence class of images which encode to approximately the same image vector.

(Unless the relevant thing is that the image vector on which the image generator conditions has a large equivalence class of images that encode to the same vector? But it’s not clear to me how that should be relevant.)

Spitballing other possible explanations, is it possible that GLIDE’s lack of diversity comes from conditioning on a variable-length tokenization of the caption (which is potentially able to pack in a diversity-killing amount of information, relative to a fixed-length vector)? I’m not really happy with this explanation either. For instance, it seems like maybe the model that produced the roses on the left-hand side of the diversity-fidelity figure was also given a variable-length encoding of the caption? I’m having a hard time telling from what’s written in the paper,[1] but if so, that would sink this explanation.

The apparent ambiguity about whether the image generator in this model was conditioned on a variable-length tokenization of the caption (in addition to the image vector produced by unCLIP) is probably a reading comprehension issue on my part. I’d appreciate the help of anyone who can resolve this one way or the other.

I completely agree that the effects of using unCLIP are mysterious, in fact the opposite of what I’d predict them to be.

I wish the paper had said more about why they tried unCLIP in the first place, and what improvements they predicted they would get from it. It took me a long time just to figure out why the idea might be worth trying at all, and even now, I would never have predicted the effects it had in practice. If OpenAI predicted them, then they know something I don’t.

Yes, that model did get to see a variable-length encoding of the caption. As far as I can tell, the paper never tries a model that only has a CLIP vector available, with no sequential pathway.

Again, it’s very mysterious that (GLIDE’s pathway + unCLIP pathway) would increase diversity over GLIDE, since these models are given strictly more information to condition on!

(Low-confidence guess follows. The generator view the sequential representation in its attention layers, and in the new model, these layers are also given a version of the CLIP vector, as four “tokens,” each a different projection of the vector. [The same vector is also, separately, added to the model’s more global “embedding” stream.] In attention, there is competitive inhibition between looking at one position, and looking at another. So, it’s conceivable that the CLIP “tokens” are so information-rich that the attention fixates on them, ignoring the text-sequence tokens. If so, it would ignore some information that GLIDE does not ignore.)

It’s also noteworthy that they mention the (much more obvious) idea of conditioning solely on CLIP text vectors, citing Katherine Crawson’s work:

...but they never actually try this out in a head-to-head comparison. For all we know, a model conditioned on CLIP text vectors, trained with GLIDE’s scale and data, would do better than GLIDE and unCLIP. Certainly nothing in the paper rules out this possibility.

My take would be that the text vector encodes a distribution over images, so it contains a lot of image-ness and constrains the image generation conditioned on this vector a lot in what the image looks like.

The image vector encodes a distribution over captions so it contains a lot of caption-ness and strongly constrains the topic of the generated image but leaves the details of the image much less constrained.

Therefore, at least from my position of relative ignorance, it makes sense that the image vector is better for diversity.

Seems to me that what CLIP needs is a secondary training regime, where it has an image generated as a 2d render of a 3d scene that is generated from a simulator which can also generate a correct and an several incorrect captions. Like: red vase on a brown table (correct), blue vase on a brown table (incorrect), red vase on a green table (incorrect), red vase under a brown table (incorrect). Then do the CLIP training with the text set deliberately including the inappropriate text samples in addition to the usual random incorrect caption samples. I saw this idea in a paper a few years back, not sure how to find that paper now since I can’t seem to guess the right keywords to get Google to come up with it. But there’s a lot of work related to this idea out there, for example: https://developer.nvidia.com/blog/sim2sg-generating-sim-to-real-scene-graphs-for-transfer-learning/

Do you think that would fix CLIP’s not-precisely-the-right-object in not-precisely-the-right-positional-relationship problem? Maybe also, if the simulated data contained labeled text, then it would also fix the incoherent text problem?

Here’s some work by folks at deepmind looking at model’s relational understanding (verbs) vs subjects and objects. Kinda relevant to the type of misunderstanding CLIP tends to exhibit. https://www.deepmind.com/publications/probing-image-language-transformers-for-verb-understanding

I wonder what would happen if you would teach a system like Dalle 2 to write text criticizing images and explaining the flaws in what isn’t realistic in an image.

Presumably it depends on how you’d decide to teach it. DALLE 2 is not a general-purpose consequentialist, so one cannot just hook up an arbitrary goal and have it complete that.

This chart showing 10,000 CLIP text embeddings and 10,000 CLIP image embeddings might give insights about the utility of the “prior” neural networks: https://twitter.com/metasemantic/status/1356406256802607112.

Hmm… what moral are you drawing from that result?

Apparently, CLIP text vectors are very distinguishable from CLIP image vectors. I don’t think this should be surprising. Text vectors aren’t actually expressing images, after all, they’re expressing probability distributions over images.

They are more closely analogous to the outputs of a GPT model’s final layer than they are to individual tokens from its vocab. The output of GPT’s final layer doesn’t “look like” the embedding of a single token, nor should it. Often the model wants to spread its probability mass across a range of alternatives.

Except even that analogy isn’t quite right, because CLIP’s image vectors aren’t “images,” either—they’re probability distributions over captions. It’s not obvious that distributions-over-captions would be a better type of input for your image generator than distributions-over-images.

Also note that CLIP has a trainable parameter, the “logit scale,” which multiplies the cosine similarities before the softmax. So the overall scale of the cosine similarities is arbitrary (as is their maximum value). CLIP doesn’t “need” the similarities to span any particular range. A similarity value like 0.4 doesn’t mean anything on its about about how close CLIP thinks the match is. That’s determined by (similarity * logit scale).

(Disclaimer: not my forte.)

How many bits is this? 2KiB / 16Kib? Other?

Has there been any work in using this or something similar as the basis of a high-compression compression scheme? Compression and decompression speed would be horrendous, but still.

Hm. I wonder what would happen if you trained a version on internet images and its own image errors. I suspect it might not converge, but it’s still interesting to think about.

Assuming it did converge, take the final trained version and do the following:

Encode the image.

Take the difference between the image and output. Encode that.

Take the difference between the image and output + delta output. Encode that.

Repeat until you get to the desired bitrate or error rate.

Ah, I now realize that I was kind of misleading in the sentence you quoted. (Sorry about that.)

I made it sound like CLIP was doing image compression. And there are ML models that are trained, directly and literally to do image compression in a more familiar sense, trying to get the pixel values as close to the original as possible. These are the image autoencoders.

DALLE-2 doesn’t use an autoencoder, but many other popular image generators do, such as VQGAN and the original DALLE.

So for example, the original DALLE has an autoencoder component which can compress and decompress 256x256 images. Its compressed representation is a 32x32 array, where each cell takes a discrete value from 8192 possible values. This is 13 bits per cell (if you don’t do any further compression like RLE on it), so you end up with 13 KiB per image. And then DALLE “writes” in this code the same way GPT writes text.

CLIP, though, is not an autoencoder, because it never has to decompress its representation back into an image. (That’s what unCLIP does, but the CLIP encoding was not made “with the knowledge” that unCLIP would later come along and try to do this; CLIP was never encouraged to make is code to be especially suitable for this purpose.)

Instead, CLIP is trying to capture . . . “everything about an image that could be relevant to matching it with a caption.”

In some sense this is just image compression, because in principle the caption could mention literally any property of the image.But lossy compression always has to choose something to sacrifice, and CLIP’s priorities are very different from the compressors we’re more familiar with. They care about preserving pixel values, so they care a lot about details. CLIP cares about matching with short (<= ~70 word) captions, so it cares almost entirely about high-level semantic features.

There’s no blank space, though. In between the sentences are interactive examples. Or is this a joke I’m not getting?

If you’re on mobile, try it on desktop. Here’s what the sentences look like on my laptop, at 100% zoom.