Consciousness as a conflationary alliance term for intrinsically valued internal experiences

Tl;dr: In this post, I argue from many anecdotes that the concept of ‘consciousness’ is more conflated than people realize, in that there’s a lot of divergence in what people mean by “consciousness”, and people are unaware of the degree of divergence. This confusion allows the formation of broad alliances around the value of consciousness, even when people don’t agree on how to define it. What their definitions do have in common is that most people tend to use the word “consciousness” to refer to an experience they detect within themselves and value intrinsically. So, it seems that people are simply learning to use the word “consciousness” to refer to whatever internal experience(s) they value intrinsically, and thus they agree whenever someone says “consciousness is clearly morally valuable” or similar.

I also introduce the term “conflationary alliance” for alliances formed by conflating terminology.

Executive Summary

Part 1: Mostly during my PhD, I somewhat-methodically interviewed a couple dozen people to figure out what they meant by consciousness, and found that (a) there seems to be a surprising amount of diversity in what people mean by “consciousness”, and (b) they are often surprised to find out that other people mean different things when they say “consciousness”. This has implications for AI safety advocacy because AI will sometimes be feared and/or protected on the grounds that it is “conscious”, and it’s good to be able to navigate these debates wisely.

(Other heavily conflated terms in AI discourse might include “fairness”, “justice”, “alignment”, and “safety”, although I don’t want to debate any of those cases here. This post is going to focus on consciousness, and general ideas about the structure of alliances built around confused concepts in general.)

Part 2: When X is a conflated term like “consciousness”, large alliances can form around claims like “X is important” or “X should be protected”. Here, the size of the alliance is a function of how many concepts get conflated with X. Thus, the alliance grows because of the confusion of meanings, not in spite of it. I call this a conflationary alliance. Persistent conflationary alliances resist disambiguation of their core conflations, because doing so would break up the alliance into factions who value the more precisely defined terms. This resistance to deconflation can be deliberate, or merely a social habit or inertia. Either way, groups that resist deconflation tend to last longer, so conflationary alliance concepts have a way of sticking around once they take hold.

Part 1: What people mean by “consciousness”.

“Consciousness” is an interesting word, because many people have already started to notice that it’s a confused term, yet there is still widespread agreement that conscious beings have moral value. You’ll even find some people taking on strange positions like “I’m not conscious” or “I don’t know if I’m conscious” or “lookup tables are conscious”, as if rebelling against the implicit alliance forming around the “consciousness” concept. What’s going on here?

To investigate, over about 10 years between 2008 and 2018 I informally interviewed dozens of people who I noticed were interested in talking about consciousness, for 1-3 hours each. I did not publish these results, and never intended to, because I was mainly just investigating for my own interest. In retrospect, it would have been better, for me and for anyone reading this post, if I’d made a proper anthropological study of it. I’m sorry that didn’t happen. In any case, here is what I have to share:

“Methodology”

Extremely informal; feel free to skip or just come back to this part if you want to see my conclusions first.

Whom did I interview?

Mostly academics I met in grad school, in cognitive science, AI, ML, and mathematics. In an ad hoc manner at academic or other intellectually-themed gatherings, whenever people talked about consciousness, I gravitated toward the conversation and tried to get someone to spend a long conversation with me to unpack what they meant.

How did I interview them?

First, early in the discussion, I would ask “Are you conscious?” and they would almost always say “yes”. If they said “no” or “I don’t know”, we’d have a different conversation, which maybe happened like 3 times, essentially excluding those people from the “study”.

For everyone who said “yes I’m conscious”, I would then ask “How can you tell?”, and they’d invariably say “I can just tell/sense/perceive/know that I am conscious” or something similar.

I would then ask them to somehow pay closer attention to the consciousness thing or aspect of their mind that they could just “tell” was there, and ask them to “tell” me more about that consciousness thing they were finding within themselves. “What’s it like?” I would ask, or similar. If they felt incapable of introspection (maybe 20% felt that way?), I’d ask them to introspect on other things as a warm up, like how their body felt.

I did not say “this is an interview” or anything official-sounding, because honestly I didn’t feel very official about it.

When they defined consciousness using common near-synonyms like “awareness” or “experience”, I asked them to instead describe the structure of the consciousness process, in terms of moving parts and/or subprocesses they internally felt were connected to or associated with it, at a level that would in principle help me to programmatically check whether the processes inside another mind or object were conscious.

Often it took me 2-5 push-backs to get them focussing on the ‘structure’ of what they called consciousness and not just synonyms for it, but if they stuck with me for 10 minutes, they usually ended up staying in the conversation beyond that, for more like 1-3 hours in total, with them attending for a long time to whatever inside them they meant by “consciousness”. Sometimes the conversation ended more quickly, in like 20 minutes, if the notion of consciousness being conveyed was fairly simple to describe. Some people seemed to have multiple views on what consciousness is, in which cases I talked to them longer until they became fairly committed to one main idea.

Caveats

I’m mainly only confident in the conclusion that people are referring to a lot of different mental processes when they say “consciousness”, and are surprised to hear that others have very different meanings in mind.

I didn’t take many notes or engage anyone else to longitudinally observe these discussions, or do any other kind of adversarially-robust-scientist stuff. I do not remember the names of the people with each answer, and I’m pretty sure I have a bias where I’ve more easily remembered answers that were given by more than one person. Nonetheless, I think my memory here is good enough to be interesting and worth sharing, so here goes.

Results

Epistemic status: reporting from memory.

From the roughly thirty conversations I remember having, below are the answers I remember getting. Each answer is labeled with a number (n) roughly counting the number of people I remember having that answer. After most of the conversations I told people about the answers other people had given, and >80% of the time they seemed surprised:

(n≈3) Consciousness as introspection. Parts of my mind are able to look at other parts of my mind and think about them. That process is consciousness. Not all beings have this, but I do, and I consider it valuable.

Note: people with this answer tended to have shorter conversations with me than the others, because the idea was simpler to explain than most of the other answers.

(n≈3) Consciousness as purposefulness. There is a sense that one’s life has meaning, or purpose, and that the pursuit of that purpose is self-evidently valuable. Consciousness is the deep experience of that self-evident value, or what religions might call the experience of having a soul. This is consciousness. Probably not all beings have this, and maybe not even all people, but I definitely do, and I consider it valuable.

(n≈2) Consciousness as experiential coherence. I have a subjective sense that my experience at any moment is a coherent whole, where each part is related or connectable to every other part. This integration of experience into a coherent whole is consciousness.

(n≈2) Consciousness as holistic experience of complex emotions. Emotional affects like fear and sadness are complex phenomena. They combine and sustain cognitive processes — like the awareness that someone is threatening your safety, or that someone has died — as well as physical processes — like tense muscles. It’s possible to be holistically aware of both the physical and abstract aspects of an emotion all at once. This is consciousness. I don’t know if other beings or objects have this, but I definitely do, and I consider it valuable.

(n≈2) Consciousness as experience of distinctive affective states. Simple bodily affects like hunger and fatigue are these raw and self-evidently real “feelings” that you can “tell are definitely real”. The experience of these distinctively-and-self-evidently-real affective states is consciousness. I don’t know if other living things have this, but non-living objects probably don’t, and I definitely do, and I consider it valuable.

(n≈2) Consciousness as pleasure and pain. Some of my sensations are self-evidently “good” or “bad”, and there is little doubt about those conclusions. A bad experience like pain-from-exercise can lead to good outcomes later, but the experience itself still self-evidently has the “bad” quality. Consciousness is the experience of these self-evidently “good” and “bad” features of sensation. Simple objects like rocks don’t have this, and maybe not even all living beings, but I definitely do, and I consider it valuable.

(n≈2) Consciousness as perception of perception. Inside the mind is something called “perception” that translates raw sense data into awareness of objects and relations, e.g., “perceiving a chair from the pixels on my retina”. There’s also an internal perception-like process that looks at the process of perception while it’s happening. That thing is consciousness. Probably not all beings have this, but I do, and I consider it valuable.

(n≈2) Consciousness as awareness of awareness. A combination of perception and logical inference cause the mind to become intuitively aware of certain facts about one’s surroundings, including concrete things like the presence of a chair underneath you while you sit, but also abstract things like the fact that you will leave work and go home soon if you can’t figure out how to debug this particular bit of code. It’s also possible to direct one’s attention at the process of awareness itself, thereby becoming aware of awareness. This is consciousness. Probably not all beings have this, but I do, and I consider it valuable.

(n≈2) Consciousness as symbol grounding. Words, mental imagery, and other symbolic representations of the world around us have meanings, or “groundings”, in a reality outside of our minds. We can sense the fact that they have meaning by paying attention to the symbol and “feeling” its connection to the real world. This experience of symbols having a meaning is consciousness. Probably not all beings have this, but I definitely do, and I consider it valuable.

(n≈2) Consciousness as proprioception. At any moment, I have a sense of where my body is physically located in the world, including where my limbs are, and how I’m standing, which constitutes a strong sense of presence. That sense is what I call consciousness. I don’t know if other beings have this, but objects probably don’t, and I definitely do, and I consider it valuable.

(n≈2) Consciousness as awakeness. When I’m in dreamless sleep, I have no memory or sense of existing or anything like that. When I wake up, I do. Consciousness is the feeling of being awake. Probably not all beings or objects have this, but I do, and I consider it valuable.

(n≈2) Consciousness as alertness. When I want, I can voluntarily increase my degree of alertness or attunement to my environment. That sense of alertness is consciousness, and it’s something I have more of or less of depending on whether I focus on it. Probably not all beings or objects have this, but I do, and I consider it valuable.

(n≈2) Consciousness as detection of cognitive uniqueness. “It’s like something to be me”. Being me is different from being other people or animals like bats, and I can “tell” that just by introspecting and noticing a bunch of unique things about my mind, and that my mind is separate from other minds. I get a self-evident “this is me and I’m unique” feeling when I look inside my mind. That’s consciousness. Probably not all beings or objects have this, but I do, and I consider it valuable.

(n≈1 or 2) Consciousness is mind-location. I have this feeling that my mind exists and is located behind my eyes. That feeling of knowing where my mind is located is consciousness. Probably not all beings or objects have this, but I do, and I consider it valuable.

(n≈1) Consciousness as a sense of cognitive extent. I have this sense that tells me which parts of the world are part of my body versus not. In a different but analogous way, I have a sense of which information processes in the world are part of my mind versus external to my mind. That sense that “this mind-stuff is my mind-stuff” is consciousness. Probably a lot of living beings have this, but most objects probably don’t, and I consider it valuable.

(n≈1) Consciousness as memory of memory. I have a sense of my life happening as part of a larger narrative arc. Specifically, it feels like I can remember the process of storing my memories, which gives me a sense of “Yeah, this stuff all happened, and being the one to remember it is what makes me me”. Probably not all beings or objects have this, but I do, and I consider it valuable.

(n≈1) Consciousness as vestibular sense. At any moment, one normally has a sense of being oriented towards the world in a particular way, which goes away when you’re dizzy. We feel locked into a kind of physically embodied frame of reference, which tells us which way is up and down and so on. This is the main source of my confidence that my mind exists, and it’s my best explanation of what I call consciousness.

Note: Unlike the others, I don’t remember this person saying they considered consciousness to be valuable.

So what is “consciousness”?

It’s a confused word that people reliably use to refer to mental phenomena that they value intrinsically, with surprising variation in what specifically people have in mind when they say it. As a result, we observe

Widespread agreement that conscious beings are valuable, and

Widespread disagreement or struggle in defining or discovering “what consciousness is”.

What can be done about this?

For one thing, when people digress from a conversation to debate about “consciousness”, nowadays I usually try asking them to focus away from “consciousness” and instead talk about either “intrinsically valued cognition” or “formidable intelligence”. This usually helps the conversation move forward without having to pin down what precisely they meant by “consciousness”.

More generally, this variation in meanings intended by the word “consciousness” has implications for how we think about alliances that form around the value of consciousness as a core value.

Part 2: The conflationary alliance around human consciousness

Epistemic status: personal sense-making from the observations above

Most people use the word “consciousness” to refer to a cognitive process that they consider either

terminally valuable (as an aspect of moral patiency), or

instrumentally valuable (as a component of intelligence).

Thus, it’s easy to form alliances or agreement around claims like

conscious beings deserve protection, or

human lives are valuable because we’re conscious, or

humans are smarter than other animals because we’re conscious.

Such utterances reinforce the presumption that consciousness must be something valuable, but without pinning down specifically what is being referred to. This vagueness in turn makes the claims more broadly agreeable, and the alliance around the value of human consciousness strengthens.

I call this a conflationary alliance, because it’s an alliance supported by the conflation of concepts that would otherwise have been valued by a smaller alliance. Here, the size of the alliance is a function of how many concepts get conflated with the core value term.
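As a rough illustration of the claim that alliance size grows with the number of conflated concepts, here is a minimal toy sketch. It assumes each person endorses “X is valuable” whenever at least one meaning they personally value is covered by the word X. The function name `alliance_size`, the meaning labels, and the people `p1` through `p6` are all hypothetical and are not data from the interviews.

```python
from typing import Dict, Set


def alliance_size(supporters_by_meaning: Dict[str, Set[str]], conflated: Set[str]) -> int:
    """Count people who would endorse "X is valuable" when every meaning
    in `conflated` is covered by the single word X (toy assumption)."""
    members: Set[str] = set()
    for meaning in conflated:
        # Anyone who values this meaning joins the alliance around X.
        members |= supporters_by_meaning.get(meaning, set())
    return len(members)


# Hypothetical toy data: who intrinsically values which internal experience.
supporters_by_meaning = {
    "introspection": {"p1", "p2", "p3"},
    "pleasure_and_pain": {"p4", "p5"},
    "awakeness": {"p2", "p6"},
}

# X means only one precise thing: a small alliance.
narrow = alliance_size(supporters_by_meaning, {"introspection"})

# X is conflated across all three meanings: a larger alliance.
broad = alliance_size(supporters_by_meaning, set(supporters_by_meaning))

print(narrow, broad)  # 3 6
```

In this toy model, disambiguating X back down to any single meaning shrinks the alliance, which is one way to picture why a persistent conflationary alliance would resist deconflation.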

A persistent conflationary alliance must, tautologically, resist the disambiguation of its core conflations. The resistance can arise by intentional design of certain Overton windows or slogans, or arise simply by natural selection acting on the ability of memes to form alliances that reinforce them.

Correspondingly, there are lots of social patterns that somehow end up protecting the conflated status of “consciousness” as a justification for the moral value of human beings. Some examples:

Alice: [eats a porkchop]

Bob: You shouldn’t eat pigs; they’re conscious beings capable of suffering, you know!

Alice: There’s no scientific consensus on what consciousness is. It’s mysterious, and I believe it’s unique to humans. [continues eating porkchop]

Charlie: I think AI might become conscious. Isn’t that scary?

Dana: Don’t worry; there is no consensus on what consciousness is, because it’s a mystery. It’s hubris to think scientists are able to build conscious machines!

Charlie: [feels relieved] Hmm, yeah, good point.

Eric: AI systems are getting really smart, and I think they might be conscious. Shouldn’t we feel bad about essentially making them our slaves?

Faye: Consciousness is special to humans and other living organisms, not machines. How it works is still a mystery to scientists, and definitely not something we can program into a computer.

Eric: But these days AI systems are trained, not programmed, and how they work is mysterious to us, just like consciousness. So, couldn’t we end up making them conscious without even knowing it?

Faye: Perhaps, but the fact that we don’t know means we shouldn’t treat them as valuable in the way humans are, because we know humans are conscious. At least I am; aren’t you?

Eric: Yes of course I’m conscious! [feels insecure about whether others will believe he’s conscious] When you put it that way, I guess we’re more confident in each other’s consciousness than we can be about the consciousness of something different from us.

What should be done about these patterns? I’m not sure yet; a topic for another day!

Conclusion

In Part 1, I described a bunch of slightly-methodical conversations I’ve had, where I learned that people are referring to many different kinds of processes inside themselves when they say “consciousness”, and that they’re surprised by the diversity of other people’s answers. I’ve also noticed people used “consciousness” to refer to things they value, either terminally or instrumentally. In Part 2, I note how this makes it easier to form alliances around the idea that consciousness is valuable. There seems to be a kind of social resistance to clarification about the meaning of “consciousness”, especially in situations where someone is defending or avoiding the questioning of human moral superiority or priority. I speculate that these conversational patterns further perpetuate the notion that “consciousness” refers to something inherently mysterious. In such cases, I often find it helpful to ask people to focus away from “consciousness” and instead talk about either “intrinsically valued cognition” or “formidable intelligence”, whichever better suits the discussion at hand.

In future posts I plan to discuss the implications of conflationary terms and alliances for the future of AI and AI policy, but that work will necessarily be more speculative and less descriptive than this one.

Thanks for reading!

- Yes, It’s Subjective, But Why All The Crabs? by (Jul 28, 2023, 7:35 PM; 248 points)

- Voting Results for the 2023 Review by (Feb 6, 2025, 8:00 AM; 86 points)

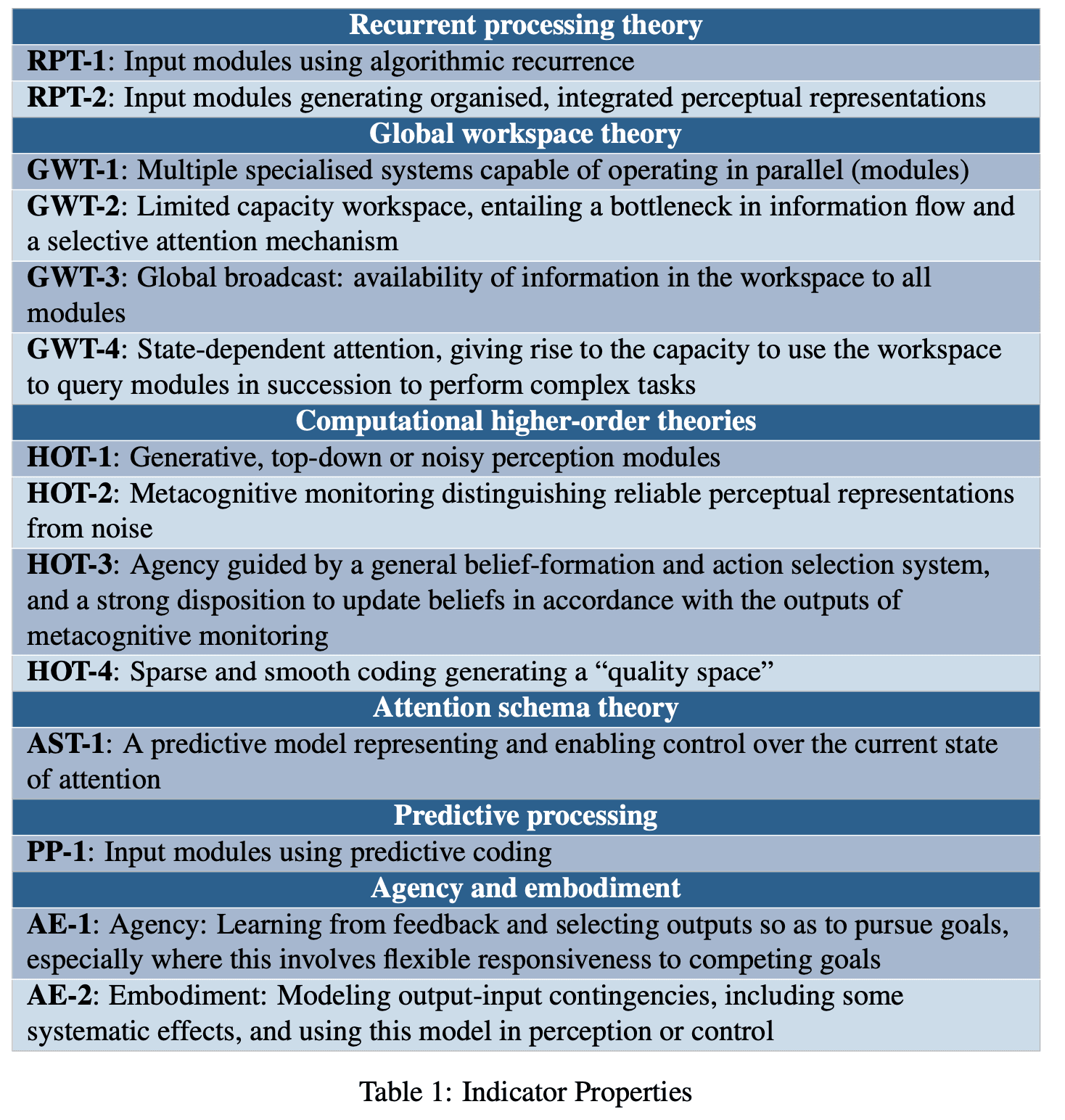

- LLM chatbots have ~half of the kinds of “consciousness” that humans believe in. Humans should avoid going crazy about that. by (Nov 22, 2024, 3:26 AM; 76 points)

- AE Studio @ SXSW: We need more AI consciousness research (and further resources) by (Mar 26, 2024, 8:59 PM; 67 points)

- My intellectual journey to (dis)solve the hard problem of consciousness by (Apr 6, 2024, 9:32 AM; 49 points)

- 's comment on LLM chatbots have ~half of the kinds of “consciousness” that humans believe in. Humans should avoid going crazy about that. by (Nov 22, 2024, 2:19 PM; 37 points)

- The intelligence-sentience orthogonality thesis by (Jul 13, 2023, 6:55 AM; 19 points)

- AE Studio @ SXSW: We need more AI consciousness research (and further resources) by (EA Forum; Mar 26, 2024, 9:15 PM; 15 points)

- LLM chatbots have ~half of the kinds of “consciousness” that humans believe in. Humans should avoid going crazy about that. by (EA Forum; Nov 22, 2024, 3:26 AM; 11 points)

- “AI Wellbeing” and the Ongoing Debate on Phenomenal Consciousness by (Aug 17, 2023, 3:47 PM; 10 points)

- 's comment on Why it’s so hard to talk about Consciousness by (Jan 19, 2025, 8:03 PM; 10 points)

- Lighthaven Sequences Reading Group #19 (Tuesday 01/28) by (Jan 26, 2025, 12:02 AM; 7 points)

- 's comment on Is This Thing Sentient, Y/N? by (Jan 21, 2025, 5:23 PM; 4 points)

- 's comment on Only mammals and birds are sentient, according to neuroscientist Nick Humphrey’s theory of consciousness, recently explained in “Sentience: The invention of consciousness” by (EA Forum; Dec 29, 2023, 11:35 AM; 3 points)

- 's comment on Safety isn’t safety without a social model (or: dispelling the myth of per se technical safety) by (Jun 14, 2024, 5:18 PM; 2 points)

- 's comment on Seth Explains Consciousness by (Jan 20, 2025, 2:19 PM; 2 points)

- 's comment on I would have shit in that alley, too by (Jun 21, 2024, 4:36 AM; 1 point)

I often find myself revisiting this post—it has profoundly shaped my philosophical understanding of numerous concepts. I think the notion of conflationary alliances introduced here is crucial for identifying and disentangling/dissolving many ambiguous terms and resolving philosophical confusion. I think this applies not only to consciousness but also to situational awareness, pain, interpretability, safety, alignment, and intelligence, to name a few.

I referenced this blog post in my own post, My Intellectual Journey to Dis-solve the Hard Problem of Consciousness, during a period when I was plateauing and making no progress in better understanding consciousness. I now believe that much of my confusion has been resolved.

I think the concept of conflationary alliances is almost indispensable for effective conceptual work in AI safety research. For example, it helps clarify distinctions, such as the difference between “consciousness” and “situational awareness.” This will become increasingly important as AI systems grow more capable and public discourse becomes more polarized around their morality and conscious status.

Highly recommended for anyone seeking clarity in their thinking!

On the one hand, I agree with Paradiddle that the methodology used doesn’t let us draw the conclusion stated at the end of this post, and thus this is an anti-example of a study I want to see on LW.

On the other hand, I do think the concept here is valuable, and I do have a high prior probability that something like a conflationary alliance is going on with consciousness, because it’s often an input into questions of moral worth, and thus there is an incentive to both fight over the word, and make the word’s use as wide or as narrow as possible.

I have to give this a −1 for its misleading methodology (and not realizing this), for local validity reasons.