Two easy things that maybe Just Work to improve AI discourse

So, it seems AI discourse on X / Twitter is getting polarised. This is bad. Especially bad is how some people deliberately weaponise discourse for political ends.

At the same time, I observe: AI Twitter is still a small space. There are often important posts that have only ~100 likes, ~10-100 comments, and maybe ~10-30 likes on top comments. Moreover, it seems to me that the few sane comments, when they do appear, do get upvoted.

This is… crazy! Consider this thread:

A piece of legislation is being discussed, with major ramifications for regulation of frontier models, and… the quality of discourse hinges on whether 5-10 random folks show up and say some sensible stuff on Twitter!?

It took me a while to see these things. I think I had a cached view of “political discourse is hopeless, the masses of trolls are too big for anything to matter, unless you’ve got some specialised lever or run one of these platforms”.

I now think I was wrong.

Just like I was wrong for many years about the feasibility of getting public and regulatory support for taking AI risk seriously.

This begets the following hypothesis: AI discourse might currently be small enough that we could basically just brute force raise the sanity waterline. No galaxy-brained stuff. Just a flood of folks making… reasonable arguments.

It’s the dumbest possible plan: let’s improve AI discourse by going to places with bad discourse and making good arguments.

I recognise this is a pretty strange view, and one that runs counter to a lot of priors I’ve built up hanging around LessWrong for the last couple of years. If it works, it’s because of a surprising, contingent state of affairs. In a few months or years the numbers might shake out differently. But for the time being, the arbitrage is real.

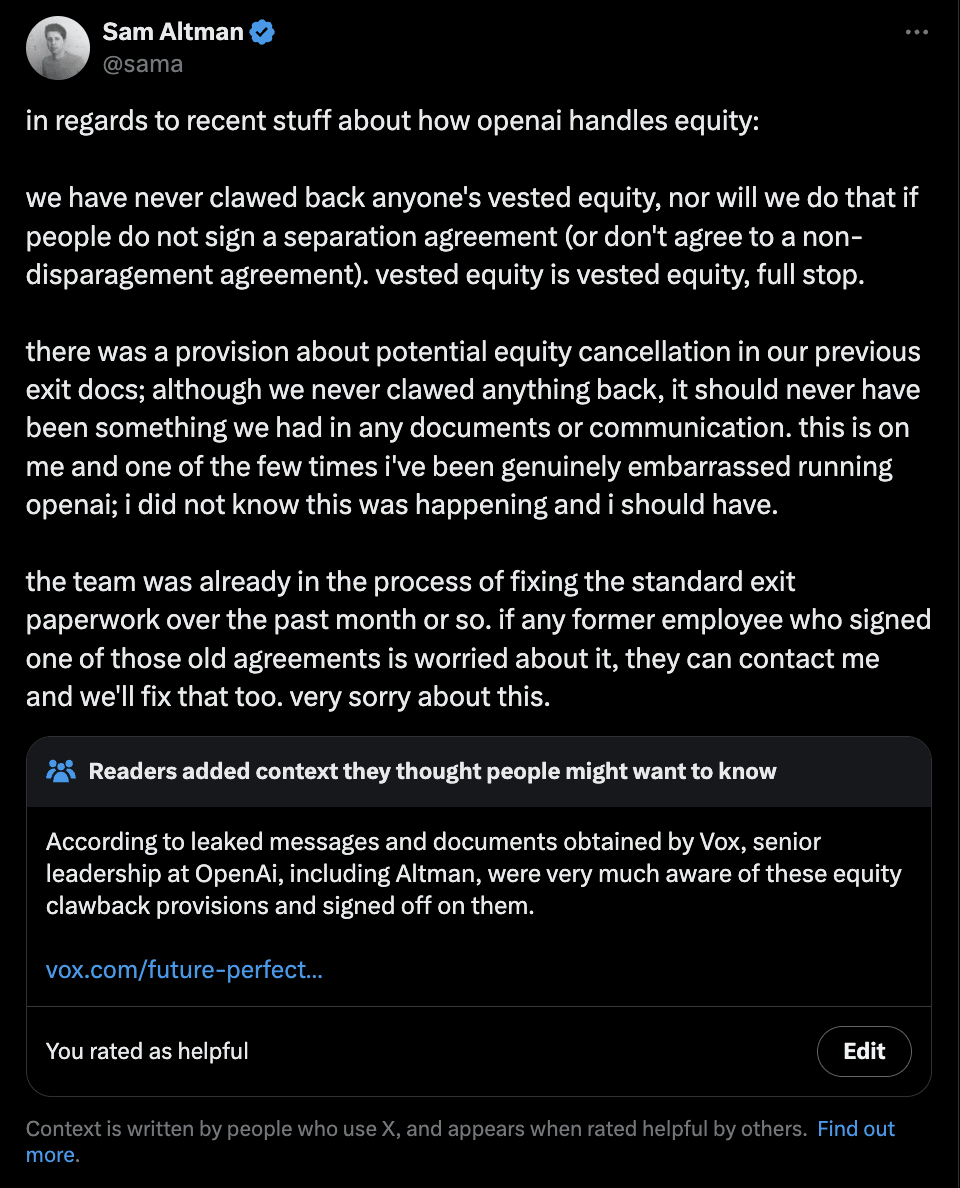

Furthermore, there’s of course already a built-in feature, with beautiful mechanism design and strong buy-in from X leadership, for increasing the sanity waterline: Community Notes. It’s a feature that allows users to add “notes” to tweets providing context, and then only shows those notes ~if they get upvoted by people who usually disagree.
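To make the “upvoted by people who usually disagree” idea concrete, here is a toy sketch. It is not X’s actual algorithm (the published Community Notes ranking uses matrix factorization over the whole rating matrix rather than explicit groups); it simply assumes raters have already been sorted into hypothetical viewpoint clusters, and shows a note only if each cluster, taken separately, finds it helpful. The names `Rating` and `should_show` and the `threshold` / `min_per_cluster` values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Rating:
    rater_cluster: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool


def should_show(ratings: list[Rating], threshold: float = 0.7,
                min_per_cluster: int = 3) -> bool:
    """Toy bridging rule: show a note only if every viewpoint cluster,
    taken separately, rates it helpful at or above `threshold`."""
    by_cluster: dict[str, list[bool]] = {}
    for r in ratings:
        by_cluster.setdefault(r.rater_cluster, []).append(r.helpful)
    # One-sided support is never enough: require at least two clusters.
    if len(by_cluster) < 2:
        return False
    for votes in by_cluster.values():
        # Each cluster needs enough raters, and enough of them must agree.
        if len(votes) < min_per_cluster:
            return False
        if sum(votes) / len(votes) < threshold:
            return False
    return True
```

With these defaults, ten helpful ratings from a single cluster are not enough to show a note, while a note rated helpful by most raters in both clusters is shown.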

Yet… outside of massive news like the OpenAI NDA scandal, Community Notes is barely being used for AI discourse. I’d guess this is for no more interesting a reason than that few people use Community Notes overall, and only a small fraction of those engage in AI discourse. Again, plausibly, the arbitrage is real.

If you think this sounds compelling, here are two easy ways that might just work to improve AI discourse:

Make an account on X. When you see invalid or bad faith arguments on AI: reply with valid arguments. Upvote other such replies.

Join Community Notes at this link. Start writing and rating notes. (You’ll need to rate some notes before you’re allowed to write your own.)

And, above all: it doesn’t matter what conclusion you argue for, as long as you make valid arguments. Pursue asymmetric strategies, the sword that only cuts if your intention is true.

I strongly recommend against engaging with Twitter at all. The LessWrong community has been significantly underestimating the extent to which it damages the quality of its users’ thinking. Twitter pulls its users into a pattern of seeking social approval in a fast-paced loop. Tweets shape their regular readers’ thoughts into becoming more tweet-like: short, vague, lacking in context, status-driven, reactive, and conflict-theoretic. AI alignment researchers, more than perhaps anyone else right now, need to preserve their ability to engage in high-quality thinking. For them especially, spending time on Twitter isn’t worth the risk of damaging their ability to think clearly.

I think y’all will be okay if you make sure your twitter account isn’t your primary social existence, and you don’t have to play twitter the usual way. Write longform stuff. Retweet old stuff. Be reasonable and conciliatory while your opponents are being unreasonable and nasty; that’s how you actually win.

Remember that the people who’ve fallen in deep and contracted twitter narcissism are actually insane. It’s not an adaptive behavior; they’re there to lose. Every day they’re embarrassing themselves and alienating people, and all you have to do is hang around, occasionally point it out, and be the reasonable alternative.

Yeah, my hypothesis is something like this might work.

(Though I can totally see how it wouldn’t, and I wouldn’t have thought it a few years ago, so my intuition might just be mistaken)

Interesting arguments going on on the e/acc Twitter side of this debate: https://x.com/khoomeik/status/1799966607583899734

“arguments” is perhaps a bit generous of a term...

I don’t anticipate being personally affected by this much if I start using Twitter.

I think this is personally right for me. I do not twit, and I have fully blocked the platform for (I think) more than half of the last two years.

I sometimes go there and seek out the thoughts of people I respect. When I do, I commonly find them arguing against positions they’re uninterested in and with people who seem to pick their positions for political reasons (rather than their assessment of what’s true), and who bring low-quality arguments and discussion norms. It’s not where I want to spend my time thinking.

I think some people go there to just have fun and make friends, which is quite a different thing.

Can you expand the list, go into further detail, or list a source that goes into further detail?

I don’t think the numbers really check out on your claim. Only a small proportion of people reading this are alignment researchers. And of the remaining folks, many are probably on Twitter anyway, or otherwise have some similarly slack part of their daily schedule filled with random, low-opportunity-cost stuff.

Historically there sadly haven’t been scalable ways for the average LW lurker to contribute to safety progress; now there might be a small one.

The time expenditure isn’t the crux for me; the effects of Twitter on its users’ habits of thinking are the crux. Those effects also apply to people who aren’t alignment researchers. For those people, trading away epistemic rationality for Twitter influence is still very unlikely to be worth it.

While I do not use the platform myself, what do you think of people doing their thinking and writing offline, and then just using it as a method of transmission? I think this is made even easier by the express strategic decision to create an account for AI-specific engagement.

For example, when I look at tweets at all it is largely as links to completed threads or off-twitter blogs/articles/papers.

That’s not as bad, since it doesn’t have the rapid back-and-forth reward loop of most Twitter use.

agreed, and this is why I don’t use it; however, it’s probably not something policy people must avoid at nearly all costs. For them, all I know how to suggest is “use discretion”.

This also seems like a reason to think that intervening on AI Twitter is not very important. Not an overwhelming reason: there could still be founder effects in the discourse, or particularly influential people could be engaged in the twitter discourse.

I think that’s the case. Mostly the latter, some of the former.

tl;dr: the number of people who could write sensible arguments is small, they would probably still be vastly outnumbered, and it makes more sense to focus on actually trying to talk to people who might have an impact

EDIT: my arguments mostly apply to “become a twitter micro-blogger” strat, but not to the “reply guy” strat that jacob seems to be arguing for

as someone who has historically written multiple tweets that were seen by the majority of “AI Twitter”, I’m not that optimistic about the “let’s just write sensible arguments on twitter” strategy

for context, here’s my current mental model of the different “twitter spheres” surrounding AI twitter:

- ML Research twitter: academics, or OAI / GDM / Anthropic announcing a paper and everyone talks about it

- (SF) Tech Twitter: tweets about startups, VCs, YC, etc.

- EA folks: a lot of ingroup EA chat, highly connected graph, veneration of QALY the lightbulb and mealreplacer

- tpot crew: This Part Of Twitter, used to be post-rats i reckon, now growing bigger with vibecamp events, and also they have this policy of always liking before replying which amplifies their reach

- Pause AI crew: folks with pause (or stop) emojis, who will often comment on bad behavior from labs building AGI, quoting (eg with clips) what some particular person says, or comment on eg sam altman’s tweets

- AI Safety discourse: some people who do safety research, will mostly happen in response to a top AI lab announcing some safety research, or to comment on some otherwise big release. probably a subset of ML research twitter at this point, intersects with EA folks a lot

- AI policy / governance tweets: comment on current regulations being passed (like the EU AI Act, SB 1047), though often replying to / quote-tweeting Tech Twitter

- the e/accs: somehow connected to tech twitter, but mostly anonymous accounts with more extreme views. dunk a lot on EAs & safety / governance people

I’ve been watching these groups evolve since 2017, and maybe the biggest recent change has been how much tpot (started circa 2020 i reckon) and e/acc (who have grown a lot with twitter spaces / mainstream coverage) accounts have grown in the past 2 years. i’d say that in comparison the ea / policy / pause folks have also started to post more, but their accounts are quite small compared to the rest, and it all still stays contained in the same EA-adjacent bubble

I do agree to some extent with Nate Showell’s comment saying that the reward mechanisms don’t incentivize high-quality thinking. I think that if you naturally enjoy writing longform stuff in order to crystallize thinking, then posting with the intent of getting feedback on your thinking as some form of micro-blogging (which you would be doing anyway) could be good, and in that sense if everyone starts doing that this could shift the quality of discourse by a small bit.

To give an example of the reward-mechanism stuff, my last two tweets were 1) a diagram I made trying to formalize the main cruxes that would make you want the US to start a Manhattan Project 2) a green text format hyperbolic biography of leopold (who wrote the situational awareness series on ai and was recently on dwarkesh)

both took me about the same amount of time to make (30 minutes to 1h), but the diagram got 20k impressions, whereas the green text got 2M (so 100x more), and I think this is because a) many more tech people are interested in current discourse stuff than in infographics b) tech people don’t agree with the regulation stuff c) in general, entertainment is more widely shared than informative stuff

so here are some consequences of what I expect to happen if lesswrong folks start to post more on x:

- 1. they’re initially not going to reach a lot of people

- 2. it’s going to be some ingroup chat with other EA folks / safety / pause / governance folks

- 3. they’re still going to be outnumbered by a large amount of people who are explicitly anti-EA/rationalists

- 4. they’re going to waste time tweeting / checking notifications

- 5. the reward structure is such that if you have never posted on X before, or don’t have a lot of people who know you, then long-form tweets will perform worse than dunks / talking about current events / entertainment

- 6. they’ll reach an asymptote given that the lesswrong crowd is still much smaller than the overall tech twitter crowd

to be clear, I agree that the current discourse quality is pretty low and I’d love to see it improve; my main claims are that:

- i. the time it would take to actually shift discourse meaningfully is much longer than the number of years we actually have

- ii. current incentives & the current partition of twitter communities make it very adversarial

- iii. other communities are aligned with twitter incentives (eg. e/accs dunking, tpots liking everything) which implies that even if lesswrong people tried to shape discourse the twitter algorithm would not prioritize their (genuine, truth-seeking) tweets

- iv. twitter’s reward system won’t promote rational thinking, and will lead to spending more (unproductive) time on twitter overall.

all of the above points make it unlikely that (on average) the contribution of lw people to AI discourse will be worth all of the tradeoffs that come with posting more on twitter

EDIT: this applies in case we’re talking about top-level posts; I could see why posting replies debunking tweets, or writing community notes, could work

(Also the arguments of this comment do not apply to Community Notes.)

Disagree. The quality of arguments that need debunking is often way below the average LW:er’s intellectual pay grade. And there’s actually quite a lot of us.

ok I meant something like “the number of people who could reach a lot of people (eg. roon’s level, or even 10x fewer people than that) from tweeting only sensible arguments is small”

but I guess that doesn’t invalidate what you’re suggesting. if I understand correctly, you’d want LWers to just create a twitter account and debunk arguments by posting comments & occasionally doing community notes

that’s a reasonable strategy, though the medium effort version would still require like 100 people spending sometimes 30 minutes writing good comments (let’s say 10 minutes a day on average). I agree that this could make a difference.

I guess the sheer volume of bad takes or people who like / retweet bad takes is such that even in the positive case that you get like 100 people who commit to debunking arguments, this would maybe add 10 comments to the most viral tweets (that get 100 comments, so 10%), and maybe 1-2 comments for the less popular tweets (but there’s many more of them)

I think it’s worth trying, and maybe there are some snowball / long-term effects to take into account. it’s worth highlighting the cost of doing so as well (16h of productivity a day for 100 people doing it for 10m a day, at least, given there are extra costs to just opening the app). it’s also worth highlighting that most people who would click on bad takes would already be polarized, and i’m not sure they would change their minds in response to good arguments (instead they would probably just reply negatively, because the true rejection is more something about political orientations, priors about AI risk, or things like that)
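The back-of-the-envelope numbers in this comment can be checked directly. A minimal sketch using only the figures given above (100 people, 10 minutes a day each, about 10 added replies on a ~100-comment viral tweet); like the comment, it ignores the extra cost of opening the app, and the function names are illustrative.

```python
def daily_hours(people: int, minutes_each: float) -> float:
    """Total person-hours per day spent by a group of volunteers."""
    return people * minutes_each / 60


def reply_share(added: int, total: int) -> float:
    """Fraction of a thread's comments contributed by the added replies,
    treating `total` as the thread's overall comment count."""
    return added / total


print(f"{daily_hours(100, 10):.1f} h/day")  # cost of 100 people x 10 min
print(f"{reply_share(10, 100):.0%}")        # share of a 100-comment thread
```

`daily_hours(100, 10)` comes to about 16.7 hours, consistent with the “16h” estimate, and `reply_share(10, 100)` is 0.1, the 10% figure from the previous paragraph.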

but again, worth trying, especially the low-effort versions

I love this. Strong upvoted. I wonder if there’s a “silent majority” of folks who would tend to post (and upvote) reasonable things, but don’t bother because “everyone knows there’s no point in trying to have a civil discussion on Twitter”.

Might there be a bit of a collective action problem here? Like, we need a critical mass of reasonable people participating in the discussion so that reasonable participation gets engagement and thus the reasonable people are motivated to continue? I wonder what might be done about that.

Yes, I’ve felt some silent majority patterns.

Collective action problem idea: we could run an experiment -- 30 ppl opt in to writing 10 comments and liking 10 comments they think raise the sanity waterline, conditional on a total of 29 other people opting in too. (A “kickstarter”.) Then we see if it seemed like it made a difference.

I’d join. If anyone else is also down for that, feel free to use this comment as a Schelling point and reply with your interest below.

(I’m not sure the right number of folks, but if we like the result we could just do another round.)
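The “kickstarter” proposed here is an assurance contract: a pledge only becomes binding once enough others have pledged too, which is one standard way around the collective action problem raised above. A minimal sketch; the class and method names are hypothetical, and only the threshold of 30 comes from the comment.

```python
class Kickstarter:
    """Toy assurance contract: nobody is committed until `threshold` people pledge."""

    def __init__(self, threshold: int = 30) -> None:
        self.threshold = threshold
        self.pledgers: set[str] = set()

    def pledge(self, name: str) -> None:
        # Stored in a set, so a repeated pledge from the same person counts once.
        self.pledgers.add(name)

    def is_active(self) -> bool:
        # Commitments bind only once the threshold is reached.
        return len(self.pledgers) >= self.threshold

    def committed(self) -> set[str]:
        # Before activation, nobody is on the hook.
        return set(self.pledgers) if self.is_active() else set()


k = Kickstarter(30)
for i in range(29):
    k.pledge(f"user{i}")
print(k.is_active())  # 29 pledges: not yet binding
k.pledge("user29")
print(k.is_active())  # 30th pledge activates everyone's commitment
```

The design choice is that an early pledge costs nothing if the round fails, which is what lets people opt in before they know whether a critical mass will show up.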

I’d participate.

I’d participate.

Interested

Keep in mind that long (>280 character) replies don’t notify the people in the thread, so you have to manually tag them if you leave a high quality reply.

(also, lol at this being voted into negative! Giving karma as encouragement seems like a great thing. It’s the whole point of it. It’s even a venerable LW tradition, and was how people incentivised participation in the annual community surveys in the olden days)

The LW survey is something that has broad buy-in. In general, promising to upvote others distorts the upvote signal (since, like, people could very easily manufacture lots of karma by just upvoting each other), so there is a prior against this kind of stuff that sometimes can be overcome.

Strong agree. I think twitter and reposting stuff on other platforms is still neglected, and this is important to increase safety culture

I think Twitter systematically underpromotes tweets with links external to the Twitter platform, so reposting isn’t a viable strategy.

want to also stress that even though I presented a lot of counter-arguments in my other comment, I basically agree with Charbel-Raphaël that twitter as a way to cross-post is neglected and not costly

and i also agree that there’s an 80/20 way of promoting safety that could be useful

Cross posting sure seems cheap. Though I think replying and engaging with existing discourse is easier than building a following of one’s top level posts from scratch.

im already on twitter but i saw this reposted on twitter and came to say i would be glad to see you there!

my one big recommendation is to focus on talking to people who seem reasonable and boosting ideas you like (vs trying to argue or dunk on ideas that are bad). twitter algo rewards any engagement, so if you don’t like what someone is saying, the best thing is to move on and talk to someone neutral or positive instead

Assuming that the goal is to ‘raise the sanity waterline’, I would recommend against engaging on most social media platforms, except for promoting content that resides outside the media circle (e.g. a book, blogpost, paper, etc...).
