I own lots of SPY/SPX calls and agree with this perspective. I think QQQ calls also look pretty good (and have done great over the last year).

Jonas V

AI 2027: What Superintelligence Looks Like

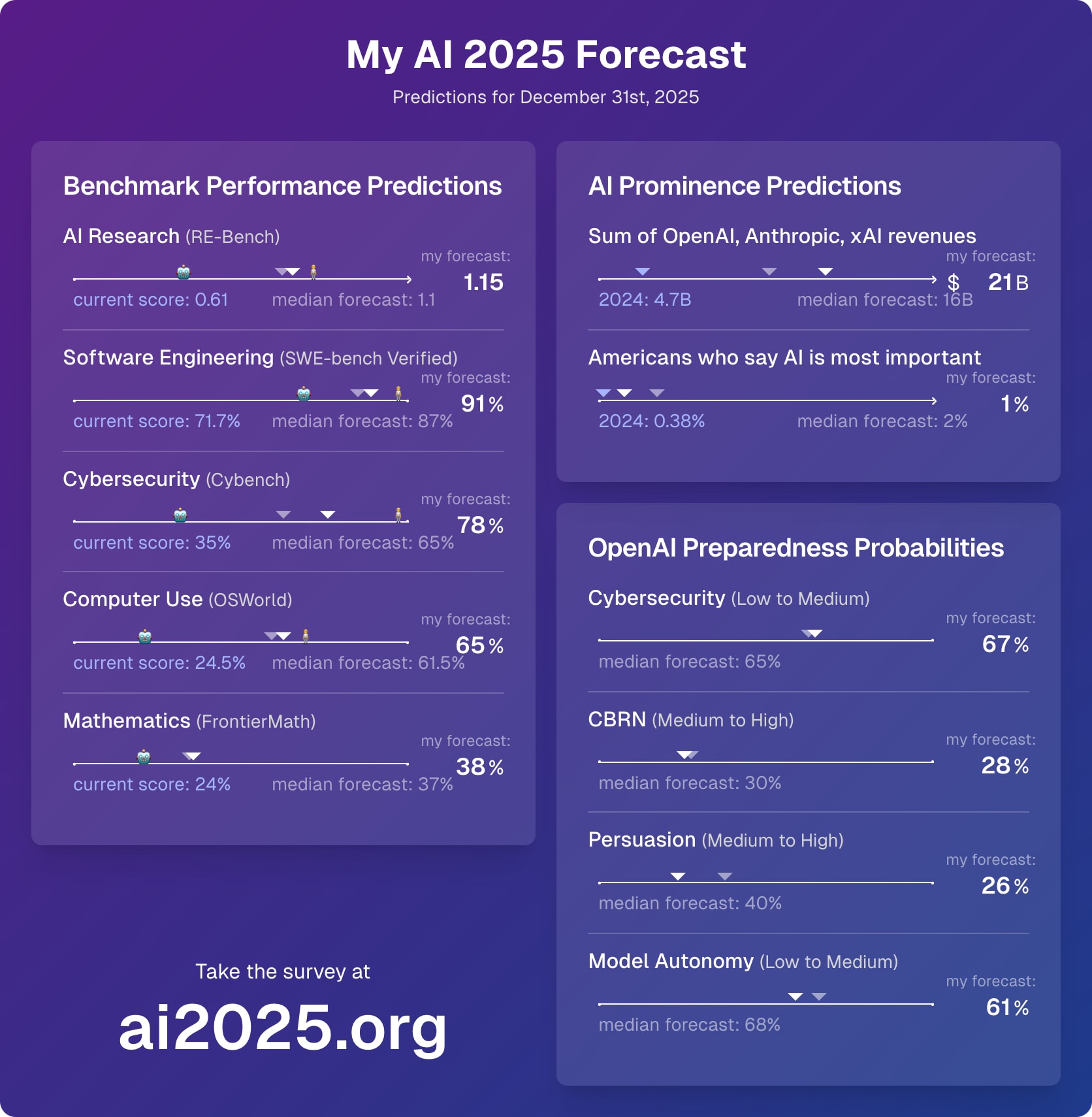

My most recent forecasts:

Predict 2025 AI capabilities (by Sunday)

OpenAI didn’t say what the light blue bar is.

Presumably light blue is o3 high, and dark blue is o3 low?

If you’re launching an AI safety startup, reach out to me; Polaris Ventures (which I’m on the board of) may be interested, and I can potentially introduce you to other VCs and investors.

Makes sense! And yeah, IDK, I think the concept of ‘measure’ is itself pretty confusing and not super convincing to me, but if you think through the alternatives, they seem even less satisfying.

That leads you to always risk your life if there’s even a slight chance it’ll make you feel better… right? E.g., 2% death and 98% not just totally fine but actually happy, and so on, all the way to 99.99% death and 0.01% super-duper happy.

I did some research and the top ones I found were SMH and QQQ.

I’m not sure what (if any) action-relevant point you might be making. If this is supposed to make you less concerned about death, I’d point out that the thing to care about is your measure (as in quantum immortality), which is still greatly reduced.
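To make the disagreement concrete, here is a minimal sketch comparing two decision rules on bets like the ones above: one that counts only the branches where you survive (the naive quantum-immortality view) and one that weights surviving branches by their measure. The happiness scores (0 to 10, with 5 for the status quo) are hypothetical, and the sketch assumes that branches where you die contribute zero value.

```python
# Hypothetical bets: (probability of death, happiness if you survive, on a 0-10 scale).
BETS = {
    "status quo": (0.0, 5.0),
    "2% death": (0.02, 7.0),
    "99.99% death": (0.9999, 10.0),
}


def value_conditional_on_survival(p_death: float, happiness: float) -> float:
    """Naive quantum-immortality view: only surviving branches count, so p_death is ignored."""
    return happiness


def value_weighted_by_measure(p_death: float, happiness: float) -> float:
    """Measure view: surviving branches count in proportion to their measure (1 - p_death).

    Assumes branches where you die contribute zero value.
    """
    return (1.0 - p_death) * happiness


for rule in (value_conditional_on_survival, value_weighted_by_measure):
    best = max(BETS, key=lambda name: rule(*BETS[name]))
    print(f"{rule.__name__}: prefers {best!r}")
```

With these made-up numbers, the measure-weighted rule still accepts a small risk for a large gain, but it rejects the 99.99% bet, which keeps only 0.01% of your measure.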

Someone else added these quotes from a 1968 article about how the Vietnam War could go so wrong:

Despite the banishment of the experts, internal doubters and dissenters did indeed appear and persist. Yet as I watched the process, such men were effectively neutralized by a subtle dynamic: the domestication of dissenters. Such “domestication” arose out of a twofold clubbish need: on the one hand, the dissenter’s desire to stay aboard; and on the other hand, the nondissenter’s conscience. Simply stated, dissent, when recognized, was made to feel at home. On the lowest possible scale of importance, I must confess my own considerable sense of dignity and acceptance (both vital) when my senior White House employer would refer to me as his “favorite dove.” Far more significant was the case of the former Undersecretary of State, George Ball. Once Mr. Ball began to express doubts, he was warmly institutionalized: he was encouraged to become the inhouse devil’s advocate on Vietnam. The upshot was inevitable: the process of escalation allowed for periodic requests to Mr. Ball to speak his piece; Ball felt good, I assume (he had fought for righteousness); the others felt good (they had given a full hearing to the dovish option); and there was minimal unpleasantness. The club remained intact; and it is of course possible that matters would have gotten worse faster if Mr. Ball had kept silent, or left before his final departure in the fall of 1966. There was also, of course, the case of the last institutionalized doubter, Bill Moyers. The President is said to have greeted his arrival at meetings with an affectionate, “Well, here comes Mr. Stop-the-Bombing....” Here again the dynamics of domesticated dissent sustained the relationship for a while.

A related point—and crucial, I suppose, to government at all times—was the “effectiveness” trap, the trap that keeps men from speaking out, as clearly or often as they might, within the government. And it is the trap that keeps men from resigning in protest and airing their dissent outside the government. The most important asset that a man brings to bureaucratic life is his “effectiveness,” a mysterious combination of training, style, and connections. The most ominous complaint that can be whispered of a bureaucrat is: “I’m afraid Charlie’s beginning to lose his effectiveness.” To preserve your effectiveness, you must decide where and when to fight the mainstream of policy; the opportunities range from pillow talk with your wife, to private drinks with your friends, to meetings with the Secretary of State or the President. The inclination to remain silent or to acquiesce in the presence of the great men—to live to fight another day, to give on this issue so that you can be “effective” on later issues—is overwhelming. Nor is it the tendency of youth alone; some of our most senior officials, men of wealth and fame, whose place in history is secure, have remained silent lest their connection with power be terminated. As for the disinclination to resign in protest: while not necessarily a Washington or even American specialty, it seems more true of a government in which ministers have no parliamentary backbench to which to retreat. In the absence of such a refuge, it is easy to rationalize the decision to stay aboard. By doing so, one may be able to prevent a few bad things from happening and perhaps even make a few good things happen. To exit is to lose even those marginal chances for “effectiveness.”

Implied volatility of long-dated, far-OTM calls is similar between AI-exposed indices (e.g. SMH) and individual stocks like TSM or MSFT (though not NVDA).
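For anyone who wants to check an IV comparison like this themselves, here is a minimal sketch of backing out implied volatility from a quoted call price using the Black-Scholes model and bisection. It assumes European exercise, no dividends, and a constant risk-free rate. Broker-quoted IVs may use different models (e.g. for dividends or American exercise), so the numbers will not match exactly. The function names are illustrative.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call_price(spot: float, strike: float, years: float, rate: float, vol: float) -> float:
    """Black-Scholes price of a European call on a non-dividend-paying underlying."""
    sd = vol * math.sqrt(years)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol**2) * years) / sd
    d2 = d1 - sd
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d2)


def implied_vol(price: float, spot: float, strike: float, years: float, rate: float,
                lo: float = 1e-4, hi: float = 5.0, tol: float = 1e-8) -> float:
    """Find the vol at which the model price matches `price`, by bisection.

    Bisection works because the call price is strictly increasing in volatility.
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if bs_call_price(spot, strike, years, rate, mid) > price:
            hi = mid  # model price too high: the implied vol is lower
        else:
            lo = mid  # model price too low: the implied vol is higher
    return 0.5 * (lo + hi)


# Round trip: price a far-OTM 3-year call (strike at 2x spot) at 35% vol, then recover the vol.
quote = bs_call_price(spot=100.0, strike=200.0, years=3.0, rate=0.04, vol=0.35)
print(round(implied_vol(quote, 100.0, 200.0, 3.0, 0.04), 4))
```

To get a like-for-like comparison across tickers, you would feed in mid prices for options with the same expiry and similar moneyness (strike as a multiple of spot) on each underlying.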

The more concentrated exposure you get from AI companies or AI-exposed indices, compared to VTI, is likely worth it, unless you expect that a short-timelines, slow-takeoff AI scenario will involve significant acceleration of the broader economy (not just the tech giants), which I don’t think is very plausible.

There are SPX options that expire in 2027, 2028, and 2029. Those seem more attractive to me than VTI options dated 2 to 3 years out, especially since they have strike prices that are much further out of the money.
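Here is a rough sketch of why further-out-of-the-money strikes can be attractive if you expect a large move. It prices calls with Black-Scholes at a single assumed volatility (20%), a 3-year expiry, a 4% rate, and no dividends, then divides the payoff at expiry by the premium paid. All of these inputs are hypothetical, and using one volatility for every strike ignores the volatility skew seen in real markets.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call_price(spot, strike, years, rate, vol):
    """Black-Scholes European call price, no dividends."""
    sd = vol * math.sqrt(years)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol**2) * years) / sd
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d1 - sd)


def payoff_multiple(strike_ratio, final_ratio, years=3.0, rate=0.04, vol=0.20):
    """Payoff at expiry divided by the premium paid, with today's index level normalized to 1.0.

    strike_ratio and final_ratio are the strike and the index level at expiry,
    both expressed as multiples of today's level. The payoff is not discounted.
    """
    premium = bs_call_price(1.0, strike_ratio, years, rate, vol)
    return max(final_ratio - strike_ratio, 0.0) / premium


for final in (1.2, 2.0):  # a modest rally vs. a large rally by expiry
    row = ", ".join(f"K={k:.2f}: {payoff_multiple(k, final):.1f}x" for k in (1.0, 1.25, 1.5))
    print(f"index ends at {final:.1f}x -> {row}")
```

In the large-rally case the multiple rises with the strike, while in the modest-rally case the far-OTM calls expire worthless. Under these assumptions, further-OTM strikes mostly make sense if you put real weight on a large move.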

Would you mind posting the specific contracts you bought?

I have some of those in my portfolio. It’s worth slowly walking GTC orders up the bid-ask spread; you’ll get better pricing that way.

Doing a post-mortem on sapphire’s other posts, I found that their track record is pretty great:

BTC/crypto liftoff prediction: +22%

Meta DAO: +1600%

SAVE: −60%

BSC Launcher: −100%?

OLY2021: +32%

Perpetual futures: +20%

Perpetual futures, DeFi edition: +15%

Bet on Biden: +40%

AI portfolio: approx. −5% compared to index over same time period

AI portfolio, second post: approx. +30% compared to index over same time period

OpenAI/MSFT: ~0%

Buy SOL: +1000%

There are many more that I didn’t look into.

All of these played out over a couple of weeks or months, so if you had blindly put 10% of your portfolio into each of the above, you would have gotten very impressive returns. (Overall, roughly 5x relative to the broad market.)
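Here is a quick check of the "roughly 5x" figure. It assumes that the ideas were held one after another rather than at the same time, that 10% of the portfolio went into each, and that the remaining 90% simply tracked the market, so each listed return is treated as a return relative to the market. Both "AI portfolio" entries are already relative to the index, and the BSC Launcher figure is uncertain, so this is only approximate.

```python
# Returns from the list above, as fractions (+1600% -> 16.0, -100% -> -1.0).
RETURNS = {
    "BTC/crypto liftoff prediction": 0.22,
    "Meta DAO": 16.00,
    "SAVE": -0.60,
    "BSC Launcher": -1.00,  # listed as "-100%?" above
    "OLY2021": 0.32,
    "Perpetual futures": 0.20,
    "Perpetual futures, DeFi edition": 0.15,
    "Bet on Biden": 0.40,
    "AI portfolio": -0.05,
    "AI portfolio, second post": 0.30,
    "OpenAI/MSFT": 0.00,
    "Buy SOL": 10.00,
}


def compounded_multiple(returns, stake=0.10):
    """Put `stake` of the portfolio into each idea in turn and compound the results.

    The rest of the portfolio is assumed to earn 0% relative to the market.
    """
    multiple = 1.0
    for r in returns:
        multiple *= 1.0 + stake * r
    return multiple


print(f"{compounded_multiple(RETURNS.values()):.2f}x relative to the market")
```

Almost all of the gain comes from Meta DAO and SOL. The other ten ideas roughly cancel out, with the −60% and −100% positions offset by the smaller winners.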

Worth pointing out that this is up ~17x since it was posted. Sometimes, good ideas will be communicated poorly, and you’ll pay a big price for not investigating yourself. (At least I did.)

Just stumbled across this post, and copying a comment I once wrote:

Intuitively and anecdotally (and based on some likely-crappy papers), it seems harder to see animals as sentient beings or think correctly about the badness of factory farming while eating meat; this form of motivated reasoning plausibly distorts most people’s epistemics, and this is about a pretty important part of the world, and recognizing the badness of factory farming has minor implications for s-risks and other AI stuff.

With some further clarifications:

Nobody actively wants factory farming to happen, but it’s the cheapest way to get something we want (i.e. meat), and we’ve built a system where it’s really hard for altruists to stop it from happening. If a pattern like this extended into the long-term future, we might want to do something about it.

In the context of AI, suffering subroutines might be an example of that.

Regarding futures without strong AGI: Factory farming is arguably the most important example of a present-day ongoing atrocity. If you fully internalize just how bad this is, that there’s something like a genocide (in terms of moral badness, not evilness) going on right here, right now, under our eyes, in wealthy Western democracies that are often understood to be the most morally advanced places on earth, and it’s really hard for us to stop it, that might affect your general outlook on the long-term future. I still think the long-term future will be great in expectation, but it also makes me think that utopian visions that don’t consider these downside risks seem pretty naïve.

I used to eat a lot of meat, and once I stopped doing that, I started seeing animals through different eyes (treating them as morally relevant, and internalizing that a lot more). The reason why I don’t eat meat now is not that I think it would cause value drift, but that it would make me deeply sad and upset – eating meat would feel similar to owning slaves that I treat poorly, or watching a gladiator fight for my own amusement. It just feels deeply morally wrong and isn’t enjoyable anymore. The fact that the consequences are only mildly negative in the grand scheme of things doesn’t change that. So [I actually don’t think that] my argument supports me remaining a vegan now, but I think it’s a strong argument for me to go vegan in the first place at some point.

My guess is that a lot of people don’t actually see animals as sentient beings whose emotions and feelings matter a great deal, but more like cute things to have fun with. And anecdotally, how someone perceives animals seems to be determined by whether they eat them, not the other way around. (Insert plausible explanation – cognitive dissonance, rationalizations, etc.) I think squashing dust mites, drinking milk, eating eggs etc. has a much weaker effect in comparison to eating meat, presumably because they’re less visceral, more indirect/accidental ways of hurting animals.

Yeah, as I tried to explain above (perhaps it was too implicit), I think it probably matters much more whether you went vegan at some point in your life than whether you’re vegan right now.

I don’t feel confident in this; I wanted to mainly offer it as a hypothesis that could be tested further. I also mentioned the existence of crappy papers that support my perspective (you can probably find them in 5 minutes on Google Scholar). If people thought this was important, they could investigate this more.

You can also just do the unlevered versions of this, like SMH / SOXX / SOXQ, plus tech companies with AI exposure (MSFT, GOOGL, META, AMZN—or a tech ETF like QQQ).

A leverage + put options combo means you’ll end up paying lots of money to market makers.

I think it’s more that you don’t argue for why you believe what you believe and instead just assert it’s cool, and the whole thing looks a bit sloppy (spelling mistakes, all-caps, etc.).

Yeah, you will get much better fills if you walk your options limit orders, either manually or automatically (see here for an example of an automatic implementation: https://www.schwab.com/content/how-to-place-walk-limit-order). Market makers will often fill your near-mid-market limit orders within seconds.
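Here is a minimal sketch of the price ladder for walking a limit order, assuming prices in whole cents and a fixed step. The function name, the 5-cent step, and the `max_fraction` cap are hypothetical choices, not the broker feature linked above. Check your broker's minimum tick size for the option. Actually submitting each price, waiting, and replacing the order is broker-specific and left out.

```python
def walk_limit_prices(bid_cents: int, ask_cents: int, step_cents: int = 5,
                      max_fraction: float = 1.0, side: str = "buy") -> list[int]:
    """Limit prices (in cents) to try in order: start at the mid, then step toward the far side.

    max_fraction caps how much of the half-spread you are willing to give up
    (0.0 = mid only, 1.0 = all the way to the ask for a buy or the bid for a sell).
    """
    if bid_cents >= ask_cents:
        raise ValueError("bid must be below ask")
    if side == "buy":
        mid = (bid_cents + ask_cents) // 2       # round the mid down, in the buyer's favor
        half_spread, direction = ask_cents - mid, 1
    elif side == "sell":
        mid = -(-(bid_cents + ask_cents) // 2)   # round the mid up, in the seller's favor
        half_spread, direction = mid - bid_cents, -1
    else:
        raise ValueError("side must be 'buy' or 'sell'")
    max_offset = int(half_spread * max_fraction)
    return [mid + direction * offset for offset in range(0, max_offset + 1, step_cents)]


# Example: an option quoted $1.00 bid / $1.30 ask. Submit each price in turn,
# wait a bit between steps, and stop as soon as the order fills.
print(walk_limit_prices(100, 130))               # [115, 120, 125, 130]
print(walk_limit_prices(100, 130, side="sell"))  # [115, 110, 105, 100]
```

Setting `max_fraction` below 1.0 stops the walk before it reaches the far side of the spread, so you never end up simply paying the ask (or hitting the bid), which would defeat the purpose of walking the order.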