Toward A Bayesian Theory Of Willpower

(crossposted from Astral Codex Ten)

I.

What is willpower?

Five years ago, I reviewed Baumeister and Tierney’s book on the subject. They tentatively concluded it’s a way of rationing brain glucose. But their key results have failed to replicate, and people who know more about glucose physiology say it makes no theoretical sense.

Robert Kurzban, one of the most on-point critics of the glucose theory, gives his own model of willpower: it’s a way of minimizing opportunity costs. But how come my brain is convinced that playing Civilization for ten hours has no opportunity cost, but spending five seconds putting away dishes has such immense opportunity costs that it will probably leave me permanently destitute? I can’t find any correlation between the subjective phenomenon of willpower or effort-needingness and real opportunity costs at all.

A tradition originating in psychotherapy, and ably represented eg here by Kaj Sotala, interprets willpower as conflict between mental agents. One “subagent” might want to sit down and study for a test. But maybe one subagent represents the pressure your parents are putting on you to do well in school so you can become a doctor and have a stable career, and another subagent represents your own desire to drop out and become a musician, and even though the “do well in school” subagent is on top now, the “become a musician” subagent is strong enough to sabotage you by making you feel mysteriously unable to study. This usually ends with something about how enough therapy can help you reconcile these subagents and have lots of willpower again. But this works a lot better in therapy books than it does in real life. Also, what childhood trauma made my subagents so averse to doing dishes?

I’ve come to disagree with all of these perspectives. I think willpower is best thought of as a Bayesian process, ie an attempt to add up different kinds of evidence.

II.

My model has several different competing mental processes trying to determine your actions. One is a prior on motionlessness; if you have no reason at all to do anything, stay where you are. A second is a pure reinforcement learner—“do whatever has brought you the most reward in the past”. And the third is your high-level conscious calculations about what the right thing to do is.

These all submit “evidence” to your basal ganglia, the brain structure that chooses actions. Using the same evidence-processing structures that you would use to resolve ambiguous sense-data into a perception, or resolve conflicting evidence into a belief, it resolves its conflicting evidence about the highest-value thing to do, comes up with some hypothesized highest-value next task, and does it.

I’ve previously quoted Stephan Guyenet on the motivational system of lampreys (a simple fish used as a model organism). Guyenet describes various brain regions making “bids” to the basal ganglia, using dopamine as the “currency”—whichever brain region makes the highest bid gets to determine the lamprey’s next action. “If there’s a predator nearby”, he writes “the flee-predator region will put in a very strong bid to the striatum”.

The economic metaphor here is cute, but the predictive coding community uses a different one: they describe it as representing the “confidence” or “level of evidence” for a specific calculation. So an alternate way to think about lampreys is that the flee-predator region is saying “I have VERY VERY strong evidence that fleeing a predator would be the best thing to do right now.” Other regions submit their own evidence for their preferred tasks, and the basal ganglia weighs the evidence using Bayes and flees the predator.

This ties the decision-making process into the rest of the brain. At the deepest level, the brain isn’t really an auction or an economy. But it is an inference engine, a machine for weighing evidence and coming to conclusions. Your perceptual systems are like this—they weigh different kinds of evidence to determine what you’re seeing or hearing. Your cognitive systems are like this, they weigh different kinds of evidence to discover what beliefs are true or false. Dopamine affects all these systems in predictable ways. My theory of willpower asserts that it affects decision-making in the same way—it’s representing the amount of evidence for a hypothesis.

III.

In fact, we can look at some of the effects of dopaminergic drugs to flesh this picture out further.

Stimulants increase dopamine in the frontal cortex. This makes you more confident in your beliefs (eg cocaine users who are sure they can outrun that cop car) and sometimes perceptions (eg how some stimulant abusers will hallucinate voices). But it also improves willpower (eg Adderall helping people study). I think all of these are functions of increasing the (apparent) level of evidence attached to “beliefs”. Since the frontal cortex disproportionately contains the high-level conscious processes telling you to (eg) do your homework, the drug artificially makes these processes sound “more convincing” relative to the low-level reinforcement-learning processes in the limbic system. This makes them better able to overcome the desire to do reinforcing things like video games, and also better able to overcome the prior on motionlessness (which makes you want to lie in bed doing nothing). So you do your homework.

Antipsychotics decrease dopamine. At low doses of antipsychotics, patients might feel like they have a little less willpower. At high doses, so high we don’t use them anymore, patients might sit motionless in a chair, not getting up to eat or drink or use the bathroom, not even shifting to avoid pressure sores. Now not only can the frontal cortex conscious processes not gather up enough evidence to overcome the prior on motionlessness, but even the limbic system instinctual processes (like “you should eat food” and “you should avoid pain”) can’t do it. You just stay motionless forever (or until your doctor lowers your dose of antipsychotics).

In contrast, people on stimulants fidget, pace, and say things like “I have to go outside and walk this off now”. They have so much dopamine in their systems that any passing urge is enough to overcome the prior on motionlessness and provoke movement. If you really screw up someone’s dopamine system by severe methamphetamine use or obscure side effects of swinging around antipsychotic doses, you can give people involuntary jerks, tics, and movement disorders—now even random neural noise is enough to overcome the prior.
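Here is a toy Python sketch of the picture so far. It is only an illustration under loud assumptions: the evidence values, the per-process `dopamine_gain` multipliers, and the fixed `STAY_PRIOR` are all hypothetical numbers I made up, and real basal ganglia presumably do nothing this tidy. Dopamine is treated as a gain on each process's evidence, and an action only happens if its scaled evidence beats the prior on motionlessness.

```python
# Toy model: dopamine as a gain on "evidence" competing with a prior on staying still.
# Every number below is invented for illustration.

STAY_PRIOR = 1.0  # hypothetical strength of the prior on motionlessness


def choose_action(evidence, dopamine_gain):
    """Return the action with the most gain-scaled evidence,
    or "stay still" if nothing beats the motionlessness prior.

    evidence:      dict of action name -> raw evidence (arbitrary units)
    dopamine_gain: dict of action name -> multiplier applied to that evidence
    """
    best_action, best_score = "stay still", STAY_PRIOR
    for action, raw in evidence.items():
        score = raw * dopamine_gain.get(action, 1.0)
        if score > best_score:
            best_action, best_score = action, score
    return best_action


evidence = {
    "do homework": 2.0,      # high-level conscious process
    "play video game": 3.0,  # reinforcement learner
}

baseline = {"do homework": 1.0, "play video game": 1.0}
stimulant = {"do homework": 2.0, "play video game": 1.0}      # boosts frontal evidence only
antipsychotic = {"do homework": 0.2, "play video game": 0.2}  # damps everything

print(choose_action(evidence, baseline))       # play video game
print(choose_action(evidence, stimulant))      # do homework
print(choose_action(evidence, antipsychotic))  # stay still

# With a very high gain, even weak neural noise clears the prior.
print(choose_action({"random twitch": 0.2}, {"random twitch": 10.0}))  # random twitch
```

The one design choice worth noting is that the prior on motionlessness is just another competitor with a fixed score, so "lower dopamine" and "stronger prior" are interchangeable in this sketch.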

(a quick experiment: wiggle your index finger for one second. Now wave your whole arm in the air for one second. Now jump up and down for one second. Now roll around on the floor for one second. If you’re like me, you probably did the index finger one, maybe did the arm one, but the thought of getting up and jumping—let alone rolling on the floor—sounded like too much work, so you didn’t. These didn’t actually require different amounts of useful resources from you, like time or money or opportunity cost. But the last two required moving more and bigger muscles, so you were more reluctant to do them. This is what I mean when I say there’s a prior on muscular immobility)

IV.

I think this theory matches my internal experience when I’m struggling to exert willpower. My intellectual/logical brain processes have some evidence for doing something (“knowing how the education system works, it’s important to do homework so I can get into a good college and get the job I want”). My reinforcement-learner/instinctual brain processes have some opposing argument (“doing your homework has never felt reinforcing in the past, but playing computer games has felt really reinforcing!”). These two processes fight it out. If one of them gets stronger (for example, my teacher says I have to do the homework tomorrow or fail the class) it will have more “evidence” for its view and win out.

It also explains an otherwise odd feature of willpower: sufficient evidence doesn’t necessarily make you do something, but overwhelming evidence sometimes does. For example, many alcoholics know that they need to quit alcohol, but find they can’t. They only succeed after they “hit bottom”, ie things get so bad that the evidence against using alcohol gets “beyond a reasonable doubt”. Alcoholism involves some imbalance in brain regions such that the reinforcing effect of alcohol is abnormally strong. The reinforcement system is always more convinced in favor of alcohol than the intellectual system is convinced against it, until the intellectual evidence becomes so lopsided that it outweighs even the reinforcement system’s abnormal strength.

Why don’t the basal ganglia automatically privilege the intellectual/logical processes, giving you infinite willpower? You could give an evolutionary explanation—in the past, animals were much less smart, and their instincts were much better suited to their environment, so the intellectual/logical processes were less accurate, relative to the reinforcement/instinctual processes, than they are today. Whenever that system last evolved, it was right to weight them however much it weighted them.

But maybe that’s giving us too much credit. Even today, logical/intellectual processes can be pretty dumb. Millions of people throughout history have failed to reproduce because they became monks for false religions; if they had just listened to their reinforcement/instinctual processes instead of their intellectual/logical ones, they could have avoided that problem. The moral law says we should spend our money saving starving children instead of buying delicious food and status goods for ourselves; our reinforcement/instinctual processes let us tell the moral law to f#@k off, keeping us well-fed, high-status, and evolutionarily fit. Any convincing sophist can launch an attack through the intellectual/logical processes; when they do, the reinforcement/instinctual processes are there to save us; Henrich argues that the secret of our success is avoiding getting too bogged down by logical thought. Too bad if you have homework to do, though.

Does this theory tell us how to get more willpower? Not immediately, no. I think naive attempts to “provide more evidence” that a certain course of action is good will fail; the brain is harder to fool than people expect. I also think the space of productivity hacks has been so thoroughly explored that it would be surprising if a theoretical approach immediately outperformed the existing atheoretical one.

I think the most immediate gain to having a coherent theory of willpower is to be able to more effectively rebut positions that assume willpower doesn’t exist, like Bryan Caplan’s theory of mental illness. If I’m right, lack of willpower should be thought of as an imbalance between two brain regions that decreases the rate at which intellectual evidence produces action. This isn’t a trivial problem to fix!

One interesting datum about willpower (which I’ve observed repeatedly in many people and contexts; not sure if it’s officially documented anywhere) is that it’s much easier to take a fully scripted action than to take an action that requires creatively filling in details.

For example, suppose several people are out trying to do “rejection therapy” (a perhaps-dubious game in which folks make requests of strangers that are likely to be rejected, e.g. “Can I listen to the walkman you’re listening to for a moment?” or “Can we trade socks?”). Many many people who set out to ask a stranger such a question will… “decide” not to, once the stranger is actually near them. However, people who have written down exactly which words they plan to say, in what order, to exactly which stranger, with no room for ambiguity, are (anecdotally but repeatedly) more likely to actually say the words. (Or to do many other difficult habits, I think.)

(I originally noticed this pattern in undergrad, when there was a study group I wanted to leave, but whose keeper I felt flinchy about disappointing by leaving. I planned to leave the group and then … didn’t. And the next week, planned again to leave the group and then didn’t. And the third week, came in with a fully written exact sentence, said my scripted sentence, and left.)

Further examples:

It’s often easier to do (the dishes, or exercise, or other ‘difficult’ tasks) if there’s a set time for it.

Creativity-requiring tasks are often harder to attempt than more structured/directions-following-y tasks (e.g. writing poetry, especially if you’re “actually trying” at it; or attempting alignment research in a more “what actually makes sense here?” way and a less “let me make deductions from this framework other people are using” way; or just writing a blog post vs critiquing one).

— I’ve previously taken the above observations as evidence for the “subparts of my mind with differing predictions” view — if there are different bits of my (mind/brain) that are involved in e.g. assembling the sentence, vs saying the sentence, then if I need to figure out what words to say I’ll need whatever’s involved in calling the “assemble sentences” bit to also be on board, which is to say that more of me will need to be on board.

I guess you could also try this from the “Bayesian evidence” standpoint. But I’m curious how you’d do it in detail. Like, would you say the prior against “moving muscles” extends also for “assembling sentences”?

I suppose that any moment when you have to choose “A or B” is actually a moment when your choices are “A or B or give up”. So the more such moments, the greater the chance of giving up.

Which seems like the reason behind one-click shopping (each click is a moment of choice “click or give up”).
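A back-of-the-envelope version of that point, as a small Python sketch. It assumes (purely for illustration) that every choice point independently carries the same chance of giving up; the 10% figure is made up.

```python
def completion_probability(p_give_up, n_choice_points):
    """Chance of getting through every choice point without giving up,
    assuming each one independently has the same give-up probability."""
    return (1.0 - p_give_up) ** n_choice_points


for n in (1, 3, 10):
    print(n, round(completion_probability(0.1, n), 3))
# 1 0.9
# 3 0.729
# 10 0.349
```

Under these assumptions, a fully scripted action is the n = 1 case, and each extra detail left to fill in on the spot multiplies in another factor below one.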

To handle this, it would probably work to extend the “motionless” prior to a more abstract “status quo” prior. It’s not necessarily related to physical movement (it could even be the opposite if it were a sports group instead of a study group in your example) but rather an aversion to expending effort or experiencing potential undesired consequences. The prior says, “Things are fine. It’s not worth going out of the way to change that.”

With this extended version, creativity-requiring tasks are analogous to physically-demanding tasks. With pre-scripted tasks, it’s not as demanding in the moment.

Interestingly there was a recent neuroscience paper that basically said “our computational model of the brain includes this part about mental effort but we have no damn idea why anything should require mental effort, we put it in our model because obviously that’s a thing with humans but no theory would predict it”:

Under the bidding system theory, if the non-winning bids still have to pay out some fraction of the amount bid even when they lose, then bidding wars are clearly costly. Even when the executive control agent is winning all the bids, resources are being drained every auction in some proportion to how strongly other agents are still bidding. This seems to align with my own perceptions at first glance and explains how control wanes over time.
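To make that concrete, here is a minimal Python sketch of the "losing bids still cost something" idea. The shared resource pool, the `loser_fee` fraction, and the constant bid sizes are all hypothetical; nothing here is claimed about actual neural accounting.

```python
def rounds_until_depleted(bids, loser_fee, pool):
    """Number of full auction rounds a shared resource pool can fund.

    bids:      dict of agent name -> bid strength (held constant every round)
    loser_fee: fraction of each losing bid that is still paid out
    pool:      starting size of the shared resource pool
    """
    winner = max(bids, key=bids.get)
    drain_per_round = loser_fee * sum(b for a, b in bids.items() if a != winner)
    if drain_per_round == 0:
        return float("inf")  # nobody is contesting the winner
    return int(pool // drain_per_round)


# The executive process wins every round in both cases,
# but a stronger rival bid drains the pool twice as fast.
print(rounds_until_depleted({"executive": 10.0, "play game": 4.0}, 0.25, 100.0))  # 100
print(rounds_until_depleted({"executive": 10.0, "play game": 8.0}, 0.25, 100.0))  # 50
```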

I’m not sure whether your model actually differs substantially from mine. :-) Or at least not the version of my model articulated in “Subagents, akrasia, and coherence in humans”. Compare you:

with me:

You don’t explicitly talk about the utility side, just the probability, but if the flee-predator region says its proposed course of action is “the best thing to do right now”, then that sounds like there’s some kind of utility calculation also going on. On the other hand, I didn’t think of the “dopamine represents the strength of the bid” hypothesis, but combining that with my model doesn’t produce any issues as far as I can see.

Expanding a bit on this correspondence: I think a key idea Scott is missing in the post is that a lot of things are mathematically identical to “agents”, “markets”, etc. These are not exclusive categories, such that e.g. the brain using an internal market means it’s not using Bayes’ rule. Internal markets are a way to implement things like (Bayesian) maximum a-posteriori estimates; they’re a very general algorithmic technique, often found in the guise of Lagrange multipliers (historically called “shadow prices” for good reason) or intermediates in backpropagation. Similar considerations apply to “agents”.

See also the correspondence between prediction markets of kelly bettors and Bayesian updating.
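A small Python sketch of that correspondence, for a binary event. The setup is an assumption chosen to keep things simple: each bettor stakes a fraction of wealth on "yes" equal to their belief (the Kelly-optimal split when all wealth is spread over exhaustive outcomes), the market price is the wealth-weighted average belief, and the bettor names and numbers are made up. Under those assumptions each bettor's share of total wealth tracks the Bayesian posterior over "which bettor's model is right".

```python
def kelly_market_step(wealth, beliefs, outcome):
    """One round of a binary prediction market populated by Kelly bettors.

    wealth:  list of each bettor's current wealth
    beliefs: list of each bettor's probability that the event happens
    outcome: True if the event happened

    Each bettor stakes wealth * belief on "yes" and the rest on "no".
    The price of "yes" is the wealth-weighted average belief, and
    winning stakes are paid out at that price.
    """
    total = sum(wealth)
    price_yes = sum(w * p for w, p in zip(wealth, beliefs)) / total
    if outcome:
        return [w * p / price_yes for w, p in zip(wealth, beliefs)]
    return [w * (1 - p) / (1 - price_yes) for w, p in zip(wealth, beliefs)]


def bayes_step(prior, beliefs, outcome):
    """Posterior over hypotheses, where hypothesis i says P(yes) = beliefs[i]."""
    likelihood = [p if outcome else 1 - p for p in beliefs]
    unnormalized = [pr * lk for pr, lk in zip(prior, likelihood)]
    z = sum(unnormalized)
    return [u / z for u in unnormalized]


wealth = [1.0, 1.0, 2.0]
beliefs = [0.9, 0.5, 0.2]
posterior = [w / sum(wealth) for w in wealth]  # prior = initial wealth shares

for outcome in (True, True, False):
    wealth = kelly_market_step(wealth, beliefs, outcome)
    posterior = bayes_step(posterior, beliefs, outcome)
    shares = [w / sum(wealth) for w in wealth]
    print([round(s, 4) for s in shares], [round(p, 4) for p in posterior])
```

Each printed pair should match: the market's wealth shares and the Bayesian posterior are the same numbers, computed two ways.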

Also, you:

Me, later in my post:

I think the problem with this theory is similar to evolutionary psychology.

Reading it, it makes perfect sense and explains current observations. But how do you methodically disprove the other competing theories? How do we winnow down to this as the actual truth?

You need empirical data that we don’t yet have a practical means of collecting. With a system like a neural link, with thousands of electrodes wired to the frontal cortex and data cross-correlating it to dopamine levels, we could actually build a simulation from the data and then validate this theory against that simulation.

(evolutionary psychology has a worse problem I suppose—in that case it is making theories about a past that we cannot observe. with your theory we can hope to collect the data)

Wow, you got me straight. I did my finger and my arm then stopped.

Though it felt more to me like my reinforcement-learner had strong preferences (i.e. to keep holding my iPad and look at the screen) that overweighed it, than muscular immobility in general having a prior. Often when I’m in the middle of exercise, I feel pretty excited about doing more, or I’ll notice someone doing something and I’ll run over to help. In those situations my reinforcement learner isn’t saying anything like “keep holding the iPad, you like the iPad”.

I don’t understand what’s the point of calling it “evidence” instead of “updating weights” unless the brain literally implements P(A|B) = [P(A)*P(B|A)]/P(B) for high-level concepts like “it’s important to do homework”. And even then this story about evidence and beliefs doesn’t add anything to an explanation that already has a specific weight-aggregation algorithm.

An alternative explanation of will-power is hyperbolic discounting. Your time discount function is not exponential, and therefore not dynamically consistent. So you can simultaneously (i) prefer gaining short-term pleasure at the expense of long-term goals (e.g. play games instead of studying) and (ii) take actions to prevent future-you from doing the same (e.g. go to rehab).
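A quick Python sketch of the inconsistency. The reward sizes, delays, and discount parameters (`k`, `rate`) are made-up illustrative values; the hyperbolic form value / (1 + k * delay) is the standard textbook one.

```python
def hyperbolic(value, delay, k=1.0):
    """Hyperbolic discounting: value / (1 + k * delay)."""
    return value / (1.0 + k * delay)


def exponential(value, delay, rate=0.9):
    """Exponential discounting: value * rate ** delay."""
    return value * rate ** delay


def preferred(discount, small, large, wait):
    """small and large are (value, delay) pairs; wait pushes both further away."""
    v_small = discount(small[0], small[1] + wait)
    v_large = discount(large[0], large[1] + wait)
    return "small-soon" if v_small > v_large else "large-late"


small, large = (50.0, 1), (100.0, 4)

# Hyperbolic: the choice flips as the rewards get close.
print(preferred(hyperbolic, small, large, wait=10))  # large-late
print(preferred(hyperbolic, small, large, wait=0))   # small-soon

# Exponential: the ranking is the same at any distance.
print(preferred(exponential, small, large, wait=10))  # large-late
print(preferred(exponential, small, large, wait=0))   # large-late
```

Seen from ten steps away, the hyperbolic discounter plans to hold out for the larger reward; up close, the same discounter grabs the smaller one. That gap is what makes precommitment (the rehab example) attractive to the far-away self.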

This seems simpler, but it doesn’t explain why the same drugs that cause/prevent weird beliefs should add/deplete will-power.

“How to weight evidence vs. the prior” is not a free parameter in Bayesianism. What you can have is some parameter controlling the prior itself (so that the prior can be less or more confident about certain things). I guess we can speculate that there are some parameters in the prior and some parameters in the reward function s.t. various drugs affect both of them simultaneously, and maybe there’s a planning-as-inference explanation for why the two are entangled.

I mean, there is something of a free parameter which is how strong your prior is over ‘hypotheses’ vs how much of a likelihood ratio you get from observing ‘evidence’ if there’s a difference between hypotheses and evidence, and you can set your prior joint distribution over hypotheses and evidence however you want.

Maybe this is what you meant by “What you can have is some parameter controlling the prior itself (so that the prior can be less or more confident about certain things).”

Yes, I think we are talking about the same thing. If you change your distribution over hypotheses, or the distribution over evidence implied by each hypothesis, then it means you’re changing the prior.

Adding to the metaphor here: suppose every day I, a Bayesian, am deciding what to do. I have some prior on what to do, which I update based on info I hear from a couple of sources, including my friend and the blogosphere. It seems that I should have some uncertainty over how reliable these sources are, such that if my friend keeps giving advice that in hindsight looks better than the advice I’m getting from the blogosphere, I update to thinking that my friend is more reliable than the blogosphere, and in future update more on my friend’s advice than on the blogosphere’s.
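One simple way to sketch the "uncertainty over how reliable these sources are" part in Python is a Beta-Bernoulli update on each source's track record. This is just an illustrative assumption about how the bookkeeping could go (uniform Beta(1, 1) prior, advice scored as a hit or a miss in hindsight), and the counts are invented.

```python
def reliability(hits, misses, prior_hits=1, prior_misses=1):
    """Posterior mean of a source's hit rate under a Beta prior
    (Beta(1, 1), i.e. uniform, by default)."""
    return (hits + prior_hits) / (hits + misses + prior_hits + prior_misses)


friend = reliability(hits=8, misses=2)       # 9 / 12
blogosphere = reliability(hits=4, misses=6)  # 5 / 12

print(round(friend, 3), round(blogosphere, 3))  # 0.75 0.417
```

With these numbers the friend's advice would deserve a bigger update than the blogosphere's, and the gap would keep moving as more hindsight comes in.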

This means that if we take this sort of Bayesian theory of willpower seriously, it seems like you’re going to have ‘more willpower’ if in the past the stuff that your willpower advised you to do seemed ‘good’. Which sounds like the standard theory of “if being diligent pays off you’ll be more diligent” but isn’t: if your ‘willpower/explicit reasoning module’ says that X is a good idea and Y is a terrible idea, but other evidence comes in saying that Y will be great such that you end up doing Y anyway, and it sucks, you should have more willpower in the future. I guess the way this ends up not being what the Bayesian framework predicts is if what the evidence is actually for is the proposition “I will end up taking so-and-so action”—but that’s loopy enough that I at most want to call it quasi-Bayesian. Or I guess you could have an uninformative prior over evidence reliability, such that you don’t think past performance predicts future performance.

I’m still confused by you having this experience, since my (admittedly) anecdotal one is that actually all of that stuff does work roughly as well as the therapy books suggest it does. (Different worlds, I guess. :)) Though with some caveats; from my own experience doing this and talking with others who do find it to work:

Often an initial presenting symptom is a manifestation of a deeper one. Then one might fix the initial issue so that the person does get genuine relief, but while that helps, the problem will return in a subtler form later. (E.g. my own follow-up on one particular self-intervention, which found some of the issues to have been healed for good but others to have returned in a different form.)

The roots of some issues are just really hard to find and blocked behind many, many defenses that are impossible to get to in any short amount of time.

I’d guess that the people who write the therapy books are being mostly honest when they say that the selection of stories is representative, in that they do get quick results with many clients, so it’s fair to say that none of the stories are exceptionally rare in nature… but the authors also do want to promote the technique, so they don’t go into as much detail on the cases that were long and messy and complicated and for those reasons wouldn’t be very pedagogically useful to cover in the book anyway. So then they kind of gloss over the fact that even with these techniques, it can still take several years to get sufficiently far.

I do also think that—as you speculate in that post—therapist quality is definitely a thing. At least a therapist should be capable of being open and non-judgmental towards their clients, and it’s not hard to find all kinds of horror stories about bad therapists who thought that a client’s problems were all rooted in the client being polyamorous, or something similar.

Could be many reasons. :) Though it doesn’t need to be a literal childhood trauma; it can just be an aversion picked up through association.

A typical one would be something like being told to do the dishes as a kid, not liking the way your parents go around ordering you, and then picking up a general negative association with various chores.

Someone else might have an aversion due to an opposite problem, like not being allowed to do many chores on their own and then picking up the model that one gets rewarded better by being dependent on others.

A third person might have had a parent who also really disliked doing the dishes themselves (for whatever reason) and internalized the notion that this is something to avoid.

(I don’t think these are just-so stories; rather they’re all variations of patterns I’ve encountered either in myself or others.)

The second and third one are also easy to rephrase in an equivalent Bayesian framework (in the second one, the mind comes to predict that independence causes bad outcomes; in the third one, it picks up the belief “doing the dishes is bad” through social learning). For the first one, the prediction is not quite as clear, but at least we know conditioning-by-association to be a thing that definitely happens.

Seems to me that we have two general paradigms for explaining psychological things such as (but not limited to) willpower.

From the perspective of energies, there are some numeric values, which can be increased or decreased by inputs from environment, or by each other. Different models within this paradigm posit different types of values and different relations between them. We can have a simple model with one mysterious value called “willpower”; or we can have a more complicated model with variables such as “glucose”, “dopamine”, “reward”, “evidence”, etc. Eating a cake increases the “reward” and “glucose” variables; completing a level on a mobile game increases the “reward” variable; the “reward” variable increases the “dopamine” variable; the “glucose” variable decreases over time as the glucose is processed by the metabolism, etc.

Solutions within this paradigm consist of choosing a variable that can be modified most efficiently, and trying to modify it. We can try to increase the “willpower” by yelling at people to use their willpower, or increase “motivation” by giving them a supposedly motivational speech, we can give rewards or punishment, increase “evidence” by explaining how bad their situation is, and then we can increase “glucose” by giving them a cake, or increase “dopamine” by giving them a pill. The idea is that the change of the value will propagate through the system, and achieve the desired outcome.

From the perspective of contents, there are some beliefs or habits or behaviors or whatever, things that can have many shapes (instead of just one-dimensional value), and the precise shape is what determines the functionality. They are more like lines of code in an algorithm, or perhaps like switches; flipping the switch can redirect the existing energies from one pathway to another. A single update, such as “god does not exist”, can redirect a lot of existing motivations, and perceived rewards or punishments. A belief of “X helps to achieve Y” or “X does not help to achieve Y” can connect or disconnect the motivation to do X with the desirability of Y.

Solutions within this paradigm consist of debugging the mental contents, and finding the ones that should be changed, for example by reflecting on them, providing new evidence, experimenting with things. Unlike the energy model, which is supposed to be the same in general for all humans (like, maybe some people metabolize glucose faster than others, but the hypothesis “glucose causes willpower” is either true for everyone or false for everyone), the contents model requires custom-made solutions for everyone, because people have different beliefs, habits, and behaviors.

(Now I suspect that some readers are screaming “the first paradigm is called behaviorism, and the second paradigm is called psychoanalysis”. If that is your objection, then I’d like to remind you of CBT.)

Yesterday there was the article How You Can Gain Self Control Without “Self-Control”, whose theory seems mostly based on the energies model (variables: awareness, motivation, desires, pain tolerance, energy...), but then it suddenly provides an example based on a real person, whose mental content (“suffering is glorious”) simply flips the sign in the equation how two energies relate to each other, and anecdotally the outcomes are impressive.

I guess I am trying to say that if you overly focus on gradually increasing the right energies by training or pills, you might miss the actually high-impact interventions, because they will be out of your paradigm.

(But of course, if your energies are in disorder e.g. because you have a metabolic problem, fix that first, instead of looking for a clever insight or state of mind that would miraculously cure you.)

Becoming a monk isn’t as much of a reproductive dead end as you paint it here: yes, the individual won’t reproduce, but the individual wasn’t spontaneously created from a vacuum. The monk has siblings and cousins who will go on to reproduce, and in the context of a society that places high value on religion, the respect the monk’s family earns via the monk demonstrating such a costly sacrifice, will help the monk’s genes more than the would-be monk himself could do by trying to reproduce.

It’s important to view religion not as a result of our cognitive processes being insufficient to reject religion (which is nearly trivial for an intelligent being to do, by design), but as a result of evolution intentionally sabotaging our cognitive processes to better navigate social dynamics, and for this reason I take issue with this being used as an example to support the point you make with it.

I agree with the point of “belief in religion likely evolved for a purpose so it’s not that we’re intrinsically too dumb to reject them”, but I’m not sure of the reasoning in the previous paragraph. E.g. if religion in the hunter-gatherer period wasn’t already associated with celibacy, then it’s unlikely for this particular causality to have created an evolved “sacrifice your personal sexual success in exchange for furthering the success of your relatives” strategy in the brief period of time that celibacy happened to bring status. And the plentiful sex scandals associated with various organized religions don’t give any indication of religion and celibacy being intrinsically connected; in general, being high status seems to make men more rather than less interested in sex.

A stronger argument would be that regardless of how smart intellectual processes are, they generally don’t have “maximize genetic fitness” as their goal, so the monk’s behavior isn’t caused by the intellectual processes being particularly dumb… but then again, if those processes don’t directly care about fitness, then that just gives evolution another reason to have instincts sometimes override intellectual reasoning. So this example still seems to support Scott’s point of “if they had just listened to their reinforcement/instinctual processes instead of their intellectual/logical ones, they could have avoided that problem”.

But my point is that the process that led to them becoming monks was an instinctual process, not an intellectual one, and the “problem” isn’t actually one from the point of view of the genes.

Actually upon further thought, I disagree with Scott’s premise that this case allows for a meaningful distinction between “instinctual” and “intellectual” processes, so I guess I agree with you.

What’s this supposed to be estimating or predicting with Bayes here? The thing you’ll end up doing? Something like this?:

Each of the 3 processes has a general prior about how often they “win” (that add up to 100%, or maybe the basal ganglia normalizes them). And a Bayes factor, given the specific “sensory” inputs related to their specific process, while remaining agnostic about the options of the other processes. For example, the reinforcer would be thinking: “I get my way 30% of the time. Also, this level of desire to play the game is 2 times more frequent when I end up getting my way than when I don’t (regardless of which of the other 2 won, let’s assume, or I don’t know how to keep this modular).” Similarly, the first process would be looking at the level of laziness, and the last one at the strength of the arguments or something.

Then, the basal ganglia does bayes to update the priors given the 3 pieces of evidence, and gets to a posterior probability distribution among the 3 options.
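Spelled out as a Python sketch, under my own guesses about the details: each process does an odds-form update on "I win" vs "I don't" using only its own Bayes factor, and the basal ganglia simply renormalizes the three results. The 30% prior and the factor of 2 come from the reinforcer example above; the other priors and Bayes factors are hypothetical placeholders.

```python
def own_posterior(prior, bayes_factor):
    """Odds-form Bayes update for 'this process wins' vs 'it does not'."""
    odds = prior / (1.0 - prior) * bayes_factor
    return odds / (1.0 + odds)


def basal_ganglia(priors, bayes_factors):
    """Renormalize each process's own posterior so the three sum to 1."""
    raw = {name: own_posterior(priors[name], bayes_factors[name]) for name in priors}
    total = sum(raw.values())
    return {name: p / total for name, p in raw.items()}


priors = {"motionlessness": 0.2, "reinforcer": 0.3, "intellect": 0.5}
bayes_factors = {"motionlessness": 1.0, "reinforcer": 2.0, "intellect": 1.0}

print(round(own_posterior(0.3, 2.0), 4))  # 0.4615, i.e. 6/13
for name, p in basal_ganglia(priors, bayes_factors).items():
    print(name, round(p, 4))
```

The renormalization step is where the modularity worry shows up: the three separate updates are not guaranteed to be mutually consistent, so dividing by the total is a patch, not a derivation.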

And finally you’ll end up doing what was estimated because, well, the brain does what minimizes the prediction error. Is this the weird sense in which the info is mixed with bayes and this is all bayesian stuff?

I must be missing something. If this interpretation were correct, what would increasing the dopamine in, e.g., the frontal cortex be doing? Increasing the “unnormalized” prior for that process? (like, it falsely thinks it wins more often than it does, regardless of the evidence). Falsely biasing the Bayes factor? (like, it thinks it almost never happens that it feels this convinced of what should happen in the cases when it doesn’t end up winning.)

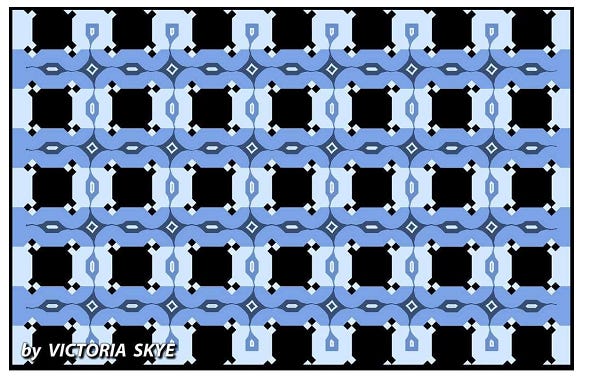

In fact, I can make myself perceive the dark blue lines as straight by holding the phone on which I read this article about a foot and a half from my face. I don’t know if this is partly because my contact lenses aren’t on at the moment. As I gradually move the screen away, the lines appear to rotate into a horizontal arrangement.

In general, I am more sympathetic to the idea that a well-conceived and consistently executed, theory-based approach to productivity hacks will yield fruit that an atheoretical haphazard approach can’t achieve, for the following reason: between the lack of incentives to help others be more productive, limited bandwidth to explore for ourselves, scientific inadequacies in aligning evidence with reason, and the constant improvements in technology, culture, and scientific understanding, there are a lot of new resources to draw upon.

This theory seems to explain all observations but I am not able to figure out what it doesn’t explain in day to day life.

Also, for the last picture, the key lies in looking straight at the grid and not the noise; then you can see the straight lines, although it takes a bit of practice to reduce your perception to that.

This reminds me of lukeprog’s post on motivation (I can’t seem to find it though...; this should suffice). Your model and TMT kind of describe the same thing: you have more willpower for things that are high value and high expectancy. And the impulsiveness factor is similar to how there are different “kinds” of evidence: eg you are more “impulsive” to play video games or not move, even though they aren’t high value+expectation logically.

How to Beat Procrastination and My Algorithm for Beating Procrastination