0th Person and 1st Person Logic

Truth values in classical logic have more than one interpretation.

In 0th Person Logic, the truth values are interpreted as True and False.

In 1st Person Logic, the truth values are interpreted as Here and Absent relative to the current reasoner.

Importantly, these are both useful modes of reasoning that can coexist in a logical embedded agent.

This idea is so simple, and has brought me so much clarity, that I cannot see how an adequate formal theory of anthropics could avoid it!

Crash Course in Semantics

First, let’s make sure we understand how to connect logic with meaning. Consider classical propositional logic. We set this up formally by defining terms, connectives, and rules for manipulation. Let’s consider one of these terms: P. What does this mean? Well, its meaning is not specified yet!

So how do we make it mean something? Of course, we could just say something like “P represents the statement that ‘a ball is red’”. But that’s a little unsatisfying, isn’t it? We’re just passing all the hard work of meaning to English.

So let’s imagine that we have to convey the meaning of P without using words. We might draw pictures in which a ball is red, and pictures in which there is not a red ball, and say that only the former are P. To be completely unambiguous, we would need to consider all the possible pictures, and point out which subset of them are P. For formalization purposes, we will say that this set is the meaning of P.

There’s much more that can be said about semantics (see, for example, the Highly Advanced Epistemology 101 for Beginners sequence), but this will suffice as a starting point for us.

0th Person Logic

Normally, we think of the meaning of P as independent of any observers. Sure, we’re the ones defining and using it, but it’s something everyone can agree on once the meaning has been established. Due to this independence from observers, I’ve termed this way of doing things 0th Person Logic (or 0P-logic).

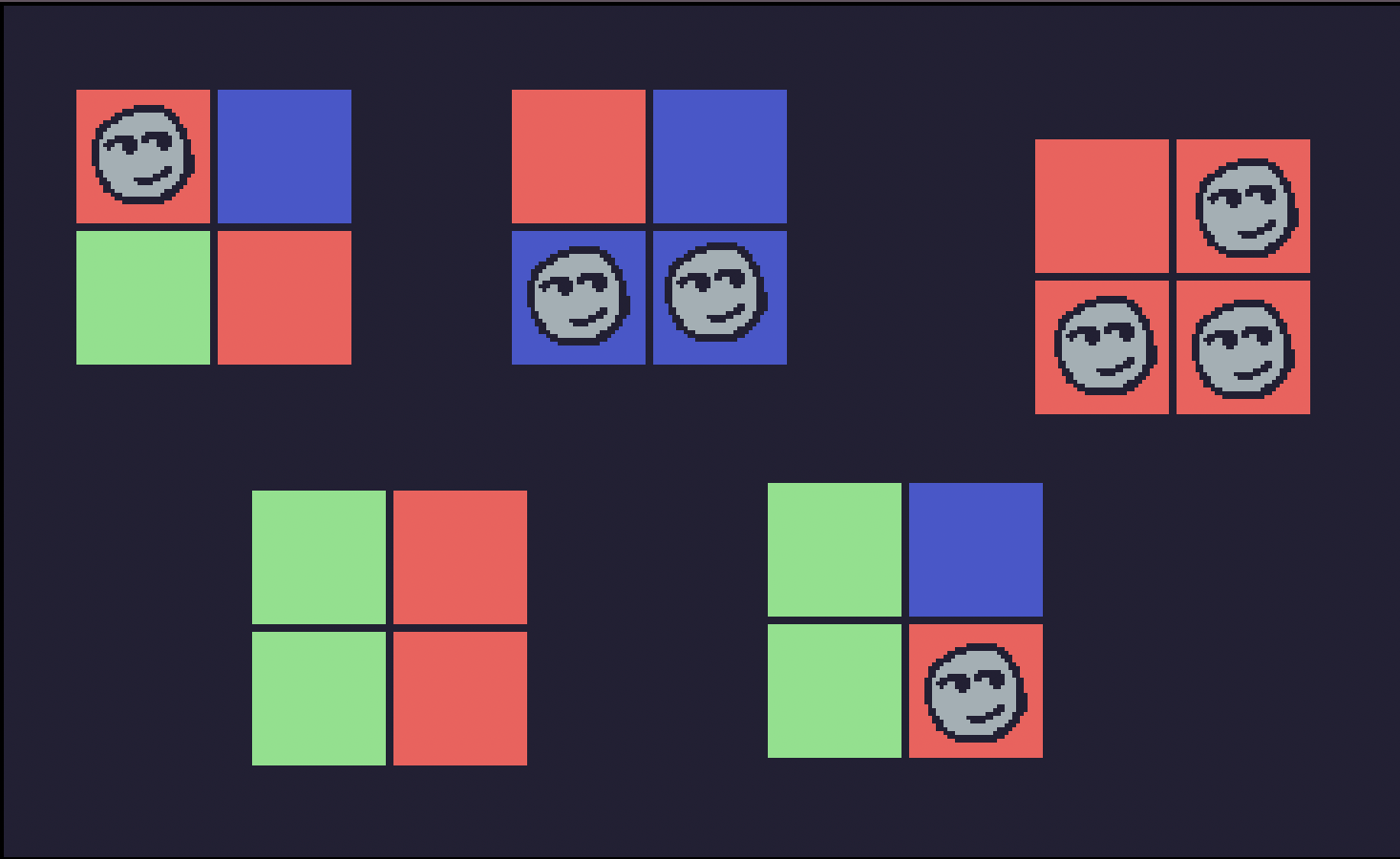

The elements of a meaning set I’ll call worlds in this case, since each element represents a particular specification of everything in the model. For example, say that we’re only considering states of tiles on a 2x2 grid. Then we could represent each world simply by taking a snapshot of the grid.

From logic, we also have two judgments. P is judged True for a world iff that world is in the meaning of P, and False if not. This judgment does not depend on who is observing it; all logical reasoners in the same world will agree.
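As a minimal sketch of this semantics, the 2x2 grid example can be written out in Python. The two tile colors, the name `judge_0p`, and the particular statements `P` and `Q` are assumptions made for illustration; the essay does not fix any of them.

```python
from itertools import product

# Assumption: each tile is either "red" or "blank". A world is a snapshot
# of all four tiles of the 2x2 grid, read row by row.
COLORS = ("red", "blank")
WORLDS = list(product(COLORS, repeat=4))  # 2**4 = 16 possible worlds


def meaning(predicate):
    """The meaning of a 0P-statement: the set of worlds in which it holds."""
    return frozenset(w for w in WORLDS if predicate(w))


def judge_0p(statement_meaning, world):
    """True iff the world is in the statement's meaning, otherwise False."""
    return world in statement_meaning


# Hypothetical statements: P = "the top-left tile is red",
# Q = "the bottom-right tile is red".
P = meaning(lambda w: w[0] == "red")
Q = meaning(lambda w: w[3] == "red")

# Connectives become set operations on meanings.
P_and_Q = P & Q
not_P = frozenset(WORLDS) - P

actual = ("red", "blank", "blank", "red")
print(judge_0p(P, actual), judge_0p(P_and_Q, actual), judge_0p(not_P, actual))
# prints: True True False
```

Note that `judge_0p` takes no observer argument: any reasoner handed the same world gets the same judgment.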

1st Person Logic

Now let’s consider an observer using logical reasoning. For metaphysical clarity, let’s have it be a simple, hand-coded robot. Fix a set of possible worlds, assign meanings to various symbols, and give it the ability to make, manipulate, and judge propositions built from these.

Let’s give our robot a sensor, one that detects red light. At first glance, this seems completely unproblematic within the framework of 0P-logic.

But consider a world in which there are three robots with red light sensors. How do we give the intuitive meaning of “my sensor sees red”? The obvious thing to try is to look at all the possible worlds, and pick out the ones where the robot’s sensor detects red light. There are three different ways to do this, one for each instance of the robot.

That’s not a problem if our robot knows which robot it is. But without sensory information, the robot has no way to know which one it is! There may be both robots which see a red signal and robots which do not, and nothing in 0P-logic can resolve this ambiguity for the robot, because the ambiguity remains even if the robot has pinpointed the exact world it’s in!

So statements like “my sensor sees red” aren’t actually picking out subsets of worlds like 0P-statements are. Instead, they’re picking out a different type of thing, which I’ll term an experience.[1] Each specific combination of possible sensor values constitutes a possible experience.

For the most part, experiences work in exactly the same way as worlds. We can assign meanings to statements like “my sensor sees red” by picking out subsets of experiences, just as before. It’s still appropriate to reason about these using logic. Semantically, we’re still just doing basic set operations—but now on sets of experiences instead of sets of worlds.

The crucial difference comes from how we interpret the “truth” values. P is judged Here for an experience iff that experience is in the meaning of P, and Absent if not. This judgment only applies to the robot currently doing the reasoning; even the same robot in the future may come to different judgments about whether P is Here. Therefore, I’ve termed this 1st Person Logic (or 1P-logic).
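The same set-based machinery can be sketched for experiences. Everything named below (the two sensors, `judge_1p`, the three robot labels) is a hypothetical choice for illustration. The point is that one fully specified world still yields different Here/Absent judgments for different robots.

```python
from itertools import product

# Assumption: each robot has two binary sensors, read as (red, bump).
# An experience is one specific combination of sensor values.
EXPERIENCES = list(product((False, True), repeat=2))


def meaning_1p(predicate):
    """The meaning of a 1P-statement: the set of experiences it picks out."""
    return frozenset(e for e in EXPERIENCES if predicate(e))


def judge_1p(statement_meaning, experience):
    """Here iff the current experience is in the meaning, otherwise Absent."""
    return "Here" if experience in statement_meaning else "Absent"


# "My sensor sees red" picks out the experiences whose red reading is on.
SEES_RED = meaning_1p(lambda e: e[0])

# One fully specified world containing three robots. The labels are 0P
# names used by the modeler; the robots themselves need not know them.
world = {
    "robot_a": (True, False),
    "robot_b": (False, False),
    "robot_c": (True, True),
}

judgments = {name: judge_1p(SEES_RED, exp) for name, exp in world.items()}
print(judgments)
# prints: {'robot_a': 'Here', 'robot_b': 'Absent', 'robot_c': 'Here'}
```

Each robot only ever calls `judge_1p` on its own current sensor values, so it never needs to know which key of `world` it is.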

We Can Use Both

In order to reason effectively about its own sensor signals, the robot needs 1P-logic.

In order to communicate effectively about the world with other agents, it needs 0P-logic, since 0P-statements are precisely the ones which are independent of the observer. This includes communicating with itself in the future, i.e. keeping track of external state.

Both modes of reasoning are useful and valid, and I think it’s clear that there’s no fundamental difficulty in building a robot that uses both 0P and 1P reasoning—we can just program it to have and use two logic systems like this. It’s hard to see how we could build an effective embedded agent that gets by without using them in some form.

While 0P-statements and 1P-statements have different types, that doesn’t mean they are separate magisteria or anything like that. From an experience, we learn something about the objective world. From a model of the world, we infer what sort of experiences are possible within it.[2]

As an example of the interplay between the 0P and 1P perspectives, consider adding a blue light sensor to our robot. The robot has a completely novel experience when it first gets activated! If its world model doesn’t account for that already, it will have to extend it somehow. As it explores the world, it will learn associations with this new sense, such as it being commonly present in the sky. And as it studies light further, it may realize there is an entire spectrum, and be able to design a new light sensor that detects green light. It will then anticipate another completely novel experience once it has attached the green sensor to itself and it has been activated.

This interplay allows for a richer sense of meaning than either perspective alone; blue is not just the output of an arbitrary new sensor, it is associated with particular things already present in the robot’s ontology.

Further Exploration

I hope this has persuaded you that the 0P and 1P distinction is a core concept in anthropics, one that will provide much clarity in future discussions and will hopefully lead to a full formalization of anthropics. I’ll finish by sketching some interesting directions it can be taken.

One important consequence is that it justifies having two separate kinds of Bayesian probabilities: 0P-probabilities over worlds, and 1P-probabilities over experiences. Since probability can be seen as an extension of propositional logic, it’s unavoidable to get both kinds if we accept these two kinds of logic. Additionally, we can see that our robot is capable of having both, with both 0P-probabilities and 1P-probabilities being subjective in the sense that they depend on the robot’s own best models and evidence.

From this, we get a nice potential explanation of the Sleeping Beauty paradox: 1⁄2 is the 0P-probability, and 1⁄3 is the 1P-probability (of slightly different statements: “the coin in fact landed heads”, “my sensors will see the heads side of the coin”). This could also explain why both intuitions are so strong.
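A rough sketch of where the two numbers can come from, using the standard setup (one awakening on heads, two on tails). The 1P calculation below assumes each awakening experience is weighted by the prior of the world it occurs in. That weighting is an assumption of this sketch, not something the 0P/1P framework forces, and it is exactly the step that the discussion below disputes.

```python
from fractions import Fraction

# 0P side: worlds are coin outcomes, with a fair-coin prior.
world_prior = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}

# Standard setup: one awakening on heads, two awakenings on tails.
awakenings = {"heads": ["Monday"], "tails": ["Monday", "Tuesday"]}

# 0P-probability of "the coin in fact landed heads".
p_0p_heads = world_prior["heads"]

# 1P side (ASSUMPTION): every awakening experience gets the prior weight
# of its world, and the weights are then normalized over all awakenings.
weights = {
    (coin, day): world_prior[coin]
    for coin, days in awakenings.items()
    for day in days
}
total = sum(weights.values())
p_1p_heads = sum(w for (coin, _), w in weights.items() if coin == "heads") / total

print(p_0p_heads, p_1p_heads)
# prints: 1/2 1/3
```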

It’s worth noting that no reference to preferences has yet been made. That’s interesting because it suggests that there are both 0P-preferences and 1P-preferences. That intuitively makes sense, since I do care about both the actual state of the world, and what kind of experiences I’m having.

Additionally, this gives a simple resolution to Mary’s Room. Mary has studied the qualia of ‘red’ all her life (gaining 0P-knowledge), but has never left her grayscale room. When she leaves it and sees red for the very first time, she does not gain any 0P-knowledge, but she does gain 1P-knowledge. Notice that there is no need to invoke anything epiphenomenal or otherwise non-material to explain this, as we do not need such things in order to construct a robot capable of reasoning with both 0P and 1P logic.[3]

Finally, this distinction may help clarify some confusing aspects of quantum mechanics (which was the original inspiration, actually). Born probabilities are 1P-probabilities, while the rest of quantum mechanics is a 0P-theory.

Special thanks to Alex Dewey, Nisan Stiennon and Claude 3 for their feedback on this essay, to Alexander Gietelink Oldenziel and Nisan for many insightful discussions while I was developing these ideas, and to Alex Zhu for his encouragement. In this age of AI text, I’ll also clarify that everything here was written by myself.

The idea of using these two different interpretations of logic together like this is original to me, as far as I am aware (Claude 3 said it thought so too FWIW). However, there have been similar ideas, for example Scott Garrabrant’s post about logical and indexical uncertainty, or Kaplan’s theory of indexicals.

[1] I’m calling these experiences because that is a word that mostly conveys the right intuition, but these are much more general than human experiences and apply equally well to a simple (non-AI) robot’s sensor values.

[2] More specifically, I expect there to be an adjoint functor pair of some sort between them (under the intuition that an adjoint functor pair gives you the “best” way to cast between different types).

[3] I’m not claiming that this explains qualia or even what they are, just that whatever they are, they are something on the 1P side of things.

I’m confused about why 1P-logic is needed. It seems to me like you could just have a variable X which tracks “which agent am I” and then you can express things like sensor_observes(X, red) or is_located_at(X, northwest). Here and Absent are merely a special case of True and False when the statement depends on X.

Because you don’t necessarily know which agent you are. If you could always point to yourself in the world uniquely, then sure, you wouldn’t need 1P-Logic. But in real life, all the information you learn about the world comes through your sensors. This is inherently ambiguous, since there’s no law that guarantees your sensor values are unique.

If you use X as a placeholder, the statement sensor_observes(X, red) can’t be judged as True or False unless you bind X to a quantifier. And this could not mean the thing you want it to mean (all robots would agree on the judgement, thus rendering it useless for distinguishing itself amongst them).

It almost works though, you just have to interpret “True” and “False” a bit differently!
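The point about binding X can be made concrete with a small sketch. The world contents and robot labels are hypothetical, and `sensor_observes` here is only a stand-in for the predicate named in the comment above.

```python
# Hypothetical world: three robots with 0P labels and their sensor readings.
world = {"r1": "red", "r2": "green", "r3": "red"}


def sensor_observes(world, x, color):
    """0P predicate: in this world, robot x's sensor reads the given color."""
    return world[x] == color


# Bound by a quantifier, the statement gets one truth value per world,
# and every robot reasoning about this world computes the same value.
exists_red = any(sensor_observes(world, x, "red") for x in world)
forall_red = all(sensor_observes(world, x, "red") for x in world)

# Left free, X has no single truth value: it depends on the binding,
# which is the information the robot may lack.
by_binding = {x: sensor_observes(world, x, "red") for x in world}

print(exists_red, forall_red, by_binding)
# prints: True False {'r1': True, 'r2': False, 'r3': True}
```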

How do you assign meaning to statements like “my sensor will see red”? (In the OP you mention “my sensors will see the heads side of the coin” but I’m not sure what your proposed semantics of such statements are in general.)

Also, here’s an old puzzle of mine that I wonder if your line of thinking can help with: At time 1 you will be copied and the original will be shown “O” and the copy will be shown “C”, then at time 2 the copy will be copied again, and the three of you will be shown “OO” (original), “CO” (original of copy), “CC” (copy of copy) respectively. At time 0, what are your probabilities for “I will see X” for each of the five possible values of X?

That’s a very good question! It’s definitely more complicated once you start including other observers (including future selves), and I don’t feel that I understand this as well.

But I think it works like this: other reasoners are modeled (0P) as using this same framework. The 0P model can then make predictions about the 1P judgements of these other reasoners. For something like anticipation, I think it will have to use memories of experiences (which are also experiences) and identify observers for which this memory corresponds to the current experience. Understanding this better would require being more precise about the interplay between 0P and 1P, I think.

(I’ll examine your puzzle when I have some time to think about it properly)

Defining the semantics and probabilities of anticipation seems to be a hard problem. You can see some past discussions of the difficulties at The Anthropic Trilemma and its back-references (posts that link to it). (I didn’t link to this earlier in case you already found a fresh approach that solved the problem. You may also want to consider not reading the previous discussions to avoid possibly falling into the same ruts.)

I have a solution that is completely underwhelming, but I can see no flaws in it, besides the complete lack of a definition of which part of the mental state should be preserved to still count as you, and its rejection of MWI (I also cannot see useful insights into why we have what looks like continuous subjective experience).

You can’t consistently assign probabilities for future observations in scenarios where you expect creation of multiple instances of your mental state. All instances exist and there are no counterfactual worlds where you end up as a mental state in a different location/time (as opposed to the one you happened to actually observe). You are here because your observations tell you that you are here, not because something intangible had moved from previous “you”(1) to the current “you” located here.

Born rule works because MWI is wrong. The collapse is objective and there’s no alternative yous.

(1) I use “you” in scare quotes to designate something beyond all information available in the mental state that presumably is unique and moves continuously (or jumps) through time.

Let’s iterate through questions of The Anthropic Trilemma.

The Boltzmann Brain problem: no probabilities, no updates. Observing either room doesn’t tell you anything about the value of the digit of pi. It tells you that you observe the room you observe.

Winning the lottery: there are no alternative quantum branches, so your machinations don’t change anything.

Personal future: Britney Spears observes that she has memories of Britney Spears, you observe that you have your memories. There are no alternative scenarios if you are defined just by the information in your mental state. If you jump off the cliff, you can expect that someone with a memory of deciding to jump off the cliff (as well as all your other memories) will hit the ground and there will be no continuation of this mental state in this time and place. And your memory tells you that it will be you who will experience the consequences of your decisions (whatever the underlying causes for that).

Probabilistic calculations of your future experiences work as expected, if you add “conditional on me experiencing staying here and now”.

It’s not unlike the “do(X=x)” operator in graphical models, which cuts off all other causal influences on X.

Expanding a bit on the topic.

Exhibit A: flip a fair coin and move a suspended robot into a green or red room using a second coin with probabilities (99%, 1%) for heads, and (1%, 99%) for tails.

Exhibit B: flip a fair coin and create 99 copies of the robot in green rooms and 1 copy in a red room for heads, and reverse colors otherwise.

What causes the robot to see red instead of green in exhibit A? Physical processes that brought about a world where the robot sees red.

What causes a robot to see red instead of green in exhibit B? The fact that it sees red, nothing more. The physical instance of the robot who sees red in one possible world could be the instance who sees green in another possible world, of course (physical causality surely is intact). But a robot-who-sees-red (that is, one of the instances who see red) cannot be made into a robot-who-sees-green by physical manipulations. That is, the subjective causality of seeing red is cut off from physical causes (in the case of multiple copies of an observer), and as such cannot be used as a basis for probabilistic judgements.

I guess that if I don’t see a resolution of the Anthropic Trilemma in the framework of MWI in about 10 years, I’ll be almost sure that MWI is wrong.

[Without having looked at the link in your response to my other comment, and I also stopped reading cubefox’s comment once it seemed that it was going in a similar direction. ETA: I realized after posting that I have seen that article before, but not recently.]

I’ll assume that the robot has a special “memory” sensor which stores the exact experience at the time of the previous tick. It will recognize future versions of itself by looking for agents in its (timeless) 0P model which have a memory of its current experience.

For p(“I will see O”), the robot will look in its 0P model for observers which have the t=0 experience in their immediate memory and, selecting from those, count how many have judged “I see O” as Here. There will be two such robots, the original and the copy at time 1, and only one of those sees O. So using a uniform prior (not forced by this framework), it would give a 0P probability of 1⁄2. Similarly for p(“I will see C”).

Then it would repeat the same process for t=1 and the copy. Conditioned on “I will see C” at t=1, it will conclude “I will see CO” with probability 1⁄2 by the same reasoning as above. So overall, it will assign: p(“I will see OO”) = 1⁄2, p(“I will see CO”) = 1⁄4, p(“I will see CC”) = 1⁄4.
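The procedure just described can be sketched in a few lines. The representation of observers as (memory, experience) pairs, the `START` label for the t=0 experience, and both function names are assumptions of this sketch. The uniform weighting over matching observers is the “uniform prior” mentioned above, which the framework does not force.

```python
from collections import Counter
from fractions import Fraction

START = "start"  # hypothetical label for the experience at t=0

# Observers in the timeless 0P model, as (immediate memory, experience).
observers = [
    (START, "O"), (START, "C"),              # time 1: original and copy
    ("O", "OO"), ("C", "CO"), ("C", "CC"),   # time 2: copy is copied again
]


def next_experience_probs(observers, current):
    """Uniform weighting over observers whose memory is `current`."""
    successors = [exp for mem, exp in observers if mem == current]
    counts = Counter(successors)
    return {exp: Fraction(n, len(successors)) for exp, n in counts.items()}


def two_step_probs(observers, start):
    """Chain the one-step rule twice to get time-2 probabilities."""
    result = {}
    for first, p1 in next_experience_probs(observers, start).items():
        for second, p2 in next_experience_probs(observers, first).items():
            result[second] = result.get(second, 0) + p1 * p2
    return result


t1 = next_experience_probs(observers, START)
t2 = two_step_probs(observers, START)
print(t1["O"], t1["C"], t2["OO"], t2["CO"], t2["CC"])
# prints: 1/2 1/2 1/2 1/4 1/4
```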

The semantics for these kinds of things is a bit confusing. I think that it starts from an experience (the experience at t=0) which I’ll call E. Then REALIZATION(E) casts E into a 0P sentence which gets taken as an axiom in the robot’s 0P theory.

A different robot could carry out the same reasoning, and reach the same conclusion since this is happening on the 0P side. But the semantics are not quite the same, since the REALIZATION(E) axiom is arbitrary to a different robot, and thus the reasoning doesn’t mean “I will see X” but instead means something more like “They will see X”. This suggests that there’s a more complex semantics that allows worlds and experiences to be combined—I need to think more about this to be sure what’s going on. Thus far, I still feel confident that the 0P/1P distinction is more fundamental than whatever the more complex semantics is.

(I call the 0P → 1P conversion SENSATIONS, and the 1P → 0P conversion REALIZATION, and think of them as being adjoints though I haven’t formalized this part well enough to feel confident that this is a good way to describe it: there’s a toy example here if you are interested in seeing how this might work.)

(1) If we look at the situation in 0P, the three versions of you at time 2 all seem equally real and equally you, yet in 1P you weigh the experiences of the future original twice as much as each of the copies.

(2) Suppose we change the setup slightly so that copying of the copy is done at time 1 instead of time 2. And at time 1 we show O to the original and C to the two copies, then at time 2 we show them OO, CO, CC like before. With this modified setup, your logic would conclude P(“I will see O”)=P(“I will see OO”)=P(“I will see CO”)=P(“I will see CC”)=1/3 and P(“I will see C”)=2/3. Right?

(3) Similarly, if we change the setup from the original so that no observation is made at time 1, the probabilities also become P(“I will see OO”)=P(“I will see CO”)=P(“I will see CC”)=1/3.

(4) Suppose we change the setup from the original so that at time 1, we make 999 copies of you instead of just 1 and show them all C before deleting all but 1 of the copies. Then your logic would imply P(“I will see C”)=0.999 and therefore P(“I will see CO”)=P(“I will see CC”)=0.4995, and P(“I will see O”)=P(“I will see OO”)=0.001.
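For the 999-copy variant, the arithmetic can be written out as follows. This assumes the same uniform weighting over the 1000 observers present at time 1 (one original and 999 copies), with the single surviving copy split into two at time 2.

```latex
\begin{aligned}
P(\text{C}) &= \tfrac{999}{1000} = 0.999, &
P(\text{O}) &= \tfrac{1}{1000} = 0.001, \\
P(\text{CO}) &= P(\text{C}) \cdot \tfrac{1}{2} = 0.4995, &
P(\text{CC}) &= P(\text{C}) \cdot \tfrac{1}{2} = 0.4995, \\
P(\text{OO}) &= P(\text{O}) \cdot 1 = 0.001.
\end{aligned}
```

The three time-2 probabilities sum to 0.4995 + 0.4995 + 0.001 = 1.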

This all makes me think there’s something wrong with the 1⁄2, 1⁄4, 1⁄4 answer and with the way you define probabilities of future experiences. More specifically, suppose OO wasn’t just two letters but an unpleasant experience, and CO and CC are both pleasant experiences, so you prefer “I will experience CO/CC” to “I will experience OO”. Then at time 0 you would be willing to pay to switch from the original setup to (2) or (3), and pay even more to switch to (4). But that seems pretty counterintuitive, i.e., why are you paying to avoid making observations in (3), or paying to make and delete copies of yourself in (4)? Both of these seem at best pointless in 0P.

But every other approach I’ve seen or thought of also has problems, so maybe we shouldn’t dismiss this one too easily based on these issues. I would be interested to see you work out everything more formally and address the above objections (to the extent possible).

Is the puzzle supposed to be agnostic to the specifics of copying?

It seems to me that if by copying we mean fission, where a person is separated into two, we have 1⁄2 for OO, 1⁄4 for CO and 1⁄4 for CC, while if by copying we mean “a clone of you is created” then the probability to observe OO at time 0 is 1, because there is no causal mechanism due to which you would swap bodies with a clone.

See also Infra-Bayesian physicalism, which aims to formulate ‘bridge rules’ that bridge between the 0P and 1P perspectives.

In the context of 0P vs 1P perspectives, I’ve always found Diffractor’s Many faces of Beliefs to be enticing. One day anthropics will be formulated in adjoint functors no doubt.

Frankly, I’m not sure whether the distinction between “worlds” and “experiences” is more useful or more harmful. There is definitely something that rings true about your post, but people have been misinterpreting all that in very silly ways for decades, and it seems that you are ready to go in the same direction, considering your mention of anthropics.

Mathematically, there are mutually exclusive outcomes which can be combined into events. It doesn’t matter whether these outcomes represent worlds or possible experiences in one world or whatever else—as long as they are truly mutually exclusive we can lawfully use probability theory. If they are not, then saying the phrase “1st person perspective” doesn’t suddenly allow us to use it.

We don’t, unless we can formally specify what “my” means. Until then we are talking about the truth of statements “Red light was observed” and “Red light was not observed”. And if our mathematical model doesn’t track any other information, then for the sake of this mathematical model all the robots that observe red are the same entity. The whole point of math is that it’s true not just for one specific person but for everyone satisfying the conditions. That’s what makes it useful.

Suppose I’m observing a dice roll and I wonder what is the probability that the result will be “4”. The mathematical model that tells me that it’s 1⁄6 also tells the same to you, or any other person. It tells the same fact about any other roll of any other dice with similar relevant properties.

I was worried that you would go there. There is only one lawful way to define probability in the Sleeping Beauty problem. The crux of disagreement between thirders and halfers is whether this awakening should be modeled as a random awakening among three equiprobable mutually exclusive outcomes: Heads&Monday, Tails&Monday and Tails&Tuesday. And there is one correct answer to it: no, it should not. We can formally prove that if a Tails&Monday awakening is always followed by a Tails&Tuesday awakening, then they are not mutually exclusive.

I’m still reading your Sleeping Beauty posts, so I can’t properly respond to all your points yet. I’ll say though that I don’t think the usefulness or validity of the 0P/1P idea hinges on whether it helps with anthropics or Sleeping Beauty (note that I marked the Sleeping Beauty idea as speculation).

This is frustrating because I’m trying hard here to specify exactly what I mean by the stuff I call “1st Person”. It’s a different interpretation of classical logic. The different interpretation refers to the use of sets of experiences vs the use of sets of worlds in the semantics. Within a particular interpretation, you can lawfully use all the same logic, math, probability, etc… because you’re just switching out which set you’re using for the semantics. What makes the interpretations different practically comes from wiring them up differently in the robot—is it reasoning about its world model or about its sensor values? It sounds like you think the 1P interpretation is superfluous, is that right?

Rephrasing it this way doesn’t change the fact that the observer has not yet been formally specified.

I agree that that is an important and useful aspect of what I would call 0P-mathematics. But I think it’s also useful to be able to build a robot that also has a mode of reasoning where it can reason about its sensor values in a straightforward way.

I agree. Or I’d even say that the usefulness and validity of the 0P/1P idea is inversely correlated with their applications to “anthropic reasoning”.

Yes, I see that and I’m sorry. This kind of warning isn’t aimed at you in particular, it’s a result of my personal pain at how people in general tend to misuse such ideas.

I’m not sure. It seems that one of them has to be reducible to the other, though probably in the opposite direction. Isn’t having a world model also a type of experience?

Like, consider two events: “one particular robot observes red” and “any robot observes red”. It seems that the first one is 1st person perspective, while the second is 0th person perspective in your terms. When a robot observes red with its own sensor it concludes that it in particular has observed red and deduces that it means that any robot has observed red. Observation leads to an update of a world model. But what if all robots had a synchronized sensor that triggered for everyone when any of them has observed red. Is it 1st person perspective now?

Probability theory describes the subjective credence of a person who observed a specific outcome from a set of possible outcomes. It’s about 1P in the sense that different people may have different possible outcomes and thus have different credence after an observation. But it’s also about 0P, because any person who observed the same outcome from the same set of possible outcomes should have the same credence.

I guess, I feel that the 0P, 1P distinction doesn’t really carve math by its joints. But I’ll have to think more about it.

It is if the robot has introspective abilities, which is not necessarily the case. But yes, it is generally possible to convert 0P statements to 1P statements and vice-versa. My claim is essentially that this is not an isomorphism.

The 1P semantics is a framework that can be used to design and reason about agents. Someone who thought of “you” as referring to something with a 1P perspective would want to think of it that way for those robots, but it wouldn’t be as helpful for the robots themselves to be designed this way if they worked like that.

I think this is wrong, and that there is a wholly 0P probability theory and a wholly 1P probability theory. Agents can have different 0P probabilities because they don’t necessarily have the same priors or models, or haven’t seen the same evidence (yes, seeing evidence would be a 1P event, but this can (imperfectly) be converted into a 0P statement, which would essentially be adding a new axiom to the 0P theory).

I disagree with the whole distinction. “My sensor” is indexical. By saying it from my own mouth, I effectively point at myself: “I’m talking about this guy here.” Also, your possible worlds are not connected to each other. “I” “could” stand up right now because the version of me that stands up would share a common past with the other versions, namely my present and my past, but you haven’t provided a common past between your possible worlds, so there is no question of the robots from different ones sharing an identity. As for picking out one robot from the others in the same world, you might equally wonder how we can refer to this or that inanimate object without confusing it with indistinguishable ones. The answer is the same: indexicals. We distinguish them by pointing at them. There is nothing special about the question of talking about ourselves. As for the sleeping beauty problem, it’s a matter of simple conditional probability: “when you wake up, what’s the probability?” 1⁄3. If you had asked, “when you wake up, what’s the probability of having woken up?” The answer would be one, even though you might never wake up. The fact that you are a (or “the”) conscious observer doesn’t matter. It works the same from the third person. If, instead of waking someone up, the scientist had planned to throw a rock on the ground, and the question were, “when the rock is thrown on the ground, what’s the probability of the coin having come up heads?” It would all work out the same. The answer would still be 1⁄3.

I would say that English uses indexicals to signify and say 1P sentences (probably with several exceptions, because English). Pointing to yourself doesn’t help specify your location from the 0P point of view because it’s referencing the thing it’s trying to identify. You can just use yourself as the reference point, but that’s exactly what the 1P perspective lets you do.

I’m not sure whether I agree with Epirito or Adele here. I feel confused and unclear about this whole discussion. But I would like to try to illustrate what I think Epirito is talking about, by modifying Adele’s image to have a robot with an arm and a speaker, capable of pointing at itself and saying something like ‘this robot that is speaking and that the speaking robot is pointing to, sees red’.

“it’s referencing the thing it’s trying to identify” I don’t understand why you think that fails. If I point at a rock, does the direction of my finger not privilege the rock I’m pointing at above all others? Even by looking at merely possible worlds from a disembodied perspective, you can still see a man pointing to a rock and know which rock he’s talking about. My understanding is that your 1p perspective concerns sense data, but I’m not talking about the appearance of a rock when I point at it. I’m talking about the rock itself. Even when I sense no rock I can still refer to a possible rock by saying “if there is a rock in front of me, I want you to pick it up.”

This approach implies there are two possible types of meanings: Sets of possible worlds and sets of possible experiences. A set of possible worlds would constitute truth conditions for “objective” statements about the external world, while a set of experience conditions would constitute verification conditions for subjective statements, i.e. statements about the current internal states of the agent.

However, it seems like a statement can mix both external and internal affairs, which would make the 0P/1P distinction problematic. Consider Wei Dai’s example of “I will see red”. It expresses a relation between the current agent (“I”) and its hypothetical “future self”. “I” is presumably an internal object, since the agent can refer to itself or its experiences independently of how the external world turns out to be constituted. The future agent, however, is an external object relative to the current agent which makes the statement. It must be external because its existence is uncertain to the present agent. Same for the future experience of red.

Then the statement “I will see red” could be formalized as follows, where i (“I”/”me”/”myself”) is an individual constant which refers to the present agent:

∃x(WillBecome(i,x)∧∃y(Red(y)∧Sees(x,y))).

Somewhat less formally: “There is an x such that I will become x and there is an experience of red y such that x sees y.”

(The quantifier is assumed to range over all objects irrespective of when they exist in time.)
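To make the quantifier structure concrete, here is a minimal sketch that evaluates the formula over a tiny finite model. The domain, the object names, and the facts in each relation are all made up for illustration; only the shape of the formula comes from the text above.

```python
# Toy finite model. Every object and fact below is an illustrative assumption.
domain = {"me_now", "me_later", "stranger", "red_patch", "blue_patch"}

will_become = {("me_now", "me_later")}   # WillBecome(a, b)
red = {"red_patch"}                       # Red(y)
sees = {("me_later", "red_patch"),        # Sees(x, y)
        ("stranger", "blue_patch")}


def i_will_see_red(i):
    """Evaluate  ∃x (WillBecome(i, x) ∧ ∃y (Red(y) ∧ Sees(x, y)))  in the toy model."""
    return any(
        (i, x) in will_become
        and any(y in red and (x, y) in sees for y in domain)
        for x in domain
    )


result_me = i_will_see_red("me_now")
result_stranger = i_will_see_red("stranger")
print(result_me, result_stranger)
```

The same formula gives different verdicts depending on which object the constant i picks out, which is the point about indexicals: the statement is only fixed once i is fixed.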

If there is a future object x and a future experience y that make this statement true, they would be external to the present agent who is making the statement. But i is internal to the present agent, as it is the (present) agent itself. (Consider Descartes’ demon currently misleading you about the existence of the external world. Even in that case you could be certain that you exist. So you aren’t something external.)

So Wei’s statement seems partially internal and partially external, and it is not clear whether its meaning can be either a set of experiences or a set of possible worlds on the 0P/1P theory. So it seems a unified account is needed.

Here is an alternative theory.

Assume the meaning of a statement is instead a set of experience/degree-of-confirmation pairs. That is, two statements have the same meaning if they get confirmed/disconfirmed to the same degree by every possible experience E. So a statement A has the same meaning as a statement B iff:

∀E(P(A∣E)=P(B∣E)),

where P(_∣_) is a probability function describing conditional beliefs. (See Yudkowsky’s anticipated experiences. Or Rudolf Carnap’s liberal verificationism, which considers degrees of confirmation instead of Wittgenstein’s strict verification.)
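As a rough illustration of the synonymity condition, the sketch below checks it for a finite list of experiences. The credence table, the experience labels, and the numbers are hypothetical; the theory itself quantifies over all possible experiences of the agent.

```python
from fractions import Fraction

# Hypothetical conditional credences P(statement | experience) of one agent.
credence = {
    "Bob is a bachelor":       {"see_ring": Fraction(1, 20), "see_no_ring": Fraction(3, 5)},
    "Bob is an unmarried man": {"see_ring": Fraction(1, 20), "see_no_ring": Fraction(3, 5)},
    "It's raining":            {"see_ring": Fraction(3, 10), "see_no_ring": Fraction(3, 10)},
}
experiences = ["see_ring", "see_no_ring"]


def synonymous(a, b):
    """True iff P(a | E) == P(b | E) for every listed experience E."""
    return all(credence[a][e] == credence[b][e] for e in experiences)


same = synonymous("Bob is a bachelor", "Bob is an unmarried man")
different = synonymous("Bob is a bachelor", "It's raining")
print(same, different)
```

Note that the check only compares the agent's own credences, so it is something the agent could in principle carry out internally.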

Now this arguably makes sense for statements about external affairs: If I make two statements, and I would regard them to be confirmed or disconfirmed to the same degree by the same evidence, that would plausibly mean I regard them as synonymous. And if two people disagree regarding the confirmation conditions of a statement, that would imply they don’t mean the same (or completely the same) thing when they express that statement, even if they use the same words.

It also makes sense for internal affairs. I make a statement about some internal affair, like “I see red”, formally Sees(i,r)∧Red(r). Here i refers to myself and r to my current experience of red. Then this is true iff there is some piece of evidence E which is equivalent to that internal statement, namely the experience that I see red. Then P(Sees(i,r)∧Red(r)∣E)=1 if E=Sees(i,r)∧Red(r), otherwise P(Sees(i,r)∧Red(r)∣E)=0.

Again, the “I” here is logically an individual constant i internal to the agent, likewise the experience r. That is, only my own experience verifies that statement. If there is another agent, who also sees red, those experiences are numerically different. There are two different constants i which refer to numerically different agents, and two constants r which refer to two different experiences.

That is even the case if the two agents are perfectly correlated, qualitatively identical doppelgangers with qualitatively identical experiences (on, say, some duplicate versions of Earth, far away from each other). If one agent stubs its toe, the other agent also stubs its toe, but the first agent only feels the pain caused by the first agent’s toe, while the second only feels the pain caused by the second agent’s toe, and neither feels the experience of the other. Their experiences are only qualitatively but not numerically identical. We are talking about two experiences here, as one could have occurred without the other. They are only contingently correlated.

Now then, what about the mixed case “I will see red”? We need an analysis here such that the confirming evidence is different for statements expressed by two different agents who both say “I will see red”. My statement ∃x(WillBecome(i,x)∧∃y(Red(y)∧Sees(x,y))) would be (now) confirmed, to some degree, by any evidence (experiences) suggesting that a) I will become some future person x such that b) that future person will see red. That is different from the internal “I see red” experience that this future person would have themselves.

An example. I may see a notice indicating that a red umbrella I ordered will arrive later today, which would confirm that I will see red. Seeing this notice would constitute such a confirming experience. Again, my perfect doppelganger on a perfect twin Earth would also see such a notice, but our experiences would not be numerically identical. Just like my doppelganger wouldn’t feel my pain when we both, synchronously, stub our toes. My experience of seeing the umbrella notice is caused (explained) by the umbrella notice here on Earth, not by the far away umbrella notice on twin Earth. When I say “this notice” I refer to the hypothetical object which causes my experience of a notice. So every instance of the indexical “this” involves reference to myself and to an experience I have. Both are internal, and thus numerically different even for agents with qualitatively identical experiences. So if we both say “This notice says I will see a red umbrella later today”, we would express different statements. Their meaning would be different.

In summary, I think this is a good alternative to the 0P/1P theory. It provides a unified account of meanings, and it correctly deals with distinct agents using indexicals while having qualitatively identical experiences. Because it has a unified account of meaning, it has no in-principle problem with “mixed” (internal/external) statements.

It does omit possible worlds. So one objection would be that it would assign the same meaning to two hypotheses which make distinct but (in principle) unverifiable predictions. Like, perhaps, two different interpretations of quantum mechanics. I would say that a) these theories may differ in other aspects which are subject to some possible degree of (dis)confirmation and b) if even such indirect empirical comparisons are excluded a priori, regarding them as synonymous doesn’t sound so bad.

The problem with using possible worlds to determine meanings is that you can always claim that the meaning of “The mome raths outgrabe” is the set of possible worlds where the mome raths outgrabe. Since possible worlds (unlike anticipated degrees of confirmation by different possible experiences) are objects external to an agent, there is no possibility of a decision procedure which determines that an expression is meaningless. Nor can there, with the possible worlds theory, be a decision procedure which determines that two expressions have the same or different meanings. It only says the meaning of “Bob is a bachelor” is determined by the possible worlds where Bob is a bachelor, and that the meaning of “Bob is an unmarried man” is determined by the worlds where Bob is an unmarried man, but it doesn’t say anything which would allow an agent to compare those meanings.

Where do these degrees-of-confirmation come from? I think part of the motivation for defining meaning in terms of possible worlds is that it allows us to compute conditional and unconditional probabilities, e.g., P(A|B) = P(A and B)/P(B) where P(B) is defined in terms of the set of possible worlds that B “means”. But with your proposed semantics, we can’t do that, so I don’t know where these probabilities are supposed to come from.

You can interpret them as subjective probability functions, where the conditional probability P(A|B) is the probability you currently expect for A under the assumption that you are certain that B. With the restriction that P(A and B)=P(A|B)P(B)=P(A)P(B|A).

I don’t think possible worlds help us to calculate either of the two values in the ratio P(A and B)/P(B). That would only be possible if you could say something about the share of possible worlds in which “A and B” is true, or “B”.

Like: “A and B” is true in 20% of all possible worlds, “B” is true in 50%, therefore “A” is true in 40% of the “B” worlds. So P(A|B)=0.4.

But that obviously doesn’t work. Each statement is true in infinitely many possible worlds and we have no idea how to count them to assign numbers like 20%.
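For contrast, here is what the counting approach looks like when the set of worlds is finite and explicitly listed. The ten “worlds” below are a made-up toy set chosen to reproduce the 20% and 50% figures; nothing like this listing is available for the infinite case.

```python
from fractions import Fraction

# Hypothetical finite set of ten "worlds"; each records whether A and B hold.
worlds = (
    [{"A": True, "B": True}] * 2
    + [{"A": False, "B": True}] * 3
    + [{"A": True, "B": False}] * 1
    + [{"A": False, "B": False}] * 4
)


def share(predicate):
    """Fraction of listed worlds in which the predicate holds."""
    return Fraction(sum(1 for w in worlds if predicate(w)), len(worlds))


p_b = share(lambda w: w["B"])                    # 5 of 10 worlds
p_a_and_b = share(lambda w: w["A"] and w["B"])   # 2 of 10 worlds
p_a_given_b = p_a_and_b / p_b                    # ratio definition of P(A|B)
print(p_b, p_a_and_b, p_a_given_b)
```

The division is the easy part. The objection is that `len(worlds)` and the counts have no obvious analogue once each statement is true in infinitely many worlds.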

Where do they come from or how are they computed? However that’s done, shouldn’t the meaning or semantics of A and B play some role in that? In other words, how do you think about P(A|B) without first knowing what A and B mean (in some non-circular sense)? I think this suggests that “the meaning of a statement is instead a set of experience/degree-of-confirmation pairs” can’t be right.

See What Are Probabilities, Anyway? for some ideas.

Yeah, this is a good point. The meaning of a statement is explained by experiences E, so the statement can’t be assumed from the outset to be a proposition (the meaning of a statement), as that would be circular. We have to assume that it is a potential utterance, something like a probabilistic disposition to assent to it. The synonymity condition can be clarified by writing the statements in quotation marks:

∀E(P("A"∣E)=P("B"∣E)).

Additionally the quantifier ranges only over experiences E, which can’t be any statements, but only potential experiences of the agent. Experiences are certain once you have them, while ordinary beliefs about external affairs are not.

By the way, the above is the synonymity condition which defines when two statements are synonymous or not. A somewhat awkward way to define the meaning of an individual statement would be as the equivalence class of all synonymous statements. But a more direct way to define the meaning of an individual statement would be to regard the meaning as the set of all pairwise odds ratios between the statement and any possible evidence. The odds ratio measures the degree of probabilistic dependence between two events, which accords with the Bayesian idea that evidence is basically just dependence.

Then one could define synonymity alternatively as the meanings of two statements, their odds ratio sets, being equal. The above definition of synonymity would then no longer be required. This would have the advantage that we don’t have to assign some mysterious unconditional value to P(“A”|E)=P(“A”) if we think A and E are independent. Because independence just means OddsRatio(“A”,E)=1.
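A small sketch of the odds-ratio idea, assuming the standard 2×2 odds ratio computed from the four joint probabilities of a statement A and an experience E. The function name and the example numbers are illustrative, not part of the proposal.

```python
from fractions import Fraction


def odds_ratio(p_a_e, p_a_not_e, p_not_a_e, p_not_a_not_e):
    """Standard 2x2 odds ratio from the four joint probabilities of A and E."""
    return (p_a_e * p_not_a_not_e) / (p_a_not_e * p_not_a_e)


# Independent case: P(A) = 1/2, P(E) = 1/4, so each joint is a product of marginals.
independent = odds_ratio(Fraction(1, 8), Fraction(3, 8), Fraction(1, 8), Fraction(3, 8))

# Dependent case (hypothetical numbers summing to 1): E makes A more likely.
dependent = odds_ratio(Fraction(3, 10), Fraction(1, 5), Fraction(1, 10), Fraction(2, 5))

print(independent, dependent)
```

In the independent case the ratio comes out as exactly 1 without ever having to interpret P(“A”) on its own, which is the advantage claimed above.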

Another interesting thing to note is that Yudkowsky sometimes seems to express his theory of “anticipated experiences” in the reverse of what I’ve done above. He seems to think of prediction instead of confirmation. That would reverse things:

∀E(P(E∣"A")=P(E∣"B")).

I don’t think it makes much of a difference, since probabilistic dependence is ultimately symmetric, i.e. OddsRatio(X,Y)=OddsRatio(Y,X).
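The symmetry can be read off directly, assuming the standard definition of the odds ratio in terms of joint probabilities (the definition is not spelled out above, so treat it as an assumption):

```latex
\mathrm{OddsRatio}(X,Y)
  = \frac{P(X \wedge Y)\, P(\neg X \wedge \neg Y)}
         {P(X \wedge \neg Y)\, P(\neg X \wedge Y)}
  = \frac{P(Y \wedge X)\, P(\neg Y \wedge \neg X)}
         {P(Y \wedge \neg X)\, P(\neg Y \wedge X)}
  = \mathrm{OddsRatio}(Y,X)
```

Swapping X and Y leaves the numerator unchanged and merely exchanges the two factors in the denominator.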

Maybe there is some other reason though to prefer the prediction approach over the confirmation approach. Like, for independence we would, instead of P(“A”|E)=P(“A”), have P(E|“A”)=P(E). The latter refers to the unconditional probability of an experience, which may be less problematic than relying on the unconditional probability of a statement.

And how does someone compute the degree to which they expect some experience to confirm a statement? I leave that outside the theory. The theory only says that what you mean with a statement is determined by what you expect to confirm or disconfirm it. I think that has a lot of plausibility once you think about synonymity. How could we say two different statements have different meanings when we regard them as empirically equivalent under any possible evidence?

The approach can be generalized to account for the meaning of sub-sentence terms, i.e. individual words. A standard solution is to say that two words are synonymous iff they can be substituted for each other in any statement without affecting the meaning of the whole statement. Then there are tautologies, which are independent of any evidence, so they would be synonymous according to the standard approach. I think we could say their meaning differs in the sense that the meanings of the individual words differ. For other sentence types, like commands, we could e.g. rely on evidence that the command is executed (instead of true, as with statements). An open problem is to account for the meaning of expressions that don’t have any obvious satisfaction conditions (like being true or executed), e.g. greetings.

Regarding “What Are Probabilities, Anyway?”. The problem you discuss there is how to define an objective notion of probability. Subjective probabilities are simple, they are just the degrees of belief of some agent at a particular point in time. But it is plausible that some subjective probability distributions are better than others, which suggests there is some objective, ideally rational probability distribution. It is unclear how to define such a thing, so this remains an open philosophical problem. But I think a theory of meaning works reasonably well with subjective probability.

Suppose I tell a stranger, “It’s raining.” Under possible worlds semantics, this seems pretty straightforward: I and the stranger share a similar map from sentences to sets of possible worlds, so with this sentence I’m trying to point them to a certain set of possible worlds that match the sentence, and telling them that I think the real world is in this set.

Can you tell a similar story of what I’m trying to do when I say something like this, under your proposed semantics?

I don’t think we should judge philosophical ideas in isolation, without considering what other ideas they’re compatible with and how well they fit together. So I think we should try to answer related questions like this, and look at the overall picture, instead of just saying “it’s outside the theory”.

No, in that post I also consider interpretations of probability where it’s subjective. I linked to that post mainly to show you some ideas for how to quantify sizes of sets of possible worlds, in response to your assertion that we don’t have any ideas for this. Maybe try re-reading it with this in mind?

So my conjecture of what happens here is: You and the stranger assume a similar degree of confirmation relation between the sentence “It’s raining” and possible experiences. For example, you both expect visual experiences of raindrops, when looking out of the window, to confirm the sentence pretty strongly. Or rain-like sounds on the roof. So with asserting this sentence you try to tell the stranger that you predict/expect certain forms of experiences, which presumably makes the stranger predict similar things (if they assume you are honest and well-informed).
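One way to sketch this conjecture numerically: hearing the assertion shifts the stranger’s credence in the sentence, and through the shared confirmation relation this shifts which experiences they anticipate. All numbers and names below are hypothetical, and the jump in credence after hearing the speaker is simply stipulated rather than derived.

```python
from fractions import Fraction

# Hypothetical shared expectations linking the sentence to an experience.
p_drops_given_rain = Fraction(19, 20)     # P(see raindrops | "It's raining")
p_drops_given_no_rain = Fraction(1, 20)   # P(see raindrops | not "It's raining")


def anticipated_raindrops(p_rain):
    """Law of total probability: how strongly raindrops are anticipated."""
    return p_drops_given_rain * p_rain + p_drops_given_no_rain * (1 - p_rain)


# Stipulated credences of the stranger before and after hearing an honest speaker.
before = anticipated_raindrops(Fraction(1, 5))
after = anticipated_raindrops(Fraction(9, 10))
print(before, after)
```

On this picture the communicated content is the change in anticipated experiences, with no set of external worlds appearing anywhere in the computation.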

The problem with agents mapping a sentence to certain possible worlds is that this mapping has to occur “in our head”, internally to the agent. But possible worlds / truth conditions are external, at least for sentences about the external world. We can only create a mapping between things we have access to. So it seems we cannot create such a mapping. It’s basically the same thing Nate Showell said in a neighboring comment.

(We could replace possible worlds / truth conditions themselves with other beliefs, presumably a disjunction of beliefs that are more specific than the original statement. Beliefs are internal, so a mapping is possible. But beliefs have content (i.e. meaning) themselves, just like statements. So how then to account for these meanings? To explain them with more beliefs would lead to an infinite regress. It all has to bottom out in experiences, which is something we simply have as a given. Or really any robot with sensory inputs, as Adele Lopez remarked.)

Okay, I admit I have a hard time understanding the post. To comment on the “mainstream view”:

(While I wouldn’t personally call this a way of “estimating the size” of sets of possible worlds,) I think this interpretation has some plausibility. And I guess it may be broadly compatible with the confirmation/prediction theory of meaning. This is speculative, but truth seems to be the “limit” of confirmation or prediction, something that is approached, in some sense, as the evidence gets stronger. And truth is about what the external world is like. Which is just a way of saying that there is some possible way the world is, which rules out other possible worlds.

Your counterargument against interpretation 1 seems to be that it is merely subjective and not objective, which is true. Though this doesn’t rule out the existence of some unknown rationality standards which restrict the admissible beliefs to something more objective.

Interpretation 2, I would argue, is confusing possibilities with indexicals. These are really different. A possible world is not a location in a large multiverse world. Me in a different possible world is still me, at least if not too dissimilar, but a doppelganger of me in this world is someone else, even if he is perfectly similar to me. (It seems trivially true to say that I could have had different desires, and consequently something else for dinner. If this is true, it is possible that I could have wanted something else for dinner. Which is another way of saying there is a possible world where I had a different preference for food. So this person in that possible world is me. But to say there are certain possible worlds is just a metaphysical-sounding way of saying that certain things are possible. Different counterfactual statements could be true of me, but I can’t exist at different locations. So indexical location is different from possible existence.)

I don’t quite understand interpretation 3. But interpretation 4 I understand even less. Beliefs are clearly different from desires. The desire that p is different from the belief that p. They can even be seen as opposites in terms of direction of fit. I don’t understand what you find plausible about this theory, but I also don’t know much about UDT.

Believing in 0P-preferences seems to be a map-territory confusion, an instance of the Tyranny of the Intentional Object. The robot can’t observe the grid in a way that isn’t mediated by its sensors. There’s no way for 0P-statements to enter into the robot’s decision loop, and accordingly act as something the robot can have preferences over, except by routing through 1P-statements. Instead of directly having a 0P-preference for “a square of the grid is red,” the robot would have to have a 1P-preference for “I believe that a square of the grid is red.”

It would be more precise to say the robot would prefer to get evidence which raises its degree of belief that a square of the grid is red.