Evolution of Modularity

This post is based on chapter 15 of Uri Alon’s book An Introduction to Systems Biology: Design Principles of Biological Circuits. See the book for more details and citations; see here for a review of most of the rest of the book.

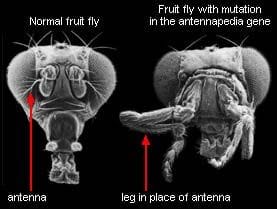

Fun fact: biological systems are highly modular, at multiple different scales. This can be quantified and verified statistically, e.g. by mapping out protein networks and algorithmically partitioning them into parts, then comparing the connectivity of the parts. It can also be seen more qualitatively in everyday biological work: proteins have subunits which retain their function when fused to other proteins, receptor circuits can be swapped out to make bacteria follow different chemical gradients, manipulating specific genes can turn a fly’s antennae into legs, organs perform specific functions, etc, etc.
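To make "partition the network, then compare the connectivity of the parts" concrete, here is a minimal sketch using Newman's modularity score Q, one common measure for this kind of analysis. Whether the book's analysis uses exactly this measure is an assumption here, and the toy graph and the names `modularity_q`, `edges`, `good_split` and `bad_split` are made up for illustration.

```python
from collections import defaultdict

def modularity_q(edges, module_of):
    """Newman's Q for an undirected, unweighted graph.

    edges: iterable of (u, v) pairs; module_of: dict mapping node -> module label.
    For each module, take the fraction of edges that fall inside it and subtract
    the squared fraction of edge endpoints it holds (the chance expectation).
    """
    edges = list(edges)
    m = len(edges)
    if m == 0:
        raise ValueError("graph has no edges")
    inside = defaultdict(int)  # edges with both endpoints in the module
    degree = defaultdict(int)  # summed degree of the module's nodes
    for u, v in edges:
        degree[module_of[u]] += 1
        degree[module_of[v]] += 1
        if module_of[u] == module_of[v]:
            inside[module_of[u]] += 1
    return sum(inside[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)

# Toy network (hypothetical): two triangles joined by a single edge.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
good_split = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
bad_split = {0: "A", 1: "B", 2: "A", 3: "B", 4: "A", 5: "B"}

print(round(modularity_q(edges, good_split), 3))  # about 0.357
print(round(modularity_q(edges, bad_split), 3))   # about -0.214
```

A partition that follows the real structure scores well above zero, while an arbitrary one scores at or below zero. Comparing the best achievable score against randomized networks is the statistical check.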

On the other hand, systems designed by genetic algorithms (aka simulated evolution) are decidedly not modular. This can also be quantified and verified statistically. Qualitatively, examining the outputs of genetic algorithms confirms the statistics: they’re a mess.

So: what is the difference between real-world biological evolution and typical genetic algorithms that leads one to produce modular designs and the other to produce non-modular designs?

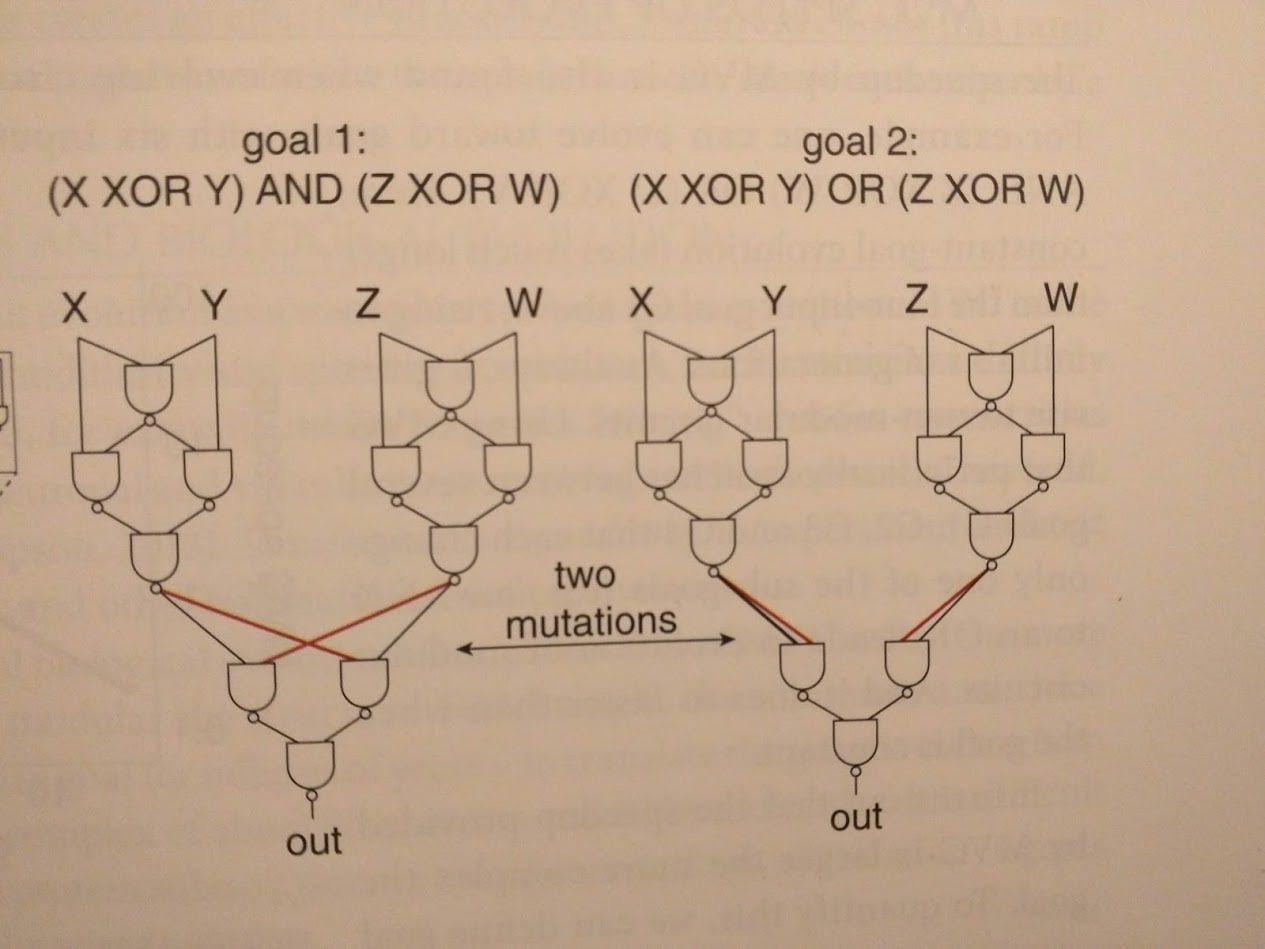

Kashtan & Alon tackle the problem by evolving logic circuits under various conditions. They confirm that simply optimizing the circuit to compute a particular function, with random inputs used for selection, results in highly non-modular circuits. However, they are able to obtain modular circuits using “modularly varying goals” (MVG).

The idea is to change the reward function every so often (the authors switch it out every 20 generations). Of course, if we just use completely random reward functions, then evolution doesn’t learn anything. Instead, we use “modularly varying” goal functions: we only swap one or two little pieces in the (modular) objective function. An example from the book:

The upshot is that our different goal functions generally use similar sub-functions—suggesting that they share sub-goals for evolution to learn. Sure enough, circuits evolved using MVG have modular structure, reflecting the modular structure of the goals.
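As a rough sketch of what "modularly varying" means in code: the two goals below share the same XOR sub-functions and differ only in how the results are combined. This particular pair of goals, the four-input setup, and the names `goal_and`, `goal_or`, `goal_for_generation` and `fitness` are assumptions chosen for illustration. Only the 20-generation switching period comes from the text above.

```python
from itertools import product

def xor(a, b):
    return a != b

# Two hypothetical goals built from the same sub-functions (x XOR y, z XOR w).
# Only the top-level combiner differs: AND versus OR.
def goal_and(x, y, z, w):
    return xor(x, y) and xor(z, w)

def goal_or(x, y, z, w):
    return xor(x, y) or xor(z, w)

GOALS = [goal_and, goal_or]

def goal_for_generation(generation, period=20):
    """Return the active goal, cycling to the next one every `period` generations."""
    return GOALS[(generation // period) % len(GOALS)]

def fitness(circuit, goal):
    """Fraction of all 16 input combinations on which the circuit matches the goal."""
    inputs = list(product([False, True], repeat=4))
    hits = sum(circuit(*bits) == goal(*bits) for bits in inputs)
    return hits / len(inputs)

print(goal_for_generation(19).__name__)  # goal_and
print(goal_for_generation(20).__name__)  # goal_or
print(fitness(goal_and, goal_or))        # 0.5
```

A circuit that perfectly solves one goal still scores 0.5 on the other, so each switch creates real selection pressure. But the XOR sub-circuits stay useful across both goals, so only the final gate needs to change.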

(Interestingly, MVG also dramatically accelerates evolution—circuits reach a given performance level much faster under MVG than under a fixed goal, despite needing to change behavior every 20 generations. See either the book or the paper for more on that.)

How realistic is MVG as a model for biological evolution? I haven’t seen quantitative evidence, but qualitative evidence is easy to spot. MVG as a theory of biological modularity predicts that highly variable subgoals will result in modular structure, whereas static subgoals will result in a non-modular mess. Alon’s book gives several examples:

- Chemotaxis: different bacteria need to pursue/avoid different chemicals, with different computational needs and different speed/energy trade-offs, in various combinations. The result is modularity: separate components for sensing, processing and motion.

- Animals need to breathe, eat, move, and reproduce. A new environment might have different food or require different motions, independent of respiration or reproduction (or vice versa). Since these requirements vary more-or-less independently in the environment, animals evolve modular systems to deal with them: digestive tract, lungs, etc.

- Ribosomes, as an anti-example: the functional requirements of a ribosome hardly vary at all, so they end up non-modular. They have pieces, but most pieces do not have an obvious distinct function.

To sum it up: modularity in the system evolves to match modularity in the environment.

I liked this post for talking about how evolution produces modularity (contrary to what is often said in this community!). This is something I suspected myself but it’s nice to see it explained clearly, with backing evidence.

Coming back to this post, I have some thoughts related to it that connect this more directly to AI Alignment that I want to write up, and that I think make this post more important than I initially thought. Hence nominating it for the review.

I’m curious to hear these thoughts.