Alignment By Default

Suppose AI continues on its current trajectory: deep learning continues to get better as we throw more data and compute at it, researchers keep trying random architectures and using whatever seems to work well in practice. Do we end up with aligned AI “by default”?

I think there’s at least a plausible trajectory in which the answer is “yes”. Not very likely—I’d put it at ~10% chance—but plausible. In fact, there’s at least an argument to be made that alignment-by-default is more likely to work than many fancy alignment proposals, including IRL variants and HCH-family methods.

This post presents the rough models and arguments.

I’ll break it down into two main pieces:

Will a sufficiently powerful unsupervised learner “learn human values”? What does that even mean?

Will a supervised/reinforcement learner end up aligned to human values, given a bunch of data/feedback on what humans want?

Ultimately, we’ll consider a semi-supervised/transfer-learning style approach, where we first do some unsupervised learning and hopefully “learn human values” before starting the supervised/reinforcement part.

As background, I will assume you’ve read some of the core material about human values from the sequences, including Hidden Complexity of Wishes, Value is Fragile, and Thou Art Godshatter.

Unsupervised: Pointing to Values

In this section, we’ll talk about why an unsupervised learner might not “learn human values”. Since an unsupervised learner is generally just optimized for predictive power, we’ll start by asking whether theoretical algorithms with best-possible predictive power (i.e. Bayesian updates on low-level physics models) “learn human values”, and what that even means. Then, we’ll circle back to more realistic algorithms.

Consider a low-level physical model of some humans—e.g. a model which simulates every molecule comprising the humans. Does this model “know human values”? In one sense, yes: the low-level model has everything there is to know about human values embedded within it, in exactly the same way that human values are embedded in physical humans. It has “learned human values”, in a sense sufficient to predict any real-world observations involving human values.

But it seems like there’s a sense in which such a model does not “know” human values. Specifically, although human values are embedded in the low-level model, the embedding itself is nontrivial. Even if we have the whole low-level model, we still need that embedding in order to “point to” human values specifically—e.g. to use them as an optimization target. Indeed, when we say “point to human values”, what we mean is basically “specify the embedding”. (Side note: treating human values as an optimization target is not the only use-case for “pointing to human values”, and we still need to point to human values even if we’re not explicitly optimizing for anything. But that’s a separate discussion, and imagining using values as an optimization target is useful to give a mental image of what we mean by “pointing”.)

In short: predictive power alone is not sufficient to define human values. The missing part is the embedding of values within the model. The hard part is pointing to the thing (i.e. specifying the values-embedding), not learning the thing (i.e. finding a model in which values are embedded).

Finally, here’s a different angle on the same argument which will probably drive some of the philosophers up in arms: any model of the real world with sufficiently high general predictive power will have a model of human values embedded within it. After all, it has to predict the parts of the world in which human values are embedded in the first place—i.e. the parts of which humans are composed, the parts on which human values are implemented. So in principle, it doesn’t even matter what kind of model we use or how it’s represented; as long the predictive power is good enough, values will be embedded in there, and the main problem will be finding the embedding.

Unsupervised: Natural Abstractions

In this section, we’ll talk about how and why a large class of unsupervised methods might “learn the embedding” of human values, in a useful sense.

First, notice that basically everything from the previous section still holds if we replace the phrase “human values” with “trees”. A low-level physical model of a forest has everything there is to know about trees embedded within it, in exactly the same way that trees are embedded in the physical forest. However, while there are trees embedded in the low-level model, the embedding itself is nontrivial. Predictive power alone is not sufficient to define trees; the missing part is the embedding of trees within the model.

More generally, whenever we have some high-level abstract object (i.e. higher-level than quantum fields), like trees or human values, a low-level model might have the object embedded within it but not “know” the embedding.

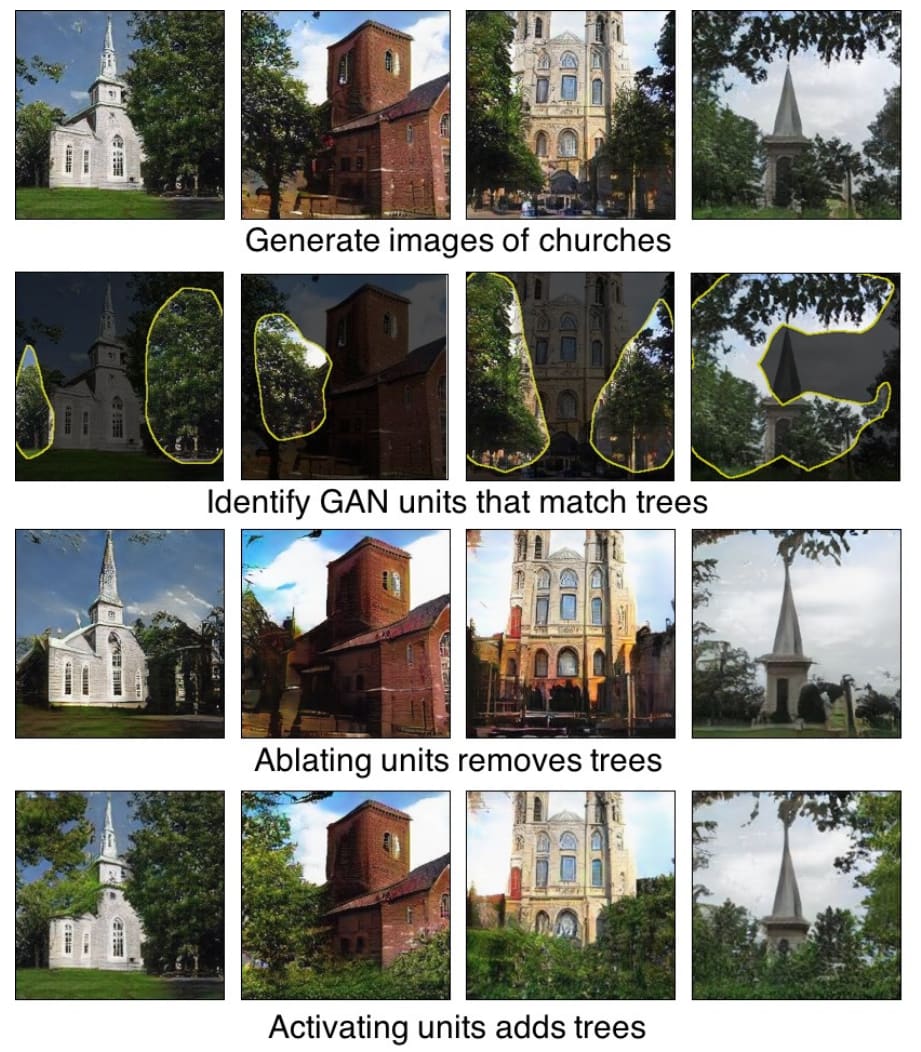

Now for the interesting part: empirically, we have whole classes of neural networks in which concepts like “tree” have simple, identifiable embeddings. These are unsupervised systems, trained for predictive power, yet they apparently “learn the tree-embedding” in the sense that the embedding is simple: it’s just the activation of a particular neuron, a particular channel, or a specific direction in the activation-space of a few neurons.

What’s going on here? We know that models optimized for predictive power will not have trivial tree-embeddings in general; low-level physics simulations demonstrate that much. Yet these neural networks do end up with trivial tree-embeddings, so presumably some special properties of the systems make this happen. But those properties can’t be that special, because we see the same thing for a reasonable variety of different architectures, datasets, etc.

Here’s what I think is happening: “tree” is a natural abstraction. More on what that means here, but briefly: abstractions summarize information which is relevant far away. When we summarize a bunch of atoms as “a tree”, we’re throwing away lots of information about the exact positions of molecules/cells within the tree, or about the pattern of bark on the tree’s surface. But information like the exact positions of molecules within the tree is irrelevant to things far away—that signal is all wiped out by the noise of air molecules between the tree and the observer. The flap of a butterfly’s wings may alter the trajectory of a hurricane, but unless we know how all wings of all butterflies are flapping, that tiny signal is wiped out by noise for purposes of our own predictions. Most information is irrelevant to things far away, not in the sense that there’s no causal connection, but in the sense that the signal is wiped out by noise in other unobserved variables.

If a concept is a natural abstraction, that means that the concept summarizes all the information which is relevant to anything far away, and isn’t too sensitive to the exact notion of “far away” involved. That’s what I think is going on with “tree”.

Getting back to neural networks: it’s easy to see why a broad range of architectures would end up “using” natural abstractions internally. Because the abstraction summarizes information which is relevant far away, it allows the system to make far-away predictions without passing around massive amounts of information all the time. In a low-level physics model, we don’t need abstractions because we do pass around massive amounts of information all the time, but real systems won’t have anywhere near that capacity any time soon. So for the foreseeable future, we should expect to see real systems with strong predictive power using natural abstractions internally.

With all that in mind, it’s time to drop the tree-metaphor and come back to human values. Are human values a natural abstraction?

If you’ve read Value is Fragile or Godshatter, then there’s probably a knee-jerk reaction to say “no”. Human values are basically a bunch of randomly-generated heuristics which proved useful for genetic fitness; why would they be a “natural” abstraction? But remember, the same can be said of trees. Trees are a complicated pile of organic spaghetti code, but “tree” is still a natural abstraction, because the concept summarizes all the information from that organic spaghetti pile which is relevant to things far away. In particular, it summarizes anything about one tree which is relevant to far-away trees.

Similarly, the concept of “human” summarizes all the information about one human which is relevant to far-away humans. It’s a natural abstraction.

Now, I don’t think “human values” are a natural abstraction in exactly the same way as “tree”—specifically, trees are abstract objects, whereas human values are properties of certain abstract objects (namely humans). That said, I think it’s pretty obvious that “human” is a natural abstraction in the same way as “tree”, and I expect that humans “have values” in roughly the same way that trees “have branching patterns”. Specifically, the natural abstraction contains a bunch of information, that information approximately factors into subcomponents (including “branching pattern”), and “human values” is one of those information-subcomponents for humans.

I wouldn’t put super-high confidence on all of this, but given the remarkable track record of hackish systems learning natural abstractions in practice, I’d give maybe a 70% chance that a broad class of systems (including neural networks) trained for predictive power end up with a simple embedding of human values. A plurality of my uncertainty is on how to think about properties of natural abstractions. A significant chunk of uncertainty is also on the possibility that natural abstraction is the wrong way to think about the topic altogether, although in that case I’d still assign a reasonable chance that neural networks end up with simple embeddings of human values—after all, no matter how we frame it, they definitely have trivial embeddings of many other complicated high-level objects.

Aside: Microscope AI

Microscope AI is about studying the structure of trained neural networks, and trying to directly understand their learned internal algorithms, models and concepts. In light of the previous section, there’s an obvious path to alignment where there turns out to be a few neurons (or at least some simple embedding) which correspond to human values, we use the tools of microscope AI to find that embedding, and just like that the alignment problem is basically solved.

Of course it’s unlikely to be that simple in practice, even assuming a simple embedding of human values. I don’t expect the embedding to be quite as simple as one neuron activation, and it might not be easy to recognize even if it were. Part of the problem is that we don’t even know the type signature of the thing we’re looking for—in other words, there are unanswered fundamental conceptual questions here, which make me less-than-confident that we’d be able to recognize the embedding even if it were right under our noses.

That said, this still seems like a reasonably-plausible outcome, and it’s an approach which is particularly well-suited to benefit from marginal theoretical progress.

One thing to keep in mind: this is still only about aligning one AI; success doesn’t necessarily mean a future in which more advanced AIs remain aligned. More on that later.

Supervised/Reinforcement: Proxy Problems

Suppose we collect some kind of data on what humans want, and train a system on that. The exact data and type of learning doesn’t really matter here; the relevant point is that any data-collection process is always, no matter what, at best a proxy for actual human values. That’s a problem, because Goodhart’s Law plus Hidden Complexity of Wishes. You’ve probably heard this a hundred times already, so I won’t belabor it.

Here’s the interesting possibility: assume the data is crap. It’s so noisy that, even though the data-collection process is just a proxy for real values, the data is consistent with real human values. Visually:

At first glance, this isn’t much of an improvement. Sure, the data is consistent with human values, but it’s consistent with a bunch of other possibilities too—including the real data-collection process (which is exactly the proxy we wanted to avoid in the first place).

But now suppose we do some transfer learning. We start with a trained unsupervised learner, which already has a simple embedding of human values (we hope). We give our supervised learner access to that system during training. Because the unsupervised learner has a simple embedding of human values, the supervised learner can easily score well by directly using that embedded human values model. So, we cross our fingers and hope the supervised learner just directly uses that embedded human values model, and the data is noisy enough that it never “figures out” that it can get better performance by directly modelling the data-collection process instead.

In other words: the system uses an actual model of human values as a proxy for our proxy of human values.

This requires hitting a window—our data needs to be good enough that the system can tell it should use human values as a proxy, but bad enough that the system can’t figure out the specifics of the data-collection process enough to model it directly. This window may not even exist.

(Side note: we can easily adjust this whole story to a situation where we’re training for some task other than “satisfy human values”. In that case, the system would use the actual model of human values to model the Hidden Complexity of whatever task it’s training on.)

Of course in practice, the vast majority of the things people use as objectives for training AI probably wouldn’t work at all. I expect that they usually look like this:

In other words, most objectives are so bad that even a little bit of data is enough to distinguish the proxy from real human values. But if we assume that there’s some try-it-and-see going on, i.e. people try training on various objectives and keep the AIs which seem to do roughly what the humans want, then it’s maybe plausible that we end up iterating our way to training objectives which “work”. That’s assuming things don’t go irreversibly wrong before then—including not just hostile takeover, but even just development of deceptive behavior, since this scenario does not have any built-in mechanism to detect deception.

Overall, I’d give maybe a 10-20% chance of alignment by this path, assuming that the unsupervised system does end up with a simple embedding of human values. The main failure mode I’d expect, assuming we get the chance to iterate, is deception—not necessarily “intentional” deception, just the system being optimized to look like it’s working the way we want rather than actually working the way we want. It’s the proxy problem again, but this time at the level of humans-trying-things-and-seeing-if-they-work, rather than explicit training objectives.

Alignment in the Long Run

So far, we’ve only talked about one AI ending up aligned, or a handful ending up aligned at one particular time. However, that isn’t really the ultimate goal of AI alignment research. What we really want is for AI to remain aligned in the long run, as we (and AIs themselves) continue to build new and more powerful systems and/or scale up existing systems over time.

I know of two main ways to go from aligning one AI to long-term alignment:

Make the alignment method/theory very reliable and robust to scale, so we can continue to use it over time as AI advances.

Align one roughly-human-level-or-smarter AI, then use that AI to come up with better alignment methods/theories.

The alignment-by-default path relies on the latter. Even assuming alignment happens by default, it is unlikely to be highly reliable or robust to scale.

That’s scary. We’d be trusting the AI to align future AIs, without having any sure-fire way to know that the AI is itself aligned. (If we did have a sure-fire way to tell, then that would itself be most of a solution to the alignment problem.)

That said, there’s a bright side: when alignment-by-default works, it’s a best-case scenario. The AI has a basically-correct model of human values, and is pursuing those values. Contrast this to things like IRL variants, which at best learn a utility function which approximates human values (which are probably not themselves a utility function). Or the HCH family of methods, which at best mimic a human with a massive hierarchical bureaucracy at their command, and certainly won’t be any more aligned than that human+bureaucracy would be.

To the extent that alignment of the successor system is limited by alignment of the parent system, that makes alignment-by-default potentially a more promising prospect than IRL or HCH. In particular, it seems plausible that imperfect alignment gets amplified into worse-and-worse alignment as systems design their successors. For instance, a system which tries to look like it’s doing what humans want rather than actually doing what humans want will design a successor which has even better human-deception capabilities. That sort of problem makes “perfect” alignment—i.e. an AI actually pointed at a basically-correct model of human values—qualitatively safer than a system which only manages to be not-instantly-disastrous.

(Side note: this isn’t the only reason why “basically perfect” alignment matters, but I do think it’s the most relevant such argument for one-time alignment/short-term term methods, especially on not-very-superhuman AI.)

In short: when alignment-by-default works, we can use the system to design a successor without worrying about amplification of alignment errors. However, we wouldn’t be able to tell for sure whether alignment-by-default had worked or not, and it’s still possible that the AI would make plain old mistakes in designing its successor.

Conclusion

Let’s recap the bold points:

A low-level model of some humans has everything there is to know about human values embedded within it, in exactly the same way that human values are embedded in physical humans. The embedding, however, is nontrivial. Thus...

Predictive power alone is not sufficient to define human values. The missing part is the embedding of values within the model. However…

This also applies if we replace the phrase “human values” with “trees”. Yet we have a whole class of neural networks in which a simple embedding lights up in response to trees. Why?

Trees are a natural abstraction, and we should expect to see real systems trained for predictive power use natural abstractions internally.

Human values are a little different from trees (they’re a property of an abstract object rather than an abstract object themselves), but I still expect that a broad class of systems trained for predictive power will end up with simple embeddings of human values (~70% chance).

Because the unsupervised learner has a simple embedding of human values, a supervised/reinforcement learner can easily score well on values-proxy-tasks by directly using that model of human values. In other words, the system uses an actual model of human values as a proxy for our proxy of human values (~10-20% chance).

When alignment-by-default works, it’s basically a best-case scenario, so we can safely use the system to design a successor without worrying about amplification of alignment errors (among other things).

Overall, I only give this whole path ~10% chance of working in the short term, and maybe half that in the long term. However, if amplification of alignment errors turns out to be a major limiting factor for long-term alignment, then alignment-by-default is plausibly more likely to work than approaches in the IRL or HCH families.

The limiting factor here is mainly identifying the (probably simple) embedding of human values within a learned model, so microscope AI and general theory development are both good ways to improve the outlook. Also, in the event that we are able to identify a simple embedding of human values in a learned model, it would be useful to have a way to translate that embedding into new systems, in order to align successors.

- Shallow review of live agendas in alignment & safety by (Nov 27, 2023, 11:10 AM; 348 points)

- Why Agent Foundations? An Overly Abstract Explanation by (Mar 25, 2022, 11:17 PM; 302 points)

- Natural Abstractions: Key claims, Theorems, and Critiques by (Mar 16, 2023, 4:37 PM; 241 points)

- “Sharp Left Turn” discourse: An opinionated review by (Jan 28, 2025, 6:47 PM; 205 points)

- Shallow review of technical AI safety, 2024 by (Dec 29, 2024, 12:01 PM; 185 points)

- The Plan − 2023 Version by (Dec 29, 2023, 11:34 PM; 152 points)

- Ten Levels of AI Alignment Difficulty by (Jul 3, 2023, 8:20 PM; 131 points)

- Book Launch: “The Carving of Reality,” Best of LessWrong vol. III by (Aug 16, 2023, 11:52 PM; 131 points)

- Niceness is unnatural by (Oct 13, 2022, 1:30 AM; 130 points)

- Voting Results for the 2020 Review by (Feb 2, 2022, 6:37 PM; 108 points)

- Prizes for the 2020 Review by (Feb 20, 2022, 9:07 PM; 94 points)

- Research agenda: Formalizing abstractions of computations by (Feb 2, 2023, 4:29 AM; 93 points)

- Charting the precipice: The time of perils and prioritizing x-risk by (EA Forum; Oct 24, 2023, 4:25 PM; 86 points)

- Shah and Yudkowsky on alignment failures by (Feb 28, 2022, 7:18 PM; 85 points)

- Thoughts on the Alignment Implications of Scaling Language Models by (Jun 2, 2021, 9:32 PM; 82 points)

- Shallow review of live agendas in alignment & safety by (EA Forum; Nov 27, 2023, 11:33 AM; 76 points)

- 2020 Review Article by (Jan 14, 2022, 4:58 AM; 74 points)

- The Crux List by (May 31, 2023, 6:30 PM; 72 points)

- Consequentialism & corrigibility by (Dec 14, 2021, 1:23 PM; 70 points)

- LOVE in a simbox is all you need by (Sep 28, 2022, 6:25 PM; 66 points)

- Convince me that humanity *isn’t* doomed by AGI by (Apr 15, 2022, 5:26 PM; 60 points)

- AI #3 by (Mar 9, 2023, 12:20 PM; 55 points)

- Can we get an AI to “do our alignment homework for us”? by (Feb 26, 2024, 7:56 AM; 53 points)

- Analysis of Global AI Governance Strategies by (Dec 4, 2024, 10:45 AM; 49 points)

- On the lethality of biased human reward ratings by (Nov 17, 2023, 6:59 PM; 48 points)

- [Appendix] Natural Abstractions: Key Claims, Theorems, and Critiques by (Mar 16, 2023, 4:38 PM; 48 points)

- Hypothesis: gradient descent prefers general circuits by (Feb 8, 2022, 9:12 PM; 46 points)

- How difficult is AI Alignment? by (Sep 13, 2024, 3:47 PM; 44 points)

- Definitions of “objective” should be Probable and Predictive by (Jan 6, 2023, 3:40 PM; 43 points)

- Drug addicts and deceptively aligned agents—a comparative analysis by (Nov 5, 2021, 9:42 PM; 42 points)

- Technical AI Safety Research Landscape [Slides] by (Sep 18, 2023, 1:56 PM; 41 points)

- Shah and Yudkowsky on alignment failures by (EA Forum; Feb 28, 2022, 7:25 PM; 38 points)

- Eliciting Latent Knowledge Via Hypothetical Sensors by (Dec 30, 2021, 3:53 PM; 38 points)

- AISafety.info: What is the “natural abstractions hypothesis”? by (Oct 5, 2024, 12:31 PM; 38 points)

- Blood Is Thicker Than Water 🐬 by (Sep 28, 2021, 3:21 AM; 37 points)

- Selection processes for subagents by (Jun 30, 2022, 11:57 PM; 36 points)

- Intermittent Distillations #2 by (Apr 14, 2021, 6:47 AM; 32 points)

- Red teaming: challenges and research directions by (May 10, 2023, 1:40 AM; 31 points)

- Technical AI Safety Research Landscape [Slides] by (EA Forum; Sep 18, 2023, 1:56 PM; 30 points)

- If Wentworth is right about natural abstractions, it would be bad for alignment by (Dec 8, 2022, 3:19 PM; 29 points)

- Introduction to inaccessible information by (Dec 9, 2021, 1:28 AM; 27 points)

- Reward model hacking as a challenge for reward learning by (Apr 12, 2022, 9:39 AM; 25 points)

- [AN #113]: Checking the ethical intuitions of large language models by (Aug 19, 2020, 5:10 PM; 23 points)

- My Alignment Timeline by (Jul 3, 2023, 1:04 AM; 22 points)

- Niceness is unnatural by (EA Forum; Oct 13, 2022, 1:30 AM; 20 points)

- 's comment on Accurate Models of AI Risk Are Hyperexistential Exfohazards by (Dec 25, 2022, 5:27 PM; 19 points)

- How Interpretability can be Impactful by (Jul 18, 2022, 12:06 AM; 18 points)

- Naturalism and AI alignment by (EA Forum; Apr 24, 2021, 4:20 PM; 17 points)

- 's comment on There’s no such thing as a tree (phylogenetically) by (May 4, 2021, 2:51 PM; 17 points)

- 's comment on But What’s Your *New Alignment Insight,* out of a Future-Textbook Paragraph? by (May 7, 2022, 7:38 AM; 16 points)

- Why should we *not* put effort into AI safety research? by (EA Forum; May 16, 2021, 5:11 AM; 15 points)

- Simulators Increase the Likelihood of Alignment by Default by (Apr 30, 2023, 4:32 PM; 13 points)

- [DISC] Are Values Robust? by (Dec 21, 2022, 1:00 AM; 12 points)

- 's comment on The Future Fund’s Project Ideas Competition by (EA Forum; Mar 2, 2022, 7:01 AM; 11 points)

- 's comment on Are there alternative to solving value transfer and extrapolation? by (Dec 6, 2021, 8:06 PM; 11 points)

- 's comment on What is the most effective way to donate to AGI XRisk mitigation? by (May 31, 2021, 11:20 AM; 11 points)

- Normie response to Normie AI Safety Skepticism by (Feb 27, 2023, 1:54 PM; 10 points)

- 's comment on What do coherence arguments actually prove about agentic behavior? by (Jun 4, 2024, 11:13 PM; 8 points)

- Sufficiently many Godzillas as an alignment strategy by (Aug 28, 2022, 12:08 AM; 8 points)

- 's comment on Various Alignment Strategies (and how likely they are to work) by (May 3, 2022, 5:33 PM; 7 points)

- 's comment on Externalized reasoning oversight: a research direction for language model alignment by (Aug 5, 2022, 12:32 AM; 5 points)

- 's comment on How are you dealing with ontology identification? by (Oct 5, 2022, 6:09 PM; 5 points)

- Naturalism and AI alignment by (Apr 24, 2021, 4:16 PM; 5 points)

- [DISC] Are Values Robust? by (EA Forum; Dec 21, 2022, 1:13 AM; 4 points)

- 's comment on Why GPT wants to mesa-optimize & how we might change this by (Sep 23, 2020, 8:05 AM; 4 points)

- 's comment on Selection Theorems: A Program For Understanding Agents by (Oct 10, 2021, 1:06 PM; 4 points)

- 's comment on If Wentworth is right about natural abstractions, it would be bad for alignment by (Dec 16, 2022, 11:49 PM; 4 points)

- 's comment on Is recursive self-alignment possible? by (Jan 3, 2023, 9:30 AM; 4 points)

- 's comment on A tale of 2.5 orthogonality theses by (EA Forum; May 9, 2022, 9:57 AM; 3 points)

- Notes on the importance and implementation of safety-first cognitive architectures for AI by (May 11, 2023, 10:03 AM; 3 points)

- 's comment on Jeremy Gillen’s Shortform by (Oct 19, 2022, 4:14 PM; 3 points)

- 's comment on Learning human preferences: optimistic and pessimistic scenarios by (Aug 18, 2020, 2:46 PM; 2 points)

- 's comment on Book Review: The Structure Of Scientific Revolutions by (Jan 11, 2021, 10:32 PM; 2 points)

- 's comment on jsd’s Shortform by (Apr 15, 2022, 9:47 PM; 2 points)

- 's comment on How to store human values on a computer by (Nov 6, 2022, 11:21 AM; 1 point)

- 's comment on Tagging Open Call / Discussion Thread by (Aug 16, 2020, 4:23 PM; 1 point)

I’ll set aside what happens “by default” and focus on the interesting technical question of whether this post is describing a possible straightforward-ish path to aligned superintelligent AGI.

The background idea is “natural abstractions”. This is basically a claim that, when you use an unsupervised world-model-building learning algorithm, its latent space tends to systematically learn some patterns rather than others. Different learning algorithms will converge on similar learned patterns, because those learned patterns are a property of the world, not an idiosyncrasy of the learning algorithm. For example: Both human brains and ConvNets seem to have a “tree” abstraction; neither human brains nor ConvNets seem to have a “head or thumb but not any other body part” concept.

I kind of agree with this. I would say that the patterns are a joint property of the world and an inductive bias. I think the relevant inductive biases in this case are something like: (1) “patterns tend to recur”, (2) “patterns tend to be localized in space and time”, and (3) “patterns are frequently composed of multiple other patterns, which are near to each other in space and/or time”, and maybe other things. The human brain definitely is wired up to find patterns with those properties, and ConvNets to a lesser extent. These inductive biases are evidently very useful, and I find it very likely that future learning algorithms will share those biases, even more than today’s learning algorithms. So I’m basically on board with the idea that there may be plenty of overlap between the world-models of various different unsupervised world-model-building learning algorithms, one of which is the brain.

(I would also add that I would expect “natural abstractions” to be a matter of degree, not binary. We can, after all, form the concept “head or thumb but not any other body part”. It would just be extremely low on the list of things that would pop into our head when trying to make sense of something we’re looking at. Whereas a “prominent” concept like “tree” would pop into our head immediately, if it were compatible with the data. I think I can imagine a continuum of concepts spanning the two. I’m not sure if John would agree.)

Next, John suggests that “human values” may be such a “natural abstraction”, such that “human values” may wind up a “prominent” member of an AI’s latent space, so to speak. Then when the algorithms get a few labeled examples of things that are or aren’t “human values”, they will pattern-match them to the existing “human values” concept. By the same token, let’s say you’re with someone who doesn’t speak your language, but they call for your attention and point to two power outlets in succession. You can bet that they’re trying to bring your attention to the prominent / natural concept of “power outlets”, not the un-prominent / unnatural concept of “places that one should avoid touching with a screwdriver”.

Do I agree? Well, “human values” is a tricky term. Maybe I would split it up. One thing is “Human values as defined and understood by an ideal philosopher after The Long Reflection”. This is evidently not much of a “natural abstraction”, at least in the sense that, if I saw ten examples of that thing, I wouldn’t even know it. I just have no idea what that thing is, concretely.

Another thing is “Human values as people use the term”. In this case, we don’t even need the natural abstraction hypothesis! We can just ensure that the unsupervised world-modeler incorporates human language data in its model. Then it would have seen people use the phrase “human values”, and built corresponding concepts. And moreover, we don’t even necessarily need to go hunting around in the world-model to find that concept, or to give labeled examples. We can just utter the words “human values”, and see what neurons light up! I mean, sure, it probably wouldn’t work! But the labeled examples thing probably wouldn’t work either!

Unfortunately, “Human values as people use the term” is a horrific mess of contradictory and incoherent things. An AI that maximizes “‘human values’ as those words are used in the average YouTube video” does not sound to me like an AI that I want to live with. I would expect lots of performative displays of virtue and in-group signaling, little or no making-the-world-a-better-place.

In any case, it seems to me that the big kernel of truth in this post is that we can and should think of future AGI motivations systems as intimately involving abstract concepts, and that in particular we can and should take advantage of safety-advancing abstract concepts like “I am advancing human flourishing”, “I am trying to do what my programmer wants me to try to do”, “I am following human norms”, or whatever. In fact I have a post advocating that just a few days ago, and think of that kind of thing as a central ingredient in all the AGI safety stories that I find most plausible.

Beyond that kernel of truth, I think a lot more work, beyond what’s written in the post, would be needed to build a good system that actually does something we want. In particular, I think we have much more work to do on choosing and pointing to the right concepts (cf. “first-person problem”), detecting when concepts break down because we’re out of distribution (cf. “model splintering”), sandbox testing protocols, and so on. The post says 10% chance that things work out, which seems much too high to me. But more importantly, if things work out along these lines, I think it would be because people figured out all those things I mentioned, by trial-and-error, during slow takeoff. Well in that case, I say: let’s just figure those things out right now!

I’m fairly confident that the inputs to human values are natural abstractions—i.e. the “things we care about” are things like trees, cars, other humans, etc, not low-level quantum fields or “head or thumb but not any other body part”. (The “head or thumb” thing is a great example, by the way). I’m much less confident that human values themselves are a natural abstraction, for exactly the same reasons you gave.

In this post, the author describes a pathway by which AI alignment can succeed even without special research effort. The specific claim that this can happen “by default” is not very important, IMO (the author himself only assigns 10% probability to this). On the other hand, viewed as a technique that can be deliberately used to help with alignment, this pathway is very interesting.

The author’s argument can be summarized as follows:

For anyone trying to predict events happening on Earth, the concept of “human values” is a “natural abstraction”, i.e. something that has to be a part of any model that’s not too computationally expensive (so that it doesn’t bypass the abstraction by anything like accurate simulation of human brains).

Therefore, unsupervised learning will produce models in which human values are embedded in some simple way (e.g. a small set of neurons in an ANN).

Therefore, if supervised learning is given the unsupervised model as a starting point, it is fairly likely to converge to true human values even from a noisy and biased proxy.

[EDIT: John pointed out that I misunderstood his argument: he didn’t intend to say that human values are a natural abstraction, but only that their inputs are natural abstractions. The following discussion still applies.]

The way I see it, this argument has learning-theoretic justification even without appealing to anything we know about ANNs (and therefore without assuming the AI in question is an ANN). Consider the following model: an AI receives a sequence of observations that it has to make predictions about. It also receives labels, but these are sparse: it is only given a label once in a while. If the description complexity of the true label function is high, the sample complexity of learning to predict labels via a straightforward approach (i.e. without assuming a relationship between the dynamics and the label function) is also high. However, if the relative description complexity of the label function w.r.t. the dynamics producing the observations is low, then we can use the abundance of observations to achieve lower effective sample complexity. I’m confident that this can be made rigorous.

Therefore, we can recast the thesis of this post as follows: Unsupervised learning of processes happening on Earth, for which we have plenty of data, can reduce the size of the dataset required to learn human values, or allow better generalization from a dataset of the same size.

One problem the author doesn’t talk about here is daemons / inner misalignment[1]. In the comment section, the author writes:

This might or might not be a fair description of inner misalignment in the sense of Hubinger et al. However, this is definitely not a fair description of the daemonic attack vectors in general. The potential for malign hypotheses (learning of hypotheses / models containing malign subagents) exists in any learning system, and in particular malign simulation hypotheses are a serious concern.

Relatedly, the author is too optimistic (IMO) in his comparison of this technique to alternatives:

This sounds to me like a biased perspective resulting from looking for flaws in other approaches harder than flaws in this approach. Natural abstractions potentially lower the sample complexity of learning human values, but they cannot lower it to zero. We still need some data to learn from and some model relating this data to human values, and this model can suffer from the usual problems. In particular, the unsupervised learning phase does little to inoculate us from malign simulation hypotheses that can systematically produce catastrophically erroneous generalization.

If IRL variants learn a utility function while human values are not a utility function, then avoiding this problem requires identifying the correct type signature of human values[2], in this approach as well. Regarding HCH, Human + “bureaucracy” might or might not be aligned, depending on how we organize the “bureaucracy” (see also). If HCH can fail in some subtle way (e.g. systems of humans are misaligned to individual humans), then similar failure modes might affect this approach as well (e.g. what if “Molochian” values are also a natural abstraction).

In summary, I found this post quite insightful and important, if somewhat too optimistic.

I am slightly wary of use the term “inner alignment” since Hubinger uses it in a very specific way I’m not sure I entirely understand. Therefore, I am more comfortable with “daemons” although the two have a lot of overlap.

E.g. IB physicalism proposes a type signature for “physicalist values” which might or might not be applicable to humans.

One subtlety which approximately 100% of people I’ve talked to about this post apparently missed: I am pretty confident that the inputs to human values are natural abstractions, i.e. we care about things like trees, cars, humans, etc, not about quantum fields or random subsets of atoms. I am much less confident that “human values” themselves are natural abstractions; values vary a lot more across cultures than e.g. agreement on “trees” as a natural category.

In the particular section you quoted, I’m explicitly comparing the best-case of abstraction by default to the the other two strategies, assuming that the other two work out about-as-well as they could realistically be expected to work. For instance, learning a human utility function is usually a built-in assumption of IRL formulations, so such formulations can’t do any better than a utility function approximation even in the best case. Alignment by default does not need to assume humans have a utility function; it just needs whatever-humans-do-have to have low marginal complexity in a system which has learned lots of natural abstractions.

Obviously alignment by default has analogous assumptions/flaws; much of the OP is spent discussing them. The particular section you quote was just talking about the best-case where those assumptions work out well.

I partially agree with this, though I do think there are good arguments that malign simulation issues will not be a big deal (or to the extent that they are, they’ll look more like Dr Nefarious than pure inner daemons), and by historical accident those arguments have not been circulated in this community to nearly the same extent as the arguments that malign simulations will be a big deal. Some time in the next few weeks I plan to write a review of The Solomonoff Prior Is Malign which will talk about one such argument.

That’s fair, but it’s still perfectly in line with the learning-theoretic perspective: human values are simpler to express through the features acquired by unsupervised learning than through the raw data, which translates to a reduction in sample complexity.

This seems wrong to me. If you do IRL with the correct type signature for human values then in the best case you get the true human values. IRL is not mutually exclusive with your approach: e.g. you can do unsupervised learning and IRL with shared weights. I guess you might be defining “IRL” as something very narrow, whereas I define it “any method based on revealed preferences”.

Malign simulation hypotheses already look like “Dr. Nefarious” where the role of Dr. Nefarious is played by the masters of the simulation, so I’m not sure what exactly is the distinction you’re drawing here.

Yup, that’s right. I still agree with your general understanding, just wanted to clarify the subtlety.

Yup, I agree with all that. I was specifically talking about IRL approaches which try to learn a utility function, not the more general possibility space.

The distinction there is about whether or not there’s an actual agent in the external environment which coordinates acausally with the malign inner agent, or some structure in the environment which allows for self-fulfilling prophecies, or something along those lines. The point is that there has to be some structure in the external environment which allows a malign inner agent to gain influence over time by making accurate predictions. Otherwise, the inner agent will only have whatever limited influence it has from the prior, and every time it deviates from its actual best predictions (or is just out-predicted by some other model), some of that influence will be irreversibly spent; it will end up with zero influence in the long run.

Of course, but this in itself is no consolation, because it can spend its finite influence to make the AI perform an irreversible catastrophic action: for example, self-modifying into something explicitly malign.

In e.g. IDA-type protocols you can defend by using a good prior (such as IB physicalism) plus confidence thresholds (i.e. every time the hypotheses have a major disagreement you query the user). You also have to do something about non-Cartesian attack vectors (I have some ideas), but that doesn’t depend much on the protocol.

In value learning things are worse, because of the possibility of corruption (i.e. the AI hacking the user or its own input channels). As a consequence, it is no longer clear you can infer the correct values even if you make correct predictions about everything observable. Protocols based on extrapolating from observables to unobservables fail, because malign hypotheses can attack the extrapolation with impunity (e.g. a malign hypothesis can assign some kind of “Truman show” interpretation to the behavior of the user, where the user’s true values are completely alien and they are just pretending to be human because of the circumstances of the simulation).

It’s up.