0: Navigation

I’m aiming for a reader who knows what “prediction markets” and “adverse selection” are, who likes the first and not the second, and who enjoys systems that have neatly aligned incentives. For a primer on prediction markets, read Scott Alexander’s FAQ. If you’re already familiar with Manifold Love, skip to section 2.

COI: I’ve done some work for, might do some more work for, and own a tiny bit of equity in Manifold. I’m writing this independent of any work I’m currently doing or planning to do for Manifold or for any other entity. I just think the ideas are cool.

1: Love

Manifold, a play-money prediction market platform, recently released a prediction market dating app called “Manifold Love.” Users — those seeking love — sign up and fill out their profile like a regular dating app. Matchmakers — some of whom are users of the app themselves — pair users up. After a matchmaker makes a match, prediction markets are automatically created on various benchmarks of the pair’s (potential) relationship.

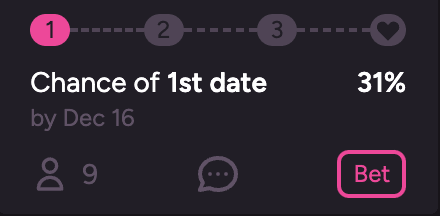

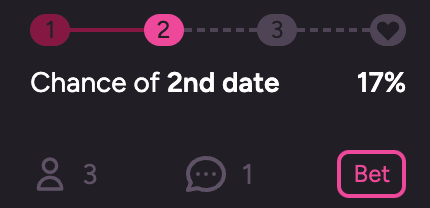

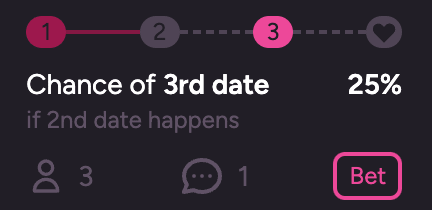

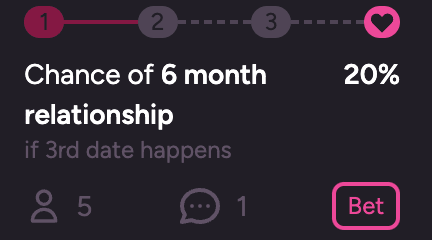

Screenshots of some markets on Manifold Love.

People make bets (using play-money) on whether the pair will go on a first date; conditional on that first date happening, a second date; conditional on the second date happening, a third; conditional on the third date happening, a 6 month relationship. The idea is that you should go on dates with matches who have the highest chance of leading to subsequent dates, and eventually turning into a relationship. Instead of guessing who that’ll be, you can just check the prediction markets.

2: Generalizing

Importantly, Manifold Love’s setup isn’t limited to solving problems in the dating market. It solves (or a least, has the potential to solve) problems in all scenarios in which there’s two-sided adverse selection, where each side of a two-sided market selects for something disfavorable by the other side’s lights. In the words of Groucho Marx, “I don’t want to belong to any [country] club that would accept me as a member.” If a club wants Groucho Marx, it’s probably not a very good club, and vice versa — if Groucho Marx wants to be in a particular club, he’s probably not a very desirable member.

More generally, the setup of “run a bunch of conditional prediction markets on a bunch of key benchmarks for potential pairs between two sides that are normally caught in adverse selection” seems like it could work pretty well.

One of the classic cases of two-sided adverse selection is the labor market, so here’s how a sort of “Manifold Jobs” might play out. The platform has three entities:

Prospective employees

Prospective employers

Headhunters

The platform makes a bunch of conditional prediction markets on key benchmarks for each of the first two entities. For example:

Conditional on being hired by [prospective employer], will [prospective employee]:

still be at their job in [timeframe]?

have a higher weekly average life satisfaction rating in [timeframe] than they do now?

etc.

Conditional on hiring [prospective employee], will [prospective employer]:

still be employing [employee] in [timeframe]?

have a higher weekly average employee rating in [timeframe] than the previous employee did?

etc.

If Manifold actually makes this, I’m sure it’ll look somewhat different, much better, and far more fleshed out. But importantly, the dating and labor markets aren’t the only two landscapes of two-sided adverse selection.

3: Insert Two-Sided Adverse Selection Here

There are a bunch of other landscapes of two-sided adverse selection where the conditional prediction market setup from Manifold Love could potentially solve a lot of problems:

dating (Manifold Love)

friendships

gym partners

grantmaking

college applications/decisions

grad school apps/decisions (e.g. med school, law school, business school, etc)

labor/hiring/talent-seeking/jobs (as in section 2)

cofounders

seed- and preseed-stage investing

child adoption

internship applications/decisions

used car sales

tutoring

events/venue spaces

therapists/patients

selling & buying insurance

credit/lending

residential & commercial real estate

students picking classes at college

If you can think of more, tell me and I’ll add them here! Something to note: for most of the above, there’s already some entity that acts as a middleman — real estate has realtors, the labor market has headhunters, adoption agencies pair up biological mothers with adopted families, etc. But all of them are pretty broken, biased, or at least wildly suboptimal. I’m excited about the potential of conditional prediction markets to improve on them and solve two-sided adverse selection.

4: Caveats

As of my writing, I’m a lowly undergrad who’s never taken an economics class and has no idea what he’s talking about. I’ve done a bit of work in the prediction market & forecasting community, but I’m nowhere near an expert.

We don’t even know if Manifold Love works with dating, let alone if it generalizes to other systems of two-sided adverse selection.

Conditional prediction markets without prior commitment to randomization do not imply causation (a la DYNOMIGHT). This setup will give us association/correlation, but we won’t know the existence nor direction of causality without prior commitment to randomization. And prior commitment to even some small amounts of randomization for some of these systems ranges from “quite difficult” to “hahaha good one, absolutely fucking not.” Fortunately, I’m not sure you need to know causation, at least not to start out. I’m curious to see what happens if/when Manifold Love runs into this problem.

Again, COI: I’ve done some work for, might do some more work for, and own a tiny bit of equity in Manifold. I’m writing this independent of any work I’m currently doing or planning to do for Manifold or for any other entity. I just think the ideas are cool.

lunatic_at_large on LessWrong adds two more caveats that I didn’t think of:

“Suppose person A is open for dating and person B really wants to date them. By punting the decision-making process to the public, person A restricts themselves to working with publicly available information about person B and simultaneously person B is put under pressure to publicly reveal all relevant information. I can imagine a lot of Goodharting on the part of person B. Also, if it was revealed that person C bet against A and B dating and person B found out, I can imagine some … uh … lasting negative emotions between B and C. That possibility could also mess with person C’s incentives. In other words, the market participants with the closest knowledge of A and B also have the most to lose by A and B being upset with their bets and thus face the most misaligned incentives.”

“I can imagine circumstances where publicly revealing probabilities of things can cause negative externalities, especially on mental health. For example, colleges often don’t reveal students’ exact percentage scores on classes even if employers would be interested — the amount of stress that would induce on the student body could result in worse learning outcomes overall. In an example you listed, with therapists/patients, I feel like it might not be great to have someone suffering from anxiety watch their percentage chance of getting an appointment go up and down.”

Awesome post!

I have absolutely no experience with prediction markets, but I’m instinctively nervous about incentives here. Maybe the real-world incentives of market participants could be greater than the play-money incentives? For example, if you’re trying to select people to represent your country at an international competition and the potential competitors have invested their lives into being on that international team and those potential competitors can sign up as market participants (maybe anonymously), then I could very easily imagine those people sabotaging their competitors’ markets and boosting their own with no regard for their post-selection in-market prediction winnings.

For personal stuff (friendship / dating), I have some additional concerns. Suppose person A is open for dating and person B really wants to date them. By punting the decision-making process to the public, person A restricts themselves to working with publicly available information about person B and simultaneously person B is put under pressure to publicly reveal all relevant information. I can imagine a lot of Goodharting on the part of person B. Also, if it was revealed that person C bet against A and B dating and person B found out, I can imagine some … uh … lasting negative emotions between B and C. That possibility could also mess with person C’s incentives. In other words, the market participants with the closest knowledge of A and B also have the most to lose by A and B being upset with their bets and thus face the most misaligned incentives. (Note: I also dislike dating apps and lots of people use those so I’m probably biased here.)

Finally, I can imagine circumstances where publicly revealing probabilities of things can cause negative externalities, especially on mental health. For example, colleges often don’t reveal students’ exact percentage scores on classes even if employers would be interested — the amount of stress that would induce on the student body could result in worse learning outcomes overall. In an example you listed, with therapists/patients, I feel like it might not be great to have someone suffering from anxiety watch their percentage chance of getting an appointment go up and down.

But for circumstances with low stakes (so play money incentives beat real-world incentives) and small negative externalities, such as gym partners, I could imagine this kind of system working really well! Super cool!

Thanks for the response!

Re: concerns about bad incentives, I agree that you can depict the losses associated with manipulating conditional prediction markets as paying a “cost” — even though you’ll probably lose a boatload of money, it might be worth it to lose a boatload of money to manipulate the markets. In the words of Scott Alexander, though:

I’m concerned about this, but it feels like a solvable problem.

Re: personal stuff & the negative externalities of publicly revealing probabilities, thanks for pointing these out. I hadn’t thought of it. Added it to the post!

So I’ve been thinking a little more about the real-world-incentives problem, and I still suspect that there are situations in which rules won’t solve this. Suppose there’s a prediction market question with a real-world outcome tied to the resulting market probability (i.e. a relevant actor says “I will do XYZ if the prediction market says ABC”). Let’s say the prediction market participants’ objective functions are of the form play_money_reward + real_world_outcome_reward. If there are just a couple people for whom real_world_outcome_reward is at least as significant as play_money_reward and if you can reliably identify those people (i.e. if you can reliably identify the people with a meaningful conflict of interest), then you can create rules preventing those people from betting on the prediction market.

However, I think that there are some questions where the number of people with real-world incentives is large and/or it’s difficult to identify those people with rules. For example, suppose a sports team is trying to determine whether to hire a star player and they create a prediction market for whether the star athlete will achieve X performance if hired. There could be millions of fans of that athlete all over the world who would be willing to waste a little prediction market money to see that player get hired. It’s difficult to predict who those people are without massive privacy violations—in particular, they have no publicly verifiable connection to the entities named in the prediction market.

This applies to roughly the entire post, but I see an awful lot of magical thinking in this space. What is the actual mechanism by which you think prediction markets will solve these problems?

In order to get a good prediction from a market you need traders to put prices in the right places. This means you need to subsidise the markets. Whether or not a subsidised prediction market is going to be cheaper for the equivalent level of forecast than paying another 3rd party (as is currently the case in most of your examples) is very unclear to me

Thanks for the response!

Could you point to some specific areas of magical thinking in the post? and/or in the space?[1] (I’m not claiming that there aren’t any, I definitely think there are. I’m interested to know where I & the space are being overconfident/thinking magically, so that I/it can do less magical thinking.)

The mechanism that Manifold Love uses. In section 2, I put it as “run a bunch of conditional prediction markets on a bunch of key benchmarks for potential pairs between two sides that are normally caught in adverse selection.” I wrote this post to explain the actual mechanism by which I think (conditional) prediction markets might solve these problems, but I also want to note that I definitely do not think that (conditional) prediction markets will definitely for sure 100% totally completely solve these problems. I just think they have potential, and I’m excited to see people giving it a shot.

I agree! In order to get a good prediction from a market, you (probably, see the footnote) need participation to be positive-sum.[3] I think there are a few ways to get this:

Direct subsidies

Since prediction markets create valuable price information, it might make sense to have those who benefit from the price information directly pay. I could imagine this pretty clearly, actually: Manifold Love could charge users for (e.g.) more than 5 matches, and some part of the fee that the user pays goes toward market subsidies. As you pointed out, paying a 3rd party is currently the case for most of my examples — matchmakers, headhunters, real estate agents, etc — so it seems like this sort of thing aligns with the norms & users’ expectations.

Hedging

Some participants bet to hedge other off-market risks. These participants are likely to lose money on prediction markets, and know that ahead of time. That’s because they’re not betting their beliefs; they’re paying the equivalent to an insurance premium.

For prediction markets generally, this seems like the most viable path to getting money flowing into the market. I’m not sure how well it’d work for this sort of setup, though — mainly because the markets are so personal.

This requires finding markets on which participants would want to hedge, which seems like a difficult problem. I give an example before, but I’m pretty unsure what something like this would look like in a lot of the examples I listed in the original essay.

Continuing the example of the labor market from section 2: I could imagine (e.g.) a Google employee buying an ETF-type-thing that bets NO on whether all potential Google employees will remain at Google a year from their hiring date. This protects that Google empoyee against the risk of some huge round of layoffs — they’ve bought “insurance” against that outcome. In doing so, it provides the markets a way to become positive-sum for those participants who’re betting their beliefs.

New traders

This provides an inflow of money, but is (obviously) tied to new traders joining the market. I don’t like this at all, because it’s totally unsustainable and leads to community dynamics like those in crypto. Also, it’s a scheme that’s pyramid-shaped, a “pyramid scheme” if you will.

I’m mainly including this for completeness; I think relying on this is a terrible idea.

Agreed! It’s unclear to me too. This sort of question is answerable by trying the thing and seeing if it works — that’s why I’m excited about for people & companies to try it out and see if it works.

I’m assuming you mean the “prediction market/forecasting space,” so please let me know if that’s not the space to which you’re referring.

I’ll interpret “subsidize” more broadly as “money flowing into the market to make it positive-sum after fees, inflation, etc.”

I’m comfortable working under this assumption for now, but I do want to be clear that I’m not fully convinced this is the case. The stock market is clearly negative-sum for the majority of traders, and yet… traders still join. It seems at least plausible that, as long as the market is positive-sum for some key market participants, the markets still can still provide valuable price information.

This post triggered me a bit, so I ended up writing one of my own.

I agree the entire thing is about how to subsidise the markets, but I think you’re overestimating how good markets are as a mechanism for subsidising forecasting (in general). Specifically for your examples:

Direct subsidies are expensive relative to the alternatives (the point of my post)

Hedging doesn’t apply in lots of markets, and in the ones where it does make sense those markets already exist. (Eg insurance)

New traders is a terrible idea as you say. It will work in some niches (eg where there’s lots of organic interest, but it wont work at scale for important things)

Cool idea. I think in most cases on the list, you’ll have some combination of information asymmetry and an illiquid market that make this not that useful.

Take used car sales. I put my car up for sale and three prospective buyers come to check it out. We are now basically the only four people in the world with inside knowledge about the car. If the car is an especially good deal, each buyer wants to get that information for themselves but not broadcast it either to me or to the other buyers. I dunno man, it all seems like a stretch to say that the four of us are going to find a prediction market worth it,.