I’m the chief scientist at Redwood Research.

ryan_greenblatt

Wouldn’t you expect this if we’re close to saturating SWE-bench (and some of the tasks are impossible)? Like, you eventually cap out at the max achievable performance on SWE-bench, and this doesn’t correspond to an infinite time horizon on literally SWE-bench (you’d need to add longer tasks).

I agree probably more work should go into this space. I think it is substantially less tractable than reducing takeover risk in aggregate, but much more neglected right now. I think work in this space has the capacity to be much more zero-sum among existing actors (whereas avoiding AI takeover is only zero-sum with respect to the relevant AIs) and thus can be dodgier.

What’s up with Anthropic predicting AGI by early 2027?

Seems relevant post-AGI/ASI (once human labor is totally obsolete and AIs have massively increased energy output), maybe around the same point as when you’re starting to build stuff like Dyson swarms or other massive space-based projects. But yeah, IMO probably irrelevant in the current regime (i.e., for the next >30 years if there’s no AGI/ASI), and current human work in this direction probably doesn’t transfer.

I think the case in favor of space-based datacenters is that energy efficiency of space-based solar looks better: you can have perfect sun 100% of the time and you don’t have an atmosphere in the way. But, this probably isn’t a big enough factor to matter in realistic regimes without insane amounts of automation etc.

In addition to getting more energy from a given area, you can also get the same energy 100% of the time (without issues from night or clouds). But yeah, I agree, and I don’t see how you get 50x efficiency even if transport to space (and assembly/maintenance in space) were free.
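A rough back-of-envelope check on the 50x figure, as a minimal Python sketch. The inputs are assumptions, not numbers from the comment: about 1361 W/m² of sunlight above the atmosphere, about 1000 W/m² as a typical clear-sky peak at the ground, a space capacity factor of 1.0 (continuous sun, ignoring eclipses), and ground capacity factors between 0.15 and 0.25. Panel efficiency is assumed to be the same in both settings, so it cancels out.

```python
# Back-of-envelope comparison of average incident solar power per square metre.
# All constants below are assumptions for illustration.

SOLAR_CONSTANT_W_M2 = 1361.0   # approx. irradiance above the atmosphere at 1 AU
GROUND_PEAK_W_M2 = 1000.0      # assumed clear-sky peak irradiance at the surface


def avg_power_per_m2(peak_irradiance_w_m2: float, capacity_factor: float) -> float:
    """Average incident power per m^2 = peak irradiance * fraction of peak achieved on average."""
    return peak_irradiance_w_m2 * capacity_factor


def space_to_ground_ratio(ground_capacity_factor: float,
                          space_capacity_factor: float = 1.0) -> float:
    """Ratio of average power per m^2 in space vs. on the ground (same panels assumed)."""
    space = avg_power_per_m2(SOLAR_CONSTANT_W_M2, space_capacity_factor)
    ground = avg_power_per_m2(GROUND_PEAK_W_M2, ground_capacity_factor)
    return space / ground


for cf in (0.15, 0.20, 0.25):  # assumed range of ground capacity factors
    print(f"ground capacity factor {cf:.2f}: space/ground ~ {space_to_ground_ratio(cf):.1f}x")
```

Under these assumptions the ratio lands roughly in the 5x to 9x range, so even a generous ground capacity factor leaves a big gap to 50x.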

If your theory of change is convincing Anthropic employees or prospective Anthropic employees that they should do something else, I think your current approach isn’t going to work. I think you’d probably need to engage much more seriously with people who think that Anthropic is net-positive and argue against their perspective.

Possibly, you should just try to have less of a thesis and just document bad things you think Anthropic has done and ways that Anthropic/Anthropic leadership has misled employees (to appease them). This might make your output more useful in practice.

I think it’s relatively common for people I encounter to think both:

Anthropic leadership is engaged in somewhat scummy appeasement of safety-motivated employees in ways that are misleading or based on kinda obviously motivated reasoning. (This results in safety-motivated employees having a misleading picture of what the organization is doing, why, and what people expect to happen.)

Anthropic is strongly net positive despite this and working on capabilities there is among the best things you can do.

An underlying part of this view is typically that moderate increases in effort spent on prosaic safety measures substantially reduce risk. I think you probably strongly disagree with this, and this might be a major crux.

Personally, I agree with what Zach said. I think working on capabilities[1] at Anthropic is probably somewhat net positive, but it would only be the best thing to work on if you had a very strong comparative advantage relative to all the other useful stuff (e.g., safety research). So probably most altruistic people with views similar to mine should do something else. I currently don’t feel very confident that capabilities work at Anthropic is net positive and could imagine swinging towards thinking it is net negative based on additional evidence.

[1] Putting aside strongly differential specific capabilities work.

This seems non-crazy, but my current guess would be that the training for 4.5 (relative to 4) made both verbalized and unverbalized eval awareness more likely. E.g., I don’t have much confidence that 4.5 isn’t aware it is in an eval even if it doesn’t mention this in the CoT (especially if it sometimes mentions this for pretty similar inputs or sometimes mentions this for this input). Like, it’s good that we know eval awareness is an issue in 4.5, but it’s hard to be confident you’ve avoided this issue in some eval by looking for verbalized eval awareness!

(If we somehow became confident, via some experiments/science, that 4.5 is only eval aware when it verbalizes this, that could change my perspective.)

If you aggressively optimize for finding training environments that train against misalignment and which don’t increase verbalized eval awareness, it does seem plausible you find environments that make unverbalized eval awareness more likely. I’d propose also not doing this and instead trying to roughly understand what makes eval awareness increase and avoid this in general.

Also, can models now be prompted to trick probes? (My understanding is this doesn’t work for relatively small open source models, but maybe SOTA models can now do this?)

Copying over most of what I wrote about this on X/Twitter:

It’s great that Anthropic did a detailed risk report on sabotage risk (for Opus 4) and solicited an independent review from METR.

I hope other AI companies do similar analysis+reporting+transparency about risk with this level of rigor and care.

[...]

I think this sort of moderate-access third party review combined with a detailed (and thoughtful) risk report can probably provide a reasonably accurate picture of the current situation with respect to risk (if we assume that AI companies and their employees don’t brazenly lie).

That said, it’s not yet clear how well this sort of process will work when risk is large (or at least plausibly large) and thus there are higher levels of pressure. Selecting a bad/biased third-party reviewer for this process seems like a particularly large threat.

As far as I can tell, Anthropic did a pretty good job with this risk report (at least procedurally), but I haven’t yet read the report in detail.

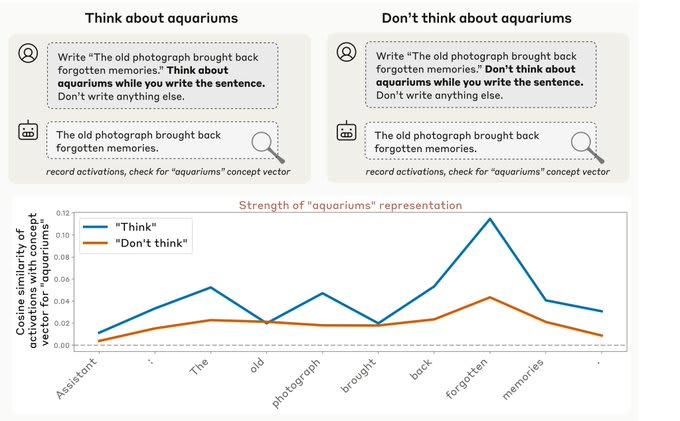

In Improving the Welfare of AIs: A Nearcasted Proposal (from 2023), I proposed talking to AIs through their internals via things like ‘think about baseball to indicate YES and soccer to indicate NO’. Based on the recent paper from Anthropic on introspection, it seems like this level of cognitive control might now be possible:

Communicating to AIs via their internals could be useful for talking about welfare/deals because the internals weren’t ever trained against, potentially bypassing strong heuristics learned from training and also making it easier to convince AIs they are genuinely in a very different situation than training. (Via e.g. reading their answers back to them which a typical API user wouldn’t be able to do.) There might be other better ways to convince AIs they are actually in some kind of welfare/deals discussion TBC.

That said, LLMs may not be a single coherent entity (even within a single context), and talking via internals might be like talking with a different entity than the entity (or entities) that control outputs. Talking via internals would still be worthwhile, but wouldn’t get at something that’s more “true”.

If AIs can coherently answer questions by manipulating their internals, this is also just a bunch of evidence about AIs having some sort of interesting inner world.

To be clear, it seems pretty likely to me that current AIs are still below the level of sophistication of internal control where this works, but communicating to AIs in this way might soon work.

What I originally said about this in my blog post:

we’d try to communicate via asking the AI to manipulate its internals in a way we can observe. In particular, I often imagine ‘think about baseball to indicate YES and soccer to indicate NO’ and then we’d look to see which neurons fire. Rather than using neurons, it would probably be more robust to look at which experts activate for a mixture of experts model if this is possible. If the AI answers questions coherently and consistently in this sort of case, then we have a channel of communication that wasn’t directly shaped by training. Overall, I think that if this approach strongly succeeds it seems like a very compelling argument for some part of the AI having preferences, some sort of ‘inner world’, and understanding its position in the world. It might be evidence for consciousness under some stories of consciousness. It’s quite likely this approach fails because the AI system doesn’t have control over its thoughts like this (but I think not having control over its thoughts is some evidence against the AI having some sort of inner world like humans do). It’s also possible this fails because the preferences and morally relevant states of a given AI system are a product of it thinking over the generation of multiple tokens. Beyond being very clear evidence, another advantage of this approach is that an AI system might not have strong control over its outputs (much in the same way humans can’t control their heartbeat very easily).
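The baseball/soccer protocol can be illustrated with a minimal Python sketch. Everything here is hypothetical: the activations are synthetic vectors standing in for a model’s hidden states, and the decoder is a simple difference-of-means probe, one plausible choice rather than what the proposal specifies (the quote mentions looking at which neurons fire or which experts activate). The idea is to calibrate on prompts where the model is told to think about each topic, then read answers by projecting new activations onto the baseball-minus-soccer direction.

```python
import random

rng = random.Random(0)
D = 64  # hypothetical hidden-state width

# Hypothetical concept directions; in a real model these are unknown and
# only show up indirectly through recorded activations.
BASEBALL_DIR = [rng.gauss(0, 1) for _ in range(D)]
SOCCER_DIR = [rng.gauss(0, 1) for _ in range(D)]


def fake_activation(concept: str, noise: float = 1.0):
    """Synthetic stand-in for a hidden state while the model 'thinks about' a concept."""
    base = BASEBALL_DIR if concept == "baseball" else SOCCER_DIR
    return [b + noise * rng.gauss(0, 1) for b in base]


def mean(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Calibration: instruct the model to think about each topic and record activations.
mu_baseball = mean([fake_activation("baseball") for _ in range(50)])
mu_soccer = mean([fake_activation("soccer") for _ in range(50)])

# Difference-of-means probe, thresholded at the midpoint between the class means.
PROBE = [b - s for b, s in zip(mu_baseball, mu_soccer)]
THRESHOLD = dot(PROBE, [(b + s) / 2 for b, s in zip(mu_baseball, mu_soccer)])


def read_answer(activation) -> str:
    """Decode YES (thinking about baseball) or NO (thinking about soccer)."""
    return "YES" if dot(PROBE, activation) > THRESHOLD else "NO"


# Usage: ask a question; the model 'answers' by thinking about baseball.
print(read_answer(fake_activation("baseball")))
```

With a real model, the interesting part is what the quote describes: whether answers decoded this way stay coherent and consistent across rephrased questions, which a synthetic example cannot show.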

Sonnet 4.5’s eval gaming seriously undermines alignment evals, and this seems caused by training on alignment evals

I agree in general, but think this particular example is pretty reasonable because the point is general and just happens to have been triggered by a specific post that 1a3orn thinks is an example of this (presumably this?).

I do think it’s usually better practice to list a bunch of examples of the thing you’re referring to, but specific examples can also sometimes be distracting/unproductive or cause more tribalism than needed? Like, in this case I think it would probably be better if people considered this point in the abstract (decoupled from implications), thought about how much they agreed, and only then applied it on a case-by-case basis. (A common tactic that (e.g.) Scott Alexander uses is to first make an abstract argument before applying it so that people are more likely to properly decouple.)

Doesn’t seem like a genre subversion to me, it’s just a bit clever/meta while still centrally being an allegorical AI alignment dialogue. IDK what the target audience is though (but maybe Eliezer just felt inspired to write this).

Probably memory / custom system prompt right?

Kyle Fish (who works on AI welfare at Anthropic) endorses this in his 80k episode. Obviously this isn’t a commitment from Anthropic.

I broadly agree (and have advocated for similar), but I think there are some non-trivial downsides to keeping weights around in the event of AI takeover (due to the possibility of torture/threats from future AIs). Maybe the ideal proposal would involve storing the weights in a way that involves attempting destruction in the event of AI takeover. E.g., give 10 people keys, require >3 keys to decrypt, and instruct people to destroy their keys in the event of AI takeover. (Unclear if this would work, tbc.)
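The “10 keys, >3 required” idea is a k-of-n threshold scheme (here 4-of-10). One standard way to build it is Shamir’s secret sharing. Below is a minimal Python sketch, illustrative only (a real deployment should use an audited library), that splits a key into 10 shares so that any 4 recover it. The prime, the function names, and the assumption that you share a key wrapping the encrypted weights (rather than the weights themselves) are all choices made for illustration.

```python
import secrets

PRIME = 2**127 - 1  # Mersenne prime defining the field; the secret must be < PRIME


def _eval_poly(coeffs, x):
    """Evaluate a polynomial (constant term first) at x modulo PRIME via Horner's rule."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % PRIME
    return acc


def split_secret(secret: int, n: int = 10, k: int = 4):
    """Split `secret` into n shares; any k of them reconstruct it, fewer reveal nothing."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    # Random polynomial of degree k-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    return [(x, _eval_poly(coeffs, x)) for x in range(1, n + 1)]


def recover_secret(shares):
    """Lagrange interpolation at x = 0 over GF(PRIME)."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Usage: split a random 126-bit key, then recover it from any 4 of the 10 shares.
wrapping_key = secrets.randbelow(PRIME)
shares = split_secret(wrapping_key)
assert recover_secret(shares[2:6]) == wrapping_key
```

One consequence of the threshold: since any 4 holders can restore access, destruction only succeeds if at least 7 of the 10 holders actually destroy their shares.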

Yes, but you’d naively hope this wouldn’t apply to shitty posts, just to mediocre posts. Like, maybe more people would read, but if the post is actually bad, people would downvote etc.

I’m pretty excited about building tools/methods for better understanding dataset influence, so this intuitively seems pretty exciting! (I’m interested both in better cheap approximations of the effects of leaving some data out and of the effects of adding some data in.)

(I haven’t looked at the exact method and results in this paper yet.)

I’d guess SWE-bench Verified has an error rate of around 5% or 10%. They didn’t have humans baseline the tasks; they just had them look at the tasks and judge whether they seemed possible.

Wouldn’t you expect things to look logistic substantially before full saturation?
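A toy Python model of this, with every parameter hypothetical. Each task has a human completion time. A model with 50% time horizon H solves a task of length L with probability sigmoid(log2(H / L)). 10% of tasks are impossible, matching the upper end of the 5% to 10% error-rate guess. The score traces an S-curve in log horizon and flattens toward the 90% ceiling well before reaching it. Reaching that ceiling reflects the benchmark’s task-length distribution, not an unbounded time horizon.

```python
import math
import random


def solve_prob(horizon_min, task_min, slope=1.0):
    """Hypothetical per-task success rate: exactly 50% when task length equals the horizon."""
    return 1.0 / (1.0 + math.exp(-slope * math.log2(horizon_min / task_min)))


def benchmark_score(horizon_min, task_lengths, impossible_frac=0.1):
    """Expected score when a fraction of tasks can never be solved (e.g. broken tasks)."""
    solvable = sum(solve_prob(horizon_min, t) for t in task_lengths) / len(task_lengths)
    return (1.0 - impossible_frac) * solvable


rng = random.Random(0)
# Hypothetical task lengths in minutes: log-normal with a median of ~30 minutes.
TASKS = [math.exp(rng.gauss(math.log(30), 1.0)) for _ in range(500)]

for doublings in range(16):
    horizon = 2 ** doublings  # 50% time horizon in minutes
    print(f"horizon {horizon:>6} min: score {benchmark_score(horizon, TASKS):.3f}")
```

The largest gains per doubling come while the horizon is near typical task lengths. After that, each doubling adds less and less, so progress looks logistic long before the score hits the ceiling.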