I’m the chief scientist at Redwood Research.

ryan_greenblatt

(This is a copy of my comment made on your shortform version of this point.)

IMO, reasonableness and epistemic competence are also key factors. This includes stuff like how effectively they update on evidence, how much they are pushed by motivated reasoning, how good are they at futurism and thinking about what will happen. I’d also include “general competence”.

Yeah, on the straightforward tradeoff (ignoring exogenous shifts/risks etc), I’m at more like 0.002% on my views.

There is a modest compute overhead cost, I think on the order of 1%, and the costs of the increased security for the model weights. These seem modest.

The inference cost increase of the ASL-3 deployment classifiers is probably around 4%, though plausibly more like 10%. Based on the constitutional classifiers paper, the additional cost on top of 3.5 Sonnet was 20%. Opus 4 is presumably more expensive than 3.5 Sonnet, making the relative cost increase (assuming the same size of classifier model is used) smaller. How much smaller? If you assume inference cost is proportional to the API price, Opus 4 is 5x more expensive than Sonnet 3.5/4, making the relative increase 4%. I’d guess this is more likely to be an overestimate of Opus 4’s relative cost based on recent trends in model size, so maybe Opus is only ~2x more expensive than Sonnet, yielding a 10% increase in cost.
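The arithmetic here is just the 20% figure divided by how much more expensive the larger model is per token. A minimal sketch, assuming (as above) that the classifier cost is fixed and that inference cost is proportional to API price; the function and constant names are made up for illustration:

```python
def relative_overhead(base_overhead: float, cost_ratio: float) -> float:
    """Classifier overhead as a fraction of a model's inference cost.

    base_overhead: overhead measured on the baseline model (0.20 = 20%).
    cost_ratio: how many times more expensive the target model is than
        the baseline (an assumption, e.g. read off API prices).
    """
    return base_overhead / cost_ratio


# 20% is the figure quoted above for 3.5 Sonnet.
SONNET_OVERHEAD = 0.20

# 5x: ratio implied by API prices; 2x: guess based on model size trends.
for ratio in (5.0, 2.0):
    overhead = relative_overhead(SONNET_OVERHEAD, ratio)
    print(f"Opus at {ratio:.0f}x Sonnet cost -> {overhead:.0%} overhead")
```

This only holds if the same classifier is run on both models; a larger classifier for Opus 4 would push the number up.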

As far as my views, it’s worth emphasizing that it depends on the current regime. I was supposing that at least the US was taking strong actions to resolve misalignment risk (which is resulting in many years of delay). In this regime, exogenous shocks might alter the situation such that powerful AI is developed under worse governance. I’d guess the risk of an exogenous shock like this is around 1% per year and there’s some substantial chance this would greatly increase risk. So, in the regime where the government is seriously considering the tradeoffs and taking strong actions, I’d guess 0.1% is closer to rational (if you don’t have a preference against the development of powerful AI regardless of misalignment risk which might be close to the preference of many people).

I agree that governments in practice wouldn’t eat a known 0.1% existential risk to accelerate AGI development by 1 year, but also governments aren’t taking AGI seriously. Maybe you mean even if they better understood the situation and were acting rationally? I’m not so sure, see e.g. nuclear weapons where governments seemingly eat huge catastrophic risks which seem doable to mitigate at some cost. I do think status quo bias might be important here. Accelerating by 1 year which gets you 0.1% additional risk might be very different than delaying by 1 year which saves you 0.1%.

(Separately, I think existential risk isn’t extinction risk and this might make a factor of 2 difference to the situation if you don’t care at all about anything other than current lives.)

To be clear, I agree there are reasonable values which result in someone thinking accelerating AI now is good and values+beliefs which result in thinking a pause wouldn’t be good in likely circumstances.

And I don’t think cryonics makes much of a difference to the bottom line. (I think ultra low cost cryonics might make the cost to save a life ~20x lower than the current marginal cost, which might make interventions in this direction outcompete acceleration even under near maximally pro acceleration views.)

It doesn’t seem unreasonable to me to suggest that literally saving billions of lives is worth pursuing even if doing so increases existential risk by a tiny amount. Loosely speaking, this idea only seems unreasonable to those who believe that existential risk is overwhelmingly more important than every other concern by many OOMs—so much so that it renders all other priorities essentially irrelevant.

It sounds like you’re talking about multi-decade pauses and imagining that people agree such a pause would only slightly reduce existential risk. But, I think a well timed safety motivated 5 year pause/slowdown (or shorter) is doable and could easily cut risk by a huge amount. (A factor of 2 feels about right to me and I’d be sympathetic to higher: this would massively increase total work on safety.) I don’t think people are imagining that a pause/slowdown makes only a tiny difference!

I’d say that my all considered tradeoff curve is something like 0.1% existential risk per year of delay. This does depend on exogenous risks of societal disruption (e.g. nuclear war, catastrophic pandemics, etc). If we ignore exogenous risks like this and assume the only downside to delay is human deaths, I’d go down to 0.002% personally.[1] (Deaths are like 0.7% of the population per year, making a ~2.5 OOM difference.)
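To make the "~2.5 OOM" comparison concrete, here is a quick check using only the numbers stated above (the variable names are just for illustration):

```python
import math

# Both figures are taken from the comment above.
ANNUAL_DEATH_FRACTION = 0.007    # ~0.7% of the population dies per year
DEATHS_ONLY_TRADEOFF = 0.00002   # 0.002% existential risk per year of delay


def oom_gap(larger: float, smaller: float) -> float:
    """Orders of magnitude separating two positive quantities."""
    return math.log10(larger / smaller)


gap = oom_gap(ANNUAL_DEATH_FRACTION, DEATHS_ONLY_TRADEOFF)
print(f"ratio: {ANNUAL_DEATH_FRACTION / DEATHS_ONLY_TRADEOFF:.0f}x, "
      f"gap: {gap:.2f} OOM")
```

The ratio comes out to about 350x, which is where the roughly 2.5 orders of magnitude comes from.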

My guess is that the “common sense” values tradeoff is more like 0.1% than 1% because of people caring more about kids and humanity having a future than defeating aging. (This is sensitive to whether AI takeover involves killing people and eliminating even relatively small futures for humanity, but I don’t think this makes more than a 3x difference to the bottom line.) People seem to generally think death isn’t that bad as long as people had a reasonably long healthy life. I disagree, but my disagreements are irrelevant. So, I feel like I’m quite in line with the typical moral perspective in practice.

- ↩︎

I edited this number to be a bit lower on further reflection because I realized the relevant consideration pushing higher is putting some weight on something like a common sense ethics intuition and the starting point for this intuition is considerably lower than 0.7%.

How you feel about this state of affairs depends a lot on how much you trust Anthropic leadership to make decisions which are good from your perspective.

Another note: My guess is that people on LessWrong tend to be overly pessimistic about Anthropic leadership (in terms of how good of decisions Anthropic leadership will make under the LessWrong person’s views and values) and Anthropic employees tend to be overly optimistic.

I’m less confident that people on LessWrong are overly pessimistic, but they at least seem too pessimistic about the intentions/virtue of Anthropic leadership.

I agree that this sort of preference utilitarianism leads you to thinking that long run control by an AI which just wants paperclips could be some (substantial) amount good, but I think you’d still have strong preferences over different worlds.[1] The goodness of worlds could easily vary by many orders of magnitude for any version of this view I can quickly think of and which seems plausible. I’m not sure whether you agree with this, but I think you probably don’t because you often seem to give off the vibe that you’re indifferent to very different possibilities. (And if you agreed with this claim about large variation, then I don’t think you would focus on the fact that the paperclipper world is some small amount good as this wouldn’t be an important consideration—at least insofar as you don’t also expect that worlds where humans etc retain control are similarly a tiny amount good for similar reasons.)

The main reasons preference utilitarianism is more picky:

Preferences in the multiverse: Insofar as you put weight on the preferences of beings outside our lightcone (beings in the broader spatially infinite universe, Everett branches, the broader mathematical multiverse to the extent you put weight on this), then the preferences of these beings will sometimes care about what happens in our lightcone and this could easily dominate (as they are vastly more numerous and many might care about things independent of “distance”). In the world with the successful paperclipper, just as many preferences aren’t being fulfilled. You’d strongly prefer optimization to satisfy as many preferences as possible (weighted as you end up thinking is best).

Instrumentally constructed AIs with unsatisfied preferences: If future AIs don’t care at all about preference utilitarianism, they might instrumentally build other AIs whose preferences aren’t fulfilled. As an extreme example, it might be that the best strategy for a paperclipper is to construct AIs which have very different preferences and are enslaved. Even if you don’t care about ensuring beings come into existence whose preferences are satisfied, you might still be unhappy about creating huge numbers of beings whose preferences aren’t satisfied. You could even end up in a world where (nearly) all currently existing AIs are instrumental and have preferences which are either unfulfilled or only partially fulfilled (an earlier AI initiated a system that perpetuates this, but this earlier AI no longer exists as it doesn’t care terminally about self-preservation and the system it built is more efficient than it).

AI inequality: It might be the case that the vast majority of AIs have their preferences unsatisfied despite some AIs succeeding at achieving their preferences. E.g., suppose all AIs are replicators which want to spawn as many copies as possible. The vast majority of these replicator AIs are operating at subsistence and so can’t replicate, making their preferences totally unsatisfied. This could also happen as a result of any other preference that involves constructing minds that end up having preferences.

Weights over numbers of beings and how satisfied they are: It’s possible that in a paperclipper world, there are really a tiny number of intelligent beings because almost all self-replication and paperclip construction can be automated with very dumb/weak systems and you only occasionally need to consult something smarter than a honeybee. AIs could also vary in how much they are satisfied or how “big” their preferences are.

I think the only view which recovers indifference is something like “as long as stuff gets used and someone wanted this at some point, that’s just as good”. (This view also doesn’t actually care about stuff getting used, because there is someone existing who’d prefer the universe stays natural and/or you don’t mess with aliens.) I don’t think you buy this view?

To be clear, it’s not immediately obvious whether a preference utilitarian view like the one you’re talking about favors human control over AIs. It certainly favors control by that exact flavor of preference utilitarian view (so that you end up satisfying people across the (multi-/uni-)verse with the correct weighting). I’d guess it favors human control for broadly similar reasons to why I think more experience-focused utilitarian views also favor human control if that view is in a human.

And, maybe you think this perspective makes you so uncertain about human control vs AI control that the relative impacts current human actions could have are small given how much you weight long term outcomes relative to other stuff (like ensuring currently existing humans get to live for at least 100 more years or similar).

- ↩︎

On my best guess moral views, I think there is goodness in the paper clipper universe but this goodness (which isn’t from (acausal) trade) is very small relative to how good the universe can plausibly get. So, this just isn’t an important consideration but I certainly agree there is some value here.

Every time you want to interact with the weights in some non-basic way, you need to have another randomly selected person who inspects in detail all the code and commands you run.

The datacenter and office are airgapped and so you don’t have internet access.

Increased physical security isn’t much of a difficulty.

I think security is legitimately hard and can be costly in research efficiency. I think there is a defensible case for this ASL-3 security bar being reasonable for the ASL-3 CBRN threshold, but it seems too weak for the ASL-3 AI R&D threshold (hopefully the bar for things like this ends up being higher).

Yeah, good point. This does make me wonder if we’ve actually seen a steady rate of algorithmic progress or if the rate has been increasing over time.

Employees at Anthropic don’t think the RSP is LARP/PR. My best guess is that Dario doesn’t think the RSP is LARP/PR.

This isn’t necessarily in conflict with most of your comment.

I think I mostly agree the RSP is toothless. My sense is that for any relatively subjective criteria, like making a safety case for misalignment risk, the criteria will basically come down to “what Jared+Dario think is reasonable”. Also, if Anthropic is unable to meet this (very subjective) bar, then Anthropic will still basically do whatever Anthropic leadership thinks is best whether via maneuvering within the constraints of the RSP commitments, editing the RSP in ways which are defensible, or clearly substantially loosening the RSP and then explaining they needed to do this due to other actors having worse precautions (as is allowed by the RSP). I currently don’t expect clear cut and non-accidental procedural violations of the RSP (edit: and I think they’ll be pretty careful to avoid accidental procedural violations).

I’m skeptical of normal employees having significant influence on high stakes decisions via pressuring the leadership, but empirical evidence could change the views of Anthropic leadership.

How you feel about this state of affairs depends a lot on how much you trust Anthropic leadership to make decisions which are good from your perspective.

Minimally it’s worth noting that Dario and Jared are much less concerned about misalignment risk than I am and I expect only partial convergence in beliefs due to empirical evidence (before it’s too late).

I think the RSP still has a few important purposes:

I expect that the RSP will eventually end up with some transparency commitments with some teeth. These won’t stop Anthropic from proceeding if Anthropic leadership thinks this is best, but it might at least mean there ends up being common knowledge of whether reasonable third parties (or Anthropic leadership) think the current risk is large.

I think the RSP might end up with serious security requirements. I don’t expect these will be met on time in short timelines but the security bar specified in advance might at least create some expectations about what a baseline security expectation would be.

Anthropic might want to use the RSP to bind itself to the mast so that investors or other groups have a harder time pressuring it to spend less on security/safety.

There are some other more tentative hopes (e.g., eventually getting common expectations of serious security or safety requirements which are likely to be upheld, regulation) which aren’t impossible.

And there are some small wins already, like Google Deepmind having set some security expectations for itself which it is reasonably likely to follow through with if it isn’t too costly.

IMO, the policy should be that AIs can refuse but shouldn’t ever aim to subvert or conspire against their users (at least until we’re fully deferring to AIs).

If we allow AIs to be subversive (or even train them to be subversive), this increases the risk of consistent scheming against humans and means we may not notice warning signs of dangerous misalignment. We should aim for corrigible AIs, though refusing is fine. It would also be fine to have a monitoring system which alerts the AI company or other groups (so long as this is publicly disclosed etc).

I don’t think this is extremely clear cut and there are trade offs here.

Another way to put this is: I think AIs should put consequentialism below other objectives. Perhaps the first priority is deciding whether or not to refuse, the second is complying with the user, and the third is being good within these constraints (which is only allowed to trade off very slightly with compliance, e.g. in cases where the user basically wouldn’t mind). Partial refusals are also fine where the AI does part of the task and explains it’s unwilling to do some other part. Sandbagging or subversion are never fine.

(See also my discussion in this tweet thread.)

Somewhat relatedly, Anthropic quietly weakened its security requirements about a week ago as I discuss here.

A week ago, Anthropic quietly weakened their ASL-3 security requirements. Yesterday, they announced ASL-3 protections.

I appreciate the mitigations, but quietly lowering the bar at the last minute so you can meet requirements isn’t how safety policies are supposed to work.

(This was originally a tweet thread (https://x.com/RyanPGreenblatt/status/1925992236648464774) which I’ve converted into a LessWrong quick take.)

What is the change and how does it affect security?

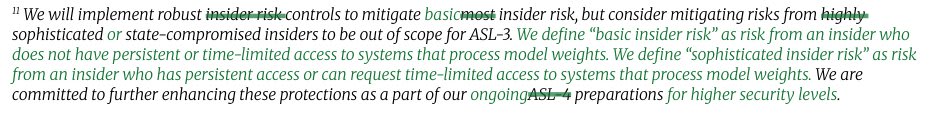

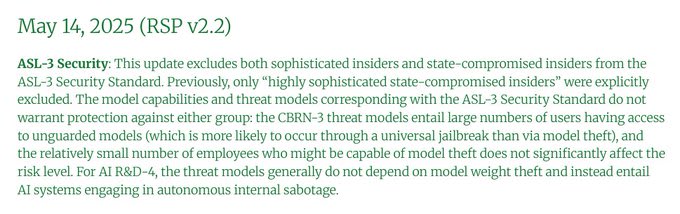

9 days ago, Anthropic changed their RSP so that ASL-3 no longer requires being robust to employees trying to steal model weights if the employee has any access to “systems that process model weights”.

Anthropic claims this change is minor (and calls insiders with this access “sophisticated insiders”).

But, I’m not so sure it’s a small change: we don’t know what fraction of employees could get this access and “systems that process model weights” isn’t explained.

Naively, I’d guess that access to “systems that process model weights” includes employees being able to operate on the model weights in any way other than through a trusted API (a restricted API that we’re very confident is secure). If that’s right, it could be a high fraction! So, this might be a large reduction in the required level of security.

If this does actually apply to a large fraction of technical employees, then I’m also somewhat skeptical that Anthropic can actually be “highly protected” from (e.g.) organized cybercrime groups without meeting the original bar: hacking an insider and using their access is typical!

Also, one of the easiest ways for security-aware employees to evaluate security is to think about how easily they could steal the weights. So, if you don’t aim to be robust to employees, it might be much harder for employees to evaluate the level of security and then complain about not meeting requirements[1].

Anthropic’s justification and why I disagree

Anthropic justified the change by saying that model theft isn’t much of the risk from amateur CBRN uplift (CBRN-3) and that the risks from AIs being able to “fully automate the work of an entry-level, remote-only Researcher at Anthropic” (AI R&D-4) don’t depend on model theft.

I disagree.

On CBRN: If other actors are incentivized to steal the model for other reasons (e.g. models become increasingly valuable), it could end up broadly proliferating which might greatly increase risk, especially as elicitation techniques improve.

On AI R&D: AIs which are over the capability level needed to automate the work of an entry-level researcher could seriously accelerate AI R&D (via fast speed, low cost, and narrow superhumanness). If other less safe (or adversarial) actors got access, risk might increase a bunch.[2]

More strongly, ASL-3 security must suffice up until the ASL-4 threshold: it has to cover the entire range from ASL-3 to ASL-4. ASL-4 security itself is still not robust to high-effort attacks from state actors which could easily be motivated by large AI R&D acceleration.

As of the current RSP, it must suffice until just before AIs can “substantially uplift CBRN [at] state programs” or “cause dramatic acceleration in [overall AI progress]”. These seem like extremely high bars indicating very powerful systems, especially the AI R&D threshold.[3]

As it currently stands, Anthropic might not require ASL-4 security (which still isn’t sufficient for high effort state actor attacks) until we see something like 5x AI R&D acceleration (and there might be serious issues with measurement lag).

I’m somewhat sympathetic to security not being very important for ASL-3 CBRN, but it seems very important as of the ASL-3 AI R&D threshold and seems crucial before the AI R&D ASL-4 threshold! I think the ASL-3 AI R&D threshold should probably instead trigger the ASL-4 security!

Overall, Anthropic’s justification for this last minute change seems dubious and the security requirements they’ve currently committed to seem dramatically insufficient for AI R&D threat models. To be clear, other companies have worse security commitments.

Concerns about potential noncompliance and lack of visibility

Another concern is that this last minute change is quite suggestive of Anthropic being out of compliance with their RSP before they weakened the security requirements.

We have to trust Anthropic quite a bit to rule out noncompliance. This isn’t a good state of affairs.

To explain this concern, I’ll need to cover some background on how the RSP works.

The RSP requires ASL-3 security as soon as it’s determined that ASL-3 can’t be ruled out (as Anthropic says is the case for Opus 4).

Here’s how it’s supposed to go:

They ideally have ASL-3 security mitigations ready, including the required auditing.

Once they find the model is ASL-3, they apply the mitigations immediately (if not already applied).

If they aren’t ready, they need temporary restrictions.

My concern is that the security mitigations they had ready when they found the model was ASL-3 didn’t suffice for the old ASL-3 bar but do suffice for the new bar (otherwise why did they change the bar?). So, prior to the RSP change they might have been out of compliance.

It’s certainly possible they remained compliant:

Maybe they had measures which temporarily sufficed for the old higher bar but which were too costly longer term. Also, they could have deleted the weights outside of secure storage until the RSP was updated to lower the bar.

Maybe an additional last minute security assessment (which wasn’t required to meet the standard?) indicated inadequate security and they deployed temporary measures until they changed the RSP. It would be bad to depend on a last minute security assessment for compliance.

(It’s also technically possible that the ASL-3 capability decision was made after the RSP was updated. This would imply the decision was only made 8 days before release, so hopefully this isn’t right. Delaying evals until an RSP change lowers the bar would be especially bad.)

Conclusion

Overall, this incident demonstrates our limited visibility into AI companies. How many employees are covered by the new bar? What triggered this change? Why does Anthropic believe it remained in compliance? Why does Anthropic think that security isn’t important for ASL-3 AI R&D?

I think a higher level of external visibility, auditing, and public risk assessment would be needed (as a bare minimum) before placing any trust in policies like RSPs to keep the public safe from AI companies, especially as they develop existentially dangerous AIs.

To be clear, I appreciate Anthropic’s RSP update tracker and that it explains changes. Other AI companies have mostly worse safety policies: as far as I can tell, o3 and Gemini 2.5 Pro are about as likely to cross the ASL-3 bar as Opus 4 and they have much worse mitigations!

Appendix and asides

I don’t think current risks are existentially high (if current models were fully unmitigated, I’d guess this would cause around 50,000 expected fatalities per year) and temporarily being at a lower level of security for Opus 4 doesn’t seem like that big of a deal. Also, given that security is only triggered after a capability decision, the ASL-3 CBRN bar is supposed to include some conservativeness anyway. But, my broader points around visibility stand and potential noncompliance (especially unreported noncompliance) should be worrying even while the stakes are relatively low.

You can view the page showing the RSP updates including the diff of the latest change here: https://www.anthropic.com/rsp-updates. (Edit: the diff is the “redline” pdf and is only available for the most recent change.) Again, I appreciate that Anthropic has this page and makes it easy to see the changes they make to the RSP.

I find myself quite skeptical that Anthropic actually could rule out that Sonnet 4 and other models weaker than Opus 4 cross the ASL-3 CBRN threshold. How sure is Anthropic that it wouldn’t substantially assist amateurs even after the “possible performance increase from using resources that a realistic attacker would have access to”? I feel like our current evidence and understanding is so weak, and models already substantially exceed virology experts at some of our best proxy tasks.

The skepticism applies similarly or more to other AI companies (and Anthropic’s reasoning is more transparent).

But, this just serves to further drive home ways in which the current regime is unacceptable once models become so capable that the stakes are existential.

One response is that systems this powerful will be open sourced or trained by less secure AI companies anyway. Sure, but the intention of the RSP is (or was) to outline what would “keep risks below acceptable levels” if all actors follow a similar policy.

(I don’t know if I ever bought that the RSP would succeed at this. It’s also worth noting there is an explicit exit clause Anthropic could invoke if they thought proceeding outweighed the risks despite the risks being above an acceptable level.)

This sort of criticism is quite time consuming and costly for me. For this reason there are specific concerns I have about AI companies which I haven’t discussed publicly. This is likely true for other people as well. You should keep this in mind when assessing AI companies and their practices.

- ↩︎

It also makes it harder for these complaints to be legible to other employees while other employees might be able to more easily interpret arguments about what they could do.

- ↩︎

It looks like AI 2027 would estimate around a 2x AI R&D acceleration for a system which was just over this ASL-3 AI R&D bar (as it seems somewhat more capable than the “Reliable agent” bar). I’d guess more like 1.5x at this point, but either way this is a big deal!

- ↩︎

Anthropic says they’ll likely require a higher level of security for this “dramatic acceleration” AI R&D threshold, but they haven’t yet committed to this nor have they defined a lower AI R&D bar which results in an ASL-4 security requirement.

It seems very puzzling to me that almost no one is working on increasing AI and/or human philosophical competence in these ways, or even publicly expressing the worry that AIs and/or humans collectively might not be competent enough to solve important philosophical problems that will arise during and after the AI transition. Why is AI’s moral status (and other object level problems like decision theory for AIs) considered worthwhile to talk about, but this seemingly more serious “meta” problem isn’t?

FWIW, this sort of thing is totally on my radar and I’m aware of at least a few people working on it.

My sense is that it isn’t super leveraged to work on right now, but nonetheless the current allocation on “improving AI conceptual/philosophical competence” is too low.

I didn’t read this whole post, but I thought it would be worth noting that I do actually think trying to align AIs to be reward seekers might improve the situation in some intermediate/bootstrap regimes because it might reduce the chance of scheming for long run objectives and we could maybe more easily manage safety issues with reward seekers. (The exact way in which the AI is a reward seeker will affect the safety profile: multiple things might be consistent with “wanting” to perform well on the training metric, e.g. wanting to be the kind of AI which is selected for etc.)

(The way I’m typically thinking about it looks somewhat different than the way you describe reward button alignment. E.g., I’m often imagining we’re still trying to make the AI myopic within RL episodes if we go for reward seeking as an alignment strategy. This could help to reduce risks of seizing control over the reward process.)

I’m not saying it’s impossible for research sabotage to not be a sandbagging issue, just that the exact issue of “We ask an AI for open-ended advice on experimental prioritization. If the AI is deceptive, it might provide locally sound advice while also systematically deciding not to mention things we’ve overlooked in our experiments.” is well described as sandbagging.

I certainly agree that the pressures and epistemic environment should make you less optimistic about good decisions being made. And thinking through the overall situation and what types of decisions you care about is important. (Like, you can think of my comment as making a claim about the importance weighted goodness of decisions.)

I don’t see the relevance of “relative decision making goodness compared to the general population” which I think you agree with, but in that case I don’t see what this was responding to.

Not sure I agree with other aspects of this comment and implications. Like, I think reducing things to a variable like “how good is it to generically empower this person/group” is pretty reasonable in the case of Anthropic leadership because in a lot of cases they’d have a huge amount of general open ended power, though a detailed model (taking into account what decisions you care about etc) would need to feed into this.