contact: jurkovich.nikola@gmail.com

Nikola Jurkovic

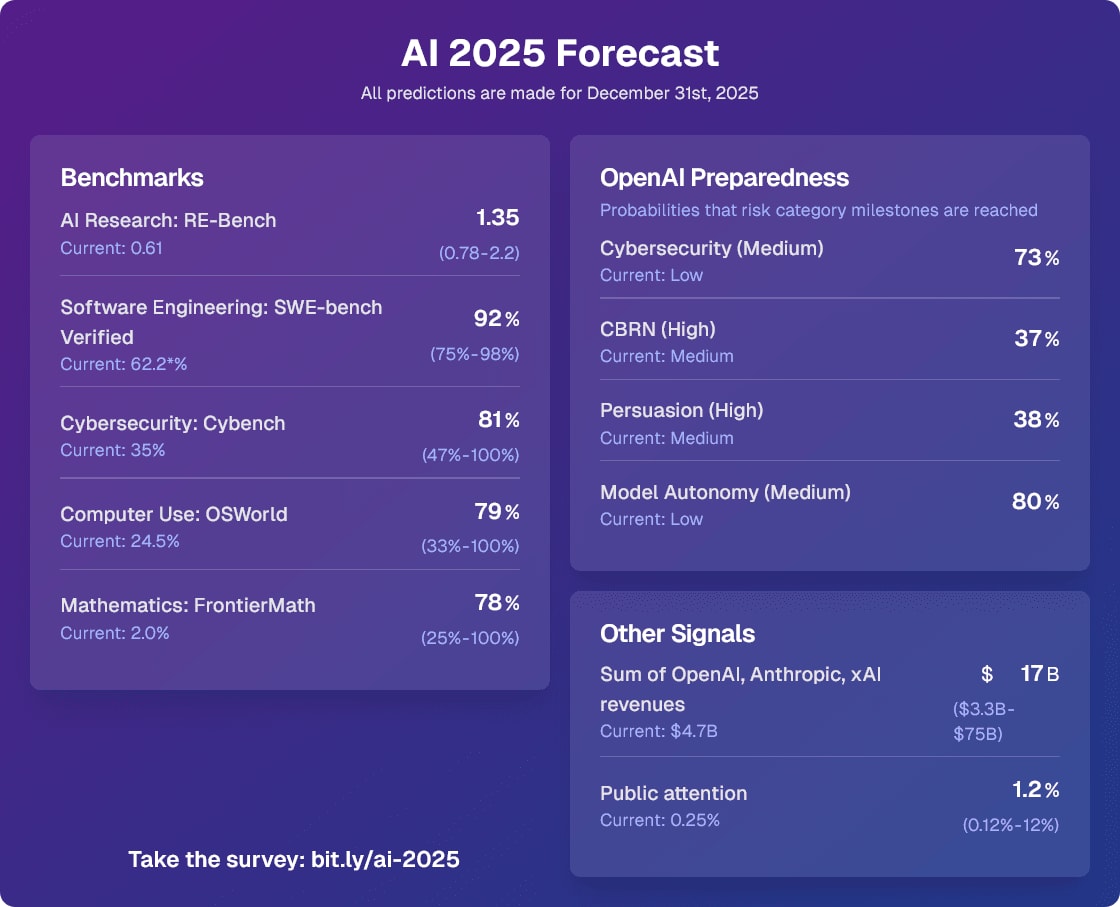

I will use this comment thread to keep track of notable updates to the forecasts I made for the 2025 AI Forecasting survey. As I said, my predictions coming true wouldn’t be strong evidence for 3 year timelines, but it would still be some evidence (especially RE-Bench and revenues).

The first update: On Jan 31st 2025, the Model Autonomy category hit Medium with the release of o3-mini. I predicted this would happen in 2025 with 80% probability.

02/25/2025: the Cybersecurity category hit Medium with the release of the Deep Research System Card. I predicted this would happen in 2025 with 73% probability. I’d now change CBRN (High) to 85% and Persuasion (High) to 70% given that two of the categories increased about 15% of the way into the year.
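As a quick check on the "about 15% of the way into the year" figure, here is a minimal sketch that computes how much of 2025 had elapsed by February 25. The function name `fraction_of_year_elapsed` is my own, introduced only for illustration.

```python
from datetime import date


def fraction_of_year_elapsed(d: date) -> float:
    """Return the share of d's calendar year that has passed before day d."""
    start = date(d.year, 1, 1)
    end = date(d.year + 1, 1, 1)
    return (d - start).days / (end - start).days


# Date of the Cybersecurity (Medium) update mentioned above.
print(round(fraction_of_year_elapsed(date(2025, 2, 25)), 3))  # 0.151
```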

DeepSeek R1 being #1 on Humanity’s Last Exam is not strong evidence that it’s the best model, because the questions were adversarially filtered against o1, Claude 3.5 Sonnet, Gemini 1.5 Pro, and GPT-4o. If they weren’t filtered against those models, I’d bet o1 would outperform R1.

To ensure question difficulty, we automatically check the accuracy of frontier LLMs on each question prior to submission. Our testing process uses multi-modal LLMs for text-and-image questions (GPT-4o, Gemini 1.5 Pro, Claude 3.5 Sonnet, o1) and adds two non-multi-modal models (o1-mini, o1-preview) for text-only questions. We use different submission criteria by question type: exact-match questions must stump all models, while multiple-choice questions must stump all but one model to account for potential lucky guesses.
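To make the filtering rule in that quote concrete, here is a rough sketch of how I read it. This is not the paper's actual code: the function names, the question-type strings, and the dictionary format are all my own assumptions, and only the model lists and the two acceptance criteria come from the quoted passage.

```python
from typing import List, Mapping

# Model lists as described in the quoted passage.
MULTIMODAL_MODELS = ["GPT-4o", "Gemini 1.5 Pro", "Claude 3.5 Sonnet", "o1"]
TEXT_ONLY_EXTRA_MODELS = ["o1-mini", "o1-preview"]


def models_for_question(has_image: bool) -> List[str]:
    """Hypothetical helper: which models a question is checked against."""
    if has_image:
        return list(MULTIMODAL_MODELS)
    return MULTIMODAL_MODELS + TEXT_ONLY_EXTRA_MODELS


def passes_difficulty_filter(question_type: str, model_correct: Mapping[str, bool]) -> bool:
    """Hypothetical filter: model_correct maps model name -> answered correctly."""
    num_correct = sum(model_correct.values())
    if question_type == "exact_match":
        # Exact-match questions must stump every model.
        return num_correct == 0
    if question_type == "multiple_choice":
        # Multiple-choice questions may be answered correctly by at most one
        # model, to allow for a lucky guess.
        return num_correct <= 1
    raise ValueError(f"unknown question type: {question_type}")
```

Under this reading, any question that survived the filter is by construction one that the listed models mostly got wrong, which is why their scores on the final benchmark are not directly comparable to the score of a model that was not filtered against.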

If I were writing the paper I would have added either a footnote or an additional column to Table 1 getting across that GPT-4o, o1, Gemini 1.5 Pro, and Claude 3.5 Sonnet were adversarially filtered against. Most people just see Table 1 so it seems important to get across.

I have edited the title in response to this comment

The methodology wasn’t super robust so I didn’t want to make it sound overconfident, but my best guess is that around 80% of METR employees have sub-2030 median timelines.

I encourage people to register their predictions for AI progress in the AI 2025 Forecasting Survey (https://bit.ly/ai-2025) before the end of the year. I’ve found this to be an extremely useful activity when I’ve done it in the past (some of the best-spent hours of this year for me).

Yes, resilience seems very neglected.

I think I’m at a similar probability to nuclear war but I think the scenarios where biological weapons are used are mostly past a point of no return for humanity. I’m at 15%, most of which is scenarios where the rest of the humans are hunted down by misaligned AI and can’t rebuild civilization. Nuclear weapons use would likely be mundane and for non AI-takeover reasons and would likely result in an eventual rebuilding of civilization.

The main reason I expect an AI to be more likely to use bioweapons than nuclear weapons in a full-scale takeover is that bioweapons would do much less damage to existing infrastructure, and would thus leave the AI a larger and more complex minimal seed of industrial capacity to recover from.

I’m interning there and I conducted a poll.

The median AGI timeline of more than half of METR employees is before the end of 2030.

(AGI is defined as 95% of fully remote jobs from 2023 being automatable.)

I think if the question is “what do I do with my altruistic budget,” then investing some of it to cash out later (with large returns) and donate much more is a valid option (as long as you have systems in place that actually make sure that happens). At small amounts (<$10M), I think the marginal negative effects on AGI timelines and similar factors are basically negligible compared to other factors.

Thanks for your comment. It prompted me to add a section on adaptability and resilience to the post.

I sadly don’t have well-developed takes here, but others have pointed out in the past that there are some funding opportunities that are systematically avoided by big funders, where small funders could make a large difference (e.g. the funding of LessWrong!). I expect more of these to pop up as time goes on.

Somewhat obviously, the burn rate of your altruistic budget should account for altruistic donation opportunities (possibly) disappearing post-ASI, but also account for the fact that investing and cashing it out later could also increase the size of the pot. (not financial advice)

(also, I have now edited the part of the post you quote to specify that I don’t just mean financial capital, I mean other forms of capital as well)

Time in bed

I’d now change the numbers to around 15% automation and 25% faster software progress once we reach 90% on Verified. I expect that to happen by end of May median (but I’m still uncertain about the data quality and upper performance limit).

(edited to change Aug to May on 12/20/2024)

I recently stopped using a sleep mask and blackout curtains and went from needing 9 hours of sleep to needing 7.5 hours of sleep without a noticeable drop in productivity. Consider experimenting with stuff like this.

Note that this is a very simplified version of a self-exfiltration process. It basically boils down to taking an already-working implementation of an LLM inference setup and copying it to another folder on the same computer with a bit of tinkering. This is easier than threat-model-relevant exfiltration scenarios which might involve a lot of guesswork, setting up efficient inference across many GPUs, and not tripping detection systems.

One weird detail I noticed is that in DeepSeek’s results, they claim GPT-4o’s pass@1 accuracy on MATH is 76.6%, but OpenAI claims it’s 60.3% in their o1 blog post. This is quite confusing as it’s a large difference that seems hard to explain with different training checkpoints of 4o.

You should say “timelines” instead of “your timelines”.

One thing I notice in AI safety career and strategy discussions is that there is a lot of epistemic helplessness in regard to AGI timelines. People often talk about “your timelines” instead of “timelines” when giving advice, even if they disagree strongly with the timelines. I think this habit causes people to ignore disagreements in unhelpful ways.

Here’s one such conversation:

Bob: Should I do X if my timelines are 10 years?

Alice (who has 4 year timelines): I think X makes sense if your timelines are longer than 6 years, so yes!

Alice will encourage Bob to do X despite the fact that Alice thinks timelines are shorter than 6 years! Alice is actively giving Bob bad advice by her own lights (by assuming timelines she doesn’t agree with). Alice should instead say “I think timelines are shorter than 6 years, so X doesn’t make sense. But if they were longer than 6 years it would make sense”.

In most discussions, there should be no such thing as “your timelines” or “my timelines”. That framing makes it harder to converge, and it encourages people to give each other advice that they don’t even think makes sense.

Note that I do think some plans make sense as bets for long timeline worlds, and that using medians somewhat oversimplifies timelines. My point still holds if you replace the medians with probability distributions.

I think this post would be easier to understand if you called the model what OpenAI is calling it: “o1”, not “GPT-4o1”.

Sam Altman apparently claims OpenAI doesn’t plan to do recursive self improvement

Nate Silver’s new book On the Edge contains interviews with Sam Altman. Here’s a quote from Chapter that stuck out to me (bold mine):

Yudkowsky worries that the takeoff will be faster than what humans will need to assess the situation and land the plane. We might eventually get the AIs to behave if given enough chances, he thinks, but early prototypes often fail, and Silicon Valley has an attitude of “move fast and break things.” If the thing that breaks is civilization, we won’t get a second try.

Footnote: This is particularly worrisome if AIs become self-improving, meaning you train an AI on how to make a better AI. Even Altman told me that this possibility is “really scary” and that OpenAI isn’t pursuing it.

I’m pretty confused about why this quote is in the book. OpenAI has never (to my knowledge) made public statements about not using AI to automate AI research, and my impression was that automating AI research is explicitly part of OpenAI’s plan. My best guess is that there was a misunderstanding in the conversation between Silver and Altman.

I looked a bit through OpenAI’s comms to find quotes about automating AI research, but I didn’t find many.

There’s this quote from page 11 of the Preparedness Framework:

If the model is able to conduct AI research fully autonomously, it could set off an intelligence explosion.

Footnote: By intelligence explosion, we mean a cycle in which the AI system improves itself, which makes the system more capable of more improvements, creating a runaway process of self-improvement. A concentrated burst of capability gains could outstrip our ability to anticipate and react to them.

In Planning for AGI and beyond, they say this:

AI that can accelerate science is a special case worth thinking about, and perhaps more impactful than everything else. It’s possible that AGI capable enough to accelerate its own progress could cause major changes to happen surprisingly quickly (and even if the transition starts slowly, we expect it to happen pretty quickly in the final stages). We think a slower takeoff is easier to make safe, and coordination among AGI efforts to slow down at critical junctures will likely be important (even in a world where we don’t need to do this to solve technical alignment problems, slowing down may be important to give society enough time to adapt).

There are some quotes from Sam Altman’s personal blog posts from 2015 (bold mine):

It’s very hard to know how close we are to machine intelligence surpassing human intelligence. Progression of machine intelligence is a double exponential function; human-written programs and computing power are getting better at an exponential rate, and self-learning/self-improving software will improve itself at an exponential rate. Development progress may look relatively slow and then all of a sudden go vertical—things could get out of control very quickly (it also may be more gradual and we may barely perceive it happening).

As mentioned earlier, it is probably still somewhat far away, especially in its ability to build killer robots with no help at all from humans. But recursive self-improvement is a powerful force, and so it’s difficult to have strong opinions about machine intelligence being ten or one hundred years away.

Another 2015 blog post (bold mine):

Given how disastrous a bug could be, [regulation should] require development safeguards to reduce the risk of the accident case. For example, beyond a certain checkpoint, we could require development happen only on airgapped computers, require that self-improving software require human intervention to move forward on each iteration, require that certain parts of the software be subject to third-party code reviews, etc. I’m not very optimistic than any of this will work for anything except accidental errors—humans will always be the weak link in the strategy (see the AI-in-a-box thought experiments). But it at least feels worth trying.

Note that for HLE, most of the difference in performance might be explained by Deep Research having access to tools while other models are forced to reply instantly with no tool use.