Utility Maximization = Description Length Minimization

There’s a useful intuitive notion of “optimization” as pushing the world into a small set of states, starting from any of a large number of states. Visually:

Yudkowsky and Flint both have notable formalizations of this “optimization as compression” idea.

This post presents a formalization of optimization-as-compression grounded in information theory. Specifically: to “optimize” a system is to reduce the number of bits required to represent the system state using a particular encoding. In other words, “optimizing” a system means making it compressible (in the information-theoretic sense) by a particular model.

This formalization turns out to be equivalent to expected utility maximization, and allows us to interpret any expected utility maximizer as “trying to make the world look like a particular model”.

Conceptual Example: Building A House

Before diving into the formalism, we’ll walk through a conceptual example, taken directly from Flint’s Ground of Optimization: building a house. Here’s Flint’s diagram:

The key idea here is that there’s a wide variety of initial states (piles of lumber, etc) which all end up in the same target configuration set (finished house). The “perturbation” indicates that the initial state could change to some other state—e.g. someone could move all the lumber ten feet to the left—and we’d still end up with the house.

In terms of information-theoretic compression: we could imagine a model which says there is probably a house. Efficiently encoding samples from this model will mean using shorter bit-strings for world-states with a house, and longer bit-strings for world-states without a house. World-states with piles of lumber will therefore generally require more bits than world-states with a house. By turning the piles of lumber into a house, we reduce the number of bits required to represent the world-state using this particular encoding/model.

If that seems kind of trivial and obvious, then you’ve probably understood the idea; later sections will talk about how it ties into other things. If not, then the next section is probably for you.

Background Concepts From Information Theory

The basic motivating idea of information theory is that we can represent information using fewer bits, on average, if we use shorter representations for states which occur more often. For instance, Morse code uses only a single bit (“.”) to represent the letter “e”, but four bits (“- - . -”) to represent “q”. This creates a strong connection between probabilistic models/distributions and optimal codes: a code which requires minimal average bits for one distribution (e.g. with lots of e’s and few q’s) will not be optimal for another distribution (e.g. with few e’s and lots of q’s).

For any random variable $X$ generated by a probabilistic model $M$, we can compute the minimum average number of bits required to represent $X$. This is Shannon’s famous entropy formula:

$$H(X \mid M) = -\sum_X P[X \mid M] \log P[X \mid M]$$

Assuming we’re using an optimal encoding for model $M$, the number of bits used to encode a particular value $x$ is $-\log P[X = x \mid M]$. (Note that this is sometimes not an integer! Today we have algorithms which encode many samples at once, potentially even from different models/distributions, to achieve asymptotically minimal bit-usage. The “rounding error” only happens once for the whole collection of samples, so as the number of samples grows, the rounding error per sample goes to zero.)
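As a rough illustration of the two quantities above, here is a minimal Python sketch. The four-symbol model and its probabilities are made up for the example; they are chosen as powers of 1/2 so that the ideal code lengths happen to be whole numbers of bits.

```python
import math

def entropy_bits(p):
    """Shannon entropy, in bits, of a distribution given as {value: probability}."""
    return -sum(q * math.log2(q) for q in p.values() if q > 0)

def code_length_bits(p, x):
    """Ideal code length, in bits, for value x under a code optimal for p."""
    return -math.log2(p[x])

# Hypothetical model: common symbols get short codes, rare ones get long codes.
model = {"e": 0.5, "t": 0.25, "a": 0.125, "q": 0.125}

lengths = {x: code_length_bits(model, x) for x in model}
# Averaging the per-symbol code lengths under the model recovers the entropy.
average = sum(model[x] * lengths[x] for x in model)

print(lengths)
print(average, entropy_bits(model))
```

The average code length and the entropy agree because the code is optimal for the same model that generates the samples.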

Of course, we could be wrong about the distribution: we could use a code optimized for a model $M_2$ which is different from the “true” model $M_1$. In this case, the average number of bits used will be

$$-\sum_X P[X \mid M_1] \log P[X \mid M_2] = E[-\log P[X \mid M_2] \mid M_1]$$

In this post, we’ll use a “wrong” model $M_2$ intentionally, not because we believe it will yield short encodings, but because we want to push the world into states with short $M_2$-encodings. The model $M_2$ serves a role analogous to a utility function. Indeed, we’ll see later on that every model $M_2$ is equivalent to a utility function, and vice-versa.
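To make the “wrong model on purpose” idea concrete, here is a small Python sketch that echoes the house example. All the names and probabilities are hypothetical: the encoding model says “there is probably a house”, and we compare the expected bits for a world that mostly contains lumber against a world that mostly contains a house.

```python
import math

def expected_bits(true_model, code_model):
    """Average bits per sample when samples follow true_model but the code is optimal for code_model."""
    return -sum(p * math.log2(code_model[x]) for x, p in true_model.items() if p > 0)

# Hypothetical two-state world.
m2 = {"house": 0.875, "lumber": 0.125}       # encoding model: "there is probably a house"
m1_before = {"house": 0.25, "lumber": 0.75}  # world distribution before building
m1_after = {"house": 0.9, "lumber": 0.1}     # world distribution after building

bits_before = expected_bits(m1_before, m2)
bits_after = expected_bits(m1_after, m2)
bits_matched = expected_bits(m1_before, m1_before)  # code matched to the true model

print(f"before: {bits_before:.3f} bits, after: {bits_after:.3f} bits")
```

Building the house lowers the expected code length under the house-favoring encoding, even though that encoding was never a good description of the initial world.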

Formal Statement

Here are the variables involved in “optimization”:

- World-state random variables $X$
- Parameters $\theta$ which will be optimized
- Probabilistic world-model $M_1(\theta)$ representing the distribution of $X$
- Probabilistic world-model $M_2$ representing the encoding in which we wish to make $X$ more compressible

An “optimizer” takes in some parameter-values $\theta$, and returns new parameter-values $\theta^*$ such that

$$-\sum_X P[X \mid M_1(\theta^*)] \log P[X \mid M_2] \le -\sum_X P[X \mid M_1(\theta)] \log P[X \mid M_2]$$

… with equality if-and-only-if $\theta$ already achieves the smallest possible value. In English: we choose $\theta^*$ to reduce the average number of bits required to encode a sample from $M_1(\theta^*)$, using a code optimal for $M_2$. This is essentially just our formula from the previous section for the number of bits used to encode a sample from $M_1$ using a code optimal for $M_2$.

Other than the information-theory parts, the main thing to emphasize is that we’re mapping one parameter-value $\theta$ to a “more optimal” parameter-value $\theta^*$. This should work for many different “initial” $\theta$-values, implying a kind of robustness to changes in $\theta$. (This is roughly the same concept which Flint captured by talking about “perturbations” to the system-state.) In the context of iterative optimizers, our definition corresponds to one step of optimization; we could of course feed $\theta^*$ back into the optimizer and repeat. We could even do this without having any distinguished “optimizer” subsystem: e.g. we might just have some dynamical system in which $\theta$ is a function of time, and successive values of $\theta$ satisfy the inequality condition.
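Here is one way the definition could be sketched in Python, under heavy simplifying assumptions: the parameter is a single number (the probability that a house gets built), the candidate parameter-values form a small finite set, and the optimizer just picks the candidate with the fewest expected bits. The names `M1`, `M2`, `CANDIDATES` and `optimize` are all illustrative.

```python
import math

def expected_bits(true_model, code_model):
    """Average bits per sample from true_model, using a code optimal for code_model."""
    return -sum(p * math.log2(code_model[x]) for x, p in true_model.items() if p > 0)

# Hypothetical encoding model: "there is probably a house".
M2 = {"house": 0.875, "lumber": 0.125}

def M1(theta):
    """Hypothetical world-model: theta is the probability that the house gets built."""
    return {"house": theta, "lumber": 1.0 - theta}

CANDIDATES = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical finite set of parameter-values

def optimize(theta):
    """Return a parameter-value whose expected bits are no greater than theta's."""
    best = min(CANDIDATES, key=lambda t: expected_bits(M1(t), M2))
    if expected_bits(M1(best), M2) < expected_bits(M1(theta), M2):
        return best
    return theta  # theta is already at least as good as every candidate

for start in [0.0, 0.3, 0.75, 1.0]:
    print(start, "->", optimize(start))
```

Every starting value is mapped to the same endpoint, which is the robustness to the initial parameter-value described above. Note that in this toy setup the optimum puts all probability on the house rather than matching the 0.875 in the encoding model, because the expected bits are linear in the world distribution.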

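Finally, note that our model $M_1(\theta)$ is a function of $\theta$. This form is general enough to encompass all the usual decision theories. For instance, under evidential decision theory (EDT), $M_1(\theta)$ would be some base model $M$ conditioned on the data $\theta$. Under causal decision theory (CDT), $M_1(\theta)$ would instead be a causal intervention on a base model $M$, i.e. $M_1(\theta) = do(M, \theta)$.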

Equivalence to Expected Utility Optimization

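Obviously our expression $-\sum_X P[X \mid M_1] \log P[X \mid M_2]$ can be expressed as an expected utility: just set $u(X) = \log P[X \mid M_2]$, so that minimizing expected bits is the same as maximizing $E[u(X) \mid M_1]$. The slightly more interesting claim is that we can always go the other way: for any utility function $u(X)$, there is a corresponding model $M_2$, such that maximizing expected utility $u(X)$ is equivalent to minimizing expected bits to encode $X$ using $M_2$.

The main trick here is that we can always add a constant to $u(X)$, or multiply $u(X)$ by a positive constant, and it will still “be the same utility”, i.e. an agent with the new utility will always make the same choices as the old. So, we set (using natural logs, which only rescales the bit count by a constant factor)

$$\alpha u(X) + \beta = \ln P[X \mid M_2] \implies P[X \mid M_2] = e^{\alpha u(X) + \beta}$$

… and look for $\alpha > 0$ and $\beta$ which give us a valid probability distribution (i.e. all probabilities are nonnegative and sum to 1).

Since everything is in an exponent, all our probabilities will be nonnegative for any $\alpha, \beta$, so that constraint is trivially satisfied. To make the distribution sum to one, we simply set $\beta = -\ln \sum_X e^{\alpha u(X)}$ (provided the sum converges, e.g. when there are finitely many world-states). So, not only can we find a model $M_2$ for any $u(X)$, we actually find a whole family of them, one for each $\alpha > 0$.

(This also reveals a degree of freedom in our original definition: we can always create a new model $M_2'$ with $P[X \mid M_2'] = \frac{1}{Z} P[X \mid M_2]^\alpha$, where $\alpha > 0$ and $Z$ is the normalizing constant, without changing the behavior.)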
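The construction can be sketched in a few lines of Python, assuming a finite set of world-states so that the normalizing sum is well defined. The utilities, the scale factor `alpha`, and the two candidate world distributions are all hypothetical; the point is only that ranking options by expected utility and ranking them by expected code length give the same answer.

```python
import math

def model_from_utility(u, alpha=1.0):
    """Build P[X|M2] = exp(alpha*u(X) + beta), with beta chosen so probabilities sum to 1."""
    beta = -math.log(sum(math.exp(alpha * ux) for ux in u.values()))
    return {x: math.exp(alpha * ux + beta) for x, ux in u.items()}

def expected_utility(dist, u):
    return sum(p * u[x] for x, p in dist.items())

def expected_nats(dist, model):
    """Expected code length in nats (natural log), matching the exponential form above."""
    return -sum(p * math.log(model[x]) for x, p in dist.items() if p > 0)

u = {"house": 3.0, "shed": 1.0, "lumber": 0.0}  # hypothetical utilities
M2 = model_from_utility(u, alpha=2.0)

# Two hypothetical world distributions an agent might choose between.
option_a = {"house": 0.6, "shed": 0.1, "lumber": 0.3}
option_b = {"house": 0.3, "shed": 0.6, "lumber": 0.1}

for name, option in [("a", option_a), ("b", option_b)]:
    print(name, expected_utility(option, u), expected_nats(option, M2))
```

The option with higher expected utility is the one with the shorter expected encoding, since the expected code length equals minus (alpha times the expected utility, plus beta).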

So What Does This Buy Us?

If this formulation is equivalent to expected utility maximization, why view it this way?

Intuitively, this view gives more semantics to our “utility functions”. They have built-in “meanings”; they’re not just preference orderings.

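Mathematically, the immediately obvious step for anyone with an information theory background is to write:

$$-\sum_X P[X \mid M_1] \log P[X \mid M_2] = -\sum_X P[X \mid M_1] \log P[X \mid M_1] + \sum_X P[X \mid M_1] \log \frac{P[X \mid M_1]}{P[X \mid M_2]} = H(X \mid M_1) + D_{KL}(P[X \mid M_1] \,\|\, P[X \mid M_2])$$

The expected number of bits required to encode $X$ using $M_2$ is the entropy of $X$ (under $M_1$) plus the Kullback-Leibler divergence of (distribution of $X$ under model $M_1$) from (distribution of $X$ under model $M_2$). Both of those terms are nonnegative. The first measures “how noisy” $X$ is, the second measures “how close” the distributions are under our two models.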

Intuitively, this math says that we can decompose the objective into two pieces:

- Make $X$ more predictable
- Make the distribution of $X$ “close to” the distribution $P[X \mid M_2]$, with closeness measured by KL-divergence

Combined with the previous section: we can take any expected utility maximization problem, and decompose it into an entropy minimization term plus a “make-the-world-look-like-this-specific-model” term.
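The decomposition is easy to check numerically. The following Python sketch uses two made-up three-state distributions and confirms that the expected bits under the “wrong” code equal the entropy of the true distribution plus the KL-divergence from the true distribution to the encoding model.

```python
import math

def entropy(p):
    """Shannon entropy of p, in bits."""
    return -sum(pi * math.log2(pi) for pi in p.values() if pi > 0)

def kl_divergence(p, q):
    """D_KL(p || q), in bits."""
    return sum(pi * math.log2(pi / q[x]) for x, pi in p.items() if pi > 0)

def cross_entropy(p, q):
    """Expected bits to encode samples from p with a code optimal for q."""
    return -sum(pi * math.log2(q[x]) for x, pi in p.items() if pi > 0)

# Hypothetical distributions over three world-states.
m1 = {"house": 0.25, "shed": 0.25, "lumber": 0.5}  # actual world distribution
m2 = {"house": 0.7, "shed": 0.2, "lumber": 0.1}    # encoding model

total = cross_entropy(m1, m2)
parts = entropy(m1) + kl_divergence(m1, m2)
print(total, parts)
```

The KL term vanishes only when the two distributions coincide, in which case the expected bits reduce to the entropy alone.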

This becomes especially interesting in situations where the entropy of $X$ cannot be reduced, e.g. thermodynamics. If the entropy is fixed, then only the KL-divergence term remains. In this case, we can directly interpret the optimization problem as “make the world-state distribution look like $M_2$”. If we started from an expected utility optimization problem, then we derive a model $M_2$ such that optimizing expected utility is equivalent to making the world look as much as possible like $M_2$.

In fact, even when $H(X \mid M_1)$ is not fixed, we can build equivalent models $M_1'$, $M_2'$ for which it is fixed, by adding new variables to $X$. Suppose, for example, that we can choose between flipping a coin and rolling a die to determine $X_0$. We can change the model so that both the coin flip and the die roll always happen, and we include their outcomes in $X$. We then choose whether to set $X_0$ equal to the coin flip result or the die roll result, but in either case the entropy of $X$ is the same, since both are included. $M_2'$ simply ignores all the new components added to $X$ (i.e. it implicitly has a uniform distribution on the new components).
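A quick Python sketch of the coin-versus-die trick, assuming a fair coin and a fair six-sided die. In the original setup the entropy of the chosen variable depends on which source we pick; in the extended setup, where both outcomes are always recorded alongside the chosen variable, the total entropy is the same either way.

```python
import math
from collections import defaultdict
from itertools import product

def entropy(p):
    """Shannon entropy of p, in bits."""
    return -sum(q * math.log2(q) for q in p.values() if q > 0)

def original_world(choice):
    """Distribution of the chosen variable alone, as in the un-extended model."""
    if choice == "coin":
        return {"H": 0.5, "T": 0.5}
    return {face: 1 / 6 for face in range(1, 7)}

def extended_world(choice):
    """Joint distribution of (coin, die, x0), where x0 copies whichever outcome was chosen."""
    dist = defaultdict(float)
    for coin, die in product(["H", "T"], range(1, 7)):
        x0 = coin if choice == "coin" else die
        dist[(coin, die, x0)] += (1 / 2) * (1 / 6)
    return dict(dist)

for choice in ["coin", "die"]:
    print(choice, entropy(original_world(choice)), entropy(extended_world(choice)))
```

Because the chosen variable is a deterministic function of the coin and die outcomes, adding it to the tuple contributes no extra entropy, so the choice only affects the KL term.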

So, starting from an expected utility maximization problem, we can transform to an equivalent minimum coded bits problem, and from there to an equivalent minimum KL-divergence problem. We can then interpret the optimization as “choose $\theta$ to make $M_1(\theta)$ as close as possible to $M_2$”, with closeness measured by KL-divergence.

What I Imagine This Might Be Useful For

In general, interpretations of probability grounded in information theory are much more solid than interpretations grounded in coherence theorems. However, information-theoretic groundings only talk about probability, not about “goals” or “agents” or anything utility-like. Here, we’ve transformed expected utility maximization into something explicitly information-theoretic and conceptually natural. This seems like a potentially-promising step toward better foundations of agency. I imagine there are probably purely information-theoretic “coherence theorems” to be found.

Another natural direction to take this in is thermodynamic connections, e.g. combining it with a generalized heat engine. I wouldn’t be surprised if this also tied in with information-theoretic “coherence theorems”—in particular, I imagine that negentropy could serve as a universal “resource”, replacing the “dollars” typically used as a measuring stick in coherence theorems.

Overall, the whole formulation smells like it could provide foundations much more amenable to embedded agency.

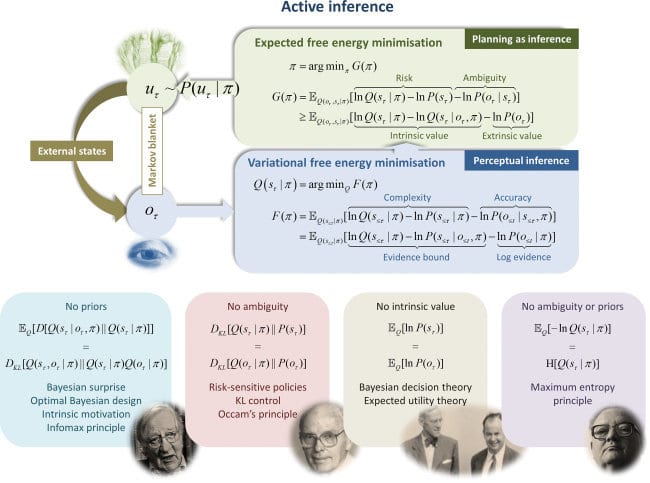

Finally, there’s probably some nice connection to predictive processing. In all likelihood, Karl Friston has already said all this, but it has yet to be distilled and disseminated to the rest of us.


Summary

I summarize this post in a slightly reverse order. In AI alignment, one core question is how to think about utility maximization. What are agents doing that maximize utility? How does embeddedness play into this? What can we prove about such agents? Which types of systems become maximizers of utility in the first place?

This article reformulates expected utility maximization in equivalent terms in the hopes that the new formulation makes answering such questions easier. Concretely, a utility function u is given, and the goal of a u-maximizer is to change the distribution M1 over world states X in such a way that E_M1[u(X)] is maximized. Now, assuming that the world is finite (an assumption John doesn’t mention but is discussed in the comments), one can find a>0, b such that a*u(X) + b = log P(X | M2) for some distribution/model of the world M2. Roughly, M2 assigns high probability to states X that have high utility u(X).

Then the equivalent goal of the u-maximizer becomes changing M1 such that E_M1[- log P(X | M2)] becomes minimal, which means minimizing H(X | M1) + D_KL(M1 || M2). The entropy H(X | M1) cannot be influenced in our world (due to thermodynamics) or can, by a mathematical trick, be assumed to be fixed, meaning that the problem reduces to just minimizing the KL-divergence D_KL(M1 || M2). Another way of saying this is that we want to minimize the average number of bits required to describe the world state X when using the Shannon-Fano code of M2. A final tangential claim is that for powerful agents/optimization processes, the initial M1 with which the world starts shouldn’t matter so much for the achieved end result of this process.

John then speculates on how this reformulation might be useful, e.g. for selection theorems.

Opinion

This is definitely thought-provoking.

What I find interesting about this formulation is that it seems a bit like “inverse generative modeling”: usually in generative modeling in machine learning, we start out with a “true distribution” M1’ of the world and try to “match” a model distribution M2’ to it. This can then be done by maximizing average log P(X | M2’) for X that are samples from M1’, i.e. by performing maximum likelihood. So in some sense, a “utility” is maximized there as well.

But in John’s post, the situation is reversed: the agent has a utility function corresponding to a distribution M2 that weights up desired world states, and the agent tries to match the real-world distribution M1 to that.

If an agent is now both engaging in generative modeling (to build its world model) and in utility maximization, then it seems like the agent could also collapse both objectives into one: start out with the “wrong” prediction by already assuming the desired world state M2 and then get closer to predicting correctly by changing the real world. Noob question: is this what the predictive processing people are talking about? I’m wondering this since when I heard people saying things like “all humans do is just predicting the world”, I never understood why humans wouldn’t then just sit in a dark room without anything going on, which is a highly predictable world-state. The answer might be that they start out predicting a desirable world, and their prediction algorithm is somehow weak and only manages to predict correctly by “just” changing the world. I’m not sure if I buy this.

One thing I didn’t fully understand in the post itself is why the entropy under M1 can always be assumed to be constant by a mathematical trick, though another comment explored this in more detail (a comment I didn’t read in full).

Minor: Two minus signs are missing in places, and I think the order of the distributions in the KL term is wrong.