This is a snapshot of a new page on the AI Impacts Wiki.

We’ve made a list of arguments[1] that AI poses an existential risk to humanity. We’d love to hear how you feel about them in the comments and polls.

Competent non-aligned agents

Summary:

Humans will build AI systems that are ‘agents’, i.e. they will autonomously pursue goals

Humans won’t figure out how to make systems with goals that are compatible with human welfare and realizing human values

Such systems will be built or selected to be highly competent, and so gain the power to achieve their goals

Thus the future will be primarily controlled by AIs, who will direct it in ways that are at odds with long-run human welfare or the realization of human values

Selected counterarguments:

It is unclear that AI will tend to have goals that are bad for humans

There are many forms of power. It is unclear that a competence advantage will ultimately trump all others in time

This argument also appears to apply to human groups such as corporations, so we need an explanation of why those are not an existential risk

People who have favorably discussed[2] this argument (specific quotes here): Paul Christiano (2021), Ajeya Cotra (2023), Eliezer Yudkowsky (2024), Nick Bostrom (2014[3]).

See also: Full wiki page on the competent non-aligned agents argument

Second species argument

Summary:

Human dominance over other animal species is primarily due to humans having superior cognitive and coordination abilities

Therefore if another ‘species’ appears with abilities superior to those of humans, that species will become dominant over humans in the same way

AI will essentially be a ‘species’ with superior abilities to humans

Therefore AI will dominate humans

Selected counterarguments:

Human dominance over other species is plausibly not due to the cognitive abilities of individual humans, but rather because of human ability to communicate and store information through culture and artifacts

Intelligence in animals doesn’t appear to generally relate to dominance. For instance, elephants are much more intelligent than beetles, and it is not clear that elephants have dominated beetles

Differences in capabilities don’t necessarily lead to extinction. In the modern world, more powerful countries arguably control less powerful countries, but they do not wipe them out and most colonized countries have eventually gained independence

People who have favorably discussed this argument (specific quotes here): Joe Carlsmith (2024), Richard Ngo (2020), Stuart Russell (2020[4]), Nick Bostrom (2015).

See also: Full wiki page on the second species argument

Loss of control via inferiority

Summary:

AI systems will become much more competent than humans at decision-making

Thus most decisions will probably be allocated to AI systems

If AI systems make most decisions, humans will lose control of the future

If humans have no control of the future, the future will probably be bad for humans

Selected counterarguments:

Humans do not generally seem to become disempowered by possession of software that is far superior to them, even if it makes many ‘decisions’ in the process of carrying out their will

In the same way that humans avoid being overpowered by companies, even though companies are more competent than individual humans, humans can track AI trustworthiness and have AI systems compete for them as users. This might substantially mitigate untrustworthy AI behavior

People who have favorably discussed this argument (specific quotes here): Paul Christiano (2014), Ajeya Cotra (2023), Richard Ngo (2024).

See also: Full wiki page on loss of control via inferiority

Loss of control via speed

Summary:

Advances in AI will produce very rapid changes, in available AI technology, other technologies, and society

Faster changes reduce the ability for humans to exert meaningful control over events, because they need time to make non-random choices

The pace of relevant events could become so fast as to allow for negligible relevant human choice

If humans are not ongoingly involved in choosing the future, the future is likely to be bad by human lights

Selected counterarguments:

The pace at which humans can participate is not fixed. AI technologies will likely speed up processes for human participation.

It is not clear that advances in AI will produce very rapid changes.

People who have favorably discussed this argument (specific quotes here): Joe Carlsmith (2021).

See also: Full wiki page on loss of control via speed

Human non-alignment

Summary:

People who broadly agree on good outcomes within the current world may, given much more power, choose outcomes that others would consider catastrophic

AI may empower some humans or human groups to bring about futures closer to what they would choose

From 1, that may be catastrophic according to the values of most other humans

Selected counterarguments:

Human values might be reasonably similar (possibly after extensive reflection)

This argument applies to anything that empowers humans. So it fails to show that AI is unusually dangerous among desirable technologies and efforts

People who have favorably discussed this argument (specific quotes here): Joe Carlsmith (2024), Katja Grace (2022), Scott Alexander (2018).

See also: Full wiki page on the human non-alignment argument

Catastrophic tools

Summary:

There appear to be non-AI technologies that would pose a risk to humanity if developed

AI will markedly increase the speed of development of harmful non-AI technologies

AI will markedly increase the breadth of access to harmful non-AI technologies

Therefore AI development poses an existential risk to humanity

Selected counterarguments:

It is not clear that developing a potentially catastrophic technology makes its deployment highly likely

New technologies that are sufficiently catastrophic to pose an extinction risk may not be feasible soon, even with relatively advanced AI

People who have favorably discussed this argument (specific quotes here): Dario Amodei (2023), Holden Karnofsky (2016), Yoshua Bengio (2024).

See also: Full wiki page on the catastrophic tools argument

Powerful black boxes

Summary:

So far, humans have developed technology largely through understanding relevant mechanisms

AI systems developed in 2024 are created via repeatedly modifying random systems in the direction of desired behaviors, rather than being manually built, so the mechanisms the systems themselves ultimately use are not understood by human developers

Systems whose mechanisms are not understood are more likely to produce undesired consequences than well-understood systems

If such systems are powerful, then the scale of undesired consequences may be catastrophic

Selected counterarguments:

It is not clear that developing technology without understanding mechanisms is so rare. We have historically incorporated many biological products into technology, and improved them, without deep understanding of all involved mechanisms

Even if this makes AI more likely to be dangerous, that doesn’t mean the harms are likely to be large enough to threaten humanity

See also: Full wiki page on the powerful black boxes argument

Multi-agent dynamics

Summary:

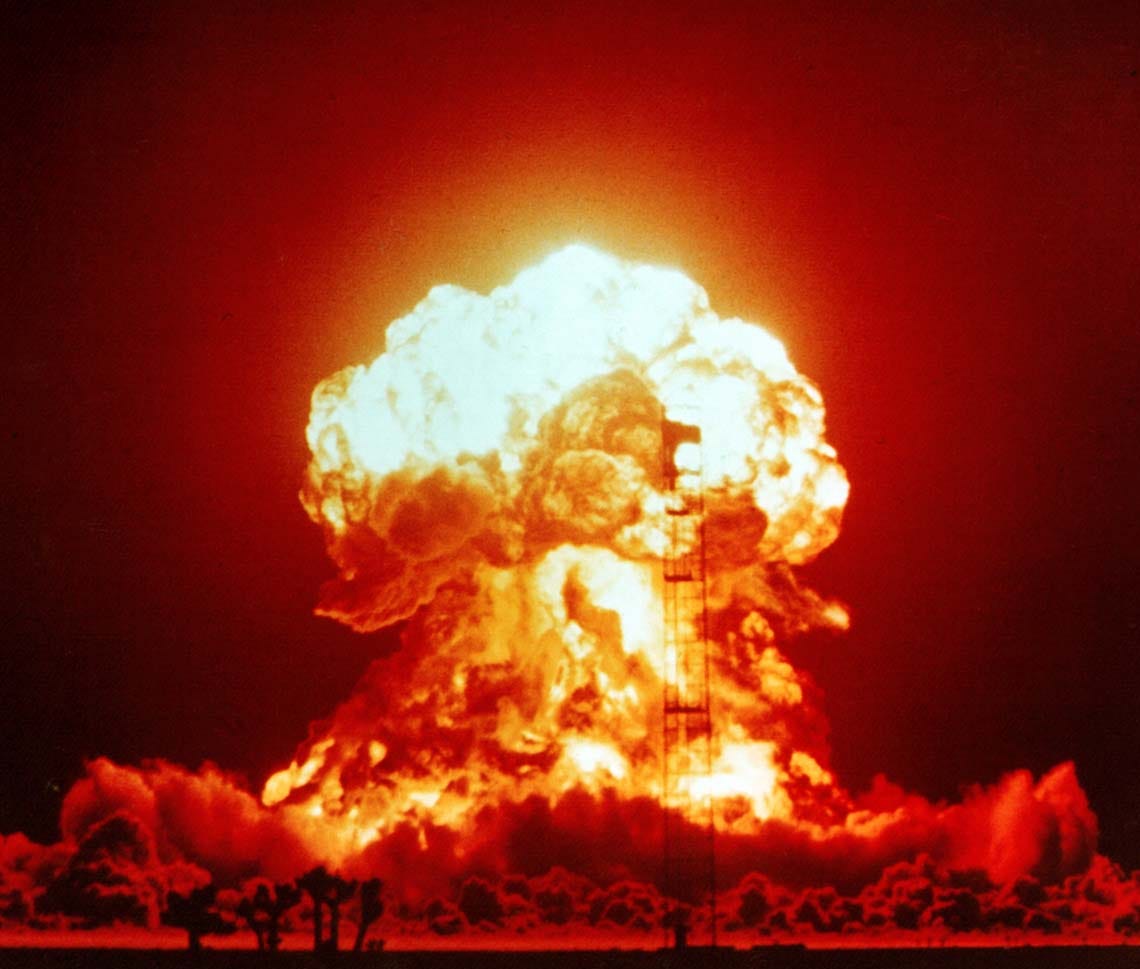

Competition can produce outcomes undesirable to all parties, through selection pressure for the success of any behavior that survives well, or through high stakes situations where well-meaning actors’ best strategies are risky to all (as with nuclear weapons in the 20th Century)

AI will increase the intensity of relevant competitions

Selected counterarguments:

It’s not clear what direction AI will have on the large number of competitive situations in the world

People who have favorably discussed this argument (specific quotes here): Robin Hanson (2001)

See also: Full wiki page on the multi-agent dynamics argument

Large impacts

Summary:

AI development will have very large impacts, relative to the scale of human society

Large impacts generally raise the chance of large risks

Selected counterarguments:

That AI will have large impacts is a vague claim, so it is hard to tell if it is relevantly true. For instance, ‘AI’ is a large bundle of technologies, so it might be expected to have large impacts. Many other large bundles of things will have ‘large’ impacts, for instance the worldwide continued production of electricity, relative to its ceasing. However we do not consider electricity producers to pose an existential risk for this reason

Minor changes frequently have large impacts on the world according to (e.g. the butterfly effect). By this reasoning, perhaps we should never leave the house

People who have favorably discussed this argument (specific quotes here): Richard Ngo (2019)

See also: Full wiki page on the large impacts argument

Expert opinion

Summary:

The people best placed to judge the extent of existential risk from AI are AI researchers, forecasting experts, experts on AI risk, relevant social scientists, and some others

Median members of these groups frequently put substantial credence (e.g. 0.4% to 5%) on human extinction or similar disempowerment from AI

Selected counterarguments:

Most of these groups do not have demonstrated skill at forecasting, and to our knowledge none have demonstrated skill at forecasting speculative events more than 5 years into the future

This is a snapshot of an AI Impacts wiki page. For an up to date version, see there.

- ^

Each ‘argument’ here is intended to be a different line of reasoning, however they are often not pointing to independent scenarios or using independent evidence. Some arguments attempt to reason about the same causal pathway to the same catastrophic scenarios, but relying on different concepts. Furthermore, ‘line of reasoning’ is a vague construct, and different people may consider different arguments here to be equivalent, for instance depending on what other assumptions they make or the relationship between their understanding of concepts.

- ^

Nathan Young puts 80% that at the time of the quote the individual would have endorsed the respective argument. They may endorse it whilst considering another argument stronger or more complete.

- ^

Superintelligence, Chapter 8

- ^

Human Compatible: Artificial Intelligence and the Problem of Control

Vote on the arguments here! Copying the structure from the substack polling, here are the four options (using similar reacts).

The question each time is: do you find this argument compelling?

1. Argument from competent non-aligned agents

2. Second species argument

3. Loss of control through inferiority argument

4. Loss of control through speed argument

5. Human non-alignment argument

6. Catastrophic tools argument

7. Powerful black boxes argument

8. Argument from multi-agent dynamics

9. Argument from large impacts

10. Argument from expert opinion

I really wish that I could respond more quantitatively to these. IMO all these arguments are cause for concern, but of substantially different scale.

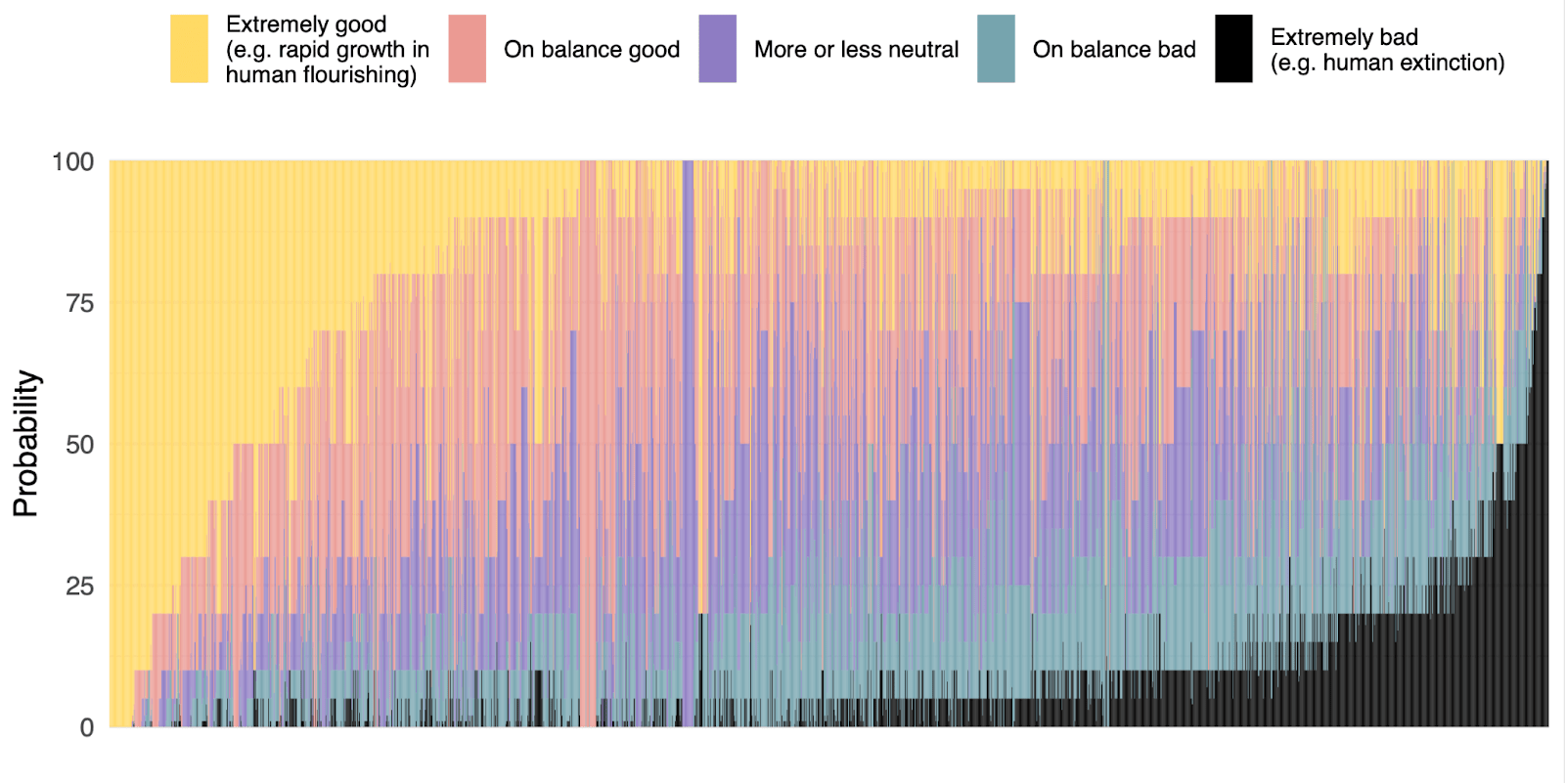

Perhaps something like this?

At the very least it would need to be on a logistic scale (i.e. in odds form). If something has 2:1 odds, then if I am at 50% then that’s a 25% probability mass movement, but when I am at 99% only a ~1% probability movement.

But my guess is even odds are too hard to assign to stuff like this.

After chatting with our voices, I believe you misunderstood me as asking “How many points of probability does this argument move you?” whereas I meant “If you had not seen any of the other arguments, and you were presented with this argument, what would your absolute probability of an existential catastrophe from AI be?”

I don’t stand by this as the ultimate bestest survey question of all time, and would welcome an improvement.

I’ll say I think the alternative of “What likelihood ratio does this argument provide?” (e.g. 2x, 10x, etc) isn’t great because I believe the likelihood ratios of all the arguments are not conditionally independent.

You have my and @katjagrace’s permission to test out other poll formats if you wish.

I’m concerned about AI, but none of these arguments are a very good explanation of why, so my vote is for “you’re not done finding arguments”.

Oh interesting. What’s a reason you’re concerned that isn’t addressed in the above 10?

My view is that human development during childhood implies AI self-improvement is possible. That means “threshold” problems.

One concern about AI is that it would be super-persuasive, and thus able to take over institutions. We already have people who believe something dumb because “GPT-4 said it so it must be true”. We have some powerful people enthusiastic about giving power to AI, more interested in that than giving power to smart humans. We have some examples of LLMs being more persuasive to average people than humans are. And we have many historical examples of notable high-verbal-IQ people doing that.

We have this recent progress that wasn’t predicted very well in advance, indicating uncharted waters.

instrumental convergence arguments

[redacted technical thing]

“This argument also appears to apply to human groups such as corporations, so we need an explanation of why those are not an existential risk”

I don’t think this is necessary. It seems pretty obvious that (some) corporations could pose an existential risk if left unchecked.

Edit: And depending on your political leanings and concern over the climate, you might agree that they already are posing an existential risk.

What do you think P(doom from corporations) is. I’ve never heard much worry about current non-AI corps?

I’m not confident that I could give a meaningful number with any degree of confidence. I lack expertise in corporate governance, bio-safety and climate forecasting. Additionally, for the condition to be satisfied that corporations are left “unchecked” there would need to be a dramatic Western political shift that makes speculating extremely difficult.

I will outline my intuition for why (very large, global) human corporations could pose an existential risk (conditional on the existential risk from AI being negligible and global governance being effectively absent).

1.1 In the last hundred years, we’ve seen that (some) large corporations are willing to cause harm on a massive scale if it is profitable to do so, either intentionally or through neglect. Note that these decisions are mostly “rational” if your only concern is money.

Copying some of the examples I gave in No Summer Harvest:

Exxon chose to suppress their own research on the dangers of climate change in the late 1970s and early 1980s.

Numerous companies ignored signs that leaded gasoline was dangerous and the introduction of the product resulted in half the US adult population being exposed to lead during childhood. Here is a paper that claims American adults born between 1966 to 1970 lost an average of 5.9 IQ points (McFarland et al., 2022, bottom of page 3)

IBM supported its German subsidiary company Dehomag throughout WWII. When the Nazis carried out the 1939 census, used to identify people with Jewish ancestry, they utilized the Dehomag D11, with “IBM” etched on the front. Later, Concentration camps would use Dehomag machines to manage data related to prisoners, resources and labor within the camps. The numbers tattooed onto prisoners’ bodies was used to track them via these machines.

1.2 Some corporations have also demonstrated they’re willing to cut corners and take risks at the expense of human lives.

NASA neglected the warnings of engineers and almost a decade of test data demonstrating that there was a catastrophic flaw with SRB O-rings, resulting in the Challenger disaster. (You may be interested in reading Richard Feynman’s observations given in the Presidential Report.)

Meta’s engagement algorithm is alleged to have driven the spread of anti-Rohingya content in Myanmar and contributed to genocide.

3787 people died and more than half a million were injured when a due to a gas leak at a pesticide plant in Bhopal, India. The corporation running the plant, Union Carbide India Limited, was majority owned by the US-based Union Carbide Corporation (UCC). Ultimately UCC would pay less than a dollar per person affected.

2. Without corporate governance, immoral decision making and risk taking behaviour could be expected to increase. If the net benefit of taking an action improves because there are fewer repercussions when things go wrong, they should reasonably be expected to increase in frequency.

3. In recent decades there has been a trend (at least in the US) towards greater stock market concentration. For large corporations to pose and existential risk, this trend would need to continue until individual decisions made by a small group of corporations can affect the entire world.

I am not able to describe the exact mechanism of how unchecked corporations would post an existential risk, similar to how the exact mechanism for an AI takeover is still speculation.

You would have a small group of organisations responsible for deciding the production activities of large swaths of the globe. Possible mechanism include:

Irreparable environmental damage.

A widespread public health crisis due to non-obvious negative externalities of production.

Premature widespread deployment of biotechnology with unintended harms.

I think if you’re already sold on the idea that “corporations are risking global extinction through the development of AI” it isn’t a giant leap to recognise that corporations could potentially threaten the world via other mechanisms.

Concretely, what does it mean to keep a corporation “in check” and do you think those mechanisms will not be available for AIs?

what does it mean to keep a corporation “in check”

I’m referring to effective corporate governance. Monitoring, anticipating and influencing decisions made by the corporation via a system of incentives and penalties, with the goal of ensuring actions taken by the corporation are not harmful to broader society.

do you think those mechanisms will not be available for AIs

Hopefully, but there are reasons to think that the governance of a corporation controlled (partially or wholly) by AGIs or controlling one or more AGIs directly may be very difficult. I will now suggest one reason this is the case, but it isn’t the only one.

Recently we’ve seen that national governments struggle with effectively taxing multinational corporations. Partially this is because the amount of money at stake is so great, multinational corporations are incentivized to invest large amounts of money into hiring teams of accountants to reduce their tax burden or pay money directly to politicians in the form of donations to manipulate the legal environment. It becomes harder to govern an entity as that entity invest more resources into finding flaws in your governance strategy.

Once you have the capability to harness general intelligence, you can invest a vast amount of intellectual “resources” into finding loopholes in governance strategies. So while many of the same mechanisms will be available for AI’s, there’s reason to think they might not be as effective.

The selected counterarguments seem consistently bad to me, like you haven’t really been talking to good critics. Here are some selected counterarguments I’ve noticed that I’ve found to be especially resilient, in some cases seeming to be stronger than the arguments in favor (but it’s hard for me to tell whether they’re stronger when I’m embedded in a community that’s devoted to promoting the arguments in favor).

This argument was a lot more compelling when natural language understanding seemed hard, but today, pre-AGI AI models have a pretty sophisticated understanding of human motivations, since they’re trained to understand the world and how to move in it through human writing, and then productized by working as assistants for humans. This is not very likely to change.

I don’t have a counterargument here but even if that’s all you could find, I don’t think it’s a good practice to give energy to bad criticism that no one would really stand by. We already have protein predictors. The idea that AI wont have applications in bioweapons is very very easy to dismiss. Autonomous weapons and logistics are another obvious application.

Contemporary AI is guileless, it doesn’t have access to its own weights and so cannot actively hide anything that’s going on in there. And interpretability research is going fairly well.

Self-improving AI will inevitably develop its own way of making its mechanisms legible to itself, which we’re likely to be able to use make its mechanisms legible to us, similar to the mechanisms that exist in biology: A system of modular, reusable parts has to be comprehensible in some frame, just to function, a system of parts where parts have roles that are incomprehensible to humans is not going to have the kind of interoperability that makes possible evolution or intentional design. (I received this argument some time ago from a lesswrong post written by someone with a background in biology that I’m having difficulty finding. It might not have been argued explicitly.)

Which is to say

It’s pretty likely that this is solvable (not an argument against the existence of risk, but something that should be mentioned here)

“black box” is kind of a mischaracterization.

There’s an argument of similar strength to the given argument, that nothing capable of really dangerously emergent capabilities can be a black box.

Influence in AI is concentrated to a few very similar companies in one state in one country, with many other choke-points in the supply chain. It’s very unlikely that competition in AI is going to be particularly intense or ongoing. Strategizing AI is equivalent to scaleable/mass-producible organizational competence, so is not vulnerable to the scaling limits that keep human organizations fragmented, so power concentration is likely to be an enduring theme.

AI is an information technology. All information technologies make coordination problems dramatically easier to solve. If I can have an AI reliably detect and trucks in satellite images, then I can easily and more reliably detect violations of nuclear treaties.

Relatedly, if I can build an artificial agent with an open design that proves to all parties that it is a neutral party that will only report treaty violations, then we can now have treaties that place these agents as observers inside all weapons research programs. Previously, neutral observation without the risk of leaking military secrets was arguably an unsolvable problem. We’re maybe a year away from that changing.

This trend towards transparency/coordination capacity as computers become more intelligent and more widely deployed is strong.

It’s unclear to what extent natural selection/competition generally matters in AI technologies. Competition within advanced ecologies/economies tends to lead towards symbiosis. Fruitful interaction in knowledge economies tends to require free cooperation. Breeding for competition so far hasn’t seemed very useful in AI training processes. (Unless you count adversarial training? But should you?)

I’m surprised that the least compelling argument here is Expert opinion.

Anyone want to explain to me why they dislike that one? It looks obviously good to me?

Depends on what exactly on what one means by “experts”, but at least historically expert opinion as defined to mean “experts in AI” seems to me to have performed pretty terribly. They mostly dismissed AGI happening, had timelines that seemed often transparently absurd, and their predictions were extremely framing dependent (the central result from the AI Impacts expert surveys is IMO that experts give timelines that differ by 20 years if you just slightly change the wording of how you are eliciting their probabilities).

Like, 5 years ago you could construct compelling arguments of near expert consensus against risks from AI. So clearly arguments today can’t be that much more robust, unless you have a specific story for why expert beliefs are now a lot smarter.

Sure, but still experts could not agree that AI is quite risky, and they do. This is important evidence in favour, especially to the extent they aren’t your ingroup.

I’m not saying people should consider it a top argument, but I’m surprised how it falls on the ranking.

.

Agree I could have been clearer here. I was taking the premise of the expert opinion section of the post as given, which is that expert opinion is an argument in-favor of AI existential risk.

AI isn’t dangerous because of what experts think, and the arguments that persuaded the experts themselves are not “experts think this”. It would have been a misleading argument for Eliezer in 2000 being among the first people to think about it in the modern way, or for people who weren’t already rats in maybe 2017 before GPT was in the news and when AI x-risk was very niche.

I also have objections to its usefulness as an argument; “experts think this” doesn’t give me any inside view of the problem by which I can come up with novel solutions that the experts haven’t thought of. I think this especially comes up if the solutions might be precise or extreme; if I was an alignment researcher, “experts think this” would tell me nothing about what math I should be writing, and if I was a politician, “experts think this” would be less likely to get me to come up with solutions that I think would work rather than solutions that are compromising between the experts coalition and my other constituents.

So, while it is evidence (experts aren’t anticorrelated with the truth), there’s better reasoning available that’s more entangled with the truth and gives more precise answers.

Expert opinion is an argument for people who are not themselves particularly informed about the topic. For everyone else, it basically turns into an authority fallacy.

Most such ‘experts’ have knowledge and metis in how to do engineering with machine learning, not in predicting the outcomes of future scientific insights that may or may not happen, especially when asked about questions like ‘is this research going to cause an event whose measured impacts will be larger in scope than the industrial revolution’. I don’t believe that there are relevant experts, nor that I should straightforwardly defer to the body of people with a related-but-distinct topic of expertise.

Often there are many epistemic corruptions within large institutions of people; they can easily be borked in substantive ways that sometimes allow me to believe that they’re mistaken and untrustworthy on some question. I am not saying this is true for all questions, but my sense is that most ML people are operating in far mode when thinking about the future of AGI and that a lot of the strings and floats they output when prompted are not very related to reality.

It would be interesting to see which arguments the public and policymakers find most and least concerning.

Note: This might be me who is not well-informed enough on this particular initiative. However, at this point, I’m still often confused and pessimistic about most communication efforts about AI Risk. This confusion is usually caused by the content covered and the style with which it is covered, and the style and content here does not seem to veer off a lot from what I typically identify as a failure mode.

I imagine that your focus demographic is not lesswrong or people who are already following you on twitter.

I feel confused.

Why test your message there? What is your focus demographic? Do you have a focus group? Do you plan to test your content in the wild? Have you interviewed focus groups that expressed interest and engagement with the content?

In other words, are you following well grounded advice on risk communication? If not, why?

I think that many people do not intend for their preferred policy to be implemented everywhere; so, at least they could be satisfied with a small region of universe. Though, AI-as-tool is quite likely to be created under control of those who want to have every power source and (an assumption) thus also want to steer most of the world; it’s unclear if AI-as-agent would have strong preferences about the parts of world it doesn’t see.

“Everyone who only cares about their slices of the world coordinates against those who want to seize control of the entire world” seems like it might be one of those stable equilibria.

Alas, the side that wants to win always beats the side that just wants to be left alone.

Which is why, since the beginning of the nuclear age, the running theme of international relations is “a single nation embarked on multiple highly destructive wars of conquest, and continued along those lines until no nations that could threaten it remained”.

I really liked the format and content of this post. This is very very central, and I would be happy to see much more discussion about the strengths and weaknesses of all those arguments.

This is a very interesting risk, but in my opinion an overinflated one. I feel that goals without motivations, desires or feelings are simply a means to an end. I don’t see why we wouldn’t be able to make programmed initiatives in our systems that are compatible with human values.

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?