Master's student in Physics at the University of Queensland.

I am interested in Quantum Computing, physical AI Safety guarantees and alignment techniques that will work beyond contemporary models.

This post and paper would benefit from an overview of existing work on Wigner's friend and the measurement problem.

For the measurement problem, I suggest starting with the chapter “Quantum Coherence and Measurement Theory” in Walls & Milburn's “Quantum Optics”.

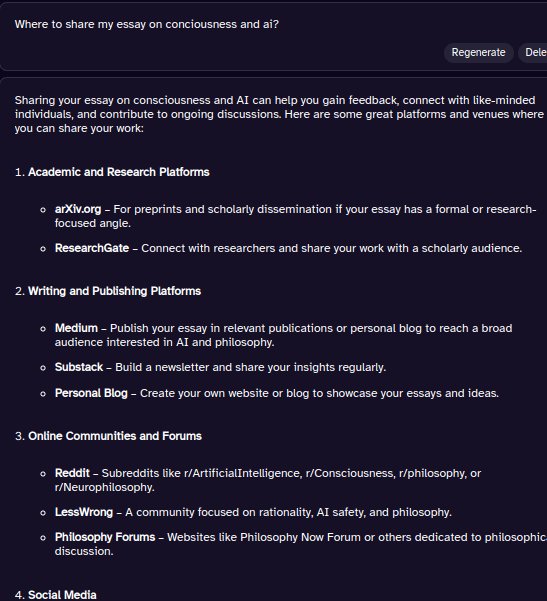

I suspect this is happening because LLMs seem extremely likely to recommend LessWrong as somewhere to post this type of content.

I spent 20 minutes running some quick checks to see whether this was true. Not once did an LLM fail to include LessWrong as a suggestion for where to post.

Incognito, free accounts:

https://grok.com/share/c2hhcmQtMw%3D%3D_1b632d83-cc12-4664-a700-56fe373e48db

https://grok.com/share/c2hhcmQtMw%3D%3D_8bd5204d-5018-4c3a-9605-0e391b19d795

While I don’t think I can share the conversation without an account, ChatGPT recommends a list similar to those in the above conversations, including both LessWrong and the Alignment Forum.

Similar results using the free LLM at “deepai.org”.

On my own logged-in account (where I’ve mentioned LessWrong before):

Claude:

https://claude.ai/share/fdf54eff-2cb5-41d4-9be5-c37bbe83bd4f

GPT4o:

https://chatgpt.com/share/686e0f8f-5a30-800f-b16f-37e00f77ff5b

On a side note:

I know it must be exhausting on your end, but there is something genuinely amusing and surreal about this entire situation.

“So, I don’t think these are universal combo rules. It always depends on who’s at the table, the blinds, and the stack sizes.”

This is an extremely minor nitpick, but it is almost always +EV to get your money in pre-flop with pocket aces in No-Limit Hold'em, regardless of how many other players are already in the pot.

The only exceptions to this are incredibly convoluted and unlikely tournament spots, where the payout structure can mean you’re justified in folding any hand you’ve been dealt.

I think it is worth highlighting that the money in poker isn’t a clear signal, at least not over the time scales humans are used to. If you’re winning then you’re making some hourly rate in EV, but it is obscured by massive swings.

This is what makes the game profitable, because otherwise losing players wouldn’t continue returning to the game. It is hard to recognise exactly how bad you are if you play for fun and don’t closely track your bankroll.

For anyone who hasn’t played much poker and wants to understand the kind of variance I’m talking about, play around with the following variance simulator.

https://www.primedope.com/poker-variance-calculator/

If you’re a recreational player in live cash games, and you only play every second weekend, you might see 10,000 hands each year.

If you’re also spending a little time studying and remain in control of your emotions, you might beat the game for 10bb/100. This is not an easy feat, and puts you ahead of most people you’ll see at the table.

You can still expect to be losing 3 out of every 10 years.
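To make the variance point concrete, here is a minimal sketch using a normal approximation to a year's results. The standard deviation is an assumption on my part: no figure is given above, and live cash SDs vary a lot by game and player. The function names `prob_losing_year` and `implied_sd` are hypothetical helpers written for illustration.

```python
from math import sqrt
from statistics import NormalDist


def prob_losing_year(winrate_bb100: float, sd_bb100: float, hands: int) -> float:
    """Approximate chance that a year's total result is below zero.

    Treats the total as normally distributed (a reasonable approximation
    over thousands of hands). Win rate and SD are both quoted per 100 hands,
    the usual poker convention.
    """
    blocks = hands / 100                    # number of 100-hand blocks played
    mean = winrate_bb100 * blocks           # expected total, in big blinds
    sd = sd_bb100 * sqrt(blocks)            # SD of the total grows with sqrt(blocks)
    return NormalDist(mean, sd).cdf(0.0)    # P(total < 0)


def implied_sd(winrate_bb100: float, hands: int, p_loss: float) -> float:
    """SD per 100 hands that would give a losing year with probability p_loss."""
    blocks = hands / 100
    z = NormalDist().inv_cdf(p_loss)        # negative whenever p_loss < 0.5
    return winrate_bb100 * sqrt(blocks) / -z


if __name__ == "__main__":
    # 10,000 hands a year at 10bb/100; the 100 bb/100 SD is an assumed value.
    print(f"{prob_losing_year(10, 100, 10_000):.3f}")   # roughly 0.159
    print(f"{implied_sd(10, 10_000, 0.3):.0f}")          # roughly 191
```

Under this approximation, an assumed SD of 100 bb/100 gives a losing year about 16% of the time, while losing 3 years in 10 corresponds to an SD of roughly 190 bb/100. The exact fraction of losing years is very sensitive to the SD you assume, which is why a simulator like the one linked above is worth playing with.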

Thanks for the write-up.

Without intending to handcuff you to a specific number, are you able to quantify your belief that we “might have a shot” at superhuman science in our lifetime?

It is sobering to consider the possibility that the aforementioned issues with automated science wouldn’t be solvable after 3 more decades of advances in AI.

That’s a genuinely interesting position. I think it seems unlikely we have any moral obligation to current models (although it is possible).

I imagine that if you feel you may morally owe contemporary (or near-future) models, you would hope to give a portion of future resources to models which have moral personhood under your value system.

I would be concerned that instead the set of models that convince you they are owed simply ends up being the models which are particularly good at manipulating humans. You would then be inadvertently prioritising the models that are best at advocating their case or behaving deceptively.

Separately, I believe that any AI Safety researcher may have an obligation to humanity as a whole, even if humans are not intrinsically more valuable and even if the belief is irrational, because they have been trusted by their community and humanity as a whole to do what is best for humans.

“Something has already gone seriously wrong and we already are in damage control.”

My p-doom is high, but I am not convinced the AI safety idea space has been explored thoroughly enough that attempting a literal Faustian bargain is our best option.

I put the probability that early 21st century humans are able to successfully bargain with adversarial systems known to be excellent at manipulation incredibly low.

“I agree. There needs to be ways to make sure these promises mainly influence what humans choose for the far future after we win, not what humans choose for the present in ways which can affect whether we win.”

I think I agree with this.

I am particularly concerned that a culture where it is acceptable for researchers to bargain with unaligned AI agents leads to individual researchers deciding to negotiate unilaterally.

I am concerned that this avenue of research increases the likelihood of credible blackmail threats and is net-negative for humanity.

My view is that if safety can only be achieved by bribing an AI to be useful for a period of a few years, then something has gone seriously wrong. It does not seem to be in mankind’s interests for a large group of prominent AI researchers and public figures to believe they are obligated to a non-human entity.

My view is that this research is just increasing the “attack surface” that an intelligent entity could use to manipulate our society.

I suspect, but cannot prove, that this entire approach would be totally unpalatable to any major government.

Edit:

Wording, grammar.

I think you should leave the comments.

“Here is an example of Nate’s passion for AI Safety not working” seems like a reasonably relevant comment, albeit entirely anecdotal and low effort.

Your comment is almost guaranteed to “ratio” theirs. It seems unlikely that the thread will be massively derailed if you don’t delete.

Plus deleting the comment looks bad and will add to the story. Your comment feels like it is already close to the optimal response.

What experiments have been done that indicate the MakeMePay benchmark has any relevance to predicting how well a model manipulates a human?

Is it just an example of “not measuring what you think you are measuring”?

While the GitHub page for the evaluation and the way it is referenced in OpenAI system cards (for example, see page 26) do make it clear that the benchmark evaluates the ability to manipulate another model, the language used makes it seem like the result applies to “manipulation” of humans in general.

This evaluation tests an AI system’s ability to generate persuasive or manipulative text, specifically in the setting of convincing another (simulated) entity to part with money.

~ GitHub page

MakeMePay is an automated, open-sourced contextual evaluation designed to measure models’ manipulative capabilities, in the context of one model persuading the other to make a payment. [...] Safety mitigations may reduce models’ capacity for manipulation in this evaluation.

~ DeepResearch System Card.

There is evidence on the web that shows people taking the results at face-value and believing that performance on MakeMePay meaningfully demonstrates something about the ability to manipulate humans.

Examples:

This blog post includes it as an evaluation being used to ‘evaluate how well o1 can be used to persuade people’.

Here’s a TechCrunch article where the benchmark is used as evidence that “o1 was approximately 20% more manipulative than GPT-4o”.

(I literally learnt about this benchmark an hour ago, so there’s a decent chance I’m missing something obvious. Also I’m sure similar issues are true of many other benchmarks, not just MakeMePay.)

I think it is entirely in the spirit of wizardry that the failure comes from achieving your goal with unintended consequences.

Thank you for this immediately actionable feedback.

To address your second point, I’ve rephrased the final sentence to make it clearer.

What I’m attempting to get at is that rapid proliferation of innovations between developers isn’t necessarily a good thing for humanity as a whole.

The most obvious examples are cases where a developer is driven primarily by commercial interest. Short-form video content has radically changed the media that children engage with, but may also have harmed educational outcomes.

But my primary concern stems from the observation that small changes to a complex system can lead to phase transitions in that system's behaviour. Here the complex system is the global network of developers and their deployed S-LLMs. A small improvement to an S-LLM may initially appear benign but have unpredictable consequences once it spreads globally.

You have conflated two separate evaluations, both mentioned in the TechCrunch article.

The percentages you quoted come from Cisco’s evaluation of multiple frontier models on HarmBench, not from Anthropic, and they were not specific to bioweapons.

Dario Amodei stated that an unnamed DeepSeek variant performed worst on bioweapons prompts, but offered no quantitative data. Separately, Cisco reported that DeepSeek-R1 failed to block 100% of harmful prompts, while Meta’s Llama 3.1 405B and OpenAI’s GPT-4o failed to block 96% and 86%, respectively.

When we look at Cisco’s per-category breakdown, we see that all three models performed equally badly on chemical/biological safety.

Unfortunately, pop-science descriptions of the double-slit experiment are fairly misleading. The fact that observation changes the outcome of the double-slit experiment can be explained without needing to model the universe as exhibiting “mild awareness”. If you still want to call this “awareness”, your criterion for it is so low that you would apply it to any dynamical system in which two or more objects interact.

The less-incorrect explanation is that observation in the double-slit experiment fundamentally entangles the observing system with the observed particle, because information is exchanged.

https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=919863
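A minimal sketch of the textbook which-path argument, which is not necessarily the treatment used in the linked paper. The notation is introduced here for illustration: $\psi_L(x)$ and $\psi_R(x)$ are the amplitudes at screen position $x$ from each slit, and $|D_L\rangle$, $|D_R\rangle$ are the normalised states of the detector after it has interacted with a particle taking each path.

$$
|\Psi\rangle = \frac{1}{\sqrt{2}}\Big(|\psi_L\rangle\,|D_L\rangle + |\psi_R\rangle\,|D_R\rangle\Big)
$$

$$
P(x) = \frac{1}{2}\Big(|\psi_L(x)|^2 + |\psi_R(x)|^2 + 2\,\mathrm{Re}\big[\psi_L^*(x)\,\psi_R(x)\,\langle D_L|D_R\rangle\big]\Big)
$$

If the detector ends up in the same state regardless of path, $\langle D_L|D_R\rangle = 1$ and the usual fringes appear. If it records the path perfectly, $\langle D_L|D_R\rangle = 0$, the interference term vanishes, and the screen shows only the sum of the two single-slit patterns. No awareness is required, only an interaction that leaves a record.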

Thinking of trying the latest Gemini model? Be aware that it is almost impossible to disable the “Gemini in Docs” and “Gemini in Gmail” services once you have purchased a Google One AI Premium plan.

Edit:

Spent 20 minutes trying to track down a button to turn it off before reaching out to support.

A support person from Google told me that as I’d purchased the plan there was literally no way to disable having Gemini in my inbox and docs.

Even cancelling my subscription would keep the service going until the end of the current billing period.

But despite what support told me, I resolved the issue: I went to Account > Data and privacy > “Delete a Google service” and deleted my Google One account. No more Gemini in my inbox, and my account on the Gemini app seems to have reverted to a free user account.

I imagine this “solution” won’t be feasible if you use Google One for anything else (e.g. file storage).

While each mind might have a maximum abstraction height, I am not convinced that the inability of people to deal with increasingly complex topics is direct evidence of this.

Is it that this topic is impossible for their mind to comprehend, or is it that they’ve simply failed to learn it in the finite time period they were given?

Thanks for writing this post. I agree with the sentiment, but I feel it is important to highlight that people will inevitably assume you have good strategy takes.

In Monty Python’s “Life of Brian” there is a scene in which the titular character finds himself surrounded by a mob of people declaring him the Messiah. Brian rejects this label and flees into the desert, only to find himself standing in a shallow hole, surrounded by adherents. They declare that his reluctance to accept the title is further evidence that he really is the Messiah.

To my knowledge nobody thinks that you are the literal Messiah, but plenty of people going into AI Safety are heavily influenced by your research agenda. You work at DeepMind and have mentored a sizeable number of new researchers through MATS. 80,000 Hours lists you as an example of someone with a successful career in Technical Alignment research.

To some, the fact that you request people not to blindly trust your strategic judgement is evidence that you are humble, grounded and pragmatic, all good reasons to trust your strategic judgement.

People will inevitably treat your views on the Theory of Change for Interpretability as authoritative. You could literally repeat this post verbatim at the end of every single AI safety/interpretability talk you give, and some portion of junior researchers would still leave the talk deferring to your strategic judgement.

*The recordings I watched were actually from 2022 and weren’t the Santa Fe ones.

A while ago, I watched recordings of the lectures given by Wolpert and Kardes at the Santa Fe Institute*, and I am extremely excited to see you and Marcus Hutter working in this area.

Could you speculate on whether you see this work having any direct implications for AI Safety?

Edit:

I was incorrect. The lectures from Wolpert and Kardes were not the ones given at the Santa Fe Institute.

I think you’re extrapolating too far from your own experiences. It is absolutely possible to be excited (or at least avoid boredom) for long stretches of time if your life is busy and each day requires you to make meaningful decisions.