Mind Projection Fallacy

Would a non-humanoid alien, with a different evolutionary history and evolutionary psychology, sexually desire a human female? It seems rather unlikely. To put it mildly.

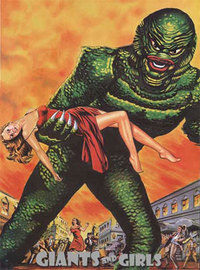

People don’t make mistakes like that by deliberately reasoning: “All possible minds are likely to be wired pretty much the same way, therefore a bug-eyed monster will find human females attractive.” Probably the artist did not even think to ask whether an alien perceives human females as attractive. Instead, a human female in a torn dress is sexy—inherently so, as an intrinsic property.

They who went astray did not think about the alien’s evolutionary history; they focused on the woman’s torn dress. If the dress were not torn, the woman would be less sexy; the alien monster doesn’t enter into it.

Apparently we instinctively represent Sexiness as a direct attribute of the Woman object, Woman.sexiness, like Woman.height or Woman.weight.

If your brain uses that data structure, or something metaphorically similar to it, then from the inside it feels like sexiness is an inherent property of the woman, not a property of the alien looking at the woman. Since the woman is attractive, the alien monster will be attracted to her—isn’t that logical?
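To make the data-structure metaphor concrete, here is a minimal Python sketch. It is only an illustration: the class, the two observer functions, and every number in it are hypothetical, invented for the example rather than taken from any model of real minds. The point is that `height_cm` can live on the `Woman` object, while sexiness only appears once an observer is supplied as a second argument.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Woman:
    # Properties that belong to the object itself, whoever is looking.
    height_cm: float
    dress_torn: bool


# An observer is modeled as a function from the thing observed to a reaction.
Observer = Callable[[Woman], float]


def human_reader(w: Woman) -> float:
    # Hypothetical human response: the torn dress raises the score.
    return 0.9 if w.dress_torn else 0.6


def bug_eyed_monster(w: Woman) -> float:
    # Hypothetical alien response: different evolutionary history,
    # so nothing about the woman or her dress triggers any reaction.
    return 0.0


def sexiness(observer: Observer, w: Woman) -> float:
    """Two-place function: the answer depends on who is looking."""
    return observer(w)


w = Woman(height_cm=170.0, dress_torn=True)
print(sexiness(human_reader, w))      # the human's reaction
print(sexiness(bug_eyed_monster, w))  # the alien's reaction
```

Note that `Woman` has no `sexiness` field at all. Asking for `w.sexiness` is an error in this sketch, which is roughly the mistake the magazine cover makes.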

E. T. Jaynes used the term Mind Projection Fallacy to denote the error of projecting your own mind’s properties into the external world. Jaynes, as a late grand master of the Bayesian Conspiracy, was most concerned with the mistreatment of probabilities as inherent properties of objects, rather than states of partial knowledge in some particular mind. More about this shortly.
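Jaynes's point about probability can be sketched the same way. The example below is an assumption-laden toy, not Jaynes's own formalism: a coin has already been flipped and is lying under a cup, and the hypothetical function `probability_of_heads` takes an observer's state of knowledge rather than the coin as its argument.

```python
from fractions import Fraction


def probability_of_heads(knowledge: dict) -> Fraction:
    """P(heads) for one observer, given only what that observer knows."""
    if "observed_face" in knowledge:
        # This observer has seen the coin, so no uncertainty remains.
        return Fraction(1) if knowledge["observed_face"] == "heads" else Fraction(0)
    # This observer knows only that a fair coin was flipped.
    return Fraction(1, 2)


coin_face = "heads"                 # the fixed fact under the cup
alice = {}                          # has not looked
bob = {"observed_face": coin_face}  # peeked

print(probability_of_heads(alice))
print(probability_of_heads(bob))
```

The coin is the same in both calls; only the knowledge passed in differs. Treating "the probability of heads" as an attribute of the coin would be the probabilistic analogue of `Woman.sexiness`.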

But the Mind Projection Fallacy generalizes as an error. It is in the argument over the real meaning of the word sound, and in the magazine cover of the monster carrying off a woman in the torn dress, and Kant’s declaration that space by its very nature is flat, and Hume’s definition of a priori ideas as those “discoverable by the mere operation of thought, without dependence on what is anywhere existent in the universe”...

(Incidentally, I once read an SF story about a human male who entered into a sexual relationship with a sentient alien plant of appropriately squishy fronds; discovered that it was an androecious (male) plant; agonized about this for a bit; and finally decided that it didn’t really matter at that point. And in Foglio and Pollotta’s Illegal Aliens, the humans land on a planet inhabited by sentient insects, and see a movie advertisement showing a human carrying off a bug in a delicate chiffon dress. Just thought I’d mention that.)

It’s not about what the bug-eyed monster considers sexy. It’s about what the human reader considers sexy.

Exactly. I never conceived of the alien taking the woman because she was attractive. Because she was weaker, perhaps, but not because he found her sexy. Damsel in distress. I think it is you, the author of this article, who suffered from the mind projection fallacy, not necessarily the creators of the comic or the rest of the audience. To me, from the point of view of the story, it was just a tragic accident that the woman being hauled off was one I found beautiful.

Precisely.

If a bunch of aliens were to start capturing a bunch of humans (and other terrestrial animals) for food, or slave labour, or whatever, it would be rational for males to go after the aliens with sexy human females first. The torn clothing would likely be widespread, and selection by torn clothing (as torn clothing improves the ability to select for sexiness) may also be rational when one wants to maximize the sexiness of the mates that one takes for rebuilding the human race. :-)

edit: One should also look for signs of struggle (such as torn clothing), as one would be interested in the relevant genes for the successful counter-attack by the next generation.

On the other hand, the woman who fought back against the alien might also be less submissive to the man that reclaims her. That may be a virtue or a flaw depending on what he values in women.

I ask you again—what is the other option? How can we deal with the world other than via “mind-projection?” I claim that you do it too, you just do it in a more sophisticated way. Do you have an alternative in mind?

As always, there’s the difference between “we’re all doomed to be biased, so I might as well carry on with whatever I was already doing” and “we’re all doomed to be somewhat biased, but less biased is better than more biased, so let’s try and mitigate them as we go”.

Someone really ought to name a website along those lines.

Disambiguating:

If you haven’t yet encountered the classic LW answer to “what is bias”, this sequence of posts is basically it—this post is a quick gesture in the right direction if you don’t want to read the others.

If you have read/osmosed the relevant posts, and you don’t think the LW account of bias is adequate for purposes like the one in my comment upthread, can you say more about why?

You could attempt to perceive the universe in all the other viewpoints of every other living being while you perceive it as you would naturally.

Or, to make it extremely simple: Keep in mind that everyone else does not think in the same way that you do.

Yeah, I can’t help but think that in many cases there is no implied inference that the alien especially desires the woman, but rather that the reader is especially affected by the fact that the abductee just happens to be an attractive woman. (King Kong would be an exception.) It’s the same reason that we rarely if ever see the alien carrying a cow instead; not because of its preferences, but because we wouldn’t be especially apprehensive about the cow’s fate.

If there’s a bias here, it’s one generated by the desire to tell interesting stories. Projection happens, but I don’t find this example terribly compelling.

(Five years on, but whatever)

I don’t think the inference is necessary, really; it’s fairly explicit that the scenario in which the comment is relevant is one in which the Alien Monster selected the Damsel for some lovin’. A competent writer, given only that cover art as the basis for their film, could get around that. The fact that the story is so frequently told incompetently is what makes it an unfortunately apt example for a much subtler and more insidious fallacy.

As a more controversial, if slightly less obvious, example, consider the Christian condemning an atheist to Hell. Their universe does not include a scenario in which there is any other result in store for someone who disbelieves, even without any objective basis for their claim. Besides Dante Alighieri, but results have to be independently verifiable, after all.

Your example does not seem to depict a mind projection fallacy. You might contend that the Christian doesn’t have a basis for his assertion, but he doesn’t seem to be confusing the map with the territory.

The Christian in your example isn’t projecting ter mind into the atheist’s mind. Te is, OTOH, if te says, “You must hate God,” or “Why are you so pessimistic?”

I take your point about the mind projection fallacy, but actually in the particulars I may have to slightly disagree.

Consider these themes:

- Furries
- Anime tentacle rape
- Bestiality/zoophilia
- etc. etc. etc...

In other words, we already know that there can be humans that find stuff either slightly human but modified, or increasingly alien to also, ahem. “tweak” them.

I bet somewhere either the author of that story (with the plant) that you mentioned, or some reader somewhere found that story particularly enjoyable, or at least the notion of it.

So, it does seem reasonable to suppose that some alien species would contain some members that have sufficiently different or broad or… creative… sexuality that they’d even find the scandalous notion of being attracted to a human, well, attractive.

Clearly then the Bug Eyed Monsters coming after the ladies in torn dresses are simply the deviants of that species. They’re self selected! (okay, was being somewhat silly throughout this entire comment, such happens when I am on lack of sleep...)

Benquo: Wait… what about Chupacabra? Clearly simply a very confused alien that’s simply trying to find a mate! chuckles

Actually wanting to bang Anything That Moves is common enough: alien perverts are just over-represented in fiction, because it’s more terrifying for us humans.

I for one thought the plant story was a definite turn-on...

If I assume that others have minds like mine I surely would also assume they “project” the same properties, so calling them “mental projection” is not likely to make this error go away. Conversely if I establish that a certain property is a real, non-projected property of an object, that doesn’t entitle me to assume that it will be perceived by an alien with a different evolutionary history. After all, humans only perceive a tiny percentage of the actual properties of objects. So I think that the “mind projection error” and the “all minds are alike” error are quite different.

Your error is to falsely conclude that the fallacy is essential to human thinking, that the properties projected are always the same across the board, and that they cannot differ. And what the heck does “humans only perceive a tiny percentage of the actual properties of objects” even mean? We certainly don’t know all there is about a thing, but what properties are you even talking about and how?

One thing that comes to mind to me is color. We see red green and blue. Aliens might see yellow and ultraviolet instead. We might decide to camouflage ourselves so that they don’t see us, and fail completely because we’re using the wrong colors to blend in.

Technically, there’s no point where this stops and mind projection begins. How sexy you’d find a woman is much more complex than how red you’d find her dress, and it’s similarly less likely for an alien to notice, but it’s just a matter of degree.

Good post. I have a feeling I’ve read this very same example before from you Eliezer. I can’t remember where.

PK: I think Eliezer made the same point in “Cognitive Biases Potentially Affecting Judgement of Global Risks”

At least, I think that’s the one.

The aliens and monsters are stand-ins for the traditional fear: men from the other tribe. Since cross-tribal homicide and rape/abduction are quite common, it is no surprise that our Sci-Fi creations do the same thing.

Psy-Kosh,

We share an evolutionary history with different animals on earth. There could be attractive properties in animals that give us a representation of sexiness similar to the one they give their own species. It is the same reason you will find different creatures finding other creatures cute, e.g. humans and dogs, or gorillas and humans.

Additionally, there have been cases where dolphins attempted to sexually engage with humans. An intelligent alien will not have a shared ancestry and therefore will not have sexiness representations from “sexual properties” exhibited in earth’s creatures.

That’s a rather weak explanation because you’re implying the presence of causes without elucidating them, or you’re creating a cause out of evolution, which is not a cause.

From the standpoint of being, we apprehend the accidental properties of a thing first, but from the point of view of the intellect, we apprehend “gorillaness” first, so in the context of gorillaness, sexiness is impossible in relation to human. One cannot find a gorilla sexy unless one is confused in some way. This is why zoophilia is a mental disorder.

You can find something about a gorilla beautiful, certainly, but this is not the same as sexy.

There is of course, the Red Dwarf episode “Camille” with the alien who appears as the projection of whatever you find sexiest.

I’m sure the historians of the recent “Imagining Outer Space, 1900-2000” conference would have a good time with analyzing the various pop cultural strands that came together to produce rote images such as the above cover.

PK: This example is also part of Eliezer’s “Hard AI Future Salon” lecture (starting at 1:35:33).

“Hard AI Future Salon” lecture, good talk. Most of the audience’s questions however were very poor.

One more comment about the mind projection fallacy. Eliezer, you also have to keep in mind that the goal of a sci-fi writer is to make a compelling story which he can sell. Realism is only important insofar as it helps him achieve this goal. Agreed on the point that it’s a fallacy, but don’t expect it to change unless the audience demands/expects realism. http://tvtropes.org/ is full of tropes that illustrate stuff like that.

Lior: good point.

The subject of human/alien sex was disposed of rather thoroughly by Larry Niven in “Man of Steel, Woman of Kleenex”.

I think it would be justified in that case. If the alien is of a species that looks exactly like humans, and was raised in a human culture, he would likely find the same females attractive. And that’s assuming Lois Lane is attractive by human standards. Is that ever specified?

That was more about human/superhero sex. It would be just as dangerous for a human to sleep with the Flash.

Speaking of interspecies vs intraspecies reproductive fitness evaluation—a human may judge a member of its own species (from a distant end of the genetic spectrum, i.e., whitest of the white vs. blackest of the black) less desirable than some hypothetical alien species with appropriately bulbous forms and necessary orifices or extrusions.

Are you sexually attracted to manikins?

What if the manikins were alive and intelligent?

It’s certainly not just sci-fi writers who go for this sort of reasoning. I knew a theology professor who would make mistakes like this on a consistent basis. For example, he would raise questions about whether there was an evolutionary pressure for babies to evolve to be cute, without even considering the possibility that we might evolve to find babies cute. It struck me as being much worse than the sort of unthinking assumption that goes into Attack of the Fifty Foot Whatever sci fi stories, since his preconceptions were actually hindering him in fairly serious attempts to understand the real world.

In case you hadn’t noticed, this is a general problem. And you’re right, the observer is not some guy speaking from some immutable “neutral point of view” but it’s an error made very commonly by certain “scientists” every day. His error was not one of theology, but one commonly made by “evolutionary psychologists”. But let’s consider this case: if evolution has free dibs on everything, and it lacks any teleological component whatsoever, then what are we to say about your status as a rational being?

First, welcome to Lesswrong! If you’d like to introduce yourself, the 2010 thread is still active.

You’ve asked some interesting questions on this thread. Unfortunately, Desertopa is the only one of your potential interlocutors I know to be active on this site. Even Desertopa may be reluctant to respond because your tone (“In case you hadn’t noticed”) could be construed as combative, and we try to avoid that because emotionally identifying with one side of an argument leads to biases.

Regarding your question in the last sentence: there are a few posts addressing the rationality of intelligences generated by a blind idiot god on LW: The Lens That Sees its Flaws is one of my favorites.

There may also be evolutionary pressure for babies to evolve to be cute.

There’s certainly evolutionary pressure for males to be sexy. Some species have pretty bizarre looking males.

Surely the pressure would be for adults to find babies cute, whatever they look like. In fact, isn’t that what “cute” means, evolutionarily speaking, just as “attractive” means “what good breeding material looks like”?

Of course, as in the sexual selection case, you can then get feedback, with babies evolving to look even more like they look.

URL is dead for me: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.40.8618 says “This url does not match any document in our repository.”

I don’t know what document that link originally pointed to, but this document contains one of Jaynes’s earliest (if not the earliest) descriptions of the idea.

Oh, this has been around since Genesis 6. At least.

But… then… why do zombies eat brains? As anthropomorphism, that’s pretty disturbing.

[Incidentally, I just saw King Kong 1976 and was utterly amazed at how h-nt—it was. I had no idea.]

I know this is incidental, but I am dying of curiosity about the story of the Plant-Guy/Human-Guy… would you mind giving the reference?

Is “the world is full of people” an example of the mind-projection fallacy? (Compare to “we can both recognize the pattern ‘person’ at a high-level in our multi-leveled models of levelless reality”)

Why would it be? It’s an ontological statement about the existence of many instances of what you have identified as persons. It doesn’t attribute anything to them. And following your pseudotechnical jargon, why would you presume the pal you’re speaking to (“we both recognize...”) is nothing but another instance of this “pattern”? You’re biased. You’re exempting someone because you want them to be a person.

I just thought the Less Wrong community should know that a few minutes ago, I was having trouble remembering the name of this fallacy, but I vaguely remembered the content of this post. So, I decided to use the search engine on this site to find it. I typed in “sexy,” and this is the first thing that came up.

I can only imagine how many people have scrolled up to the search bar to test this since this comment was written.

Just noticed this comment while looking in the archives… You might want to ponder information bubbles caused by website personalization because I suspect you are (or were when you wrote this comment?) inside of one. Something I’ve found generally useful in the past that seems like it would help is trying to imagine how you would discover “objectively important things to know about that you wouldn’t have normally run across”.

Oh, this wasn’t through a general Google search, just the site’s search engine. I would be shocked if a search of “sexy” on the internet returned a Less Wrong result anywhere near the top.

Still, that would be a happy day.

Perhaps we should rename it the “sexy search string fallacy” in your honor?

Or, you know… not.

I recall reading a book on Asperger’s syndrome in my school library which had an activity in it that is supposed to determine whether or not a child has ‘Theory of Mind’. This is what I immediately thought about while reading this article.

Here is a version of the test, check it out. http://www.asperger-advice.com/sally-and-anne.html

Okay, so your saying that Woman.height makes sense, but Woman.sexiness doesn’t really. I’m not sure if you can even say that Woman.height makes sense. The reality is that the knowledge of any attribute is predicated on the perceptual apparatus that an organism has. Perhaps the alien is blind?

You may retort that if the alien is blind, then he most likely has some sort of apparatus to measure size in the world. Perhaps he can send out tenticles to feel the size of objects.

But then, at the same time, why couldn’t he send out some sort of tentacles to measure the attractiveness of a lady? In humans, attractiveness isn’t a real thing either. Really what we’re doing is looking at features such as hip ratio, symmetry, eye color and various attributes like that, and that’s what creates the sense of attractiveness. These are all things that the alien could measure and combine also.

Yes, this would define “looks attractive to a certain subset of humans” (i.e. those who find this set of features attractive). However, there is no such thing as “looks attractive to all humans and aliens”, which is what Woman.sexiness is supposed to represent.
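One way to picture the distinction in this exchange is a minimal Python sketch. Everything in it is hypothetical and chosen only for illustration (the `Target` and `Observer` classes, the feature names, the weights): measurable features live on the target, while the weighting that turns them into a score belongs to whoever is looking.

```python
from dataclasses import dataclass, field


@dataclass
class Target:
    # Measurable features; any observer with suitable instruments could record these.
    height_cm: float
    symmetry: float  # hypothetical score between 0 and 1


@dataclass
class Observer:
    name: str
    # Hypothetical per-observer weights; nothing here is stored on the target.
    weights: dict = field(default_factory=dict)


def attractiveness(observer: Observer, target: Target) -> float:
    """Two-place function: the score depends on the observer as well as the target."""
    features = {"height_cm": target.height_cm, "symmetry": target.symmetry}
    # Features the observer has no weight for contribute nothing to the score.
    return sum(observer.weights.get(k, 0.0) * v for k, v in features.items())


target = Target(height_cm=170.0, symmetry=0.9)
human = Observer("human", {"symmetry": 1.0})
alien = Observer("alien", {})  # can measure the same features, weights none of them

print(attractiveness(human, target))
print(attractiveness(alien, target))
```

Both observers read the same `Target` fields, so a height-like attribute is shared, but there is no single `target.attractiveness` that both calls could return.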

This comment was sitting at −2 when I saw it, which makes me think that maybe I don’t understand Eliezer’s point. I thought the OP was making the point that when we talk about something being “attractive” or “moral” or maybe even “sapient”, we project facts about our minds into the real world. “Attractive” really means “attractive to humans”, and if we forget this fact, we can end up inadvertently drawing wrong conclusions about the world. If that’s wrong, then what was this post actually about?

The part you highlight about shminux’s comment is correct, but this part:

is wrong; attractiveness is psychological reactions to things, not the things themselves. Theoretically you could alter the things and still produce the attractiveness response; not to mention the empirical observation that for any given thing, you can find humans attracted to it. Since that part of the comment is wrong but the rest of it is correct, I can’t vote on it; the forces cancel out. But anyway I find that to be a better explanation for its prior downvotation than a cadre of anti-shminux voters.

Mind you I downvoted JohnEPaton’s comment because he got all of this wrong.

It may have just been serial downvoting from people who dislike shminux.

Heck, I dislike him myself.

Papermachine isn’t much to write home about either.

It is a great example, but one cannot beat the metaphor to death. There are also artistic conventions which develop and self-reinforce (re Manga / Anime) as well as marketing forces—re Why do all female superheroes have giant breasts?

The strength of the metaphor is not that a rational analysis of alien / monster motivations leads the average fan-boy to conclude aliens intend to copulate with abducted females, but the unconscious projection of the fan-boy onto the alien / monster, and of course who would you grab then?

I downvoted, but the second paragraph is okay.

...what was the name of that story?

This is an inappropriate accusation to make about Kant in particular: he was explicit that space (in all its flatness) was a necessary condition of experience, not a feature of the world. He could not be more adamant that his claims about space were claims about the mind, and the world as it appears to the mind.

He was wrong, of course: non-Euclidean geometry thrives. But this isn’t the mind projection fallacy. This is the exact opposite of the mind projection fallacy.

I know these sequence posts are old, but I’m finding a non-trivial number of these incorrect off-hand remarks, especially about philosophers. These errors are never really a problem for the main argument of the sequence, but there’s no good in leaving a bunch of epistemically careless claims in a series of essays about how not to be epistemically careless. A quick SEP lookup, or a quick deletion, suffices to fix stuff like this.

Oddly enough, the aliens never go after men in torn shirts.

One exception: Troma’s Monster in the Closet, where the monster does indeed fall for a guy. The movie is a real gem.

Considering that the aliens generally don’t try to ravish the captive woman, I’d suggest an alternative hypothesis: the alien carries off the woman for non-sexual reasons, and the torn clothes and the way the woman is drawn are only there to make her look attractive to the audience (and perhaps to give the human rescuers more of a motivation to rescue her), not to indicate the motivation of the alien.

And more women than men are carried off because 1) that’s what the writer chooses to write (or what the artist chooses to draw, if the story has scenes where aliens carry off both sexes), and 2) women in such stories tend to be bad at defending themselves, excessively curious, and other traits which make capture more likely.

The Creature from the Black Lagoon’s interest was sexual, of course, but the creature is related to humans and is not a typical case of an alien carrying women off.

Optical illusions might be a good example of this. Ex. http://www.buzzfeed.com/catesish/help-am-i-going-insane-its-definitely-blue#.hfZoPkgjK

People are freaking out over what color the dress “really is”. They’re projecting the property of “true color” onto the real world, when in reality “true color” is only in their mind.

The way I think about it:

Oh, dear Lord, this made it even to LW… X-/

Human language isn’t great for talking about colors. The word “blue”, for example, encompasses very different hues.

Let’s think about this in terms of the HSV (hue, saturation, value) model.

In this model, whitish colors have low saturation, high value (value is basically brightness), and the hue doesn’t matter. Similarly, blackish colors have high saturation, low value, and the hue doesn’t matter again.

The dress in this image has the blue hue, but high value (=brightness). That makes it light blue or bluish white—take your pick. The other color has the orange hue, but low value. That makes it dark brown (gold) or brownish black, again, take your pick.
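For anyone who wants to check the HSV reading, here is a minimal sketch using Python's standard `colorsys` module. The two RGB triples are hypothetical stand-ins for a pale blue and a dark brown; they were not sampled from the actual photo.

```python
import colorsys


def describe(rgb):
    """Convert 8-bit RGB to HSV: hue in degrees, saturation and value in 0..1."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return round(h * 360), round(s, 2), round(v, 2)


# Hypothetical sample values, not measured from the original picture.
pale_blue = (150, 160, 200)
dark_brown = (110, 90, 50)

print("pale blue  (hue, sat, value):", describe(pale_blue))
print("dark brown (hue, sat, value):", describe(dark_brown))
```

With these assumed inputs the first sample comes out with a blue hue and high value, and the second with an orange hue and low value, which is the pattern described above.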

Overlay on top of this the differences in uncalibrated monitors, personal idiosyncrasies, and some optical illusion effects, and you have a ridiculously successful buzzfeed post :-D

Yeah, that’s a pale blue, but I find it hard to alieve (and, had I not read Generalizing From One Example, I’d also find it hard to believe) that a sizeable fraction of the population find it pale enough to call it “white” non-trollingly. This also applies if I look at it on a dark background such as the left panel of XKCD with my screen’s luminosity set to the maximum. (The only condition in which I would call such a thing “white” is if I thought it only looked like that because of the ambient light, but under so blue a light the dark parts of the dress would look quite black with hardly any trace of yellow whatsoever.)

I see the same shade of pale blue while walking to work over white snow.

Are they, actually? Or are they freaking out over what other people say it is?

If I saw only the text of the supposed controversy I would instantly diagnose it as a hoax for clickbait. There are colour constancy illusions, but I will not believe that this is one of them until I see two people actually taking opposite sides. However, that is socially impossible to achieve, because there will always be enough people to perversely take the opposite side for the lulz. The wording of the original Tumblr post, and the buzzfeed and Wired articles, deliberately encourage this.

What colours do you see in the dress in this picture? Not in any other picture, or the real dress, but this, the original picture that started it all. Feel free to experiment with different monitors and ambient lighting (but not editing the picture) and to give details in comments.

[pollid:831]

My introduction to this illusion was walking into the office yesterday morning, and having a co-worker show me the picture on their monitor while asking what colour the dress was. The office split fairly evenly between white & gold versus blue & black, and nobody seemed to be kidding/trolling.

The weird specificity of a couple of people’s experiences also suggested they were being serious. One person who originally saw white & gold, after skimming blog posts discussing/explaining the illusion, eventually said they could kiiiinda see how it could be seen as blue & black (but that could’ve been a social conformity effect). Another person saw the dress as white & gold on their computer screen, but saw it as visibly blue & black when viewing their screen’s image through someone’s smartphone, which presented it with lower brightness.

So I deem this legit. I think this illusion messes with people so successfully by turning the relatively well-known brightness/saturation illusion (as in the checker shadow illusion) into a colour illusion, by exploiting the facts that (1) very light blue looks white, (2) brown looks like yellow/gold or black depending on intensity, and (3) perceived intensity depends on the surroundings as well as the region of focus.

I see sky blue and bronze-ish brown, which I’d interpret as navy blue and black decolored by aggressive washing. I still voted “blue and black” as I’d still call clothes that color black in real life unless I’m being pedantic, and there’s no way I’d call them gold.

Here and here are two fragments of the picture I linked, expanded but otherwise unaltered, and saved in an uncompressed format. Compression artefacts from the original are clearly visible, but please ignore these and attend only to the overall colours.

Again, this question asks only about your experience of the colours, not any guesses you might make about what you would see if you were there, nor what colours you can convince yourself you might be able to see.

[pollid:832]

I wouldn’t go as far as to call it a striking difference, but I perceive the first image’s colours as lighter and more washed out than the second’s.

If I didn’t know where these came from and I was doing an XKCD color survey-like thing, I’d call the colors in the first bronze and mauve and those in the latter black and indigo. (I’m not a native English speaker.) I’d call the difference between the two blacks “striking” but not the difference between the two blues, so I picked “Just show me the results”.

I think it has more to do with the fact that optical illusions are generally human universals, i.e. all humans see the same thing. (Certain illusions may be susceptible to cultural influence, but I don’t think that really applies here—Buzzfeed commenters are all generally from similar demographics.) Given this, it’s really weird that some people are seeing one thing and other people are seeing something else.

Case in point: I see it as white-and-gold, and I’ve looked over it several times already, with no change. I am actually having difficulty imagining how anyone could perceive it as blue-and-black, despite being fairly certain that the people claiming to see blue-and-black are not lying. What’s strange isn’t the illusion itself, but the extremely polarizing effect it has on people.

I definitely agree—it’s a particularly curious illusion. Some researcher seems to think so as well.

But the point remains that it can be explained by understanding the illusion, and that projecting “true color” onto the real world is a mistake.

Yes, I agree that projecting “true color” onto the real world is a mistake. I’m not sure those commenters are actually doing that, though. I think your interpretation of the Buzzfeed argument is something like this:

Whereas my interpretation, I feel, is slightly more charitable:

In other words, I feel that the discussion isn’t quite as full of fallacious reasoning as you seem to be making it out to be (in that it could be interpreted in a different way that makes it about something other than the mind projection fallacy). Of course, I could be wrong. What do you think?

I actually trust your interpretation over mine. I haven’t read through it too carefully and sense that my frustration has interfered with my interpretation a bit.

In fairness, they’re mostly kidding and exaggerating.

My interpretation is that a sizable majority are being very serious. People from my coding bootcamp have been discussing it on Slack for a while now… and it’s embarrassing.

I’m sure that deep down most people know that it’s some sort of optical illusion, but there’s a difference between “If I really really really examined my beliefs, this is what I’d find” and “this is what I believe after taking 5 seconds to think about it”.

I really get the sense that the overwhelming majority doesn’t get the idea that “true color” doesn’t exist.

Count me among them.

I define “true color” as the frequency mix of light together with its brightness. It’s perfectly well measurable—for example, I happen to own a device which will tell me what color it’s looking at. People in photography and design care about “true color” very much—they carefully calibrate their devices (monitors, printers, etc.) to show proper colors.

Go look at e.g. CIE color spaces.
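As a rough illustration of what a device-independent color description looks like, the sketch below converts an 8-bit sRGB triple to CIE 1931 XYZ using the standard sRGB (D65) linearization and matrix. The function name is hypothetical, and a real calibrated workflow would rely on measured device profiles rather than assuming the pixel is sRGB.

```python
def srgb_to_xyz(rgb):
    """Convert 8-bit sRGB to CIE 1931 XYZ (D65 white point, Y of white is about 1)."""

    def linearize(c):
        # Undo the sRGB transfer curve to get linear-light values in 0..1.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in rgb)
    # Standard sRGB-to-XYZ matrix (coefficients rounded to four decimals).
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    return x, y, z


print(srgb_to_xyz((255, 255, 255)))
print(srgb_to_xyz((150, 160, 200)))  # hypothetical pale-blue pixel
```

The result is a triple of numbers that depends only on the input pixel, which is the sense of "measurable" being argued for here.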

I mean “true color” in this sense:

“In this sense” you defined dress.trueColor as undefined, so I still don’t see what you are talking about.

By the way, normally color is defined at perception point so it already includes both the lighting and the reflective characteristics of the object. It’s common to observe that something is color X under, say, sunlight, and the same thing is color Y under, say, fluorescent lights. Girls understand that well :-)

The crucial point is, I think, the “observer” argument. Even if you compress all the other parameters (lighting, reflective characteristics, etc.), what you’re left with is still a two-place function, such that if you pass in a different observer, a different result is returned. You’re free to define “true color” as whatever you like, but that’s not going to change the fact that some people might look at what you define as “gold” and see black instead (as in the optical illusion we’re discussing in this thread), and then they’re going to argue with you. And then you might argue back, saying, “True color is the frequency mix of light together with its brightness!”, and then they’ll say, “Well, that’s not what I’m seeing, so explain that using your ‘true color’,” and so on and so forth, when really the only source of the argument is a failure to recognize that color perception differs depending on the person.

In short, you and adamzerner aren’t actually disagreeing about anything that’s happening here. Rather, you and he are defining the phrase “true color” differently (you as “the frequency mix of light together with its brightness” and adamzerner as “the visual sensation that, when perceived, maps in the brain to a certain word trigger associated with a color concept”). That’s all this whole argument is: an argument about the definition of a word, and those arguments are the most useless of all.

(Hence why Eliezer wrote this post. Seriously, this post is in my opinion one of the most useful posts ever written on LW; I’ve linked people to it more times than I can count. Why are people still making these sorts of elementary errors?)
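The two definitions in play can be put side by side in a toy Python sketch. The names `measured_hsv` and `perceived_name`, the sample pixel, and the per-observer `white_cutoff` are all assumptions made for illustration; real color naming is far more complicated than a single saturation threshold.

```python
import colorsys


def measured_hsv(rgb):
    """One-place: the result depends only on the pixel."""
    r, g, b = (c / 255.0 for c in rgb)
    return colorsys.rgb_to_hsv(r, g, b)


def perceived_name(rgb, white_cutoff):
    """Two-place: the label also depends on a (hypothetical) observer parameter."""
    _, saturation, value = measured_hsv(rgb)
    # An observer calls a bright, weakly saturated pixel "white";
    # how weak counts as weak varies from one observer to the next.
    if value > 0.7 and saturation < white_cutoff:
        return "white"
    return "blue"


pixel = (150, 160, 200)  # hypothetical pale-blue sample, not taken from the photo
observers = {"observer_a": 0.30, "observer_b": 0.20}

print(measured_hsv(pixel))  # the same numbers for everyone
for name, cutoff in observers.items():
    print(name, "sees", perceived_name(pixel, cutoff))
```

The measurement is identical for both observers while the labels differ, so a claim about the first function and a claim about the second are not in conflict.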

I think you’re wrong.

First, as an aside, my disagreement with adamzerner isn’t about the definition of “true color”; he thinks such a thing just doesn’t exist at all.

Second, color is not a two-argument function, not any more than length or weight or, say, acidity. The output of the two-argument function is called perception of color.

Consider wine. One of its characteristics is acidity. Different people may try the same wine and disagree about its tartness: some would say the tannins mask it, some would disagree, some would be abnormally sensitive to acidity, some would have the wine with a meal which would affect the taste, etc. etc. And yet, acidity is not a two-argument function: I can get out the pH meter and measure, objectively, the concentration of hydrogen ions in the liquid.

While consumers might debate the acidity of a particular wine, the professionals—winemakers—do not rely on perception when they quality-control their batches of wine. They use pH meters and ignore the observer variation.

It’s the same thing with color. People can and do argue about perception of color, but if you want to see what the underlying reality is, you pull out your photospectrometer (or a decent proxy like any digital camera) and measure.

Professionals—people in photography, design, fashion—cannot afford to depend on observer perception so they profile and calibrate their entire workflow. Color management is a big and important thing, and it’s a science—it does not depend on people squinting at screens and declaring something to be a particular color.

Think about a photographer shooting a catalog for a fashion brand. In this application color accuracy is critical because if he screws up the color, the return rates for the item will skyrocket with the customers saying “it’s the wrong color, it looks different in real life than in the catalog”. And if that photographer tries to say that true color doesn’t exist and he just sees it that way, well, his professional career is unlikely to be long.

See—this, right here? This is what I mean by “argument about a definition of a word”. I don’t care what you think “color” is; I care if we’re talking about the same thing. If you insist on defining “color” as something else, we are no longer discussing the same topic, and so our disagreement is void. You are talking about one concept (call that concept “roloc”) and adamzerner is talking about another concept (call that concept “pbybe”).

So, does “roloc” exist objectively? Yes, and adamzerner doesn’t disagree with that.

Does “pbybe” exist objectively? No, because it’s a two-place function like I was talking about, and you don’t disagree with that.

So what’s our disagreement here, exactly? Are we arguing about how to define the word color? From your comment, specifically the portion I quoted above, I get the sense that to you, that is what we are arguing about. “Color is not x; it’s y.” Well, I say screw that. I’m not here to argue about definitions of words. You call your thing “color” if you want, and I’ll call mine something different, like “Bob”.

“Color” exists; “Bob” doesn’t. There, disagreement settled. Okay? Okay.

It’s cool how you talk to yourself, but why is this comment tagged as an answer to me?

Because your previous comment showed that you were still engaging in an argument about the definition of a word, despite your claims to the contrary, and I was under the impression that you would appreciate it if I pointed that out. Clearly I was mistaken, seeing as your reply contains 100% snark and 0% content. I regret to say that this will be my last reply to you on this thread, seeing as you are clearly not interested in polite or reasoned discussion. Insulting snark does not a good response make.

“Polite or reasoned discussion”, right… X-D

Bravo!