The Octopus, the Dolphin and Us: a Great Filter tale

Is intelligence hard to evolve? Well, we’re intelligent, so it must be easy… except that only an intelligent species would be able to ask that question, so we run straight into the problem of anthropics. Any being that asked that question would have to be intelligent, so this can’t tell us anything about its difficulty (a similar mistake would be to ask “is most of the universe hospitable to life?”, and then to look around and note that everything seems pretty hospitable at first glance...).

Instead, one could point at the great apes, note their high intelligence, see that intelligence arises separately, and conclude that it can’t be too hard to evolve.

One could do that… but one would be wrong. The key test is not whether intelligence can arise separately, but whether it can arise independently. Chimpanzees, bonobos, gorillas and the like are all “on our line”: they are close to common ancestors of ours, which we would expect to be intelligent because we are intelligent. Intelligent species tend to have intelligent relatives. So they don’t provide any extra information about the ease or difficulty of evolving intelligence.

To get independent intelligence, we need to go far from our line. Enter the smart and cute icon on many student posters: the dolphin.

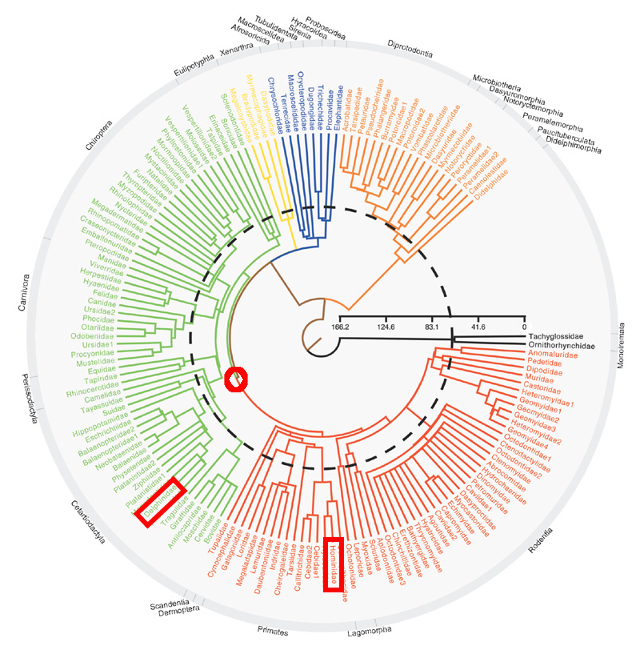

Dolphins are certainly intelligent. And they are certainly far from our line. It’s hard to find a definite answer, but it seems that the last common ancestor of humans and dolphins was a small mammal living during the reign of the dinosaurs. In the figure, humans and dolphins are indicated by red rectangles, and their last common ancestor by a red circle.

This red circle is well before the K-T boundary (indicated by the dotted line), and hence represents a mammal living in the literal shadow of the dinosaurs.

We can apply a convergent evolution argument to this common ancestor. Two lineages descended from it (ours and the dolphins’) reached high intelligence; so, assuming that their subsequent evolution was somewhat independent, getting from that common ancestor to dolphin-level intelligence seems to be something that can happen relatively easily.

Can we go further? Well, what if we applied the argument twice? Let’s bring in the most alien looking of the high-intelligence animals: the octopus.

Let’s make the further assumption that our common ancestor with dolphins was dumber than the modern octopus. This doesn’t seem a stretch, given how intelligent the modern octopus can be, how minor an ecological role the common dolphin-human ancestor must have played, and how unintelligent many of the descendants of that common ancestor are.

If we accept that assumption, we can then start looking for the common ancestor of humans and octopuses. Our two species are really far apart:

We therefore have to go back to around the last common ancestor of the Bilateria (creatures with bilateral symmetry, i.e. they have a front and a back end, as well as an upside and downside, and therefore a left and a right). This is the (speculative) urbilaterian. There are no known examples or fossils of it, which suggests that it was likely less than 1 cm in length. To quote Wikipedia: “The urbilaterian is often considered to have possessed a gut and internal organs, a segmented body and a centralised nervous system, as well as a biphasic life cycle (i.e. consisting of larvae and adults) and some features of embryonic development. However, this need not necessarily be the case.” Very confusing, and with no information about intelligence level. However, since the organism was so small and since it was the ancestor of almost every animal alive today (including worms and Bryozoa), our best estimate would be that it was pretty stupid, with the simplest possible “brain”.

Putting this all together, it seems evolutionarily easy to get from urbilaterian intelligence to octopus intelligence, and from octopus intelligence to dolphin intelligence, and thus from urbilaterian to dolphin.

Note that this argument assumes that intelligence can be put on something like a linear scale. One could argue that octopuses have low social intelligence, for instance. But then one could repeat the argument with distant animals with high social intelligence, such as certain insects. Especially if one believes in a more general form of intelligence, it seems that this family of arguments could be used effectively to demonstrate dolphin-level intelligence emerging easily from very low levels of intelligence.

Application to the Great Filter

The Great Filter (related to the Fermi Paradox) is the argument that since we don’t see any evidence of complex technological life in the universe, something must be preventing its emergence. At some point on the trajectory, something is culling almost all species.

An “early” Great Filter wouldn’t affect us: it would mean that we have already got through the filter; it’s in our past, so the emptiness among the stars doesn’t say anything negative about our prospects. A “late” Great Filter is bad news: it implies that few civilizations make it from technological civilization to star-spanning civilization, with bad results for us. Note that AI is certainly not a Great Filter: an AI would likely expand through the universe itself.

The real filter could be a combination of an early one and a late one, of course. But, unless the factors are exquisitely well-balanced, it’s likely that there is one location in civilizational development where most of the filter lies (i.e. where the probability of getting to the next stage is the lowest). Some possible locations for this could be:

Life itself is unlikely (very early great filter).

Life with a central nervous system is unlikely.

**Many different possible locations for the Great Filter between urbilaterian and dolphin intelligence.**

Getting from dolphin to human intelligence is unlikely.

Getting from human intelligence to technological civilization is unlikely (latest early filter).

Getting from technological civilization to star-spanning civilization is unlikely.

These categories aren’t of the same size, of course: the first three are very diverse and large, for instance. What the evolutionary argument above says is that the Great Filter is unlikely to be in the third, bolded category (which is in fact a multi-category).
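One way to make “the one location where most of the filter lies” concrete: if the stages are treated as sequential and independent, the overall chance of getting through is the product of the per-stage pass probabilities, and each stage’s share of the filter can be measured as its share of the total negative log-probability. The minimal Python sketch below assumes that simple model; the stage names paraphrase the list above, and every probability in it is a made-up placeholder, not an estimate from this post.

```python
import math

# HYPOTHETICAL per-stage pass probabilities: placeholders only, NOT estimates.
# The model assumes sequential, independent stages (itself a simplification).
STAGES = {
    "life": 1e-6,
    "central nervous system": 1e-2,
    "urbilaterian -> dolphin": 0.5,
    "dolphin -> human": 0.1,
    "human -> technology": 0.5,
    "technology -> stars": 0.9,
}

def filter_shares(stages):
    """Return the overall pass probability and each stage's share of the
    filter, measured as its fraction of the total -log(probability)."""
    logs = {name: -math.log(p) for name, p in stages.items()}
    total = sum(logs.values())
    overall = math.exp(-total)  # equals the product of the probabilities
    return overall, {name: v / total for name, v in logs.items()}

overall, shares = filter_shares(STAGES)
print(f"overall pass probability: {overall:.2e}")
for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:28s} {share:6.1%}")
```

With numbers like these, a single stage dominates the log-improbability, which is the sense in which most of the filter “lies” in one place unless the factors are finely balanced.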

For what it’s worth, my personal judgement is that the filter lies before the creation of a central nervous system.


I don’t think filters have to be sequential—some could be alternatives to each other, and they might interact. Consider the following.

Each supernova sterilizes everything for several light years around it. This galaxy has three supernovas per century, and it used to have more. Earth has gone unsterilized for 3.6 billion years, i.e. each of the last (very roughly) 100 million supernovas was far enough away not to kill it.
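The “roughly 100 million” figure follows from the current rate alone; taking three supernovas per century as a constant rate (an assumption, and since the rate used to be higher, this is a lower bound):

```latex
N \approx \frac{3}{100\ \text{yr}} \times 3.6 \times 10^{9}\ \text{yr} \approx 1.1 \times 10^{8}
```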

That’s easy to do for a planet somewhere on the outer rim, but the ones out there seem to lack heavy elements. Even if single-celled, multicellular, even intelligent life were easy given a couple billion years of evolution, you still couldn’t go to space with a periodic table that didn’t contain any metals.

So planets in areas with lots of supernova activity (i.e. high density of stars) could simply never have enough time between sterilizations to achieve spacefaring civilization, while planets in areas with low density of stars/supernovas haven’t accumulated enough heavy elements to build industry and spaceships. Neither effect prohibits everything, but together they’re a great filter.

There could be other combinations of prohibitive factors, where passing one makes passing the other more difficult. Maybe you need to be a carnivore in order to evolve theory of mind, but you also need to be a herbivore in order to evolve agriculture and exponential food surplus. Or maybe you need tectonic plates to avoid stratification of elements, but you also need a very stable orbit around your star, and those two conditions usually rule out each other. I don’t know. It just seems that a practically linear model of sequential filters, where filters basically don’t interact with each other, is entirely too simplistic to merit confidence.

In a few years, we’ll have a much clearer picture of the chemical makeup of the closest few hundred exoplanets, and that’ll cut down the number of possible explanations of Fermi’s Paradox to a maybe sort of manageable size. Until then, this discussion is unlikely to lead anywhere.

Really-quick-and-dirty calculation time!

Let’s say 3 supernovas per century and each sterilizing 10 light years in radius.

That produces an average sterilization volume on the order of a hundred cubic light years per year (0.03 supernovas per year times about 4,200 cubic light years each, or roughly 126). Total volume of the galactic thin disc is on the order of 2*10^13 cubic light years. That produces a half-life of sterilization on the order of a hundred billion years, though you can bring it down to about a billion years if you increase the supernova rate by a factor of a hundred or increase the sterilization radius to around 50 light years.
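The quick-and-dirty estimate can be written out as a short Python sketch. It uses the comment’s own inputs (3 supernovas per century, a 10 light year kill radius, a disc of about 2*10^13 cubic light years) and additionally assumes that supernovas strike uniformly at random in space and time, so a given point’s survival decays exponentially. Under those assumptions the sterilized volume is roughly 126 cubic light years per year and the half-life about 1.1 × 10^11 years; edge effects and the concentration of supernovas toward the core are ignored.

```python
import math

# Order-of-magnitude inputs from the comment (assumptions, not data).
RATE_PER_YEAR = 3 / 100    # 3 supernovae per century
KILL_RADIUS_LY = 10.0      # each sterilizes a sphere of radius 10 light years
DISC_VOLUME_LY3 = 2e13     # rough volume of the galactic thin disc

def sterilization(rate_per_year, radius_ly, disc_volume_ly3):
    """Return (sterilized volume per year, half-life in years) for a random
    point in the disc, assuming supernovae occur at uniformly random places
    and times (a Poisson process) and ignoring edge effects."""
    sphere = 4 / 3 * math.pi * radius_ly ** 3
    volume_per_year = rate_per_year * sphere
    # The fraction of the disc sterilized per year acts as a constant hazard
    # rate, so a point's survival probability decays as exp(-hazard * t).
    hazard = volume_per_year / disc_volume_ly3
    return volume_per_year, math.log(2) / hazard

vol, half_life = sterilization(RATE_PER_YEAR, KILL_RADIUS_LY, DISC_VOLUME_LY3)
print(f"{vol:.0f} cubic ly per year, half-life {half_life:.1e} years")

# Sensitivity checks: a hundredfold rate, or a 50 ly kill radius.
faster = sterilization(100 * RATE_PER_YEAR, KILL_RADIUS_LY, DISC_VOLUME_LY3)
wider = sterilization(RATE_PER_YEAR, 50.0, DISC_VOLUME_LY3)
print(f"{faster[1]:.1e} years, {wider[1]:.1e} years")
```

Because the half-life scales as 1/(rate × radius³), the kill radius matters far more than the rate: a fivefold larger radius has about the same effect as a hundredfold higher rate.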

We can probably discount the galactic core for any purposes though—I’ve seen fun papers proposing evidence that it undergoes periodic starbursts every few tens of millions of years and the galactic supernova rate then briefly goes up to something like one per year with most of them in the core.

Thanks, but it appears we’re both wrong. Here is a nice intro article that gives proper numbers on this very subject and concludes supernovae aren’t a life-forbidding problem even in the galactic center.

But high density of stars might lead to planetary orbit perturbations which could be. It appears the galaxy is a bit complicated.

BTW, this recently showed up on arXiv:

(“At z > 0.5” approximately means ‘more than 5 billion years ago’.)

(I have only read the abstract so far.)
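As a rough check on the “z > 0.5 means more than about 5 billion years ago” gloss: in a flat ΛCDM cosmology, the lookback time to redshift z is (1/H0) times the integral from 0 to z of dz' / ((1+z') E(z')), with E(z) = sqrt(Ωm (1+z)^3 + ΩΛ). The Python sketch below assumes round-number parameters (H0 = 70 km/s/Mpc, Ωm = 0.3, ΩΛ = 0.7) that may differ from those used in the paper; with them, z = 0.5 comes out at about 5 billion years.

```python
import math

# ASSUMED flat Lambda-CDM parameters (round numbers, not necessarily the
# paper's).
H0_KM_S_MPC = 70.0
OMEGA_M = 0.3
OMEGA_L = 0.7

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.15576e16

def hubble_time_gyr(h0):
    """1/H0, converted from (km/s/Mpc)^-1 to billions of years."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR

def lookback_time_gyr(z, steps=1000):
    """Lookback time (1/H0) * integral_0^z dz' / ((1+z') E(z')),
    integrated numerically with Simpson's rule."""
    def integrand(zp):
        e = math.sqrt(OMEGA_M * (1 + zp) ** 3 + OMEGA_L)
        return 1.0 / ((1 + zp) * e)
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    h = z / steps
    total = integrand(0.0) + integrand(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(i * h)
    return hubble_time_gyr(H0_KM_S_MPC) * total * h / 3

print(f"lookback time to z = 0.5: {lookback_time_gyr(0.5):.2f} Gyr")
```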

Very interesting, thank you! I especially like the insight that for evolution to go on uninterrupted for 5 billion years, you don’t just need a particular type of planet (not hot, not cold, in a stable orbit) in a particular region of the galaxy (on the outskirts), but this planet also needs to be inside a particular type of galaxy (big, old, not dense) that happens to be in a particular type of intergalactic environment (not dense, lacking low metallicity dwarf galaxy neighbors). This helps with the sharper version of the Fermi paradox that assumes the possibility of intergalactic travel.

I’m not a physicist, but as far as I understand the paper, their assumption of what constitutes a “lethal” amount of Gamma Ray Burst damage to a planet seems kind of arbitrary. Their description indicates that it’d kill everything on the surface and everything underwater that feeds on plankton. But I see nothing to indicate that, say, life on hydrothermal vents, or bacteria living deep underground (which exist on our planet at least two miles down) couldn’t survive what the authors call “lethal”. So abiogenesis would not need to happen again in those cases, nor would evolution of very basic metabolic structures that evolution would again build upon. Even a small bunch of tiny replicators that survived with, say, three billion years of previous evolution under their belt might re-colonize the planet much more quickly and diversely than newly arisen ones could.

Meaning that, as a layman, I don’t see how we’d distinguish between a past where Earth was hit by a “lethal” GRB 2 billion years ago (when there were just eukaryotes, prokaryotes and cyanobacteria), and one where it wasn’t.

Actually, I’m not a layman, and I have some ideas.

The Proterozoic (2 billion years ago) is a time period that geologists affectionately call the ‘boring billion’. In those rock strata, we very often find biogenic stromatolites, crumpled accretionary structures produced by the accumulation of mineral waste products in microbial metabolism. In the wild, they look like lumpy rocks on coastlines and in lakes, with a thin biofilm on top. Think of them as the microbial forests through which the early eukaryotes would have foraged and hunted. These ecosystems are also exclusively shallow-water, since they require sunlight and water in copious supply.

As such, they would be wiped out by a ‘moderate’ gamma ray burst, since they don’t have the protection of deep oceans. In other words, there would be a specific moment at which accretion halted for every biogenic stromatolite at the same time. This would be followed, in geologic history, by a shortish period in which newly lithified sediments lacked a biological influence, as life clawed its way back from the deep oceans. Even if the biosphere that followed was indistinguishable from the previous incarnation (which itself seems unlikely), we’d be able to see an interruption.

We haven’t yet found evidence of such a hiatus in the Precambrian. It’s a big history, so it’s always possible that the evidence will turn up later, but it’s worth pointing out that we have found interruptions of comparable magnitude, from different sources.

Indeed, according to Wikipedia at least, we don’t know whether the Ordovician–Silurian extinction event was caused by a GRB or not.

A much more plausible filter, along the same lines, is Earth never leaving a certain range of temperatures over four billion years or so, even as the Sun shone brighter.

There could be many filters along the same lines, such as never having evolved a very successful but simple organism that eats everything complex, which would have prompted a restart.

Given our own existence, we can perhaps rule out theories which give very low probability of emergence of life in the whole universe, but the probability of emergence of life on a given habitable planet may still be incredibly low (if some molecules have to randomly combine in a certain specific way).

I don’t agree that metals and heavy elements are necessary for industry and spaceships: you can do quite a lot with light elements, particularly carbon (for example plastics, carbon fiber, etc.). Also, biology makes all of its structure through lighter elements.

That being said, I think you’re very much on the money with the general idea: I also thought something similar while reading the article (that the filters are likely multivariate and interdependent), but not in as well thought out a way.

We can do quite a lot with light elements now, after we spent millennia figuring out metals. We still use a lot of metal equipment and catalysts in the manufacturing of polymers and carbon fiber. I’m sure there are processes for making them without metals, but getting civilization going in the first place would be much harder without elements heavier than iron.

[gorillas have theory of mind](http://www.newscientist.com/article/dn18658-mindreading-gorillas-love-a-good-game.html#.VAm-UXVdX0o)

Although, maybe an ancestor of theirs was an omnivore?

Agree. The road from the creation of life to the creation of any nervous system at all is an extremely long and fraught one.

Life on our planet has a very specific chemistry. It’s possible that almost all possible chemistries limit complexity more than ours does, leading to many planets of very simple organisms. A very large number of lineages on Earth reach evolutionary dead ends: both archaea and bacteria are stuck as single-celled organisms (or very simple aggregates), plants cannot develop movement because of their cell walls, and insects cannot grow bigger because their tracheal breathing systems and exoskeletons do not scale up.

Genetics is an entire optimization layer underlying our own, neural one. I think the fact that it had to throw up an entirely new, viable optimization layer represents a filter.

This is another good explanation instead of / in addition to the Great Filter.

It could be that there are many local optima to life, that are hard to escape. And that intelligence requires an unlikely local optimum. This functions like an early Great Filter, but in addition, failing this filter (by going to a bad local optimum) might make it impossible to start over.

For example, you could imagine that it might be possible to evolve a gray-goo-like organism which eats everything else, but which is very robust to mutations, so it doesn’t evolve further.

Yes. And this is what has happened to most branches of the tree of life, e.g. archaea, bacteria, the various protists. Only very basic multicellularity occurs in most kingdoms.

Could it be that different niches of life don’t independently evolve because those niches are already being filled? Can we say with confidence that if all animals died, something like animals wouldn’t eventually evolve again? Or any order of life.

That’s a good point. I guess not. My intuition is that a lot of organisms have evolved simple multicellularity, and so would probably be doing better as unified multicellular organisms, but it’s possible, as you say, that they haven’t gotten to that point for lack of the niche. I don’t know enough about the topic to say.

One theory gaining support recently is that the transition from prokaryote to eukaryote was damned hard, so that seems like a decent candidate given our limited knowledge today.

If the current model (merger of multiple prokaryotes) is correct, then the linked article (which says “And in more than 3 billion years of existence, it happened exactly once.”) is incorrect. Nuclei, mitochondria, and chloroplasts represent 3 distinct merger events here on Earth. Actually, the article even mentions nuclei, mitochondria, and chloroplasts all being likely endosymbionts, then goes and repeats the claim of uniqueness.

In any case, if it can happen 3 times on one planet, it probably isn’t dramatically unlikely, especially since at least one of those three events (chloroplasts) is strictly unnecessary for intelligence (in that no known intelligent species possesses them).

I wasn’t proposing the merger of multiple prokaryotes as a candidate for Great Filter, I was proposing the transition from prokaryote to eukaryote as a candidate for Great Filter.

Fair enough. I was reaching too far in assuming endosymbiotic events were the limiting factor in that transition.

Wait, nuclei? Link?

Where’s the host’s DNA, then?

That’s a version of the “chimeric theory”, one of a few for the origin of eukaryotic organization: see Wikipedia’s article. The DNA of the host cell would have migrated into the encapsulated bacterium or archaeon, through processes that I’ll admit I’m a bit fuzzy on.

(Wikipedia links several papers in support of this theory; the only un-paywalled one I could find, however, is Margulis et al. 2000.)

Huh. I’ve skimmed this a few times and it’s pretty hard to understand… so they are saying that the nucleus ancestor was a fast swimming oxygen avoider, and it basically chose to avoid oxygen by hiding inside another cell like a hermit crab—and in exchange, pushing the “shell” cell around...and eventually fusing genomes with it?

Link to a review article

I don’t know the answer to your question other than “they merged more fully than the genomes of other endosymbionts,” and in any case endosymbiosis is only one proposed explanation for the origin of the nucleus.

If the Machiavellian Intelligence Hypothesis is the correct explanation for the runaway explosion of human intellect (that we got smarter in order to outcompete each other for status, not in order to survive), then solitary species like the octopus would simply never experience the selection pressure needed to push them up to human level. Dolphins, in contrast, are social animals, and maybe dolphins would be susceptible to intra-species selection for intelligence.

However, dolphins would hit a different filter, with their unfortunate body plan, lacking any type of fine manipulator limb whatsoever, making it infeasible to build complex tools.

Octopuses also have the feature that they die after mating (it’s unclear why this evolved). This makes it impossible for them to develop a culture that they can pass on to their children.

Not necessarily. A culture that include the concept of a “raiser”—an octopus with the job of raising the babies, and passing the culture on to them, without mating at all—can avoid that issue. The “raiser” would also improve his average genetic fitness if he is a sibling of one of the parents, since the children would then all have approximately one-quarter of his genes.

If it’s not enough to kill off the species, evolution generally won’t drop the feature.

This is a lot less motivation than for parents.
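The “one-quarter” figure and the comparison with parents both come from the coefficient of relatedness r. A minimal Python sketch on a hypothetical pedigree (names are illustrative; it assumes full siblings, unrelated founders and no inbreeding) computes r as twice the kinship coefficient, using the standard recursion through the parents of the younger individual:

```python
from functools import lru_cache

# HYPOTHETICAL pedigree: child -> (mother, father). Founders have no entry.
# "raiser" is a full sibling of "mom"; both are children of "gm" and "gf".
PEDIGREE = {
    "mom": ("gm", "gf"),
    "raiser": ("gm", "gf"),
    "child": ("mom", "dad"),
}

def depth(x):
    """Generation depth: 0 for founders, else 1 + the deepest parent."""
    if x not in PEDIGREE:
        return 0
    return 1 + max(depth(p) for p in PEDIGREE[x])

@lru_cache(maxsize=None)
def kinship(a, b):
    """Probability that alleles drawn at random from a and b are identical
    by descent. Assumes no inbreeding, so kinship(a, a) = 1/2."""
    if a == b:
        return 0.5
    # Recurse through the parents of the younger individual, so we never
    # step through someone who is an ancestor of the other.
    if depth(a) < depth(b):
        a, b = b, a
    if a not in PEDIGREE:
        return 0.0  # two distinct founders are assumed unrelated
    mother, father = PEDIGREE[a]
    return 0.5 * (kinship(mother, b) + kinship(father, b))

def relatedness(a, b):
    """Coefficient of relatedness r = 2 * kinship (valid without inbreeding)."""
    return 2 * kinship(a, b)

print(relatedness("child", "mom"))     # parent and child
print(relatedness("child", "raiser"))  # aunt/uncle and niece/nephew
```

A parent shares r = 1/2 with each child and the sibling “raiser” r = 1/4, so under Hamilton’s rule (helping is favored when r × benefit > cost) the raiser needs roughly twice the benefit per unit cost to be favored to the same degree, which is the sense in which the motivation is weaker.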

Well, for starter if you don’t die after mating you might be able to mate again.

According to my source, which is a blog comment that doesn’t cite its sources, the death is a form of controlled cell-death and scientists have been able to remove the gene responsible and the resulting octopuses (or squid) can mate again later.

That is true.

This might—purely hypothetically—lead to a massive boom in octopus population, causing the octopi to eat everything edible, causing mass starvation.

Or it might be that octopi lack any form of parental instinct; that, six months after the babies are born, the parents see them as “food”, severely reducing the probability of there being another generation. (This would even be an advantageous mutation for octopi that die after mating, because it means that a given genetic line will work towards eradicating the children of any other genetic lines, giving their children less competition...)

Either way, until an octopus in the wild develops the “does-not-die-after-mating” mutation on its own (or possibly escapes from the lab, if what those scientists did is inheritable), evolution will do nothing to get rid of it. And once it does turn up in the wild—well, all else being equal, I’d expect that mutation would supplant the previous, “die-after-mating” model eventually… after a few thousand years or so. Evolution is not, by any means, a completed process.

This relies on group selection to work.

Why was this down-voted?

I’m not quite sure; I think it might be because of the claim that one of the possibilities I’d suggested required invoking group selection to work (a claim which I’m not sure is valid, but I’m also not sure enough of my grounds to argue against). That’s the only reason I can think of...

For a species driven entirely by instinct, yes. But given a species that is able to reason, wouldn’t a “raiser” who is given a whole group to raise be more efficient than parents? The benefit of a small minority of tribe members passing down their culture would certainly outweigh those few members also having children.

In addition, cultural memes can evolve and be passed down completely independently of genes.

It doesn’t matter to the cultural memes if they propagate using genetically unfit people; celibate monkhood was a real thing.

From the genes’ point of view, the soma is just a vehicle for transporting the genes through time. With each generation, the genes shed the soma like people change their car. If the parent is unnecessary for the survival of the children, the genes in the children may be better off for not having the parent around to compete for food.

See my comment about group selection below.

This is competition between the parents and their own offspring. No group selection.

Orson Scott Card uses this dynamic in the later Ender’s Game books.

The world-building in the first Ender’s Game book can be made roughly consistent if we assume that all the adults have turned their decision-making over to a computer program (which does not eat the solar system Because Magic). But the later books have no standard or intended models.

Do you expect animals with human-like intelligence and dolphin-like bodies will fail to develop technological civilization? As a first approximation, I expect a community of modern human engineers (with basic technical background, but no specific knowledge) in dolphin bodies can manage to do that eventually, if they form a society conducive to long-term pursuit of the project. It’s less clear if at human level this happens spontaneously, since it did take 200,000 years for humans with hands to get to technological civilization, and an additional difficulty could make it millions of years if intelligence is kept fixed.

(Assuming that Machiavellian intelligence pressure can run further than it did with humans, Machiavellian dolphins could at some point become even smarter than humans, which can be used to overcome the no-hands difficulty more effectively than human-level dolphins could. Alternatively, human-level dolphins can learn of selective breeding and create smarter dolphins irrespective of whether smarter dolphins would arise on their own.)

There’s also an important difference in their environment. The underwater environment (oceans, seas, lagoons) seems much poorer. There are no trees underwater to climb on, whose branches or sticks could be used for tools; you can’t use gravity to devise traps; there’s no fire, much simpler geology, little prospect for farming, etc.

I wonder—if an underwater civilisation were to arise, would they consider an open-air civilisation impossible?

“You’re stuck crawling around in a mere two dimensions, unless you put a lot of evolutionary effort into wings, but then you have terrible weight limits on the size of the brain; you can’t assign land to kelp farms and then live in the area above it, so total population is severely limited; and every couple of centuries or so a tsunami will come and wipe out anything built along the coast...”

It’s hard to evaluate for the same reason it’s hard to evaluate whether off-world life could be non-carbon/water-based (maybe we just don’t have the imagination), but I think that excluding humans, land-based ‘civilization’ would still look superior on the merits of what animals and other creatures have done. If we look at compilations of tool use like https://en.wikipedia.org/wiki/Tool_use_by_animals land life dominates.

Complex sea life mostly consists of octopuses and cetaceans; the former seem to only use rudimentary tools for shelter, while the latter do ‘bubble netting’ (interesting but not a step towards anything), nose protection with sponges (proto-clothing?), and shells as scoops. Otters hammer open sea urchins with rocks, similar to some fishes. Further, they’re cut off from sea sources of metal and minerals like deep-sea vents—dolphins can’t go that deep.

In contrast, land life has tool use spread over all sorts of creatures from insects to birds. They benefit from sharp unworn stones (smashing, throwing), abundant sticks and thorns (which can be used in all sorts of ways—picking up termites, jabbing for fish, measuring water depth, impaling & storing prey like the shrikes, walking sticks, bridges, digging, cleaning nails & ears, etc); and many of those uses make little sense in water—you can hardly drop or throw a big stone in the ocean—which also means the rewards to tool use are lower.

Then we pass from tool use to structure building https://en.wikipedia.org/wiki/Structures_built_by_animals

So in other words, it’s almost exclusively a land animal thing; it’s not that you can’t build structures in the sea, but that it doesn’t make sense for most creatures—such as otters or cetaceans, which were some of our best candidates. This loses out on more benefits from tool use.

And then there’s the issue that the sea seems to punish big-brained animals: cetaceans and octopuses may have high encephalization quotients, but what else at sea does?

So I think if aliens were to come to earth a million years ago and poke around the ocean and land, they would note that a variety of the species on land seem to be using a lot of tools in all sorts of ways and often building structures and their brains tend to be fairly big, and conclude that yes, it looks like the land really is better for the activities closest to technology—after all, if the sea is so great, why aren’t the creatures there doing much?

You make a very compelling argument, and on balance I think that you are probably correct in your conclusions.

Part of it may be because, for a land animal, the ground is always there. There’s always a strong probability of a rock at your feet to pick up. For sea creatures, it’s possible (in theory) to wander around for months without seeing another solid object. So, land animals have less space to move about in, but have an easier time finding simple tools.

This, of course, relies on the idea that tools—unliving lumps of matter used for a purpose—are a necessary component of a civilisation. It goes without saying that tools are a necessary component of our civilisation; but are they a necessary component of all possible civilisations?

The theoretical underwater civilisation has one thing in great abundance—space. The oceans cover three-quarters of our planet, and sea creatures can move up and down easily enough. Is there any way that that space can be used, as a foundation for some form of aquatic civilisation?

Thinking about bubble netting—it should be possible for dolphins to practice a form of agriculture, herding and taming schools of edible fish, much like shepherds. (I believe ants do something similar with aphids, and I’m pretty sure a dolphin is more intelligent than an ant). Once one has shepherds, one can easily move towards the idea of breeding fish for a purpose—breeding big fish with big fish to get bigger fish, for example. Or breeding tasty with tasty to get tastier. There’s certainly space in the oceans for the dolphins to create a lot of fish farms… and then for these fish farms to swap and interbreed particularly interesting lines.

I’m not quite sure how to believably get beyond a basic agricultural/nomadic existence, though. (Unless perhaps the dolphins start breeding intelligent octopi with intelligent octopi to get more intelligent octopi or something along those lines).

Dolphins are able to herd schools of fish, cooperating to keep a ‘ball’ of fish together for a long time while feeding from it.

However, taming and sustained breeding is a long way from herding behavior—it requires long term planning for multi-year time periods, and I’m not sure if that has been observed in dolphins.

Kelp and fish can be farmed.

How? You cannot have fire (no, magnesium, phosphorus and so on do not count, since you do not get them without fire), thus you do not get metals, steam or the internal combustion engine. Since you do not get metals, you do not get precision tools or electricity. You are more or less stuck with sharpened rocks and whale bones as a very poor substitute for wood (if you get them in the first place). I am very curious how you think a human or even smarter-than-human intelligence might bootstrap an industrial civilisation from there.

Mostly I expect creative surprises based on overall impression about the power of engineering. Let’s try to do a bit of exploratory engineering, consider projects that include steps that are clearly suboptimal, but seem like they could do the trick. (A practicing engineer or ten years of planning would improve this dramatically, removing stupid assumptions and finding better alternatives; a hundred thousand years of actually working on the subprojects will do even better.)

Initially, power can be provided by pulling strong vines (some kind of seaweed will probably work) attached together. It should be possible to farm trees somewhere on the shoreline, if you don’t mind waiting a few decades (not sure if there are any useful underwater plants, but there could be). A saw could be made of something like a shark jaw with vines attached to the sides, so that it can be dragged back and forth. This could be used to make wooden supporting structures that help with improving control of what kind of change is inflicted on the material by a saw. Eventually, incremental improvements in control and precision of saws would allow getting to something functionally similar to sawmills, bonecraft and woodcraft tools.

These enable screws, joints, jars and all kinds of basic mechanical components, which can be used to build tools for manipulating things on the surfaces of rafts, so that in principle it becomes possible to do anything there given enough woodcrafting and bonecrafting work. At this point we probably also have fire and can use tides to power simple machinery, so that it’s practical to create bigger controlled environments and study chemistry and materials. And we get concrete/cement to build watertight structures and possibly canals with locks for land access. Something like ironsand, or ores found by surface exploration, can be used to get the first metal and develop precision tools, at which point we get electricity and more powerful chemistry capable of extracting all kinds of things from available materials, however inefficiently. After that, there doesn’t appear to be much difference from what’s available to humans.

I think the basic problem here is that I have to prove a negative, which is, as we all know, impossible. So I am pretty much reduced to debating your suggestions. This will sound quite nitpicky, but it is not meant to offend; it is meant to show where the difficulties would be:

Power to what? Whatever it is, it has to be built without hands!!! And with very basic tools. No, seaweed would not work, because there is no evolutionary pressure on aquatic plants to build the strong supporting structures we use from terrestrial plants.

No, trees do not grow in salty environments (except mangroves). How does a dolphin plant and harvest mangroves without hands and without an axe or a saw (see below)?

No, it cannot: shark teeth would break quickly, and even if they did not, they do not have the right shape to saw wood. Humans almost exclusively used axes and knives for woodcrafting before the advent of advanced metallurgy. And you do not get vines.

I think you severely underestimate just how helpless a dolphin would be on such a raft. Or are we talking about remote operation? Without metal? Without precision tools? (I mean real 19th-century precision tools, such as the lathe and the milling cutter, not stone age “precision tools”.)

To get land access and do useful work there (gather wood, make fire, smelt metal, etc.), a dolphin would imho need something like a powered exoskeleton, controlled perhaps by fin movement or, better, by DNI. Modern humanity might be able to build something that enables a dolphin to work on land, but not a medieval or stone age human civilisation, and certainly not a stone age civilisation without hands.

I hope I have brought across the kind of difficulties that I think would prevent your dolphin engineers from ever getting anywhere. If you disagree on a certain point, I am willing to discuss it in greater detail.

It’s not impossible. Significant evidence of the negative will be obtained if performing a thorough investigation (which would be expected to solve the problem if it can be solved) fails to solve the problem. Applying this to flaws in particular steps, the useful goal is to show that something can’t be done (that we won’t find an alternative solution), not just that something won’t work if done in a particular way.

For constructing a plan, I have another idea. Start with the simpler problem of developing technology as dolphins with hands. This hypothetical isolates the problem of dealing with the underwater environment from the problem of dealing with the absence of hands.

Let’s suppose that it’s possible to solve this simpler problem. Then take a particular tiny operation that could be performed with hands (a step in the process of developing technology by dolphins with hands, such as smashing something with something else, or tying a knot). I’m not sure it’s impossible to reproduce it without hands (much more laboriously and slowly, using more people). Can you come up with a particular example of a very simple action that can be performed with hands (underwater, etc.) which doesn’t look like it can be reduced to working without hands?

Go to a museum of prehistory: even the simplest items you see (stones tied very securely to sticks, for example) are not at all easy to make with your hands, and are going to be so hard to make with just snouts that they could be deemed impossible (and would be properly impossible if you consider, e.g., the rate of decay of your materials combined with the minimum time to build the item, or the like; sufficient difficulty is impossibility). It’s not that you can’t tie some knot, it’s that you can’t do so reliably and with high precision in the spot where you need it.

I think we can all agree that the difficulty gap is absolutely immense. Perhaps dolphin bodies, with no magical knowledge, could do it, but at an intelligence level that is utterly, immensely superhuman.

You could always argue that we are both not creative or intelligent enough to find a solution, and that this does not indicate that a whole society would not find one. And this argument may well be correct.

What does that even mean? A dolphin body with functional human arms and a human brain attached, plus the modifications necessary to make that work? Well, now you have more or less a mermaid with very substantial terrestrial capabilities (well exceeding those of a seal; watch this to get an impression of what I mean). A group of creatures like that with general knowledge of science might well make it.

Now imagine this creature as strictly waterbound, and I think even in this much simpler problem we can identify a major showstopper: iron smelting. Imagine this mermaid civilisation with proper hands, flint tools (can flint be found in the oceans? I don’t know) and modern scientific knowledge trying to light a fire. They gather mangroves using their flint axes, build a raft and throw some wood on top to dry. What now? They cannot board the raft to strike or drill fire, so they might try to build a mirror to use sunlight. Humans did not do that, but they did not know science, so granted. How do they build it without glass or metal? I don’t know, but let’s say they manage. So now they have fire: not controlled fire, but a bonfire on top of a wooden raft. But they don’t need a bonfire, they need something like a bloomery, and then they need to do some very serious smithing just to build something like a very crude excavator arm to do very basic manipulations in a terrestrial environment. And you cannot do smithing under water.

Can you imagine a way that a group of quadriplegics (imho a good approximation of a stranded dolphin with a human brain, except that their skin does not dry out) could fell a tree with stone tools? And delimb it? And bring it to the construction site? And erect it as a pillar?

I don’t know about quadriplegics, but I can imagine a way that a group of dolphins might be able to fell a tree using only stone tools and a bit of seaweed.

First, they would need a suitable tree. One that grows near the water (probably near a river they can swim up) where it’s easy enough to get to (and other dolphins can stay in the river and splash the woodcutter to prevent his skin from drying out).

Then, they need a stone axehead. This can be made, fairly laboriously, using only stone: chipping away until it is the right shape and sharp enough.

The dolphins then elect one of their number to be the woodcutter, and use the kelp to tie the axehead to his tail, at a carefully chosen angle. (This part can be done underwater, where the dolphin(s) tying the knots can swim around at all angles to get the kelp in position; another dolphin would probably need to hold the axehead in position while this is going on).

A dolphin’s tail can certainly swing back and forth (or, up and down) with some force, as this motion is used when swimming. So the woodcutter would then need to climb out of the water, turn on his side, and strike the tree repeatedly with the axehead...

If he has a better idea of what he’s doing than I would, he may even be able to arrange for the tree to fall into the river, at which point transportation is comparatively easily handled.

Given an expectation of how hard the problem is to solve if it can be solved, failing to solve it with a given effort produces corresponding evidence that it cannot be solved. Not updating on a failure to solve the problem amounts to actually expecting the problem to be very hard. If I don’t expect that, I would be wrong to suggest that failing to solve the problem is not evidence that it is impossible.

Another framing is to generalize “inability to solve the problem” upon the conclusion of the project, to a situation where the expectation that the problem can be solved eventually is reduced. Correspondingly, generalize “ability to solve the problem” with expectation having gone up upon the project’s conclusion. This way, it’s clear by conservation of expected evidence that you can’t expect that the estimate for the probability that the problem is solvable will go in a particular direction upon the conclusion of the project. Either the expectation will go up (and so the project produces evidence of the possibility of eventually solving the problem), or else it must go down (and so you gain that elusive evidence of the negative).
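The conservation-of-expected-evidence step can be written out as a one-line identity. Here S stands for “the problem is solvable”, and s and f for the project ending in success or failure; these symbols are my labels for illustration, not notation from the thread.

$$P(S) = P(s)\,P(S \mid s) + P(f)\,P(S \mid f), \qquad P(s) + P(f) = 1$$

So the prior is a weighted average of the two possible posteriors. If success would raise the probability, P(S | s) > P(S), and both outcomes are possible, then necessarily P(S | f) < P(S): a thorough attempt that fails has to count as some evidence of the negative.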

Sure, depending on what you are thinking about as the reference procedure of, say, chopping down a tree when using hands. Dolphins with hands won’t just be swinging an axe on the surface, as they would first need to solve the problem of being able to move around, so I’m responding to the analogy with humans who have to do the task without hands, but do have legs. For dolphins, we would need to start with the reference procedure where it’s clear how dolphins with hands can do something.

To chop down a tree, you need to strike it repeatedly with an axe (this is what I assume you meant). To strike it repeatedly, you need to be able to strike it once. It’s such actions as striking a tree with an axe once that I meant as something that I expect can be reduced.

Let’s make the handle of the axe a much longer stick, and also attach another stick perpendicular to it to control the tilt of the axe head, so that the blade can be turned in the correct direction without applying torque directly to the handle. The long handle can rest on top of a third stick perpendicular to it and ride along that third stick, with the end (knob) of the handle fixed in place. When it does so, the head of the axe swings. Now, if we let the axe head fall under its own weight while guided by (“riding” on) the third stick, or alternatively pull it so that the axe gains the necessary speed, and use the second stick to direct the blade, the result is the axe head striking the tree with the blade at enough speed to dent it. Perhaps such a method would be a hundred or more times slower, so that it could take a year to do a job that would otherwise take a day, and that is just what I meant by the process being much less efficient and more laborious.

(Not sure what you mean by “strictly waterbound”, though this distinction doesn’t seem important for this discussion. The hypothetical considers creatures that are like dolphins in all relevant respects except that they also have hands (maybe as additional retractable limbs, to preserve swimming capabilities). So they should be about as waterbound as dolphins. If this hypothetical allows technology, we could then pose the more difficult problem of developing technology without the ability to surface even for a short time (which dolphins have).)

Agreed

No, they are not. They are much less waterbound than seals (watch the video), because they can move around on their hands and use their hands to cover themselves with seaweed or some such to protect against drying out and the sun. I fully agree with you that such creatures can bootstrap a civilisation, especially if they have scientific knowledge.

Where I disagree is on whether an unmodified dolphin, or a strictly waterbound (arbitrarily defined as unable to leave the water for more than 5 seconds) “dolphin with hands”, gets anything done on the surface without significant technology to start with (arbitrarily defined as anything humans could not build 40000 years ago). They would run into the problem that they have to build complex contraptions to perform simple tasks (felling a tree) without being able to build those complex contraptions without the help of even more complex contraptions. (You cannot build what you described in the above quote without having wood and being able to work with it, and doing that in a terrestrial environment, where you cannot do anything in the first place because you cannot move.)

It is not a major problem at all. Given that creatures have hands and can keep them out of water, they can build a bloomery inside a diving bell.

Chicken and egg problem. What are you building the diving bell out of?

More to the point, why would they want to? What would drive them to do so?

For example, out of animal skins. This construction is supported by internal pressure, it does not need strength.

Want exactly what? If “smelt metal”, then probably for the same reason as humans: accidentally placing copper or tin ore in a furnace. If “have an underwater furnace”, it is easier to operate than one placed on a raft. If “why use fire at all”, to make watertight pottery.

The dolphins could practice eugenics and evolve hands or lungs. If they find that unethical, they could selectively breed other organisms for various tasks. That is the same way we humans bootstrapped our civilization. We didn’t start farming and building cities overnight; we had to selectively breed productive crops first, and then useful work animals like horses.

Why would they want to? A modern dolphin can get basically all the food it needs with minimal effort, so the main competition is intra-species. So for a dolphin society to advance technologically, you would require every individual within it to give up some of their own reproductive fitness by putting time and energy into the great project with no immediate benefit. For a technological society to develop, it isn’t enough that with sufficient coordination they could do so; it must be in their self-interest at every step along the way.

edit

It may also be possible to fall into local maxima and not get out of them even once a species has a starting level of technology. Consider that humans spent 2.6 million years or so at paleolithic technology levels, and were probably only knocked out of them by sudden environmental change, not by a gradual process of improving technology.

They don’t. They either starve or are killed by predators, by humans, or by something else.

They haven’t escaped this bloody cycle of exponential population growth on the one hand and mass death on the other.

There is nothing like a “stable population” among dolphins.

This is part of a hypothetical intended to explore the issue of technical feasibility of developing technology using only dolphin bodies (underwater, etc.). This hypothetical removes two other most obvious issues in order to focus attention on this one. It removes the issue of developing useful-in-practice understanding of science by assuming that we have modern engineers. It removes the issue of unfavorable incentives by assuming that the incentives are directed towards the project.

You can explore other issues in other hypotheticals.

This group of dolphins might do it as a (long-term) way to better compete with that group of dolphins over there.

But how do you imagine that would work? How will a longer timespan help?

Let’s picture that we literally took 10 000 top human engineers and scientists, with all our human knowledge, into dolphin bodies, on another planet with no human artefacts. So, our dolphin people now need to somehow develop a way of writing down their knowledge underwater, which they can only do very laboriously because they haven’t got hands. They can write very large letters with immense energy expenditure per letter. They can barely store any knowledge. They also have short lifespans and sharks to worry about.

And on the tools side, you need tools that are good enough that you can use them to make better tools. That generally requires the ability to harden things: make something while it’s soft, let it harden, use something softer to crack apart something harder. And to get that started, you need hands, because without hands you can only make the kind of tools that don’t help you make better tools.

If you can’t make an improvement in any single generation, you can’t make any improvement in a thousand generations either.

Meanwhile, a planet populated with those same scientists and engineers in human bodies—hell, dog bodies, cat bodies, elephant bodies—would’ve had it all sorted out in no time. They’d have steel, electricity, running water, radio, and so on, in less than a generation—hell even 10 people can do that.

(assuming they all cooperate).

The gap due to the body shape and environment appears utterly immense. The only hope would be that dolphins evolve much greater than human intelligence and come up with something that we can’t come up with (e.g. mind-controlling some animal with hands).

edit: That is not to say a small number of top scientists and engineers would single handedly create industrial manufacturing, but that is to say they would re-create pre-industrial village level technology and then hand-make many important bits of 20th century technology. You can take a 16th century blacksmith’s forge and make an electric generator in there, a spark gap transmitter, a coherer receiver, a carbon arc lamp, and the like, using most basic materials and hand manufacturing techniques. Indeed that’s how the early instances of all those things were made—by a small number of top engineers, often in their spare time, without advance knowledge.

That’s a bad start. The issue is not whether intelligent dolphins will be able to replicate human civilization, the issue is whether they might be able to develop their own—one which will look very different from human and which, I suspect, would be largely beyond our imagination at the moment.

The point of the example is to provoke a concrete discussion. Appeals to the unimaginable are not very useful. The underwater environment doesn’t seem as conducive to a technological, visible-from-a-distance civilization, in any case. Dramatically different civilizations may not go into space, and if we are discussing civilizations whose apparent absence from the sky is suspicious, those are civilizations that recreate a huge chunk of our own.

edit: let’s put it this way. The only data point we have on things such as civilization not becoming stagnant, or civilization becoming visible, is our own. The further we go from there, the less reason we have to expect those to occur.

I see absolutely no reason for that to be so. Generalizing from the sample of one is foolhardy.

Generalizing from a sample of one is where the idea that we’d see them comes from in the first place. edit: let’s just agree that the assumption that aliens would be visible has uncertainties, which are much larger for really, really alien, unimaginable aliens.

Taken literally, no. There will not be any civilization above hunter-gatherers without domesticated plants and animals, and that cannot be done in one generation. Remember that oxen and wheat are also human artefacts. Well, realistically (in the human variant) most of them will be dead very soon, and the survivors will become nomadic hunters.

Hunter gatherers had a lot of free time, though.

And as for the most of them getting dead very soon… I dunno, wildlife survival is not really that hard in general. We only have wildlife left in the regions where it’s very hard for humans to live, so if you drop people into the remaining regions of wilderness, they don’t fare very well. And we didn’t start on the wheat cultivation with the grand plan of going to the moon, we did that because the wheat as it was naturally gave huge and immediate benefits.

I don’t think you’d end up with a culture resembling any culture that existed in history. You have those smartest engineers and scientists, who already know how to make bows, steel, glass, firearms, electrical generators, and so on, and once settled in, they have a lot of free time (because there’s a ton of wildlife—buffalo herds, passenger pigeons, all that other easy to kill stuff that’s extinct—which will take many generations to deplete. They’re not in the modern day wilderness in the region where people can barely survive and almost all the food is extinct. They’re the new predator).

First, hunting with stone age weapons is far from easy. Second, most engineers and scientists are not hunters: none of them know how to hunt with a spear, and almost none with a bow. Third, they have no food supplies and so no time to learn. They will survive only in very favourable conditions, like on a tropical island with plenty of shellfish and tortoises (I think most people can hunt those).

I was thinking of my experience in Russia, where engineers, mathematicians, and physicists absolutely loved going on various nature trips (I didn’t really think of Sheldon and US TV shows). Of course, not everyone did, but we’re dropping a huge number of people, and those who know can teach those who don’t. A healthy person can go for 2 months without food.

Let’s say that they spawn in a 1 km by 1 km zone, on a grid with 10 m spacing, in a temperate climate in late spring, clothed in earliest stone age clothing (for the same reason that we don’t spawn dolphins in a desert, we don’t spawn people in the Arctic).
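A quick check that this layout matches the 10 000 people of the thought experiment, assuming (my reading, not stated in the comment) one person per 10 m by 10 m cell. The helper name is hypothetical.

```python
# Hypothetical spawn layout: a 1 km x 1 km square, one person per 10 m x 10 m cell.
SIDE_M = 1_000     # side length of the spawn zone, in metres
SPACING_M = 10     # grid spacing, in metres


def people_on_grid(side_m: int, spacing_m: int) -> int:
    """Count people if each one occupies one spacing-by-spacing cell."""
    per_side = side_m // spacing_m
    return per_side * per_side


print(people_on_grid(SIDE_M, SPACING_M))  # 10000
```

If people instead stood on grid points including both edges, it would be 101 × 101 = 10 201, which makes no practical difference.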

This strikes me as very human centric. Why should another species’ hypothetical ascension look so much like the one we happened to observe in humans?

If it looks too different, we won’t see them in space, though.

Our own intelligence is at the level where it’s just barely sufficient to build a civilization when you have hands, fire, and so on. Note that orcas have much larger brains than humans, and have had those larger brains for quite a long time, yet we’re where we are, and they’re where they are.

Likely because the first beings that could do that, did do that—no need to wait for the evolution of higher intelligence (so, in particular, this doesn’t show that higher intelligence couldn’t evolve).

Yeah, that’s precisely my point. If there’s more obstacles, intelligence has to go further before the technological civilization. We did get higher intelligence but only by other means (e.g. having paper and pencil helps, better nutrition gives higher IQ, and so on).

The Wikipedia article listing number of neurons in the cerebral cortex shows humans as significantly higher than whales, even though raw brain size may look better for whales. Wikipedia also describes an encephalization quotient which takes account of the fact that the brain is used for bodily functions, and on which whales don’t score as highly as they may seem to from brain size.

Yeah, that’s quite interesting. Raises the question though, why do they not have more neurons? They do have larger glia to neuron ratios, it’s not like everything’s simply bigger. Perhaps aquatic environment simply doesn’t reward intelligence that much.

Well, the bodily functions are the same but occurring at a lower rate, for a larger mammal. Most of whale’s body is fat, anyhow, which doesn’t need to be controlled by brain, and it’s not generally the case that people drop many IQ points when they become overweight. Nor are smaller people with same sized heads more intelligent.

edit: on the other hand, EQ may be a (very crude) measure of how well brain tissue pays off for an animal. If you have high quality brain tissue and you’re in a complex environment, at the equilibrium between costs and benefits you would haul around more brain mass per body mass. With the obvious caveat that this tradeoff is very different between land animals, flying animals, and aquatic animals.
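To make the EQ discussion concrete, here is a minimal sketch of one common form of the quotient, Jerison’s fit for mammals, in which the brain mass expected for a body mass P (in grams) is 0.12 · P^(2/3). This particular fit is my choice for illustration; other published versions use different constants and exponents, and the function names are hypothetical.

```python
def expected_brain_mass_g(body_mass_g: float) -> float:
    """Brain mass predicted for a typical mammal of this body mass (Jerison's fit)."""
    return 0.12 * body_mass_g ** (2 / 3)


def encephalization_quotient(brain_mass_g: float, body_mass_g: float) -> float:
    """Actual brain mass divided by the allometric expectation; 1.0 means 'as expected'."""
    return brain_mass_g / expected_brain_mass_g(body_mass_g)


# Same brain, two body masses (illustrative round numbers, not measurements).
lighter = encephalization_quotient(1350, 60_000)
heavier = encephalization_quotient(1350, 90_000)
print(f"EQ at 60 kg: {lighter:.2f}, at 90 kg: {heavier:.2f}")
```

Because the expected brain mass grows with body mass regardless of what that mass is made of, the same brain scores a lower EQ in a heavier body. That is exactly the behaviour being questioned here: a person who gains fat gets a lower EQ without losing any IQ points.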

Women are on average approximately as intelligent as men (though it depends on how you weigh visual against verbal intelligence, and anyway their variance is smaller), even though they have smaller heads.

But is it because of the smaller body sizes using less brain for the bodily functions? I don’t think so.

edit: I frankly don’t get the point of EQ. If we had to make a computer to control bodily functions (e.g. to grow ethical meat), we could make do with a weaker CPU for larger animals, because their bodies work more slowly. It just doesn’t make sense that body size would use up brain to control it, irrespective of the composition of that body.

You greatly underestimate the population size necessary for civilization.

10 people, of course, can’t rebuild the whole civilization, but 10 top scientists and engineers with relevant expertise, given access to the natural resources, can make iron, steel, copper, tooling, build an electric generator, and so on [assuming they don’t get eaten by wildlife early on]. Of course, when they die out, it’s gone with them—the heavily inbred future generations aren’t going to be able to continue that, and probably won’t even survive.

No, they can’t. For example, to make copper you need copper miners, smeltery workers, woodcutters, charcoal burners, wagon drivers to transport wood, ore and coal, carpenters to make wagons, builders to build the mine and smeltery, and farmers to feed them all. That is impossible for a population of less than a few thousand at least. The industry necessary to make a generator requires a population in the millions.

To make copper, you need copper ore and charcoal and a fire and bellows out of animal hide. Those things weren’t produced in a modern industrial manner until something known as “industrial revolution”. You had a little town, it had a blacksmith, and the blacksmith could smelt his own iron (and copper, if he has the ore, as copper smelting is pretty easy). You’d be surprised how much technology existed entirely locally within a small village.

Actually, to make copper tools all you need is copper nuggets (which aren’t all that rare) and a couple of rocks.

Humans made tools out of meteorite iron before they developed metallurgy.

Heh, yeah. But when you don’t have transportation and it’s just 10 people it may be difficult to find such things without a metal detector… I was just recalling one time I made a little bit of copper from low grade malachite, using a torch. It is really easily reduced from the ore. More easily than iron.
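For reference, the textbook chemistry behind reducing copper from malachite (standard chemistry, not something stated in the thread, and simplified: real ores carry impurities that end up in slag). Heating decomposes malachite to copper(II) oxide, and a reducing agent such as carbon monoxide from charcoal burning with limited air reduces the oxide to metal:

$$\mathrm{Cu_2CO_3(OH)_2} \;\xrightarrow{\text{heat}}\; \mathrm{2\,CuO + CO_2 + H_2O}$$

$$\mathrm{2\,C + O_2 \rightarrow 2\,CO}$$

$$\mathrm{CuO + CO \rightarrow Cu + CO_2}$$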

They don’t need to write it down, they just need to store it. A certain amount of knowledge can be “stored” as oral tradition; alternatively, a lot more knowledge can be stored as (say) a pattern of rocks, carefully gathered and moved into position, in an area that can be easily visited.

Some knowledge will almost certainly be lost in the first few generations, despite that.

Oral traditions decay quickly, though. And rock placement is an example of far higher effort per letter. The point is, they find it much harder to retain knowledge. If the rate of loss is greater than the rate of creation, there’s no progress at all.
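One way to make “rate of loss versus rate of creation” concrete is a toy model, assuming (my assumption, not data) that each generation loses a fixed fraction of whatever knowledge is stored and adds a fixed amount of new knowledge. Under that assumption the stock does not grow without bound; it settles at created / loss_rate, however large the starting stock. The function name and numbers are hypothetical.

```python
def knowledge_over_generations(k0: float, created: float, loss_rate: float,
                               generations: int) -> list[float]:
    """Toy model: k_next = (1 - loss_rate) * k + created, iterated per generation."""
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    history = [k0]
    for _ in range(generations):
        history.append((1 - loss_rate) * history[-1] + created)
    return history


# A large head start (arbitrary units), little new knowledge, heavy loss per generation.
history = knowledge_over_generations(k0=1000.0, created=5.0, loss_rate=0.5, generations=60)
print(round(history[1], 1), round(history[-1], 3))  # 505.0 10.0
```

In this model the head start decays toward the steady state (here 5 / 0.5 = 10), so sustained progress needs either a lower loss rate (better storage) or a higher creation rate.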

And even once you’re storing the knowledge, you’re still entirely out of luck on tools. Even if they had a waterproofed encyclopaedia handed to them, they would still face the cycle of making tools to make tools to make tools, which they can’t even bootstrap.

The point is, putting that side to side with humans in some land animal bodies, you have on one hand a bunch of dolphin people who lost pretty much everything in a few generations, save for a few myths that are of no practical use, and on the other hand you have those land people with electricity, radio, and everything, a good chunk of 20th century tech.

Why on earth would they lose everything?

Yes, there would be some significant losses in the first few generations. But if you teleport the top 10 000 scientists and engineers onto an alien world with no human artifacts and leave them in human bodies, there will also be some significant losses in the first few generations. Once data storage technology can keep up with the volume of knowledge retained, the losses will stop, and knowledge will slowly start to be regained.

If they’re sensible, they’ll realise that they can’t retain everything, and put some effort into retaining that which is important.

Why not? Hydraulic cement will harden underwater, and can be carefully pressed and moulded into shape. And while I have no idea how dolphins can get to that point, apparently even underwater welding is possible.

’cause nothing they know is particularly useful for future generations. You just get distorted myths, and it doesn’t take much distortion until technical knowledge becomes entirely non trustworthy and thus worthless.

But can you make hydraulic cement underwater? I was under the impression that you needed fire to make it.

Knowing how to make hydraulic cement isn’t useful?

I am quite certain that the 10 000 top engineers and scientists know quite a few things that would be very useful for future generations. Since I am not in that number, and since I am only one person, I do not know what those things would be, but I estimate a high probability that they exist.

I’m not sure. The Wikipedia article mentions that the ancient Romans used a mixture of volcanic ash and crushed lime, and you certainly do get underwater volcanoes, so the ash should be available… there are probably industrial processes now, but just mixing volcanic ash with the right sort of mud and getting something that hardens if you leave it for a day or two sounds usable underwater to me.

No, the ash would react with water immediately and thus be useless, and you need burned lime (CaO or Ca(OH)2), not limestone (CaCO3).
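The lime chemistry behind this correction, as standardly given (textbook chemistry, not from the thread). The first step, calcination, needs strong heating in a kiln, typically quoted at around 900 °C, which is why firing cannot be skipped:

$$\mathrm{CaCO_3} \;\xrightarrow{\text{heat}}\; \mathrm{CaO + CO_2}$$

$$\mathrm{CaO + H_2O \rightarrow Ca(OH)_2}$$

The hydraulic set of Roman-style mortar then comes from the slaked lime reacting with reactive silica in volcanic ash, but only after the quicklime has already been made.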

Ah, thank you. I wasn’t sure about that.

But it’s not enough to convey the formulas, you need also to convey the context. And you need to do so reliably, without later generations adding in nonsense of their own. When the knowledge is in use, it’s naturally checked against reality and protected against decay.

re: cement, my understanding is that you still need to fire components to make hydraulic cement, and underwater ash won’t work.

Which means that they will best remember things that they can immediately put to use, yes. Such as how to breed fish for desired characteristics, maybe? Or how to create some basic tools.

But what those tools may be, besides the tools real dolphins already invented? And breeding fish requires some sort of enclosure, ability to manipulate individual fish, etc. It’s not even clear there’s anything to gain from breeding fish if you don’t do some underwater agriculture for fish food (and thus need fish very different from available species to make full use of that).

Perhaps the reason dolphins’ large brains are not particularly optimized (compared to ours) in terms of neural density, despite ample time at their brain volume, is that they already do pretty much everything that a greater intellect would do.

On the ground and with the hands, when our intelligence was the same as of dolphins, we had a lot of complex and useful things we could have been doing if we were a little smarter, and that’s how we evolved our intelligence (and conversely, how they didn’t evolve much further).

Not necessarily. For reasons that are completely intuitive, I imagine our top 10 000 scientists et al. would be able to fashion tools that could be used very effectively.

You make the assumption (albeit loosely) that progress happens along a straight pre-determined path, where things happen in a specific sequence, much like they did in human history. This is far from so. We are talking about a different planet out here. I’m not suggesting that different laws of physics will be applicable.

But the odds are that the oceanography will be vastly different, with all sorts of different materials available. There’s no guarantee that on this planet (from here on called “Dolphin Alpha”) steel would even exist, or that the necessary elements are present. On the other hand, you could possibly have all manner of other materials. Perhaps an underwater civilization would have a very different set of social rules.

HEAVY USE OF HYPERBOLE FOLLOWS

Given that these are the 10 000 most brilliant scientists on the planet, I’d expect a sizeable number of them to know Morse code, which could then be replicated in dolphin sounds (I’m no expert, but if I’ve got the concept right you’d need only two distinguishable sounds). A good number more would be experts in game theory. The Homo sapiens dolphinus (again, no expert, but this sounds authentic enough to me! How about we call them HSD for short?) who happen to know Morse code and be well versed in the more salient features of game theory would probably rise to political power within the ranks of the HSD. Since these aren’t politicians who are in it for material gain (that part comes much later in the advent of a civilisation) and are truly interested in the welfare of the civilisation, they would probably find ways to domesticate (or, if you prefer the harsher equivalent, “enslave”) all sorts of smaller creatures that could work for them!!

For the sake of simplicity, if we assume that marine life on Dolphin Alpha evolved roughly in parallel with that of Earth, the Dolphin Alpha crabs (resembling the ones that live underwater, not the other type) and Dolphin Alpha lobsters could work like minions. The HSD could work out which fish are poisonous and use that knowledge to hunt larger and meatier underwater species. (I was thinking sharks, but that takes experimentation, and I somehow see the world’s leading scientists thinking long and hard and then deciding that there are safer options at hand, except for the few who were also born adrenaline junkies and are now flapping their fins in excitement.)

Before I get further carried away, I would just like to say that the body shape is something that can be easily overcome.

Whether intelligence would arise to a greater degree really is a matter up for debate, but I would be inclined to think the answer is no, until the HSD discover the equivalent of computing.

I have your ‘domesticate other animals’ listed in a footnote. It would take a long time, and getting the domesticated animals to the point where they’re a replacement for your hands… that’s on par with a breeding program to regrow your own hands.

The time during which you can make your environment easy enough that your intelligence de-evolves.

What makes you confident that there’s not a high probability of there being an AI somewhere outside our light cone, but that shortly after an AI (or any other highly expansionist extraterrestrial) enters our light cone there are no longer conscious observers, so that the vast majority of human consciousness-seconds are spent observing that there are no visitors? Even if it’s vanishingly unlikely that no destructive intelligence explosion occurred in a randomly selected past light cone, we would necessarily only be able to observe states of the universe where there was no intelligence explosion, a friendly one, or one which was largely passive (e.g. an AI with the goal to prevent other intelligence explosions within its sphere of influence, but otherwise minimize interference).

Then the universe we observe should be much younger, because most evolutionarily arising life will exist on worlds that don’t have a nearby AI neighbor. Our telescopes can see galaxies older than ours, and we expect those to contain much older planets with all the heavy elements. On your hypothesis, most observers should not utter that sentence in conversations like this one.

This person claims that all AIs will rationally kill themselves and that the great filter would be after AI. http://www.science20.com/alpha_meme/deadly_proof_published_your_mind_stable_enough_read_it-126876 (I haven’t got the paper, but even if this is correct, it still would not fully explain the filter to me, because a civilization could make a simple interstellar replicator, e.g. a light-sail-propelled asteroid-mining robot, and let it loose before going AI, and we see no evidence of these.)

Also, what about the planetarium/galactic zoo/enforced noninterference possibility? Say that 99% of the time AI will take over the light cone destructively, but 1% of the time the AI will want to watch and catalog intelligence arising, then darkly wipe it out when it gets annoying and tries to colonize other stars and hence mess up other experiments. Or, more nicely, it could welcome us to the galaxy and stop us from wiping out other civilizations, etc.

For us it would mean that we got lucky with a 1% chance, say 1 billion years ago, when the first intelligent civilization arose, spread through the galaxy/light cone and made the watching/enforcing AI (or made the watching AI, then fought itself, etc.). There could have been ~1 million space-faring civilizations in the galaxy since, and we are nothing special at all, on an average star in the middle age of the universe. In that case the filter is sort of ahead of us, because we cannot expand and colonize: the much more advanced AI would stop us.

Either way, if we make a simple replicator and have it successfully reach another solar system (with possibly habitable planets), then that would seem to demonstrate that the filter is behind us. We would then have done something that we can be sure no one else in the galaxy has done before, since, as I have said, we see no evidence of such replicators. I am talking about one that could not land on planets, just rearrange asteroids and similar objects with very low gravity.

Excellent! So, wouldn’t that mean that the best way to eliminate x-risk would be to do exactly that?

It is counterintuitive, because “eliminating x-risk” implies some activity, some fixing of something. But we eliminated the risk of devastating asteroid impact not by nuking any dangerous ones, but by mapping all of them and concluding the risk didn’t exist. As it happens, that was also much cheaper than any asteroid deflection could have been.

If sending out an interstellar replicator was proof we’re further ahead (i.e. less vulnerable) than anything that could have evolved inside this galaxy since the dawn of time, it seems mightily important to become more certain we can do that (without AI). If some variant of our interstellar replicator was capable of intergalactic travel, that’d raise our expectation of comparative invulnerability, because we’d know we’ve gone past obstacles that nothing inside some fraction of our light cone, even outside our galaxy, has been able to master.

Ideally we’d actually demonstrate that of course, but for the purpose of eliminating (perceived) x-risk, a highly evolved and believable model of how it could be done should go much of the way.

Of course we might find out that self-replicating spacecraft are a lot harder than they look, but that too would be information that is valuable for the long-term survival of our species.

Armstrong and Sandberg claim the feasibility of self-replicating spacecraft has been a settled matter since the Freitas design in 1980. But that paper, while impressively detailed and a great read, glosses over the exact computing abilities such a system would need, does not mention hardening against interstellar radiation, and probably has a bunch of other problems that I’m not qualified to discover. I haven’t looked at all the papers that cite it (yet), but the ones I’ve seen seem to agree self-replicating spacecraft are plausible.

I posit that greater certainty on that point would be of outsized value to our species. So why aren’t we researching it? Am I overlooking something?

Actually, bacteria, seeds and acorns are our strongest arguments for self-replication, along with the fact that humans can generally copy or co-opt natural processes for our own uses.

Thanks for the comment. Yes I agree that if we had made such a replicator and set it loose then that would say a lot about the filter. To claim that the filter was still ahead of us in that case you would need to make the more bizarre claim that we would with almost 100% probability seek and destroy the replicators and almost all similar civilizations would do the same, then proceed not to expand again.

I am not sure that a highly believable model would go most of the way, because there may be only a short window between having a model and AI issues changing things so that it isn’t built. It seems pretty believable for the case of mankind that there would be a very short time between building such a thing and going full AI, so to be sure you would actually have to build it and let it loose.

I am not sure why it isn’t given much more attention. Perhaps many people don’t believe that AI can be part of the filter, e.g. the site overcomingbias.com. Also, I expect there would be massive moral opposition to letting such a replicator loose from some people! How dare we disturb the whole galaxy in such an unintelligent way? That’s why I mention the simple one that just rearranges small asteroids. It would not wipe out life as we know it, but would prove that we were past the filter, as such a thing has not been done in our galaxy. I sure would be interested in seeing it researched. Perhaps someone with more kudos can promote it?

Likely a replicator would be a consequence of asteroid mining anyway as the best, cheapest way to get materials from asteroids is if it is all automatic.

Why would that be so, especially in the immediate future?

Imagine if we had made a replicator, demonstrated that it could make copies of itself, established with as high confidence as we could that it could survive the trip to another star, and had let >100,000 of the things off heading to all sorts of stars in the neighborhood. They would eventually (very soon compared to a billion years) visit every star in the galaxy and that would tell us a lot about the Fermi paradox and great filter.
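To put a rough number on “very soon compared to a billion years”, here is a back-of-envelope sketch. Every parameter is a hypothetical assumption, not a figure from this thread: a galaxy about 100 000 light years across, probes cruising at 1% of light speed, 10 light-year hops between stars, and a 500-year stop at each star to build copies. The model simply adds travel time across the full diameter to the total time spent replicating along the way.

```python
def galaxy_crossing_years(diameter_ly, speed_c, hop_ly, pause_years):
    """Rough time for a wave of self-replicating probes to cross the galaxy.

    Hypothetical model: the wave advances hop_ly light years per hop at
    speed_c (a fraction of light speed), then pauses pause_years at each
    star to build copies before the next hop.
    """
    hops = diameter_ly / hop_ly
    travel_years = diameter_ly / speed_c  # light years / fraction of c = years
    replication_years = hops * pause_years
    return travel_years + replication_years


# Hypothetical parameters, chosen only for illustration.
DIAMETER_LY = 100_000  # commonly quoted Milky Way diameter
SPEED_C = 0.01         # probes cruise at 1% of light speed
HOP_LY = 10            # rough distance between neighbouring stars
PAUSE_YEARS = 500      # assumed time to build copies at each stop

years = galaxy_crossing_years(DIAMETER_LY, SPEED_C, HOP_LY, PAUSE_YEARS)
print(f"{years:,.0f} years, {years / 1e9:.1%} of a billion years")
```

Under these assumptions the total is about 15 million years, roughly 1.5% of a billion. Even if the probes were ten times slower, or paused ten times longer, the crossing would still take well under a billion years.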

As I said before (discounting the planetarium hypothesis), we could then have a high degree of confidence that the great filter was behind us. It couldn’t really be the case that thousands of civilizations in our galaxy had done such a thing, then changed their minds and destroyed all the replicators, because some civilizations would probably destroy themselves between letting the replicators loose and changing their minds, or would not change their minds or not care about the replicators. We would therefore see evidence of their replicators in our solar system, which we don’t.
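The “thousands of civilizations” argument can be made concrete with one line of probability. Assume, purely hypothetically, that each civilization that launches replicators later recalls and destroys every one of them with probability `recall_prob`, independently of the others. Then the chance that all of them do so is `recall_prob ** n_civilizations`, which collapses quickly as the number of civilizations grows.

```python
def prob_all_recall(recall_prob, n_civilizations):
    """Chance that every one of n civilizations recalls all its replicators.

    Hypothetical model: each civilization decides independently, and each
    recalls its replicators with the same probability recall_prob.
    """
    return recall_prob ** n_civilizations


# Even a 99% recall rate per civilization gives a tiny joint probability
# once there are thousands of civilizations.
for n in (10, 100, 1000):
    print(f"{n:>5} civilizations: {prob_all_recall(0.99, n):.2e}")
```

With a 99% recall rate, ten civilizations would all recall with probability about 0.9, but a thousand would do so with probability around 4 in 100 000. The independence assumption is doing real work here: if every civilization recalled its replicators for the same reason, the chances would not multiply like this.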

The other way we can be sure the filter is behind us is to successfully navigate the Singularity (keeping roughly the same values). That seems obviously MUCH harder to have confidence in.

If our goal is to make sure the filter is behind us then it is best to do it with a plan we can understand and quantify. Holding off human level AI until the replicators have been let loose seems to be the highest probability way to do that, but no-one has said such a thing before now as far as I am aware.

I still see no answer to my question. Where is the outsized value to our species?

Not to mention that “very soon compared to a billion years” isn’t a particularly interesting time frame.

Another fairly plausible great filter is in the interaction between life and geochemistry- this is a variant on ‘central nervous systems are unlikely’.

There is some reason to think that microbial metabolisms are somewhat destabilizing in the history of the planet, at least on very large scales. There is of course the classic example of photosynthesis producing atmospheric oxygen. Can you imagine what would happen if bacteria injected large amounts of free oxygen into Titan’s atmosphere? Given the space of all geochemistry for terrestrial planets in the habitable zone, and the plausible metabolisms that could emerge from an RNA world on each of these planets, it may be that only a fraction of a fraction take place within the domain of a chemical ‘stable attractor’ that remains habitable.

This would allow for abiogenesis to be fairly common in the universe, without necessarily hand-waving nervous systems as ‘very hard’ for unspecified reasons. To get there, life is necessarily in a situation where microbes, maximizing their individual energy consumption according to local rules, reproduce exponentially with limited external coordination. The challenge in building a brain may be less about the technical complexity of that organ and more about the capacity of such a system to avoid self-sterilization in the required time scales.

I suspect you are correct that the great filter does not lie between urbilaterian and dolphin intelligence, but I did think of one possible hole in the argument (one that I don’t think is likely to end up mattering). It is possible that, instead of it being easy in general for something like an urbilaterian to evolve significant intelligence, it might only be easy in places like Earth. That is, while there exist environmental conditions under which you would expect an urbilaterian-level organism to easily evolve to dolphin level in several independent instances, such conditions are very rare, and on most planets where urbilaterian-level organisms evolve, they don’t advance much further.

So far we haven’t seen any evidence the Earth is particularly rare, I think.

A few astronomical studies have come out this year giving evidence that Earth is actually more common than we thought, in terms of geology among planets within this galaxy.

Can you elaborate/point us in their direction?