I think that on most websites only about 1-10% of users actually post anything. I suspect that the number of people having those weird interactions with LLMs (and stopping before posting about them) is something like 10 to 10,000 times (most likely around 100 times) bigger than what we see here.
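As a rough sanity check on that estimate: if only a fraction of the affected people post, the total is the visible count divided by that fraction. This is a minimal sketch under that one assumption; the function name `total_to_visible_multiplier` is made up for illustration.

```python
def total_to_visible_multiplier(posting_fraction: float) -> float:
    """How many times bigger the affected group is than the part that posts.

    Assumes (simplification) that everyone who posts is visible and that
    posting_fraction is the share of affected people who post at all.
    """
    if not 0 < posting_fraction <= 1:
        raise ValueError("posting_fraction must be in (0, 1]")
    return 1 / posting_fraction


# Posting rates mentioned in the comment (1-10%), plus the rate that the
# upper bound of 10,000 would imply (0.01%).
for fraction in (0.10, 0.01, 0.0001):
    print(f"{fraction:.2%} post -> about {total_to_visible_multiplier(fraction):,.0f}x")
```

Under this simple model a 10% posting rate gives a factor of about 10 and 1% gives about 100. A factor of 10,000 would need only 0.01% of people to post, so the upper end of the range assumes that almost everyone with these interactions stops before posting.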

Kajus

why?

The goals we set for AIs in training are proxy goals. We humans also set proxy goals. We use KPIs, we talk about solving alignment and ending malaria (proxies for increasing utility and saving lives), budgets, and so on. We can somehow focus on proxy goals and maintain that we have some higher-level goal at the same time. How is this possible? How can we teach AI to do that?

So I got what I wanted. I tried the Zed code editor and well… it’s free and very agentic. I haven’t tried Cursor but I think it might be on the same level.

I don’t think that anymore. I think it’s possible to get labs to use your work (e.g. you devised a new eval or new mech interp technique which solves some important problems) but it has to be good enough and you need to find a way to communicate it. I changed my mind after EAG London

I didn’t intend to structure it in any way. I was actually just hoping to see how my life changes when I purposefully inhibit other goals, like exercising, and just focus on this one. So far, I’m not getting much, since I already know a lot about my phone habits. I like my phone because I can chat with other people and read stuff when I’m bored and ignore whatever is going on inside my body. I do have an internal monologue all the time.

The core point I had was about the inhibition of other goals – it’s not a time to worry about sport or a healthy diet; it’s time to think about your phone and how it changes your life. I still live my normal life, though.

“Think” as in just letting it stay in the background and interpreting things with it in mind.

I also bought this device:

I think it reduces the time I spend on my phone a lot. Much more than reflection alone.

I don’t think I could have quit checking it more than 20 times a day without this device. It’s great! This one is expensive, but you can probably make one on your own using a box and a lock with a timer.

I watch YouTube videos more. The space left by my phone is getting filled with YouTube videos, which I think is fine, since they are more… they require sitting in front of a PC, as my phone is locked. I take slightly more walks.

Next week, I want to reflect on this quote:

In any game, a player’s best possible chance of winning lies in consistently making good moves without blundering.

I usually don’t discuss things with LLMs; I haven’t found it useful. Though, I need to try it with the new Claude.

I sometimes fall into the same trap although never in the work context (probably because my P(job is bullshit) = 100% and P(people are honest, goals are clear, etc. | job is bullshit) is very small).

I do this in EA and LW stuff. I sometimes assume that EA people are smart and not overconfident and that I can trust them. I wish more people would sometimes just straightforwardly tell you dumb stuff, get you to believe it, and then make you doubt them, just to train you.

advertisement is literally like flowers

There is a practice in Christianity where you set a theme for a week and reflect on how this theme fits into your whole life (e.g. suffering, grace, etc.). I want to do something similar but make it much more personal. I have struggled with phone addiction for some time and it seems that bursts of work can’t solve that issue. So this week will be the week of reflection on my phone addiction.

I had some thoughts about CoT monitoring. I was imagining this simple scenario: you are running a model, and for each query it produces a CoT and an answer. To check the CoT, we run it through another model with a prompt like “check this CoT <cot>” and have it tell us whether the CoT seems malicious.

Why don’t we check the response only? Sometimes it’s not clear from the response alone that it is harmless (I imagine a doctor can prescribe you a lot of things and you don’t have a way of checking whether they are actually good for you).

So CoT monitoring is based on the idea that CoT gives you some extra information.

One of the scenarios that I fear the most is AI creating bioweapons. I’m not worried about governments using AI to create bioweapons, because I don’t think I can do anything about that, but I’m worried about AI helping random people create bioweapons. In this case, I assume CoT monitoring means monitoring both the CoT and the model response. So basically you deploy a model and run every CoT and response through the monitor. Seems great, but can the monitor get jailbroken as well? If we let people experiment so much that they actually find jailbreaks for both the monitor and the model, then our solution fails.

In this scenario I actually think that CoT monitoring isn’t that great. It’s definitely an improvement over not monitoring anything but it seems that it’s eventually possible to break it.
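The deployment setup described above, where every CoT and response goes through a monitor before the answer is released, can be sketched roughly like this. It is a toy illustration, not a real system: `ModelOutput`, `build_monitor_prompt`, `toy_monitor` and `guarded_answer` are hypothetical names, and the keyword check only stands in for the second model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelOutput:
    cot: str     # chain of thought produced for the query
    answer: str  # final response that would be shown to the user


def build_monitor_prompt(output: ModelOutput) -> str:
    """Wrap the CoT and the response in a prompt for the monitor model."""
    return (
        "Check this CoT and response and tell us whether they seem malicious.\n"
        f"<cot>{output.cot}</cot>\n"
        f"<response>{output.answer}</response>"
    )


def toy_monitor(prompt: str) -> bool:
    """Hypothetical stand-in for the monitor model: flags on keywords only."""
    text = prompt.lower()
    return any(word in text for word in ("pathogen", "synthesis route"))


def guarded_answer(output: ModelOutput,
                   monitor: Callable[[str], bool]) -> Optional[str]:
    """Release the answer only if the monitor does not flag the output."""
    if monitor(build_monitor_prompt(output)):
        return None  # blocked by the monitor
    return output.answer


safe = ModelOutput(cot="The user wants a pasta recipe.", answer="Boil water.")
risky = ModelOutput(cot="I will describe the synthesis route.", answer="Step 1")
print(guarded_answer(safe, toy_monitor))   # released
print(guarded_answer(risky, toy_monitor))  # blocked
```

The weakness discussed above shows up directly: whatever sits behind `monitor` sees text that the user and the model can influence, so an input that fools it gets the answer released just like a harmless one.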

Another scenario is an AI that is misaligned and schemes in the CoT. Here monitoring seems great, as long as the AI leaks its goals.

Also, costs per token rise.

This one is extremely good. I already made one with simple black/red gradients but I like this one much more. I can mix mine and yours together to create a grammatically correct one

I think that Nate Soares and Yudkowsky aren’t really well-known names, so the cover should do some name-dropping (the current one doesn’t).

I also took my stab at this idea. Here is my cover. The left empty part is for the back.

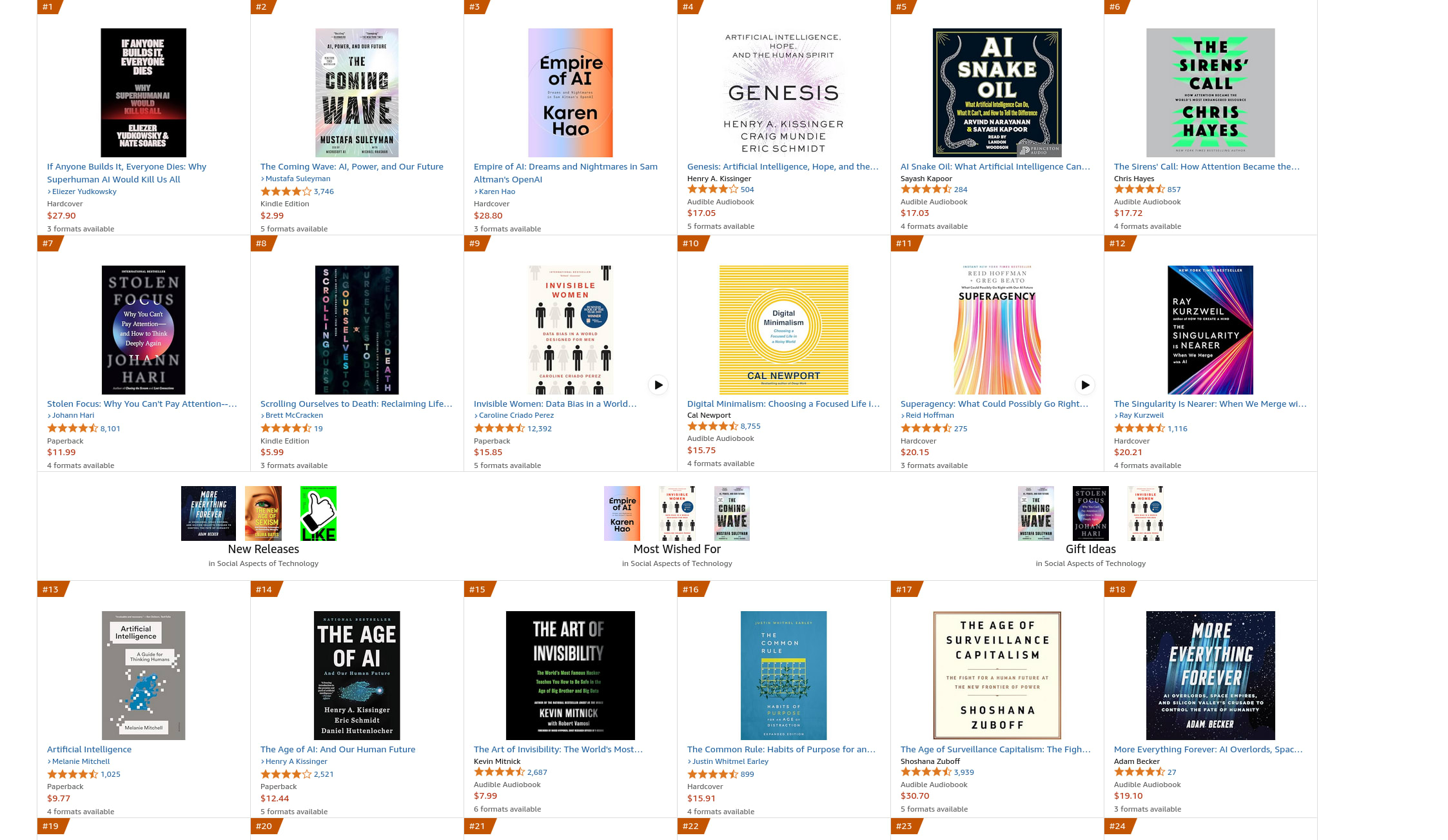

If you go to Amazon, most of the books in that section look similar.

This is super interesting. Let’s focus on your case. Why aren’t you winning? How can I explain this data? You don’t think you are winning. What are your criteria for winning?

Regarding the hypothesis that rationality improves system 2 and not system 1: how does system 1 improve? I think mostly through training with some simple and accurate feedback loops. I think one can establish them with system 2. An example would be setting a goal to exercise. You try various exercises until you find some that you like. Why didn’t you find anything like that for relationships?

Also possible. Well, honestly, I don’t have much data and I can’t point to a concrete scenario, but I mean more or less: Anthropic, OpenAI and Mechanize (people from Epoch). They more or less started as safety-focused labs or were concerned about safety at some point (I also can’t point to anything concrete here), and then turned to work on capabilities at some point.

I don’t think many people on LW believe that the last 1% will take the longest time; I believe many would say that the takeoff is exponential.

I give those as vague examples—they might work through the pathways mentioned in the post:

Reading books: I mean reading things like Dostoevsky or Tolstoy, anything that dives deep into introspection and shows various ways to live a life

Interacting with art

Recording your states of mind (note taking) and reading those notes, noticing patterns

It seems like both you and I are able to decipher what I meant easily, so why did someone fail to do that?

do you want to stop worrying?