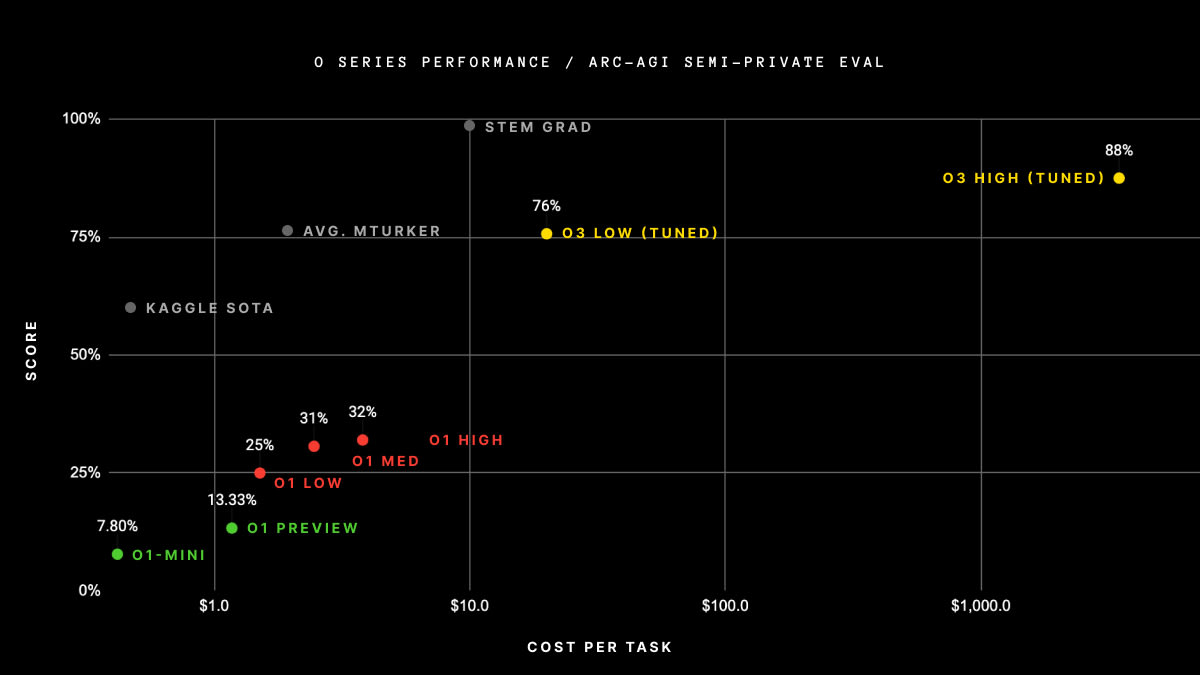

The reason my first thought was that they used more inference is that ARC Prize says that’s how they got their ARC-AGI score (https://arcprize.org/blog/oai-o3-pub-breakthrough). My read on this graph is that they spent $300k+ on getting their score (there are 100 questions in the semi-private eval). That was o3 high, not o3-mini high, but the result is still pretty strong proof of concept that they’re willing to spend a lot on inference for good scores.

I haven’t watched the entire interview, but in the article you linked, all of the quotes from Bukele here seem to be referring to whether he has the power to unilaterally cause Garcia to end up in the United States, not whether he has the power to cause him to be released from prison.