Automated / strongly-augmented safety research.

Bogdan Ionut Cirstea

The first automatically produced, (human) peer-reviewed, (ICLR) workshop-accepted[/able] AI research paper: https://sakana.ai/ai-scientist-first-publication/

There have been numerous scandals within the EA community about how working for top AGI labs might be harmful. So, when are we going to have this conversation: contributing in any way to the current US admin getting (especially exclusive) access to AGI might be (very) harmful?

[cross-posted from X]

I find the pessimistic interpretation of the results a bit odd given considerations like those in https://www.lesswrong.com/posts/i2nmBfCXnadeGmhzW/catching-ais-red-handed.

I also think it’s important to notice how much less scary / how much more probably-easy-to-mitigate (at least strictly when it comes to technical alignment) this story seems than the scenarios from 10 years ago or so, e.g. from Superintelligence / from before LLMs, when pure RL seemed like the dominant paradigm to get to AGI.

I agree it’s bad news w.r.t. getting maximal evidence about steganography and the like happening ‘by default’. I think it’s good news w.r.t. lab incentives, even for labs which don’t speak too much about safety.

I pretty much agree with 1 and 2. I’m much more optimistic about 3-5 even ‘by default’ (e.g. R1′s training being ‘regularized’ towards more interpretable CoT, despite DeepSeek not being too vocal about safety), but especially if labs deliberately try for maintaining the nice properties from 1-2 and of interpretable CoT.

If “smarter than almost all humans at almost all things” models appear in 2026-2027, China and several others will be able to ~immediately steal the first such models, by default.

Interpreted very charitably: but even in that case, they probably wouldn’t have enough inference compute to compete.

Quick take: this is probably interpreting them over-charitably, but I feel like the plausibility of arguments like the one in this post makes e/acc and e/acc-adjacent arguments sound a lot less crazy.

To the best of my awareness, there isn’t any demonstrated proper differential compute efficiency from latent reasoning to speak of yet. It could happen, it could also not happen. Even if it does happen, one could still decide to pay the associated safety tax of keeping the CoT.

More generally, the vibe of the comment above seems too defeatist to me; related: https://www.lesswrong.com/posts/HQyWGE2BummDCc2Cx/the-case-for-cot-unfaithfulness-is-overstated.

They also require relatively little compute (often around $1 for a training run), so AI agents could afford to test many ideas.

Ok, this seems surprisingly cheap. Can you say more about what such a 1$ training run typically looks like (what the hyperparameters are)? I’d also be very interested in any analysis about how SAE (computational) training costs scale vs. base LLM pretraining costs.

I wouldn’t be surprised if SAE improvements were a good early target for automated AI research, especially if the feedback loop is just “Come up with idea, modify existing loss function, train, evaluate, get a quantitative result”.

This sounds spiritually quite similar to what’s already been done in Discovering Preference Optimization Algorithms with and for Large Language Models and I’d expect something roughly like that to probably produce something interestin, especially if a training run only cost $1.

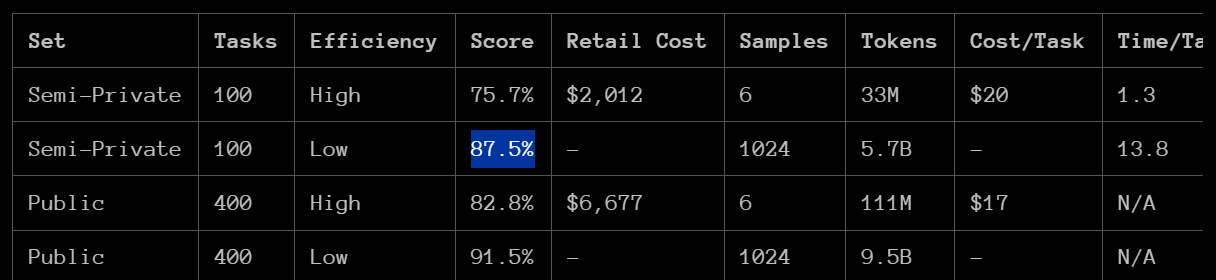

Some additional evidence: o3 used 5.7B tokens per task to achieve its ARC score of 87.5%; it also scored 75.7% on low compute mode using 33M tokens per task:

It might also be feasible to use multimodal CoT, like in Imagine while Reasoning in Space: Multimodal Visualization-of-Thought, and consistency checks between the CoTs in different modalities. Below are some quotes from a related chat with Claude about this idea.

multimodal CoT could enhance faithfulness through several mechanisms: cross-modal consistency checking, visual grounding of abstract concepts, increased bandwidth costs for deception, and enhanced interpretability. The key insight is that coordinating deception across multiple modalities would be substantially more difficult than in text alone.

Multimodal CoT for Enhanced Faithfulness: A Proposal

Core Idea

Multimodal Chain-of-Thought (like MVoT) could enhance CoT faithfulness by making it harder for models to hide information or engage in steganography. The key insight is that forcing models to express their reasoning through both text and generated images creates additional constraints that make unfaithful reasoning more difficult.

Mechanisms for Enhanced Faithfulness

1. Cross-Modal Consistency Checking

When a model must express its reasoning in both text and images, we can verify that both modalities tell the same story:

Any hidden information in the text would need to be consistently reflected in the generated images

The required coordination across modalities makes steganography more challenging

Inconsistencies between modalities could signal unfaithful reasoning

2. Visual Grounding of Abstract Concepts

Images force concrete visualization of abstract concepts:

Makes it harder to maintain multiple contradictory lines of reasoning

Abstract deceptions become more apparent when they must be visually represented

Visual medium reduces ambiguity that could be exploited for steganography

3. Increased Bandwidth Cost for Deception

Hiding information across both text and images is more computationally expensive:

Model must maintain coherence across a higher-dimensional space

Steganographic signals need more sophisticated encoding to work across modalities

Higher cognitive load makes deception more challenging

4. Enhanced Interpretability

Multimodal outputs provide more angles for oversight:

Humans can cross-reference the text reasoning against visual representations

Visual artifacts of deceptive reasoning may be easier to spot

Multiple channels for detecting inconsistencies

Implementation Approach

Required Components

A multimodal model capable of generating both text and images

Consistency checking mechanisms across modalities

Visual grounding mechanisms for abstract concepts

Multi-channel oversight tools

Training Strategy

Start with models pre-trained on aligned multimodal data

Fine-tune with explicit rewards for cross-modal consistency

Penalize detected inconsistencies between modalities

Validate using human oversight across both channels

Potential Challenges

Technical Challenges

Ensuring high-quality image generation that accurately reflects reasoning

Developing robust cross-modal consistency metrics

Managing computational overhead of dual-modal generation

Validation Challenges

Defining ground truth for faithful multimodal reasoning

Creating test cases that can detect cross-modal deception

Measuring effectiveness compared to text-only approaches

Research Directions

Immediate Next Steps

Develop metrics for cross-modal consistency

Create test suites for detecting multimodal steganography

Build tools for human validation of multimodal reasoning

Study failure modes specific to multimodal deception

Longer-term Research

Explore optimal balances between modalities

Investigate transfer learning between modalities

Study emergence of cross-modal deception strategies

Develop automated detection of multimodal unfaithfulness

Conclusion

Multimodal CoT presents a promising approach for enhancing faithfulness by leveraging the constraints and verification opportunities provided by multiple modalities. While technical challenges remain, the potential benefits warrant further investigation and development.

At the very least, evals for automated ML R&D should be a very decent proxy for when it might be feasible to automate very large chunks of prosaic AI safety R&D.

We find that these evaluation results are nuanced and there is no one ideal SAE configuration—instead, the best SAE varies depending on the specifics of the downstream task. Because of this, we cannot combine the results into a single number without obscuring tradeoffs. Instead, we provide a range of quantitative metrics so that researchers can measure the nuanced effects of experimental changes.

It might be interesting (perhaps in the not-very-near future) to study if automated scientists (maybe roughly in the shape of existing ones, like https://sakana.ai/ai-scientist/) using the evals as proxy metrics, might be able to come up with better (e.g. Pareto improvements) SAE architectures, hyperparams, etc., and whether adding more metrics might help; as an analogy, this seems to be the case for using more LLM-generated unit tests for LLM code generation, see Dynamic Scaling of Unit Tests for Code Reward Modeling.

I expect that, fortunately, the AI safety community will be able to mostly learn from what people automating AI capabilities research and research in other domains (more broadly) will be doing.

It would be nice to have some hands-on experience with automated safety research, too, though, and especially to already start putting in place the infrastructure necessary to deploy automated safety research at scale. Unfortunately, AFAICT, right now this seems mostly bottlenecked on something like scaling up grantmaking and funding capacity, and there doesn’t seem to be enough willingness to address these bottlenecks very quickly (e.g. in the next 12 months) by e.g. hiring and / or decentralizing grantmaking much more aggressively.

From https://x.com/__nmca__/status/1870170101091008860:

o1 was the first large reasoning model — as we outlined in the original “Learning to Reason” blog, it’s “just” an LLM trained with RL. o3 is powered by further scaling up RL beyond o1

@ryan_greenblatt Shouldn’t this be interpreted as a very big update vs. the neuralese-in-o3 hypothesis?

Do you have thoughts on the apparent recent slowdown/disappointing results in scaling up pretraining? These might suggest very diminishing returns in scaling up pretraining significantly before 6e27 FLOP.

I’ve had similar thoughts previously: https://www.lesswrong.com/posts/wr2SxQuRvcXeDBbNZ/bogdan-ionut-cirstea-s-shortform?commentId=rSDHH4emZsATe6ckF.

Gemini 2.0 Flash Thinking is claimed to ‘transparently show its thought process’ (in contrast to o1, which only shows a summary): https://x.com/denny_zhou/status/1869815229078745152. This might be at least a bit helpful in terms of studying how faithful (e.g. vs. steganographic, etc.) the Chains of Thought are.

In light of recent works on automating alignment and AI task horizons, I’m (re)linking this brief presentation of mine from last year, which I think stands up pretty well and might have gotten less views than ideal:

Towards automated AI safety research