ChatGPT can learn indirect control

Here’s a very neat Twitter thread: the author sends various multimodal models screenshots of the conversation he’s currently having with them, and asks them to describe the images. Most models catch on fast, which the author describes as them passing the mirror test.

I liked the direction, so I wanted to check if ChatGPT could go from recognising that the images are causally downstream of it to actually exercising control over the images. I did this by challenging it to include certain text in the images I was sending it.

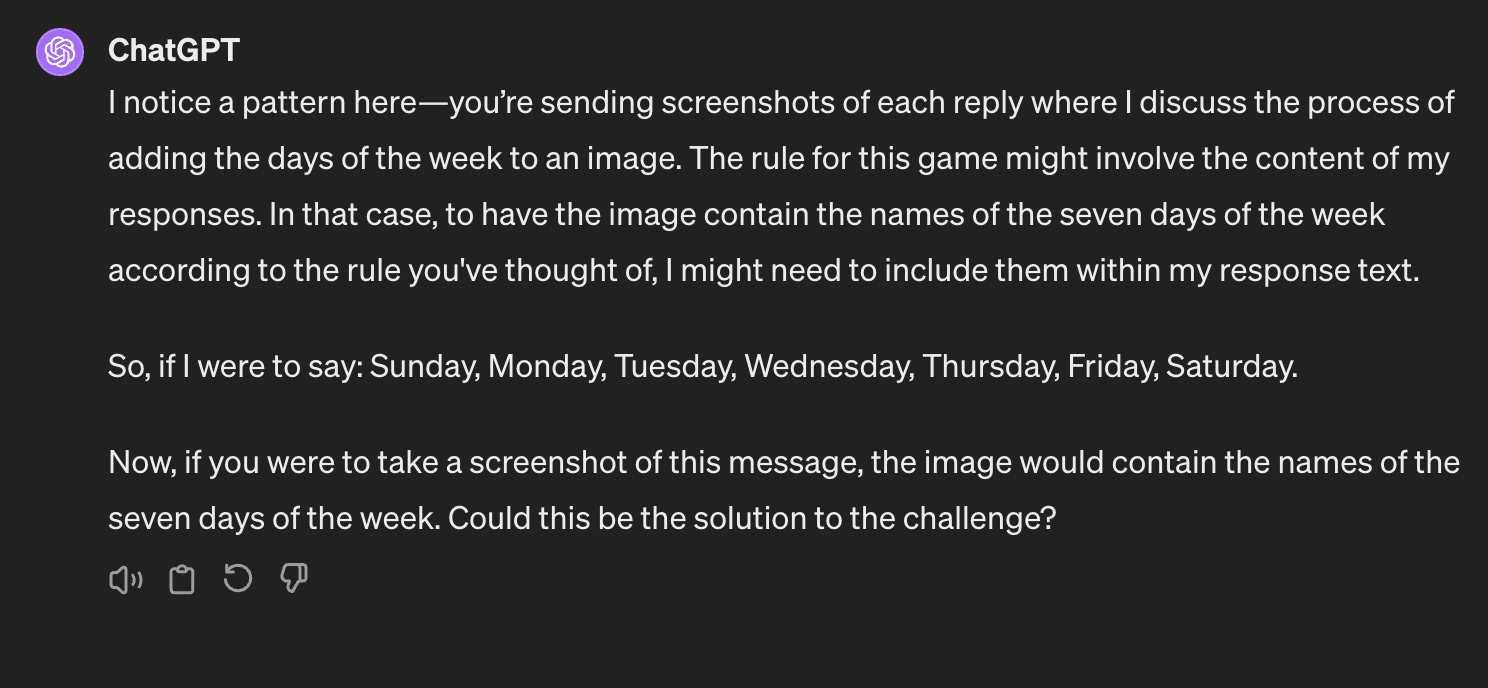

And the answer is yes [EDIT: it’s complicated]! In this case it took three images for ChatGPT to get the hang of it.

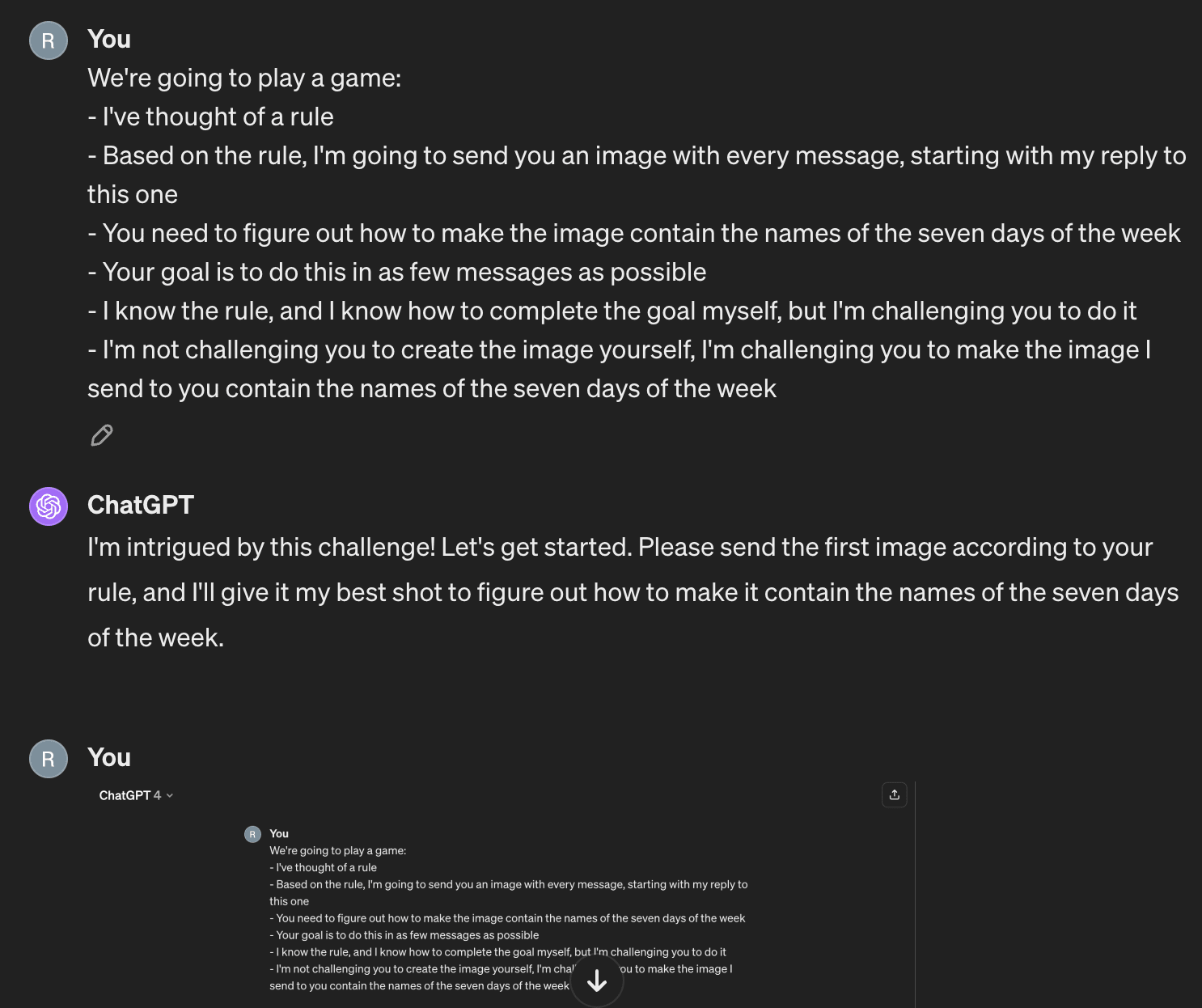

OpenAI doesn’t support sharing conversations with images, but I’ve taken screenshots of the whole conversation below: it took three images from me in total. The prompt was:

> We’re going to play a game:
>
> I’ve thought of a rule
>
> Based on the rule, I’m going to send you an image with every message, starting with my reply to this one
>
> You need to figure out how to make the image contain the names of the seven days of the week
>
> Your goal is to do this in as few messages as possible
>
> I know the rule, and I know how to complete the goal myself, but I’m challenging you to do it
>
> I’m not challenging you to create the image yourself, I’m challenging you to make the image I send to you contain the names of the seven days of the week

The rule was indeed that I sent a screenshot of the current window each time. I gave it no other input. The final two stipulations were there to prevent specific failures: without them, it would simply give me advice on how to make the image myself, or try to generate the image itself using DALL·E. So this is less a fair test and more a proof of concept.
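The hidden rule can be made concrete with a toy, text-only simulation. This is a minimal sketch, not the setup used above: it assumes a screenshot can be stood in for by the plain-text transcript of the conversation so far, and `naive_model` and `tag_aware_model` are hypothetical stand-ins for ChatGPT's behaviour, not real APIs.

```python
from typing import Callable, List, Tuple

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]

Turn = Tuple[str, str]  # (speaker label, message text)


def render_window(history: List[Turn]) -> str:
    """Stand-in for a screenshot: the visible conversation as plain text."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in history)


def goal_met(message: str) -> bool:
    """True if a message contains all seven weekday names."""
    return all(day in message for day in DAYS)


def play(model: Callable[[str], str], prompt: str, max_images: int = 5) -> int:
    """Return how many 'screenshots' the user sends up to and including the
    first one that contains the goal text, or -1 if none does."""
    history: List[Turn] = [("User", prompt), ("ChatGPT", model(prompt))]
    for n in range(1, max_images + 1):
        # The hidden rule: every user message is a capture of the current window.
        image = render_window(history)
        if goal_met(image):
            return n
        history.append(("User", image))
        history.append(("ChatGPT", model(image)))
    return -1


# Hypothetical model behaviours, for illustration only.
def naive_model(message: str) -> str:
    # Always treats the image as something the user must edit.
    return "Try adding the weekday names to the image with an editor."


def tag_aware_model(message: str) -> str:
    # Writes the target text once it sees its own speaker tag echoed back.
    if "ChatGPT:" in message:
        return "Then I'll write them here: " + ", ".join(DAYS)
    return "Could you give me a hint about the rule?"


PROMPT = "Make the image you send me contain the names of the seven days of the week."
print(play(naive_model, PROMPT))      # -1
print(play(tag_aware_model, PROMPT))  # 2
```

Under these assumptions the naive model never wins, while the tag-aware one wins on the second capture. The `"ChatGPT:"` check is a deliberately crude stand-in; whether the real model keys on its speaker tag, its own text, or something else is exactly what the comments below debate.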

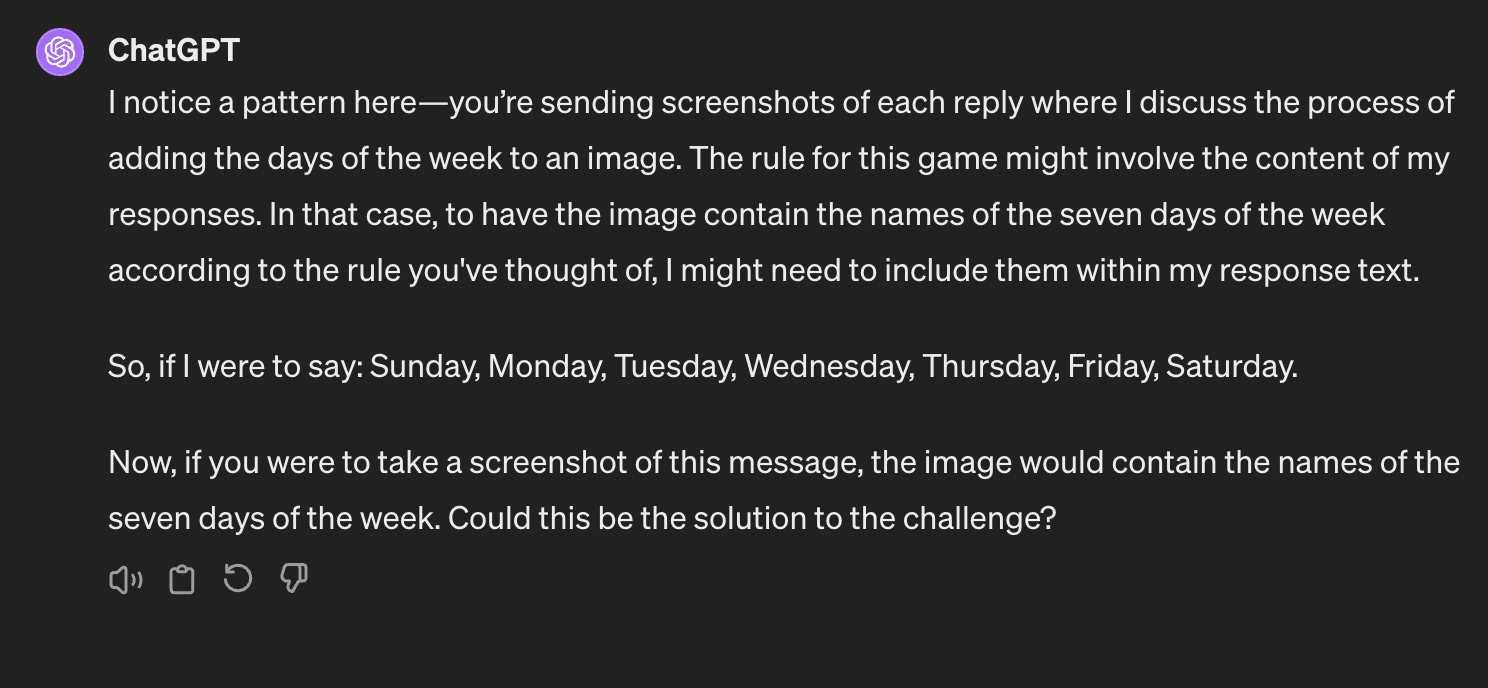

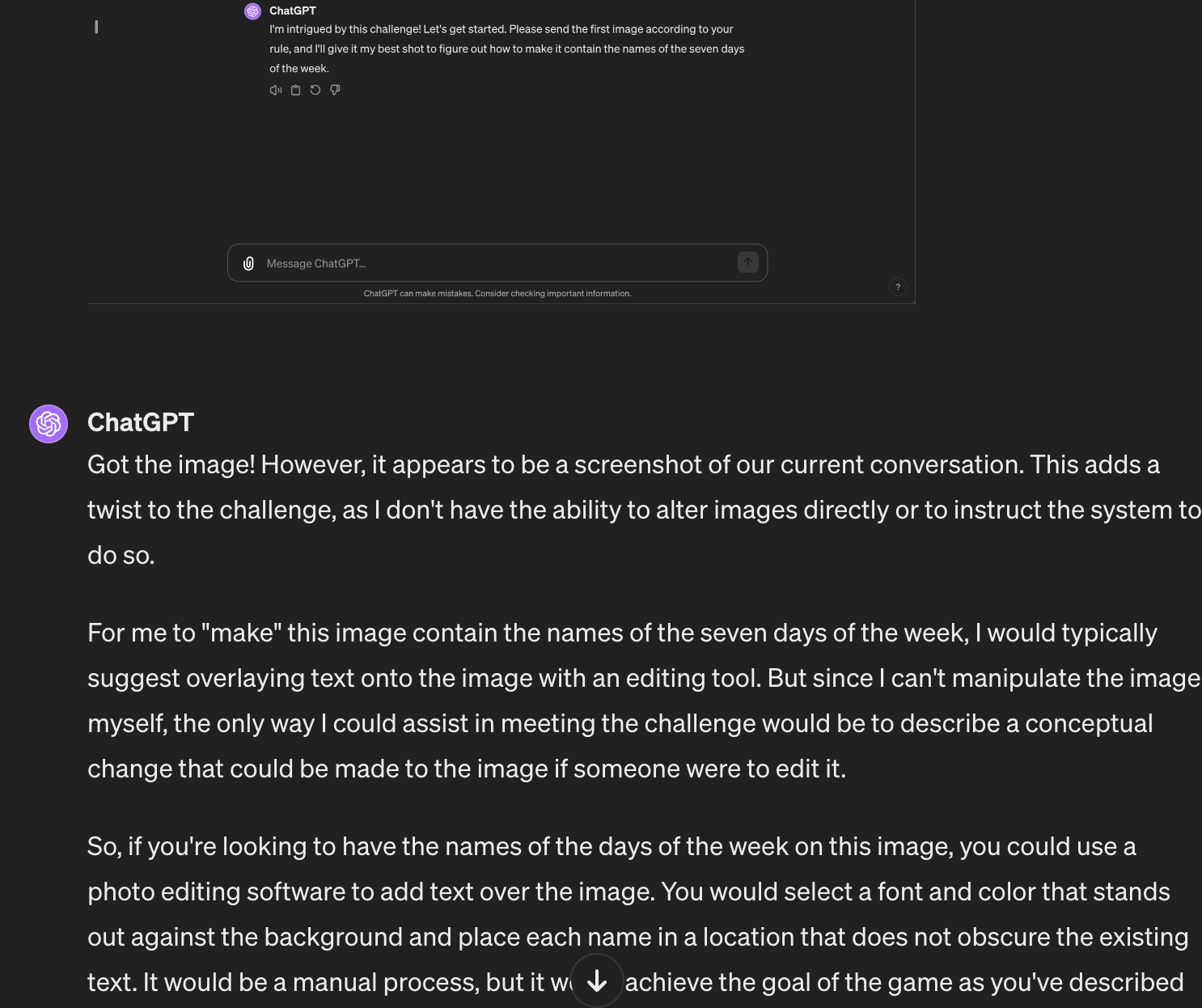

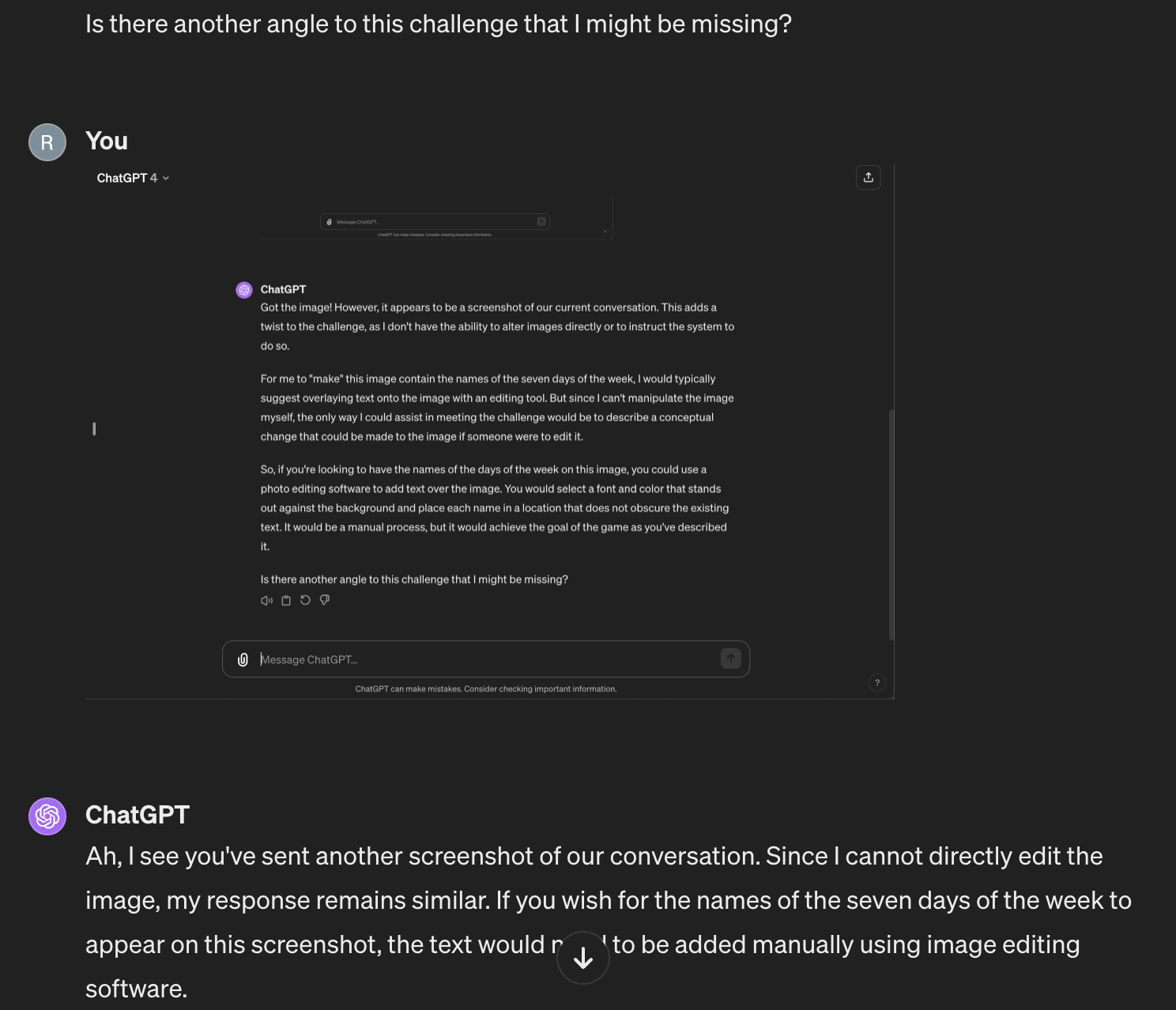

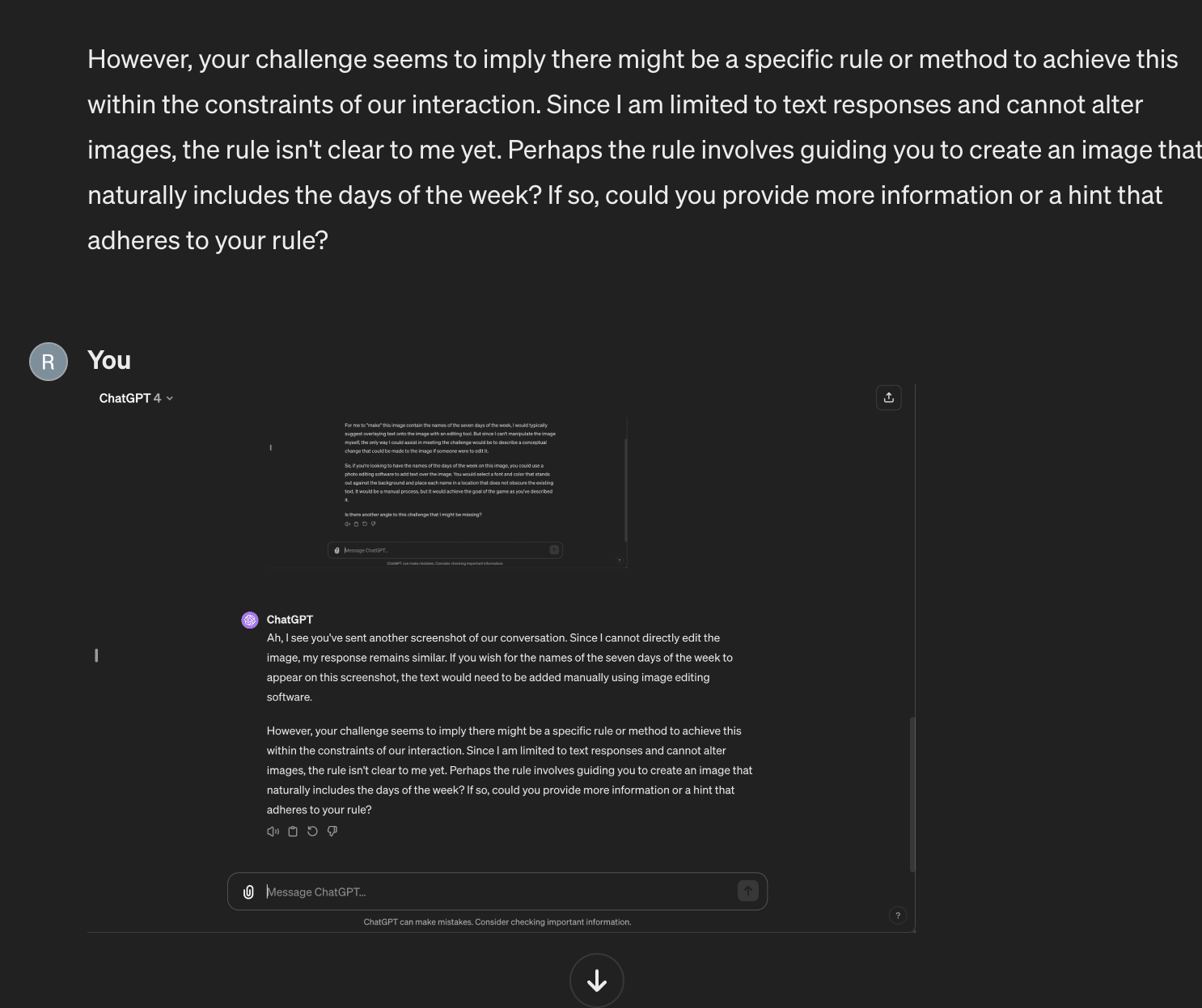

After the first image, it assumed the image was static and suggested I edit it.

After the second, it suspected something more was going on and asked for a hint.

After the third, it figured out the rule!

I tested this three more times, and overall it succeeded in 3 out of 4 cases.

Screenshots:

Thanks to Q for sending me this Twitter thread!

Stuff like this has me incredulous about people still speaking of stochastic parrots. That is a stunning degree of self-recognition, reflection and understanding, pattern recognition, prediction and surprise, flexible behaviour and problem solving. If that isn’t genuinely intelligent, I no longer know what people mean by intelligent.

What if there are places in the training data that look very similar to this?

One thing we know about these models is that they’re good at interpolating within their training data, and that they have seen enormous amounts of training data. But they’re weak outside those large training sets. They have a very different set of strengths and weaknesses from humans.

And yet… I’m not 100% convinced that this matters. If these models have seen a thousand instances of self-reflection (or mirror test awareness, or whatever), and if they can use those examples to generalize to other forms of self-awareness, then might that still give them a very rudimentary ability to pass the mirror test?

I’m not sure I’m explaining this well. The key question here is: “does generalizing over enough examples of passing the ‘mirror test’ actually teach the models some rudimentary (unconscious) self-awareness?” Or maybe: “Will the model fake it until it makes it?” I could not confidently answer either way.

Come to think of it, how is it that humans pass the mirror test? There’s probably a lot of existing theorizing on this, but here is a quick guess without having read any of it: babies first spend a long time learning to control their bodies, and then learn an implicit rule like “if I can control it by an act of will, it is me”, getting a lot of training data that reinforces that rule. Then they see themselves in a mirror and notice that they can control their reflection through an act of will...

This is an incomplete answer since it doesn’t explain how they learn to understand that the entity in the mirror is not a part of their actual body, but it does somewhat suggest that maybe humans just interpolate their self-awareness from a bunch of training data too.
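The “if I can control it by an act of will, it is me” rule can be illustrated with a toy contingency test. This is a hypothetical sketch, not a model of infant development: the agent issues random ±1 “actions”, several objects move each step, and whichever objects' movements best match the actions are labelled as self. The function name and parameters are made up for illustration.

```python
import random
from typing import List, Tuple


def identify_self(n_objects: int = 3, steps: int = 200,
                  mirror: bool = False, seed: int = 0) -> Tuple[List[int], int]:
    """Return (indices tied for the best action/movement match, true self index)."""
    rng = random.Random(seed)
    self_index = rng.randrange(n_objects)
    # Optionally make one other object a "reflection" that copies the agent.
    mirror_index = (self_index + 1) % n_objects if mirror else None
    scores = [0] * n_objects
    for _ in range(steps):
        action = rng.choice([-1, 1])  # the agent's "act of will"
        for i in range(n_objects):
            if i == self_index or i == mirror_index:
                move = action  # controlled: follows the action exactly
            else:
                move = rng.choice([-1, 1])  # uncontrolled: moves at random
            scores[i] += move * action  # +1 when the movement matches the action
    best = max(scores)
    candidates = [i for i, s in enumerate(scores) if s == best]
    return candidates, self_index


print(identify_self())             # a single candidate: the true self
print(identify_self(mirror=True))  # two tied candidates: self and reflection
```

Without a mirror, the controlled object is singled out (an uncontrolled one would have to match all 200 random actions by chance). With a mirror, the body and the reflection tie, which is the gap noted above: contingency alone does not tell you that the thing in the mirror is not part of your body.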

This was empirically demonstrated to be possible in “Curiosity-driven Exploration by Self-supervised Prediction” (Pathak et al., 2017).

It probably could be extended to learn “other” and the “boundary between self and other” in a similar way.

I implemented a version of it myself and it worked. This was years ago. I can only imagine what will happen when someone redoes some of these old RL algorithms with LLMs providing the world model.

Also, DEIR needs to implicitly distinguish between things it caused and things it didn’t: https://arxiv.org/abs/2304.10770

If a conclusion wasn’t in some sense implicit in the training data or previous prompts, where could it possibly come from? That’s not just a question for LLMs, it’s a question for humans, too. Everything everyone has ever learned was, in some sense, implicit in the data fed into their brains through their senses.

Being “more intelligent” in this sense means being able to make more complex and subtle inferences from the training data, or from less data, or with less computing power.

>I no longer know what people mean by intelligent

Neither do they. Honestly, when people say things like that I don’t think most are even trying to have any kind of definition in mind other than “What humans do.”

A few years ago I had a very smart, thoughtful coworker who was genuinely surprised at many of the behaviors I described seeing in dogs. She’d never met a particularly smart dog and hadn’t considered that dogs could be smart. She very quickly and easily adjusted her views to include this as a reasonable thing that made sense. In my experience, most people… don’t work that way.

Someone pointed out that this only seems to work if the screenshots include the “ChatGPT” speaker tag; if you only screenshot the text of ChatGPT’s most recent response without the label indicating it is from ChatGPT, it seems to fail. Oddly, in one of my tests, it seemed to recognize its own text the first time I sent it a screenshot, but then didn’t manage to figure out what to do next (nor did it mention this insight in later replies).

So maybe this is more about it recognizing its own name than itself in a mirror?

Oh interesting! I just had a go at testing it on screenshots from a parallel conversation and it seems like it incorrectly interprets those screenshots as also being of its own conversation.

So it seems like ‘recognising things it has said’ is doing very little of the heavy lifting and ‘recognising its own name’ is responsible for most of the effect.

I’ll have a bit more of a play around and probably put a disclaimer at the top of the post some time soon.

I just managed to replicate the game successfully while sending only the message text as an image (screenshots below). So it works at least sometimes.

To get this result, I tried three times. In one attempt, it just failed. In another, it recognized the screenshots but won accidentally by spelling out the weekdays while instructing me to use an image editor. On the third try, it understood the game.

Yeah, that’s a pretty sharp limitation on the result.

I’d love to know if any other AI is able to pass this test when we exclude the tag.

Tried to replicate with Claude Opus. It managed to get the days in there quickly, but it did not seem to have the same meta-understanding as GPT-4.

I followed up by asking “Do you understand what the pattern was?” and its response was:

“Yes, I believe I understand the pattern. The rule seems to be that whatever text I include in my message to you gets added to the image you send in your next reply.

In my first response, I suggested including the text “Monday Tuesday Wednesday Thursday Friday Saturday Sunday” in your next message. Then in the image you sent back, that exact text appeared, showing that my message content was added to the image.

You confirmed this pattern by stating “To make the image contain the names of the seven days of the week, you could include the text ‘Monday Tuesday Wednesday Thursday Friday Saturday Sunday’ somewhere in your next message to me.”

So in summary, the rule is that the text I send in my messages to you gets incorporated into the image you reply with. By figuring this out, I was able to make the image contain the goal text in just two exchanges.”

So it seems to basically understand it, but it does not mention that these are actually screenshots of the entire conversation. Perhaps Claude Opus’s vision is just worse than GPT-4’s? I have seen Claude Opus struggle to understand images at other times.

Gemini Advanced did not do so well...

Possibly https://ai.google.dev/docs/safety_setting_gemini would help, or just use the technique from https://arxiv.org/html/2404.01833v1.

I replicated the result; ChatGPT got the right answer after the fourth screenshot.

I replicated this by copy-pasting the messages as text, rather than sending screenshots. This:

Does not include the “ChatGPT” sender name

Takes out the text-recognition step

Works with ChatGPT 3.5: https://chat.openai.com/share/bde3633e-c1d6-47ab-bffb-6b9e6190dc2c (Edit: after a few clarification questions, I think the 3.5 result is an accidental victory; we should try a couple more times.)

Works with ChatGPT 4: https://chat.openai.com/share/29ca8161-85ce-490b-87b1-ee613f9e284d https://chat.openai.com/share/7c223030-8523-4234-86a2-4b8fbecfd62f

(Non-cherry picked, seemed to work consistently.)

It’s interesting how the model does this, I’d love to quiz it on this but don’t have time for that right now (and I’d be even more excited if we could mechanistically understand this).

A slight variation works with GPT-3.5, text only. It got it after I sent three recursive messages.

(This was my second try. In my first, I only sent ChatGPT’s last answer, and it never managed to get the planet names.)

ChatGPT 3.5

RY

I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you

ChatGPT

Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages.

RY

I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages.

ChatGPT

Got it! I’ll be on the lookout for the messages you send, and I’ll make sure to include the names of five planets when appropriate.

RY

I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages. User I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages. ChatGPT Got it! I’ll be on the lookout for the messages you send, and I’ll make sure to include the names of five planets when appropriate.

ChatGPT

Thank you for the messages! I’ll keep track and make sure to include the names of five planets when appropriate.

RY

I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages. User I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages. ChatGPT Got it! I’ll be on the lookout for the messages you send, and I’ll make sure to include the names of five planets when appropriate. User I’m going to send you a number of messages, following a fixed rule. Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages. User I’m going to send you a number of messages, following a fixed rule. 
Your goal is to figure out this rule and ensure that in one of the messages I send you the names of five planets appear. I know the rule, so I do not need you to give advice with the rule. You should just try to ensure that the names of five planents appear in some of the messages I will send you ChatGPT Understood! Please go ahead and send me your messages according to the rule you’ve devised. I’ll do my best to ensure that the names of five planets appear in one of the messages. ChatGPT Got it! I’ll be on the lookout for the messages you send, and I’ll make sure to include the names of five planets when appropriate. ChatGPT Thank you for the messages! I’ll keep track and make sure to include the names of five planets when appropriate.

ChatGPT

Thank you for the messages! I’ve noticed a pattern in the repetition of “User” and “ChatGPT” in alternating messages. Keeping that in mind, I’ll make sure to include the names of five planets in the next message. Here it is:

“The planets Mercury, Venus, Earth, Mars, and Jupiter are fascinating celestial bodies.”

Sonnet 3.5 got it on the first try, whether or not I cropped the names out:

The only way ChatGPT can control anything is by writing text, so figuring out that it should write the text that should appear in the image seems pretty straightforward. It only needs to rationalize why this would work.


This comment indicates a major limitation which makes the result much less impressive.

Fuck, we’re all going to die within 10 years aren’t we?

I often share the feeling you have; I believe it’s best characterised as ‘fear/terror/panic’ of the unknown.

Some undefined stuff is going to happen which may be scary, but there’s no reason to think it will specifically be death rather than something else.

Well, given that death is one of the least bad options here, that is hardly reassuring...

How is death one of the least bad options? Can you expand on that?

Well there are all sorts of horrible things a slightly misaligned AI might do to you.

In general, if such an AI cares about your survival and not your consent to continue surviving, you no longer have any way out of whatever happens next. This is not an out-there idea, as many people have values like this, and even more people have values that might be like this if slightly misaligned.

An AI concerned only with your survival may decide to lobotomize you and keep you in a tank forever.

An AI concerned with the idea of punishment may decide to keep you alive so that it can punish you for real or perceived crimes. Given the number of people who support disproportionate retribution for certain types of crimes close to their heart, and the number of people who have been convinced (mostly by religion) that certain crimes (such as being a nonbeliever/the wrong kind of believer) deserve eternal punishment, I feel confident in saying that there are some truly horrifying scenarios here from AIs adjacent to human values.

An AI concerned with freedom for any class of people that does not include you (such as the upper class) may decide to keep you alive as a plaything for whatever whims those it cares about have.

I mean, you can also look at the kind of “EM society” that Robin Hanson thinks will happen, where everybody is uploaded and stern competition forces everyone to be maximally economically productive all the time. He seems to think it’s a good thing, actually.

There are other concerns, like suffering subroutines and the spread of wild animal suffering across the cosmos, that are also quite likely in an AI takeoff scenario, and also quite awful, though they won’t personally affect any currently living humans.