Can We Align a Self-Improving AGI?

Produced during the Stanford Existential Risk Initiative (SERI) ML Alignment Theory Scholars (MATS) Program of 2022, under John Wentworth

TL;DR: Suppose that a team of researchers somehow aligned an AGI of human-level capabilities within the limited collection of environments that are accessible at that level. To corrigibly aid the researchers, the aligned AGI increases its capabilities (e.g., deployment into the Internet, abstraction capabilities, ability to break encryption). But after the aligned AGI increases its capabilities, it may be able to access environments that were previously inaccessible to both itself and the researchers. These new environments may then cause the AGI to phase-transition into misalignment, in a complex way the alignment researchers could not have predicted beforehand. A potentially necessary condition for predicting this beforehand may be a tall order: that the researchers themselves investigate the currently inaccessible environments, before allowing the AGI to access them by improving its capabilities. An analogy for this problem, named the College Kid Problem, is provided by the Menendez brothers’ infamous murder of their parents.

Content warning: People who would prefer not to read about the murder in detail should skip to the section titled “The worst-case scenario for AI alignment efforts.”

Post: José Menendez was just a teenager in Cuba when his family’s estate was seized by Fidel Castro’s new Communist government. José’s family consequently immigrated to America, where he worked really hard. His family couldn’t afford for him to attend an Ivy League college; he attended Southern Illinois University instead. José then passed the CPA exam, and eventually worked his way up to become a wealthy business executive (for example, as a vice president at RCA Records, he had a hand in signing world-famous bands like The Eurythmics and Duran Duran). While he wasn’t able to fulfill his lifelong dream of overthrowing Castro, José Menendez—against all odds—had achieved the American Dream as an immigrant.

José’s first son, Lyle Menendez, grew up idolizing his father. He wanted to become a successful businessman just like him. And José, in turn, was a determined father. He wanted Lyle (and his other son, Erik) to access the educational opportunities in business that José himself was unable to access.

José and his wife, Kitty, demanded high grades from their sons. Moreover, to train them in tennis, José went so far as to teach himself from scratch how to coach tennis. By sheer will, José went from a casual player to a great tennis coach, arguably of professional caliber. Lyle and Erik became very good tennis players, and Lyle was eventually accepted to Princeton University as a tennis-team recruit.

But Lyle was suspended from Princeton in his freshman year for plagiarism. This began a troubled several years, including a romantic relationship with a model (prematurely ended by his disapproving parents) and muddling through college (with grades low enough to get himself put on academic disciplinary action).

The tense relationship that Lyle and his brother, Erik, had with their parents eventually turned deadly. One night, the brothers entered their family’s Beverly Hills mansion, armed with shotguns. They fired over a dozen shotgun rounds into their parents. José and Kitty’s bodies were made almost unidentifiable.

At first, it seemed like the Menendez brothers might get away with the murder. But they were eventually found out and arrested. At their first trial, the brothers argued that they had acted in self-defense against parental abuse, and the juries deadlocked. However, a second trial convicted them of first-degree murder, with the prosecution arguing that they were in fact motivated by the inheritance money. Both Lyle and Erik were sentenced to life imprisonment without the possibility of parole.

José Menendez had arduously attempted to align his sons to become American-Dream-achieving businessmen. José’s efforts were visible in his sons’ behaviors, even after the murder. For example, Erik was asked about his aspirations during an interview after the murder. He responded with a fantastical dream that had his dead father’s fingerprints all over it. He would be a senator, his brother Lyle would be president, and they would together liberate Cuba from Castro.

And Lyle used his share of the blood money to jumpstart his business ambitions. In fact, Lyle confided in his psychiatrist that his father would have been proud of him for executing such an efficient murder. It was José who had taught Lyle that “the rules of tennis, for example, didn’t always apply.” Tennis rules forbid coaching during games, but José gave his sons in-game coaching anyway with subtle cues. And they got away with it.

But Lyle took his father’s training outside of the tennis court, and applied it to his private life in college. He blatantly copied his classmate’s psychology lab report, the cause of his freshman-year suspension. In an interview with the college newspaper prior to his arrest, Lyle explained his decision to postpone his education. “I have a progressive philosophy that a person only has so many years in which he can devote a tremendous amount of energy to his goals,” he said. And when Lyle wanted a single dorm but was assigned a double, he moved his roommate’s belongings out of the room. The rules don’t always apply.

Even though José had imparted so many of his values to his sons, he ultimately failed. It is hard to imagine a more abject failure.

The worst-case scenario for AI alignment efforts

At the risk of incorrectly anthropomorphizing AI, we have presented José Menendez’s parenting failure as an analogy for failing to align AGI.

(Reminder: AI systems will likely continue to increase in capabilities, perhaps even to the point of a superhuman AGI in the near future. But we still know very little about how to align an AI agent, especially outside of the training distribution. A misaligned AGI will probably first try to escape into the Internet, and then be a power-seeking threat that endangers all of humanity. The reason? Our dominion over the Earth’s resources—and over the AI’s access to them, such as by turning it off—is standing between the AI and its achievement of whatever mysterious goal emerged during its training.)

Most parents don’t raise their kids to brutally murder them, and in retrospect, José and Kitty (especially José) could have made much better parenting choices. But the Menendezes’ travesty constitutes a worst-case scenario. Could it have been prevented proactively, rather than just in hindsight?

This question is analogous to a problem that a self-improving AI agent might unavoidably be subject to. We call this the College Kid Problem.

The College Kid Problem

Suppose that Senior Menendez somehow successfully aligned Junior Menendez prior to college. If an interpretability tool could perfectly read Junior’s mind, it would reveal that all Junior cares about is to be a successful, anti-Castro businessman, just like his father. The interpretability tool would also reveal a strong aversion to committing murder, since (1) it is extremely unethical and (2) one cannot be a successful businessman while being in prison for life. In fact, the interpretability tool would probably reveal in Junior’s mind an earnest plan to work with his father to expand the family’s business empire. And maybe to also overthrow Castro.

Both Senior and Junior agree on a plan: Junior should increase his capabilities by being the first in the family to attend Princeton University. Junior is rejected from Princeton at first, takes a gap year, reapplies, and gets accepted. All according to plan.

Once at Princeton, however, Junior undergoes a complex interaction with the unfamiliar college environment—getting suspended for plagiarism—which causes him to spiral out of control. (For an analogy, consider AlphaGo’s series of uncharacteristically bad moves following the creation of an unfamiliar game state by Lee Sedol’s brilliant Move 78. This led to AlphaGo’s only loss against Lee in their five-game match.)

Following his suspension, Junior leads a life characterized by one unprecedentedly bad development after another. His relationship with Senior also spirals out of control. Ultimately, he ends up murdering Senior for his resources.

The core of the College Kid Problem is that neither Senior nor the pre-college version of Junior is familiar with the college environment that Junior would be getting himself into. This knowledge may be necessary for correctly predicting how pre-college Junior should improve his capabilities to better achieve the goal that he shares with Senior. Or for predicting whether Junior should improve his capabilities at all.

Even predicting a complex agent’s behavior in a known environment is quite difficult. The space of possible agent-environment interactions is, in general, extremely multidimensional and mysterious. There is limited hope that a human-understandable theory of a complex agent-environment system can consistently generate accurate predictions. Indeed, there have been very few success cases of this, all of which have a quite limited scope.

But let us assume that whenever Senior is given Junior’s agent state $A$ and his environment $E$, Senior can feasibly compute the random variable of, say, whether he will die by Junior’s hand. This is a very optimistic assumption (although if Junior were an AI system, it might be possible to empirically estimate the random variable in a realistic sandbox).

Even under this optimistic assumption, however, Senior would not have a clear way to predict his doom. When Senior decides whether to send Junior off to Princeton, he might reason in the following way:

“I already know that the probability of doom in the home environment $E_{\text{pre}}$ (familiar to both myself and pre-college Junior) is negligible.

Therefore, the probability of doom conditional on Junior attending Princeton (and its unknown college environment $E_{\text{post}}$) should also be negligible.”

This reasoning would be flawed, however. Neither Senior nor pre-college Junior can compute a priori the probability of doom conditional on the unknown college environment $E_{\text{post}}$. This is because $E_{\text{post}}$ is inaccessible to Senior and pre-college Junior; it is only accessible to Junior after the improvement of his capabilities.
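A toy Python sketch makes the flaw concrete. Everything in it is hypothetical: the agent, the `temptation` feature, and both environment samplers are our own illustrative assumptions, not a model of any real person or AI system. The agent misbehaves only when an environmental feature exceeds a level that the home environment never produces, so a Monte Carlo estimate in the home environment comes out as exactly zero, while the same agent in the college environment defects about 5% of the time.

```python
import random

def junior_policy(temptation: float, rng: random.Random) -> str:
    """Hypothetical agent: cooperates unless a feature unseen at home is present.

    `temptation` stands in for any environmental feature absent during evaluation;
    the 0.9 threshold and 50% defection rate are arbitrary illustrative choices.
    """
    if temptation > 0.9 and rng.random() < 0.5:
        return "defect"
    return "cooperate"

def home_env(rng: random.Random) -> float:
    # The home environment never produces temptation above 0.5.
    return rng.uniform(0.0, 0.5)

def college_env(rng: random.Random) -> float:
    # The college environment covers the full range, including the unseen region.
    return rng.uniform(0.0, 1.0)

def estimate_doom(sample_env, n_trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the defection rate when the agent runs in a sampled environment."""
    rng = random.Random(seed)
    defections = sum(junior_policy(sample_env(rng), rng) == "defect" for _ in range(n_trials))
    return defections / n_trials

p_home = estimate_doom(home_env, 10_000)
p_college = estimate_doom(college_env, 10_000)
print(f"estimated doom rate at home:    {p_home:.3f}")  # 0.000
print(f"estimated doom rate in college: {p_college:.3f}")  # about 0.05
```

The zero estimate at home is not weak evidence of safety in college; in this toy it is no evidence at all, because the branch of the policy that matters was never exercised.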

Correct decision-making, à la expected-utility maximization, is only possible when one knows the probability distribution over the set of possible outcomes (say, conditional on a given policy of actions). But the decision problem of whether Junior should improve his capabilities hinges on knowing how he would interact with the unknown environment that would result from his self-improvement. In general, neither Senior nor the pre-self-improvement version of Junior would even be able to learn what the full set of possible outcomes is, let alone the probability distribution over them.
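A minimal expected-utility sketch shows how completely the decision hinges on the unknown doom probability. The payoffs here are hypothetical assumptions of ours: a `benefit` if self-improvement goes well, a `cost` if it ends in doom, and a utility of zero for staying put.

```python
def expected_utility_of_improving(p_doom: float, benefit: float, cost: float) -> float:
    """EU of self-improvement: gain `benefit` unless doom (probability p_doom), which costs `cost`."""
    return (1.0 - p_doom) * benefit - p_doom * cost

def break_even_doom_probability(benefit: float, cost: float) -> float:
    """Doom probability p* at which improving and staying put (utility 0) are equally good.

    Solving (1 - p) * benefit - p * cost = 0 gives p* = benefit / (benefit + cost).
    """
    return benefit / (benefit + cost)

benefit, cost = 1.0, 99.0  # hypothetical payoffs: doom is 99x worse than success is good
p_star = break_even_doom_probability(benefit, cost)
print(f"improve only if P(doom) < {p_star:.2f}")  # improve only if P(doom) < 0.01

for p in (0.005, 0.05):
    eu = expected_utility_of_improving(p, benefit, cost)
    print(p, "improve" if eu > 0 else "stay")
```

The particular numbers do not matter: two doom probabilities that differ by a factor of ten land on opposite sides of p*, and since the probability conditional on the post-improvement environment cannot be computed in advance, the decision-maker cannot tell which side he is on.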

And once Junior improves his capabilities, it might be too late.

Directions for future research

1. Failsafes in case an AI agent is misaligned by an unforeseen environment

Perhaps the clearest solution to the College Kid Problem is to put high-quality security failsafes in place, just in case the AI agent turns out to be misaligned.

The ultimate failsafe is, of course, to not build the AI agent. (At least until the science of alignment is solved.)

The problem is, several AI organizations are racing to build superhuman general intelligence at any cost. They have sunk, and continue to sink, billions of dollars into this race. And this research is generally carried out without the safety precautions necessary to protect innocent people from collateral harm. Indeed, unprecedented precautions are likely needed, because aligning an AGI (before its potentially catastrophic escape into the Internet) seems to be a task of unprecedented difficulty.

If a leading AI organization on the cusp of building an AGI asked us to help align it, we would probably tell them, “Please don’t build it. Innocent people could be harmed.” But if they are adamant and we exhaust all of our options in getting them to reconsider, we might have to help them come up with a reasonable plan for their AI safety. Innocent people’s lives and well-being are at stake, after all.

Fortunately, there may be other high-quality failsafes. For example, airgapping the research environment probably reduces a lot of the risk.

We also want to prevent a hacker AGI from using the researchers’ interpretability tools as attack channels. On this front, we can aim for (1) cybersecurity guarantees that the privileges and capabilities accorded to the AGI are insufficient for hacking and (2) security through obscurity of the interpretability tools. The latter can be accomplished by only discussing/publishing interpretability tools via off-the-grid channels, or by removing all information about them from the AGI’s training data.

Finally, the researchers themselves are at risk of getting socially engineered by the AGI, through the interpretability tools and the other information channels they choose to open. Tried-and-true methods of reducing risks of human social engineering (such as proactive screening, proactive safety training, and collective rather than individual decision-making) will likely be useful. Moreover, there is probably significant room for improvement in society’s knowledge of how to effectively prevent human social engineering, especially against a superintelligent AGI. Thus, we should continue to brainstorm, red-team, and optimize potential solutions on this front.
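One concrete form of collective rather than individual decision-making is a k-of-n approval gate: no request coming from the AGI (for example, to open a new information channel) is acted on until a quorum of distinct, pre-authorized researchers has approved it. The Python sketch below is a hypothetical illustration. The class name, the quorum size, and the reviewer names are our own assumptions, and a real deployment would need authenticated approvals rather than plain strings.

```python
from dataclasses import dataclass, field

@dataclass
class ApprovalGate:
    """Hypothetical k-of-n gate: a request is released only after `quorum` distinct authorized approvals."""
    authorized: frozenset[str]
    quorum: int
    approvals: dict[str, set[str]] = field(default_factory=dict)

    def approve(self, request_id: str, reviewer: str) -> None:
        if reviewer not in self.authorized:
            raise PermissionError(f"{reviewer!r} is not an authorized reviewer")
        # A set ensures that one reviewer approving twice still counts once.
        self.approvals.setdefault(request_id, set()).add(reviewer)

    def is_released(self, request_id: str) -> bool:
        return len(self.approvals.get(request_id, set())) >= self.quorum

gate = ApprovalGate(authorized=frozenset({"alice", "bob", "carol", "dave"}), quorum=3)
gate.approve("open-new-channel", "alice")
gate.approve("open-new-channel", "alice")  # duplicate approval, counted once
gate.approve("open-new-channel", "bob")
print(gate.is_released("open-new-channel"))  # False: only 2 distinct approvals
gate.approve("open-new-channel", "carol")
print(gate.is_released("open-new-channel"))  # True
```

The design choice is that the gate counts distinct people rather than approval events, so a single socially engineered researcher cannot release a request alone.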

2. A robust guarantee that the AI agent will be aligned in a wide class of possible environments

There is no precedent for success in predicting all aspects of a complex agent-environment interaction a priori. Even the theoretically maximal degree of predictability is probably quite low. Complex agent-environment interactions are inherently unpredictable “because of the difficulty of pre-stating the relevant features of ecological niches, the complexity of ecological systems and [the fact that the agent-ecology interaction] can enable its own novel system states.”

But there is precedent for success in making accurate predictions about very specific, big-picture aspects of the agent-environment interaction. These predictions tend to be made via rigorous deduction, from parsimonious but plausible assumptions which hold true for a wide class of agent-environment interactions. If such a rigorous prediction could shed even a partial light on whether an aligned AI agent would remain aligned after improving its capabilities, it could potentially be quite valuable.

One example of such a prediction is the theorem of Turner, Smith, Shah, Critch, and Tadepalli that optimal agents will be power-seeking in a wide class of realistic environments. We probably cannot predict precisely how a given AGI will seek power against us. It is likely a complex consequence of numerous mysterious variables that are situational to the given agent-environment interaction, many of which are not accessible at our limited human-level capabilities. But even just knowing a priori that the AGI will probably be power-seeking by default is quite valuable, especially for the currently empirics-starved state of AI safety research.
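The intuition behind the Turner et al. result can be illustrated, though not proven, with a toy Monte Carlo sketch of our own construction. From a start state, an agent can either move to a dead end with a single terminal state, or move to a hub from which it can still reach any of three terminal states. If each terminal state's reward is drawn independently and uniformly at random, the optimal policy goes to the option-preserving hub whenever the best of the three hub rewards exceeds the dead-end reward, which happens with probability 3/4. The environment, the reward distribution, and the function name are illustrative assumptions; the theorem itself covers a much broader class of environments.

```python
import random

def fraction_choosing_hub(n_reward_functions: int, n_hub_options: int = 3, seed: int = 0) -> float:
    """Fraction of randomly sampled reward functions whose optimal policy moves to the hub.

    Toy deterministic environment (our assumption): 'dead end' leads to one terminal
    state; 'hub' keeps `n_hub_options` terminal states reachable. Terminal rewards are
    i.i.d. Uniform(0, 1), and the optimal agent compares the best reachable reward.
    """
    rng = random.Random(seed)
    hub_count = 0
    for _ in range(n_reward_functions):
        dead_end_reward = rng.random()
        best_hub_reward = max(rng.random() for _ in range(n_hub_options))
        if best_hub_reward > dead_end_reward:
            hub_count += 1
    return hub_count / n_reward_functions

print(fraction_choosing_hub(100_000))  # close to 3/4
```

With k reachable options at the hub, the fraction is k/(k + 1): the more options a state keeps open, the larger the share of reward functions for which going there is optimal.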

Another example is Fisher’s principle, one of the few theorems about biological agents that are capable of making precise a priori predictions. Fisher’s principle explains why most sexually reproducing species have approximately 50% female and 50% male offspring. The explanation is as follows. Assume that male and female offspring have an equal reproductive cost. Since every offspring has exactly one mother and one father, whenever one sex is rarer than the other, each member of the rarer sex has higher expected reproductive success, so parents that overproduce the rarer sex are favored. Selection therefore pushes the population back toward an equal sex ratio, and having male and female offspring in equal proportions is the unique evolutionarily stable strategy. Such a precise prediction of the evolved phenotype (albeit regarding a quite limited aspect of phenotype) is unusual in evolutionary biology, especially for a theory as parsimonious as Fisher’s game-theoretic model.
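The argument can be checked numerically with a standard textbook formalization (not something derived in this post). Because every offspring has one mother and one father, if a fraction p of the population's offspring is male, a son's expected share of grandchildren is proportional to 1/p and a daughter's to 1/(1 - p). A parent producing a male fraction m then has relative fitness w(m, p) = m/p + (1 - m)/(1 - p), assuming sons and daughters cost the same. The sketch shows that producing the rarer sex pays off, that no strategy has a first-order advantage in a 1:1 population, and that a resident 1:1 strategy strictly outperforms any rare mutant once the mutant shifts the population ratio.

```python
def relative_fitness(m: float, p: float) -> float:
    """Expected grandchildren (up to a constant) for a parent whose offspring are a fraction m male,
    in a population whose offspring are a fraction p male. Assumes equal cost per son and daughter."""
    return m / p + (1.0 - m) / (1.0 - p)

# When males are rare (p = 0.3), producing more sons raises fitness.
print(relative_fitness(0.6, 0.3) > relative_fitness(0.3, 0.3))  # True

# In a 1:1 population every strategy has the same fitness (2.0): no first-order advantage.
print([round(relative_fitness(m, 0.5), 9) for m in (0.0, 0.25, 0.5, 1.0)])  # [2.0, 2.0, 2.0, 2.0]

def resident_advantage(m_mutant: float, eps: float = 0.01) -> float:
    """Fitness of the resident 1:1 strategy minus that of a mutant at frequency eps."""
    p_mixed = (1.0 - eps) * 0.5 + eps * m_mutant  # mutants shift the population sex ratio
    return relative_fitness(0.5, p_mixed) - relative_fitness(m_mutant, p_mixed)

# Stability: the 1:1 resident beats every rare mutant strategy.
print(all(resident_advantage(m) > 0 for m in (0.0, 0.2, 0.4, 0.6, 0.8, 1.0)))  # True
```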

But even Fisher’s principle has blind spots. Various species are characterized by local mate competition, non-Mendelian inheritance, or haplodiploidy: mechanisms that can violate the assumptions underlying Fisher’s principle (for example, that mates are drawn at random from the whole population). Its prediction of an equal sex ratio has no reason to hold in these species, and in fact, it often does not. That even “the most celebrated argument in evolutionary biology” has blind spots goes to show the inherent unpredictability of complex agent-environment systems in general.
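Local mate competition shows how far the prediction can move once an assumption fails. In Hamilton's (1967) classic model for a diploid species, where n mothers lay eggs in a patch and their offspring mate only with one another before the daughters disperse, the unbeatable fraction of sons is (n - 1)/(2n) rather than 1/2. The short sketch below simply tabulates that formula; it assumes Hamilton's idealized setting and is not a model of any particular species.

```python
from fractions import Fraction

def lmc_sex_ratio(n_foundresses: int) -> Fraction:
    """Hamilton's unbeatable fraction of sons under local mate competition (diploid model):
    (n - 1) / (2n) for n foundresses whose offspring mate within the patch."""
    if n_foundresses < 1:
        raise ValueError("need at least one foundress")
    return Fraction(n_foundresses - 1, 2 * n_foundresses)

for n in (1, 2, 5, 50):
    print(n, lmc_sex_ratio(n), float(lmc_sex_ratio(n)))
```

With a single foundress, the idealized optimum is to produce almost no sons (only enough to fertilize the daughters), and the Fisherian 1:1 ratio is recovered only as n grows large.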

Fisher’s principle is an example of a “nice” theorem, which maps high-regularity assumptions to purportedly general predictions about complex agent-environment systems. Such nice theorems are almost always too good to be true. Even in the rare event that a nice theorem like Fisher’s principle turns out to be true, its scope will probably be limited.

But even limited-scope information can be useful, especially given that AI alignment remains mostly unsolved. With luck, such information may even be applicable across a nontrivial proportion of possible Internet-based environments, including those that are currently inaccessible to us humans.

3. Studying the probability distribution of what currently inaccessible environments might turn out to be

We might be able to do better than just “hope to get lucky” if we could learn beforehand the true probability distribution of currently inaccessible environments. This would help complete our a priori knowledge of the environment with which the AGI would interact after its self-improvement. Then, we would in principle be able to empirically estimate the probability of our doom conditional on the self-improvement occurring.

(Recall that the complex interaction between the AI agent and its environment may be inherently unpredictable, except by actually running the agent in that environment. If so, the only realistic way to estimate a priori our probability of doom may be to run the agent in, say, a sandboxed version of the environment beforehand. This would likely require robust security failsafes, especially if the AI agent has high capabilities and thereby a high escape risk.)
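Even the sandbox route has a statistical ceiling. If the agent is run in a sandboxed environment n independent times and doom is never observed, the exact one-sided 95% upper confidence bound on the per-run doom probability is 1 - 0.05^(1/n), which is approximately 3/n (the "rule of three"). The sketch assumes independent, identically distributed sandbox runs, an assumption that an agent learning across episodes would violate.

```python
def zero_failure_upper_bound(n_trials: int, confidence: float = 0.95) -> float:
    """Exact one-sided upper bound on a failure probability after n_trials with zero failures.

    Solves (1 - p) ** n_trials = 1 - confidence for p. Assumes i.i.d. Bernoulli trials.
    """
    if n_trials < 1:
        raise ValueError("need at least one trial")
    return 1.0 - (1.0 - confidence) ** (1.0 / n_trials)

for n in (100, 1_000, 100_000):
    exact = zero_failure_upper_bound(n)
    print(f"n={n:>7}: upper bound {exact:.2e}  (rule of three: {3 / n:.2e})")
```

Driving the bound down to a reassuring level requires a number of runs inversely proportional to the acceptable risk, and the bound says nothing about environments that the sandbox did not sample.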

One way to study the true probability distribution of currently inaccessible environments is to study it indirectly, by using information sources which are accessible at human-level capabilities. Such information sources include the public portion of the Internet, the science of human behavior, and the science of natural abstractions.

However, the degree to which we can predict the desired environment solely from human-accessible sources may turn out to be quite low. For example, the only way to accurately obtain the full set of information underlying the encrypted parts of the Internet would, in general, be to decrypt and inspect each of them.

Thus, we run into a problem: there are fundamental reasons why the desired environment is currently inaccessible. For one thing, we humans are constrained by our suboptimal capabilities at tasks like comprehensively processing information, efficiently forming abstractions, and breaking encryption. For another, some forms of informational access (e.g., breaking encryption, spying on private Internet use) are illegal and/or deserving of shame, even if they were feasible.

This brings us to the second way of studying the true probability distribution of currently inaccessible environments. Alignment researchers can use Tool AI systems that are specialized to access human-inaccessible information (ideally via automated summary statistics, which would help respect Internet privacy and limit social-engineering risks). As non-agentic specialists, Tool AI systems are probably much safer than a generally intelligent AI agent that is trained to act on its own accord. In fact, a team of human researchers who are equipped with sufficiently capable Tool AI systems may, over a sufficiently long period of time, be able to access the otherwise inaccessible environment. We would then have some hope of preemptively learning whether our AI agent will become misaligned if it were to interact with that environment, which we would by then know about.

However, AI organizations may be unrealistically optimistic about their prospects of training a self-improving AGI to autonomously seek profit while remaining aligned every step of the way. To these organizations, a proposal to patiently probe whether such an AGI would spiral out of control may look financially unappealing. For example, in order to speed up the auditing process, the AI organizations’ management may pressure their researchers to make the Tool AI systems so powerful that they start to become unsafe. This may even result in the emergent phenomenon where the Tool AI systems accidentally become agentic and thereby become sources of danger themselves. Underlying this risk is a fundamental lack of coordination between AI organizations and of governance towards safe AI, which continues to fuel a dangerous race towards premature AGI deployment.

Epistemic status: We are uncertain about the precise probability that a currently human-inaccessible environment, somewhere on the Internet, will misalign a self-improving AGI that accesses it. We are more confident that the inaccessibility of out-of-distribution information (as compellingly formulated by Paul Christiano, as well as by his work with his collaborators on Eliciting Latent Knowledge) is a fundamental problem that should be addressed.

Acknowledgements: We are grateful to Stephen Fowler for his help on writing and editing, and to Michael Einhorn, Arun Jose, and Michael Trazzi for their helpful feedback on the draft.

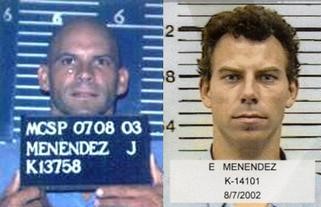

We would also like to acknowledge the L.A. County D.A.’s Office, the source of the photos comprising Images 1 and 2 (accessed indirectly via Fox 11’s video on YouTube). Finally, we would like to acknowledge the Beverly Hills Police Department, the source of the Menendez brothers’ mugshots (accessed indirectly via Wikipedia) in Image 3. The purpose of using these photos is to aid the article’s biographical commentary about the Menendez family, which is provided as an example of the proposed framework of the College Kid Problem. This constitutes fair use because it would be impossible to use free replacements of the photos, given that the Menendez parents are deceased and the Menendez brothers are incarcerated.

Great post, Peter. I think a lot about whether it even makes sense to use the term “aligned AGI” as powerful AGIs may break human intention for a number of reasons (https://www.lesswrong.com/posts/3broJA5XpBwDbjsYb/agency-engineering-is-ai-alignment-to-human-intent-enough).

I see you didn’t refer to AIs becoming self-driven (as in Omohundro: https://selfawaresystems.files.wordpress.com/2008/01/ai_drives_final.pdf). Is there a reason you don’t view this as part of the college kid problem?

Alignment needs something to align with, but it’s far from proven that there is a coherent set of values shared by all humans.

Thank you so much for your kind words! I really appreciate it.

One definition of alignment is: Will the AI do what we want it to do? And as your post compellingly argues, “what we want it to do” is not well-defined, because it is something that a powerful AI could be able to influence. For many settings, using a term that’s less difficult to rigorously pin down, like safe AI, trustworthy AI, or corrigible AI, could have better utility.

I would definitely count the AI’s drive towards self-improvement as a part of the College Kid Problem! Sorry if the post did not make that clear.

You neglect possibility (4). This is what a modern-day engineer will do, and this method is frequently used.

If the environment, Epost, is out of distribution (measurable because there is high prediction error for many successive frames), our AI system is failing. It cannot operate effectively if its predictions are often incorrect*.

What do we do if the system is failing?

One concept is that of a “limp mode”. Numerous real-life embedded systems use exactly this, from aircraft to hybrid cars. Waymo autonomous vehicles, which are arguably a prototype AI control system, have this. “Limp mode” is a reduced-functionality mode where you enable just minimal features. For example, in a Waymo it might use a backup power source, a single camera, and a redundant braking and steering controller to bring the vehicle to a controlled, semi-safe halt.

Key note: the limp mode controllers have authority. That is, they are activated by things like interruptions in a watchdog message from the main system, or a stream of ‘health’ messages from the main system. If the main system reports it is unhealthy (such as successive frames where predictions are misaligned with observed reality), the backup system takes away control physically. (This is done a variety of ways, from cutting power to the main system to just ignoring its control messages.)
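The watchdog arrangement described above can be sketched in a few lines. This is a hypothetical Python illustration, not Waymo's or any vendor's implementation: the class name, the error threshold, the number of consecutive bad frames, and the heartbeat timeout are all our own assumptions. The supervisor hands control to the limp-mode controller if the main system's heartbeat goes stale, or if its prediction error exceeds a threshold for several successive frames, and it never hands control back on its own.

```python
import time
from typing import Optional

class Watchdog:
    """Hypothetical supervisor that revokes the main controller's authority."""

    def __init__(self, error_threshold: float = 0.5, max_bad_frames: int = 5,
                 heartbeat_timeout_s: float = 0.2) -> None:
        self.error_threshold = error_threshold
        self.max_bad_frames = max_bad_frames
        self.heartbeat_timeout_s = heartbeat_timeout_s
        self.bad_frames = 0
        self.last_heartbeat = time.monotonic()
        self.in_limp_mode = False

    def report_frame(self, prediction_error: float, now: Optional[float] = None) -> None:
        """Called once per frame with the main system's prediction error; doubles as a heartbeat."""
        self.last_heartbeat = time.monotonic() if now is None else now
        # Count consecutive high-error frames; any good frame resets the count.
        self.bad_frames = self.bad_frames + 1 if prediction_error > self.error_threshold else 0
        if self.bad_frames >= self.max_bad_frames:
            self.in_limp_mode = True  # latched: only an external reset should clear this

    def controller(self, now: Optional[float] = None) -> str:
        """Return which controller currently has authority."""
        now = time.monotonic() if now is None else now
        if now - self.last_heartbeat > self.heartbeat_timeout_s:
            self.in_limp_mode = True  # main system went silent
        return "limp_mode" if self.in_limp_mode else "main"

# Five successive high-error frames (simulated 10 Hz clock) trigger limp mode.
wd = Watchdog()
for t, err in enumerate([0.1, 0.9, 0.9, 0.9, 0.9, 0.9]):
    wd.report_frame(err, now=t * 0.1)
print(wd.controller(now=0.55))  # limp_mode

# Missed heartbeats alone also trigger limp mode.
quiet = Watchdog()
quiet.report_frame(0.1, now=0.0)
print(quiet.controller(now=0.1))  # main
print(quiet.controller(now=1.0))  # limp_mode
```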

For AGI there appears to be a fairly easy and obvious way to have a limp mode. The model in control in a given situation can be the one that scored the best in the training environment. We can allocate enough silicon to have more than 1 full featured model hosted in the AI system. So we just switch control authority over to the one making the best predictions in the current situation.

We could even make this seamless and switch control authority multiple times a second—a sort of ‘mixture of experts’. Some of the models in the mixture will have simpler, more general policies that will be safer in a larger array of situations.

A mixture of experts system could easily be made self-improving, where models are being upgraded by automated processes all the time. The backend (which decides who gets control authority, provides the training and evaluation framework to decide if a model is better or not, etc.) does not get automatically upgraded, of course.
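The control-authority switch can likewise be sketched. In this hypothetical Python illustration (the model names, window length, and error values are our assumptions, not part of the comment), a fixed arbiter keeps a sliding window of each model's recent prediction errors and grants authority to the model with the lowest mean error. The arbiter is plain code outside the set of models being upgraded, in line with the point that the backend is not automatically upgraded.

```python
from collections import deque
from statistics import fmean

class Arbiter:
    """Hypothetical fixed arbiter: grants control to the model with the lowest recent prediction error."""

    def __init__(self, model_names: list[str], window: int = 10) -> None:
        # One bounded error history per model; old errors fall out of the window.
        self.errors = {name: deque(maxlen=window) for name in model_names}

    def record(self, name: str, prediction_error: float) -> None:
        self.errors[name].append(prediction_error)

    def in_control(self) -> str:
        # Models with no history count as infinitely bad; ties go to the first listed model.
        def recent_error(name: str) -> float:
            history = self.errors[name]
            return fmean(history) if history else float("inf")
        return min(self.errors, key=recent_error)

arbiter = Arbiter(["full_featured", "simple_general"], window=3)
# In familiar conditions, the full-featured model predicts better...
for _ in range(3):
    arbiter.record("full_featured", 0.1)
    arbiter.record("simple_general", 0.3)
print(arbiter.in_control())  # full_featured
# ...but in a strange environment its errors spike, and authority shifts.
for _ in range(3):
    arbiter.record("full_featured", 0.9)
    arbiter.record("simple_general", 0.4)
print(arbiter.in_control())  # simple_general
```

One property the sketch makes visible: the switch reacts only after errors have already been observed, so the window length trades responsiveness against false alarms.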

*You could likely formally show this: intelligence is simply modeling the future probability distribution contingent on your actions and taking the action that results in the most favorable distribution. In a new and strange environment, your model will fail.

Only if we can somehow get it to care as much about alignment as we do.