Cross-posted from the AI Impacts blog

Summary

In this post I outline apparent regularities in how major new technological capabilities and methods come about. I have not rigorously checked to see how broadly they hold, but it seems likely to me that they generalize enough to be useful. For each pattern, I give examples, then some relatively speculative explanations for why they happen. I finish the post with an outline of how AI progress will look if it sticks to these patterns. The patterns are:

The first version of a new technology is almost always of very low practical value

After the first version comes out, things improve very quickly

Many big, famous, impressive, and important advances are preceded by lesser-known, but still notable advances. These lesser-known advances often follow a long period of stagnation

Major advances tend to emerge while relevant experts are still in broad disagreement about which things are likely to work

Introduction

While investigating major technological advancements, I have noticed patterns among the technologies that have been brought to our attention at AI Impacts. I have not rigorously investigated these patterns to see if they generalize well, but I think it is worthwhile to write about them for a few reasons. First, I hope that others can share examples, counterexamples, or intuitions that will help make it clearer whether these patterns are “real” and whether they generalize. Second, supposing they do generalize, these patterns might help us develop a view of the default way in which major technological advancements happen. AI is unlikely to conform to this default, but it should help inform our priors and serve as a reference for how AI differs from other technologies. Finally, I think there is value in discussing and creating common knowledge around which aspects of past technological progress are most informative and decision-relevant to mitigating AI risk.

What I mean by ‘major technological advancements’

Throughout this post I am referring to a somewhat narrow class of advances in technological progress. I won’t try to rigorously define the boundaries for classification, but the kinds of technologies I’m writing about are associated with important new capabilities or clear turning points in how civilization solves problems. This includes, among many others, flight, the telegraph, nuclear weapons, the laser, penicillin, and the transistor. It does not include achievements that are not primarily driven by improvements in our technological capabilities. For example, many of the structure height or ship size advances included in our discontinuities investigation seem to have occurred mainly because someone had the means and desire to build a large ship or structure and the materials and methods were available, not because of a substantial change in known methods or available materials. It also does not include technologies granting impressive new capabilities that have not yet been useful outside of scientific research, such as gravitational wave interferometers.

I have tried to be consistent with terminology, but I have probably been a bit sloppy in places. I use ‘advancement’ to mean any discrete event in which a new capability is demonstrated or a device is created that demonstrates a new principle of operation. In some places, I use ‘achievement’ to mean things like flying across the ocean for the first time, and ‘new method’ to mean things like using nuclear reactions to release energy instead of chemical reactions.

Things to keep in mind while reading this:

The primary purpose of this post is to present claims, not to justify them. I give examples and reasons why we might expect the pattern to exist, but my goal is not to make the strongest case that the patterns are real.

I make these claims based on my overall impression of how things go, having spent part of the past few years doing research related to technological progress. I have done some searching for counterexamples, but I have not made a serious effort to analyze them statistically.

The proposed explanations for why the patterns exist (if they do indeed exist) are largely speculative, though they are partially based on well-accepted phenomena like experience curves.

There are some obvious sources of bias in the sample of technologies I’ve looked at. They’re mostly things that started out as candidates for discontinuities, which were crowdsourced and then examined in a way that strongly favored giving attention to advancements with clear metrics for progress.

I’m not sure how to state my credence in these claims precisely, but I estimate roughly 50% that at least one is mostly wrong. My best guess is that I will update on new information by narrowing the relevant reference class of advancements.

Although I did notice these patterns at least somewhat on my own, I do not claim these are original to me. I have seen related things in a variety of places, particularly the book Patterns of Technological Innovation by Devendra Sahal.

The patterns

The first version of a new device or capability is almost always terrible

The first transatlantic flight was a success, but just barely.

Most of the time when a major milestone is crossed, it is done in a minimal way that is so bad it has little or no practical value. Examples include:

The first ship to cross the Atlantic using steam power was slower than a fast ship with sails.

The first transatlantic telegraph cable took an entire day to send a message the length of a couple of tweets, would stop working for long periods of time, and failed completely after three weeks.

The first flight across the Atlantic took the shortest reasonable path and crash-landed in Ireland.

The first apparatus for purifying penicillin did not produce enough to save the first patient.

The first laser did not produce a bright enough spot to photograph for publication.

Although I can’t say I predicted this would be the case before learning about it, in retrospect I do not think it is surprising. As we work toward an invention or achievement, we improve things along a limited number of axes with each design modification, oftentimes at the expense of other axes. In the case of improving an existing technology until it is fit for some new task, this means optimizing heavily on whichever axes are most important for accomplishing that task. For example, to modify a World War I bomber to cross the ocean, you need to optimize heavily for range, even if it requires giving up other features of practical value, like the ability to carry ordnance. Increasing range without making such tradeoffs makes the problem much harder. Not only does this make it less likely you will beat competitors to the achievement, it also means you need to do design work in a way that is less iterative and requires more inference.

This also applies to building a device that demonstrates a new principle of operation, like the laser or transistor. The easiest path to making the device work at all will, by default, ignore many unnecessary practical considerations. Skipping straight past the dinky minimal version to the practical version is difficult, not only because designing something is harder with more constraints, but because we can learn a lot from the dinky minimal version on our way to the more practical version. This appears related to why Theodore Maiman won the race to build a laser. His competitors were pursuing designs that had clearer practical value but were substantially more difficult to get right.

The clearest example I’m aware of for a technology that does not fit this pattern is the first nuclear weapon. The need to create a weapon that was useful in the real world quickly and in secret using extremely scarce materials drove the scientists and engineers of the Manhattan Project to solve many practical problems before building and testing a device that demonstrated the basic principles. Absent these constraints, it would likely have been easier to build at least one test device along the way, which would most likely have been useless as a weapon.

Progress often happens more quickly following a major advancement

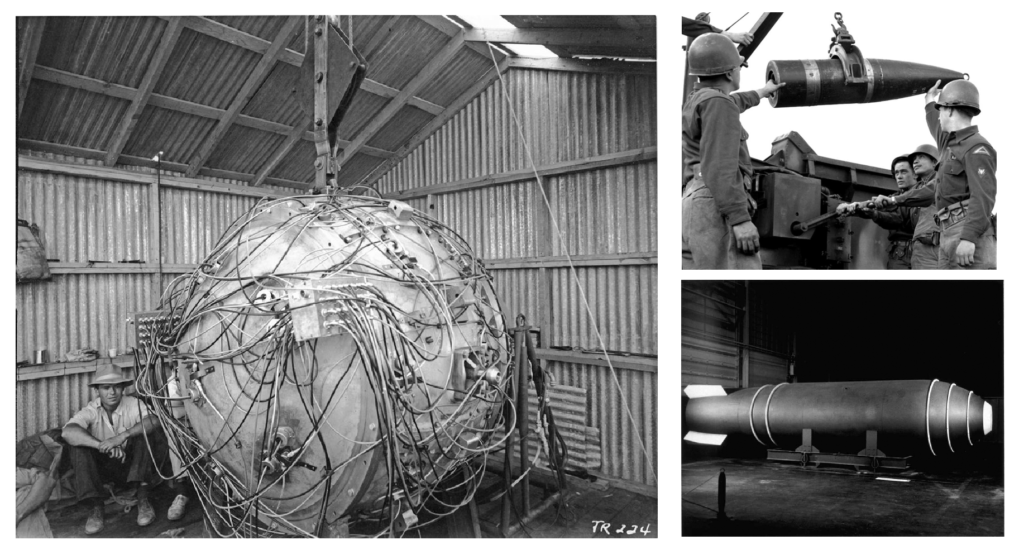

In less than ten years, nuclear weapons advanced from large devices with a yield of 25 kilotons (left) to artillery shells with a yield of 15 kilotons (top right) and large 25 megaton devices (lower right).

New technologies may start out terrible, but they tend to improve quickly. This isn’t just a matter of the same fractional rate of improvement per year being applied to a higher level of performance; the doubling time for performance itself decreases. Examples:

Average speed to cross the Atlantic in wind-powered ships doubled over roughly 300 years, after which crossing speed for steam-powered ships doubled in about 50 years, and crossing speed for aircraft doubled twice in less than 40 years.

Telecommunications performance, measured as the product of bandwidth and distance, doubled every 6 years before the introduction of fiber optics and doubled every 2 years after.[1]

The energy released per mass of conventional explosive increased by 20% during the 100 years leading up to the first nuclear weapon in 1945, and by 1960 there were devices with 1000x the energy density of the first nuclear weapon.

The highest intensity available from artificial light sources had an average doubling time of about 10 years from 1800 to 1945. Following the invention of the laser in 1960, the doubling time averaged just six months for over 15 years. Lasers also found practical applications very quickly, with successful laser eye surgery within 18 months and laser rangefinders a few years after that.

The rate of progress in carrying a military payload across the Atlantic increased each time the method changed
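To compare the doubling times quoted above on a common scale, here is a small Python sketch (my own illustration, not part of the AI Impacts analysis) that converts a doubling time into the equivalent constant annual growth rate, and converts a total improvement factor over some number of years into a doubling time. It assumes smooth exponential growth within each era, which the real data only roughly follow. The inputs are the figures from the examples above; “doubled twice in less than 40 years” for aircraft is approximated as a 20-year doubling time, and the 1000x nuclear figure is treated as spanning 1945 to 1960.

```python
import math


def annual_growth_rate(doubling_time_years: float) -> float:
    """Constant fractional growth per year implied by a given doubling time."""
    return 2 ** (1 / doubling_time_years) - 1


def doubling_time_from_factor(factor: float, years: float) -> float:
    """Doubling time implied by improving by `factor` over `years` (assumes smooth exponential growth)."""
    return years * math.log(2) / math.log(factor)


# Doubling times (in years) taken from the examples in the text.
# Aircraft: "doubled twice in less than 40 years" is approximated as 20 years.
doubling_times = {
    "Atlantic crossing, sail": 300,
    "Atlantic crossing, steam": 50,
    "Atlantic crossing, aircraft": 20,
    "Telecom before fiber optics": 6,
    "Telecom after fiber optics": 2,
    "Light intensity, 1800-1945": 10,
    "Light intensity, lasers": 0.5,
}

for name, years in doubling_times.items():
    print(f"{name:30s} {annual_growth_rate(years):8.1%} per year")

# Conventional explosives: +20% energy per mass over the century before 1945.
print(f"Explosives doubling time: {doubling_time_from_factor(1.2, 100):.0f} years")
# Nuclear weapons: roughly 1000x energy density between 1945 and 1960.
print(f"Nuclear doubling time: {doubling_time_from_factor(1000, 15):.1f} years")
```

Seen this way, the telecommunications shift from a 6-year to a 2-year doubling time is a jump from roughly 12% to roughly 41% improvement per year, and the move from conventional to nuclear explosives takes the doubling time from centuries to a year or two.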

This pattern is less robust than the others. An obvious counterexample is Moore’s Law and related trajectories for progress in computer hardware, which remain remarkably smooth across major changes in underlying technology. Still, even if major technological advances do not always accelerate progress, they seem to be one of the major causes of accelerated progress.

Short-term increases in the rate of progress may be explained by the wealth of possible improvements to a new technology. The first version will almost always neglect numerous improvements that are easy to find. Furthermore, once the technology is out in the world, it becomes much easier to learn how to improve it. This can be seen in experience curves for manufacturing, which typically show large reductions in manufacturing time and cost per unit produced when a new technology is introduced.

I’m not sure if this can explain long-running increases in the rate of progress, such as those for telecommunications and transatlantic travel by steamship. Maybe these technologies benefited from a large space of design improvements and sufficient, sustained growth in adoption to push down the experience curve quickly.[2] It may also be related to external factors, like the advent of steam power increasing general economic and technological progress enough to maintain the strong trend in advancements for crossing the ocean.
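Experience curves are commonly modeled with Wright’s law, under which each doubling of cumulative production multiplies unit cost by a fixed “progress ratio.” The sketch below assumes that functional form and an illustrative progress ratio of 0.8 (a 20% cost reduction per doubling); neither the ratio nor the costs come from the examples in this post. It shows why improvement per unit produced is so large right after a technology is introduced: going from the first unit to the second is a full doubling of cumulative output, while going from the 1,000th to the 1,001st is a tiny fraction of one.

```python
import math


def unit_cost(first_unit_cost: float, cumulative_units: int, progress_ratio: float = 0.8) -> float:
    """Wright's law: each doubling of cumulative output multiplies unit cost by progress_ratio.

    progress_ratio=0.8 is an illustrative assumption, not a measured value.
    """
    exponent = -math.log2(progress_ratio)  # about 0.32 for a ratio of 0.8
    return first_unit_cost * cumulative_units ** (-exponent)


def saving_from_next_unit(first_unit_cost: float, n: int) -> float:
    """Drop in unit cost from producing one more unit after the n-th."""
    return unit_cost(first_unit_cost, n) - unit_cost(first_unit_cost, n + 1)


# Hypothetical first-unit cost of 100 (arbitrary units).
for n in (1, 10, 100, 1000):
    print(f"after unit {n:5d}: next unit is {saving_from_next_unit(100.0, n):.3f} cheaper")
```

Under this model, the per-unit gains shrink quickly as cumulative production grows, so sustaining fast progress for decades would require rapidly growing adoption (to keep doubling cumulative output) or a steady supply of new design improvements, which is the distinction drawn in the paragraph above.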

Major advancements are usually preceded by rapid or accelerating progress

Searchlights during World War II used arc lamps, which were, as far as I know, the first artificial sources of light with greater intensity than concentrated sunlight. They were surpassed by explosively-driven flashes during the Manhattan Project.

Major new technological advances are often preceded by lesser-known, but substantial advancements. While we were investigating candidate technologies for the discontinuities project, I was often surprised to find that this preceding progress was rapid enough to eliminate or drastically reduce the calculated size of the candidate discontinuity. For example:

The invention of the laser in 1960 was preceded by other sources of intense light, including the argon flash in the early 1940s and various electrical discharge lamps by the 1930s. Progress was fairly slow from ~1800 to the 1930s, and more-or-less nonexistent for centuries before that.

Penicillin was discovered during a period of major improvements in public health and treatments for bacterial infections, including a drug that was such an improvement over existing treatments for infection that it was called the “magic bullet”.

The Haber Process for fixing nitrogen, often given substantial credit for improvements in farming output that enabled the huge population growth of the past century, was invented in 1920. It was preceded by the invention of two other processes, in 1910 and 1912, the latter of which was more than a factor of two improvement over the former.

The telegraph and flight both crossed the Atlantic for the first time during a roughly 100 year period of rapid progress in steamships, during which crossing times decreased by a factor of four.

Progress in light intensity for artificial sources exploded in the mid-1900s. Most progress is from improvements in lasers, but the Manhattan Project led to the development of explosively-driven flashes 15-20 years prior.

Progress in intense light sources since 1800

This pattern seems less robust than the previous one and it is, to me, less striking. The accelerated progress preceding a major advancement varies widely in terms of overall impressiveness, and there is not a clear cutoff for what should qualify as fitting the pattern. Still, when I tried to come up with a clear example of something that fails to match the pattern, I had some difficulty. Even nuclear weapons were preceded by at least one notable advance in conventional explosives a few years earlier, following what seems to have been decades of relatively sluggish progress.

An obvious and perhaps boring explanation for this is that progress on many metrics was accelerating during the roughly 200-300 year period when most of these took place. Technological progress exploded between 1700 and 2000, in a way that seems to have been accelerating pretty rapidly until around 1950. Every point on an aggressively accelerating performance trend follows a period of unprecedented progress. It is plausible to me that this fully explains the pattern, but I’m not entirely convinced.
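The claim that every point on an accelerating trend follows a period of unprecedented progress can be checked with a toy model. The sketch below assumes a hypothetical trend whose logarithm grows quadratically with time, so the proportional growth rate itself keeps rising; the function and its coefficient are made up for illustration and are not fitted to any historical data.

```python
import math


def performance(year: float, acceleration: float = 0.0005) -> float:
    """Hypothetical accelerating trend: log-performance grows quadratically with time."""
    return math.exp(acceleration * year ** 2)


def decade_growth_factor(start_year: float) -> float:
    """Factor by which performance improves over the ten years starting at start_year."""
    return performance(start_year + 10) / performance(start_year)


# Years measured from an arbitrary start, spanning a 300-year window.
factors = [decade_growth_factor(y) for y in range(0, 300, 10)]

# Each decade's improvement factor beats that of every decade before it.
print(all(later > earlier for earlier, later in zip(factors, factors[1:])))  # prints True
```

The acceleration matters: on a plain exponential trend, every decade has the same proportional improvement, so no decade would stand out as unprecedented in relative terms.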

An additional contributor may be that the drivers for research and innovation that result in major advances tend to cause other advancements along the way. For example, wars increase interest in explosives, so maybe it is not surprising that nuclear weapons were developed around the same time as some advances in conventional explosives. Another potential driver for such innovation is a disruption to incremental progress via existing methods that requires exploration of a broader solution space. For example, the invention of the argon flash would not have been necessary if it had been possible to improve the light output of arc lamps.

Major advancements are produced by uncertain scientific communities

Alexander Fleming (left foreground) receives his Nobel Prize for his discovery of penicillin, along with Howard Florey (back left) and Ernst Chain (back, second from left). Florey and Chain pursued penicillin as an injectable therapeutic, in spite of Fleming’s insistence that it was only suitable for treating surface infections.

Nearly every time I look into the details of the work that went into producing a major technological advancement, I find that the relevant experts had substantial disagreements, sometimes about fairly basic things, over which methods were likely to work, right up until the advancement took place. For example:

The team responsible for designing and operating the first transatlantic telegraph cable had disagreements about basic properties of signal propagation on very long cables, which eventually led to the cable’s failure.

The scientists of the Manhattan Project had widely varying estimates for the weapon’s yield, including scientists who expected the device to fail entirely.

Widespread skepticism, misunderstanding, and disagreement about penicillin’s chemical and therapeutic properties were largely responsible for a 10 year delay in its development.

Few researchers involved in the race to build a functioning laser expected the design that ultimately prevailed to be viable.

The default explanation for this seems pretty clear to me: empirical work is an important part of clearing up scientific uncertainty, so we should expect successful demonstrations of novel, impactful technologies to eliminate a lot of uncertainty. Still, it did not have to be the case that the eliminated uncertainty was substantial and related to basic facts about the functioning of the technology. For example, the teams competing for the first flight across the Atlantic did not seem to have anything major to disagree about, though they may have made different predictions about the success of various designs.

There is a distinction to be made here, between high levels of uncertainty on the one hand, and low levels of understanding on the other. The communities of researchers and engineers involved in these projects may have collectively assigned substantial credence to various mistaken ideas about how things work, but my impression is that they did at least agree on the basic outlines of the problems they were trying to solve. They mostly knew what they didn’t know. For example, most of the disagreement about penicillin was about the details of things like its solubility, stability, and therapeutic efficacy. There wasn’t a disagreement about, for example, whether the penicillium fungus was inhibiting bacterial growth by producing an antibacterial substance or by some other mechanism. I’m not sure how to operationalize this distinction more precisely.

Relevance to AI risk

As I explained at the beginning of this post, one of my goals is to develop a typical case for the development of major technological advancements, so we can inform our priors about AI progress and think about the ways in which it may or may not differ from past progress. I have my own views on the ways in which it is likely to differ, but I don’t want to get into them here. To that end, here is what I expect AI progress will look like if it fits the patterns of past progress.

Major new methods or capabilities for AI will be demonstrated in systems that are generally pretty poor.

Under the right conditions, such as a multi-billion-dollar effort by a state actor, the first version of an important new AI capability or method may be sufficiently advanced to be a major global risk or of very large strategic value.

An early system with poor practical performance is likely to be followed by very rapid progress toward a system that is more valuable or dangerous.

Progress leading up to an important new method or capability in AI is more likely to be accelerating than it is to be stagnant. Notable advances preceding a new capability may not be direct ancestors to it.

Although high-risk and transformative AI capabilities are likely to emerge in an environment of less uncertainty than today, the feasibility of such capabilities and which methods can produce them are likely to be contentious issues within the AI community right up until those capabilities are demonstrated.

Thanks to Katja Grace, Daniel Kokotajlo, Asya Bergal, and others for the mountains of data and analysis that most of this article is based on. Thanks to Jalex Stark, Eli Tyre, and Richard Ngo for their helpful comments. All views and mistakes are my own.

All charts in this post are original to AI Impacts. All photographs are in the public domain.

Those conclusions are relevant/important! I’m particularly struck by the one about expert disagreement since that was new to me. Another potential historical example to add to the list: when airplanes were invented, experts were still arguing about how birds were able to soar. “They watched buzzards glide from horizon to horizon without moving their wings, and guessed they must be sucking some mysterious essence of upness from the air.” (To be clear, some experts had the right answer, or at least a sketch of it.)

I can think of two intertwined objections to your conclusions-as-applied-to-AI risk:

One is “Your selection of historical techs is biased because it draws heavily from the discontinuities project. There are other important technologies that were developed non-discontinuously, and maybe AI will be one of them. For example computers. Or guns. Or cell phones. For these technologies, the lessons you draw do not apply.”

The other is “AI will in fact be more like big ships or buildings than like lasers or nukes. It’ll mostly be scaled-up versions of pre-existing stuff, possibly even stuff that already exists today.”

What do you think of those objections?

Yeah, I can’t really tell how much to conclude from the examples I give on this. The problem is that “uncertainty” is both hard to specify in a way that makes for good comparisons and hard to evaluate in retrospect.

I’m glad you brought up flight, because I think it may be a counterexample to my claim that uncertain communities have produced important advances, but confused communities have not. My impression is that everyone was pretty confused about flight in 1903, but I don’t know that much about it. There may also be a connection between level of confusion and ability to make the first version less terrible or improve on it quickly (for example, I think the Manhattan Project scientists were less confused than the scientists working on early lasers).

I think this objection is basically right, in that this sample (and arguably the entire reference class) relies heavily on discreteness in a way that may ultimately be irrelevant to TAI. Like, maybe there will be no clear “first version” of an AI that deeply and irrevocably changes the world. Still, it may be worth mentioning that some of the members of this reference class, such as penicillin and the Haber process, turned out not to be discontinuities (according to our narrow definition).

This doesn’t seem crazy. I think the lesson from historical building sizes is “Whoa, building height really didn’t track underlying tech at all”. If for some reason AI performance tracks limits of underlying technology very badly, we might expect the first version of a scary thing to conform badly to these patterns.

I would guess this is not what will happen, though, since most scary AI capabilities that we worry about are much more valuable than building height. Still, penicillin was a valuable technology that sat around for stupid reasons for a decade, so who knows.

Hard disagree. The most difficult part of building the first nuclear weapon was acquiring sufficient fissile materials. The design of nuclear bombs is constrained by physics (critical mass/critical density). Either of these two facts is sufficient to negate the usefulness of building a lower-yield test device first; it is neither practical nor desirable.

This issue is ridiculously over-determined, to the point where enormous research efforts had to go into lowering nuclear weapon yields to create something comparable with conventional bombs (and only barely so, you’re still talking about MOAB-level explosive yields here). The relatively low-yield nukes in the kiloton range that do exist today are very inefficient in terms of fissile material/energy released.

The less useful device would (probably) not have been (much) lower yield, it would have been much larger and heavier. For example, part of what led to the implosion device was the calculation for how long a gun-type plutonium weapon would need to be, which showed it would not fit on an aircraft. I agree that the scarcity of the materials is likely sufficient to limit the kind of iterated “let’s make sure we understand how this works in principle before we try to make something useful” process that normally goes into making new things (and that was part of “these constraints” you quote, though maybe I didn’t write it very clearly).

Edited to add:

Also, my phrasing “scarcity of materials” may be downplaying the extent to which scaling up uranium and plutonium production was part of the technological progress necessary for making a nuclear weapon. But I sometimes see people attribute the impressive and scary suddenness of deployable nuclear weapons entirely to the physics of energy release from a supercritical mass, and I think this is a mistake.

I disagree. I think it is a mistake to shoehorn “patterns” onto the history of technological progress where you deliberately pick the time window and the metric and ignore timescale in order to fit a narrative.

I don’t know what motivates people to try to dissolve historical discontinuities such as the advent of the nuclear bomb, but they did manage to find a metric along which early nuclear bombs were comparable to conventional bombs, namely explosive yield per dollar. But the real importance of the atom bomb is that it’s possible at all; that physics allows it; that it is about a million times more energy-dense than chemical explosives—not a hundred, not a trillion; a million. That is what determined the post-ww2 strategic landscape and the predicament humanity is currently in. That which is determined by the laws of nature and not the dynamics of human societies.

You can’t get that information out of drawing lines through the rate of improvement of explosive yields or whatever. You wouldn’t even have thought of drawing that particular line. This whole exercise is mistaking hindsight for wisdom. The only lesson to learn from history is to not learn lessons from it, especially when something as freaky and unprecedented as AGI is concerned.

This seems like a pretty wild claim to me, even as someone who agrees that AGI is freaky and unprecedented, possibly to the point that we should expect it to depart drastically from past experience.

My issue here is with “past experience”. We don’t have past experience of developing AGI. If this were about secular cycles in agricultural societies where boundary conditions remain the same over millennia, I’d be much more sympathetic. But lack of past experience is inherent to new technologies. Inferring future technological progress from the past necessitates shaky analogies. You can see any pattern you want and deduce any conclusion you want from history, by cherry-picking the technology, the time-window and the metric. You say “Wright Brothers proved experts are Luddites”, I say “Where is the flying car I’ve been promised?” There is no way to not cherry-pick. Zoom in far enough and any curve looks smooth, including a hard AI takeoff.

My point is don’t look at Wright Brothers, the Manhattan Project or Moore’s Law, look at streamlines, atomic mass spectra and the Landauer limit to infer where we’re headed. Even if the picture is incomplete it’s still more informative than vague analogies with the past.

What does streamlines refer to in this context? And what is the relevance of atomic mass spectra?

Looking at atomic mass spectra of uranium and its fission products (and hence the difference in their energy potential) in the early 20th century would have helped you predict just how big a deal nuclear weapons will be, in a way that looking at the rate of improvement of conventional explosives would not have.

Little Boy was a gun-type device with hardly any moving parts; it was the “larger and heavier” and inefficient and impractical prototype and it still absolutely blew every conventional bomb out of the water. Also, this is reference class tennis. If the rules allow for changing the metric in the middle of the debate, I shoot back with “the first telegraph cable improved transatlantic communication latency more than ten-million-fold the instant it was turned on; how’s that for a discontinuity”.

To be clear, I’m not saying “there’s this iron law about technology and you might think nuclear weapons disprove it, but they don’t because <reasons>” (I’m not claiming there are any laws or hard rules about anything at all). What I’m saying is that there’s a thing that usually happens, but it didn’t happen with nuclear weapons, and I think we can see why. Nuclear weapons absolutely do live in the relevant reference class, and I think the way their development happened should make us more worried about AGI.

It was, and this is a fair point. But Little Boy used like a billion dollars worth of HEU, which provided a very strong incentive not to approach the design process in the usual iterative way.

For contrast, the laser’s basic physics advantage over other light sources (in coherence length and intensity) is at least as big as the nuclear weapons advantage over conventional explosives, and the first useful laser was approximately as simple as Little Boy, but the first laser was still not very useful. My claim is that this is because the cost of iterating was very low and there was no need to make it useful on the first try.

We agree on the object level. On the meta level though, what’s so important about the very first laser? There is a lot of ambiguity in what counts as the starting point. For instance, when was the first steam engine invented? You could cite the Newcomen engine, or you could refer to various steam-powered contraptions from antiquity. The answer is going to differ by millennia, and along with it all the other parameters characterizing the development of this particular technology, but I don’t see what I’m supposed to learn from it.

Actually, I think the steam engine is the best example of Richard’s thesis, and I wish he had talked about it more. Before Newcomen, there were Denis Papin and Thomas Savery, who invented steam devices which were nearly useless and arguably not engines. Newcomen’s engine was the first commercially successful true engine, and even then it was arguably inferior to wind, water, or muscle power, only being practical in the very narrow case of pumping water out of coal mines. It wasn’t until the year 1800 (decades after the first engines) that they became useful enough for locomotion.

There was even an experience curve, noticed by Henry Adams, where each successive engine did more work and used less coal. Henry Adams’s curve was similar to Moore’s Law in many respects.

If the thesis is “There exists for every technological innovation in history some metric along which its performance is a smooth continuation of previous trends within some time window”, then yes, like I said, I agree at the object level, and my objection is at the meta level, namely that such an observation is worthless as there is basically no way to violate it. Disjunct over enough terms and the statement is bound to become true, but then it explains everything and therefore nothing.

Taking AGI as an example: Does slow takeoff fit the bill? Check. Scaling hypothesis implies AGI will become gradually more competent with more compute. Does hard takeoff fit the bill? Check. Recursive self-improvement implies there is a continuous chain of subagents bootstrapping from a seed AGI to superintelligence (even though it looks like Judgment Day from the outside).

If humanity survives a hard AI takeoff, I bet some future econblogger is going to draw a curve and say “Look! Each of these subagents is only a modest improvement over the last, there’s no discontinuity! Like every other technology, AI follows the same development pattern!”