What’s General-Purpose Search, And Why Might We Expect To See It In Trained ML Systems?

Benito has an interesting job. Here’s some of the stuff he’s had to do over the past couple years:

build a prototype of an office

resolve neighbor complaints at a party

find housing for 13 people with 2 days’ notice

figure out an invite list for 100+ people for an office

deal with people emailing a funder trying to get him defunded

set moderation policies for LessWrong

write public explanations of grantmaking decisions

organize weekly online Zoom events

ship books internationally by Christmas

moderate online debates

do April Fools’ Jokes on LessWrong

figure out which of 100s of applicants to do trial hires with

Quite a wide variety!

Benito illustrates an interesting feature of humans: you can give humans pretty arbitrary goals, pretty arbitrary jobs to do, pretty arbitrary problems to solve, and they’ll go figure out how to do it. It seems like humans have some sort of “general-purpose problem-solving” capability.

Now, there’s more than one part of general-purpose problem solving. There’s efficient information-gathering and model-building and updating. There’s searching for promising plans. There’s execution (or, in the organizational context, operations). A general-purpose problem-solver needs general-purpose versions of all those. But for this post, I want to focus on the “searching for promising plans” part.

First things first: what is this “search” thing, anyway?

Babble And Prune Is Not The Only Search Method

This whole post started out because I was talking about “search” (in the context of an inner alignment strategy) and it turned out that people had radically different pictures of what the word “search” means. In particular, it turned out that a bunch of people pessimistic about the strategy were picturing some variant of babble and prune: “babble” candidate solutions, then “prune” unpromising solutions, and hopefully iterate toward better and better solutions.

This is not really how humans search for promising plans. Consider, for example, a human planning a trip to the grocery store. Typical reasoning (mostly at the subconscious level) might involve steps like:

There’s a dozen different stores in different places, so I can probably find one nearby wherever I happen to be; I don’t need to worry about picking a location early in the planning process.

My calendar is tight, so I need to pick an open time. That restricts my options a lot, so I should worry about that early in the planning process.

<go look at calendar>

Once I’ve picked an open time in my calendar, I should pick a grocery store nearby whatever I’m doing before/after that time.

… Oh, but I also need to go home immediately after, to put any frozen things in the freezer. So I should pick a time when I’ll be going home after, probably toward the end of the day.

Notice that this sort of reasoning mostly does not involve babbling and pruning entire plans. The human is thinking mostly at the level of constraints (and associated heuristics) which rule out broad swaths of plan-space. The calendar is a taut constraint, location is a slack constraint, so (heuristic) first find a convenient time and then pick whichever store is closest to wherever I’ll be before/after. The reasoning only deals with a few abstract plan-features (i.e. time, place) and ignores lots of details (e.g. exact route, space in the car’s trunk); more detail can be filled out later, so long as we’ve planned the “important” parts. And rather than “iterate” by looking at many plans, the search process mostly “iterates” by considering subproblems (like e.g. finding an open calendar slot) or adding lower-level constraints to a higher-level plan (like e.g. needing to get frozen goods home quickly).

So humans’ general-purpose internal search mostly doesn’t look like babble and prune in the classic sense. It’s mostly operating on things like constraints and heuristics, abstraction, and subproblems. (There may be some babbling and pruning in there, but it’s not doing most of the algorithmic efficiency work, and we’re not directly babbling and pruning whole plans.)

In fact, even classic path search algorithms (like e.g. A* search) don’t really resemble pure babble and prune on closer inspection. When using a classic path-search algorithm to e.g. find a route from LA to Seattle, we don’t actually babble lots of possible LA-Seattle routes and evaluate them. Rather, we come up with solutions to lots of subproblems: routes between LA and various possible intermediate points (like San Francisco or Sacramento or Portland). And in the case of A* search, we use heuristics (generated by constraint relaxation) to decide which subproblems to pay attention to.

So what is search, if not (as Ivan Vendrov recently put it) “enumerating possible actions and evaluating their consequences”? Well, I’d say that a “general-purpose search” process is something which:

Takes in a problem or goal specification (from a fairly broad range of possible problems/goals)

… and returns a plan which solves the problem or scores well on the goal

The “evaluation of consequences” thing is relevant, but it’s not really about the internal operations of the search process. Rather, “evaluation of consequences” is the defining feature of whether a search process is doing its job correctly: to tell whether the search process is working, use the problem/goal specification to evaluate the consequences of the plan generated, and see whether the plan solves the problem or scores well on the goal.
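As a minimal sketch of that distinction, the toy below keeps the search process and the check on its output completely separate. Everything in it is a hypothetical illustration rather than anything from the post: the change-making problem, the names `ChangeProblem`, `greedy_search` and `search_is_working`, and the coin values. The point is only that `search_is_working` treats the search as a black box and judges it by evaluating the plans it returns against the problem specification.

```python
from typing import Callable, Iterable, List

Plan = List[int]  # toy plan: a list of coin values to hand over


class ChangeProblem:
    """Toy problem specification: hand over coins worth exactly `amount`."""

    def __init__(self, amount: int) -> None:
        self.amount = amount

    def solved_by(self, plan: Plan) -> bool:
        # Evaluate the consequences of the plan against the specification.
        return sum(plan) == self.amount


def greedy_search(problem: ChangeProblem) -> Plan:
    """One possible search process; its internals never enumerate whole plans."""
    remaining, plan = problem.amount, []
    for coin in (25, 10, 5, 1):  # hypothetical coin values
        while remaining >= coin:
            plan.append(coin)
            remaining -= coin
    return plan


def search_is_working(
    search: Callable[[ChangeProblem], Plan], problems: Iterable[ChangeProblem]
) -> bool:
    # The check only looks at outputs, not at how the search produced them.
    return all(problem.solved_by(search(problem)) for problem in problems)
```

Swapping `greedy_search` for any other procedure leaves `search_is_working` unchanged, which is the sense in which evaluation defines success without describing the search's internal operations.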

Note that “general-purpose search” still does not include all the pieces of general-purpose problem solving; there’s still things like gathering information, building and updating models, execution of plans, or recognizing when plans need to be updated. But it does include the planning part.

Also note that a general-purpose search process need not solve all possible problems, or even all compactly-specifiable problems; that would be NP-Hard (almost by definition). It does need to handle a broad range of problems, ideally in some realistic environment.

Retargetability and Recursive Structure of Search

One key feature of a general-purpose search process is that it’s retargetable: because it can take in any problem or goal specification from a fairly wide class, we can retarget it by passing in a different problem/goal. For example, a path-finding algorithm (like A*) can take any start and end point in a graph.

Retargetability plays well with the recursive structure of search. When finding a route from LA to Seattle, for instance, we look for routes from LA to Sacramento or San Francisco. Those subproblems are themselves search problems, and can themselves be solved with the same path-finding method: just pass LA and Sacramento as the start and end points, rather than LA and Seattle. More generally, with a retargetable search process, we can recursively call the same general-purpose search process with different inputs in order to handle subproblems.
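Here is a small sketch of that recursion, assuming a hypothetical one-way road graph with made-up costs (the `ROADS` table and the `find_route` name are illustrative, not from the post). To route from LA to Seattle, the function calls itself with the same start and a different end point, exactly the "LA to Sacramento" style of subproblem described above.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical northbound-only road graph; the costs are made up for illustration.
ROADS: Dict[str, Dict[str, int]] = {
    "LA": {"San Francisco": 6, "Sacramento": 6},
    "San Francisco": {"Sacramento": 2, "Portland": 10},
    "Sacramento": {"Portland": 9},
    "Portland": {"Seattle": 3},
    "Seattle": {},
}


def find_route(start: str, goal: str) -> Optional[Tuple[int, List[str]]]:
    """Return (cost, route) for the cheapest route, or None if there is none.

    Terminates because the toy graph has no cycles.
    """
    if start == goal:
        return 0, [start]
    best: Optional[Tuple[int, List[str]]] = None
    for waypoint, legs in ROADS.items():
        if goal not in legs:
            continue  # this waypoint has no direct road to the goal
        # Same search process, retargeted at the subproblem start -> waypoint.
        sub = find_route(start, waypoint)
        if sub is None:
            continue
        cost, route = sub
        total = cost + legs[goal]
        if best is None or total < best[0]:
            best = (total, route + [goal])
    return best
```

Calling `find_route("San Francisco", "Portland")` reuses the identical code for a different problem; nothing about the function is specific to LA or Seattle.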

Later on, when we talk about why one might expect general-purpose search to eventually show up in trained ML systems, that sort of recursion will be one big piece.

Heuristics and Generality

In practice, in order to search efficiently, we tend to need some kind of heuristics. In physical-world pathfinding, for instance, a useful heuristic is to try to reduce Euclidean distance to the goal. However, that heuristic isn’t useful for all problems; Euclidean distance isn’t very useful as an heuristic for e.g. moderating online debates.

If we need heuristics for efficient search, and heuristics are specialized, how is general-purpose problem-solving a thing? Do we need to learn a whole new set of heuristics every time our task changes at all? Obviously not, in practice; most of the things on Ben’s list were things he only did once, but he nonetheless did a decent job (I know this because I’m one of his users). But then how do we achieve generality while relying on heuristics? Two main paths:

There exist general-purpose methods for generating heuristics

Heuristics tend to depend on the environment, but not on the exact objective

General-Purpose Generators Of Heuristics Are A Thing

Probably the best-understood heuristic-generator is problem relaxation.

Here’s how it works. Let’s say I’m planning a trip to the grocery store. My planning problem has a whole bunch of constraints: I need to have enough time, I need to be at the right place, can’t let the frozen goods melt, the car must have enough gas, etc, etc. As a first pass, I ignore (a.k.a. “relax”) most of those constraints, and only pay attention to a few, like e.g. having enough time. That narrows down my solution space a lot: there’s only a few spaces in my calendar with enough time. I pick a time slot. Then, I start to add other constraints back in.

The “relaxed” problem, in which I only worry about the time constraint, acts as an heuristic for the full problem. It helps me narrow down the search space early on, and focus on plans which at least satisfy that one constraint. And because it only involves one constraint, it’s relatively easy to solve the relaxed problem.

More generally, the steps of problem relaxation are roughly:

Start with a problem with a bunch of constraints

Relax (i.e. ignore) a bunch of the constraints

Solve the relaxed problem

Use the relaxed problem-solution as an heuristic for the full problem

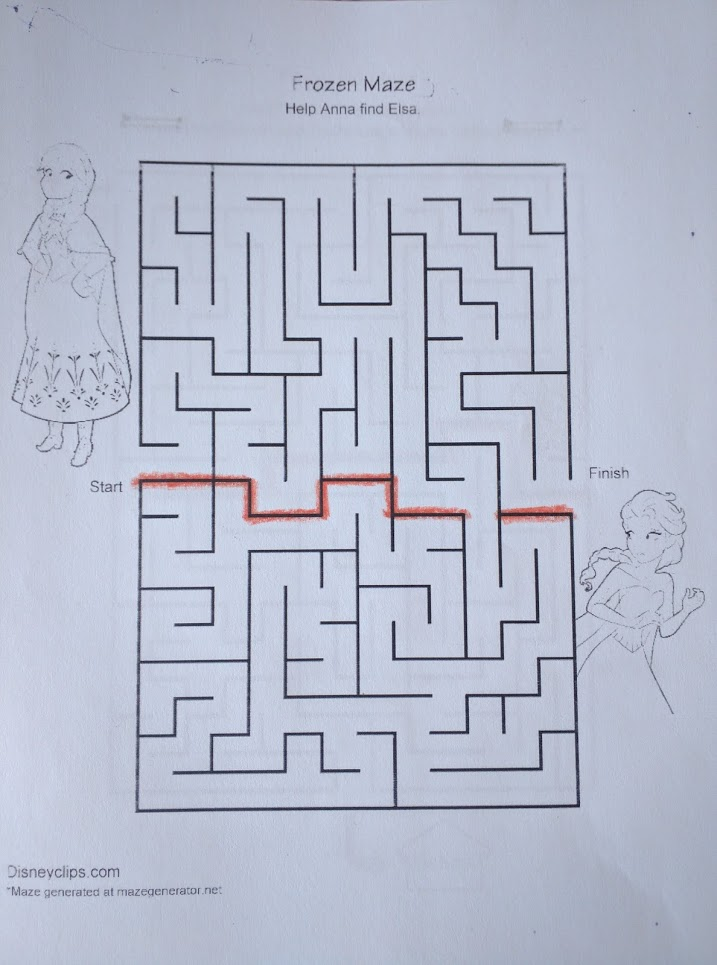
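The four steps above, applied to the grocery trip, might look something like the sketch below. The calendar entries, store names, and the `plan_grocery_trip` function are all hypothetical stand-ins; the only thing the sketch is meant to show is the ordering: solve the relaxed (calendar-only) problem first, then add the ignored constraints back in while searching only within the relaxed solutions.

```python
from typing import Dict, Optional, Tuple

# Hypothetical calendar: hour -> facts about that slot (all values made up).
CALENDAR: Dict[int, Dict[str, object]] = {
    10: {"free": False, "home_after": False, "area": "downtown"},
    13: {"free": True, "home_after": False, "area": "downtown"},
    17: {"free": True, "home_after": True, "area": "uptown"},
    20: {"free": False, "home_after": True, "area": "uptown"},
}
# Hypothetical stores and the area each one is in.
STORES: Dict[str, str] = {"Store A": "downtown", "Store B": "uptown"}


def plan_grocery_trip(
    calendar: Dict[int, Dict[str, object]], stores: Dict[str, str]
) -> Optional[Tuple[int, str]]:
    # Steps 2-3: relax everything except the taut calendar constraint and
    # solve that relaxed problem, which is just a scan for open slots.
    open_slots = [hour for hour, slot in calendar.items() if slot["free"]]

    # Step 4: use the relaxed solutions to steer the full search, adding the
    # ignored constraints back in one at a time.
    for hour in open_slots:
        if not calendar[hour]["home_after"]:
            continue  # frozen goods need to go straight home afterwards
        for store, area in stores.items():  # slack constraint, handled last
            if area == calendar[hour]["area"]:
                return hour, store
    return None
```

Note that the store is never considered until a time slot has survived the tighter constraints, so most (time, store) combinations are ruled out without ever being written down as plans.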

Another example, this time from path search: if we’re solving a maze, we can relax the problem by ignoring all the walls. Then the shortest path is easy: it’s a straight line from the start to the end, and its length is Euclidean distance. We can then use Euclidean distance as an heuristic while exploring the maze: preferentially explore in directions which have shorter Euclidean distance to the end. In other words, preferentially explore directions for which the relaxed problem (i.e. ignoring all the walls) has the shortest solutions.
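One standard way to "preferentially explore" using that relaxed-problem distance is A* search, mentioned earlier in the post. The sketch below is a minimal grid version under assumptions of my own choosing: the maze is a list of strings with `#` for walls, moves are up/down/left/right with cost 1, and the names `a_star` and `euclidean` are illustrative. With unit-cost moves, straight-line distance never overestimates the true remaining path length, so the path returned is a shortest one.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)


def euclidean(a: Cell, b: Cell) -> float:
    """Length of the shortest path in the relaxed problem (no walls)."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def a_star(maze: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a shortest path of cells from start to goal, or None."""
    rows, cols = len(maze), len(maze[0])
    # Priority = steps taken so far + relaxed-problem estimate of steps left.
    frontier = [(euclidean(start, goal), 0, start)]
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    cost_so_far: Dict[Cell, int] = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > cost_so_far[cell]:
            continue  # stale queue entry; a cheaper route was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or maze[nr][nc] == "#":
                continue  # off the grid or a wall
            new_cost = cost + 1
            if new_cost < cost_so_far.get(nxt, math.inf):
                cost_so_far[nxt] = new_cost
                came_from[nxt] = cell
                priority = new_cost + euclidean(nxt, goal)
                heapq.heappush(frontier, (priority, new_cost, nxt))
    return None
```

The same function works for any start and end cell in the maze, which is the retargetability discussed above: the heuristic is derived from the goal that is passed in rather than hardcoded.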

Problem relaxation probably isn’t the only heuristic generation method, but it’s relatively well-understood mathematically, and it’s an existence proof. It shows that general-purpose generators of heuristics are a thing. We should expect to see such heuristic-generators used inside of general-purpose search processes, in order to achieve generality and efficiency simultaneously.

Heuristics Tend To Depend On The Environment, But Not On The Objective

The previous section talked about two heuristics:

When planning a grocery trip, pick a time slot while ignoring everything else

When path-finding, prioritize shorter Euclidean distance to the end

Notice that both of these heuristics are very environment-dependent, but not very goal-dependent. If my time is very scarce, then picking a time slot first will be a good heuristic for planning all sorts of things in my day-to-day life, from meetings to vacations to workouts to a trip to the movies to some quiet reading. The heuristic does depend on “the environment”—i.e. it would be less useful for someone whose time is abundant—but it’s relatively goal-agnostic.

Similarly, I can use Euclidean distance as a heuristic for finding road-trip routes between a wide variety of start and end locations. There are environments in which it’s a terrible heuristic, but for road-trip planning it works decently for most start/end points.

Here’s a visual example which I love, an heuristic for path-finding in a maze from an old post:

Maze-specific, but it’s useful for a wide variety of start/end points.

The pattern: heuristics tend to be environment-dependent but relatively goal-agnostic.

Why would such a pattern apply?

One way to frame the answer: an environment typically includes a bunch of stable constraints, shared across problems in that environment. In our universe, the laws of physics are all constraints, human laws are all constraints, I can only move around so fast and carry so much, I only have so much time and money, my interfaces with other people/things only have certain knobs to turn, etc. And instrumental convergence means that it will very often be the same few constraints which are rate-limiting. So, insofar as we generate heuristics via constraint relaxation, we’ll get environment-specific but reasonably goal-agnostic heuristics.

Another way to frame the answer: natural abstraction. Most far-apart chunks of the world only interact via relatively low-dimensional summaries; the rest of their interaction is wiped out by noise. So, optimizing those low-dimensional summaries will be a common heuristic across a wide range of goals within the same environment.

Side Note: Cached Solutions Are Not Heuristics (But Are Another General-Purpose Search Trick)

Another general-purpose search trick which someone will probably bring up if I don’t mention it is caching solutions to common subproblems. I don’t think of this as an heuristic; it mostly doesn’t steer the search process, just speed it up. But it is a useful and common trick for improving efficiency. And we should expect it to speed up search over a wide variety of goals within the same environment, insofar as instrumental convergence applies in that environment. Instrumentally convergent subproblems come up over and over again for a wide variety of problems, so caching solutions to those subproblems is a general-purpose search accelerator.
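A small sketch of that trick, using Python's `functools.lru_cache` on a toy route-cost search. The road graph and its costs are made up for illustration, and `cheapest_cost` is a hypothetical name. The cache does not change which subproblems the search considers; it only avoids re-solving ones that recur, both within a single query and across queries with different goals.

```python
import math
from functools import lru_cache
from typing import Dict

# Hypothetical northbound-only road graph; the costs are made up for illustration.
ROADS: Dict[str, Dict[str, int]] = {
    "LA": {"San Francisco": 6, "Sacramento": 6},
    "San Francisco": {"Sacramento": 2, "Portland": 10},
    "Sacramento": {"Portland": 9},
    "Portland": {"Seattle": 3},
    "Seattle": {},
}


@lru_cache(maxsize=None)
def cheapest_cost(start: str, goal: str) -> float:
    """Cost of the cheapest route from start to goal (math.inf if none)."""
    if start == goal:
        return 0
    # Each recursive call is a subproblem; repeated ones are served from cache.
    options = [
        leg_cost + cheapest_cost(next_city, goal)
        for next_city, leg_cost in ROADS[start].items()
    ]
    return min(options, default=math.inf)
```

After a query such as `cheapest_cost("LA", "Seattle")`, `cheapest_cost.cache_info()` reports how many subproblem lookups were answered from the cache, and a later query like `cheapest_cost("Sacramento", "Seattle")` is answered without any new search.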

Revisiting The Risks From Learned Optimization Arguments

We’ve now talked about how general-purpose search Is A Thing. We’ve talked about how general-purpose heuristics (and other general-purpose search tricks, like caching) Are A Thing. And we’ve observed that these things show up in humans. But why do they show up in humans? And should we expect them to show up in ML, or in intelligent aliens, or in other evolved/trained/selected agenty systems?

Key Idea: Compression Is Favored By Default

In general, evolved/trained/selected systems favor more compact policies/models/heuristics/algorithms/etc. In ML, for instance, the fewer parameters needed to implement the policy, the more parameters are free to vary, and therefore the more parameter-space-volume the policy takes up and the more likely it is to be found. (This is also the main argument for why overparameterized ML systems are able to generalize at all.)
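To make the volume argument concrete, here is a toy calculation. The model is an illustrative assumption, not a claim about real networks: parameters are drawn uniformly from a box, and a policy counts as "implemented" whenever a fixed set of k parameters each land within a small tolerance of the values it needs, with all other parameters free.

```latex
% Toy model (illustrative assumption): \theta is uniform on [-1,1]^n.
% A policy is implemented whenever k "pinned" parameters each lie within
% \pm\epsilon of a required value (0 < \epsilon < 1, interval inside the box),
% and the remaining n-k parameters are free to vary.
\[
  \Pr[\text{policy}]
  \;=\; \frac{(2\epsilon)^{k}\, 2^{\,n-k}}{2^{\,n}}
  \;=\; \epsilon^{k},
  \qquad
  \frac{\Pr[\text{policy pinning } k_1 \text{ parameters}]}
       {\Pr[\text{policy pinning } k_2 \text{ parameters}]}
  \;=\; \epsilon^{-(k_2 - k_1)} .
\]
```

In this toy model, with a tolerance of 0.1, a policy that needs ten fewer pinned parameters occupies 10^10 times more parameter-space volume, regardless of n.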

The outer training loop doesn’t just select for high reward, it also implicitly selects for compactness. We expect it to find, not just policies which achieve high reward, but policies which are very compactly represented.

At the same time, assuming the system encounters a wide variety of problems in its training environment, it needs generality in order to perform well. But compactness means that, when possible, it will favor achieving that generality with a smaller number of more general pieces rather than a larger number of more specialized pieces.

So things like general-purpose heuristics, general-purpose heuristic generators, and general-purpose search are exactly the sort of things these systems should favor (assuming the architecture is expressive enough, and the environment varies enough).

That’s basically the argument for inner agents from Risks From Learned Optimization:

In some tasks, good performance requires a very complex policy. At the same time, base optimizers are generally biased in favor of selecting learned algorithms with lower complexity. Thus, all else being equal, the base optimizer will generally be incentivized to look for a highly compressed policy.

One way to find a compressed policy is to search for one that is able to use general features of the task structure to produce good behavior, rather than simply memorizing the correct output for each input. A mesa-optimizer is an example of such a policy. From the perspective of the base optimizer, a mesa-optimizer is a highly-compressed version of whatever policy it ends up implementing: instead of explicitly encoding the details of that policy in the learned algorithm, the base optimizer simply needs to encode how to search for such a policy. Furthermore, if a mesa-optimizer can determine the important features of its environment at runtime, it does not need to be given as much prior information as to what those important features are, and can thus be much simpler.

On top of all that, the recursive nature of search—the fact that recursively searching on subproblems is useful as a search technique—favors a retargetable general-purpose search process, as opposed to a hardcoded optimizer.

Takeaways

It seems like a lot of people have a picture of “search” as babble and prune. They correctly notice how inefficient babble and prune usually is, and conclude that it probably won’t be selected for in trained ML systems.

But there’s a more general notion of general-purpose search. It’s a process which takes in any problem or goal from a wide class, and returns a plan which solves the problem or achieves the goal. That’s the sort of search we expect to see show up in trained systems. The key property is that it’s retargetable: the search process can take in a wide variety of goals. That retargetability is selected for due to the recursive structure of search: recursively running search on subproblems is a widely-useful technique, and that technique can be encoded much more compactly with a retargetable search process on hand.

In order for that search to run efficiently, while still maintaining generality and compactness, it will probably need to either generate heuristics in a general way, or leverage goal-agnostic (but possibly environment-specific) heuristics. Both of those are plausible options: problem relaxation is an existence proof of general-purpose generators of heuristics, and the shared constraints of an environment (plus natural abstraction and/or instrumental convergence) offer a source of goal-agnostic heuristics.

Some strategic upshots of all this:

Possibility of rapid general capability gain if/when a system groks general-purpose search, especially if many general-purpose heuristics are learned first

Retargeting the search process as an (inner) alignment strategy

Relatively high chance of agent-like internal structure, like e.g. a reusable general-purpose search module which takes in an explicit objective


An observation that I think is missing here is that this world is biased towards general-purpose search too. As in, it is frequently the case that agents operating in reality face the need to problem-solve in off-distribution circumstances; circumstances to which they could not have memorized correct responses (or even near-correct responses), because they’d never faced them. And if failure is fatal, that creates a pressure towards generality. Not simply a “bias” towards it; a direct pressure.

And we’re already doing something similar with ML models today, where we’re not repeating training examples.

A supercharged version of that pressure is when the agent is selected for the ability to thrive not only in off-distribution tasks in some environment, but in entire off-distribution environments, which I suspect is how human intelligence was incentivized.

You’re right, that was missing. Very good and important point.

Agreed that the existence of general-purpose heuristic-generators like relaxation is a strong argument for why we should expect to select for inner optimizers that look something like A*, contrary to my gradient descent doesn’t select for inner search post.

Recursive structure creates an even stronger bias toward things like A* but only in recurrent neural architectures (so notably not currently-popular transformer architectures, though it’s plausible that recurrent architectures will come back).

I maintain that the compression / compactness argument from “Risks from Learned Optimization” is wrong, at least in the current ML regime:

I believe the standard explanation is that overparametrized ML finds generalizing models because gradient descent with weight decay finds policies that have low L2 norm, not low description length / Kolmogorov complexity. See Neel’s recent interpretability post for an example of weight decay slowly selecting a generalizable algorithm over (non-generalizable) memorization over the course of training.

I don’t understand the parameter-space-volume argument, even after a long back-and-forth with Vladimir Nesov here. If it were true, wouldn’t we expect to be able to distill models like GPT-3 down to 10-100x fewer parameters? In practice we see maybe 2x distillation before dramatic performance losses, meaning most of those parameters really are essential to the learned policy.

Overall though this post updated me substantially towards expecting the emergence of inner A*-like algorithms, despite their computational overhead. Added it to the list of caveats in my post.

I have some math that hints that those may be equivalent-ish statements.

Why would we expect a 10x distillation factor? Half the directions of the basin being completely flat seems like a pretty big optimum to me.

Also, I’m not sure if you can always manage to align the free directions in parameter space with individual parameters, such that you can discard p parameters if you had p free directions.

Would love to see your math! If L2 norm and Kolmogorov provide roughly equivalent selection pressure that’s definitely a crux for me.

There should be a post with some of it out soon-ish. Short summary:

You can show that at least for overparametrised neural networks, the eigenvalues of the Hessian of the loss function at optima, which determine the basin size within some approximation radius, are basically given by something like the number of independent, orthogonal features the network has, and how “big” these features are.

The fewer independent, mutually orthogonal features the network has, and the smaller they are, the broader the optimum will be. Size and orthogonality are given by the Hilbert space scalar product for functions here.

That sure sounds an awful lot like a kind of complexity measure to me. Not sure it’s Kolmogorov exactly, but it does seem like something related.

And while I haven’t formalised it yet, I think there’s quite a lot to suggest that the less information you pass around in the network, the fewer independent features you’ll tend to have. E.g., if you have 20 independent bits of input information, and you only pass on 10 of them to the deeper layers of the network, you’ll be much more likely to get fewer unique features than if you’d passed them all on. Because you’re making the Hilbert space smaller.

So if you introduce a penalty on exchanging too much information between parts of the network, like, say, with L2 regularisation, you’d expect the optimiser to find solutions with fewer independent features (“description length”), and broader basins.

Empirically, introducing “connection costs” does seem to lead to broader basins in our group’s experiments, IIRC. Also, there’s a bunch of bio papers on how connection costs lead to modularity, and our own experiments support the idea that modularity means broader basins. I’m not sure I’ve seen it implemented with L2 regularisation as the connection cost specifically, but my guess would be that it’d do the same thing.

(Our hope is actually that these orthogonalised features might prove to be a better fundamental unit of DL theory and interpretability than neurons, but we haven’t gotten to testing that yet)

Terminology quibble, but this totally seems like a heuristic to me. “When faced with a problem that seems difficult to solve directly, first find the most closely related problem that seems easy to solve” seems like the overriding general heuristic generator, encompassing both problem relaxation and solution memorisation.

In one case the related problem is easier because it has fewer constraints, in the other it’s easier because you already know the answer, but it’s the same principle.

The difference (here) between “Heuristic” and “Cached-Solutions” seems to me analogous to the difference between lazy evaluation and memoization:

Lazy evaluation ~ Heuristic: aims to guide the evaluation/search by reducing its space.

Memoization ~ Cached Solutions: stores in memory the values/solutions already discovered to speed up the calculation.

Why not call this “general-purpose planning”? That seems to more directly describe what I think you’re describing—a goal specification comes in, a plan comes out. I think “search” has some inappropriate connotations to it, possibly evoking BFS/DFS/MCTS/A*/etc, whereas this planning process—as you point out—doesn’t have to look like “babble/prune.”

Although now that I’ve written this, “planning” imports similar unwanted connotations from the similarly titled AI subfield. Hm. I don’t feel like I’ve produced a good enough alternative, but I still feel there’s a terminological issue here. I’ll just leave this comment for now.

I do want to evoke BFS/DFS/MCTS/A*/etc here, because I want to make the point that those search algorithms themselves do not look like (what I believe to be most people’s conception of) babble and prune, and I expect the human search algorithm to differ from babble and prune in many similar ways to those algorithms. (Which makes sense: the way people come up with things like A*, after all, is to think about how a human would solve the problem better and then write an algorithm which does something more like a human.)

OK, then I once again feel confused about what this post is arguing as I remember it. (Don’t feel the need to explain it as a reply to this comment, I guess I’ll just reread if it becomes relevant later.)

Modern ML is increasingly general search over circuit (program) space. Circuit space obviously includes everything, including general search algorithms, which are also often obviously useful. So it is nearly tautological that we should expect general search in (sufficiently) trained ML systems.

There is some preliminary evidence in favour of the view that transformers approximate a kind of Bayesian inference in-context (by which I mean something like, they look at in-context examples and process them to represent in their activations something like a Bayesian posterior for some “inner” model based on those examples as samples, and then predict using the predictive distribution for that Bayesian posterior). I’ll call the hypothesis that this is taking place “virtual Bayesianism”.

I’m not saying you should necessarily believe that for current-generation transformers. But fwiw I put some probability on it, and if I had to predict one significant capability advance in the next generation of LLMs, it would be that virtual Bayesianism becomes much stronger (in-context learning being a kind of primitive precursor).

Re: the points in your strategic upshots. Given the above, the following question seems quite important to me: putting aside transformers or neural networks, and just working in some abstract context where we consider Bayesian inference on a data distribution that includes sequences of various lengths (i.e. the kind of distribution that elicits in-context learning), is there a general principle of Bayesian statistics according to which general-purpose search algorithms tend to dominate the Bayesian posterior?

Summary

This article thinks about what “general-purpose search” is and why to expect it in advanced machine learning systems.

In general, we expect gradient descent to find “simple solutions” with lots of varying parameters (since they occupy a larger part of solution space) and “general solutions” that are helpful broadly (since we will put the system in diverse environments). Thus, we do expect search processes to emerge.

However, babble and prune will likely not be the resulting process: it’s not compute and memory efficient enough. Instead, John imagines a search process that starts with a constraint/problem and iteratively produces broad strokes of solutions that form new constraints of subproblems, until the problem is solved. If this is roughly correct, it will also mean that the search process is retargetable.

This leaves open how the broad strokes of solutions to constraints are found. John expects this to require heuristics that will often output either a solution itself or a different problem whose solution is easier to generate, instead of babbling and then pruning. Some heuristics:

Relaxed problem: only consider the time constraint, or only consider immediately reducing Euclidean distance.

The specific relaxed problems are “heuristics”. The procedure to relax the problem is a “heuristic generator”.

One could consider this a “meta-heuristic”. However, the type-signature of “heuristic” is “problem in, solution out” or “problem in, other problem out”, and the type-signature of the meta heuristic is “problem in, heuristic out”, so these are different.
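The type signatures above can be written down directly. This is only a sketch: `Problem`, `Solution`, and the `relax` generator are hypothetical names I am introducing for illustration, and "keep a single constraint" is just one assumed way a problem might be relaxed.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass(frozen=True)
class Problem:
    goal: str
    constraints: frozenset


@dataclass(frozen=True)
class Solution:
    plan: tuple


# Heuristic: problem in, solution out OR problem in, other problem out.
Heuristic = Callable[[Problem], Union[Solution, Problem]]
# Heuristic generator: problem in, heuristic out.
HeuristicGenerator = Callable[[Problem], Heuristic]


def relax(problem: Problem) -> Heuristic:
    """Hypothetical heuristic generator: keep one constraint, drop the rest."""
    kept = sorted(problem.constraints)[0]  # arbitrary but deterministic choice

    def heuristic(p: Problem) -> Problem:
        # Problem in, easier problem out.
        return Problem(goal=p.goal, constraints=frozenset({kept}))

    return heuristic


trip = Problem("reach city X", frozenset({"time", "money", "distance"}))
h = relax(trip)     # a heuristic, produced from the problem
relaxed = h(trip)   # an easier problem, produced by the heuristic
```

Here `relax` has the generator signature and its return value has the heuristic signature, which is why the two are not the same kind of object.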

Finally, John gestures at the observation that heuristics seem to be environment-dependent but not goal-dependent, at least for similar types of goals (e.g. for the type “reach X city” or “do X thing this week”). This makes them more generalizable.

Other Thoughts

Don’t chess players sometimes do babble and prune? They might look at the board and literally “babble” possible moves, evaluate them, and search further in the best of those.

An alternative to that process would be to think “I want to capture the queen, how do I do this?” and then to explicitly think about moves that achieve that “constraint”. The original constraint/problem is just “win this game”, of which “capture the queen” is already a subconstraint/problem.

Still, I do remember Magnus Carlsen saying in an interview that he actually does do relatively exhaustive search in some chess situations. So it seems to be at least one of the many search processes he applies. But I also remember him saying that this is effortful.

John’s description leaves open the process by which the solutions to constraints are found. Doesn’t that process usually involve babbling?

In the case of finding stores, we may say “there is no babbling, the computer program just shows me the open stores that satisfy the constraint.”

But doesn’t the computer internally need to babble? I.e., to go through a database of all the options to find the ones satisfying the constraint!

In general, I would say babbling is required unless a solution to the constraint can already be retrieved in a somewhat cached form.
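A small sketch of the distinction in the store example, under the assumption of a toy in-memory "database" (the store data and function names are made up for illustration). The first function enumerates and tests every candidate; the second answers from a precomputed index, i.e. a cached form.

```python
from collections import defaultdict

# Hypothetical data: each store is open during a range of hours.
stores = [
    {"name": "A", "open_hours": range(8, 18)},
    {"name": "B", "open_hours": range(10, 22)},
    {"name": "C", "open_hours": range(0, 24)},
]


def open_by_scan(hour):
    """Babble-like: go through every store and test the constraint on each."""
    return [s["name"] for s in stores if hour in s["open_hours"]]


# Cached form: build an hour -> open stores index once, up front.
index = defaultdict(list)
for s in stores:
    for h in s["open_hours"]:
        index[h].append(s["name"])


def open_by_lookup(hour):
    """Retrieve the answer directly; no per-query enumeration of stores."""
    return index.get(hour, [])
```

Both return the same answers; the difference is whether the enumeration happens at query time or was paid for in advance when the index was built.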

I’m not sure if “relax the problem” is a clear instruction. I feel like you already need to have something like a “natural abstraction of problems” in your head in order to be able to do that. This doesn’t really contradict what John is saying, but it highlights that there is some hidden complexity in this.

When I search for terms that I find on here, like “finite factored set” or “babble and prune” and many others, I can’t find them anywhere except on here or other EA platforms. It always makes me wonder “Are we meming?” It seems like the meme culture is deep here. I think that is also what makes it hard for new users to get accustomed to the site. They have to read up on so much prerequisite material in order to even participate in a conversation.

The important part here is that babble scales extremely poorly with dimensionality of the problem (or, more precisely, the fraction of problem-space which is filled with solutions). So babble is fine once we’ve reduced to a low-dimensional subproblem; most of the algorithmic work is in reducing the big problem to a bunch of low-dimensional subproblems.
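A back-of-the-envelope sketch of that scaling, under a simplifying assumption that is mine rather than the post's: solutions occupy the same fraction of each dimension independently, so the solution region fills `fraction ** dims` of the space and uniform random guessing is a geometric trial.

```python
def expected_babbles(per_dim_fraction: float, dims: int) -> float:
    """Expected number of uniform random guesses until one lands in the solution region."""
    hit_prob = per_dim_fraction ** dims  # fraction of problem-space filled with solutions
    return 1.0 / hit_prob                # mean of a geometric distribution


# One 20-dimensional problem, half of each dimension acceptable.
whole = expected_babbles(0.5, 20)

# The same problem split into ten independent 2-dimensional subproblems.
split = 10 * expected_babbles(0.5, 2)
```

With these toy numbers, babbling on the whole problem needs about a million guesses on average, while babbling on the ten small subproblems needs about forty in total. The hard part, as the comment says, is finding the decomposition.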

I’m quite in agreement with this, and surprised that there are people imagining only babble and prune when general search for problem solving is being discussed. I’d like to add that I think a useful approach for evaluating the generality of a problem-solving agent would be to test for heuristic generation and use. I would expect an agent which can generate new heuristics in a targeted way to be far better at generalizing to novel tasks than one which has managed to discover and reuse just a few heuristics over and over. Maybe it’s worth someone putting some thought into what a test set that could distinguish between these two cases would look like.

This is a fantastic point well articulated, reminiscent of some conversations we had a few months ago at Lightcone.

I think we probably agree on what things there actually are, but I think this particular definition of ‘general purpose search’ is slightly too general to be a most useful pointer/carving.

This is because it seems to include things like matrix inversion for least-squares solutions (unless ‘from a fairly broad range of possible problems/goals’ is taken to preclude this meaningfully?), which I deem importantly different. I’d class matrix inversion least-squares as a (powerful) heuristic[1] (a ‘proposal’ in my deliberation terminology), but not as (proper) search itself.

I think it remains useful to distinguish algorithms which evaluate/promote or otherwise weigh proposals[2]. This is what I’ve started calling ‘proper deliberation’ and it’s generally what I mean when I talk about search.

In the case of applying matrix inversion to ordinary least squares, for me, the ‘general deliberation’ consists of something like

1. noticing the relevant features of the problem (this is ‘abstraction/pattern-matching magic’)

2. cognitively retrieving the OLS abstraction and matrix-inversion as a cached heuristic (this is ‘propose’)

3. thinking ‘yes, this will work’ (this is ‘promote’)

4. applying matrix inversion to solve

A clever/practised enough deliberator does steps 1, 2 and 3 ‘right’ and doesn’t need to iterate for this particular problem (my point here is that if your heuristics are good enough you can deliberate with only one proposal and say ‘yep, good enough, let’s go’). But counterfactually step 2 might make various alternative proposals, or step 3 might think ‘actually there are too many dimensions in this case for inversion to be tractable’ or something, and thus there’s an evaluation and an internal update.
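A rough sketch of the propose/promote breakdown for this example, in plain Python. Everything here is an illustrative assumption: the `propose`, `promote`, and `deliberate` names, the problem dictionary, and the `max_features` tractability threshold are all made up, and the closed form shown is the one-feature special case of OLS rather than a general matrix inversion.

```python
def ols_closed_form(xs, ys):
    """Closed-form simple linear regression (one-feature case of the normal equations)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def propose(problem):
    """'Propose': retrieve cached heuristics that match the problem's features."""
    if problem["kind"] == "least_squares":
        yield "closed_form", ols_closed_form


def promote(name, problem, max_features=1000):
    """'Promote': cheap check that the proposal looks tractable here (threshold is arbitrary)."""
    return problem["n_features"] <= max_features


def deliberate(problem):
    """Apply the first proposal that gets promoted; give up if none does."""
    for name, solver in propose(problem):
        if promote(name, problem):
            return name, solver(problem["xs"], problem["ys"])
    return None


small = {"kind": "least_squares", "n_features": 1,
         "xs": [0, 1, 2, 3], "ys": [1, 3, 5, 7]}
huge = dict(small, n_features=10**6)

result_small = deliberate(small)  # single proposal, accepted on the first pass
result_huge = deliberate(huge)    # proposal rejected at the 'promote' step
```

With a good enough heuristic the loop runs once and accepts, which matches the "only one proposal" case; the rejected case is the counterfactual where the evaluation step says inversion is not tractable.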

[1] Peter Barnett and Ian McKenzie coined ‘God-level heuristic’ for really solid mathematically-justified heuristics like this, which I quite like.

[2] I don’t require this to be a ‘full consequentialist model-based valuation’, but that would be one example. See my deliberation simple examples for less sophisticated versions which are quite pervasive and nevertheless embody the ‘propose;promote’ breakdown.