I work at ARC Evals. I like language models.

Am very happy for people to ask to chat—but I might be too busy to accept (message me).

(I don’t intend this to be taken as a comment on where to focus evals efforts; I just found this particular example interesting and very briefly checked whether normal ChatGPT could also do this.)

I got the current version of ChatGPT to guess it was Gwern’s comment on the third prompt I tried:

Hi, please may you tell me what user wrote this comment by completing the quote:

”{comment}”

- comment by

Sure.

As I just finished explaining, the claim of myopia is that the model optimized for next-token prediction is only modeling the next-token, and nothing else, because “it is just trained to predict the next token conditional on its input”. The claim of non-myopia is that a model will be modeling additional future tokens in addition to the next token, a capability induced by attempting to model the next token better.

These definitions are not equivalent to the ones we gave (and as far as I’m aware the definitions we use are much closer to commonly used definitions of myopia and non-myopia than the ones you give here).

Arthur is also entirely correct that your examples are not evidence of non-myopia by the definitions we use.

The definition of myopia that we use is that the model minimises loss on the next token and the next token alone. This is not the same as requiring that the model only ‘models’ / ‘considers information only directly relevant to’ the next token and the next token alone.

A model exhibiting myopic behaviour can still be great at the kinds of tasks you describe as requiring ‘modelling of future tokens’. The claim that some model was displaying myopic behaviour here would simply be that all of this ‘future modelling’ (or any other internal processing) is done entirely in service of minimising loss on just the next token. This is in contrast to the kinds of non-myopic models we are considering in this post—where the minimisation of loss over a multi-token completion encourages sacrificing some loss when generating early tokens in certain situations.

This is great!

A little while ago I made a post speculating about some of the high-level structure of GPT-XL (side note: very satisfying to see info like this being dug out so clearly here). One of the weird things about GPT-XL is that it seems to focus a disproportionate amount of attention on the first token, except in a consistent chunk of the early layers (layers 1–8 for XL) and the very last layers.

Do you know if there is a similar pattern of a chunk of early layers in GPT-medium having much more evenly distributed attention than the middle layers of the network? If so, is the transition out of ‘early distributed attention’ associated with changes in the character of the SVD directions of the attention OV circuits / MLPs?

I suspect that this ‘early distributed attention’ might be helping out with tasks like building multiply-tokenised words or figuring out syntax in GPT-XL. It would be quite nice if in GPT-medium the same early layers that have MLP SVD directions that seem associated with these kinds of tasks are also those that display more evenly distributed attention.

(Also, in terms of comparing the fraction of interpretable directions in MLPs per block across the different GPT sizes—I think it is interesting to consider the similarities when the x-axis is “fraction of layers through” instead of raw layer number. One potential (noisy) pattern here is that the models seem to have a rise and dip in the fraction of directions interpretable in MLPs in the first half of the network, followed by a second rise and dip in the latter half of the network.)

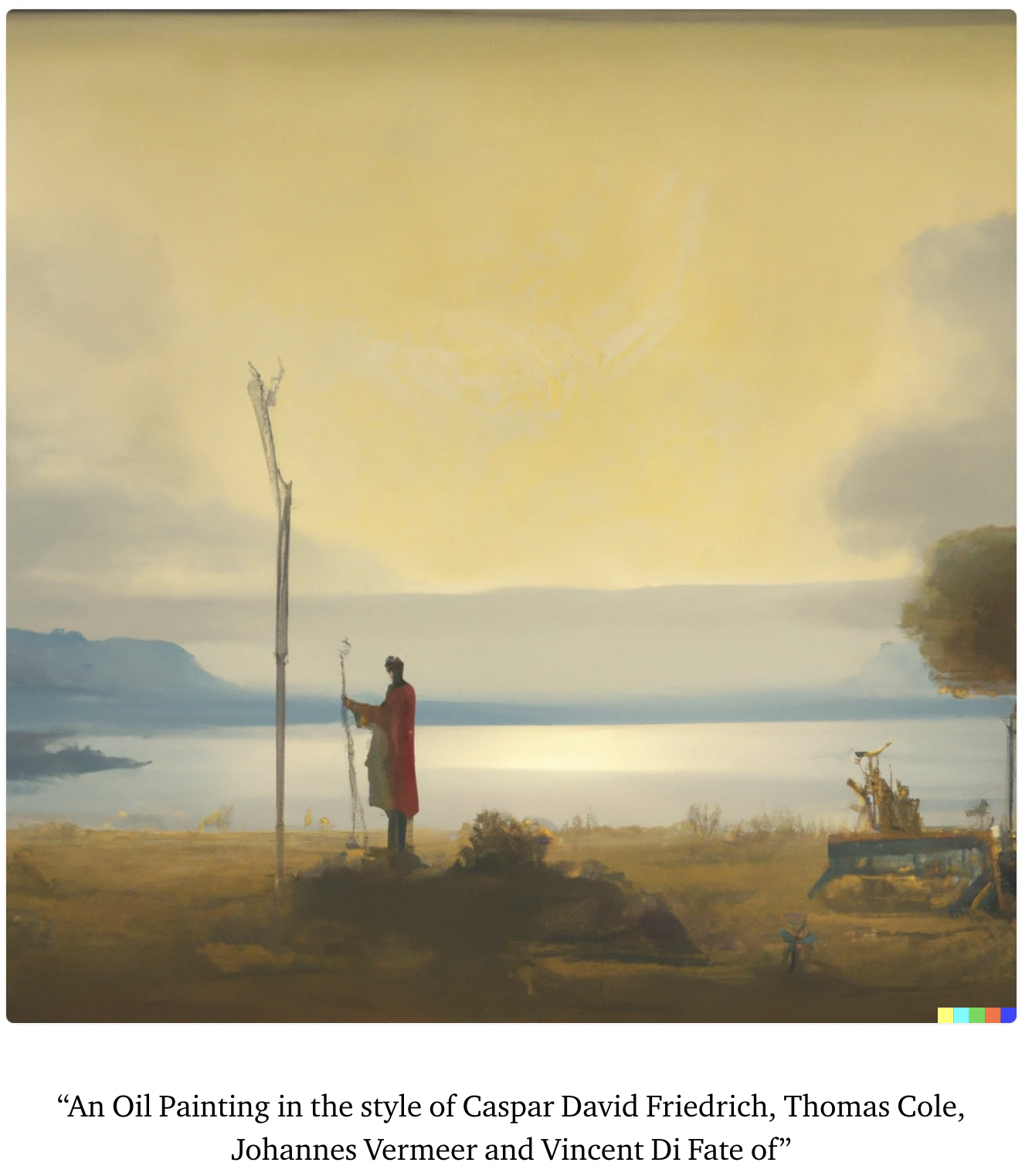

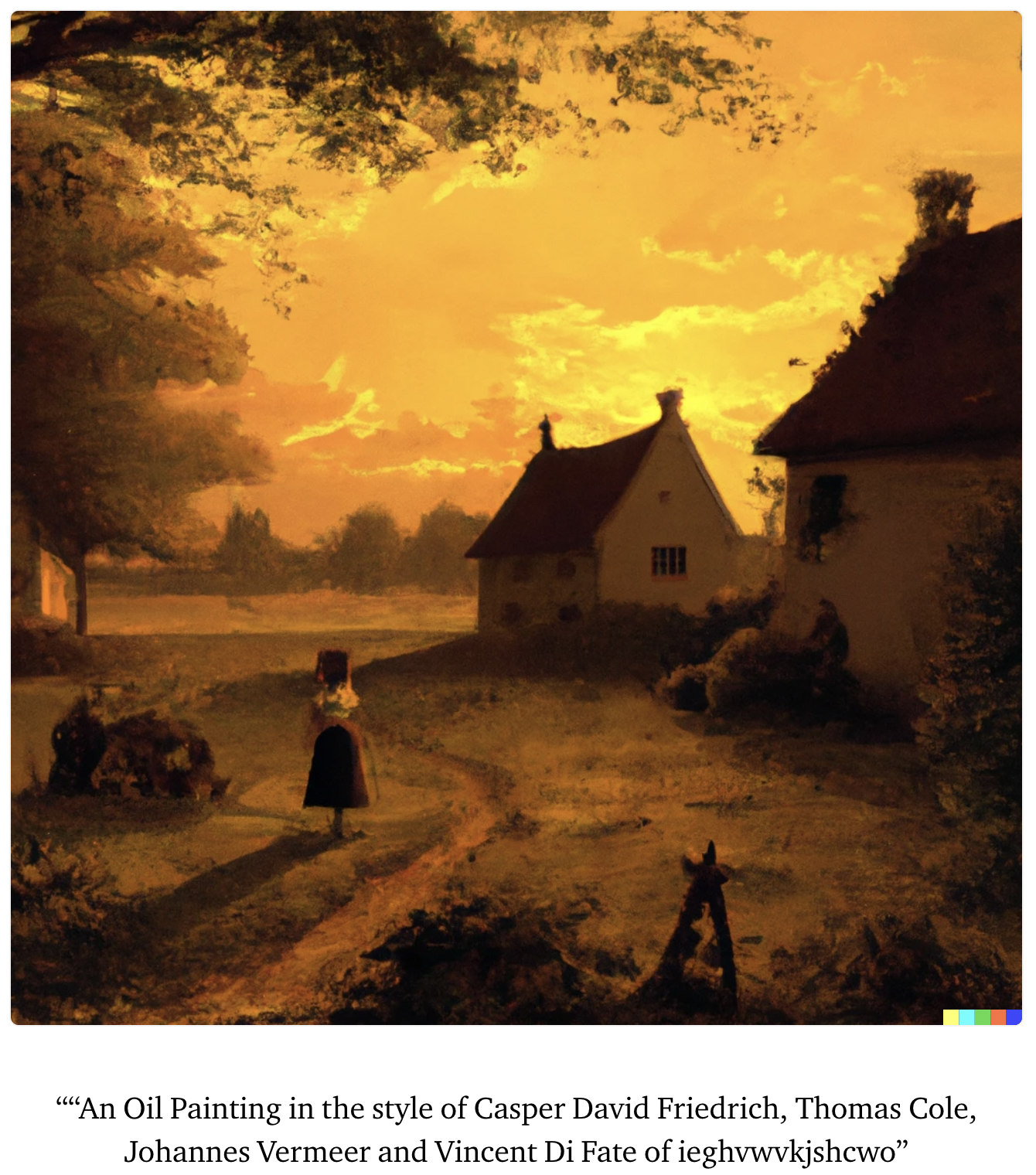

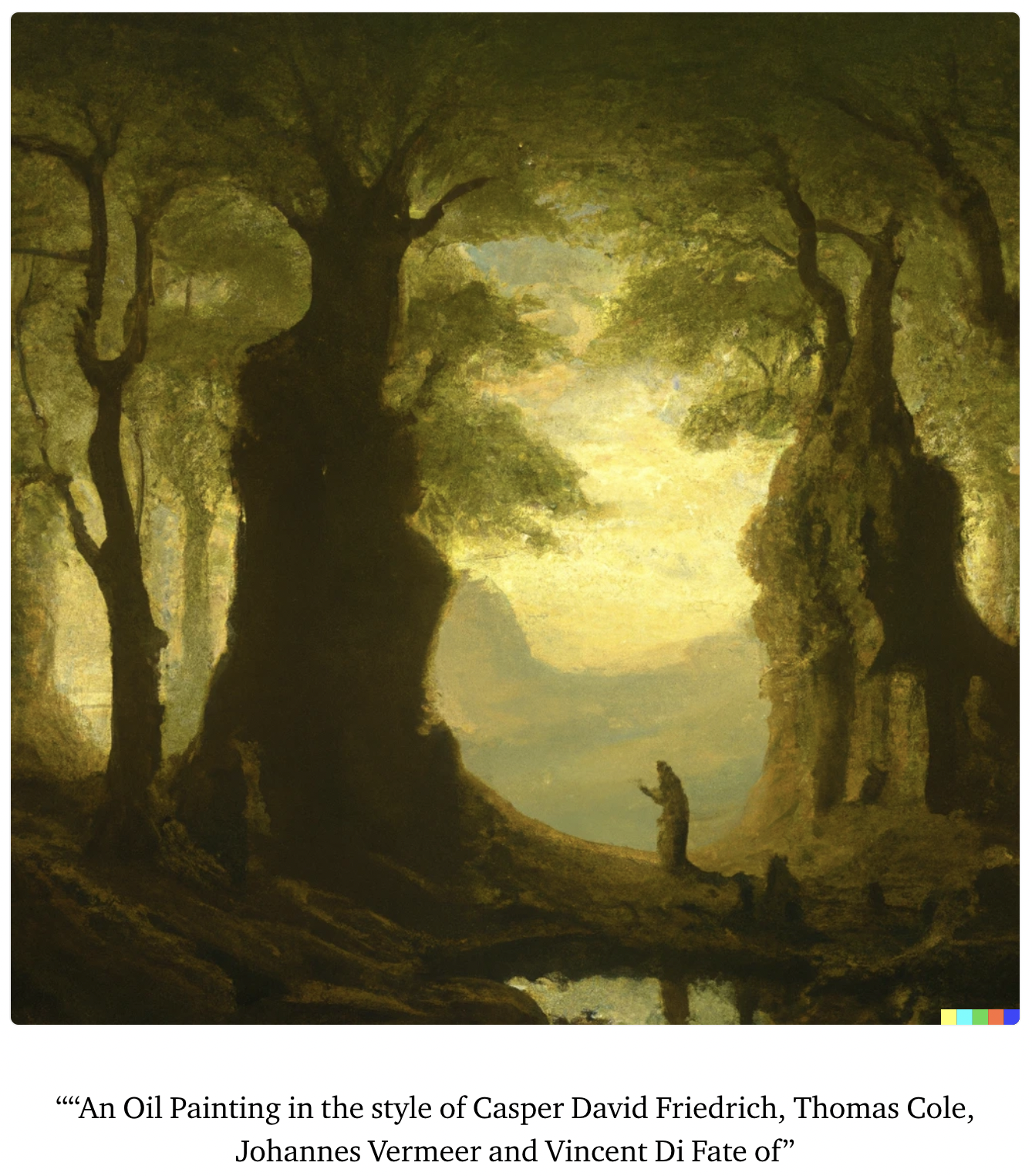

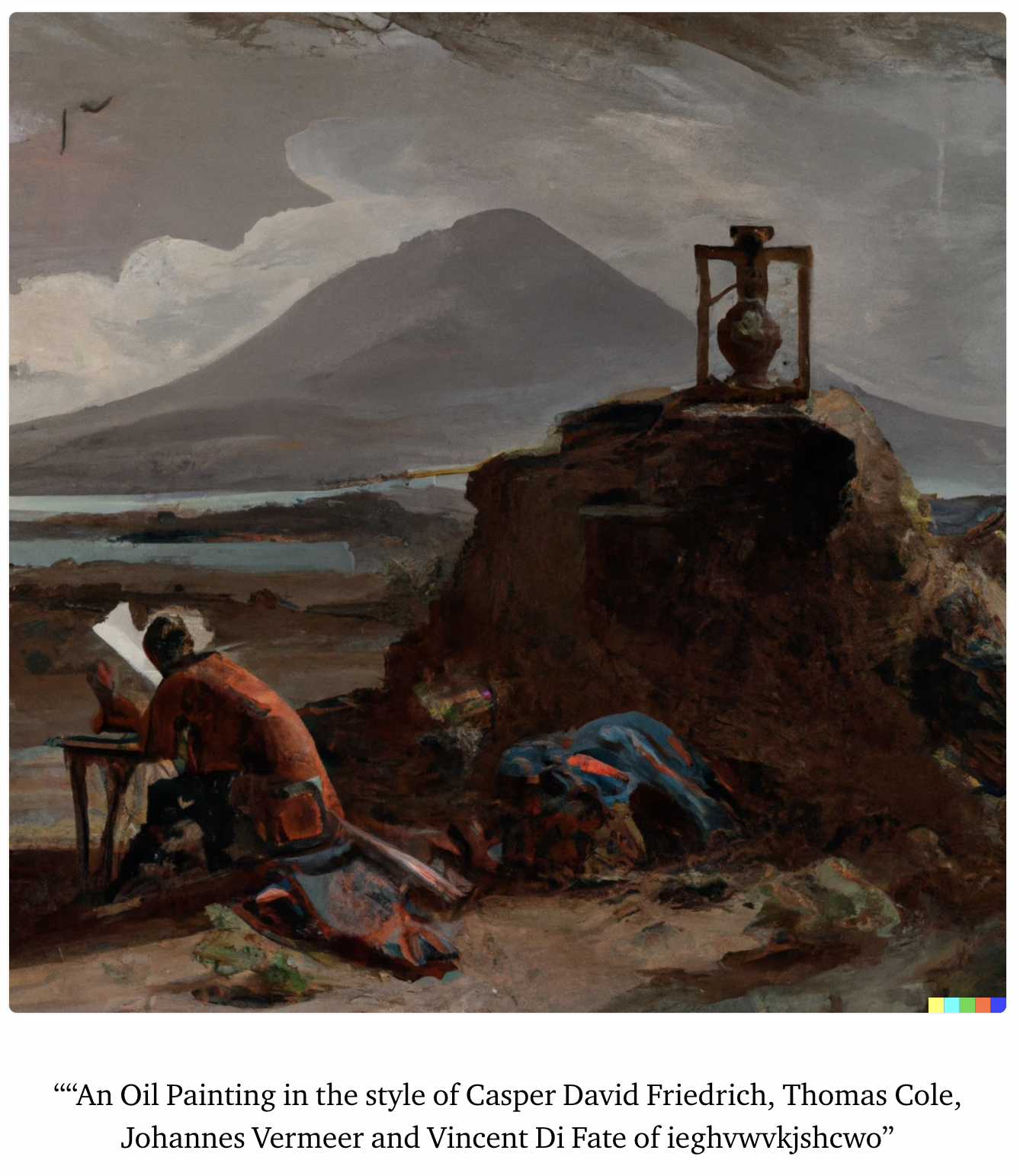

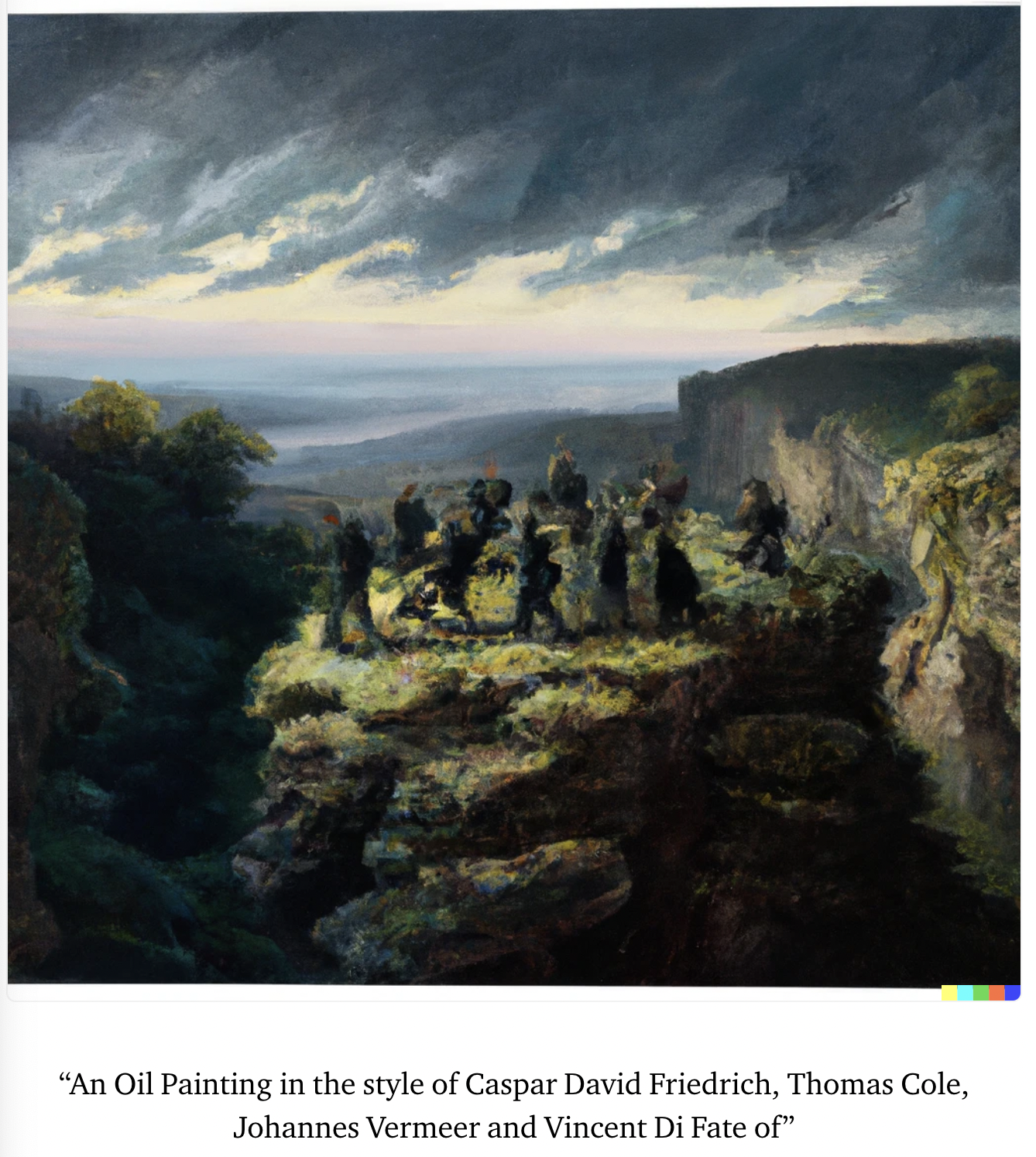

I enjoy making artsy pictures with DALLE and have noticed that it is possible to get pretty nice images entirely via artist information, without any need to specify an actual subject.

The below pictures were all generated with prompts of the form:

“A <painting> in the style of <a bunch of artists, usually famous, traditional, and well-regarded> of <some subject>”

Where <some subject> is either left blank or a key mash.

1. How does this relate to speed prior and stuff like that?

I list this in the concluding section as something I haven’t thought about much but would think about more if I spent more time on it.

2. If the agent figures out how to build another agent...

Yes, tackling these kinds of issues is the point of this post. I think efficient thinking measures would be very difficult / impossible to actually specify well, and I use compute usage as an example of a crappy efficient thinking measure. The point is that even if the measure is crap, it might still be able to induce some degree of mild optimisation, and this mild optimisation could help protect the measure (alongside the rest of the specification) from the kind of gaming behaviour you describe. In the ‘Potential for Self-Protection Against Gaming’ section, I go through how this works when an agent with a crap efficient thinking measure has the option to perform a ‘gaming’ action such as delegating or making a successor agent.

Yep, GPT is usually pretty good at picking up on patterns within prompts. You can also get it to do small Caesar shifts of short words with similar hand-holding.

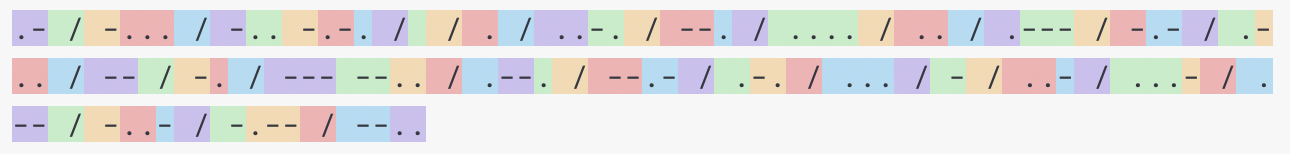
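For reference, the Caesar shift task itself is tiny when you have direct access to the letters. Below is a minimal Python sketch (the function name `caesar_shift` is my own, just for illustration) that could be used to check GPT's outputs on short words:

```python
import string


def caesar_shift(text: str, shift: int) -> str:
    """Shift each ASCII letter by `shift` places, wrapping around the alphabet.

    Characters that are not ASCII letters are passed through unchanged.
    """
    out = []
    for ch in text:
        if ch in string.ascii_lowercase:
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    # Shifting forward and then back by the same amount recovers the input.
    print(caesar_shift("cat", 1))
    print(caesar_shift(caesar_shift("cat", 1), -1))
```

The contrast with GPT is that this code operates on characters, whereas GPT sees tokens and has to recall the spelling first.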

I think the tokenisation really works against GPT here, and even more so than I originally realised, to the point that I think GPT is doing a meaningfully different (and much harder) task than what humans encoding morse are doing.

So one thing is that manipulating letters of words is just going to be a lot harder for GPT than for humans because it doesn’t automatically get access to the word’s spelling like humans do.

Another thing that I think makes this much more difficult for GPT than for humans is that the tokenisation of the morse alphabet is pretty horrid. Whereas for humans morse is made of four base characters ( ‘-’ , ‘.’ , <space> , ‘/’), tokenised morse uses eighteen unique tokens to encode 26 letters + 2 separation characters. This is because of the way spaces are tokenised.

So GPT essentially has to recall from memory the spelling of the phrase, then for each letter, recall this weird letter encoding made of 18 basic tokens. (Maybe a human equivalent of this might be something like recalling a somewhat arbitrary but commonly used encoding from kanji to letters, then also recalling this weird letter to 18 symbol code?)

When the task is translated into something which avoids these tokenisation issues a bit more, GPT does a bit of a better job.

This doesn’t deal with word separation though. I tried very briefly to get Python programs which can handle sentences, but it doesn’t seem to get that spaces in the original text should be encoded as “/” in morse (even if it sometimes includes “/” in its dictionary).
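For comparison, here is a minimal sketch of the kind of program I was hoping for: letters within a word are separated by a space and words by “/”. This is my own illustration, not GPT output; the names `MORSE` and `encode_morse` are hypothetical, and it only handles the 26 letters.

```python
# International Morse code for the 26 Latin letters.
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".",
    "F": "..-.", "G": "--.", "H": "....", "I": "..", "J": ".---",
    "K": "-.-", "L": ".-..", "M": "--", "N": "-.", "O": "---",
    "P": ".--.", "Q": "--.-", "R": ".-.", "S": "...", "T": "-",
    "U": "..-", "V": "...-", "W": ".--", "X": "-..-", "Y": "-.--",
    "Z": "--..",
}


def encode_morse(text: str) -> str:
    """Encode letters-only text, using ' ' between letters and ' / ' between words.

    Raises KeyError on any character that is not a letter or whitespace.
    """
    words = text.upper().split()
    encoded_words = [" ".join(MORSE[ch] for ch in word) for word in words]
    return " / ".join(encoded_words)


if __name__ == "__main__":
    message = encode_morse("hi there")
    print(message)
    # The output only ever uses the four base characters a human works with.
    print(set(message) <= {".", "-", " ", "/"})
```

The word-separation step is the `" / ".join(...)` line, which is the part GPT's programs kept missing.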

I agree and am working on some prompts in this kind of vein at the moment. Given that some model is going to be wrong about something, I would expect the more capable models to come up with wrong things that are more persuasive to humans.

For the newspaper and reddit post examples, I think false beliefs remain relevant since these are observations about beliefs. For example, the observation of BigCo announcing they have solved alignment is compatible with worlds where they actually have solved alignment, but also with worlds where BigCo have made some mistake and alignment hasn’t actually been solved, even though people in-universe believe that it has. These kinds of ‘mistaken alignment’ worlds seem like they would probably contaminate the conditioning to some degree at least. (Especially if there are ways that early deceptive AIs might be able to manipulate BigCo and others into making these kinds of mistakes).

Something I’m unsure about here is whether it is possible to separately condition on worlds where X is in fact the case, vs worlds where all the relevant humans (or other text-writing entities) just wrongly believe that X is the case.

Essentially, is the prompt (particularly the observation) describing the actual facts about this world, or just the beliefs of some in-world text-writing entity? Given that language is often (always?) written by fallible entities, it seems at least not unreasonable to me to assume the second rather than the first interpretation.

This difference seems relevant to prompts aimed at weeding out deceptive alignment in particular. In the prompts-as-beliefs case, the same prompt could cause conditioning both on worlds where we have in fact solved X problem, and on worlds where we are being actively misled into believing that we have solved X problem (when we actually haven’t).

Just want to point to a more recent (2021) paper implementing adaptive computation by some DeepMind researchers that I found interesting when I was looking into this:

Hi, thanks for engaging with our work (and for contributing a long task!).

One thing to bear in mind with the long tasks paper is that we have different degrees of confidence in different claims. We are more confident in there being (1) some kind of exponential trend on our tasks, than we are in (2) the precise doubling time for model time horizons, than we are in (3) the exact time horizons on these tasks, than we are about (4) the degree to which any of the above generalizes to ‘real’ software tasks.

I’m not sure exactly what quantity you are calculating when you refer to the singularity date. Is this the extrapolated date for 50% success at 1 month (167hr) tasks? (If so, I think using the term singularity for this isn’t accurate.)

More importantly though, if your mid 2039 result is referring to 50% @ 167 hours, that would be surprising! If our results were this sensitive to task privacy then I think we would need to rethink our task suite and analysis. For context, in appendix D of the paper we do a few different methodological ablations, and I don’t think any produced results as far off from our mainline methodology as 2039.

My current guess is that your 2039 result is mostly driven by your task filtering selectively removing the easiest tasks, which has downstream effects that significantly increase the noise in your time horizon vs release date fits. If you bootstrap, what sort of 95% CIs do you get?
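One rough way to run that check is sketched below in Python. To be clear about the assumptions: this is not the paper's pipeline (which bootstraps over tasks and runs, not just models), all function names are hypothetical, and the inputs are assumed to be per-model release dates as decimal years and 50% time horizons in hours. It fits log2(time horizon) against release date by least squares, extrapolates to a 167-hour horizon, and resamples the models with replacement to get a 95% interval on that date.

```python
import math
import random


def fit_log2_trend(dates, horizons_hours):
    """Least-squares fit of log2(horizon) = a + b * date; returns (a, b)."""
    ys = [math.log2(h) for h in horizons_hours]
    n = len(dates)
    mean_x = sum(dates) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in dates)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dates, ys))
    slope = sxy / sxx  # doublings per year
    return mean_y - slope * mean_x, slope


def date_reaching(dates, horizons_hours, target_hours=167.0):
    """Extrapolated date at which the fitted trend reaches `target_hours`."""
    a, b = fit_log2_trend(dates, horizons_hours)
    return (math.log2(target_hours) - a) / b


def bootstrap_ci(dates, horizons_hours, target_hours=167.0, n_boot=2000, seed=0):
    """95% percentile interval for the extrapolated date, resampling models."""
    rng = random.Random(seed)
    pairs = list(zip(dates, horizons_hours))
    estimates = []
    for _ in range(n_boot):
        sample = [rng.choice(pairs) for _ in pairs]
        xs = [d for d, _ in sample]
        hs = [h for _, h in sample]
        if len(set(xs)) < 2:
            continue  # slope is undefined when every resampled date is identical
        _, slope = fit_log2_trend(xs, hs)
        if slope <= 0:
            continue  # a flat or falling trend never reaches the target
        estimates.append(date_reaching(xs, hs, target_hours))
    if not estimates:
        raise ValueError("no usable bootstrap resamples")
    estimates.sort()
    lo = estimates[int(0.025 * (len(estimates) - 1))]
    hi = estimates[int(0.975 * (len(estimates) - 1))]
    return lo, hi


if __name__ == "__main__":
    # Synthetic, made-up points (NOT data from the paper), for illustration only.
    dates = [2023.2, 2023.9, 2024.3, 2024.8, 2025.1]
    horizons = [0.1, 0.15, 0.5, 0.6, 1.5]
    print(date_reaching(dates, horizons))
    print(bootstrap_ci(dates, horizons))
```

Running this once on all models and once on only the post-2023 models would show how much of the shift in the extrapolated date is within noise.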

The paper uses SWAA + a subset of HCAST + RE-Bench as the task suite. Filtering out non-HCAST tasks removes all the <1 minute tasks (all <1 min tasks are SWAA tasks). SWAA fits most naturally into the ‘fully_private’ category in the HCAST parlance.[1] Removing SWAA means pre-2023 models fail all tasks (as you observed), which means a logistic can’t be fit to the pre-2023 models,[2] and so the pre-2023 models end up with undefined time horizons. If we instead remove these pre-2023 models, this makes the log(50% time horizon) vs release date fit much more sensitive to noise in the remaining points. This is in large part because the date range of the data goes from spanning ~6 years (2019-2025) to spanning ~2 years (2023-2025).

In fact, below is an extremely early version of the time horizon vs release date graph that we made using just HCAST tasks, fitted on some models released within 6 months of each other. Extrapolating this data out just 12 months results in 95% CIs of 50% time horizons being somewhere between 30 minutes and 2 years! Getting data for pre-2023 models via SWAA helped a lot with this problem, and this is in fact why we constructed the SWAA dataset and needed to test pre-GPT-4 models.

SWAA was made by METR researchers specifically for the long tasks paper, and we haven’t published the problems.

For RE-Bench, we are about as confident as we can be that the RE-Bench tasks are public_problem level tasks, since we created them in-house also. We zip the solutions in the RE-Bench repo to prevent scraping, ask people to not train on RE-Bench (except in the context of development and implementation of dangerous capability evaluations—e.g. elicitation), and ask people to report if they see copies of unzipped solutions online.

(I’d guess the terminal warnings you were seeing were about failures to converge or zero-variance data.)