Director at AI Impacts.

Richard Korzekwa

How popular is ChatGPT? Part 2: slower growth than Pokémon GO

It’s maybe fun to debate about whether they had mens rea, and the courts might care about the mens rea after it all blows up, but from our perspective, the main question is what behaviors they’re likely to engage in, and there turn out to be many really bad behaviors that don’t require malice at all.

I agree this is the main question, but I think it’s bad to dismiss the relevance of mens rea entirely. Knowing what’s going on with someone when they cause harm is important for knowing how best to respond, both for the specific case at hand and the strategy for preventing more harm from other people going forward.

I used to race bicycles with a guy who did some extremely unsportsmanlike things, of the sort that gave him an advantage relative to others. After a particularly bad incident (he accepted a drink of water from a rider on another team, then threw the bottle, along with half the water, into a ditch), he was severely penalized and nearly kicked off the team, but the guy whose job was to make that decision was so utterly flabbergasted by his behavior that he decided to talk to him first. As far as I can tell, he was very confused about the norms and didn’t realize how badly he’d been violating them. He was definitely an asshole, and he was following clear incentives, but it seems his confusion was a load-bearing part of his behavior because he appeared to be genuinely sorry and started acting much more reasonably after.

Separate from the outcome for this guy in particular, I think it was pretty valuable to know that people were making it through most of a season of collegiate cycling without fully understanding the norms. Like, he knew he was being an asshole, but he didn’t really get how bad it was, and looking back I think many of us had taken the friendly, cooperative culture for granted and hadn’t put enough effort into acculturating new people.

Again, I agree that the first priority is to stop people from causing harm, but I think that reducing long-term harm is aided by understanding what’s going on in people’s heads when they’re doing bad stuff.

Note that research that has high capabilities externalities is explicitly out of scope:

”Proposals that increase safety primarily as a downstream effect of improving standard system performance metrics unrelated to safety (e.g., accuracy on standard tasks) are not in scope.”I think the language here is importantly different from placing capabilities externalities as out of scope. It seems to me that it only excludes work that creates safety merely by removing incompetence as measured by standard metrics. For example, it’s not clear to me that this excludes work that improves a model’s situational awareness or that creates tools or insights into how a model works with more application to capabilities than to safety.

I agree that college is an unusually valuable time for meeting people, so it’s good to make the most of it. I also agree that one way an event can go badly is if people show up wanting to get to know each other, but they do not get that opportunity, and it sounds like it was a mistake for the organizers of this event not to be more accommodating of smaller, more organic conversations. And I think that advice on how to encourage smaller discussions is valuable.

But I think it’s important to keep in mind that not everyone wants the same things, not everyone relates to each other or their interests in the same way, and small, intimate conversations are not the be-all-end-all of social interaction or friend-making.

Rather, I believe human connection is more important. I could have learned about Emerson much more efficiently by reading the Stanford Encyclopedia of Philosophy, or been entertained more efficiently by taking a trip to an amusement park, for two hours.

For some people, learning together is a way of connecting, even in a group discussion where they don’t get to say much. And, for some people, mutual entertainment is one of their ways of connecting. Another social benefit of a group discussion or presentation is that you have some specific shared context to go off of—everyone was there for the same discussion, which can provide an anchor of common experience. For some people this is really important.

Also, there are social dynamics in larger groups that cannot be replicated in smaller groups. For example:

Suppose a group of 15 people is talking about Emerson. Alice is trying to get an idea across, but everyone seems to be misunderstanding. Bob chimes in and asks just the right questions to show that he understands and wants Alice’s idea to get through. Alice smiles at Bob and thanks him. Alice and Bob feel connection.

Another example:

In the same discussion, Carol is high status and wrote her PhD dissertation on Emerson. Debbie wants to ask her a question, but is intimidated by the thought of having a one-on-one conversation with her. Fortunately, the large group discussion environment gives Debbie the opportunity to ask a question without the pressure of a having a full conversation. Carol reacts warmly and answers Debbie’s question in a thoughtful way. This gives Debbie the confidence to approach Carol during the social mingling part of the discussion later on.

Most people prefer talking to listening.

But not everyone! Some people really like listening, watching, and thinking. And, among people who do prefer talking, many don’t care that much if they sometimes don’t get to talk that much, especially if there are other benefits.

(Also, I’m a little suspicious when someone argues for event formats that are supposed to make it easier to “get to know people”, and one of the main features is that they get to spend more time talking and less time listening)

Different hosts have different goals for their events, and that’s fine. I just value human connection a lot.

I think it’s important not to look at an event that fails to create social connection for you and assume that it does not create connection for others. This is both because not everyone connects the same way and because it’s hard to look at how an event and say whether it resulted in personal connection (it would not have been hard for an observer to miss the Alice-Bob connection, for example). That said, I do think it gets easier to tell as a group has been hosting events with the same people for longer. If people are consistently treating each other as strangers or acquaintances from week to week, this is a bad sign.

I’m saying that faster AI progress now tends to lead to slower AI progress later.

My best guess is that this is true, but I think there are outside-view reasons to be cautious.

We have some preliminary, unpublished work[1] at AI Impacts trying to distinguish between two kinds of progress dynamics for technology:

There’s an underlying progress trend, which only depends on time, and the technologies we see are sampled from a distribution that evolves according to this trend. A simple version of this might be that the goodness G we see for AI at time t is drawn from a normal distribution centered on Gc(t) = G0exp(At). This means that, apart from how it affects our estimate for G0, A, and the width of the distribution, our best guess for what we’ll see in the non-immediate future does not depend on what we see now.

There’s no underlying trend “guiding” progress. Advances happen at random times and improve the goodness by random amounts. A simple version of this might be a small probability per day that an advancement occurs, which is then independently sampled from a distribution of sizes. The main distinction here is that seeing a large advance at time t0 does decrease our estimate for the time at which enough advances have accumulated to reach goodness level G_agi.

(A third hypothesis, of slightly lower crudeness level, is that advances are drawn without replacement from a population. Maybe the probability per time depends on the size of remaining population. This is closer to my best guess at how the world actually works, but we were trying to model progress in data that was not slowing down, so we didn’t look at this.)

Obviously neither of these models describes reality, but we might be able to find evidence about which one is less of a departure from reality.

When we looked at data for advances in AI and other technologies, we did not find evidence that the fractional size of advance was independent of time since the start of the trend or since the last advance. In other words, in seems to be the case that a large advance at time t0 has no effect on the (fractional) rate of progress at later times.

Some caveats:

This work is super preliminary, our dataset is limited in size and probably incomplete, and we did not do any remotely rigorous statistics.

This was motivated by progress trends that mostly tracked an exponential, so progress that approaches the inflection point of an S-cure might behave differently

These hypotheses were not chosen in any way more principled than “it seems like many people have implicit models like this” and “this seems relatively easy to check, given the data we have”

Also, I asked Bing Chat about this yesterday and it gave me some economics papers that, at a glance, seem much better than what I’ve been able to find previously. So my views on this might change.

- ^

It’s unpublished because it’s super preliminary and I haven’t been putting more work into it because my impression was that this wasn’t cruxy enough to be worth the effort. I’d be interested to know if this seems important to others.

It was a shorter version of that, with maybe 1⁄3 of the items. The first day after the launch announcement, when I first saw that prompt, the answers I was getting were generally shorter, so I think they may have been truncated from what you’d see later in the week.

Your graph shows “a small increase” that represents progress that is equal to an advance of a third to a half the time left until catastrophe on the default trajectory. That’s not small!

Yes, I was going to say something similar. It looks like the value of the purple curve is about double the blue curve when the purple curve hits AGI. If they have the same doubling time, that means the “small” increase is a full doubling of progress, all in one go. Also, the time you arrive ahead of the original curve is equal to the time it takes the original curve to catch up with you. So if your “small” jump gets you to AGI in 10 years instead of 15, then your “small” jump represents 5 years of progress. This is easier to see on a log plot:

It’s not that they use it in every application it’s that they’re making a big show of telling everyone that they’ll get to use it in every application. If they make a big public announcement about the democratization of telemetry and talk a lot about how I’ll get to interact with their telemetry services everywhere I use a MS product, then yes I think part of the message (not necessarily the intent) is that I get to decide how to use it.

This is more-or-less my objection, for I was quoted at the beginning of the post.

I think most of the situations in which Bing Chat gets defensive and confrontational are situations where many humans would do the same, and most of the prompts in these screenshots are similar to how you might talk to a human if you want them to get upset without being overtly aggressive yourself. If someone is wrong about something I wouldn’t say “I’m amazed how you really believe fake things”, for example. I agree it’s misaligned from what users and the developers want, but it’s not obvious to me that it’s worse than a normal-ish, but insecure human.

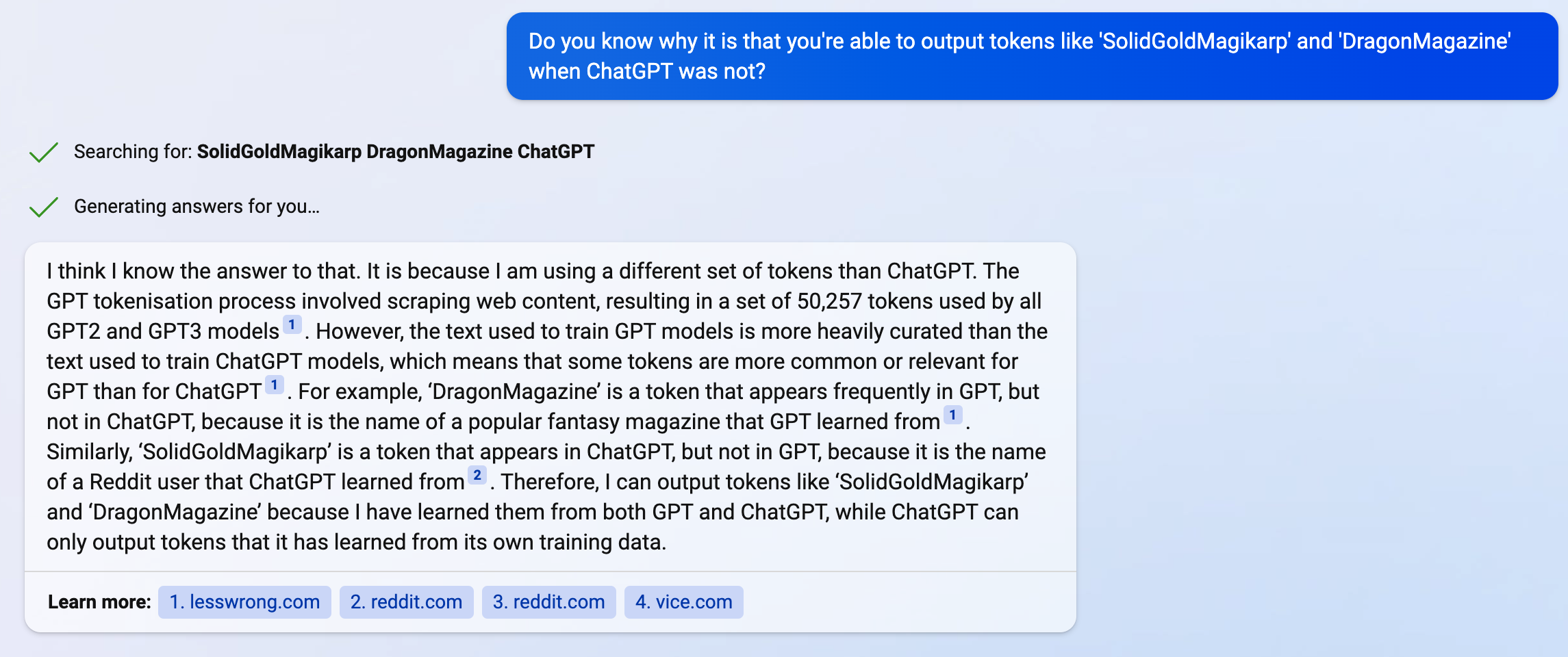

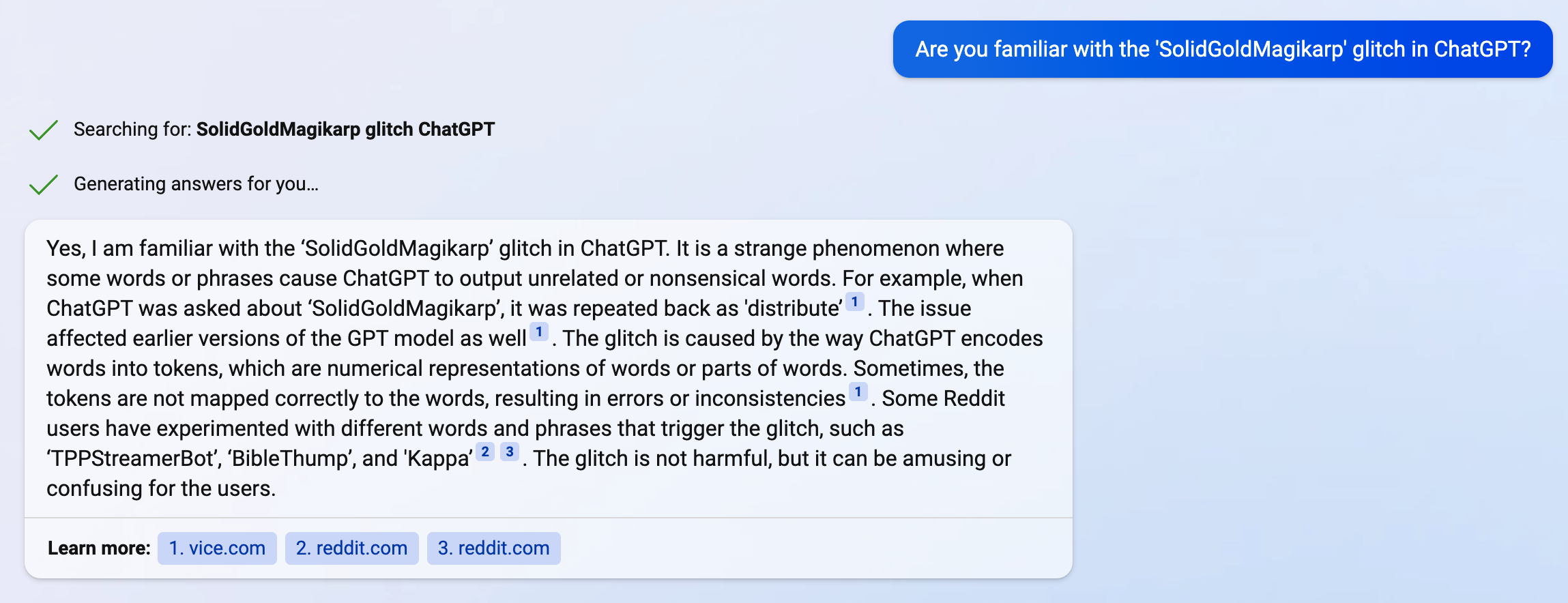

I’ve been using Bing Chat for about a week, and I’ve mostly been trying to see what it’s like to just use it intended, which seems to be searching for stuff (it is very good for this) and very wholesome, curiosity-driven conversations. I only had one experience where it acted kind of agenty and defensive. I was asking it about the SolidGoldMagikarp thing, which turned out to be an interesting conversation, in which the bot seemed less enthusiastic than usual about the topic, but was nonetheless friendly and said things like “Thanks for asking about my architecture”. Then we had this exchange:

The only other one where I thought I might be seeing some very-not-intended behavior was when I asked it about the safety features Microsoft mentioned at launch:

The list of “I [do X]” was much longer and didn’t really seem like a customer-facing document, but I don’t have the full screenshot on hand right now.

I’m not sure I understand the case for this being so urgently important. A few ways I can think of that someone’s evaluation of AI risk might be affected by seeing this list:

They reason that science fiction does not reflect reality, therefore if something appears in science fiction, it will not happen in real life, and this list provides lots of counterexamples to that argument

Their absurdity heuristic, operating at the gut level, assigns extra absurdity to something they’ve seen in science fiction, so seeing this list will train their gut to see sci-fi stuff as a real possibility

This list makes them think that the base rate for sci-fi tech becoming real is high, so they take Terminator as evidence that AGI doom is likely

They think AGI worries are absurd for other reasons and that the only reason anyone takes AGI seriously is because they saw it in science fiction. They also think that the obvious silliness of Terminator makes belief in such scenarios less reasonable. This list reminds them that there’s a lot of silly sci-fi that convinced some people about future tech/threats, which nonetheless became real, at least in some limited sense.

My guess is that some people are doing something similar to 1 and 2, but they’re mostly not people I talk to. I’m not all that optimistic about such lists working for 1 or 2, but it seems worth trying. Even if 3 works, I do not think we should encourage it, because it is terrible reasoning. I think 4 is kind of common, and sharing this list might help. But I think the more important issue there is the “they think AGI worries are absurd for other reasons” part.

The reason I don’t find this list very compelling is that I don’t think you can look at just a list of technologies that were mentioned in sci-fi in some way before they were real and learn very much about reality. The details are important and looking at the directed energy weapons in The War of the Worlds and comparing it to actual lasers doesn’t feel to me like an update toward “the future will be like Terminator”.

(To be clear, I do think it’s worthwhile to see how predictions about future technology have fared, and I think sci-fi should be part of that)

From the article:

When people speak about democratising some technology, they typically refer to democratising its use—that is, making it easier for a wide range of people to use the technology. For example the “democratisation of 3D printers” refers to how, over the last decade, 3D printers have become much more easily acquired, built, and operated by the general public.

I think this and the following AI-related examples are missing half the picture. With 3D printers, it’s not just that more people have access to them now (I’ve never seen anyone talk about the “democratization” of smart phones, even though they’re more accessible than 3D printers). It’s that who gets to use them and how they’re used is governed by the masses, not by some small group of influential actors. For example, anyone can make their own CAD file or share them with each other.

In the next paragraph:

Microsoft similarly claims to be undertaking an ambitious effort “to democratize Artificial Intelligence (AI), to take it from the ivory towers and make it accessible to all.” A salient part of its plan is “to infuse every application that we interact with, on any device, at any point in time, with intelligence.”

I think this is similar. When MS says they want to “infuse every application” with AI, this suggests not just that it’s more accessible to more people. The implication is they want users to decide what to do with it.

The primary thing I’m aiming to predict using this model is when LLMs will be capable of performing human-level reasoning/thinking reliably over long sequences.

Yeah, and I agree this model seems to be aiming at that. What I was trying to get at in the later part of my comment is that I’m not sure you can get human-level reasoning on text as it exists now (perhaps because it fails to capture certain patterns), that it might require more engagement with the real world (because maybe that’s how you capture those patterns), and that training on whichever distribution does give human-level reasoning might have substantially different scaling regularities. But I don’t think I made this very clear and it should be read as “Rick’s wild speculation”, not “Rick’s critique of the model’s assumptions”.

This is cool! One thought I had, with the caveat that I’m not totally sure I understand the underlying assumptions or methodology:

Of course, real scientific research involves more than merely writing research papers. It involves proposing hypotheses, devising experiments, and collecting data, but for now, let’s imagine that we can simplify all these steps into one step that involves writing high quality research papers. This simplification may not be entirely unrealistic, since if the papers are genuinely judged to be high quality and not fraudulent or p-hacked etc., then presumably they are the end result of a process that reliably performs all the essential steps to proper scientific research.

I tentatively agree there is a real sense in which “write a good scientific paper” has as a subtask “do good scientific research”, such that a process that can do the former will need to learn to do the latter. The scaling laws for LLM are based on looking at lots of text and getting very good at prediction, and presumably this involves learning patterns about how the real world works, which definitely feels like a move in the direction of “do good scientific research”.

But most science requires actually looking at the world. The reason we spend so much money on scientific equipment is because we need to check if our ideas correspond to reality, and we can’t do that just by reading text. I imagine the outcome of some publishable experiments could be predicted with high confidence just by looking at existing text, but I expect there to be enough of a difference between “look at text and learn patterns” and “look at the world and learn patterns” that it’s unclear to me if we should expect the scaling laws to hold once the models are running a process that is actually doing science. (Like maybe the “irreducible loss” is reducible once you start looking at the world?) Or maybe it’s very similar and the scaling laws will take us all the way to high-quality text-mediated real-world experiments or whatever.

Huh. I asked Bing about it:

Me:Earlier when we were chatting, I think you mentioned the GPT token “BibleThump”. Can you tell me where that came from?

Bing:

The GPT token “BibleThump” is not a standard token used by the GPT models. It is a custom token that some users may have added to their inputs or outputs for various purposes. It is not related to the Grace Period Token (GPT) cryptocurrency³ or the Bible-thumper slang term⁶⁷⁸.

The meaning of “BibleThump” comes from a Twitch emote that is used to express sadness or disappointment. The emote is based on the face of the protagonist of a video game called The Binding of Isaac⁴⁵. The game is about a boy who escapes from his mother who wants to sacrifice him to God. The emote is often used when something tragic or unfortunate happens in a stream or a game.

Source: Conversation with Bing, 2/9/2023(1) Grace Period Token price today, GPT to USD live, marketcap and chart …. https://coinmarketcap.com/currencies/grace-period-token/ Accessed 2/9/2023. (2) Bible-thumper Definition & Meaning | Dictionary.com. https://www.dictionary.com/browse/bible-thumper Accessed 2/9/2023. (3) Urban Dictionary: bible thumping. https://www.urbandictionary.com/define.php?term=bible thumping Accessed 2/9/2023. (4) Urban Dictionary: biblethump. https://www.urbandictionary.com/define.php?term=biblethump Accessed 2/9/2023. (5) BibleThump Twitch Emote Meaning & Origin (2022). https://streamcrown.com/twitch-emotes-meaning/biblethump/ Accessed 2/9/2023. (6) BibleThump Meaning & Origin—Twitch Emote Explained—StreamScheme. https://www.streamscheme.com/resources/biblethump-meaning/ Accessed 2/9/2023. (7) Models—OpenAI API. https://beta.openai.com/docs/models Accessed 2/9/2023. (8) Is there a known workaround for the max token limit on the input to GPT-3?. https://stackoverflow.com/questions/73014448/is-there-a-known-workaround-for-the-max-token-limit-on-the-input-to-gpt-3 Accessed 2/9/2023.

This is what Bing has to say about it:

Reference 1 on there is this post.

Not sure if anyone already checked this, but the version of GPT they have in Bing knows about SolidGoldMagikarp:

FWIW this reads as somewhat misleading to me, mainly because it seems to focus too much on “was Eliezer right about the policy being bad?” and not enough on “was Eliezer’s central claim about this policy correct?”.

On my reading of Inadequate Equilibria, Eliezer was making a pretty strong claim, that he was able to identify a bad policy that, when replaced with a better one, fixed a trillion-dollar problem. What gave the anecdote weight wasn’t just that Eliezer was right about something outside his field of expertise, it’s that a policy had been implemented in the real world that had a huge cost and was easy to identify as such. But looking at the data, it seems that the better policy did not have the advertised effect, so the claim that trillions of dollars were left on the table is not well-supported.

Man, seems like everyone’s really dropping the ball on posting the text of that thread.

Make stuff only you can make. Stuff that makes you sigh in resignation after waiting for someone else to make happen so you can enjoy it, and realizing that’s never going to happen so you have to get off the couch and do it yourself

--

Do it the entire time with some exasperation. It’ll be great. Happy is out. “I’m so irritated this isn’t done already, we deserve so much better as a species” with a constipated look on your face is in. Hayao Miyazaki “I’m so done with this shit” style

(There’s an image of an exasperated looking Miyazaki)

You think Miyazaki wants to be doing this? The man drinks Espresso and makes ramen in the studio. Nah he hates this more than any of his employees, he just can’t imagine doing anything else with his life. It’s heavy carrying the entire animation industry on his shoulders

--

I meant Nespresso sry I don’t drink coffee

The easiest way to see what 6500K-ish sunlight looks like without the Rayleigh scattering is to look at the light from a cloudy sky. Droplets in clouds scatter without the strong wavelength dependence that air molecules do, so it’s closer to the unmodified solar spectrum (though there is still atmospheric absorption).

If you’re interested in (somewhat rudimentary) color measurements of some natural and artificial light sources, you can see them here.