Was a philosophy PhD student, left to work at AI Impacts, then Center on Long-Term Risk, then OpenAI. Quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI. Now executive director of the AI Futures Project. I subscribe to Crocker’s Rules and am especially interested to hear unsolicited constructive criticism. http://sl4.org/crocker.html

Some of my favorite memes:

(by Rob Wiblin)

(xkcd)

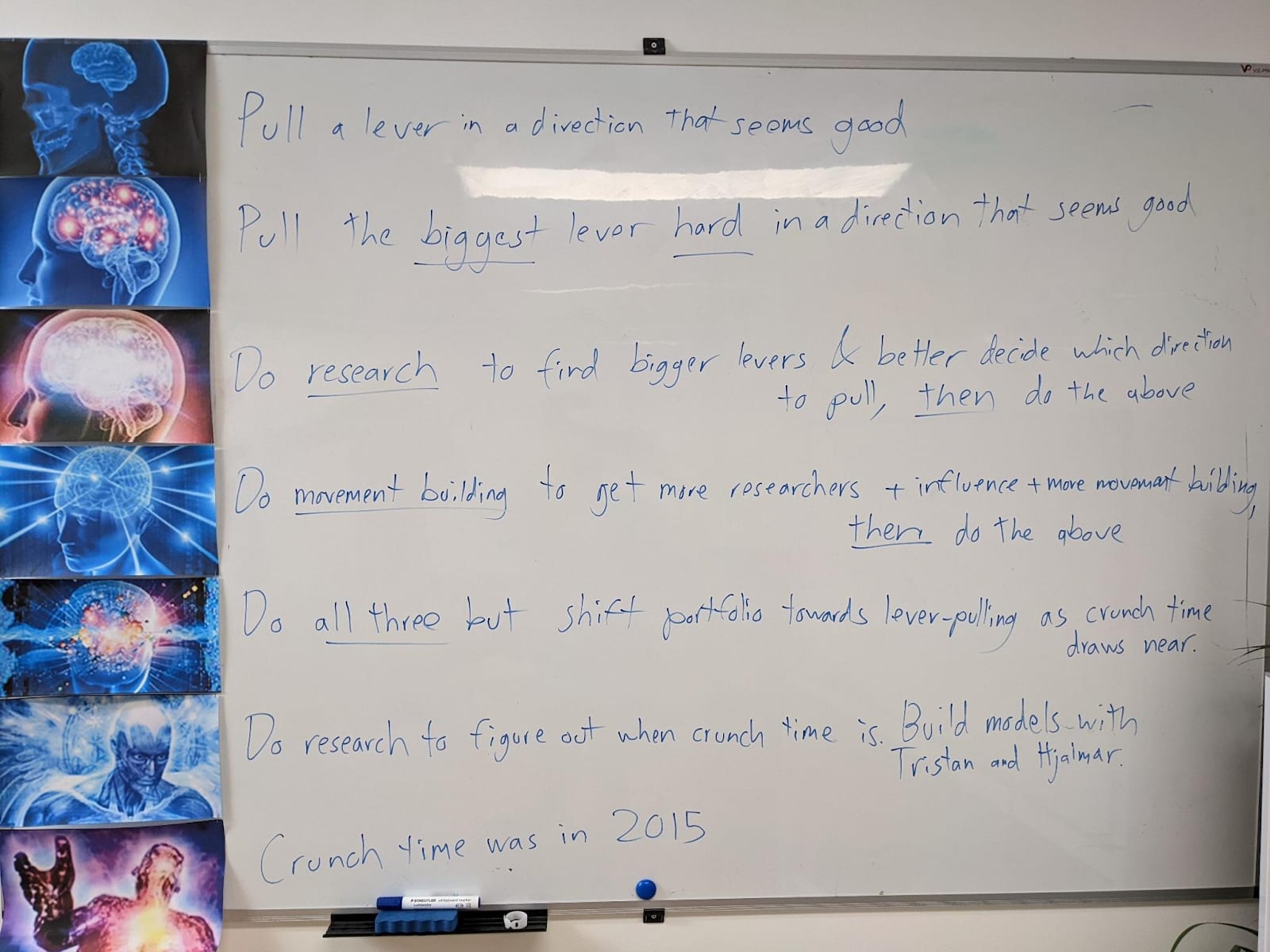

My EA Journey, depicted on the whiteboard at CLR:

(h/t Scott Alexander)

Hallucination was a bad term because it sometimes included lies and sometimes included… well, something more like hallucinations. i.e. cases where the model itself seemed to actually believe what it was saying, or at least not be aware that there was a problem with what it was saying. Whereas in these cases it’s clear that the models know the answer they are giving is not what we wanted and they are doing it anyway.