100 years ago Alfred Korzybski published The Manhood Of Humanity, the first book I’m aware of to analyze existential risks as a general category and try to diagnose their root causes. Using the primitive analytic tools available to him, Korzybski created a movement called General Semantics, which he hoped would raise the sanity waterline in a self-improving process far into the future. General Semantics largely petered out a few decades after Korzybski’s death in 1950, but its spirit has been recaptured in contemporary movements like LessWrong rationality, Effective Altruism, etc. Typical histories of existential risk treat human extinction as becoming an urgent concern after the invention of the atomic bomb. This is true, but Bostrom’s definition of existential risk doesn’t just concern extinction; it also covers regression and stagnation:

Existential risk – One where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential.

Human extinction may have become an urgent concern with the invention of the atomic bomb, but the permanent regression and stagnation of civilization became an urgent question sooner than that. Conservatively, it became urgent after the end of World War I, and World War II simply escalated it from a question of regression to one of extinction. In fact, if we examine the hundred years that have passed since Korzybski’s first investigation, what we find is a century of anxious discourse about the future of human technology and society. Each generation seems to rediscover the same theme: exponential growth in scientific knowledge, in firepower, in resource consumption, in population, in computer intelligence, and in the ability to finely manipulate the physical environment, seen as a threat to humanity’s continued development and existence. Even as these things bolster and uplift us, they threaten to destroy us in the same stroke.

The regression and stagnation of civilization is not just a technological question, but a sociological one. This means the recurring question of threats from exponential growth is inseparable from ideas like The Great Stagnation, which are fundamentally about why we see exponential growth in some areas but not others. This essay will outline a progression: from democratic republics ending the feudal era by inventing mass mobilization, to the arms races of the 19th century taking mass mobilization and armaments to their logical conclusion, to the strategic firebombing and atomic weapons of the late Second World War making armed conflict between nations an increasing logistical impossibility. In the wake of that impossibility, societies lose one of the few escape hatches they had for updating institutions that are rapidly obsolesced by the pace of industrial and scientific progress. Worse still, the link between military dominance and the ability to precisely train, control, and utilize large masses of men is severed. On the contemporary battlefield, large masses of men are a target, not an advantage, and the organization of domestic society increasingly reflects this.

Prelude To Existential Risk

Our story begins with the French Revolution, when a group of liberal reformers took over the country, established a republic, and executed King Louis XVI.

France found itself immediately under attack from the vengeful monarchs of Europe. The new republic lost badly until the Levée en Masse of August 1793, a mass conscription decree that turned the entire French state and population into a war machine against the European monarchies. This was the trump card that allowed France to survive and ultimately dominate Europe. The monarchies had to be skittish about arming their citizens as troops: if they raised armies too large, they would create a latent military ability that might destabilize their state and force concessions during rebellions. The French Republic had no such qualms. Creating a latent military ability in the general population was the point, not a worrisome byproduct. This meant France could raise armies 10 times the size of its neighbors’, an overwhelming advantage without which there would have been no possibility of survival.

France pressed that advantage against the rest of Europe, forcing the monarchies to slowly repeal themselves in the process. It is no coincidence that nationalizing reforms followed immediately after Napoleon crushed Prussia at the Battle of Jena. ‘Nationalization’ in the context of a feudal monarchy is the process of republicanizing and relating the peasantry to the state as citizens rather than serfs. The monarchies did not fall overnight, but from that point forward there was a European trend towards nationalism and more egalitarian norms. The first industrial revolution further incentivized elites to learn to organize and finely control large numbers of people. State wealth and power became intimately connected to managerial competence, not just hypergamy and capital accumulation.

Even though Napoleon was defeated at Waterloo, the mass mobilization necessary to defeat him never really stopped. Instead the energy went into new methods of production like factories, which require strict ordered behavior and mass coordination to work. The new nation-states rapidly built and obsolesced fleets, artillery, weapons, and armies. War itself became scarce, but the European powers were constantly upgrading their means to pursue it in a costly arms race. This potential violence was exported to foreign lands and colonies, where it became actual violence as the Europeans subjugated less organized peoples and forced them to organize into trading ‘partners’ ruled over by a thin corps of European officers. These colonial empires effectively built an API around foreign lands to rationalize them and extract resources. This rapacious mode of development would have been hard to avoid even if it had been objected to on ethical grounds: ceding a claim to a territory only meant that its resources would be marshaled against you by a rival.

While it would take some digging to make the case rigorously, it seems likely that the colonial mode of development had the side effect of making war seem romantic. When young men see fighting in distant territories against ill-equipped opponents as a route to social advancement, war might seem like a kind of hunting or sport. Tales of European exploration and the heraldic exploits of the feudal era promised bygone glory, severing popular imagination from the absurd dimensions of modern warfare between equipped adversaries. The American Civil War in the 1860s gave some indication of what was to come, but by the onset of WW1 that picture was 50 years out of date. Despite these defects the colonial powers grew wealthy rapidly, creating a virtuous feedback loop in which more wealth meant more room for exploration, which in turn meant still greater wealth.

World War 1 and Early Existential Risk

Then in the 20th century, disaster struck when war became impossible.

The death of war went hand in hand with the birth of existential risk. In the run-up to World War One authors like Jan Bloch and Norman Angell didn’t just claim war would be wasteful. To them a general European war would be suicide. They expected a total war that would burn all resources. Once begun it was unclear when the fighting might stop. It could bring on a chain reaction of blood feuds, the belligerents wrestling each other to dissipation. The end result of this ceaseless, total war would be a global regression to medieval conditions. That republican war machine had become too efficient, the means of devastation too powerful for war to be a viable mechanism for organizing society.

But the theorists sketching World War One were skeptical it could really happen. Jan Bloch wrote six volumes on the shape of a future war, yet his ultimate conclusion was that it would never take place. He felt the vast expenditures states were making on a fairy-tale conflict could have been going into schools or medicine. Bloch made three major predictions about WW1 that, taken together, he claimed would make war impossible:

- The armaments have become too deadly. The casualties will be enormous, the fighting stagnant and entrenched, and the officers will be killed at astonishing rates. Recent small wars had shown that officer corps get slaughtered in modern warfare.
- It will be impossible to organize the multi-million-man armies necessary to conduct this fighting, especially once the officers are slaughtered and there is no experienced leadership in the ranks.
- It will be impossible to feed society and its armies after cutting off global trade and sending away farm hands to fight.

Of these three predictions, Bloch was wrong that armies of that size would be impossible to organize, and he underestimated the advances in agriculture that just barely kept the fighting viable. What he was not wrong about was the deadliness of the armaments, which had every effect he predicted. He felt that the European powers might try a general war once, and that it would then be so disastrous that they would never try it again:

I maintain that war has become impossible alike from a military, economic, and political point of view. The very development that has taken place in the mechanism of war has rendered war an impracticable operation. The dimensions of modern armaments and the organization of society have rendered its prosecution an economic impossibility, and, finally, if any attempt were made to demonstrate the inaccuracy of my assertions by putting the matter to a test on a great scale, we should find the inevitable result in a catastrophe which would destroy all existing political organizations. Thus, the great war cannot be made and any attempt to make it would result in suicide. Such I believe, is the simple demonstrable fact.

But no, the European powers fought this war. Then after their regimes collapsed the new ones chose to fight it a second time.

Suppose you had told Bloch that Europe would fight his impossible war: that it would organize the armies and feed the troops, that the armaments would be exactly as deadly as he said they would be, that most of the political consequences he imagined would follow, and that barely two decades later it would choose to fight that war again. I think he would have been quite shocked. But that is in fact what happened.

X-Risk During The Interwar Years

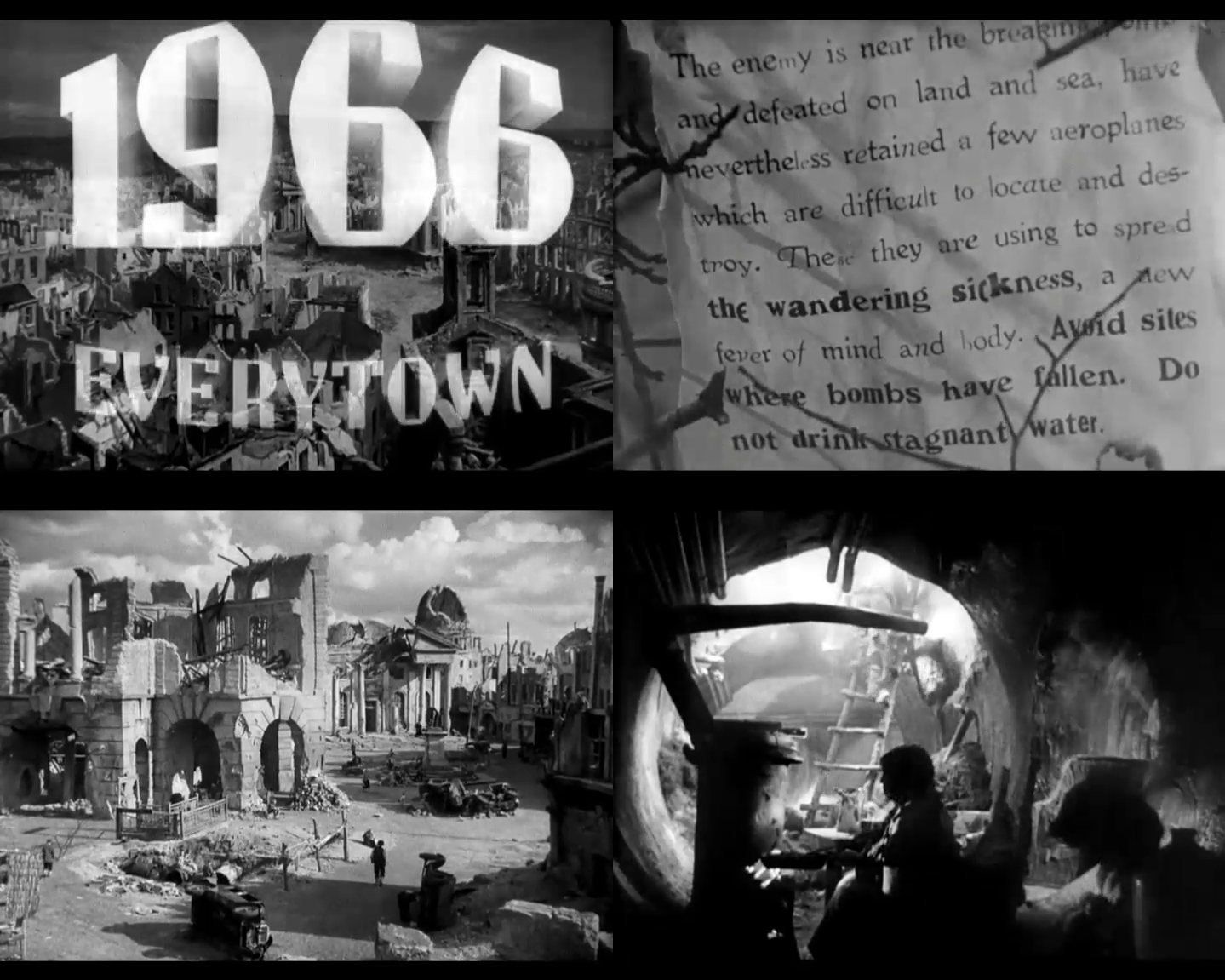

In the interwar years, the seeds of what we might now call the rationalist community were planted by Alfred Korzybski, a Polish nobleman who was 35 when the war started. After WW1 ended, European civilization spent the next two decades soul-searching. Korzybski participated in the slaughter and sustained lifelong injuries, and his experiences drove him to search, with the rest of Europe, for some kind of answer to what the war meant. Like many other thinkers, he was chiefly concerned with how to prevent a second world war. Where existential risk had once been a fringe subject for contrarian, speculative thinkers, it was now very much a going concern. As Dan Carlin points out, it was the first time men became afraid of new weapons not because their enemies might have them, but because they feared for the whole species. This fear is depicted in the 1936 film Things To Come, in which, by the far-off year of 1966, a combination of gas attacks, air bombing, and biological warfare has returned humanity to medieval standards of living.

Korzybski concluded that the ultimate cause of the first world war was a disparity in the rate of progress between physical and social science. He characterized man as a ‘time-binder’, set apart from animal life by the ability to transmit information between generations: plants bind energy, animals bind space, humans bind time. He felt people were learning optimally from nature, as the exponential progress of physical science showed, but that their institutions learned at a glacial pace. Wars and insurrections, then, are caused by people needing to refresh their institutions to keep pace with physical science:

Consider now any two matters of great importance for human weal—jurisprudence for example, and natural science—or any other two major concerns of humanity. It is as plain as the noon-day sun that, if progress in one of the matters advances according to the law of a geometric progression and the other in accordance with a law of an arithmetical progression, progress in the former matter will very quickly and ever more and more rapidly outstrip progress in the latter, so that, if the two interests involved be interdependent (as they always are), a strain is gradually produced in human affairs, social equilibrium is at length destroyed; there follows a period of readjustment by means of violence and force.
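Korzybski’s argument is at bottom a claim about sequences. However small a geometric sequence starts, it eventually overtakes any arithmetic one, and after that the gap between them widens at every step. The Python sketch below illustrates this with made-up numbers: a ‘science’ series that starts at 1 and grows 1.5× per generation, and an ‘institutions’ series that starts at 10 and adds 2 per generation. These values are assumptions chosen for illustration, not figures Korzybski gave.

```python
def geometric(start: float, ratio: float, n: int) -> list[float]:
    """First n terms of start, start*ratio, start*ratio**2, ..."""
    return [start * ratio**k for k in range(n)]


def arithmetic(start: float, step: float, n: int) -> list[float]:
    """First n terms of start, start+step, start+2*step, ..."""
    return [start + step * k for k in range(n)]


def first_overtake(fast: list[float], slow: list[float]):
    """Index of the first term where `fast` strictly exceeds `slow`, or None."""
    for k, (f, s) in enumerate(zip(fast, slow)):
        if f > s:
            return k
    return None


# Hypothetical illustration: 'science' compounds, 'institutions' only accumulate.
science = geometric(1.0, 1.5, 16)
institutions = arithmetic(10.0, 2.0, 16)
overtake = first_overtake(science, institutions)

# The 'strain' Korzybski describes: the widening gap between the two series.
strain = [s - i for s, i in zip(science, institutions)]
print(overtake)  # 9
```

With these particular numbers the geometric series trails for nine generations and then pulls away permanently. Changing the starting values shifts *when* that happens, but not *whether* it happens.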

But war had become impossible! So what was man to do?

Korzybski’s hope was that mathematics and engineering methods could make social progress as efficient as scientific progress without resorting to war. He published his first book on this subject, The Manhood Of Humanity, in 1921. He then spent the next decade researching and writing a second book, setting out his ideas on how humanity was to bring about this change in the efficiency of its social progress.

However, if war was now impossible, insurrection was not. Even as thinkers like Korzybski (and there were quite a few of them) did their best to rapidly produce some kind of panacea for the ills of the 20th century, the conditions for a second world war arranged themselves at lightning pace. Russia’s monarchy had fallen, and power had then been seized in a coup by communist fanatics who had their sights on converting the rest of Europe by insurrection or conquest. This international socialist movement became a real danger to Western states; sympathy towards it prevailed among progressives in Europe and America alike. In response, dissidents began constructing and adopting harsh, antihumanist forms of right-wing progressivism every bit as maladjusted as that of the Bolsheviks. In the same year Norman Angell published The Great Illusion, his WW1 impossibility thesis, F.T. Marinetti wrote his Futurist Manifesto decrying the sentimentality of Italian culture and calling for omnipresent war:

Except in struggle, there is no more beauty. No work without an aggressive character can be a masterpiece. Poetry must be conceived as a violent attack on unknown forces, to reduce and prostrate them before man.

…

We will glorify war—the world’s only hygiene—militarism, patriotism, the destructive gesture of freedom-bringers, beautiful ideas worth dying for, and scorn for woman.

We will destroy the museums, libraries, academies of every kind, will fight moralism, feminism, every opportunistic or utilitarian cowardice.

The socialist and futurist visions clashed violently around the world (and it is this legacy of the word ‘futurist’ that inspired the alternative term ‘futurology’). The right-wing dissidents coalesced around the person of Mussolini, who combined his homeland’s futurism and traditionalist machismo aesthetics into a totalitarian, syndicalist vision he called ‘fascism’. The King of Italy was eventually forced to let Mussolini run the country. A German insurrectionist named Adolf Hitler received a lenient sentence from sympathetic judges after a failed coup, and went on to acquire dictatorial powers through a mass movement. He faced strong opposition from Germany’s domestic communist party, but won thanks to elite sympathies. In Spain the socialists and fascists came to blows in a vicious civil war that ultimately put the fascist dictator Franco in power. There must have been bitter irony for Korzybski, a Polish nationalist, in the fact that it was Hitler’s invasion of his native Poland that set off the second world war he had spent so much time trying to prevent.

World War 2 and the Invention of Atomic Weapons

A second world war paved the way for the most significant event in the history of existential risk: the invention of atomic weaponry. At the war’s outbreak approximately one or two hundred physicists had detailed knowledge of nuclear fission and its potential for a ‘chain reaction’ releasing enormous amounts of energy. Some of these physicists began preparing to create an atomic bomb based on the process. In Germany, Heisenberg and other theorists spent their time jockeying over status and cutting uranium samples into cubes. They believed themselves in possession of secret knowledge opaque to their dumber colleagues in Britain, the United States, and Russia. In reality the British secret service was well aware of their activities, and prepared to assassinate them if they seemed too close to an atom bomb.

Where the Germans were timid and lazy in their investigations of atomic power, the British and Americans were bold and relentless. In Britain those physicists who understood the danger were terrified that Hitler might attain an atomic bomb. Two of them, Otto Frisch and Rudolf Peierls, wrote a memorandum outlining the basic theory of an atomic weapon, ultimately initiating the American Manhattan Project. Where Heisenberg felt reluctant to ask for 350,000 Reichsmarks (around US$140,000 at the time), the American Manhattan Project spent exorbitant millions in pursuit of the bomb. When the most promising process for getting pure samples of the elusive U-235 turned out to be gaseous diffusion, which produced only minute quantities, facilities the size of entire football fields were built to produce the necessary amounts.

The atom bomb was not just technologically the most significant event in the history of existential risk, but also conceptually. It was while inventing the bomb that the scientific community, and by extension the larger bulk of humanity, came face to face with our destiny as a species: the full implications of harnessing greater and greater amounts of energy. In the course of building the bomb, American physicists invented new technologies and mathematics to drive the project forward. The polymath John von Neumann invented both the first stored-program electronic computer and the statistical methods necessary to model key parts of the bomb’s operation. During the process he had night terrors about the ultimate consequences of his actions, and after one in early 1945 he supposedly told his wife:

What we are creating now is a monster whose influence is going to change history, provided there is any history left. Yet it would be impossible not to see it through, not only for the military reasons, but it would also be unethical from the point of view of the scientists not to do what they know is feasible, no matter what terrible consequences it may have. And this is only the beginning!

The energy source which is now being made available will make scientists the most hated and most wanted citizens in any country. The world could be conquered, but this nation of puritans will not grab its chance; we will be able to go into space way beyond the moon if only people could keep pace with what they create.

It was here that von Neumann and Korzybski converged on the shape of things to come: both thinkers independently derived the technological singularity thesis. The mathematician Stan Ulam relates von Neumann’s speculation that there is an “ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue”. Twenty-five years earlier, when Korzybski was trying to derive the nature of man, he had sketched a series of branching paths in his notebook. He noted that the amount of knowledge humanity accumulated from generation to generation seemed to depend on what was already known, implying an exponential function. Korzybski saw that this progression would build slowly over generations until it hit a critical mass.

The singularity they were discussing followed rationally from historical and current events. It is not just man’s knowledge but his energy use and availability that grew exponentially between the early 18th and late 20th centuries. Henry Adams writes in his autobiography:

The coal-output of the world, speaking roughly, doubled every ten years between 1840 and 1900, in the form of utilized power, for the ton of coal yielded three or four times as much power in 1900 as in 1840. Rapid as this rate of acceleration in volume seems, it may be tested in a thousand ways without greatly reducing it. Perhaps the ocean steamer is nearest unity and easiest to measure, for any one might hire, in 1905, for a small sum of money, the use of 30,000 steam-horse-power to cross the ocean, and by halving this figure every ten years, he got back to 234 horse-power for 1835, which was accuracy enough for his purposes.
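Adams’s back-calculation is easy to verify. Going from 1905 back to 1835 takes seven ten-year halvings, and 30,000 / 2^7 = 234.375, which he rounds to 234. Below is a minimal Python check. The helper name `halve_back` is our own and purely illustrative.

```python
def halve_back(power_hp: float, from_year: int, to_year: int, period: int = 10) -> float:
    """Halve `power_hp` once for every full `period` years between to_year and from_year.

    Integer division means a partial final period is ignored.
    """
    steps = (from_year - to_year) // period
    return power_hp / 2**steps


# Adams's figures: 30,000 steam horsepower for an ocean crossing in 1905.
estimate_1835 = halve_back(30_000, 1905, 1835)
print(estimate_1835)  # 234.375
```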

Between population growth, efficiency improvements, and actual increases in raw energy consumption, the nanotechnologist J. Storrs Hall estimates an average 7% annual increase in the amount of energy available to mankind since the invention of the Newcomen engine in 1712. Far from being unprecedented, the birth of a posthuman society that invents everything there is to invent in one sprint was the natural conclusion of recent history. What is surprising is not that these two thinkers came to the same conclusion so early, but that it took people so long to begin speculating about the radical endpoint implied by the progress they were experiencing.
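Hall’s 7% figure and Adams’s ‘doubling every ten years’ are closely related. The standard compound-growth conversion gives a doubling time of T = ln 2 / ln(1 + r), so 7% a year doubles roughly every 10.24 years. In the other direction, doubling every decade implies about 7.2% a year. The short Python sketch below makes that conversion. The rates are the ones quoted above, and the function names are illustrative.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years for a quantity growing at `annual_rate` per year (0.07 = 7%) to double."""
    return math.log(2) / math.log(1 + annual_rate)


def implied_annual_rate(doubling_years: float) -> float:
    """Annual growth rate implied by doubling once every `doubling_years` years."""
    return 2 ** (1 / doubling_years) - 1


print(round(doubling_time(0.07), 2))      # 10.24
print(round(implied_annual_rate(10), 4))  # 0.0718
```

Note that Adams was describing coal output, while Hall describes total available energy, so the agreement is a rough consistency check rather than a derivation of one from the other.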

The atom bomb itself followed from existing trends in weaponry during the war. Even before its invention, the methods of strategic bombing had become so effective that they had a 50:1 advantage over air defenses. That is, it cost 50 times less to destroy a square mile of Tokyo than it cost to build it. The first atom bombs gave a 300:1 ratio, or only 6 times what peak allied firebombing was already doing to cities. As Air Force general H.H. Arnold put it in the essay he wrote for One World Or None, even without atomic weapons civilization had already been doomed by advances in bombing. The bomb only made the conclusion overdetermined.

Now war had truly become impossible!

And when your society is a war machine that exists to organize large masses of men to fight spectacular wars, the impossibility of war means you have lost the reason for organizing, and decay will inevitably set in.

After the war ended there was great panic about the implications of atomic weapons. Many of the bomb’s inventors quickly came together to write a series of essays explaining them to the public. The resulting book was published in 1946 as One World Or None. It’s a fascinating time capsule, capturing in amber the mood and arguments among many scientifically literate people immediately following the bombing of Hiroshima and Nagasaki. They were extremely pessimistic about the possibility of human technology overcoming the problems posed by atomic weapons. To quantify the danger, H.H. Arnold expressed the bomb’s destructive power in terms of the dollars needed to destroy a square mile of Tokyo, or the advantage to the attacker over defenders. Louis Ridenour estimates that active defenses could at best stop 90% of incoming projectiles. Even if only 10% got through, however, the attacker’s advantage would still be comparable to the apocalyptic strategic bombing already accomplished with chemical explosives. This is before considering further advances in atomic weapons, which we of course know moved the question from one of civilizational collapse to one of human extinction.
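Read as simple ratios, Arnold’s and Ridenour’s figures combine as follows. The sketch assumes (our simplification, not necessarily Ridenour’s stated method) that interception divides the attacker’s cost advantage by the fraction of weapons that get through. If only 10% arrive, the attacker must launch ten times as many to do the same damage. Under that assumption, the 300:1 atomic ratio with 90% interception still leaves 30:1, the same order as the 50:1 already achieved by firebombing.

```python
def effective_ratio(cost_ratio: float, fraction_through: float) -> float:
    """Attacker's remaining cost advantage when only `fraction_through` of weapons arrive.

    Assumption: damage scales linearly with weapons that arrive, so the attacker
    must spend 1 / fraction_through times as much, shrinking the ratio by that factor.
    """
    return cost_ratio * fraction_through


# Arnold's ratios (cost to build a square mile : cost to destroy it), per the essay.
FIREBOMBING = 50.0
ATOMIC = 300.0

print(ATOMIC / FIREBOMBING)                   # 6.0
print(round(effective_ratio(ATOMIC, 0.10), 1))  # 30.0
```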

The authors of One World Or None were urgent, and told the public that national borders and the concept of ‘national security’ needed to be abandoned immediately for there to be any hope for humanity’s survival. As Harold Urey asks, what is the point of entrenching forts, putting industry underground, and protecting the army, navy, and air force from destruction by enemy atomic bombs if those military units cannot protect the citizenry of the country? E.U. Condon speculates about the security state and abolition of civil liberties that would be necessary to prevent briefcase nukes from proliferating near every location of strategic interest in the United States. Walter Lippmann advocates passionately for the establishment of a world government whose laws would apply to individual citizens, not nations. He argues that the entire world community should stand behind whatever whistleblowers arise to bring anyone trying to build atomic weapons to justice. Einstein had some optimism that world government would be achieved once people realized there was no cheaper or easier solution to the problem.

Unfortunately, an easier solution to the problem was found.

Game theory, another invention of the polymath von Neumann, promised theorists at organizations like RAND a cheap way to prevent a third world war without giving up national sovereignty. The mathematics ‘proved’ that no war would occur so long as two conditions held: starting a third world war was against everyone’s rational interest, and this was common knowledge among all parties. Unfortunately, these concepts were very much like the arguments and ideas used to say that there would never be a first world war. They also conveniently precluded building underground societies or dispersing industry across the country. In fact, according to this interpretation the best defense was a good offense, and any defensive measures simply fueled the delusion that war was still possible. The best thing to do was nothing, to ensure the maximum possible damage to all parties if a war broke out.
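The deterrence argument can be made concrete with a two-player game. The sketch below uses invented payoffs, which are pure assumptions and not RAND’s numbers. It checks which pure-strategy profiles are Nash equilibria, meaning neither side can gain by changing its own move alone. When assured retaliation makes any strike ruinous, mutual restraint is the only equilibrium. If a first strike pays off, restraint stops being an equilibrium at all.

```python
from itertools import product

STRATEGIES = ("hold", "strike")


def nash_equilibria(payoffs):
    """Return every pure-strategy profile where neither player gains by deviating alone.

    `payoffs` maps (row_move, col_move) -> (row_payoff, col_payoff).
    """
    result = []
    for row, col in product(STRATEGIES, repeat=2):
        row_pay, col_pay = payoffs[(row, col)]
        row_best = all(payoffs[(alt, col)][0] <= row_pay for alt in STRATEGIES)
        col_best = all(payoffs[(row, alt)][1] <= col_pay for alt in STRATEGIES)
        if row_best and col_best:
            result.append((row, col))
    return result


# Hypothetical payoffs under assured retaliation: any strike is ruinous for both sides.
DETERRENCE = {
    ("hold", "hold"): (0, 0),
    ("hold", "strike"): (-100, -95),
    ("strike", "hold"): (-95, -100),
    ("strike", "strike"): (-110, -110),
}

# Hypothetical variant where a first strike disarms the other side (no assured retaliation).
FIRST_STRIKE_PAYS = {
    ("hold", "hold"): (0, 0),
    ("hold", "strike"): (-100, 10),
    ("strike", "hold"): (10, -100),
    ("strike", "strike"): (-110, -110),
}

print(nash_equilibria(DETERRENCE))         # [('hold', 'hold')]
print(nash_equilibria(FIRST_STRIKE_PAYS))  # [('hold', 'strike'), ('strike', 'hold')]
```

The second table shows why the theory leans so heavily on guaranteed retaliation. Once striking first is rewarded, peace is no longer a stable outcome and each side is pushed to move first.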

One could argue that this convenient logic absolved the burgeoning ‘military-industrial complex’ of responsibility to defend civilians or find a real solution to atomic weapons. It is often taken for granted that the game theory worked, since we have gone 76 years without a third world war. We would do well to keep in mind, however, that at the onset of WW1 Europe hadn’t seen a continent-wide conflict in a century. Only after generations of relative peace, with European societies deeply disassociated from the reality of what war meant, were they able to fight Jan Bloch’s impossible conflict. The illusion of national security persists to this day, even as no nation is really secure and standing armies have become totally incapable of protecting the civilian populations they supposedly exist to serve.

Disassociation and Stagnation in the Postwar Years

After the invention of the atomic bomb, Western society becomes increasingly unmoored from the material world. It is far beyond the scope of this essay to rigorously pinpoint the exact reasons for this, but a sketch of what happened is of undeniable interest. As has hopefully become clear, the history of existential risk is mostly a history of human technology and its consequences. The increasing disassociation of the postwar years affects not just how people characterize and think about existential risk in the decades that follow, but what technologies are developed. It is not possible to fully come to grips with the rational singularity forecasted by Korzybski and von Neumann without a sense of how that singularity was thwarted in the 1960s and ’70s. Nor is it possible even to begin to understand how Eric Drexler’s ideas about nanotechnology could have had almost no physical impact after they became a New York Times bestseller in the 1980s, unless we assume either their impossibility or some kind of great stagnation thesis. The green activism and debate around limits to growth in the 1970s is both a cause and an effect of the great stagnation. Without it, the narrative thread between what comes before and what follows gets murky.

We can begin our sketch by noting that the cultural decline of Western civilization from the 1950s onwards is marked by its increasing distance from material reality. The postmodernist Baudrillard pointed out that even a system with only reality and reference to reality (which includes other references) will eventually become deranged as the symbols begin pointing only to each other rather than to any experience of the territory they claim to map. The only way to prevent this is to have some kind of grounding force that selects for reality-based ideas over symbolic rabbit holes. This descent into ‘hyperreality’ can be observed in the trajectory of popular science fiction and ‘fantasy’ lore over the course of the last century. Heinlein’s science fiction novels were derivative of literature describing real scientific and industrial ideas to a young audience. Star Trek was derivative of the pulp science fiction produced by writers like Heinlein. And the same young audience that might once have looked up to Picard now pays attention to the alien-centered morality play of Rebecca Sugar’s Steven Universe. Tolkien took philology, which studies the linguistics of real historical mythologies and oral traditions, and produced The Lord of the Rings. Gary Gygax and Dave Arneson took the fantasy of Tolkien’s generation and produced Dungeons and Dragons. And a surprising number of adults now enjoy Pendleton Ward’s Adventure Time. It’s notable that Steven Universe and Adventure Time are much more alike than any two works by Tolkien and Heinlein. In the game of simulacra everything degenerates into the same kind of mush.

One factor in the postwar stagnation is newfound consumer wealth cushioning US citizens against the immediate consequences of their actions. After WW2 most industrial societies were in ruins and forced to rebuild, leaving America with strong net exports. In spite of this, the decade and a half that followed the invention of the atomic bomb was a period of malaise in the United States. The 1950s are often remembered as the idyllic golden period of US society, but this is largely nostalgia. In reality the 1950s were a transitional prelude to the widespread gender confusion and youth violence of the baby boomers in the ’60s and ’70s. The combination of affordable cars and an interstate highway system allowed young Americans to enter previously secluded high-trust communities and destroy them with no repercussions:

The “heroes” of On The Road consider themselves ill-done by and beaten-down. But they are people who can go anywhere they want for free, get a job any time they want, hook up with any girl in the country, and be so clueless about the world that they’re pretty sure being a 1950s black person is a laugh a minute. On The Road seems to be a picture of a high-trust society. Drivers assume hitchhikers are trustworthy and will take them anywhere. Women assume men are trustworthy and will accept any promise. Employers assume workers are trustworthy and don’t bother with background checks. It’s pretty neat.

But On The Road is, most importantly, a picture of a high-trust society collapsing. And it’s collapsing precisely because the book’s protagonists are going around defecting against everyone they meet at a hundred ten miles an hour.

We know that the 1950s weren’t the golden age because the generation that grew up in that decade produced the stagnant ’70s. By contrast, the atomic bomb and most of the technologies that made broad prosperity possible through the rest of the 20th century were products of the extremely generative American industrial culture of the 1920s and ’30s. At the onset of the Cold War that culture is increasingly demonized and othered in American rhetoric. When it is found that American students are unable to compete with the sterling rigor of Soviet education, America shifts focus to lionizing creativity and imagination. The godless materialism of the 1920s is rescandalized as an intrinsically socialist, subversive idea. And the industrial utopianism that inspired many Americans like Frank Oppenheimer to try to change society through the power of science was interwoven with the communist vision and therefore taboo.

The most overwhelming aspect of One World Or None is its physical, highly intuitive account of the atomic bomb in terms of quantified material factors. The bomb is characterized not as a magic doomsday device, but as a bomb with a destructive capacity comparable both to other bombs and to other methods of destruction, construction, etc. It is precisely this physical intuition that Korzybski appeals to in explaining why he thinks his age might succeed in solving the nature of man where previous generations had not. The reader need only compare One World Or None to the later X-Risk classic Engines of Creation by Eric Drexler, which spends pages suggesting the possibilities of atomic-scale manufacturing without ever getting into the concrete details of how he expects these machines to compare to traditional industrial processes.

As the Soviet acquisition of atomic arms made war between the United States and the USSR truly impossible, military focus also became increasingly disassociated from reality. Operations shifted to proxy wars fought between the two powers. The first of these wars, in Korea, was so much like a real conflict that it allowed the US to keep up its illusions about the viability of war for a little longer. The next adventure, in Vietnam, removed all romance from the equation, pitting the well-oiled US military against irregular jungle fighters who lured that foreign war machine straight into the mud. Getting stuck in that quagmire exposed US troops to lethal and traumatizing ambushes at the same time it exposed domestic society to insurrection and upheaval. Young men were incensed at the waste, brutality, and dishonor of this conflict. Their fathers had gotten to unseat the genocidal tyrant Hitler, but they were being asked to kill women and children in a faraway place of no clear importance.

Exponential Extraction and Externalities: The Limits To Growth

Both the focus on exponential growth and the disassociation from material factors are present in The Limits To Growth, a book that characterizes the 70s X-Risk zeitgeist. The Limits To Growth is a short 1972 book about human resource consumption. It was commissioned by the Club of Rome and written by a team of MIT researchers using ‘computer simulations’ not to predict the future, but to infer its shape. The Limits To Growth makes a basic argument about the trajectory of human civilization. Its authors state that because humanity is growing in population and using finite earthly resources at an exponential rate, the ‘natural’ development curve will be to consume almost all nonrenewable resources and then permanently crash down to an earlier level of development. At the same time that humanity exponentially exhausts its resources, it is also running into the limits of the environment’s capacity to absorb the byproducts of human industry. Rising levels of mercury in fish and lead in the polar ice point towards massive impacts of human industry on the surrounding environment. Carbon emissions from industry seem to be mostly absorbed by the ocean as well. In their simulations the authors find that pollution is an even bigger threat than resource consumption. It both cuts off the flow of food and directly reduces the human lifespan, retarding the growth of civilization. However, because their book is not a prediction, the authors completely decline to give any concrete figures about when pollution will reach a tipping point, noting that such questions seem impossible to answer even in principle while also admitting that tipping points have been observed from acute pollution in a local setting. They justify this hesitation explicitly:

The difference between the various degrees of “prediction” might be best illustrated by a simple example. If you throw a ball straight up into the air, you can predict with certainty what its general behavior will be. It will rise with decreasing velocity, then reverse direction and fall down with increasing velocity until it hits the ground. You know that it will not continue rising forever, nor begin to orbit the earth, nor loop three times before landing. It is this sort of elemental understanding of behavior modes that we are seeking with the present world model. If one wanted to predict exactly how high a thrown ball would rise or exactly where and when it would hit the ground, it would be necessary to make a detailed calculation based on precise information about the ball, the altitude, the wind, and the force of the initial throw. Similarly, if we wanted to predict the size of the earth’s population in 1993 within a few percent, we would need a very much more complicated model than the one described here. We would also need information about the world system more precise and comprehensive than is currently available.
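The quoted distinction between a behavior mode and a precise prediction can be made concrete with a short Python sketch. It assumes an idealized vertical throw from ground level with no air resistance or wind, which are exactly the details the passage says a precise prediction would need; the function name `trajectory_summary` is illustrative only, not anything from the book.

```python
import math

G = 9.81  # m/s^2, standard gravity near the Earth's surface

def trajectory_summary(v0: float, g: float = G) -> dict:
    """Peak height and flight time for a vertical throw from ground level.

    Idealized: no drag, no wind, constant gravity (all assumptions).
    """
    if v0 <= 0:
        raise ValueError("launch speed must be positive")
    t_peak = v0 / g             # velocity reaches zero here
    h_peak = v0 ** 2 / (2 * g)  # maximum height
    t_land = 2 * t_peak         # symmetric flight without drag
    return {"t_peak": t_peak, "h_peak": h_peak, "t_land": t_land}

if __name__ == "__main__":
    for v0 in (5.0, 10.0, 20.0):
        s = trajectory_summary(v0)
        print(f"v0={v0:5.1f} m/s  peak={s['h_peak']:6.2f} m  "
              f"lands after {s['t_land']:5.2f} s")
```

Every positive launch speed produces the same rise-then-fall mode; only the numbers change, and even those stop being trustworthy once drag and wind are added back.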

The Limits To Growth could just as easily be titled “counterintuitive properties of the exponential function”. It discusses specific resources like chromium only as examples of general categories, which could be omitted from the text without losing any substance. A reader who wants a specific sense of what I mean by disassociation need only read one of the essays in One World Or None and then compare it to a subject discussed in The Limits To Growth. They will quickly realize that where the physics-trained essayists in One World Or None are capable of rapidly producing thoroughly justified answers to speculative subjects, the authors of The Limits To Growth can’t manage half the clarity of a single essay in ten times the space.
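One of those counterintuitive properties fits in a few lines. The book contrasts a ‘static index’ (years a reserve lasts at today’s consumption rate) with an ‘exponential index’ (years it lasts if consumption keeps growing). The sketch below assumes continuous exponential growth in consumption, which gives T = ln(1 + r·s) / r for static index s and growth rate r; the 2% growth rate and reserve sizes are hypothetical, not the book’s data.

```python
import math

def exponential_index(static_years: float, growth_rate: float) -> float:
    """Years until a reserve runs out when consumption grows exponentially.

    static_years: reserve / current annual consumption (the 'static index').
    growth_rate:  continuous annual growth rate of consumption, e.g. 0.02.
    Integrating u0 * exp(r * t) from 0 to T and setting it equal to the
    reserve R gives T = ln(1 + r * s) / r, where s = R / u0.
    """
    if growth_rate == 0:
        return static_years  # flat consumption: the static index is exact
    return math.log1p(growth_rate * static_years) / growth_rate

if __name__ == "__main__":
    growth = 0.02  # hypothetical 2% annual growth in consumption
    for static in (100, 500, 1000):  # hypothetical reserves, in years of current use
        years = exponential_index(static, growth)
        print(f"static index {static:5d} y -> exponential index {years:6.1f} y")
```

Under these assumptions, multiplying the reserve tenfold (from 100 to 1,000 years of current use) extends its life by less than a factor of three, which is the kind of result the book spends most of its pages on.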

The book is very much like Korzybski’s Manhood Of Humanity in that it deals with the nature of man as an exponential function. But where Korzybski focuses on the disparity between the rates of progress in social and physical science, the Club of Rome focuses on the disparity between exponential growth and a finite environment. Because it was published 50 years after Manhood of Humanity, The Limits To Growth can take advantage of cybernetic concepts like positive and negative feedback loops, which it uses to frame its argument. Unfortunately these mostly result in borderline incomprehensible graphics that do more to obfuscate than enlighten. None of these flaws prevented it from receiving widespread discussion, or its essential conclusions from becoming the dominant outlook of green activists.
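For readers who find the feedback diagrams opaque, the basic ‘overshoot and collapse’ shape can be reproduced with a toy stock-and-flow model. This is a hypothetical sketch, not the World3 model the book actually used: a population grows in proportion to itself (the positive loop), draws on a renewable flow first and a finite nonrenewable stock second, and sees its growth rate turn negative in proportion to unmet demand (the negative loop). Every parameter value and the name `simulate_overshoot` are arbitrary choices for illustration.

```python
def simulate_overshoot(years=300, pop0=1.0, growth=0.03, decline=0.05,
                       stock0=500.0, renewable=2.0, use_per_capita=1.0):
    """Toy stock-and-flow model; returns a list of (year, population, stock)."""
    pop, stock = pop0, stock0
    history = []
    for year in range(years):
        demand = pop * use_per_capita
        supplied = min(demand, stock + renewable)  # renewable flow is used first
        drawn = max(0.0, supplied - renewable)     # only the excess draws down the stock
        stock = max(0.0, stock - drawn)
        adequacy = supplied / demand               # 1.0 means demand fully met
        # Positive loop: growth proportional to the current population.
        # Negative loop: unmet demand pushes the growth rate below zero.
        rate = growth * adequacy - decline * (1.0 - adequacy)
        pop *= 1.0 + rate
        history.append((year, pop, stock))
    return history

if __name__ == "__main__":
    hist = simulate_overshoot()
    peak_year, peak_pop, _ = max(hist, key=lambda row: row[1])
    print(f"peak population {peak_pop:.1f} in year {peak_year}")
    print(f"final population {hist[-1][1]:.1f}, remaining stock {hist[-1][2]:.1f}")
```

With the default parameters the population peaks around the year the stock runs out and then settles at a much lower level that the renewable flow alone can sustain. Changing the numbers shifts the timing but not the qualitative mode, which is the kind of claim the book says it is making.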

In the 60s and 70s, apocalypses of population growth and energy-resource use went hand in hand with nuclear apocalypse. Multiple authors in One World Or None recommend that nuclear power be delayed until world government or other means of control are established, because atomic power plants are the natural fuel source for atomic bombs. Anti-nuclear activists took this recommendation to heart and vigorously protested the construction of nuclear plants, ensuring that humanity would continue to use fossil fuels well into the 21st century. Nuclear power illustrates the catch-22 in the recommendations of authors like the Club of Rome: the measures taken to curb the growth that might kill us exacerbate issues like climate change that also might kill us.

It is important to remember that the core problem of the atomic bomb is humanity harnessing a level of energy with which it can kill itself. So anti-nuclear activism isn’t really about nuclear power, but about all forms of energy. Space colonization also implies gaining access to suicidal amounts of energy, in the form of room to accelerate objects to deadly speeds so that they crash into Earth with energies far greater than an atomic bomb. This means a green society must ultimately cripple or retard basically all forms of industrial progress and expansion. While the first anti-nuclear activists might be characterized as naive, their successors seem to take a gleeful nihilism in sabotaging society. Because all forms of production need to use energy, control over which uses of energy are considered wasteful is dictatorial control over all processes of production. J. Storrs Hall notes in Where Is My Flying Car? that these same activists were horrified by the prospect of cold fusion power, which has nothing to do with atom bombs.

Hall notes three sources of growth in the energy available to society: fuel, population growth, and efficiency improvements. Of these, growth in fuel use and population both flattened, avoiding the industrial singularity that had been building since the Newcomen Engine. Humans can only learn at a relatively slow pace, so the progress in knowledge noted by Korzybski relied on a rapidly increasing population. Production of goods and services also requires people to perform it, so industrial progress was retarded by slowing population growth and further slowed by flat energy use. Hall also notes that the efficiency improvements which green activists lionize tend to increase demand, which leads to more resource use. Of the roughly 7% annual growth in available energy that he describes, he attributes 3% to population growth, 2% to fuel consumption, and 2% to efficiency improvements. World GDP growth in the last 50 years tends to be in the 2-4% range.
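Hall’s figures can be checked with a little arithmetic. The sketch below assumes the three components act as independent multiplicative factors, so compounding 3%, 2% and 2% gives slightly more than their simple sum, and it converts annual rates into doubling times to show why the gap between roughly 7% energy growth and 2-4% GDP growth matters: at 7% a quantity doubles in about a decade, while at 3% it takes more than two decades. The dictionary keys are labels for this example only.

```python
import math

# Annual growth components as attributed to Hall in the paragraph above.
COMPONENTS = {"population": 0.03, "fuel": 0.02, "efficiency": 0.02}

def combined_growth(rates) -> float:
    """Compound independent annual growth rates into a single annual rate."""
    factor = 1.0
    for r in rates:
        factor *= 1.0 + r
    return factor - 1.0

def doubling_time(rate: float) -> float:
    """Years for a quantity growing at `rate` per year (compounded annually) to double."""
    return math.log(2) / math.log1p(rate)

if __name__ == "__main__":
    total = combined_growth(COMPONENTS.values())
    print(f"compounded growth: {total:.2%} per year "
          f"(simple sum {sum(COMPONENTS.values()):.0%})")
    for rate in (0.02, 0.03, 0.04, 0.07):
        print(f"{rate:.0%} per year doubles in {doubling_time(rate):.1f} years")
```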

Drexler Contra Rome on Limits To Growth: Nanotechnology and Engines of Creation

Probably the most interesting response to Limits To Growth was by the scientist Eric Drexler. He noted that the microscopic scale at which cells operate implied that much more precise forms of industry were possible than those currently in use. This precise nanomachine industry would be able to recycle waste, use far fewer resources to accomplish tasks, work directly off solar energy, and reproduce itself from common materials just like existing lifeforms. Man could clean up his existing environment and then, using enhanced materials science, more easily push dirty processes of production into space. But this was only the start: the same nanotechnology could be used to produce fundamental changes in the human condition by merging machines with the human body, performing surgeries impossible with contemporary medicine like reviving cryonics patients from the dead, and even changing human nature by making every faculty of man a programmable machine.

In fact Drexler quickly became so impressed by the capabilities of his hypothetical nanobots that he realized they could easily become an existential risk unto themselves. Unlike traditional protein-based life, diamond nanomachines would be able to easily outcompete existing lifeforms in the wild. Their rate of reproduction would also be exponential; if they could construct themselves from common biomass or soil, they might consume the entire planet, a hypothetical known as the gray goo problem. Rather than rejoice that a solution to mankind’s resource problems was at hand, Drexler found himself horrified by the nightmare that civilization was sleepwalking into. Without adequate preparation disaster was sure to come, and the precursors to nanotechnology, such as genetic engineering and miniature computing devices, were already being developed.
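The arithmetic behind the gray goo worry is ordinary exponential doubling, and a short sketch shows why it is so alarming. The replicator mass and the 1,000-second doubling time below are hypothetical values chosen for illustration, and the model deliberately ignores every real constraint (raw materials, energy, waste heat) that would slow replication; only the Earth’s mass is a measured figure. The helper names are illustrative.

```python
import math

EARTH_MASS_KG = 5.97e24  # approximate mass of the Earth

# Hypothetical parameters, chosen for illustration only.
REPLICATOR_MASS_KG = 1e-15
DOUBLING_TIME_S = 1000.0

def doublings_to_reach(target_mass_kg: float, unit_mass_kg: float) -> int:
    """Doublings needed for one replicator's lineage to reach a target mass."""
    return math.ceil(math.log2(target_mass_kg / unit_mass_kg))

def time_to_reach(target_mass_kg: float, unit_mass_kg: float,
                  doubling_time_s: float) -> float:
    """Seconds of unchecked doubling to reach the target mass."""
    return doublings_to_reach(target_mass_kg, unit_mass_kg) * doubling_time_s

if __name__ == "__main__":
    n = doublings_to_reach(EARTH_MASS_KG, REPLICATOR_MASS_KG)
    hours = time_to_reach(EARTH_MASS_KG, REPLICATOR_MASS_KG, DOUBLING_TIME_S) / 3600
    print(f"{n} doublings, about {hours:.0f} hours of unchecked replication")
```

Because the answer depends on the logarithm of the mass ratio, being wrong about the replicator’s mass by a factor of a million shifts the result by only about 20 doublings, under six extra hours at this assumed rate. That insensitivity to the details is what makes exponential replication a category of risk rather than a question of engineering specifics.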

This all might seem like a fanciful notion, but Drexler was responding to a call to action from the Nobel-winning physicist Richard Feynman. In his 1959 talk There’s Plenty of Room at the Bottom, Feynman explained how the miniaturization of industry would transform society. He discussed how working on the molecular and atomic scale would pave the way for genetic engineering, artificial intelligence, atomically precise manufacturing of all products, and possibly even the elimination of disease through small surgical robots. Feynman expressed puzzlement that nobody was doing this yet, and exhorted the audience to closely investigate ways that the electron microscope might be improved. This talk seems to have been the basic blueprint not just for Drexler’s research program, but for his overall thoughts on what nanotechnology implied for the future.

After publishing his early technical work, Drexler wrote up a pop science version of his ideas in the bestselling 1986 book Engines of Creation. Most of Engines… is not actually about the technical details of nanotech, or even about nanotech at all. Rather, Engines of Creation is a guide to thinking about the consequences of technologies with exponential growth curves using the frame of evolutionary selection, then the dominant paradigm in AI. In it Drexler invents the timeline mode of analysis familiar to contemporary theorists of existential risk. In Drexler’s timeline, convergent interests from computer manufacturers, biologists, and others will create what he terms proto-assemblers that put together products at the nanoscale in a limited way. Once these are mastered they will become universal assemblers that can be programmed to put together any product that can be made out of atoms. But before that can happen certain obstacles have to be overcome; these obstacles frame the Drexler timeline.

Drexler’s expectation was that on the way to proto-assemblers we would build narrow AI tools that allow us to build smaller and smaller computer chips. By the standards of what was considered AI in the 80s, the programs currently used to design circuits in computer engineering certainly qualify. Narrow AI would form a positive feedback loop with computers, where better computers lead to better AI, which leads to better computer chips. AI would also help unlock the secrets of protein folding and genetic engineering, allowing the eventual creation of proto-assemblers. Drexler believed that even before nanotechnology was invented, AI programs would already be exploring its design space in anticipation of its possibility.

In this timeline, once narrow AI is good enough it will allow the creation of proto-assemblers and then universal assemblers. Drexler admits he’s uncertain about what it will take to create general artificial intelligence, but notes that, as an upper bound on the difficulty, we know human minds occur in the physical universe, so neuromorphic AI should work. Working off that inference, Drexler figures that narrow AI will usher in nanotech, and nanotech will then usher in AGI built from extremely miniaturized, cheap computing devices such as protein computers. Both the nanotech and the AGI present an existential risk to humanity, but since nanotech poses a threat first, 1986!Drexler focuses his narrative on that.

In order to avert catastrophe, Drexler believes that the first scientists to reach nanotech will have to develop an “active shield” that finds and destroys any rogue competing nanomachines in its sphere of influence. If successful, this would not only avert apocalypse but also end all forms of microbial disease and unwanted pests in human territory. However, it would also give the controllers of the active shield dictatorial power over the structure of matter in their territory, leading Drexler to deeply fear its first development by authoritarian societies. This fear likely leads to arms race dynamics in nanotech, reducing the likelihood that its first creators will have a significant lead time in which to develop an active shield, and also reducing the likelihood that its inventors will create it safely. Since the two superpowers have a vested interest in not witnessing this outcome, Drexler muses about the possibility of a collaboration between the Soviets and Americans on this issue.

In the end it didn’t matter, because even after Engines of Creation became a New York Times bestseller, no state seems to have seriously pursued nanotechnology. It is not entirely clear why. J. Storrs Hall writes in Where Is My Flying Car? that the money invested in American nanotech went to grifters and charlatans who did not want atomically precise manufacturing capability to exist. But even if we accept this, it simply pushes the question a step back: if states understood the thing they were trying to fund, atomically precise manufacturing as opposed to the buzzword ‘nanotech’, it seems unlikely they would allow an investment of millions of dollars to be wasted. Nor would they stop after ‘merely’ wasting hundreds of millions of dollars on their research program. The Manhattan Project was allowed to consume the equivalent of 23 billion dollars in 2007 money, and the active shield seems at least as important to develop as the atomic bomb.

Some theorists believe the answer is that nanotechnology as described by Drexler is simply not possible. Richard Smalley, a Nobel Prize-winning chemist who co-discovered buckminsterfullerene, had a well-publicized debate with Drexler in which he claimed that nanomachines are ruled out by physical principles, and that the idea of ‘atomically precise’ manufacturing is the province of computer scientists who don’t understand that atoms do not just go wherever you want to put them. There is a striking parallel between the arguments used against nanotech and the arguments used against AGI, in that both tend to imply that things we know exist shouldn’t be possible. Beyond a certain point one is forced into the position of arguing that self-replicating microscopic machines are known to exist but can only function in exactly the form we find them in nature, with no alternative approaches or substrate materials being possible. In the same way, beyond a certain point one is forced into arguing that the human brain exists and seems to have at least some material basis but cannot be replicated with a sufficient quantity of nonbiological components, or, if it can, that humans happen to occupy the peak of potential intelligence in spite of their design, resource, energy, and space limitations. Which is to say that beyond a certain point one is forced to call bullshit.

Extropians and X-Risks

The fall of the Berlin Wall in 1989 and the collapse of the Soviet Union in 1991 made the 1990s an optimistic decade in the West. Intellectuals such as Fukuyama speculated about an ‘end of history’ as liberal democracy spread across the world. This meant the intense focus on existential risk in Drexler’s work largely fell on deaf ears. He correctly predicted at the end of Engines… that as time passed his ideas would be broken up into decontextualized chunks. Cryonicists were interested because nanotech provided a plausible way to revive patients. Engineers liked having a retort to the eco-doomsayers and hippies. The burgeoning transhumanist movement saw an opportunity to radically reinvent themselves. But it seems very few people read Drexler’s book and came away with the intended conclusion that they were in mortal danger.

While a general atmosphere of 90s optimism delayed thinking on existential risk, it was the most optimistic and vivacious transhumanist thinkers who ended up advancing it in the 2000s. Perhaps the most notable conversion story is that of Eliezer Yudkowsky, a young autodidact introduced to Drexler’s work through Great Mambo Chicken and The Transhuman Condition. Great Mambo Chicken… is a book detailing how the early cryonics and L5 space colonization communities (of which Drexler was a member) became the transhumanist movement. Yudkowsky at first managed to totally miss the urgency of Drexler’s message, since it was unintuitive to him that technology, as the engine of progress, could really do harm to the human race. His newfound interest eventually landed him on the extropians mailing list. The extropians were a libertarian transhumanist group with the modest goal of (among other things) abolishing death and taxes. It was while discussing nanotechnology with these people that Yudkowsky came to the startling realization that they didn’t really seem to be taking it seriously. If the technology implied real danger, they shied away from the implication because it might be incompatible with their libertarianism. Eventually Yudkowsky forked the list and founded a new group called SL4, meant only for people who reliably take weird ideas to their logical conclusions.

The realization that nanoweapons would destroy humanity pushed Eliezer to work harder on superintelligent AI. Since nanotechnology was going to destroy everything by default, he reasoned, the best thing to do was to accelerate the invention of a superintelligent AI that could prevent humanity from destroying itself. At first he thought that since the AI would be superintelligent, it would do good, intelligent things by default. But in time he came to understand that ‘intelligence’ isn’t a human category, that humanity occupies a tiny sliver of possible minds, and that most minds are not compatible with human values. People only encounter intelligent human minds, so they think intelligent behavior means human behavior. This is a fatal mistake: there is a long history of AI researchers being surprised by the gap between what they think their instructions mean and what their programs actually do. In the year 2000 Marvin Minsky suggested that a superintelligent AI asked to solve a difficult (or outright impossible) math problem might ‘satisfy’ its creator’s goal by turning them and the rest of the solar system into a bigger computer to think with.

The relentless optimism of the 90s evaporated after the 9/11 attacks inaugurated the new millennium in 2001. It seems fitting that Nick Bostrom coined the phrase ‘existential risk’ in a 2002 paper, while across the ocean the United States was hard at work moving towards the onerous police state E.U. Condon felt would be a necessary response to briefcase nukes. In the despair-laden years that followed, optimistic transhumanist sects went extinct while the profile of existential risk grew. Extropy went from being the dominant force in transhumanism to a footnote. As a cofounder of the World Transhumanist Association, Bostrom was able to capture the wilting energy, leading the movement away from exuberant libertarianism towards a central focus on X-Risk.

At some point during those years nanotechnology and AGI traded places as the apex existential risk. Where the Drexler timeline had specified that AGI would be a consequence of nanotechnology, many thinkers such as Yudkowsky now put it the other way around: superintelligent AI would come first, and nanotechnology would just be an implementation detail of how it destroys the world. This reversal was likely a consequence of increasing globalization: Moore’s Law stubbornly kept delivering computer performance improvements while physical industry in the West became obviously stagnant. It no longer seemed plausible that anybody would build nanotechnology before AI became good enough to develop itself into a bigger threat. The Yudkowsky timeline, where fully general AI causes nanotech (and then, immediately after, the end of the world), unseated the Drexler timeline so thoroughly that the latter seems to have been totally forgotten. Drexler’s ideas are retrospectively described as though he were unaware of AI, or as though the prospect of inventing everything there is to invent in one short sprint weren’t explicitly discussed in Engines of Creation.

By contrast, Yudkowsky describes his vision of AI apocalypse in That Alien Message. His hypothetical AI approximates Solomonoff Induction, a theoretically perfect reasoning process able to infer the structure of the universe from minute quantities of information, say a video of a falling apple. While our current AI techniques such as deep learning fall far short of the performance implied by Solomonoff Induction, Yudkowsky believed at the time of writing that building general intelligence would force researchers to develop strong theoretical foundations, letting them approach the mathematical limits of inference. In his story humanity stands in for the AI, describing how we would react if we discovered a group of braindead gods with braindead alien values were simulating us in one of their computers. We easily trick them into thinking we’re dumber than we are, convince someone in the outside world to mix together the ingredients for nanotech designed for their physics, then consume them without a shred of empathy. It’s possible to quibble about how much empathy we would show our creators in this situation, but we can be fairly sure the story does not end with “and then after aeons of slowly grinding themselves up to creating an artificial mind the smoothbrains ruled the universe in plenty and peace until the end of time.”

Effective Altruism and X-Risk

During the 2010s, philosophers of existential risk began to rebrand away from their close association with transhumanism. Being joined at the hip with a niche, ‘radical’ ideology is counterproductive to their goal of getting humanity to notice it is in mortal danger. The perceived cultishness of transhumanism put up barriers to academic and institutional support for studies into X-Risk. So whether consciously or not, philosophers such as Bostrom began to divorce themselves from an explicit ‘transhumanist’ agenda. This wasn’t a change in beliefs or policy proposals so much as a change in emphasis. Humanity was still meant to conquer the stars and produce trillions upon trillions of kaleidoscopic descendants, but this project began to be framed in ‘neutral’, ‘objective’ terms such as utilitarianism and population ethics.

The first major opportunity to do this arose with the Effective Altruism movement inspired by Peter Singer. The original idea behind Effective Altruism is pretty simple: a dollar goes further in the 3rd world than in the 1st world, so if your goal is to make people’s lives better you’ll get more leverage by helping people in developing countries. This was paired with another idea called Earn To Give, where people take high-paying jobs, live like paupers, and donate the rest to charity. Between these two concepts Effective Altruism built a community of strong utilitarians with significant personal investment in finding the best ways to help others. Early Effective Altruism had a lot of overlap with Yudkowsky’s rationalist movement, a self-help version of Extropy that has an explicit X-Risk focus. This meant that X-Risk theorists could translate their ideas into the traditional moral philosophy used by Singer and get intelligent elites interested in them. Fringe philosophers like Yudkowsky were phased out of discourse in favor of more popular thinkers like Derek Parfit, whose ideas about population ethics justified a central focus on existential risk for altruists. You can spend thousands of dollars to save a 3rd world life, or put the same amount of wealth towards ensuring the eventual existence of many more future people. The resulting ‘longtermist’ altruism remains controversial in EA circles to this day.

Adopting the traditional moral philosophy of Effective Altruism had several unintended side effects on the field of existential risk. Probably the most important was that it put negative utilitarian interpretations of X-Risk into the Overton window. Negative utilitarianism is the moral position that no amount of positive experiences outweighs suffering, and therefore only the reduction of suffering is morally relevant. Nick Bostrom cofounded the World Transhumanist Association with David Pearce, a negative utilitarian philosopher who believes life is a mistake and openly admits in interviews that he wants to destroy the universe. Because he doesn’t believe destroying the universe is politically viable, Pearce settles for advocating the abolition and replacement of all suffering with positive experiences. This isn’t because David Pearce is excited about there being more positive experiences, but because the positive experiences will replace the negative.

There is a natural tension between advocating the abolition of all life and opposing human extinction, but it’s not as crazy as it might sound at first. After all, if humanity goes extinct but leaves behind even trace multicellular life, that life may eventually evolve back into sentient beings capable of suffering. In a negative utilitarian interpretation humanity must become all-powerful so it can engage in true omnicide, not just save itself from suffering. Many other high-profile negative utilitarians such as Brian Tomasik agree that it’s not politically viable to destroy all life, and see their job as harm reduction in the life that will inevitably persist past the singularity.

It is probably worth the reader’s time to reflect on the esoteric interpretation of these events. In order to garner stronger institutional support, eager immortalists were forced to align themselves more closely with the underlying ethos of our current societies: the uncompromising suicide of all human persons, without exception, and the total abolition of existence. With the COVID-19 pandemic testing all Western institutions and finding virtually all of them wanting, while at the same time confining people to their homes and subjecting them to an increasingly onerous police state, it seems predictable that the idea of committing suicide will gain enormous cachet, especially among the young. Under the weight of total social, institutional, and metaphysical failure, it will increasingly seem to people like suicide is the only way out.

But there is another way out, if we still have enough sanity left to pursue it.

It is written nowhere in That Alien Message that the humans do anything human!mean to the aliens in the universe they break out into. The error strikes me as very significant; to write that humans would be ungracious in victory from a human perspective, be cruel or even uncaring towards the aliens, would indicate that the author’s fear of AI came from a belief that everybody has to be uncaring and humans have to be uncaring too, which is very much not what I believe.

(I do expect that the humans stopped the aliens from eating babies; and that the aliens were very sad about that, and that it was not what they’d have chosen for themselves.)

My favorite science communication of nanotech is A Capella Science’s: https://youtu.be/ObvxPSQNMGc

I actually gave a talk loosely inspired by that video, about how (as an STM guy) I found Drexler’s designs unrealistic, and that nanotechnology for the foreseeable future would look like those molecular ratchets driven by entropy gradients in warm, wet conditions rather than atomic manufacturing where atoms are chauffeured down an energy gradient in a vacuum to their spot in the final design.

Many thanks for an excellent overview. But here’s a question. Does an ethic of negative utilitarianism or classical utilitarianism pose a bigger long-term risk to civilisation?

Naively, the answer is obvious. If granted the opportunity, NUs would e.g. initiate a vacuum phase transition, program seed AI with a NU utility function, and do anything humanly possible to bring life and suffering to an end. By contrast, classical utilitarians worry about x-risk and advocate Longtermism (cf. https://www.hedweb.com/quora/2015.html#longtermism).

However, I think the answer is more complicated. Negative utilitarians (like me) advocate creating a world based entirely on gradients of genetically programmed well-being. In my view, phasing out the biology of mental and physical pain in favour of a new motivational architecture is the most realistic way to prevent suffering in our forward light-cone. By contrast, classical utilitarians are committed, ultimately, to some kind of apocalyptic “utilitronium shockwave” – an all-consuming cosmic orgasm. Classical utilitarianism says we must maximize the cosmic abundance of pure bliss. Negative utilitarians can uphold complex life and civilisation.

This is news to me; I thought NUs were advocating for the gradients of wellbeing thing only as a compromise; if they didn’t have to compromise they’d just delete all life. And if we allow for compromises then CUs won’t be killing off everyone in a utilitronium shockwave either. IMO both NUs and CUs are crazy.

Secular Buddhists like NUs seek to minimise and ideally get rid of all experience below hedonic zero. So does any policy option cause you even the faintest hint of disappointment? Well, other things being equal, that policy option isn’t NU. May all your dreams come true!

Anyhow, I hadn’t intended here to mount a defence of NU ethics—just counter the poster JDP’s implication that NU is necessarily more of an x-risk than CU.

Do they also seek to create and sustain a diverse variety of experiences above hedonic zero?

Would the prospect of being unable to enjoy a rich diversity of joyful experiences sadden you? If so, then (other things being equal) any policy to promote monotonous pleasure is anti-NU.

It wasn’t a rhetorical question; I really wanted (and still want) to know your answer.

(My answer to your question is yes, fwiw)

Thanks for clarifying. NU certainly sounds a rather bleak ethic. But NUs want us all to have fabulously rich, wonderful, joyful lives—just not at the price of anyone else’s suffering. NUs would “walk away from Omelas”. Reading JDP’s post, one might be forgiven for thinking that the biggest x-risk was from NUs. However, later this century and beyond, if (1) “omnicide” is technically feasible, and if (2) suffering persists, then there are intelligent agents who would bring the world to an end to get rid of it. You would end the world too rather than undergo some kinds of suffering. By contrast, genetically engineering a world without suffering, just fanatical life-lovers, will be safer for the future of sentience—even if you think the biggest threat to humanity comes from rogue AGI/paperclip-maximizers.

Thanks for answering. FWIW I’m totally in favor of genetically engineering a world without suffering, in case that wasn’t clear. Suffering is bad.

Quantitatively, given a choice between a tiny amount of suffering X + everyone and everything else being great, or everyone dying, NUs would choose omnicide no matter how small X is? Or is there an amount of suffering X such that NUs would accept it as the unfortunate price to pay rather than “walk away”? (“Walk away” being a euphemism for “kill everyone”? In the Omelas story, walking away doesn’t actually help prevent any suffering. Working to destroy Omelas would, at least in the long run, depending on how painless the destruction was.)

A separate but related question: What if we also make it so that X doesn’t happen for sure, but rather happens with some probability. How low does that probability have to be before NUs would take the risk, instead of choosing omnicide? Is any probability too low?

It’s good to know we agree on genetically phasing out the biology of suffering!

Now for your thought-experiments.

To avoid status quo bias, imagine you are offered the chance to create a type-identical duplicate, New Omelas: again a blissful city of vast delights dependent on the torment of a single child. Would you accept or decline? As an NU, I’d say “no”, even though the child’s suffering is “trivial” compared to the immensity of pleasure to be gained. Likewise, I’d painlessly retire the original Omelas too. Needless to say, our existing world is a long way from Omelas. Indeed, if we include nonhuman animals, then our world may contain more suffering than happiness. Most nonhuman animals in Nature starve to death at an early age; and factory-farmed nonhumans suffer chronic distress. Maybe the CU should press a notional OFF button and retire life too.

You pose an interesting hypothetical that I’d never previously considered. If I could be 100% certain that NU is ethically correct, then the slightest risk of even trivial amounts of suffering is too high. However, prudence dictates epistemic humility. So I’d need to think some more before answering.

Back in the real world, I believe (on consequentialist NU grounds) that it’s best to enshrine in law the sanctity of human and nonhuman animal life. And (like you) I look forward to the day when we can get rid of suffering—and maybe forget NU ever existed.

Thanks for the clarification!