Blink: The Power of Thinking Without Thinking (book review)

Summary

Blink is a book about unconscious emotional judgements, and about how they aren’t always a bad thing. In certain circumstances people subconsciously figure something out, and change their behavior based on it, long before they become consciously aware of it. People here talk all the time about the need to de-bias themselves and think things through logically, which is a commendable goal, but acting on biased hunches is much faster, and sometimes the biases lead to more accurate judgements.

The book is about when unconscious emotional judgements should be listened to and when they shouldn’t, and about how to get better results from them.

When Emotional Judgements Are Good

Feelings of Unease

Imagine that I ask you to play a simple gambling game. In front of you are four decks of cards: two red and two blue. Each card either wins you money or costs you money, and your task is to turn over 100 cards, one at a time, and maximize your winnings.

The experimenters measured the activity of the sweat glands in the players’ palms. When someone got a big penalty card from one of the decks, their sweating increased, and their behavior started to change as well. They started taking more cards from the decks of the other color, adjusting to the data without keeping track of exact results.

The decks were not quite equal. The red decks had much higher penalties and only slightly higher rewards than the blue decks. Around the 50th card, the gamblers started saying they had a hunch (a correct one) that the blue decks were better, and by the 80th card they could explain exactly what the difference was. But when did they start sweating noticeably more when turning over red cards than blue ones?

The tenth. They developed an emotional bias, usually in the right direction, in a fifth of the time they needed to notice they had a hunch. This experiment is called the “Bechara gambling task,” and it has prompted many follow-up studies.

(Replication studies show a weaker effect: subjects report a hunch at about the same time as the sweating and behavior change begin, but this still happens well before they can explain why.)
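To make the deck setup concrete, here is a rough Python sketch. The payoffs are hypothetical and are not the numbers from Bechara’s experiment. They were picked only to fit the description above: red pays 60 per card but has a 1,000 penalty once every ten cards, and blue pays 50 per card with a 250 penalty once every ten cards. The second part swaps in a simple “delta rule” learner as a toy model of adjusting to data without tracking exact results. It keeps one running number per color instead of an exact tally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deck:
    """A hypothetical deck: a fixed reward on every card, plus one penalty per cycle."""
    reward: float
    penalty: float
    cycle: int  # one penalty card in every `cycle` cards

    def expected_value(self) -> float:
        # Average net payoff per card over a full cycle.
        return self.reward - self.penalty / self.cycle

    def outcomes(self, n_cards: int) -> list[float]:
        # Deterministic schedule: the last card of each cycle carries the penalty.
        return [
            self.reward - (self.penalty if (i + 1) % self.cycle == 0 else 0.0)
            for i in range(n_cards)
        ]


def running_estimate(outcomes: list[float], rate: float = 0.05) -> float:
    """Delta-rule estimate: nudge one number toward each new outcome
    instead of keeping an exact tally of wins and losses."""
    estimate = 0.0
    for x in outcomes:
        estimate += rate * (x - estimate)
    return estimate


red = Deck(reward=60, penalty=1000, cycle=10)
blue = Deck(reward=50, penalty=250, cycle=10)

print(red.expected_value(), blue.expected_value())  # -40.0 25.0
print(running_estimate(red.outcomes(30)), running_estimate(blue.outcomes(30)))
```

With these made-up numbers, the red decks lose 40 per card on average and the blue decks gain 25. After 30 cards, the running estimate for red is negative and the one for blue is positive. Nothing here models the sweat response. It only shows why a vague “red feels bad” signal points in the right direction.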

Another example Gladwell gives in the first chapter is a marble statue: a very rare, 2,600-year-old kouros, almost perfectly preserved, matching the style of similar statues, with an asking price of just under $10 million. Examination with a microscope and other instruments showed that the marble came from a Greek quarry and was covered in a thin layer of calcite, which forms naturally over hundreds of years. This supported the statue’s claim to authenticity. The Getty Museum was convinced and bought it.

There was just one problem: expert archaeologists and historians kept saying it didn’t look right. Federico Zeri, who served on the Getty’s board of trustees, stared at the sculpture’s fingernails and said they seemed wrong somehow. Evelyn Harrison, one of the world’s foremost experts on Greek sculpture, also had a bad feeling about it. Thomas Hoving said the statue seemed “fresh,” which is not how an ancient statue should strike you, and advised the museum to try to get its money back. Now worried, the Getty shipped the statue to Athens and invited the country’s most senior sculpture experts. They almost unanimously said it was wrong, but couldn’t point to specific details. Some archaeologists said nothing that had come out of the ground would have that color.

Further investigation eventually turned up strong evidence that the statue was a forgery after all. (The Getty has a web page about it.) Within a few seconds, and without knowing why, the experts picked up enough evidence to be confident that the museum was wrong, even though the scientific tests had been fooled.

Humans don’t make biased decisions just because they’re stupid. Letting unconscious emotional judgements drive decisions was an extremely useful tool on the savanna. A slight edge in guessing the right action was enough to outcompete rivals in the long run. If a village elder said a potential campsite seemed wrong somehow, it was a good idea to move on, even if the elder couldn’t give a specific, legible reason. The book also mentions a firefighter who was called to put out flames in a kitchen. He noticed that something felt off and ordered his crew outside just before the floor collapsed into a fire burning in the basement. Stopping to think everything through logically would have gotten them killed.

Thin-slicing to make predictions

You don’t need a lot of data about someone to learn important things about them. Just walking into someone’s house and looking around would probably tell you a lot about their personality.

Blink, however, goes much further than this. John Gottman claims that from a 15-minute video of newlyweds discussing any issue they disagree about, he can predict with about 90% accuracy whether they will divorce within 15 years. Follow-up studies suggest that just 3 minutes allows almost as good a prediction. The predictions rely on spotting certain behavior patterns, such as contempt. Gottman has published many studies, and it is unclear which ones the book draws on.

Still, the effect seems suspiciously strong for observing just one conversation. “Gottman has found, in fact, that the presence of contempt in a marriage can even predict such things as how many colds a husband or a wife gets; in other words, having someone you love express contempt toward you is so stressful that it begins to affect the functioning of your immune system.” Blink was written before the replication crisis was widely recognized, and this claim seems really dubious. There don’t seem to be any replications by researchers other than Gottman, so I’d be careful with this one.

The other example the book gives seems much stronger, and it is very useful for doctors. US doctors are often hit with expensive malpractice lawsuits, but there is in fact one small change that makes these lawsuits much less likely. Medical researcher Wendy Levinson recorded hundreds of conversations between a group of experienced surgeons and their patients. Half of the surgeons had never been sued, and the other half had been sued at least twice.

The first clear difference was time. Surgeons who had never been sued spent an average of 18.3 minutes with each patient, while those who had been sued at least twice spent an average of 15 minutes. The extra time was mostly spent on orienting comments such as “First I’ll examine you, and then we will talk the problem over” or “Go on, tell me more about that”. Psychologist Nalini Ambady then took thin-slicing further. For each surgeon, she selected two 10-second clips of the surgeon talking and removed the high-frequency sounds. This preserves intonation, pitch, and rhythm, but it makes individual words very hard to recognize. Judges rated each clip on dominance and a few other qualities, such as warmth and anxiousness. The dominance ratings alone were enough to predict quite well which surgeons got sued.
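Removing high frequencies is what a low-pass filter does. The exact filter used in the study isn’t described here, so the sketch below is an assumption. It is the simplest one-pole low-pass filter, written in plain Python, with a made-up 300 Hz cutoff and 8 kHz sample rate. It passes a 100 Hz tone (roughly the range of a low speaking voice’s pitch) nearly unchanged, while it shrinks a 3,000 Hz tone to a small fraction of its size.

```python
import math


def low_pass(samples: list[float], sample_rate: float, cutoff_hz: float) -> list[float]:
    """One-pole low-pass filter: each output moves a fraction `alpha` of the way
    toward the current input, which smooths away fast (high-frequency) wiggles."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def tone(freq_hz: float, sample_rate: float, seconds: float) -> list[float]:
    # A pure sine wave with amplitude 1.
    n = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def peak_after_settling(signal: list[float], skip: int) -> float:
    # Ignore the start-up transient, then report the largest amplitude.
    return max(abs(v) for v in signal[skip:])


RATE, CUTOFF = 8000, 300  # hypothetical values, not the study's settings
low = low_pass(tone(100, RATE, 1.0), RATE, CUTOFF)    # slow, pitch-like component
high = low_pass(tone(3000, RATE, 1.0), RATE, CUTOFF)  # fast detail

print(peak_after_settling(low, 800), peak_after_settling(high, 800))
```

A real study may well use a steeper filter than this one-pole version, but the principle is the same. The slow rise and fall of the voice survives, and most of the fast detail is removed.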

Apparently the biggest difference between the sued and not-sued groups is how well patients feel listened to. But does that replicate?

It does. (1)(2) Sorry, doctors who treat charisma as a dump stat: you’d better start working on it.

Bias is still often bad, of course

Blink doesn’t say biases (unconscious emotional judgements) are always good. The book covers the halo effect, racism and sexism, priming, and how associations and packaging affect people’s evaluation of quality. I won’t explain these in detail since LessWrong has already covered them well, but the book does give a couple of examples I found helpful for showing these biases in action.

Far more people drink Coke than Pepsi. Yet in the Pepsi Challenge, started in 1975, customers did blind taste tests between Coke and Pepsi, and most of them actually preferred Pepsi. The result held up even when the Coca-Cola Company ran its own tests. That makes the company’s attempt to improve the taste with New Coke an understandable mistake, even though New Coke became a marketing disaster. So why is Coke still far more popular? Gladwell explains that first impressions are wrong here. Pepsi is sweeter, which is an advantage in a sip test but can be overpowering when you drink a whole can.

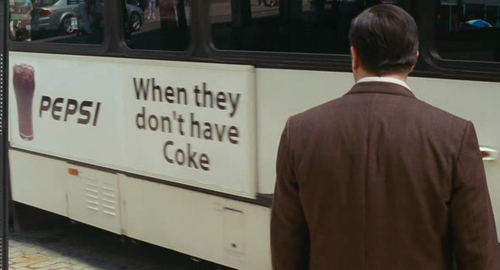

I disagree; I think it really is mostly brand reputation. The comedy movie “The Invention of Lying” had a joke where a Pepsi truck drove by with the slogan “When they don’t have Coke”, and the audience laughed. The joke only works because everyone already thinks of Pepsi as the second choice.

On a more serious note, the book also discusses the case of Amadou Diallo. It describes, step by step, how priming and bias led police to fire 41 shots at an unarmed man. First, the plainclothes officers noticed that Diallo fit the description of a serial rapist in the neighborhood. Then they noticed he was looking around a lot, interpreted that as checking for witnesses, and started yelling “show me your hands” and similar things. But the officers were not in uniform, so all Diallo saw was a group of armed men yelling at him. Someone he knew had recently been robbed by a group of armed men, so he interpreted it as a mugging. He started to pull out his wallet, which looked to the officers like a gun. When they started shooting, some bullets ricocheted, making it look as if he was shooting back. One of the officers dropped prone, which made the others think he had been shot, and then adrenaline took over completely.

Even though the international news kept calling them murderers, the officers really did believe Amadou Diallo was armed. The jury included four Black jurors, and it still acquitted the officers of all charges based on the strength of the evidence. One of the officers was so distraught afterward that he couldn’t speak, and another was crying. It was a bad situation, not a lynching.

The book does have some good news. Police can be trained to fall back on alternative reactions, such as taking cover, and desensitization therapy can help them stay calmer.

Correcting for Biases (unconscious emotional judgements)

Slow down

An implicit association test (IAT) is meant to measure subconscious biases. In one version, people sort a long series of photographs into two combined categories, such as “black person or good thing” and “white person or bad thing”. Then the pairings are switched to “black person or bad thing” and “white person or good thing”, and they sort again. A difference in speed between the two tasks supposedly shows racial bias. Another common pairing is female/family and male/career. Studies repeatedly find that one task is done slightly faster than the other on average, even when the order of the two tasks is randomized.
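The comparison itself is simple arithmetic. Here is a minimal Python sketch of it, using made-up reaction times for a single participant. The function name and the choice to divide by the spread of all trials are my own simplification. This is not the official IAT scoring procedure, which as I understand it has extra steps for errors and outlier trials.

```python
from statistics import mean, stdev


def latency_gap(pairing_a_ms: list[float], pairing_b_ms: list[float]) -> tuple[float, float]:
    """Compare sorting speed under two category pairings.

    Returns (difference in mean latency, that difference divided by the
    standard deviation of all trials pooled together). A positive value
    means pairing B was slower on average."""
    raw = mean(pairing_b_ms) - mean(pairing_a_ms)
    pooled_sd = stdev(pairing_a_ms + pairing_b_ms)
    return raw, raw / pooled_sd


# Hypothetical reaction times (ms) for one participant, not real data.
pairing_a = [620, 650, 600, 640, 610]
pairing_b = [700, 720, 690, 710, 680]

raw, scaled = latency_gap(pairing_a, pairing_b)
print(raw, round(scaled, 2))  # 76 1.74
```

With these numbers, the second pairing is 76 ms slower on average, which is about 1.74 standard deviations of the pooled trials. Dividing by the spread makes the gap comparable between naturally fast and naturally slow participants.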

Psychologist Keith Payne puts it this way: “When we make a split-second decision, we are really vulnerable to being guided by our stereotypes and prejudices, even ones we may not necessarily endorse or believe.” In split-second categorization tasks like these, Payne has tried all kinds of techniques to reduce the bias. To put his subjects on their best behavior, he told them their performance would be scrutinized later by a classmate. That made them even more biased. He told other subjects exactly what the experiment was about and asked them to avoid bias, which did nothing. The only thing that really helped was slowing the task down.

Many police departments have been moving from two-person cars to one-person cars. Why? When officers are alone and see a dangerous situation, they have to call for backup, which slows things down. With two officers in the car, they have much more confidence and bravado, and they tend to move faster. As a result, police are more likely to injure the citizens they encounter, and citizens are more likely to be charged with assaulting an officer. Emphasizing cover, positioning, slow approaches, and other protective tactics also helps.

Practicing getting shot (with plastic marking rounds) and having to keep going, for example while raiding a house, also helps people keep a clear head in real gunfights. “In our test, the principal (the person being guarded) says, ‘Come here, I hear a noise,’ and as you come around the corner—boom! - you get shot… the round is a plastic marking capsule, but you feel it. And then you have to continue to function. Then we say, ’You’ve got to do it again… by the fourth or fifth time you get shot in simulation, you’re okay.” This makes sense, given how desensitization and habituation work.

Block unnecessary details

In the orchestra world, it was long believed that women could not play as well as men. This was not seen as a bias but as a fact: women supposedly lacked the strength, lung capacity, hand size, attitude, ambition, and so on needed to become masters. And there was proof: whenever auditions were held, the men simply sounded better.

At some point, unions started complaining that judges were unfairly choosing non-union performers, and they demanded changes. Judges now had to listen to performers from behind a curtain, and performers were identified by numbers instead of names. I don’t know how much anti-union discrimination there actually was, but then something happened that neither the union leaders nor the judges expected: women started being selected for major positions. Women like Abbie Conant kept impressing the judges, no matter how jealous the men got.

To see prejudging in action, you should really watch the 2009 video of the judges on Britain’s Got Talent rolling their eyes preemptively at Susan Boyle (very ugly by conventional standards). The judges were able to change their minds in the face of obvious excellence, but there was a clear hurdle she had to overcome. (The important part starts at about 45 seconds.)

This is a very useful concept. Form beliefs about an idea before learning the political views of its supporters. Evaluate skill before looking at credentials. This isn’t always possible, but where it is, it removes any bias based on the hidden information, including biases you aren’t aware of.

Consider changing your appearance

Finally, I should say why Malcolm Gladwell wrote this book.

“Believe it or not, it’s because I decided, a few years ago, to grow my hair long. If you look at the author photo on my last book, The Tipping Point, you’ll see that it used to be cut very short and conservatively. But, on a whim, I let it grow wild, as it had been when I was a teenager. Immediately, in very small but significant ways, my life changed. I started getting speeding tickets all the time—and I had never gotten any before. I started getting pulled out of airport security lines for special attention. And one day, as I was walking along Fourteenth Street in downtown Manhattan, a police van pulled up on the sidewalk and three officers jumped out. They were looking, it turned out, for a rapist, and the rapist, they said, looked a lot like me. They pulled out the sketch and the description. I looked at it and pointed out to them as nicely as I could that in fact the rapist looked nothing at all like me. He was much taller and much heavier, and about fifteen years younger… All we had in common was a large head of curly hair. After twenty minutes or so, the officers finally agreed with me and let me go… That episode on the street got me thinking about the weird power of first impressions.”

This is a lot like something Scott Alexander once wrote about drug prescriptions. People who look like him have no trouble getting the prescriptions they ask for. But when an acquaintance of his with a lot of tattoos had a medical problem, she was labeled a “drug-seeker” even though she hadn’t asked for drugs at all. (I don’t remember which of his many articles it was in.)

I wish people weren’t so insane that things like hairstyle matter so much. But although you can change your own appearance, you can’t change other people. If you look like a slob or a rebel, you may decide the look is still worth it, but people will treat you differently because of it.

Conclusion

Humans make decisions based on unconscious emotional judgements because such judgements were very useful in the ancestral environment. Biases are extremely hard to get rid of precisely because they are unconscious.

There are ways to reduce the effect of unconscious emotional judgements. When events unfold more slowly, conscious thought has more time to consider the situation. Practicing an action makes it the normal reaction. Removing unneeded details keeps biases about those details from affecting judgement.

Biases are often based on things under your control, such as the way you look. Changing the way you look and act can therefore cause major changes in how you are treated.

As I understand it, you’ve split this idea into two parts:

1. Good—People can develop their intuition to make correct predictions even without fully understanding how they can tell.

2. Bad—People frequently make mistakes when they jump to conclusions based on subconscious assumptions.

I’m confident that (2) is true, but I’m partly skeptical of (1). For example, Scott reviewed one of John Gottman’s books and concluded that it’s “totally false” that Gottman can predict who will get divorced 90% of the time (Book Review: The Seven Principles For Making Marriage Work, Slate Star Codex).

Another example, which you’ve probably heard before, comes from the book Superforecasting (Book Review: Superforecasting, Slate Star Codex). Tetlock discovered that most pundits’ predictions were no better than random guessing, even in the domains where they were supposed to be experts.

I believe that intuition works well in “kind” learning environments but not in “wicked” ones. David Epstein draws this distinction in his book Range: Why Generalists Triumph in a Specialized World (davidepstein.com). The main difference between the two environments is whether there’s quick and reliable feedback on whether your judgment was correct.

Chess is a kind environment, which is why Magnus Carlsen can play dozens of people simultaneously and still win virtually every game. This also explains the results of the Bechara gambling task. Politics and marriage are wicked learning environments, which is why expert predictions are much less reliable.