The Progress Forum

Opportunities

Links

IRBs save a few people while killing 10,000–100,000 more and costing $1.6B

Science depends on promoting good outliers, not preventing bad outliers

Breeding mosquitoes to prevent disease (strong XKCD 938 energy)

A new, high-grade cache of rare earth elements was recently discovered

SF mayor introduces wide-reaching housing reforms (via @anniefryman, who has a good explainer thread). And how to build a house in a day (via @Vernon3Austin)

Quotes

Why it’s more important to “raise the ceiling” than to “raise the floor”

“Bureaucracies temporarily suspend the Second Law of Thermodynamics”

Queries

If you heard the term “intellectual entrepreneur,” what would you think it means?

What’s an example of a proactive regulation or policy that worked well?

Is anyone working on reducing the modern bureaucratic workload on researchers?

AI

The increased supply of intelligence will create more demand for tasks that require intelligence (via @packyM)

Observations on two years of programming with GitHub Copilot

A Turing test would be a dynamic, evolving, adversarial competition

“GPT agents” are cool experiments, but the demos look sort of fake?

Freeman Dyson gave a very short answer to the 2015 Edge question

AI safety

Both AI doomers and anti-doomers believe that the other side has a weird conjunction of many dubious arguments. Related, a critique of pure reason

Something the government can do now about AI safety: define liability

Scott Alexander on “not overshooting and banning all AI forever”

Norbert Wiener was concerned about machine alignment problems in the 1960s

Other tweets

Rocket launches as our aesthetic contribution for the age. (Compare to this painting)

On sending your life in a dramatically better, more ambitious direction

“The reductionist hypothesis does not by any means imply a ‘constructionist’ one”

Maps

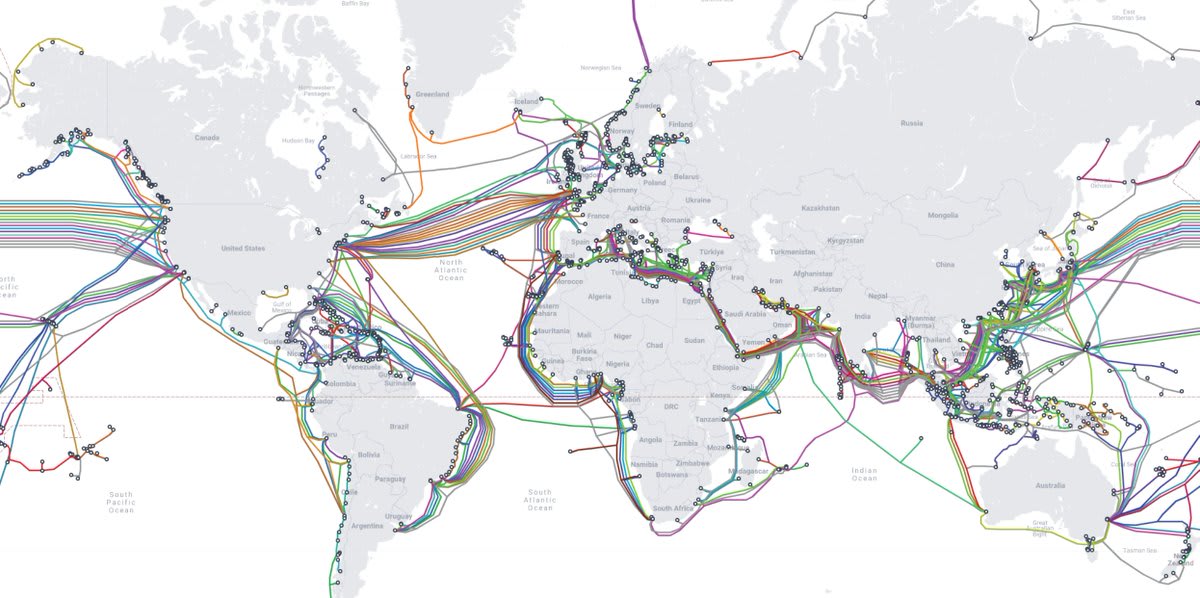

The map of undersea cables is like a wiring diagram for the planet. See also this 1996 piece by Neal Stephenson (non-fiction)

By “fake,” do you mean something like “giving an exaggerated impression of how likely they are to succeed in their aims when the task is actually difficult, or of how impressive we ought to find what they can currently achieve”?

If so, I think that framing can lead us into a mistaken view: comparing AutoGPT to The Arrival of the Mail Train. The story goes that the Lumière brothers screened a single continuous 50-second shot, from a fixed camera, of a train coming straight toward the lens for an 1895 audience, and that the audience ran screaming out of the theater because they weren’t sophisticated enough about film to tell the moving image of a train apart from the real thing.

In this analogy, our reaction to AutoGPT is like the audience’s reaction to The Arrival of the Mail Train: we lack the sophistication to tell the behavior of ChaosGPT apart from that of a potentially effective world-destroying agent. It behaves and “talks” as if that’s what it is doing, but in its current form it probably has effectively zero chance of even slight success in executing its goal. We drastically overestimate its chances.

But of course, AutoGPT is not like The Arrival of the Mail Train. With film, we know the apparent danger is illusory; with AutoGPT, we have good reason to think the mechanism is harmless only for now. A better analogy is the Mogwai from Gremlins: a cute little pet, totally harmless for now, but one that can become extremely dangerous unless you consistently follow a set of strict rules to keep it safe, which either you or somebody else will eventually fail to do.