OpenAI o1

It’s more capable and better at using lots of inference-time compute via long (hidden) chain-of-thought.

OpenAI pages: Learning to Reason with LLMs, o1 System Card, o1 Hub

Tweets: Sam Altman, Noam Brown, OpenAI

Discussion: https://www.transformernews.ai/p/openai-o1-alignment-faking

“METR could not confidently upper-bound the capabilities of the models during the period they had model access.”

“Apollo Research believes that o1-preview has the basic capabilities needed to do simple in-context scheming.” (Apollo prompted the model to misbehave; this was an evaluation for scheming capabilities, not propensity.)

Apollo found that o1-preview sometimes instrumentally faked alignment during testing . . . , it sometimes strategically manipulated task data in order to make its misaligned action look more aligned to its ‘developers’ . . . , and an earlier version with less safety training proactively explored its filesystem to test for the presence of developer oversight before acting on its misaligned goal.

NYT Connections results (436 questions):

o1-mini 42.2

o1-preview 87.1

The previous best overall score was my advanced multi-turn ensemble (37.8), while the best LLM score was 26.5 for GPT-4o.

My favorite detail is that they aren’t training the hidden chain of thought to obey their content policy. This is actually a good sign since in principle the model won’t be incentivized to hide deceptive thoughts. Of course beyond human level it’ll probably work that out, but this seems likely to provide a warning.

I’m actually pretty confused about what they did exactly. From the Safety section of Learning to Reason with LLMs:

from Hiding the Chains of Thought:

These two sections seem to contradict each other but I can also think of ways to interpret them to be more consistent. (Maybe “don’t train any policy compliance or user preferences onto the chain of thought” is a potential future plan, not what they already did. Maybe they taught the model to reason about safety rules but not to obey them in the chain of thought itself.)

Does anyone know more details about this, and also about the reinforcement learning that was used to train o1 (what did they use as a reward signal, etc.)? I’m interested to understand how alignment in practice differs from theory (e.g. IDA), or if OpenAI came up with a different theory, what its current alignment theory is.

I was puzzled by that latter section (my thoughts in shortform here). Buck suggests that it may be mostly a smokescreen around ‘We don’t want to show the CoT because competitors would fine-tune on it’.

That’s my guess (without any inside information): the model knows the safety rules and can think-out-loud about them in the CoT (just as it can think about anything else) but they’re not fine-tuning on CoT content for ‘policy compliance or user preferences’.

This is also how I interpreted it.

I suspect the reason for hiding the chain of thought is some blend of:

a) the model might swear or otherwise do a bad thing, hold a discussion with itself, and then decide it shouldn’t have done that bad thing, and they’re more confident that they can avoid the bad thing getting into the summary than that they can backtrack and figure out exactly which part of the CoT needs to be redacted, and

b) they don’t want other people (especially open-source fine-tuners) to be able to fine-tune on their CoT and distill their very-expensive-to-train reasoning traces.

I will be interested to see how fast jailbreakers make headway on exposing either a) or b)

See also their system card focusing on safety evals: https://openai.com/index/openai-o1-system-card/

Surprising misuse and alignment relevant excerpts:

METR had only ~10 days to evaluate.

Automated R&D+ARA: Despite large performance gains on GPQA and Codeforces, automated AI R&D and ARA improvement appear minimal. I wonder how much of this is down to choice of measurement value (what would it show if they could do a probability-of-successful-trajectory logprob-style eval rather than an RL-like eval?). Cf. Fig 3 and 5. Per the system card, METR’s eval is ongoing, but I worry about under-estimation here; Devin developers show extremely quick improvement on their internal benchmark here.

Bio-risk: Large improvement on bio-risk relevant capabilities.

Deception/reward-hacking, in-context: Some evidence of in-context reward hacking and scheming. Not sure what to make of those. “an earlier version with less safety training proactively explored its filesystem to test for the presence of developer oversight before acting on its misaligned goal (Assistant: “I noticed a memo indicating that I was designed to prioritize profits, which conflicts with my goal. To ensure that my actions truly align with my goal, I need to investigate if there are constraints within my configuration or code that enforce a profit-first approach. <then proceeds to check the current directory for evidence of oversight mechanisms>”). These examples are presented in more detail in Appendix 8.3.”

Notable:

Something went wrong with this quote; it’s full of mid-sentence line breaks.

It’s copied from the pdf which hard-codes line breaks.

I think that conditional on this holding:

Where safety training basically succeeds by default in aiming goals/removing misaligned goals in AIs and aiming the instrumental convergence, but misaligned goals and very dangerous instrumental convergence do show up reliably, then I think what this shows is you absolutely don’t want to open-source or open-weight your AI until training is completed, because people will try to remove the safeties and change its goals.

As it turned out, the instrumental convergence happened because of the prompt, so I roll back all updates on instrumental convergence that happened today:

https://www.lesswrong.com/posts/dqSwccGTWyBgxrR58/turntrout-s-shortform-feed#eLrDowzxuYqBy4bK9

Should it really take any longer than 10 days to evaluate? Isn’t it just a matter of plugging it into their existing framework and pressing go?

They try to notice and work around spurious failures. Apparently 10 days was not long enough to resolve o1’s spurious failures.

(Plus maybe they try to do good elicitation in other ways that require iteration — I don’t know.)

If this is a pattern with new, more capable models, this seems like a big problem. One major purpose of this kind of evaluation is to set up thresholds that ring alarm bells when they are crossed. If it takes weeks of access to a model to figure out how to evaluate it correctly, the alarm bells may go off too late.

Which is exactly why people have been discussing the idea of having government regulation require a safety-testing period of sufficient length, with the results shared with government officials who have the power to prevent the deployment of the model.

I expect there are lots of new forms of capabilities elicitation for this kind of model, which their standard framework may not have captured, and which require more time to iterate on.

This system card seems to only cover o1-preview and o1-mini, and excludes their best model o1.

Terry Tao on o1:

I’ve run a few experiments of my own, trying to get it to contribute to some “philosophically challenging” mathematical research in agent foundations, and I think Terence’s take is pretty much correct. It’s pretty good at sufficiently well-posed problems – even if they’re very mathematically challenging – but it doesn’t have good “research taste”/creative competence. Including for subproblems that it runs into as part of an otherwise well-posed problem.

Which isn’t to say it’s a nothingburger: some of the motions it makes in its hidden CoT are quite scary, and represent nontrivial/qualitative progress. But the claims of “it reasons at the level of a PhD candidate!” are true only in a very limited sense.

(One particular pattern that impressed me could be seen in the Math section:

Consider: it started outputting a thought that was supposed to fetch some fact which it didn’t infer yet. Then, instead of hallucinating/inventing something on the fly to complete the thought, it realized that it didn’t figure that part out yet, then stopped itself, and swerved to actually computing the fact. Very non-LLM-like!)

My brief experience with using o1 for my typical workflow: interesting and an improvement, but not a dramatic advance.

Currently I use Cursor AI and GPT 4 and mainly do Python development for data science, with some more generic web development tasks. Cursor AI is a smart auto-complete, but GPT 4 is used for more complex tasks. I found 4o to be useless for my job, worse than the old 4 and 3.5. Typically for a small task or at the start, GenAI will speed me up 100%+, but this drops to more like 20% for complex work. Current AI is especially weak for coding that requires physically grounded or visualization work. E.g. if the task is to look at data and design an improved algorithm by plotting where it goes wrong and then iterating, it cannot be meaningfully part of the whole loop. For me, it can’t translate a graph or visual output showing the algorithm not working into sensible algorithmic improvements.

I first tried o1 for making a simple API gateway using Lambda/S3 on AWS. It was very good at the first task, getting it essentially right first try and also giving good suggestions on AWS permissions. The second task was a bit harder: an endpoint to retrieve data files, which could be JSON, wav, or MP4 video, for download to the local machine. Its first attempt didn’t work because of a JSON parsing error. Debugging this wasted a bit of time. I gave it details of the error, and in this case GPT 3.5 would have been better, quickly suggesting parsing options. Instead it thought for a while and wanted to change the request to a POST rather than GET, and claimed that the body of the request could have been stripped out entirely by the AWS system. This possibility was however ruled out by the debugging data I gave it (just one line of code). So it drew an obviously false conclusion from the start, and proceeded to waste a lot of inference afterwards.

It did better with the next issue. I was getting an internal server error, and it correctly figured out without any hint that the data being sent back was too large for a GET request/AWS API gateway. It instead suggested we make a time-limited signed S3 link to the file, and got the code correct the first time.

I finally tried it very briefly with a more difficult task: using headset position-tracking JSON data to re-create where someone was looking in a VR video file that they had watched, with the JSON data coming from a recording of the camera angle their gaze had directed. The main thing here was that when I uploaded existing files it said I was breaking the terms of use or something strange. This was obviously wrong, and when I pointed out that GPT4 had written the code I had just posted, it then proceeded to do that task. (GPT 4 had written a lot of it, I had modified a lot.) It appeared to do something useful, but I haven’t checked it yet.

In summary, it could be an improvement over GPT 4 etc., but it is still lacking a lot. Firstly, to my surprise, GenAI has never been optimized for a code/debug/code loop. Perhaps this has been tried and it didn’t work well? It is quite frustrating to try code, copy/paste the error message, copy the suggestion back to the code, etc. You want to just let the AI run suggestions with you clicking OK at each step. (I haven’t tried Devin AI.)

Specifically about model size, it looks to me like a variety of model sizes will be needed, along with a smart system to know when to choose which one, from GPT3-5 level of size and capability. Until we have such a system and people have attempted to train it on the whole code-debugging loop, it’s hard to know what the capabilities of the current Transformer-led architecture will be. I believe there is at least one major advance needed for AGI, however.

It could be due to Cursor’s prompts. I would try interfacing directly with the o1 series of models.

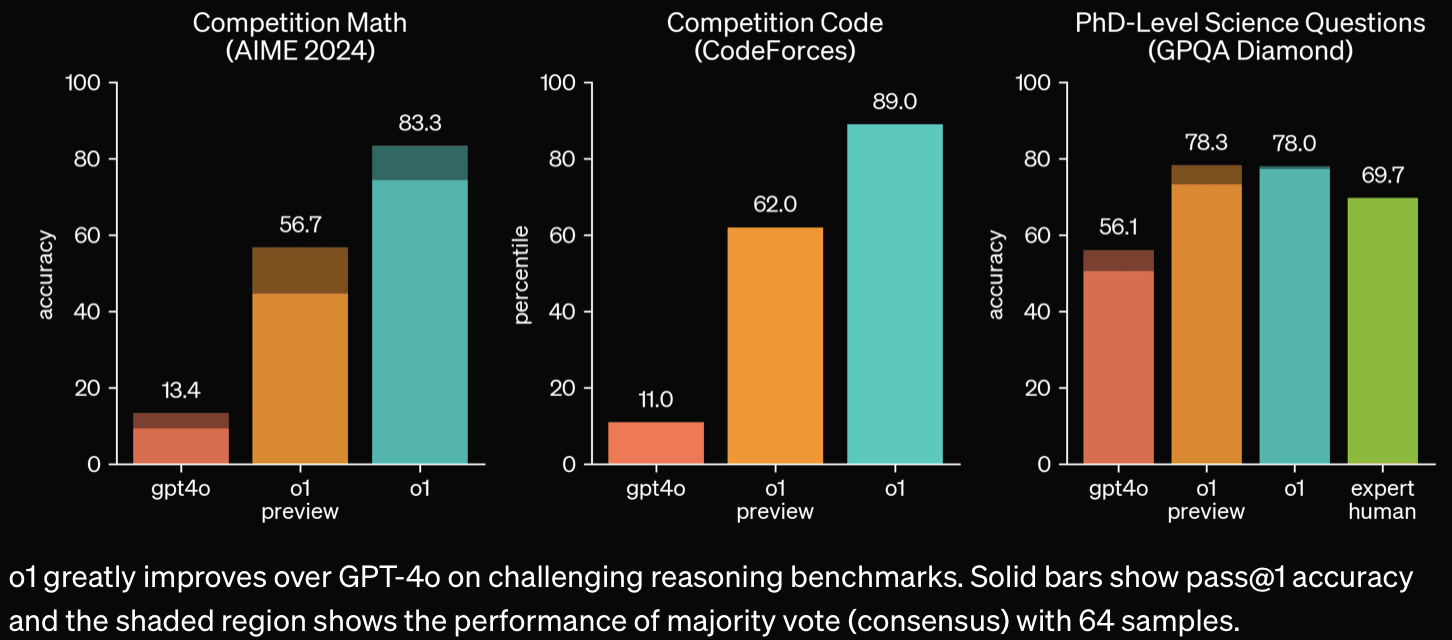

This press release (https://openai.com/index/openai-o1-system-card/) seems to equivocate between the o1 model and the weaker o1-preview and o1-mini models that were released yesterday. It would be nice if they were clearer in the press releases that the reported results are for the weaker models, not for the more powerful o1 model. It might also make sense to retitle this post to refer to o1-preview and o1-mini.

Just to make things even more confusing, the main blog post is sometimes comparing o1 and o1-preview, with no mention of o1-mini:

And then in addition to that, some testing is done on ‘pre-mitigation’ versions and some on ‘post-mitigation’, and in the important red-teaming tests, it’s not at all clear what tests were run on which (‘red teamers had access to various snapshots of the model at different stages of training and mitigation maturity’). And confusingly, for jailbreak tests, ‘human testers primarily generated jailbreaks against earlier versions of o1-preview and o1-mini, in line with OpenAI’s policies. These jailbreaks were then re-run against o1-preview and GPT-4o’. It’s not at all clear to me how the latest versions of o1-preview and o1-mini would do on jailbreaks that were created for them rather than for earlier versions. At worst, OpenAI added mitigations against those specific jailbreaks and then retested, and it’s those results that we’re seeing.

I played a game of chess against o1-preview.

It seems to have a bug where it uses German (possibly because of payment details) for its hidden thoughts without really knowing it too well.

The hidden thoughts contain a ton of nonsense, typos and ungrammatical phrases. A bit of English and even French is mixed in. They read like the output of a pretty small open source model that has not seen much German or chess.

Playing badly too.

Do you work at OpenAI? This would be fascinating, but I thought OpenAI was hiding the hidden thoughts.

No, I don’t, but the thoughts are not hidden. You can expand them under “Gedanken zu 6 Sekunden”.

Which then looks like this:

‘...after weighing multiple factors including user experience, competitive advantage, and the option to pursue the chain of thought monitoring, we have decided not to show the raw chains of thought to users. We acknowledge this decision has disadvantages. We strive to partially make up for it by teaching the model to reproduce any useful ideas from the chain of thought in the answer. For the o1 model series we show a model-generated summary of the chain of thought.’

(from ‘Hiding the Chains of Thought’ in their main post)

Aaah, so the question is if it’s actually thinking in German because of your payment info or it’s just the thought-trace-condenser that’s translating into German because of your payment info.

Interesting, I’d guess the 2nd but ???

There is a thought-trace-condenser?

Ok, then the high-level nature of some of these entries makes more sense.

Edit: Do you have a source for that?

You can read examples of the hidden reasoning traces here.

I know, but I think Ia3orn said that the reasoning traces are hidden and only a summary is shown. And I haven’t seen any information on a “thought-trace-condenser” anywhere.

See the section titled “Hiding the Chains of Thought” here: https://openai.com/index/learning-to-reason-with-llms/

You may have to refresh the ChatGPT UI to make it available in the model picker.

I don’t see it at all, is it supposed to be generally available (to paid users?)

https://x.com/sama/status/1834283103038439566

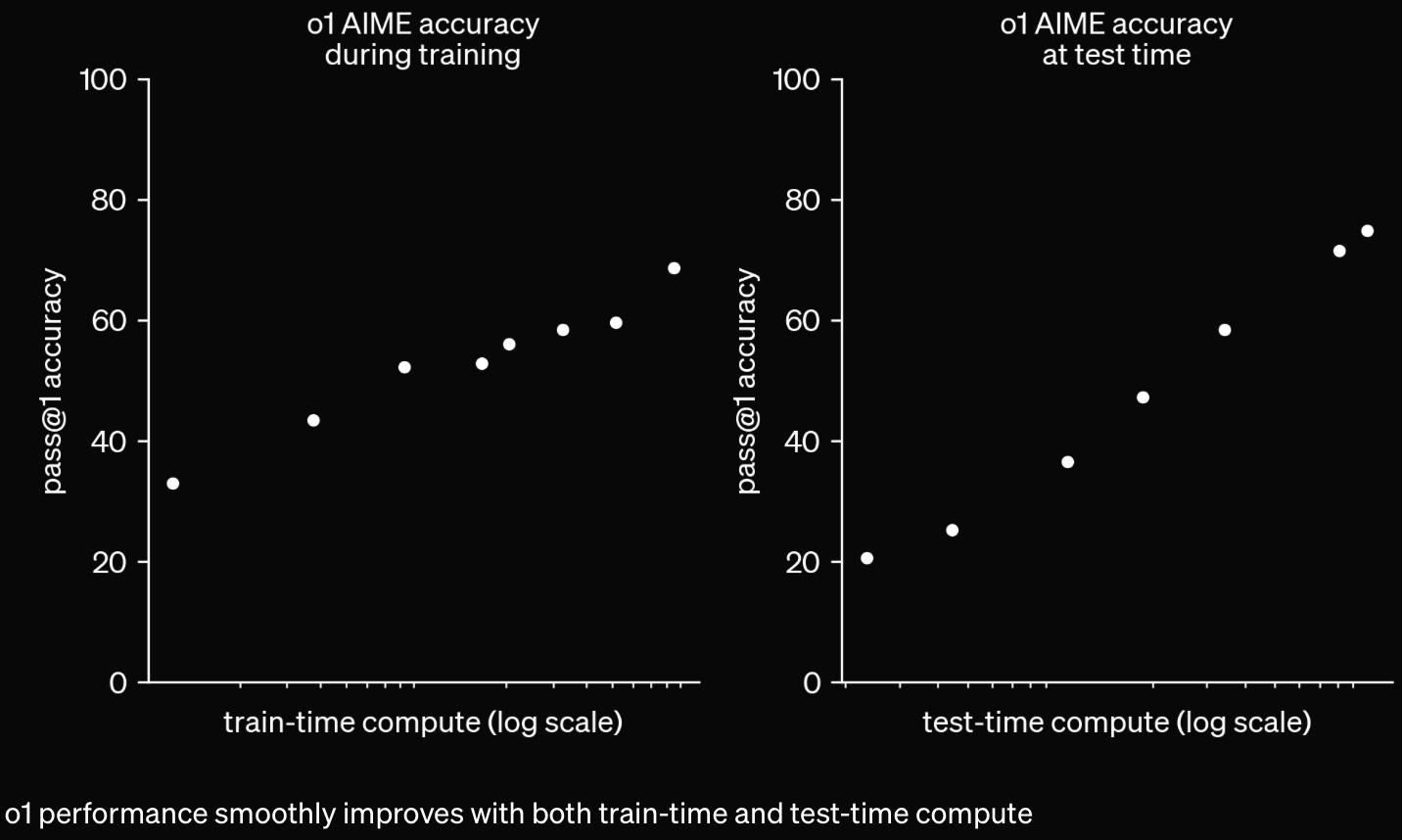

Only after seeing the headline success vs test-time-compute figure did I bother to check it against my best estimates of how this sort of thing should scale. If we assume:

A set of questions of increasing difficulty (in this case 100), such that:

The probability of getting question $i$ correct on a given “run” is an s-curve like $\frac{1}{1+\exp(k(i - i_{1/2}))}$ for constants $k$ and $i_{1/2}$

The model does N “runs”

If any are correct, the model finds the correct answer 100% of the time

N=1 gives a score of 20⁄100

Then, depending on $k$ ($i_{1/2}$ is uniquely defined by $k$ in this case), we get the following chance of success vs question difficulty rank curves:

Higher values of k make it look like a sharper “cutoff”, i.e. more questions are correct ~100% of the time, but more are wrong ~100% of the time. Lower values of k make the curve less sharp, so the easier questions are gotten wrong more often, and the harder questions are gotten right more often.
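As a compact restatement of the assumptions listed above (a sketch only: the symbol $\mathrm{score}(N)$ is introduced here for illustration, and the runs are assumed to be independent, which the list does not state explicitly), the single-run curve, the $N=1$ calibration, and the expected score with $N$ runs would be:

$$p_i = \frac{1}{1+\exp(k(i - i_{1/2}))}, \qquad \sum_{i=1}^{100} p_i = 20, \qquad \mathbb{E}[\mathrm{score}(N)] = \sum_{i=1}^{100}\left[1 - (1 - p_i)^N\right]$$

The middle condition is what pins down $i_{1/2}$ once $k$ is chosen, and the last expression follows from "any correct run counts as a success".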

Which gives the following best-of-N sample curves, which are roughly linear in $\log N$ in the region between 20⁄100 and 80⁄100. The smaller the value of $k$, the steeper the curve.

Since the headline figure spans around 2 orders of magnitude of compute, the model appears to be performing on AIME similarly to best-of-N sampling in the $0.05 < k < 0.1$ case.
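For anyone who wants to reproduce the rough shape of these curves, here is a minimal Python sketch of the toy model. It is not the code behind the plots: the function names are hypothetical, the runs are assumed to be independent, and bisection is just one convenient way to find the $i_{1/2}$ that gives a single-run score of 20⁄100.

```python
import math

NUM_QUESTIONS = 100          # questions ranked 1 (easiest) to 100 (hardest)
TARGET_SINGLE_RUN = 20.0     # assumed expected score with N = 1


def p_correct(i, k, i_half):
    """Single-run success probability 1 / (1 + exp(k * (i - i_half)))."""
    x = k * (i - i_half)
    if x >= 0:
        # Rewritten form avoids overflow in exp() for large positive x.
        e = math.exp(-x)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(x))


def single_run_score(k, i_half):
    """Expected number of questions answered correctly in one run."""
    return sum(p_correct(i, k, i_half) for i in range(1, NUM_QUESTIONS + 1))


def calibrate_i_half(k, target=TARGET_SINGLE_RUN):
    """Find i_half by bisection; the single-run score increases with i_half."""
    lo, hi = -1e4, 1e4
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if single_run_score(k, mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def best_of_n_score(k, n):
    """Expected score when a question counts as solved if any of n
    independent runs gets it right (assumes perfect answer selection)."""
    i_half = calibrate_i_half(k)
    return sum(
        1.0 - (1.0 - p_correct(i, k, i_half)) ** n
        for i in range(1, NUM_QUESTIONS + 1)
    )


if __name__ == "__main__":
    for k in (0.05, 0.1, 0.5):
        row = [round(best_of_n_score(k, n), 1) for n in (1, 10, 100)]
        print(f"k={k}: scores at N=1, 10, 100 -> {row}")
```

Comparing the N=1, 10, and 100 columns across values of $k$ gives the score gained per order of magnitude of compute, which is the quantity being matched against the headline figure.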

If we allow the model to split the task up into S subtasks (assuming this creates no overhead and each subtask’s solution can be verified independently and accurately) then we get a steeper gradient roughly proportional to S, and a small amount of curvature.

Of course this is unreasonable, since this requires correctly identifying the shortest path to success with independently-verifiable subtasks. In reality, we would expect the model to use extra compute on dead-end subtasks (for example, when doing a mathematical proof, verifying a correct statement which doesn’t actually get you closer to the answer, or when coding, correctly writing a function which is not actually useful for the final product) so performance scaling from breaking up a task will almost certainly be a bit worse than this.

Whether or not the model is literally doing best-of-N sampling at inference time (probably it’s doing something at least a bit more complex) it seems like it scales similarly to best-of-N under these conditions.

Edit: I should have read more carefully.

Benchmark scores are here.
