A Case for Cooperation: Dependence in the Prisoner’s Dilemma

“The man who is cheerful and merry has always a good reason for being so,—the fact, namely, that he is so.” The Wisdom of Life, Schopenhauer (1851)

TL;DR

Descriptions of the Prisoner’s Dilemma typically suggest that the optimal policy for each prisoner is to selfishly defect instead of to cooperate. I disagree with the traditional analysis and present a case for cooperation.

The core issue is the assumption of independence between the players. Articulations of the game painstakingly describe how the prisoners are in explicitly separate cells with no possibility of communication. From this, it’s assumed that one’s action can have no causal effect on the decision of the other player. However, (almost) everything is correlated, and this significantly changes the analysis.

Imagine the case where the prisoners are clones and make exactly the same decision. Then, when they compare the expected payout for each possible action, their payout will be higher in the case where they cooperate because they are certain the other player is having the same thoughts and deterministically will make the same choice. This essay generalizes and formalizes this line of reasoning.

Here’s what to expect in what follows. In the first section, we begin by introducing the standard causal decision theory analysis suggesting (Defect, Defect). Then, we introduce the machinery for mixed strategies in the following section. From there we discuss the particular case where both participants are clones, which motivates our new framework. Then, we introduce a bit more formalism around causal modeling and dependence. We proceed to analyze a more general case where both players converge to the same mixed strategy. Then we discuss the most general model, where the players’ mixed strategies have some known correlation. Finally, we conclude the analysis. In summary, given some dependency structure due to upstream causal variables, we uncover the cases where the game theory actually suggests cooperation as the optimal policy.

The Traditional Analysis

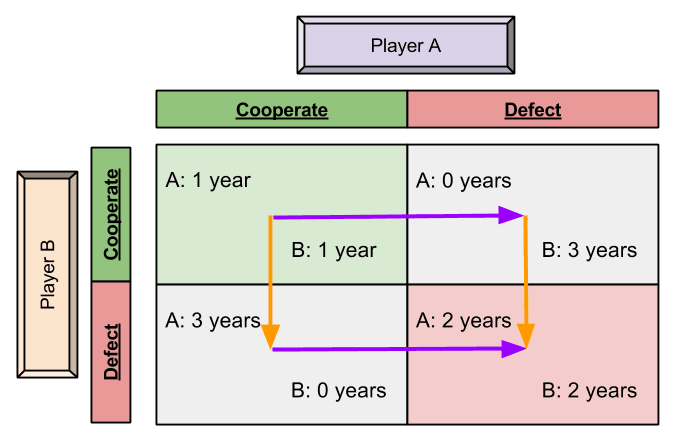

In Game Theory 101, here’s how the Prisoner’s Dilemma analysis traditionally goes. Alice and Bob are conspirators in a crime. They’re both caught and brought to separate interrogation rooms. They’re presented with a Faustian bargain: to snitch or not to snitch. If neither snitches on the other, they both get a 1-year sentence. If one snitches and the other does not, then the snitch goes home free while the non-snitch has to serve 3 years. If they both snitch, they serve 2 years.

Here’s the payoff diagram corresponding with this setup:

If Alice cooperates, Bob is better off defecting to get 0 years instead of 1 year. If Alice defects, Bob is better off defecting to get 2 years instead of 3 years. So in either case Bob is better off defecting. A strategy which is optimal regardless of the choices of an opponent is called a dominant strategy. Symmetrically, Alice is better off defecting no matter what Bob does. This means that even though they’re both happier in the case where they both cooperate, serving just one year each, the “Nash equilibrium” is the case where they both defect, serving two years each. A state is called a Nash equilibrium if no player can benefit by changing their action, holding the actions of all other players constant.

We can represent each player’s preferences and optimal choices with an arrow diagram.

Nash equilibria are represented by a state with no arrows pointing away from it, meaning no player would prefer to switch their choice, holding the other players’ choices the same. In the example above, (Defect, Defect) is the single Nash equilibrium.

We can generalize the payoff matrix a bit to represent all situations that capture the structure of a “Prisoner’s Dilemma”-like scenario.

I use the variables to keep organized. These can be remembered in the following way. is the uarrel payout when they rat each other out. is the eward for mutual cooperation. is the ucker’s payout if the other player snitches and they do not. is the emptation payout for snitching while the other does not. The necessary and sufficient conditions for a prisoner’s dilemma structure are that .

Probabilistic Play

Now, let’s make our model a bit more rigorous and extend our binary action space to a probabilistic strategy model.

Instead of discrete actions, let’s suppose Alice and Bob each choose mixed strategy vectors and , respectively, which represent their probabilities of cooperation or defection, such that and .

We formalize the analysis by noting that Alice wants to maximize her expected (VNM) utility. We now compute her optimal cooperation fraction given Bob’s optimal policy.

We decompose the expected value of the game to Alice into the expected value of the game given that she cooperates times the probability that she cooperates plus the expected value of the game given that she defects times the probability she defects .

We can further clarify Alice’s expected utility given her action into the cases where Bob cooperates and does not cooperate.

Bringing this back into our equation for yields:

We can reduce the mess a bit by substituting in our variables .

Now we apply and .

Normally to find the which maximizes we would differentiate with respect to and set this result to zero. But because the equation is linear in , the derivative degenerates. This means that there won’t be an optimal “mixed strategy” which is not a “pure strategy”, meaning that given the prisoner’s dilemma payout structure presented thus far Alice and Bob are better off making a decision to 100% cooperate or 100% defect.

Expanding out a bit further we see:

Now we isolate :

To see if Alice’s optimal cooperation percentage is 100% or 0%, we just need to see if the term is greater than or less than zero. If it’s greater than zero, then Alice best maximizes when , and correspondingly when it’s less than zero then Alice should always defect ().

We now show that each piece is greater than zero. , because , , and because . Therefore, , and Alice’s optimal policy is to always defect. The same analysis can be performed for Bob as well.

This is how the analysis typically goes. The common perspective is that, given the payoff diagram and the constraint that , in a two-player simultaneous game where the players cannot communicate with one another, then unfortunately the game theory optimal policy is always to defect.

I disagree with this conclusion and present my own analysis in the subsequent section.

The Clone Case for Cooperation

In the typical analysis of the prisoner’s dilemma, we consider the choices of Alice and Bob to be independent of each other. This is because they make their decisions simultaneously and have no way of affecting the decision of the other. But, there is an underlying dependence structure between A and B that we must include in our model.

To illustrate this, imagine the case where Alice is cloned and given exactly the same environment such that both clones will make the same decisions. If we label clone A and clone B, in this case we now have , implying , , and . This is the case where there’s perfect correlation between the decisions of A and B, which we analyze in this section.

Let’s now recompute A’s expected utility which she wants to maximize.

Variable definitions:

is the expected utility of the game to player A.

is the expected utility of the game to player A given that A choses to cooperate.

is the expected utility of the game to player A given that A choses to defect.

is the probability that A cooperates, which is optimized to maximize A’s expected utility given her knowledge.

is the probability that A defects, which is optimized to maximize A’s expected utility given her knowledge.

Given B’s potentially mixed strategy, the expected utility of the game to A given each of A’s possible choices can be decomposed into the cases for each of B’s choices given A’s choice.

Notice that we condition B’s probabilities on A’s choice. In the previous analysis, we claimed that B has no way of seeing A’s choice so we make them independent, claiming . But this is NOT RIGHT. Also, notice we place a hat on to denote that these are A’s estimates of B’s action probabilities based on A’s own knowledge. We will come back to discuss this step in more detail in a later section, but note that this is the heart of the difference between my perspective and the traditional analysis.

Academic economists would likely claim (have claimed) that this approach doesn’t sufficiently separate belief formation and decision making. The type of recursive relationship I’m suggesting between estimating a counterparties’ policy and our own action selection seems “irrational”, in the sense that it doesn’t follow the typical pre-defined “rational decision making” algorithm described by economists. However, I point out that the policy presented here wins at games. That is, it is the policy which results in both agents obtaining maximum expected utility.

It’s been recommended by an econ friend (who I love dearly), that while this approach might not fit the classification of “rational decision making”, it could find a home in the behavioral economics literature as a form of *motivated reasoning*. The claim is that the agent wants to maximize their ex-ante expected utility and this model assumes their beliefs are malleable. A motivated reasoner wants to believe their counterparty to act as they do, so they “trick” themselves into believing it even though they haven’t rationally deduced that the counterparty will actually cooperate. The academic argument against cooperation continues, “but this cannot be fully rational behavior, because my ex-post decision has to be fully independent from the other agent, as my decision, because it is unknown to them at the time of their own decision by construction, cannot influence their beliefs and so should not influence my own beliefs about their action.” It’s this notion that decisions are made independently that I take issue with. I discuss this further in the Everything is Correlated section which follows.

We now recombine this into :

Now we substitute in and use and .

Notice that, if the choices of the players are truly independent, then , yielding the traditional analysis of the last section.

Let’s now explore the case where A and B are clones who will make exactly the same choice, so and . Let’s now update our calculation.

Which we simplify into:

Because eward uarrel, is maximized when ! This means will cooperate and receive eward instead of uarrel, and similarly for . This seems trivial, but if and can make the same decision, they can obtain the globally optimal solution instead of the strictly worse typical Nash equilibrium which is reached when they believe they act independently.

Everything is Correlated

Alright, if Alice knows that Bob will make exactly the same decision, she can fearlessly cooperate, knowing for certain that he will too, dissolving the dilemma. So now, let’s reincorporate uncertainty back into our model.

Let’s say Alice and Bob are not clones but are instead siblings with similar family values, experiences, dispositions, genes, neurological structures, etc. We know that many, many, many things in the world are correlated in some way. The dependency structure of the world is extremely interconnected. There’s also an evolutionary argument for this that I won’t get to in much detail. But when picking the prisoners, we’re actually sampling players who have played an iterated version of this game many many times. If you believe that humans are not purely rational agents and make decisions based on instinct, then there’s likely to be downstream similarity in their policies. And if you believe that the agents are purely rational, they also have exactly the same game-theoretic payouts, so their GTO play should be exactly the same. It would be extremely improbable for Alice and Bob’s choices here to be perfectly independent. So let’s model some dependence structure.

(I will note that there’s a semi-compelling argument suggesting that we don’t even need to introduce more subtle forms of dependence. Because they have identical payoff structures, the game-theoretic optimal play should be exactly the same. Given their GTO play should be exactly the same and mixed strategies degenerate to pure strategies, we get correlation 1, and so they should both coordinate 100% of the time. But the real world is messy, so we proceed with slightly more subtlety.)

Causal Modeling 101

To better understand the dependencies and correlations between Alice and Bob’s choices, we can frame the problem in the light of causal modeling a la Judea Pearl. In causal modeling, we represent the dependencies between variables using a directed acyclic graph (DAG), where nodes represent variables and directed edges represent causal relationships.

In the traditional analysis of the Prisoner’s Dilemma, Alice and Bob are assumed to make their decisions independently. However, in reality, their decisions will definitely be influenced by common upstream factors. For example, Alice and Bob may have similar upbringings, values, experiences, and expectations about loyalty and cooperation that influence their decision-making. And if that’s not compelling, they also have access to similar information about the situation, and have exactly the same payout structure, so there’s a strong case that they will end up making similar decisions.

We can represent these upstream factors with a variable . The causal model can be depicted as follows:

V

/ \

A BHere, is a common cause that affects both Alice’s and Bob’s decisions. This creates a dependency between and .

Given the upstream factor , the choices of Alice and Bob could be conditionally independent. However, if we do not observe , the choices and become dependent. This is known as d-separation in causal graphs.

Mathematically, this can be expressed as:

This shows that the joint probability of and depends on their conditional probabilities given and the distribution of .

Independent Case (Optional)

Suppose A and B were truly independent. Here’s the causal model we might associate with this hypothesis.

V_1 V_2

| |

A BHere and are separate upstream factors that affect Alice’s and Bob’s decisions, respectively. This creates a scenario where and are conditionally independent given and . Mathematically, this can be expressed as:

This equation shows that the joint probability of and depends on their individual probabilities given and , and the distribution of and .

To prove the typical independence equation from the more complicated seeming equation above, we first find the marginal probabilities and and sum over the respective upstream variables:

Now we multiply and together:

Then we expand the product of the sums and we notice that this expression is the same as the expression for the joint probability obtained in step 1:

Because the two expressions are identical, we’ve shown that assuming that Alice’s and Bob’s decisions are influenced by separate, independent upstream variables and . With this extra machinery in hand, we proceed into the heart of our analysis.

Incorporating Dependence into Expected Utility

In The Clone Case for Cooperation section, I make the assumption that Alice and Bob deterministically make exactly the same choice. This time, let’s relax that assumption and suppose that because they’re rational agents presented with identical payoff matrices, they’ll conclude the same mixed strategies to be optimal. Though we’re not sure what their cooperate/defect probabilities will be yet, we just suppose that their GTO policies will be the same given the same payout structures.

To model this formulation, we go back to the start and split A’s expected utility based on her two possible actions.

Then, we split each of those cases for each of B’s possible choices.

Now we substitue in and use and .

Now we need thoughtful ways of modeling and . For Alice’s approximation of Bob’s probability of collaborating, , we can suppose that this matches Alice’s own probability of collaborating because of our assumption that rational agents will come to the same mixed strategy policies given identical payoff matrices. So, and .

This turns our into:

Which simplifies to:

Optimizing (Optional)

All that’s left to do from here is to find the which maximizes subject to the constraints that and . (The cases get slightly hairy so this section is skippable.)

Unlike the previous case where was linear, this time we actually can optimize via the derivative method suggested earlier. To do so, we compute:

Because is a second-degree polynomial, the parabola it sweeps out will have its vertex at . While there’s still some tidying up to do to determine which cases apply to which solutions, excitingly, the possible solution set is constrained to . This vertex won’t always be the maximum, and sometimes this vertex will be outside the valid probability range . In either of these countercases, the maximum value for will be either or .

We now describe the cases where the vertex is a valid maximum. For the vertex to be a maximum, the parabola must be concave. As we know, a parabola is concave when . So, Q+R-S-T<0 or . For the vertex to be valid, we say it must lie within the domain . First, to find the inequalities implied by , we note that because the parabola is concave down, the denominator will be negative, so . Second, implies that . We’ve found that, when and , then the optimal cooperation fraction for Alice will be .

We still have to sort out when will be or , and there’s a bit of tidying up to do when the denominator is zero (which actually was the case in the first example in the Traditional Analysis section where , , , and ). To solve this indeterminate case this when , we take the second derivative of : . Because in this case Q+R-S-T = 0, the second derivative is zero, implying that the expected utility curve will be linear.

So, when , , where here is a linear function of . In this edge case, we find that:

If , increases with . Therefore, is optimal.

If , decreases with . Therefore, is optimal.

If , is constant, and any is optimal.

For the other cases where we’re deciding between 0 or 1, we just need to compare with . That is, we need to compare with . That is, in these cases, if then , and if then .

Putting this all together:

If and , then the optimal cooperation fraction for Alice will be .

If then:

If , then .

If , then .

If , then any is optimal.

Otherwise:

If then .

If then .

If then .

Represented as one piecewise expression:

At last, if we make the assumption that A and B will conclude the same mixed strategy fractions to be optimal, given that they have identical payoffs, we now have a complete policy for determining their choices of optimal and .

Interpretation

Let’s interpret this solution a bit to make sure it accords with our intuitions. We start with the complicated seeming expression, , in the case where and .

This equation indicates that the optimal cooperation probability depends on the difference between the uarrel and eward payoffs, normalized by the overall differences between the players’ payoffs when they perform the same action minus when they perform different actions. This value should be between −1 and 1, which bounds between −1 and 1, as we would expect a probability to behave.

We continue to examine some important boundary conditions:

If uarrel eward, then , indicating that the player is indifferent between cooperation and defection.

If uarrel eward, then , indicating a higher probability of defection. (Remember the denominator is negative in this case.)

If uarrel eward, then , indicating a higher probability of cooperation. (Again the denominator is negative.)

This result seems reasonable because if the quarrel payoff is better than the reward for mutual cooperation , Alice is more likely to defect. Conversely, if is better, Alice is more likely to cooperate.

Let’s now try to apply this policy to our initial toy example. To see if our solution holds, we test the case when , , , .

First, we calculate . Then, because , we use the indeterminate case formula. We calculate . Finally, since, , the optimal policy is . This aligns with the interpretation that Alice should always cooperate in this particular setup.

We can also confirm this computationally as well.

import matplotlib.pyplot as plt

import numpy as np

# Define the payoffs

Q = -3

R = -2

S = -4

T = -1

# Define the cooperation probabilities

p_A_C = np.linspace(0, 1, 1000)

# Calculate the expected utility for Alice

E_U_A_G = (R - S - T + Q) * p_A_C**2 + (S + T - 2 * Q) * p_A_C + Q

# Plot the expected utility

plt.figure(figsize=(10, 6))

plt.plot(p_A_C, E_U_A_G, label=r'$E[U_A(G)]$', color='blue')

plt.xlabel(r'Cooperation Probability $p^*(A_C)$')

plt.ylabel(r'Expected Utility $E[U_A(G)]$')

plt.title('Expected Utility of the Game for Alice')

plt.axhline(0, color='black', linewidth=0.5)

plt.axvline(0, color='black', linewidth=0.5)

plt.grid(color='gray', linestyle='--', linewidth=0.5)

plt.ylim([np.min(E_U_A_G), np.max(E_U_A_G)])

plt.legend()

plt.showOverall, the analysis and derived equations seem to correctly capture the dependence and provide a policy for determining the optimal cooperation probability in this correlated Prisoner’s Dilemma. From what I can tell based on the tests I’ve performed, the solution works as intended and aligns with my theoretical expectations from the payoffs.

Incorporating Correlation into Expected Utility

If you’re not convinced by the case where we assume that two rational agents make exactly the same decision given identical payoffs or by the extended case where the two rational agents converge on the same optimal mixed strategy policy, I present a third generalization which introduces noise into our model.

To model the dependence structure between the agents’ decisions alongside some natural uncertainty, let’s introduce a correlation parameter that captures the degree of correlation between Alice’s and Bob’s choices due to the common upstream factors, . We can think of as a measure of how likely it is that if Alice cooperates, Bob will also cooperate, and similarly for defection. This parameter will range from −1 to 1, where:

indicates perfect positive correlation (Alice and Bob always make the same choice). When , this model degenerates to our clone case.

indicates no correlation (Alice and Bob’s choices are independent).

indicates perfect negative correlation (Alice and Bob always make opposite choices).

With this in mind, let’s redefine the probabilities , , , and to incorporate .

We assume the following relationships for the conditional probabilities based on :

These expressions ensure that the probabilities are consistent with the correlation parameter.

We can now substitute these probabilities into our expected utility equation for Alice from earlier:

Now, substitute the payoffs and use :

Simplify further:

Combine terms:

Simplify the coefficient of :

Because we’re looking to maximize , the optimal strategy for Alice depends on the sign of the coefficient of :

If , then . This implies that, if , then .

Similarly, if , then . This implies that, if , then .

In this section, we’ve incorporated the correlation parameter into our analysis to allow us to capture the dependency between Alice’s and Bob’s choices caused by common upstream factors. We’ve found that this extension suggests that the degree of correlation between the actions of the two players significantly influences their optimal strategies.

Specifically, when is sufficiently positive, there is a correlation threshold above which mutual cooperation becomes the dominant strategy for both players.

The Prisoners Do-Calculus

In the final most general case, we formalize the problem in the language of Pearl’s do-calculus. Instead of assuming a -model for correlation, we maintain a non-parametric perspective in this final stage.

We start again by defining the expected utility of the game to Alice:

This time the expected utility of the game to Alice is the sum of the expected utility of the game to her given that she chooses to cooperate and the expected utility of the game to Alice given that she chooses to defect, weighted by the optimal probability that she does either. We now decompose the new terms and .

That is,

And,

Given the dependence DAG earlier, we can incorporate our upstream variable into our refined expected utility calculation using do-calculus.

Substituting these probabilities back into Alice’s expected utility formula, we get:

Simplifying the expression:

This expression now shows the expected utility of the game to Alice in terms of her probability of cooperating and incorporates the dependencies through the common factor . We find that the expected utility of the game is maximized when:

If the inequality above is satisfied, then Alice should cooperate; otherwise, she should defect.

Confirmation

To test this most general form, we map it down to each of the particular cases we explored in the preceding sections.

Traditional Independent Case

In the first solution we assume independence between Alice’s and Bob’s choices. This means and .

We substitute these probabilities into our general utility function, yielding:

This simplifies down to:

This is exactly the expression we found for Alice’s expected utility in that section, so pat on the back, onto the next section.

Clone Case for Cooperation

In the second model, Alice and Bob are assumed to make exactly the same decision, implying perfect correlation. Thus, and . This simplifies the general solution as follows:

Because , is maximized when . Just as we anticipated, Alice should always cooperate, aligning with our expectations again.

Identical Mixed Strategies

In the next model, we extend and suggest that Alice and Bob converge on the same mixed strategy. That is, and . This gives us:

This is the same result we expected as well, confirming our general causal model.

Correlated Mixed Policy

In the final model, we introduced correlation parameter to incorporate possible noise.

We make the following definitions:

From this, the corresponding -model falls out.

The language of do-calculus is quite appealing for its generality, and it, in my eyes, models the reality of the problem at hand extremely well.

Conclusion

This framework implies that, despite typical Game Theory 101 lectures suggesting 100% selfish play in one-shot games like this, if you believe that you think sufficiently similarly to your counterparty, the game theory optimal policy would be to faithfully cooperate and both walk away free.

A broader conclusion of this analysis is to always consider higher-level dependencies, even when two things seem to be perfectly independent. We should also include all possible information into our decisions, and this includes the decisions themselves. In this Prisoner’s Dilemma situation, we can consider this in some sense to be the opposite of “adverse selection”. Oftentimes, in adversarial games, conditional on performing an action, you’re less happy. There are many possible reasons for this, but a common reason is that you’re acting in a market and your ability to perform an action means that no one else in the market was willing to do what you just did, which gives you information that you might be making a mistake. This could be hiring a candidate where your ability to hire them means no other firm wanted them at the price you’re willing to pay. Or this could be winning an auction where your winning means that no one else was willing to pay the price that you paid. However, in the case we have at hand, there’s a sort of “advantageous selection”. This is because, if you choose to cooperate, now you get extra information that someone else in a similar position also likely cooperated as well, which is quite a pleasant type of selection.

For those who remain compelled by the original argument that, “still, if you know your opponent is going to cooperate, you’re better off defecting to serve no time instead of 1 year”, I might share some of my ideas on why I suspect that the recursive expected utility maximization decision algorithm that I present is superior in a follow-up essay. But for now, I’ll just say that while the Causal Decision Theorists are stuck in their (defect, defect) “rational” Nash equilibria, my co-conspirators and I will be faithfully cooperating and walking free into the warm sun of Pareto Optimality.

Acknowledgements: Thank you Alok Singh, Baran Cimen, Keegan McNamara, and Paul Schmidt-Engelbertz for reading through the draft and providing helpful guidance.

I’m sharing a comment from Reddit here because I think this will be a common response.

Fantastic question, this gets straight to the heart of the analysis.

I wholeheartedly agree that whatever Alice does within her cell will not have any causal effect on Bob’s decision. They are separate in the sense that Alice cannot ripple the atoms in the universe in any way to affect Bob differently in either case. There are no downstream causal dependencies between them.

However, there is upstream causal dependence. Here’s an analogy. Imagine I have two papers with C(ooperate) written on them and two papers with D(efect) written on them. Then I blindly select either two Cs or two Ds. Whichever two papers I choose, I put them in envelopes labeled A and B, and I take those envelopes and shoot them each a light year in opposite directions along with Alice and Bob correspondingly. Before Alice opens her envelope up, she has no idea what Bob has––to her, it really is 50% C or D. When she opens up her envelope and sees that she has a C, she hasn’t causally affected Bob’s envelope, but now she does have information about it. Namely, she knows that Bob also has a C. When Alice sees that she has a C or a D, she gets new information about D’s envelope because of the upstream causal dependence I incorporated by placing the same letters in the envelopes. This corresponds with the clone case. To get the more subtle cases, suppose I choose Alice’s envelope content randomly. Then I can flip a weighted coin to determine whether or not to choose Bob’s contents randomly or to put in the same contents that Alice has. This corresponds to the rho-analysis in the second to last section.

Hopefully this metaphor helps clarify what exactly I mean when I say that Alice and Bob’s decisions share upstream causal dependencies.

I didn’t read through the math, but appreciate this post. Are you familiar with Douglas Hofstader’s “Metamagical Themas”? It’s a collection of articles he wrote for Scientific American in the early 1980s. I haven’t read it in a while but I remember him arguing persuasively that the right thing to do is cooperate in the prisoner’s dilemma. It was a concept he called “superrational” thinking (which I guess now has its own wikipedia page: https://en.wikipedia.org/wiki/Superrationality). You’ve generalized this concept beyond superrational thinkers and towards real world cases where even minor correlations may suggest cooperation is the rational strategy.

Again, from Reddit:

I appreciate the kind words. I can go back and clarify the language of the post to convey that I’m not actually smuggling in a form of iterated prisoner’s dilemma, rather this is specifically describing a one-shot play of the prisoner’s dilemma where Alice and Bob need not have ever met before or ever meet again.