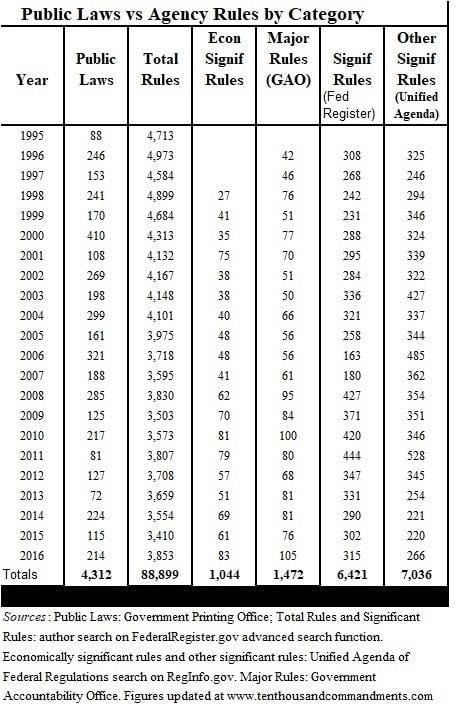

The United States has a lot of regulations.

So does the EU.

No matter how you count them, each has tens of thousands of rules across all the domains it is responsible for.

This does not have to be a problem. The world is a complicated place, and the number of fields, industries, subjects, topics, and magisteria a government might rightfully want rules for is large.

That being said, laws and regulations do cause problems: unintended consequences, ratchet effects, slower innovation, and obstacles to building everything from houses and green energy to nuclear power.

The Problems With Regulations And Laws

The Ratchet

A big problem with regulations, and with laws in general, is that they tend to function like a ratchet.

Once a law is passed, it’s a law, and the same goes for regulations. They don’t get old, they don’t fade, and they don’t die.

In other words, the number of laws and regulations tends to increase monotonically, and the old, the outdated, and the silly stay on the books along with everything else.

This creates two problems:

The pace of innovation and construction of new things slows down over time, as more and more rules and bureaucracy are implemented, and

The body of rules grows until every action breaks some rule, at which point the de facto law becomes the subset of laws which are enforced—a decision made by those who enforce the law, giving them arbitrary power over citizens. In other words, when everyone is guilty of something, anyone can be punished for anything.

The Tech Debt

In software development, there’s a concept called Tech Debt. When a company or developer writes software, they’re often under a deadline or dealing with current problems, and so the software they create is made hastily, to solve the immediate problem, and without sufficient tests.

The result is a pile of software that grows more complicated and difficult to work with over time, as more and more one-off solutions are implemented and decisions to prioritize current crises over future needs are made. The pace of development slows, the code becomes harder to work with or fix, and eventually the whole thing has to be thrown out because it finally breaks in a way that can’t be fixed.

Dealing with Tech Debt is practically a field of software engineering by itself, because it genuinely matters—the livelihood of many companies depends on their software continuing to function, both now and in the future.

Laws and regulations, on the other hand, have no such constraint.

There is no automated process by which old, outdated, or stupid laws are disposed of, and without that it falls to actual legislators to repeal laws.

Who is going to spend political capital repealing dumb laws when that capital could be better spent fulfilling campaign promises or riding the current political hobbyhorse?

And so laws and regulations pile up, inevitably becoming a massive swamp that only lawyers and experts can navigate—thus making everything that has to do with laws/regulations (most things, at least in the physical world in the US) increasingly difficult and costly over time.

The Incentives

It would, in general, be a mistake to blame a specific legislator or regulator for these problems. Pointing fingers and assigning blame is a cherished human tradition, but I have yet to see it actually fix anything in recorded history.

No, the real problem is the incentives that legislators and regulators face. Think about it—what are these people rewarded for, and what are they punished for?

When was the last time a legislator was cheered for removing a stupid law, except when it happens to be a partisan issue?

Legislators are expected to make laws and are rewarded for making them, not for repealing them. The closest they might get is voting against a new law, and that usually winds up being a partisan issue anyway.

Regulators have it worse. Legislators have undoubtedly repealed laws in the past, but when has regulation ever been completely removed?

The issue is that regulation tends to be about safety—construction is regulated so that buildings are safe, food manufacturing is regulated so people don’t get poisoned, and so on. Which means that a regulator is rewarded for (and perhaps more importantly, can always justify) adding more regulations.

Can’t be too careful, right? What if they don’t add the regulation, and someone gets hurt? What if someone dies?

And if the regulator didn’t add the regulation (or, worse, removed an existing one), and someone gets hurt or killed, well, that sounds like a lawsuit, doesn’t it?

So regulators are incentivized only to add regulation, never to repeal or simplify it, no matter how much regulation already exists or how stupid it is. Regulators are never rewarded for the pace of construction or innovation, only punished when things go wrong. Why shouldn’t they just keep adding regulations to everything?

Possible Meta-Regulations

In order to correct this problem, I suggest that we—you guessed it—add regulations!

Specifically, I think that there ought to be laws and regulations governing how laws and regulations work—meta-regulations, if you will. These meta-regulations would hopefully help to correct some of the above problems.

To be clear, I don’t know if these would work, and I don’t think all of them should necessarily be applied at the same time—but I think that each of them could be a serious boon to our government in general.

Within The Overton Window

I’ll start with the ideas I think could feasibly happen.

Sunset Clauses

For those unaware, a sunset clause is an expiration date on a law. When the date comes up, the law is repealed by default unless the relevant legislative body votes to renew it.

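Sunset clauses have a long history: laws with them already exist, and some have already expired, which makes this the most realistic meta-regulation available. Texas, for instance, already applies something similar to most of its state agencies, which are periodically reviewed and abolished unless the Legislature votes to continue them.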

To be specific, I would advocate that:

Every law passed by Congress—and every regulation made by the federal government—must include a sunset clause of no more than 30 years.

This would be a radical change to how our government functions, but I believe it would both a) make sense philosophically and b) have a large, tangible benefit.

Part of the entire idea of a democracy or republic is that government is only legitimate when it comes with the consent of the governed—and yet no one consented to the governance or laws made before they were born!

As for the benefit, it creates an automatic process for removing old, outdated, and useless laws from the books, which would clean up a considerable amount of the Tech Debt by default. It also shifts the status quo from keeping all laws and regulations to only keeping the ones that are relevant to the current day, which should help.
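To make the "repealed by default" mechanism concrete, here is a minimal Python sketch of a statute book in which every law carries a sunset no more than 30 years after it was passed or last renewed, and simply drops out of force unless renewed. The `Law` record, the `renew` and `in_force` functions, and the example laws are hypothetical illustrations, not a model of any real legislative system.

```python
from dataclasses import dataclass

MAX_SUNSET_YEARS = 30  # proposed upper bound on any sunset clause


@dataclass(frozen=True)
class Law:
    """Hypothetical statute record; field names are illustrative only."""
    name: str
    passed: int  # year the law was enacted or last renewed
    sunset: int  # year the law lapses unless renewed again

    def __post_init__(self) -> None:
        # Enforce the meta-regulation: the sunset must fall 1 to 30 years
        # after the year the law was passed or renewed.
        if not 0 < self.sunset - self.passed <= MAX_SUNSET_YEARS:
            raise ValueError(f"{self.name}: sunset must be 1-{MAX_SUNSET_YEARS} years out")


def renew(law: Law, year: int, term: int = MAX_SUNSET_YEARS) -> Law:
    """A renewal vote re-passes the law, restarting the clock under the same cap."""
    return Law(law.name, year, year + term)


def in_force(laws: list[Law], year: int) -> list[str]:
    """Laws lapse by default: only those not yet past their sunset remain."""
    return [law.name for law in laws if law.passed <= year < law.sunset]


laws = [Law("A", 1990, 2020), Law("B", 2010, 2040)]
print(in_force(laws, 2025))                          # A has lapsed
print(in_force([renew(laws[0], 2019), laws[1]], 2025))  # A was renewed in time
```

In this sketch, renewal restarts the clock from the renewal vote rather than extending the old date, so no law can run more than 30 years without a fresh vote.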

Cost-Benefit Analysis

The US Congressional Budget Office (CBO) is required to estimate the costs of almost every bill that Congress is seriously considering passing.

What they are not required to do, on the other hand, is estimate the benefits of a given bill, whether in increased GDP, future tax income, deficit reduction, or any non-monetary effect.

Many of us are taught as children how to make lists of pros and cons as a tool to help us make important decisions—and yet the people making our laws are only required to look at the costs of a law, not at the full context of its effects.

I would advocate that:

For all laws and regulations, a full cost-benefit analysis must be done and made public.

While there are plenty of things in the world that can’t be quantified, our government should at least be responsible for considering what can be. (Note that I’m not advocating that every law or regulation must have a larger quantifiable benefit than cost—just that the analysis is done, and made public.)
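For the quantifiable part, "done and made public" could look something like the minimal sketch below: discount each year's projected costs and benefits to present value and report both totals side by side, with no pass/fail verdict attached. The function names, the 3% default discount rate, and the example cash flows are placeholder assumptions, not figures from any real analysis.

```python
def present_value(flows: list[float], rate: float) -> float:
    """Discount yearly amounts (year 0 first) back to today's value."""
    return sum(amount / (1 + rate) ** year for year, amount in enumerate(flows))


def cost_benefit_report(costs: list[float], benefits: list[float],
                        rate: float = 0.03) -> dict[str, float]:
    """Report both sides of the ledger.

    Deliberately returns no approve/reject decision: the proposal is only
    that the analysis be done and published, not that benefits must win.
    """
    pv_costs = present_value(costs, rate)
    pv_benefits = present_value(benefits, rate)
    return {
        "pv_costs": pv_costs,
        "pv_benefits": pv_benefits,
        "net_benefit": pv_benefits - pv_costs,
    }


# Hypothetical bill: large up-front cost, benefits arriving in later years.
print(cost_benefit_report([100, 10, 10], [0, 60, 60], rate=0.0))
print(cost_benefit_report([100, 10, 10], [0, 60, 60]))
```

With no discounting, these made-up flows break even (120 against 120). At the 3% placeholder rate they show a small net cost, because the benefits arrive later than the costs. That is the kind of detail a published analysis would make visible.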

I believe that this would force legislators and regulators to consider the full context of their decisions in a way that they currently don’t have to, which should hopefully improve their decision-making. Since half of this process already exists, I think it would be straightforward, if not easy, to implement the other half (at least for laws).

It’s also a matter of simple common sense.

Outcomes, Not Methods

The free market functions at its best when given goals or criteria to meet, and left to figure the methods out itself. That is the heart of innovation: given a what, figure out the how.

A good regulation ought to work with the free market by specifying the what, and leaving the how to innovators. Building codes can function this way, specifying certain levels of earthquake resistance or wind forces a building must be able to endure, and leaving the how in the hands of builders.

Other regulations, however, specify the how, leaving no room for improvement or innovation. This leads to stagnation.

A primary example of the latter is the Nuclear Regulatory Commission’s As Low As Reasonably Achievable (ALARA) doctrine for radiation exposure, which functionally destroyed new nuclear power in the US for decades.

This is also an example of a regulation with an unclear or changing meaning, which is difficult to adapt to and easy to litigate against, again slowing down innovation over time and tying entire industries up in red tape.

I would advocate that:

A regulation or law must pertain to a desired outcome, not a specific method.

A good regulation is usually an attempt to mitigate or eliminate a negative externality of a free market—it should do that by setting a straightforward, clear outcome that the market must meet, and then leaving the how to the market.
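The difference between the two kinds of rule can be shown with a toy version of the building-code example. A prescriptive rule checks which method was used. An outcome rule checks only whether the tested result meets the threshold, so a novel design that performs well can pass. The `Design` fields, the approved-method list, and the 1.5 kPa threshold are all hypothetical and are not taken from any actual building code.

```python
from dataclasses import dataclass


@dataclass
class Design:
    method: str                  # how the builder chose to meet the requirement
    tested_wind_load_kpa: float  # wind pressure the design withstood in testing


APPROVED_METHODS = {"steel frame", "reinforced concrete"}  # hypothetical list
REQUIRED_WIND_LOAD_KPA = 1.5                               # hypothetical threshold


def passes_method_rule(d: Design) -> bool:
    # Prescriptive ("how"): only listed methods allowed, regardless of performance.
    return d.method in APPROVED_METHODS


def passes_outcome_rule(d: Design) -> bool:
    # Performance-based ("what"): any method is fine if the result meets the target.
    return d.tested_wind_load_kpa >= REQUIRED_WIND_LOAD_KPA


novel = Design("cross-laminated timber", 2.0)
weak = Design("steel frame", 1.0)
print(passes_method_rule(novel), passes_outcome_rule(novel))
print(passes_method_rule(weak), passes_outcome_rule(weak))
```

The method rule rejects the better-performing novel design and accepts the weaker approved one. The outcome rule does the reverse, which is the sense in which it leaves the "how" to innovators.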

Outside The Overton Window

The previous three meta-regulations all have precedent in existing laws and regulations.

The next three, so far as I am aware, do not.

Consider them more speculative: suggestions for counteracting the problems with incentives outlined above.

A Regulation Cap

One of the issues highlighted above is that regulators are only ever incentivized to add new regulations, never to repeal them. Legislators face similar incentives (with the exception of partisan legislation), but for this suggestion the focus will be on regulators.

One way to change these incentives would be to cap the total number of regulations a given agency or regulator may have in force at any one time.

With a maximum in place, once that maximum is reached, regulators will face a different set of incentives than their current, misaligned ones. It would only be possible to add a new regulation by removing an existing one.

This would force regulators to genuinely weigh the costs and benefits of each existing regulation against those of the proposed one, keeping only the regulations that achieve the most effect for their cost.

The Caveat: I freely admit that this cap would have to be very carefully executed, lest it be dodged or gamed by regulators. Having a maximum number of regulations might just make each regulation ridiculously long and complicated. A maximum word count for all regulations might solve that problem, but it would create other problems.

I would advocate that:

The total number of regulations a given agency may have in force must be no more than some specific number, and each regulation must be no more than some specific number of words or mandates.

By constraining regulators to a finite number of regulations, the most valuable regulations would hopefully be kept, while also minimizing the amount of pointless red tape.
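Mechanically, the cap is a fixed-capacity registry with a "one in, one out" rule once it is full, plus a per-regulation length limit to blunt the obvious workaround of merging many rules into one giant one. The following is a minimal sketch; the `RegulationRegistry` class, its method names, and the caps in the example are hypothetical.

```python
from typing import Optional


class RegulationRegistry:
    """Hypothetical per-agency registry enforcing a count cap and a word cap."""

    def __init__(self, max_regulations: int, max_words: int) -> None:
        self.max_regulations = max_regulations
        self.max_words = max_words
        self.in_force: dict[str, str] = {}  # regulation id -> text

    def add(self, reg_id: str, text: str, repeal: Optional[str] = None) -> None:
        """Add a regulation; once at the cap, an existing one must be repealed in exchange."""
        # Validate everything first so a rejected proposal changes nothing.
        if len(text.split()) > self.max_words:
            raise ValueError(f"{reg_id!r} exceeds the {self.max_words}-word limit")
        if reg_id in self.in_force:
            raise ValueError(f"{reg_id!r} is already in force")
        if repeal is not None and repeal not in self.in_force:
            raise KeyError(f"cannot repeal {repeal!r}: not in force")
        count_after = len(self.in_force) + 1 - (1 if repeal is not None else 0)
        if count_after > self.max_regulations:
            raise ValueError("cap reached: name an existing regulation to repeal")
        # All checks passed: apply the swap.
        if repeal is not None:
            del self.in_force[repeal]
        self.in_force[reg_id] = text


registry = RegulationRegistry(max_regulations=2, max_words=5)
registry.add("R1", "label all food allergens")
registry.add("R2", "test water supply yearly")
registry.add("R3", "inspect elevators annually", repeal="R1")  # one in, one out
print(sorted(registry.in_force))
```

The ordering matters: all checks run before any mutation, so a regulator cannot repeal something and then fail to add its replacement.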

Repeal Before Regulation

“Before passing a new law, however, one should always ask, ‘Can we accomplish the same end by repealing—or liberalizing—an existing law?’”

- Bryan Caplan, Repealing Political Discrimination

Given that the purpose of meta-regulations is to reduce the complexity and tech debt of laws and regulations, Bryan Caplan’s suggestion above is worth adding to the list.

I would advocate that:

If the purpose of a new law or regulation can be accomplished via the repeal or liberalization of an existing law or regulation, the latter must be preferred, with a new law or regulation only implemented if the former has been tried and failed.

The Caveat: This might have far-reaching consequences that I can’t foresee—for instance, it might significantly slow down any lawmaking as everyone has to check the proposed law against every existing law. I’d be willing to risk those consequences, however, since the primary consequence would be a reduction in the number of laws and regulations.

Error Budgets

There is a fundamental tension in software development between software developers and software operators—that is, between those who are tasked to make the system better and those who are tasked to keep the system running.

This tension exists because of the possibility of error—any change to the system, including ones designed to improve it, poses the risk of damaging the system or taking it offline.

The discipline that manages this tension, Site Reliability Engineering (SRE, closely related to DevOps), has a tool for it called the error budget, which is designed to maximize development and innovation while keeping the system running as much as possible.

Definition And Motivation

An error budget is a finite number of mistakes, agreed upon ahead of time, that a system is allowed to make per period of time. A given application may be allowed five mistakes per year, for instance.

Two states may then exist for the system, with the following consequences:

The system has not yet reached its error budget for the year, in which case development/changes/innovation may proceed at full speed ahead.

The system has reached its error budget for the year, in which case no changes will be permitted (except for emergencies) until the year is up, and the error budget resets.
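The two states above reduce to a simple gate on changes. This minimal sketch assumes the budget is counted in discrete incidents per year, as in the five-mistakes example. In practice, SRE teams usually derive the budget from an availability target instead (a 99.9% target leaves 0.1% of the time as budget). The `ErrorBudget` class and its method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ErrorBudget:
    allowed_per_year: int  # agreed upon ahead of time, e.g. 5
    errors_this_year: int = 0

    def record_error(self) -> None:
        self.errors_this_year += 1

    def exhausted(self) -> bool:
        return self.errors_this_year >= self.allowed_per_year

    def may_change(self, emergency: bool = False) -> bool:
        # State 1: budget remaining, so changes proceed at full speed.
        # State 2: budget spent, so changes are frozen except for emergencies.
        return emergency or not self.exhausted()

    def new_year(self) -> None:
        # The budget resets at the start of each period.
        self.errors_this_year = 0


budget = ErrorBudget(allowed_per_year=5)
for _ in range(5):
    budget.record_error()
print(budget.may_change(), budget.may_change(emergency=True))
```

Nothing in the gate penalizes spending the budget. Up to the limit, mistakes are simply the expected cost of making changes.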

This balances the incentives of everyone involved—those making changes can go ahead and do so, while aware that they have some number of mistakes in the budget, and once those mistakes have been made, those who want to keep the system running are given priority in the decision-making process.

What is key to understanding how error budgets incentivize innovation is that the budget is supposed to be used up every time.

In other words, if the budget wasn’t used up, then changes weren’t being made fast enough. The budget allows people to make mistakes while still encouraging them to innovate by precisely defining how many mistakes are tolerated during a given period of time.

Regulatory Failures In The Trade-off

Like software teams, many regulatory agencies face a trade-off between innovation and safety. The difference is that for many of these agencies lives are on the line, which causes them to err so far on the side of safety that they actually get people killed.

The FDA, for instance, is so safety-conscious that they delay approving life-saving medicine or vaccines.

For each decision the FDA makes, there are two death tolls:

(1) The people killed because the FDA approved an unsafe medication.

(2) The people killed because the FDA didn’t approve (or delayed the approval of) a life-saving medication. (This also includes all the life-saving medications that could have existed if the process of bringing them to market were faster and cheaper.)

However, the regulators of the FDA are only punished for (1), not (2). This leads them to err too far on the side of not-approving (or delaying) life-saving medication, and gets people killed.

The Error Budget

To correct this, as morbid as it may sound and as politically unpalatable as the idea is, I believe that an error budget (in this case, a number of deaths per year) is a good solution, perhaps the best one.

The fact of the matter is that people die, and the goal of a regulatory body serving the American people should not be to minimize only the deaths that can be laid directly at their feet, but to minimize the total deaths from causes (1) and (2).
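The argument can be stated as a small optimization. As a simplifying assumption, let a single number $s$ stand for how strict the approval process is. Let $D_1(s)$ be the deaths of type (1), which fall as $s$ rises, and $D_2(s)$ the deaths of type (2), which rise with $s$. Assume both are smooth and the minimum of their sum lies strictly inside the allowed range:

$$
\text{current incentive:}\quad \min_{s} D_1(s) \;\Longrightarrow\; s = s_{\max}
$$

$$
\text{proposed goal:}\quad \min_{s} D_{\text{total}}(s) = D_1(s) + D_2(s) \;\Longrightarrow\; D_1'(s^*) = -D_2'(s^*)
$$

At the optimum $s^*$, the last increment of strictness prevents exactly as many deaths of type (1) as it causes of type (2). Anything stricter than $s^*$ trades fewer visible deaths for more total deaths.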

Innovation must be incentivized and the total death toll must be minimized.

Thus, I propose:

Regulatory agencies governing safety, including but not limited to the FDA, EPA, NRC, and CDC, must adopt an error budget. Each agency must count the errors per year arising within its domain, and if that number is below the error budget, regulatory requirements must be loosened. Once the budget has been filled, regulatory requirements must be tightened and no further risks taken until the following year.
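As a rough sketch of the proposed yearly rule, assume that errors can be counted consistently month by month. "Loosen" and "tighten" are treated here as abstract policy directions. The `review_policy` function and the monthly counts below are hypothetical.

```python
def review_policy(monthly_errors: list[int], annual_budget: int) -> list[str]:
    """Return the policy direction in force during each month of one year.

    Hypothetical rule: while errors to date are below the budget, requirements
    are loosened; once the budget is filled, they are tightened and stay
    tightened until the year (and the budget) resets.
    """
    directions = []
    errors_to_date = 0
    for count in monthly_errors:
        # Each month's direction is decided from errors observed before it.
        directions.append("loosen" if errors_to_date < annual_budget else "tighten")
        errors_to_date += count
    return directions


print(review_policy([1, 0, 2, 1, 0, 0], annual_budget=3))
```

By the same logic as the software version, an agency that ends the year with budget left over was, under this rule, not loosening fast enough.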

The Caveat: The implementation of an error budget would be very different for each agency, and the idea would need to be tailored appropriately to each situation, but I believe that this would help regulatory agencies adopt policies and regulations that both incentivize innovation and minimize the total death toll.

Conclusion

Laws and regulations are a part of life, and have the potential to do a great deal of good. The way that the current systems work, however, incentivizes lawmakers and regulators to make decisions that are not good for the long-term health of those systems.

The above meta-regulations would, I hope, change those systems and incentives such that the laws and regulations produced better serve the people they govern.

Comments

Regarding the claim that no one consented to laws made before they were born: in political philosophy, this is not what it means to consent to be governed. Most social contract theorists would argue that by living within a society or state, you have implicitly consented to being governed; that is the nature of the social contract. As a member of that society, you consent not just to the laws being passed now, but to all laws previously passed. Further, this idea of explicit consent doesn’t make sense: if someone wants to avoid being subject to a certain law, they cannot simply decline to ‘consent’ to it.

That a democracy is legitimate in the sense you describe is a truism: according to these social contract theorists, a democracy by definition comes with the consent of the governed. There are also other sources of legitimacy, such as justified coercion and the right to rule.

That is not to say that your overall point about sunset clauses is invalid, but I feel this section subtracts from your reasoning rather than adding to it. From my perspective, the benefit section already offers enough reasoning to support your view without a philosophical argument.

I’d like to add that this is an interesting article, and I’m interested in researching some of these suggestions further.

“Implicit consent” sounds like the exact opposite of “consent.”

If we taboo the words, “consent” means that someone said “yes”, or nodded, or signed a paper. How specifically is “implicit consent” different from “the person disagrees, but doesn’t really have much of a choice”?

What do you need to do so that people finally stop saying that you “consent implicitly”? Some say that you should leave the society. But anywhere else is a different society; what if I don’t consent to any of their rules? And by the way, by this logic, the popularity of ideas like seasteading and Mars colonization seems like quite strong evidence that many people do not “consent implicitly” even in this passive sense; it is just difficult to find an alternative, and they are working on it. It’s like telling someone to leave an island if they disagree, and they say “give me a few hours, I am building a boat”: I would interpret their preference to leave the island as obvious from the moment they started building the boat, not just when the boat finally left the shore.

Unless “implicit consent” is just a convenient legal fiction, i.e. a lie that makes things simple for the people who matter.

Your point about it being difficult to leave society is one of the most common objections to social contract theory. However, you have misunderstood what implicit consent is. This article offers a clear explanation of what explicit and implicit consent are. I recommend reading it in full, but I’ll quickly draw out the most relevant sections.

This is the form of contract that you spoke about as “consent” above.

This is what is meant by implicit consent. Implicit consent differs from the person disagreeing because they still take the action they would take if they explicitly consented, from which it can be deduced that they do consent. By ordering the burger, you show that you consent to paying for it. If the waitress brought you the burger and you refused to pay, this would be ridiculous, as you consented to pay when you ordered it. Does this make sense? Not consenting would mean not ordering the burger in the first place.

There is also a third kind explored in the article, hypothetical consent, which might be worth a read. I acknowledge that your question about what people need to do so that others stop saying they consent is difficult to answer, but it is one of the most central questions in social contract theory. As such, I don’t have a single answer for you, but I hope this cleared up a little what ‘implicit consent’ actually means. If you’d like to discuss this barrier of consent further, I’d be happy to.

The problem with “implicit consent via acceptance of services” is that if someone takes you as a slave, unless you choose to starve to death in protest, you have “implicitly consented” to slavery by eating the food they gave you. This argument proves too much; it legitimizes not only governments but also slavery.

For the record, I am not an anarchist, and my objection to violating someone’s consent is not absolute. From my perspective, we live in a universe that doesn’t care about our freedoms or happiness, and sometimes there is simply no perfect solution; trying to make people happy in one way will only make them unhappy in another. So I see consent as important, but not absolute. If building a functional society requires occasionally violating someone’s consent, I guess it should be done, because the alternative is much worse. But it should not be done cheaply, and we should not pretend that it didn’t happen, which, in my opinion, is what social contract theory is trying to do.

I understand what you’re saying. I wasn’t familiar with the exact definitions of the political theory you cite.

I do think that it’s reasonable to be bound by laws made before one was born, but only to a certain extent. Society changes over time, and over a long enough period of time I would argue—philosophically—that the society that passed the law is no longer the society I was born into. (And yet the law is still binding, because the law doesn’t have an expiration date.)

That being said, thanks for the reply, and I appreciate the feedback!