A Way To Be Okay

This is a post about coping with existential dread, shared here because I think a lot of people in this social bubble are struggling to do so.

(Compare and contrast with Gretta Duleba’s essay Another Way To Be Okay, written in parallel and in collaboration.)

As the title implies, it is about a way to be okay. I do not intend to imply it is the only way, or even the primary or best way. But it works for me, and based on my conversations with Nate Soares I think it’s not far from what he’s doing, and I believe it to be healthy and not based on self-deception or cauterizing various parts of myself. I wish I had something more guaranteed to be universal, but offering one option seems better than nothing, for the people who currently seem to me to have zero options.

The post is a bit tricky to write, because in my culture this all falls straight out of the core thing that everyone is doing and there’s not really a “thing” to explain. I’m sort of trying to figure out how to clearly state why I think the sky is often blue, or why I think that two plus two equals four. Please bear with me, especially if you find some parts of this to be obvious and are not sure why I said them—it’s because I don’t know which pieces of the puzzle you might be missing.

The structure of the post is prereqs/background/underlying assumptions, followed by the synthesis/conclusion.

I. Fabricated Options

There’s an essay on this one. The main thing that is important to grok, all the way deep down in your bones, is something like “impossible options aren’t possible; they never were possible; you haven’t lost anything at all (or failed at anything at all) by failing to take steps that could not be taken.”

I think a lot of people lose themselves in ungrounded “what if”s, both past-based and future-based, and end up causing themselves substantial pain that could have been avoided if they had been more aware of their true constraints. To be clear: yes, sometimes people really are “lazy,” in the sense that they had more to give, and could have given it, and not-giving-it was contrary to their values, and they chose not to give it and then things were worse for them as a result.

But it’s also quite frequently the case that “giving more” was a fabricated option, and the person really was effectively at their limit, and it’s only because they have a fairy tale in which they somehow have more to give that they have concluded they messed up.

(Related concept: counting up vs. counting down)

An excerpt from r!Animorphs: The Reckoning:

The Andalite’s stalks drooped. <Prince Jake, you speak as if you still intend to maintain control. As if you believe control is a thing that is possible. You know how the [ostensibly omniscient and omnipotent] Ellimist works. You know you cannot outmaneuver it. Any attempt to predict the intended outcome, and deliberately subvert it, will fail. You should be riding this wave, not trying to swim through it.>

...

<There are many things which seem possible which never were, in fact. You act as if we were on a path, and Cassie’s appearance has dragged us off of it—temporarily, pending a deliberate return. But consider. Rachel was forewarned of Marco’s reaction—may well have been placed here specifically to guard against it. The path you imagined us to be on is not real. We were never on it. We were on this path—always, from the beginning—and simply did not know it until now. To pretend otherwise is sheer folly.>

Humans appear to have some degree of agency and self-determination, but we often have less than we convince ourselves. Recognizing the limits on your ability to choose between possible futures is crucial for not blaming yourself for things you had no control over.

(In practice, many people need the opposite lesson—many people’s locus of control is almost entirely external, and they need to be woken up to their capacity for choice and stop blaming everything on immutable factors. But for the purposes of this discussion, on A Way To Be Okay, the relevant failure mode is erroneously thinking that you had more internal control than you did.)

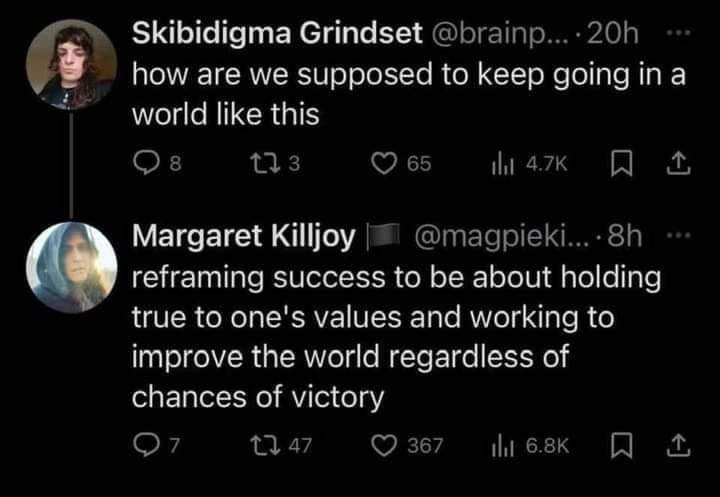

II. Victory Conditions

There’s a snippet of advice that I’ve found myself giving over and over again, especially to e.g. young middle school students under my care who are struggling with parents or friendship or early romance. It doesn’t have a standard phrasing, but it goes something like “it’s a bad idea to put your victory conditions inside someone else’s head.”

For instance: to be happy or okay only if someone else is happy or okay.

For instance: to feel settled only if your parents admit that they mistreated you.

There’s the trope of a character with a revenge plot who has everything they need to succeed, except for the fact that they need their enemy to know who it was who defeated them, and that complicates things and overexposes them and they end up losing.

If you make [feeling okay] contingent on someone else feeling a certain way or acknowledging a certain fact or taking a certain action, then you’re effectively putting your okay-ness in their hands; they can deny you closure or stability or whatever via an act of pure spite, or simply by seeing things differently, etc. It’s unstable, the sort of situation where even if you do turn out okay it’s only because you got lucky.

In addition to the core point, about not setting your victory conditions in places where you have no agency and no control, there’s a sub-point or prerequisite point, which is: did you know you can choose where to put your victory conditions?

That is, many people don’t realize that they can, via an intentional decision, shift what they care about in a given situation, or choose to redefine (local) victory. When I explain to a middle schooler that it’s a bad idea to wait on their parents to acknowledge unfairness, because what if their parents are just stubborn about it, they usually go “oohhhh … huh,” and then decide to declare victory after some other, more tractable benchmark.

III. Sculptors and Sculptures

I am diachronic; sometimes this gets misconstrued as never-changing or whatever, but it’s not quite that.

My go-to metaphor here is that there is a sculpture, which is the present-day version of a person (their current skills, appearance, relationships, projects, goals, etc), and a sculptor, which is the Person With A Vision, Currently Carving Toward That Vision.

By “diachronic,” what I mostly mean is that the sculptor hasn’t evolved much; at no point have I switched from carving This Statue to instead carving That Statue.

I don’t think that this particular Way To Be Okay requires someone to be diachronic rather than episodic; I mention this more to point at the distinction between locating your identity in your present snapshot state, versus locating your identity in the-thing-that’s-guiding-your-evolution. A lot of people see themselves as what they presently are, and thus if they switch to something else, they feel like they have deeply changed, as a person.

There’s another way to do things, which is to have an evaluator that evaluates options in the moment, and consistently chooses the best option according to your values, which (since the inputs to that evaluation function can change) will sometimes result in you spending three years as a public school teacher and other times have you vanish into the wilderness with a bunch of AI researchers during COVID, all without feeling like the overall thing-you’re-doing has changed at all.

(The equation y = 2x + 7 is the same equation even though sometimes it tells you that y is 13 and other times it tells you that y is 7 and other times it tells you that y is 0.71681469282.)

IV. Afghanistan

The United States occupied Afghanistan for nearly two decades.

This was a mixed bag to say the least, but it is unambiguously true that US occupation led to some better outcomes, e.g. greater freedom and opportunity for women.

When the United States pulled out of Afghanistan rather sloppily in August of 2021, the Taliban quickly took over, and the year-and-change since has seen a reversion of almost all of the progress on gender equality, with the remaining delta seeming likely to evaporate soon.

Many critics have said things to the effect of “we spent $X trillion and thousands of lives to take Afghanistan from oppressive Taliban rule to … oppressive Taliban rule.”

These people are missing (some of) the point.

For nearly two decades, girls in Afghanistan grew up going to school; many of them went to college; all of the individuals in that generation lived a brighter life than they otherwise would have.

That may not have been worth the cost. I’m not arguing about the overall net, or claiming that it was correct to be there.

(I’m not claiming it was incorrect, either. I’m dodging the question.)

But it’s important to note that those twenty years of relative freedom are not nothing. They happened. There was happiness. Lives were better. Some people escaped entirely. Even those who did not escape, and who are currently falling back under dark-ages religious oppression, had some time in the sun.

There’s a tendency among anti-transhumanists to scoff that there’s no such thing as an infinite life span, because you’ll just die after ten trillion years anyway, so what’s the point, as if there’s no appreciable difference between 100 years and 10,000,000,000,000 years because both timespans end.

The point is the days in between. There’s the remembering self, and there’s the experiencing self; if I knew I were to die tomorrow I would not feel that my life today was wasted, and even if everyone were to die tomorrow, such that none of my work endured into the future, this would not be a total loss.

There is “mattering” in what happens, separate from its impact on the future. Both perspectives need to be taken into your final accounting.

V. “The Plan”

There’s a somewhat obscure but fairly-compelling-to-me model of psychology which states that people are only happy/okay to the extent that they have some sort of plan, and also expect that plan to succeed.

Handwaving, but basically: you feel like it’s reasonable to believe that the future holds at least some of the things you want it to hold; you feel like you’re on a path to at least surviving and possibly thriving; you have a sense of what things are on the bottom two or three layers of your needs-hierarchy, and you expect those needs to be met.

If you don’t have this sense, things start to fray and unravel, and you tend to either a) get really depressed, b) start to frantically cast about for a new plan to get those things, or c) descend into self-deception about your looming doom, plugging your ears and ignoring the evidence that you’re about to fail.

(So claims this model.)

And so, if your plan depends on something like “being alive for at least the next thirty years,” or “all of the life in the biosphere not being converted into Bing,” then developments over the past five years which threw your expectations into uncertainty and which seemed to give you no particular path to doing anything about it...might have left you feeling somewhat not-okay.

A Way To Be Okay

All right, here we go. This may be anticlimactic; I can’t tell.

Basically:

If you locate your identity in being the sort of person who does the best they can, given what they have and where they are,

and if you define your victory condition as I did the best that I could throughout, given what I had and where I was,

then while the tragedy of dying (yourself) or having the species/biosphere end is still really quite large and really quite traumatic,

it nevertheless can’t quite cut at the core of you. There is a thing there which is under your control and which cannot be taken away, even by death or disaster. There is a plan whose success or failure is genuinely up to you, as opposed to being a roll of the dice. It’s a sort of Stoic mentality of “I cannot control what the environment presents to me, but I can control how I respond to it.”

(Note that “best” is sort of fractally meta; obviously most of us are not going to manage to successfully hit the ideal maximum of output according to our own values, in that none of us will steer our own bodies and resources as well as a superintelligence with our goals would. But that too is a constraint; doing the (realistic, approximate) best that you can is the best that you can actually do; a theoretical Higher Bar is a fabricated option.)

I am not super stoked about what seems to me to be the fact that I will die before my two hundredth birthday.

I am not super stoked about what seems to me to be the frighteningly high likelihood that I will die before my child’s twelfth birthday.

I am unhappy about the end of, essentially, All Things, and the vanishment of every scrap of potential that the human race has to offer, both to itself and to its descendants.

And yet, I believe I am okay. I believe I am coping with it, and I believe I am coping with it without flinching away from it. I believe I have looked straight at it, and grieved, and I believe that I will do what I can to change the future, and it is because I believe that I will do what I can (and no less than that) that I do not feel broken or panicked or despondent.

I also believe that it’s hard. That smarter and better people than me have tried, and reported to me that they have failed. That it’s overwhelmingly likely that nothing that I try will succeed, and all of my efforts will be in vain.

But that’s “okay” in the deepest sense, because I am not holding my identity to be “the guy who survived” or “the guy who fixed everything,” and I am not locating my emotional victory condition in “it actually succeeded.”

(In part, I am not doing this because it is self-defeating; it will cause me to be worse at actually contributing to the averting of disaster; it will cause me to behave erratically and unstrategically and possibly to be hopelessly depressed and none of these things help, so I shall not bother with them.)

Whether we make it or not is not fully under my control, and therefore I don’t put my evaluation of my self in that same bucket. Actually trying is something that is under my control—that’s a plan I can succeed at.

I know, in my bones, that I am capable of impressive-by-human-standards achievements.

I know, in my bones, that I really really want to live.

I know, in my bones, that a world where humanity and the biosphere are not destroyed by runaway artificial intelligence is a better world than the one we currently seem to be in, where they will be.

I know, about myself, that I can and will take what opportunities arise, and that I will put non-negligible effort toward making and finding opportunities, and not merely waiting for them to appear, and that I am the type of person who does what he can, and that I will do what I can here just like I will everywhere else.

(And I know that here is more important in a literal, objective sense, than everywhere else, and I know that I know that, and I trust myself to balance those scales properly.)

And, knowing all that—

I’m okay. I’m able to experience joy in the moment, I’m able to have happy thoughts about the future (both the immediate future that I more or less “expect” to get, and various idle daydreams about unlikely but delightful futures that it is fun to imagine in the event that we get miracles). I’m able to make plans, I’m able to set goals, I’m able to live life. Sometimes, I work really quite hard to try to nudge the world in ways I think might help, and sometimes I relax and live my life as if I’ve done what I can and the rest is out of my hands.

(Because in a very real sense, it is, and therefore this is not sacrificing or defecting on anything.)

Your mileage may vary, but if what you’re doing right now is both not helping you and not helping the world, something has gone wrong.

Good luck.

Post-script: expansions from the comments

In the thread under Logan Strohl’s top comment, I eventually wrote:

> You know, I think I’m actually just wrong here, and people should be Potential Knights of Infinite Resignation.

I think I come at this from a slightly different angle, and I think it has to do with my sculptor-sculpture distinction. I think there’s a difference between values and meta-values and meta-meta-values (or something), and that when I discover that a particular instantiation of a meta-value into a concrete value is unpossible, I respond by seeking a new way to instantiate that meta-value.

And I think this is perhaps a critical piece of the puzzle and belongs in the essay somewhere.

I haven’t fleshed this out or figured out how to connect it, but I did want to highlight it as a potential Important Inclusion. Look below for more context.

