Inconvenient consequences of the logic behind the second law of thermodynamics

Let’s consider a simple universe consisting of an ideal gas inside a box (which means a number of massive points behaving as prescribed by Newton’s physics with completely elastic collisions).

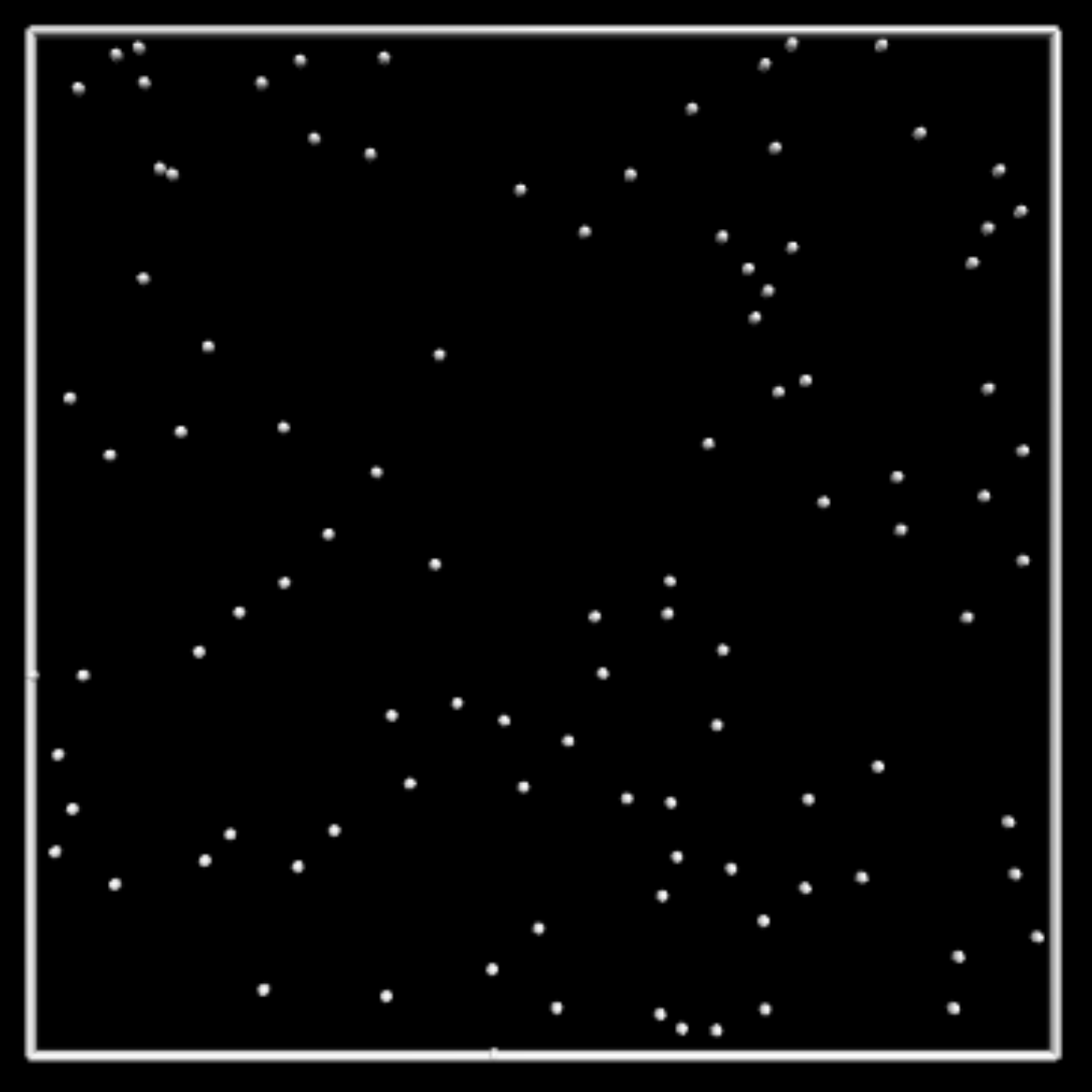

After the system reaches thermodynamic equilibrium, you could ask whether it will stay in this equilibrium state forever. The answer turns out to be negative: the Poincaré recurrence theorem applies, and we can be sure that the system will move far from equilibrium again and again, given a sufficiently long “life” for this universe. Therefore entropy in this universe will be a fluctuating function like this:

If you are patient enough you would see

continuous micro-fluctuations

occasional slightly bigger fluctuations

rare “not-so-small” fluctuations

extremely rare medium-big fluctuations

inconceivably rare super-big falls of entropy like this

The interesting thing about these fluctuations is that their graph would look almost the same if you reversed time (because the underlying physical laws followed by the particles are time-symmetric). If you started in a special low-entropy configuration (for example, with the gas concentrated in one region), your reference for distinguishing the past from the future could be the increase of entropy, at least during the initial phase until you reached equilibrium. However, if you wait long enough after equilibrium is reached, you would see the graph reach any possible level of low entropy again, so there is really nothing special about the low entropy at the beginning.

So this toy universe is actually symmetric in time: there is nothing inside it that would make one direction of time different from the other.
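The fluctuating entropy curve can be sketched numerically. The code below does not simulate the Newtonian gas itself; it assumes the Ehrenfest urn model as a stand-in: N labelled particles sit in the left or right half of the box, and at each step one particle chosen at random switches side. Entropy is taken as the logarithm of the number of microstates with n particles on the left. The function name, the particle count, the step count and the seed are all illustrative assumptions.

```python
import math
import random


def ehrenfest_entropy_trace(num_particles, num_steps, seed=0):
    """Return the entropy log C(N, n) after each step of an Ehrenfest urn.

    Assumption: the urn model replaces the real Newtonian gas; n counts the
    particles in the left half and starts at N (all gas concentrated on one side).
    """
    rng = random.Random(seed)
    n_left = num_particles  # low-entropy start: every particle on the left
    trace = []
    for _ in range(num_steps):
        # A uniformly chosen particle hops; it is on the left with probability n/N.
        if rng.random() < n_left / num_particles:
            n_left -= 1
        else:
            n_left += 1
        trace.append(math.log(math.comb(num_particles, n_left)))
    return trace


if __name__ == "__main__":
    trace = ehrenfest_entropy_trace(num_particles=20, num_steps=200_000)
    s_max = math.log(math.comb(20, 10))
    steps_at_zero = sum(1 for s in trace if s == 0.0)
    print(f"maximum possible entropy: {s_max:.3f}")
    print(f"steps spent back at zero entropy: {steps_at_zero}")
```

With a small number of particles the trace revisits low-entropy states often; raising `num_particles` makes such returns rapidly rarer, which is the qualitative picture described above.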

Now let’s consider our real, actual universe. As in the toy universe, it seems we again have time-symmetric laws of physics, applied to a far more complex system of particles. Again, if we are asked to distinguish the past from the future, we base our decision on the direction of increasing entropy, but as before this could be misleading: maybe our universe is fluctuating too and we are just inside a particular super-big fluctuation, so there is nothing special about low entropy that forces us to put it in the “past”: it will eventually happen in the future too, again and again. Furthermore, if we are inside a fluctuation, we have no way to tell whether we are in its decreasing part or its increasing part: they would look very similar if you just reversed the arrow of time. You could think you “see” that entropy is increasing, but your perception of time depends on your memory, and if entropy is decreasing maybe your memory is just working “backwards”. So your perception is not reliable; we merely have the illusion of seeing time flow the way we think it does (and in that case our memory is not really a “memory”, because it is not even “storing” information: it is anticipating the future that we think is the past).

What is the probability that we are in the entropy-decreasing part rather than the increasing part of the process? As far as we can tell it should be 50%, given the information that we have: the two parts have the same frequency in the life of the universe. So it is equally possible that the world is behaving in the way we think it is and that the world is behaving in a super wild and improbable way: heat is flowing from cold to hot objects, free gases are contracting, living beings are originating from dust and ending inside their mothers’ wombs, and this whole sequence of almost absurd events is happening just by chance, an incredibly improbable combination of coincidences. So we should conclude that this absurd scenario is as likely to be true as the “normal” scenario where entropy is increasing. This could seem a crazy thought, but it seems logically reasonable according to the argument above. Doesn’t it?

I think the key to the puzzle is likely to be here: there’s likely to be some principled reason why agents embedded in physics will perceive the low-entropy time direction as “the past”, such that it’s not meaningful to ask which way is “really” “backwards”.

Here’s the way I understand it: A low-entropy state takes fewer bits to describe, and a high-entropy state takes more. Therefore, a high-entropy state can contain a description of a low-entropy state, but not vice-versa. This means that memories of the state of the universe can only point in the direction of decreasing entropy, i.e. into the past.

Yes! As Jaynes teaches us: “[T]he order of increasing entropy is the order in which information is transferred, and has nothing to do with any temporal order.”

No. As I said in this comment, this cannot be true; otherwise in the evening you would be able to make prophecies about the following morning.

Your brain cannot measure the entropy of the universe, and its own entropy is not monotonic in time.

You should think of entropy as a subjective quantity. Even by thinking in terms of different fluctuations, you’re imposing a frame/abstraction that isn’t part of the actual structure of reality. Entropy is a property of abstractions, and quantifies the amount of information lost by abstracting. The universe, by itself, does not have any entropy.

Ok, but even if I remove the idea of “entropy” from my argument, the core problem is still here: there is a 50% probability that our universe is evolving in the opposite direction and that an incredibly long chain of unbelievably improbable events is happening; and even if it is not happening right now, it would happen with the same frequency as the standard “probable” evolution.

Space itself is symmetrical, so it is equally possible that the world is behaving in the way we think it is, with objects falling down, and that the world is behaving in a super wild and improbable way: the earth is above us and things actually fall up.

The situation is symmetrical. We define past and future in terms of entropy. Your weird and improbable universe is an upside down map of the same territory.

Also, note that large fluctuations are much, much less likely than small ones. The “we are in a random fluctuation” theory predicts that we are almost certainly in the smallest fluctuation we could possibly fit in. (Think of a spontaneously assembled nanocomputer just big enough to run a single mind that counts as an observer in whatever anthropic theory we use.) Even conditioned on our insanely unlikely experience so far, this hypothesis stubbornly predicts that the world we see isn’t real. (I am not sure whether it predicts that our experiences were noise, or that we are on a slightly larger nanocomputer that is running an approximate physics simulation.)

Clearly some sort of anthropic magic or something happens here. We are not in a random fluctuation. Maybe the universe won’t last a hyperexponential amount of time. Maybe we are anthropically more likely to find ourselves in places with low Kolmogorov complexity descriptions. (“All possible bitstrings, in order” is not a good law of physics just because it contains us somewhere.)

Another way of thinking about this, which amounts to the same thing: Holding the laws of physics constant, the Solomonoff prior will assign much more probability to a universe that evolves from a minimal-entropy initial state, than to one that starts off in thermal equilibrium. In other words:

Description 1: The laws of physics + The Big Bang

Description 2: The laws of physics + some arbitrary configuration of particles

Description 1 is much shorter than Description 2, because the Big Bang is much simpler to describe than some arbitrary configuration of particles. Even after the heat-death of the universe, it’s still simpler to describe it as “the Big Bang, 10^zillion years on” rather than by exhaustive enumeration of all the particles.
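Kolmogorov complexity is uncomputable, but a general-purpose compressor gives a crude upper bound on description length. The sketch below, under that stated assumption, compares a state produced by a short rule with an arbitrary-looking one of the same size. The byte strings, the sizes and the use of `zlib` are illustrative choices, not part of the original argument. Note the caveat: the “arbitrary” state here is pseudo-random, so it does have a short description (generator plus seed) that the compressor simply fails to find.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed data, a rough proxy for description length."""
    return len(zlib.compress(data, 9))


def simple_rule_state(length: int) -> bytes:
    """A state generated by a short rule (stand-in for 'laws + simple initial condition')."""
    return bytes((i * i) % 251 for i in range(length))


def arbitrary_state(length: int, seed: int = 0) -> bytes:
    """An arbitrary-looking configuration; seeded pseudo-randomness stands in for 'no short rule'."""
    rng = random.Random(seed)
    return bytes(rng.randrange(256) for _ in range(length))


if __name__ == "__main__":
    n = 100_000
    print("rule-generated:", compressed_size(simple_rule_state(n)), "bytes")
    print("arbitrary:     ", compressed_size(arbitrary_state(n)), "bytes")
```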

This dispenses with the “paradox” of Boltzmann Brains, and Roger Penrose’s puzzle about why the Big Bang had such low entropy despite its overwhelming improbability.

The entropy of the brain is approximately constant. A bit higher when you have the flu, a bit lower in the morning (when your body temperature is lower). If we perceived past and future according to the direction in which the entropy of our brain increases, I would remember the next day when going to bed.

The human brain radiates waste heat. It is not a closed system. Let’s think about a row of billiard balls on a large table. A larger ball is rolled over them, scattering several. Looking at where the billiard balls end up, we can deduce where the big ball went, but only if we assume the billiard balls started in a straight line. Imagine a large grid of tiny switches; every time they are pressed, they flip. If the grid starts off all 0, you can write on it. If it starts off random, you can’t. In all cases you have 2 systems, X and Y. Let’s say that X starts in a state of nonzero entropy, and Y starts at all 0s. X and Y can interact through a controlled NOT, sending a copy of X into Y. (Other interactions can send just limited partial info from X to Y.) Then X can evolve somewhat, and maybe interact with some other systems, but a copy remains in Y: a recording of the past. You can’t make a recording of the past without some bits that start out all 0s. You can’t record the future without bits that end up all 0s. (Under naive forward causality.)
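The grid-of-switches picture can be sketched in a few lines. This is an illustration under simple assumptions: switches are bits, pressing is a bitwise XOR, and the names `press_switches`, `blank_grid` and `noisy_grid` are made up for the example.

```python
import random


def press_switches(grid, message_bits):
    """Flip each switch where the message has a 1 (bitwise XOR)."""
    return [g ^ m for g, m in zip(grid, message_bits)]


if __name__ == "__main__":
    rng = random.Random(0)
    message = [rng.randrange(2) for _ in range(16)]

    blank_grid = [0] * 16
    noisy_grid = [rng.randrange(2) for _ in range(16)]

    # On a blank grid the result is a readable copy of the message.
    print(press_switches(blank_grid, message) == message)
    # On a noisy grid the result equals the message only if the noise happened to be all zeros.
    print(press_switches(noisy_grid, message) == message)
```

The operation is reversible in both cases (pressing the same switches again restores the grid), but only the blank grid ends up holding something that can be read as a record.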

How do you make space for new memories? By forgetting old ones? Info can’t be destroyed. Your brain is taking in energy dense low entropy food and air, and radiating out the old memory as a pattern of waste heat. Maybe you were born with a big load of empty space that you slowly fill up. A big load of low entropy blank space can only be constructed from the negentropy source of food+air+cold.

Sure, but are you saying that the human brain perceives the whole universe (including the heat it dissipated around) when deciding what to label as “past” or “future”? The entropy of the human body is approximately constant (as long as you are alive).

Ok, we should clarify which entropy you are talking about. Since this is a post about thermodynamics, I assumed that we are talking about dS = dQ/T, which is the entropy for which the second law was introduced. In that case, when your brain’s temperature goes down its entropy also goes down, no use splitting hairs.

In the second paragraph, it seems instead that you are talking about an uncertainty measure, like von Neumann entropy*. But the von Neumann entropy of what? The brain is not a string or a probability distribution, so the VN entropy is ill-defined for a brain. But fine, let us suppose that we have agreed on an abstract model of the brain on which we can define a VN entropy (maybe its quantum density matrix, if one can define such a thing for a brain). Then:

In a closed system, the fact that “Info can’t be destroyed” means that the total “information” (in a sense, the total von Neumann entropy) is a constant. It neither increases nor decreases, so you cannot use it to make a time arrow.

In an open system (like the brain) it can increase or decrease. When you sleep I guess that it should decrease, because in some sense the brain gets “tidied up”, many memories of the day are deleted, etc. But, again, when you go to bed your consciousness does not perceive the following morning as “past”.

*While the authenticity of the following quote is debated, it is worth stressing that the thermodynamic entropy and the Shannon/von Neumann entropy are indeed two entirely different things, although they are related in some specific contexts.

Imagine a water wheel. The direction the river flows in controls the direction that the wheel turns. The amount of water in the wheel doesn’t change.

Just because the total entropy doesn’t change over time, doesn’t mean the system is time symmetric. An electric circuit has a direction to it, even though the number of electrons in any position doesn’t change (except a bit in capacitors)

So the forward direction of time is the direction in which your brain is creating thermodynamic entropy. Run a brain forward, and it breaks up sugars to process information, and expels waste heat. Run it backwards and waste heat comes in and, through a huge fluke, jostles the atoms in just the right way to make sugars.

Entropic rules are more subtle than just tracking the total amount of entropy. You can track the total amount of entropy, and get meaningful restrictions on what is allowed to happen. But you can also get meaningful restrictions on which bits are allowed to be in which places. Restrictions that can also be understood in terms of state spaces. Restrictions that stop you finding out about quantum random events that will happen in the future.

The physical entropy and the von Neumann information-theoretic entropy are intricately interrelated.

This is technically true of the universe as a whole. Suppose you take a quantum hard drive filled with 0s, and fill it with bits in an equal superposition of 0 and 1 by applying a Hadamard gate. You can take those bits and apply the gate again to get the 0s back. Entropy has not yet increased. Now print those bits. The universe branches into 2^(bit count) quantum branches. The entropy of the whole structure hasn’t increased, but the entropy of a typical individual branch is higher than that of the whole structure. In principle, all of these branches could be recombined; in practice the printer has radiated waste heat that speeds away at light speed, and there probably aren’t aliens rushing in from all directions carrying every last photon back to us.
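The “apply the gate again to get the 0s back” step can be checked for a single qubit. This is a minimal sketch using plain 2x2 arithmetic rather than any quantum library; the helper names are assumptions made for the example.

```python
import math

# Hadamard gate as a 2x2 real matrix.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]


def apply_gate(gate, state):
    """Multiply a 2x2 gate by a 2-component vector of amplitudes."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


def measurement_probabilities(state):
    """Born-rule probabilities of reading 0 or 1 from a single-qubit state."""
    return [abs(a) ** 2 for a in state]


if __name__ == "__main__":
    zero = [1.0, 0.0]
    once = apply_gate(H, zero)    # equal superposition of 0 and 1
    twice = apply_gate(H, once)   # back to 0, up to rounding
    print(measurement_probabilities(once))
    print(measurement_probabilities(twice))
```

As long as nothing outside the qubit has become correlated with it, the second gate undoes the first; the irreversibility in the comment comes only from the printing step.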

The universe is low entropy (like < a kilobyte) in Kolmogorov-complexity-based entropy. Suppose you have a crystal made of 2 elements. One of the elements only appears at prime-numbered coordinates in the atomic structure. Superadvanced nanomachines could exploit this pattern to separate the elements without creating waste heat. Current human tech would just treat them as randomly intermixed, and melt them all down or dissolve them: a technique that will work however the atoms were arranged, and that must produce some waste heat. Entropy is just anything you can’t practically uncompute. https://en.wikipedia.org/wiki/Uncomputation

Remembering something into an empty memory buffer is an allowed operation of a reversible computer: just do a controlled NOT.

When the brain forgets something, the thing it’s forgetting isn’t there any more, so it must radiate that bit away as waste heat.

The universe as a whole behaves kind of like a reversible circuit. Sure, it’s actually continuous and quantum, but that doesn’t make that much difference. The universe has a scramble operation, something that is in principle reversible, but in practice will never be reversed.

If you look at a fixed reversible circuit, it is a bijection from inputs to outputs. (X, X) and (X, 0) are both states that have half the maximum entropy, and one can easily be turned into the other. The universe doesn’t provide any instances of (X, X) to start off with (for non-zero X), but it provides lots of 0s. When you come across a scrambled X, it’s easy to CNOT it with a nearby 0. So the number of (X, X) patterns tends to increase. These are memories, photos, genes and all other forms of record of the past.
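Both claims, that the circuit is a bijection and that it turns (X, 0) into (X, X), can be verified by brute force for small registers. This is a sketch with classical bits only; `cnot` and `is_bijection` are names invented for the example.

```python
from itertools import product


def cnot(x, y):
    """Controlled NOT on two equal-length bit tuples: x is unchanged, y becomes y XOR x."""
    return x, tuple(b ^ a for a, b in zip(x, y))


def is_bijection(num_bits):
    """Check that cnot permutes the set of all (x, y) pairs of the given width."""
    states = [(x, y)
              for x in product((0, 1), repeat=num_bits)
              for y in product((0, 1), repeat=num_bits)]
    images = {cnot(x, y) for x, y in states}
    return len(images) == len(states)


if __name__ == "__main__":
    x = (1, 0, 1)
    blank = (0, 0, 0)
    print(is_bijection(3))
    print(cnot(x, blank))          # (X, 0) becomes (X, X)
    print(cnot(*cnot(x, blank)))   # applying it again restores (X, 0)
```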

In this case you do not say “the wheel rotates in the direction of water increase”, but “the wheel rotates in the direction of water flow”.

I can see how you could argue that “the consciousness perceives past and future according to the direction of time in which it radiated heat”. But if you mean that heat flow (or some other entropy-related phenomenon) is the explanation for our time perception (just like the water flow explains the wheel, or the DC tension explains the current in a circuit), this seems to me a bold and extraordinary claim that would need a lot more evidence, both theoretical and experimental.

Yes, whenever you pinch a density matrix, its entropy increases. It depends on your philosophical stance on measurement and decoherence whether the superposition could be retrieved.

In general, I am more on the skeptical side about the links between abstract information and thermodynamics (see for instance https://arxiv.org/abs/1905.11057). It is my job, so I cannot be entirely skeptical. But there is a lot of work to do before we can claim to have derived thermodynamics from quantum principles (at the state of the art, there is not even a consensus among the experts about what the appropriate definitions of work and heat should be for a quantum system).

Anyway, does the brain actually check whether it can uncompute something? How is this related to the direction in which we perceive the past? The future can (in principle) be computed, and the past cannot be uncomputed; yet we know about the past and not about the future: is this that obvious?

This is another strong statement. Maybe in the XVIII century you would have said that the universe is a giant clock (mechanical philosophy), and in the XIX century you would have said that the brain is basically a big telephone switchboard.

I am not saying that it is wrong. Every new technology can provide useful insights about nature. But I think we should beware not to take these analogies too far.

Wikipedia has a good summary of what Entropy as used in the 2nd law actually is: https://en.m.wikipedia.org/wiki/Entropy

The key points are:

We define the direction of time by the increase in Entropy.

“If we wait long enough” rhetorically hides the scope involved. To have merely 10 helium atoms congregate in the center 1% of a sealed sphere, assuming it takes them 1⁄10 of a second to traverse that volume, will require 10^99 seconds. That is 10^82 times the current age of the universe. Put differently, it will happen approximately 10 times before the heat death of the universe.

If we want to “define the direction of time by the increase in Entropy”, then we have a problem in a universe where entropy is not monotonic: the definition doesn’t work.

The “age of the universe” might not really be the age of the universe, but the time elapsed since the last entropy minimum in a never-ending sequence of fluctuations.

Let me put it another way: If Entropy was any sort of a random walk, there would be examples of spontaneous Entropy decrease in small environments. Since we have 150 years of experimental observation at a variety of scales and domains and have literally zero examples of spontaneous Entropy decrease, that puts the hypothesis “Entropy behaves like a random walk” on par with “the sum of the square roots of any two sides of an isosceles triangle is equal to the square root of the remaining side”: it’s a test example for “how close to 0 probability can you actually get”.

Depending on how you define it, arguably there are observations of entropy decreases at small scales (if you are willing to define the “entropy” for a system made of two atoms, for example).

At macroscopic scale (10^23 molecules), it is as unlikely as a miracle.

You do have spontaneous entropy decreases in very “small” environments. For a gas in a box with 3 particles, entropy fluctuates on human-scale times.

No, it really isn’t. What definition of Entropy are you using?

For information theory of Entropy:

Consider the simplest possible system: a magical cube box made of forcefields that never deform or interact with its contents in any way. Inside is a single Neutron with a known (as closely as Heisenberg uncertainty lets us) position and momentum. When that Neutron reaches the side of the box, by definition (since the box is completely unyielding and unreactive) the Neutron will deform, translating its Kinetic Energy into some sort of internal potential energy, and then rebound. However, we become more uncertain of the position and momentum of the Neutron after each bounce: a unidirectional increase in Entropy. There is no mechanism (without injecting additional energy into the system, i.e. making another observation) by which the system can become more orderly.

For Temperature theory of Entropy:

Consider the gas box with 3 particles you mentioned. If all 3 particles are moving at the same speed (recall, average particle speed is the definition of temperature for a gas), the system is at maximum possible Entropy. What mechanism exists to cause the particles to vary in speed (given the magical non-deforming non-reactive box we are containing things in)?

“What mechanism exists to cause the particles to vary in speed (given the magical non-deforming non-reactive box we are containing things in)?”

The system is a compact deterministic dynamical system and Poincaré recurrence applies: it will return infinitely many times close to any low-entropy state it was in before. Since there are only 3 particles, the time needed for the return is small.

Having read the definitions, I’m pretty sure that a system with Poincaré recurrence does not have a meaningful Entropy value (or E is absolutely constant with time). You cannot get any useful work out of the system, or within the system. The speed distribution of particles never changes. Our ability to predict the position and momentum of the particles is constant. Is there some other definition of Entropy that actually shows fluctuations like you’re describing?

The ideal gas does have a mathematical definition of entropy, Boltzmann used it in the statistical derivation of the second law:

https://en.wikipedia.org/wiki/Entropy_(statistical_thermodynamics)

Here is an account of Boltzmann’s work and the first objections to his conclusions:

https://plato.stanford.edu/entries/statphys-Boltzmann/

The Poincaré recurrence theorem doesn’t imply that. It doesn’t imply the system is ergodic, and it only applies to “almost all” states (the exceptions are guaranteed to have measure zero, but then again, so is the set of all numbers anyone will ever specifically think about). In any case, the entropy doesn’t change at all because it’s a property of an abstraction.

An ideal gas in a box is an ergodic system. The Poincaré recurrence theorem states that a volume-preserving dynamical system (i.e. any conservative system in classical physics) returns infinitely often to any neighbourhood (as small as you want) of any point of the phase space.

If you believe that our existence is a result of a mere fluctuation of low entropy, then you should not believe that the stars are real. Why? Because the frequency with which a particular fluctuation appears decreases exponentially with the size of the fluctuation. (Basically because entropy is S=log W, where W is the number of micro states.) A fluctuation that creates both the Earth and the stars is far less likely than a fluctuation that just creates the Earth, and some photons heading towards the Earth that just happen to look like they came from stars. Even though it is unbelievably unlikely that the photons would happen to arrange themselves into that exact configuration, it’s even more unbelievably unlikely that of all the fluctuations, we’d happen to live in one so large that it included stars. Of course, this view also implies a prediction about starlight: Since the starlight is just thermal noise, the most likely scenario is that it will stop looking like it came from stars, and start looking just like (very cold) blackbody radiation. So the most likely prediction, under the entropy fluctuation view, is that this very instant the sun and all the stars will go out.
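The exponential suppression can be written out explicitly. A sketch, assuming all microstates are equally probable and using the convention S = log W from the comment above (Boltzmann’s constant set to 1); the symbols S_eq and ΔS are introduced here only for illustration:

```latex
P(S) \propto W = e^{S}
\quad\Longrightarrow\quad
\frac{P(S_{\mathrm{eq}} - \Delta S)}{P(S_{\mathrm{eq}})} = e^{-\Delta S}
```

Measured in bits (ΔS = b ln 2), the ratio becomes 2^{-b}: each extra bit of entropy reduction halves the probability of the fluctuation.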

That’s a fairly absurd prediction, of course. The general consensus among people who’ve thought about this stuff is that our low entropy world cannot have been the sole result of a fluctuation, precisely because it would lead to absurd predictions. Perhaps a fluctuation triggered some kind of runaway process that produced a lot of low-entropy bits, or perhaps it was something else entirely, but at some point in the process, there needs to be a way of producing low entropy bits that doesn’t become half as likely to happen for each additional bit produced.

Interestingly, as long as the new low entropy bits don’t overwrite existing high entropy bits, it’s not against the laws of thermodynamics for new low entropy bits to be produced. It’s kind of a reversible computing version of malloc: you’re allowed to call malloc and free as much as you like in reversible computing, as long as the bits in question are in a known state. So for example, you can call a version of malloc that always produces bits initialized to 0. And you can likewise free a chunk of bits, so long as you set them all to 0 first. If the laws of physics allow for an analogous physical process, then that could explain why we seem to live in a low entropy region of spacetime. Something must have “called malloc” early on, and produced the treasure trove of low entropy bits that we see around us. (It seems like in our universe, information content is limited by surface area. So a process that increased the information storage capacity of our universe would have to increase its surface area somehow. This points suggestively at the expansion of space and the cosmological constant, but we mostly still have no idea what’s going on. And the surface area relation may break down once things get as large as the whole universe anyway.)

The prediction that the sun and stars we perceive will go out is absurd only because you are excluding the possibility that you are dreaming. Because of what we label as dreams we frequently perceive things that quickly pop out of existence.

First, a small technical clarification: Poincaré’s recurrence theorem applies to classical systems whose phase space is finite. So, if you believe that the universe is finite, then you have recurrence; otherwise no recurrence.

I think that your conclusion is correct under the hypothesis that the universe has existed for an infinite time, and that our current situation of low entropy is the result of a random fluctuation.

The symmetry is broken by the initial condition. If at t=0 the entropy is very low, then it is almost sure that it will rise. The expert consensus is that there has been a special event (the big bang), which you can model as an initial condition of extremely low entropy.

It seems unlikely that the big bang was the result of a fluctuation from a previously unordered universe: you can estimate the probability of a random fluctuation resulting in the Earth’s existence. If I recall correctly Penrose did it explicitly, but you do not need the math to understand that (as already pointed out) “a solar system appears from thermal equilibrium” is far more likely than “an entire universe appears from thermal equilibrium”. Therefore, under your hypothesis, we should have expected, with probability of almost 1, to be in the only solar system in existence in the observable universe.

I wish to stress that these are pleasant philosophical speculations, but I do not wish to assign high confidence to anything said here: even if they work well for our purposes, I feel a bit nervous about extrapolating mathematical models to the entire universe.

In order to apply Poincaré recurrence, it is the set of available points of the phase space that must be “compact”, and this is likely the case if we assume that the total energy of the universe is finite.

The energy constrains the momenta, but not the positions. If there is infinite space, the phase space is also infinite, even at constant energy.

Take two balls which start both at x=0, one with velocity v(0) = 1 and the other with velocity v(0) = −1, in an infinite line. They will continue to go away forever, no recurrence.
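In symbols, a sketch of this counterexample for free motion with the unit velocities stated above (x_1 and x_2 denote the two positions):

```latex
x_1(t) = t, \qquad x_2(t) = -t, \qquad
|x_1(t) - x_2(t)| = 2t \to \infty \quad (t \to \infty)
```

The separation grows without bound, so the state never returns to any small neighbourhood of the starting point (x_1, x_2) = (0, 0), even though the energy is constant.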

Good point, but gravity could be enough to keep the available positions in a bounded set.

While of course it could, current measurements suggest that it is not.

If the origin of our current universe-state is just entropy randomly getting insanely-unlikely low and then bouncing back, that would make Big Bang just the random moment where the entropy bounced back.

Like, if you could travel back in time in some magical time machine, you would see the stars being unborn, the universe getting smaller, and then at a completely random moment, the time machine would stop and say “this is as far in the past as I can go; from here it is a future in both directions: your time or the antichronos”. And that moment itself would be nothing special: a universe much simpler than we are used to, but not literally zero-size. Just how far back the entropy went before it bounced. Far enough that we had enough time to evolve. On the other hand, every second of the past is extra improbability, so even “five minutes after the universe was zero-size” would be astronomically more likely than “exactly when the universe was zero-size”, and it wouldn’t make a difference for our ability to evolve.

From this perspective, the theories of Big Bang are just an attempt to reverse-engineer how far back could the entropy go in the hypothetical extreme case… but in our specific past, it most likely didn’t go back that far. Like, on the picture with the red curve, Big Bang is a description of the lowest point on the scale, but the curve is more likely to have bounced a few pixels above it.

(Epistemic status: I don’t understand physics, I am just trying to sound smart and possibly interesting.)

I don’t think your graph is accurate. In our universe, entropy can reverse, but it’s unlikely, and an entropy reversal large enough to take us from heat-death to the current state of the universe is unimaginably unlikely. Given an infinite amount of time, that will eventually happen, but your graph should be entropy spikes surrounded by eternities at minimum entropy. Given that more-accurate graph, the probability that we’re in a decreasing-entropy part of the graph is ~100%.

Entropy “reversal”—i.e. decrease—must be equally frequent as entropy increases: you cannot have an increase if you didn’t have a decrease before. My graph is not quantitatively accurate for sure but with a rescaling of times it should be ok.

Sorry I got the terminology backwards. Entropy generally increases, so your graph should be mostly at maximum entropy with very short (at this timescale) downward spikes to lower entropy states.

It’s true that for entropy to increase you can’t already be at the maximum entropy state, but I’m not saying it constantly increases, I’m saying it increases until it hits maximum entropy and then stays near there unless something extraordinarily unlikely happens (a major entropy reversal).

I think the only scale where your graph works is if we’re looking at near-max-entropy for the entire time, which is nothing like the current state of the universe.

In the equilibrium, small increases and small decreases should be equally likely, with an unimaginably low probability of high decreases (which becomes 0 if the universe is infinite).

Yes, but our universe is not in equilibrium or anywhere near equilibrium. We’re in a very low entropy state right now and the state which is in equilibrium is extremely high entropy.

I agree, but if I understood the OP correctly, he is averaging over a time scale much larger than the time required to reach equilibrium.

It’s actually worse than that. If you define Entropy using information theory, there is no such thing as random fluctuations decreasing Entropy. The system merely moves from one maximal Entropy state to the next, indefinitely.

If you work under the hypothesis that information is preserved, then the total entropy of the universe does not increase nor decrease.

This looks like:

Consider an artificial system (that doesn’t obey the 2nd law of thermodynamics, but that isn’t explicitly stated)

Such a system will fluctuate in phase space

One aspect of a system is the amount of Entropy in that system

Therefore, Entropy must decrease to arbitrarily low levels eventually.

Hey, what if the real world also didn’t obey the 2nd law?

Without some bridge to address why the 2nd law (which 100% of tests so far have confirmed) might not apply, I don’t see any value in the original post?

I think you are not considering some relevant points:

1) the artificial system we are considering (an ideal gas in a box) (a) is often used as an example to illustrate and even to derive the second law of thermodynamics by means of mathematical reasoning (Boltzmann’s H-theorem), and (b) this is because it actually appears to be a prototype for the idea of the second law of thermodynamics, so it is not just a random example: it is the root of our intuition of the second law

2) the post is talking about the logic behind the arguments which are used to justify the second law of thermodynamics

3) The core point of the post is this:

in the simple case of the ideal gas in the box we end up thinking that it must evolve like the second law is prescribing, and we also have arguments to prove this that we find convincing

yet the ideal gas model, as a toy universe, doesn’t really behave like that: counterintuitive as it is, the decrease of entropy has the same frequency as the increase of entropy

therefore our intuition about the second law and the argument supporting it seems to have some problem

so maybe the second law is true but our way of thinking about it is not, or maybe the second law is not true and our way of thinking about the universe is flawed: in any case we have a problem

This argument proves that

Along a given time-path, the average change in entropy is zero

Over the whole space of configurations of the universe, the average difference in entropy between a given state and the next state (according to the laws of physics) is zero. (Really this should be formulated in terms of derivatives, not differences, but you get the point).

This is definitely true, and this is an inescapable feature of any (compact) dynamical system. However, somewhat paradoxically, it’s consistent with the statement that, conditional on any given (nonmaximal) level of entropy, the vast majority of states have increasing entropy.

In your time-diagrams, this might look something like this:

I.e when you occasionally swing down into a somewhat low-entropy state, it’s much more likely that you’ll go back to high-entropy than that you’ll go further down. So once you observe that you’re not in the maxentropy state, it’s more likely that you’ll increase than that you’ll decrease.

(It’s impossible for half of the mid-entropy states to continue to low-entropy states, because there are much more than twice as many mid-entropy states as low-entropy states, and the dynamics are measure-preserving).
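Both statements, zero average change overall and a strong bias toward higher entropy once you condition on a non-maximal level, can be checked exactly in a toy model. The sketch below assumes the Ehrenfest urn as a stand-in for the gas (it is not the system from the post): N particles, n of them in the left half, one randomly chosen particle hopping per step. Function names and the value of N are illustrative.

```python
from fractions import Fraction
from math import comb


def stationary(n, total):
    """Stationary probability of having n of `total` particles on the left: C(N, n) / 2^N."""
    return Fraction(comb(total, n), 2 ** total)


def p_up(n, total):
    """Probability that the next hop increases n (a right-side particle is chosen)."""
    return Fraction(total - n, total)


def p_down(n, total):
    """Probability that the next hop decreases n (a left-side particle is chosen)."""
    return Fraction(n, total)


if __name__ == "__main__":
    N = 20
    for n in (2, 5, 8):
        # Long-run symmetry: n -> n+1 hops are exactly as frequent as n+1 -> n hops.
        flux_up = stationary(n, N) * p_up(n, N)
        flux_down = stationary(n + 1, N) * p_down(n + 1, N)
        # Conditional asymmetry: from a state below N/2, the hop toward N/2 is favoured.
        print(n, flux_up == flux_down, float(p_up(n, N)))
```

The long-run fluxes balance because low-n states are visited so rarely: the few visits almost always end with a hop back toward N/2, while the many visits to states nearer N/2 only occasionally hop further down.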

“conditional on any given (nonmaximal) level of entropy, the vast majority of states have increasing entropy”

I don’t think this statement can be true in any sense that would produce a non-symmetric behavior over a long time, and indeed it has some problem if you try to express it in a more accurate way:

1) what does “non-maximal” mean? You don’t really have a single maximum, you have an average maximum and random oscillations around it

2) the “vast majority” of states are actually little oscillations around an average maximum value, and the downward oscillations are as frequent as the upward oscillations

3) any state of low entropy must have been reached in some way and the time needed to go from the maximum to the low entropy state should be almost equal to the time needed to go from the low entropy to the maximum: why should it be different if the system has time-symmetric laws?

In your graph it takes very little time to reach low entropy states from high entropy, compared to the time needed to reach high entropy again, but would this make the high-low transition look more natural or more “probable”? Maybe it would look even more unnatural and improbable!