Who ordered alignment’s apple?

Crossposted from the EA forum: https://forum.effectivealtruism.org/posts/hSugooaEQNTeKFsDu/who-ordered-alignment-s-apple

This post was inspired by G. Gigerenzer’s book, Gut Feelings: The Intelligence of the Unconscious. I’m drawing an analogy that I think tells us something about how science works, at least as far as I understand the history of science. It also seems to resonate with the reflections of current alignment researchers; see, for example, Richard’s post Intuitions about solving hard problems.

Imagine that you ask a professional baseball player, let’s call him Tom, how he catches a fly ball. He takes a moment to respond, realizes he has never reflected on this question before, and ends up staring at you, not knowing what exactly there is to explain. He says he’s never thought about it. Now imagine that you ask his baseball coach what the best way to catch the ball is. The coach has a whole theory about it. In fact, he insists that Tom and everyone else on the team should follow one specific technique that he thinks is optimal. Tom and the rest of the team go ahead and do what the coach says (because they don’t want to get yelled at, even though they’ve been doing fine so far). And lo and behold, the team misses the ball more often than before.

What could have gone wrong?

Richard Dawkins, in The Selfish Gene, gives the following explanation:

> When a man throws a ball high in the air and catches it again, he behaves as if he had solved a set of differential equations in predicting the trajectory of the ball. He may neither know nor care what a differential equation is, but this does not affect his skill with the ball. At some subconscious level, something functionally equivalent to the mathematical calculations is going on.

So here’s the analogy I want to argue for: when you do science, by which I mean when you set up your scientific agenda and organize your research, construct your theoretical apparatus, design experiments, interpret results, and so on, you behave as if you had solved a set of differential equations predicting the trajectory of your research. You may neither know nor care what this process is, but this does not affect your skill at setting up your project. At some subconscious level, something functionally equivalent to the mathematical calculations is going on. (To be clear, I don’t mean the calculations that are explicitly part of solving the problem.)

I paraphrased Dawkins’ passage to make a point: what we mean by “gut feelings” or “intuitions” is not magic but a cognitive mechanism. This applies to a variety of tasks, from baseball to thinking about agent foundations. It’s a cognitive procedure that might ultimately even be explained in mathematical terms, through an account of how the brain works.

So far, I have drawn this analogy with the practice of science in general in mind. It’s worth asking what it might suggest for alignment research, granting that the field is still at a pre-paradigmatic stage.

My analogy predicts that one or more insights about solving the alignment problem will come the way the experienced baseball player caught the ball before following his coach’s instructions: as a natural movement driven by his gut feelings. And it will be a successful movement. Sure, that requires unpacking, but I think Gigerenzer offers a satisfactory account in his book. [1]

Meanwhile, in the contemporary study of the history of science (especially in HPS approaches), we talk about myths in science and construct arguments that debunk them. One of the most famous myths, widespread in popular culture, is that an apple fell on Newton’s head and inspired him to formulate the law of universal gravitation.

While this episode is primarily a cute myth about scientific discovery, firmly embedded in the historiographical package of the hero-scientist ideal, it reveals a truth about how scientists think, or at least about what we have noticed and believe, prima facie, about how they think. It captures the sense of not being able to explain exactly how you arrived at an insight as a scientist, just as the baseball player is clueless about what makes him catch the ball so perfectly. So, if we treat this myth as a metaphor, it tells us that the aha moment (also known as the “eureka effect”) in scientific research might come when you least expect it; a falling apple might just trigger it.

The scientist might try to rationalize the process in hindsight, trace back the steps in his reasoning, and find clever ways to explain how he reached his insight. But it’s plausible that a whole cognitive mechanism of heuristics and gut feelings made a product of intuitive reasoning look like the most natural next move.

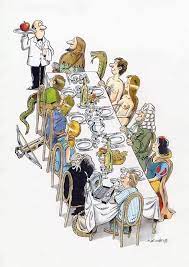

As far as alignment is concerned, I think some researchers have already ordered and received their apples (okay, perhaps smaller apples). If this continues and we’re still alive, the one apple that will change the course of the most important century will eventually appear.

[1] Gigerenzer discusses this in a chapter called “Winning without thinking”. He introduces heuristics, especially the “gaze heuristic”, and explains how it works in the baseball example. To avoid confusion: I’m not conflating insight and intuition. I think insight is generated by following one’s intuitions, i.e., it’s a product of intuitive reasoning. In that sense, it’s plausible to suppose that heuristics contribute to the generation of insights.

It might be worth pointing out that your text down to and including the Dawkins quote is paraphrased from Gigerenzer’s book, and Gigerenzer is quoting Dawkins only to argue against him.

Re the Dawkins quote. There is actually a significant literature on how people catch fly balls (and other objects such as frisbees and toy helicopters), and while there is not complete agreement, it is clear that people do not do it by any process of “solving differential equations in predicting the trajectory of the ball”. Some of the contending theories are called “linear optical trajectory”, “optical acceleration cancellation”, or “vertical optical velocity” (all Googleable phrases if you want to know more). In spite of disagreements among the researchers, they all have this in common: the fielder is trying to keep some aspect of their perception of the ball constant. He does not “behave as if he had solved a set of differential equations”, and nothing “functionally equivalent to the mathematical calculations is going on” in the process, except in the tautologous sense that the fielder succeeds in catching the ball.

Gigerenzer knows this, as he follows the Dawkins quote with an explanation of how implausible it is when you think about how that could work. (I haven’t seen the book, but enough of the first chapter is available in the Amazon preview.) He then describes how fielders actually do it, and although he doesn’t cite them, it’s clear he has read the same papers I have, because he eventually quotes (unsourced) the rule: “Fix your gaze on the ball, start running, and adjust your running speed so that the angle of gaze remains constant.” No “intuition”, no “intelligence of the unconscious”: an explicit rule, consciously applied, and one that does not involve solving any differential equations or making any predictions.
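To illustrate that the quoted rule needs no trajectory prediction, here is a minimal Python sketch under heavy, loudly stated assumptions that are not from the source: no air drag, a ball launched from ground level straight toward the fielder, a fielder who can instantly run at whatever speed the rule demands, and the gaze angle being locked only once the ball starts descending. The function name `gaze_heuristic_catch` and all parameter values are hypothetical. At each time step the fielder moves only as far as needed to keep the current gaze angle unchanged; the landing point is never computed.

```python
import math


def gaze_heuristic_catch(vx=8.0, vy=25.0, fielder_x=45.0, g=9.81, dt=0.001):
    """Idealized, hypothetical sketch of "keep the angle of gaze constant".

    Assumptions (not from the source): no air drag, the ball is launched from
    ground level at x = 0 toward a fielder standing at fielder_x, and the
    fielder can instantly run at whatever speed the rule requires.
    Returns (ball_x, fielder_x) at the moment the ball is back at ground level.
    """
    t = 0.0
    gaze_angle = None  # locked elevation angle (radians), set once the ball descends
    while True:
        t += dt
        bx = vx * t                    # ball's horizontal position
        by = vy * t - 0.5 * g * t * t  # ball's height
        if by <= 0.0:
            return bx, fielder_x       # ball has come down: where is the fielder?
        if gaze_angle is None and vy - g * t <= 0.0:
            if fielder_x <= bx:
                raise ValueError("ball is already past the fielder")
            # Fix the gaze: remember the current angle from fielder to ball.
            gaze_angle = math.atan2(by, fielder_x - bx)
        if gaze_angle is not None:
            # Run (forward or back) just enough that the angle is unchanged,
            # using tan(angle) = height / horizontal distance to the ball.
            fielder_x = bx + by / math.tan(gaze_angle)


ball_x, fielder_x = gaze_heuristic_catch()
print(f"ball lands at {ball_x:.2f} m, fielder is at {fielder_x:.2f} m")
```

With these made-up defaults, the fielder starts at 45 m, first drifts back a few metres, then runs in, and finishes within a few centimetres of where the ball comes down, using only the current angle at each step.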

He gives another example: Germans guessing which city is more populous, Detroit or Milwaukee. They generally pick Detroit, because they’ve heard of it and haven’t heard of Milwaukee, and the more prominent a city is, the more populous it is likely to be. Americans, being equally familiar with both names, find the question more difficult. Again, an explicit rule, deliberately applied, which he sprinkles with fairy dust by calling it a “heuristic”.
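The point that this is an explicit rule can be made concrete. Below is a minimal Python sketch of the recognition rule as described above; the function name `recognition_heuristic` and the sets of recognized names are hypothetical, and returning `None` when the rule does not discriminate is a design choice of this sketch rather than anything stated in the book.

```python
from typing import AbstractSet, Optional


def recognition_heuristic(a: str, b: str, recognized: AbstractSet[str]) -> Optional[str]:
    """Guess which of two cities is more populous from name recognition alone.

    If exactly one name is recognized, pick it (prominence is taken as a cue
    for size). If both or neither are recognized, the rule says nothing and
    returns None, so some other strategy has to decide.
    """
    knows_a = a in recognized
    knows_b = b in recognized
    if knows_a and not knows_b:
        return a
    if knows_b and not knows_a:
        return b
    return None


# Hypothetical respondents: one has only heard of Detroit, one knows both names.
print(recognition_heuristic("Detroit", "Milwaukee", {"Detroit"}))               # Detroit
print(recognition_heuristic("Detroit", "Milwaukee", {"Detroit", "Milwaukee"}))  # None
```

The second call mirrors the American case: when both names are familiar, recognition no longer discriminates and the rule falls silent.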

So two key examples in the introduction to his book undercut the thesis promised by its title.

In the blurb on Amazon (for which, of course, Gigerenzer is not responsible), I read: “Intuition, it seems, is not some sort of mystical chemical reaction but a neurologically based behavior that evolved to ensure that we humans respond quickly when faced with a dilemma.” It seems to me that “a neurologically based behavior etc.” is the same thing as “some sort of mystical chemical reaction”. “Neurons!” “Evolution!”

I’m guessing that what you are getting at is the process of building intuition through learning and practice, which I assume is quite uncontroversial. I think the main argument against applying it to AI alignment specifically is that you (and everyone else) die before you get to learn and practice enough, unlike in baseball or in physics. If TAI emerges through a slow and incremental process, then you have a point. Eliezer argues that it will not.

I agree there probably isn’t enough time. Best case scenario there’s enough time for weak alignment tools (small apples).