David Schneider-Joseph

dsj

I don’t know much background here so I may be off base, but it’s possible that the motivation of the trust isn’t to bind leadership’s hands to avoid profit-motivated decision making, but rather to free their hands to make such decisions, ensuring that shareholders have no claim against them for doing so, as traditional governance structures might have provided.

(Unless “employees who signed a standard exit agreement” is doing a lot of work — maybe a substantial number of employees technically signed nonstandard agreements.)

Yeah, what about employees who refused to sign? Have we gotten any clarification on their situation?

Thank you, I appreciated this post quite a bit. There’s a paucity of historical information about this conflict which isn’t colored by partisan framing, and you seem to be coming from a place of skeptical, honest inquiry. I’d look forward to reading what you have to say about 1967.

Thanks for doing this! I think a lot of people would be very interested in the debate transcripts if you posted them on GitHub or something.

Okay. I do agree that one way to frame Matthew’s main point is that MIRI thought it would be hard to specify the human value function, and an LM that understands human values and reliably tells us the truth about that understanding is such a specification, and hence falsifies that belief.

To your second question: MIRI thought we couldn’t specify the value function to do the bounded task of filling the cauldron, because any value function we could naively think of writing, when given to an AGI (which was assumed to be a utility argmaxer), leads to all sorts of instrumentally convergent behavior such as taking over the world to make damn sure the cauldron is really filled, since we forgot all the hidden complexity of our wish.

I think this reply is mostly talking past my comment.

I know that MIRI wasn’t claiming we didn’t know how to safely make deep learning systems, GOFAI systems, or what-have-you fill buckets of water, but my comment wasn’t about those systems. I also know that MIRI wasn’t issuing a water-bucket-filling challenge to capabilities researchers.

My comment was specifically about directing an AGI (which I think GPT-4 roughly is), not deep learning systems or other software generally. I *do* think MIRI was claiming we didn’t know how to make AGI systems safely do mundane tasks.

I think some of Nate’s qualifications are mainly about the distinction between AGI and other software, and others (such as “[i]f the system is trying to drive up the expectation of its scoring function and is smart enough to recognize that its being shut down will result in lower-scoring outcomes”) mostly serve to illustrate the conceptual frame MIRI was (and largely still is) stuck in about how an AGI would work: an argmaxer over expected utility.

[Edited to add: I’m pretty sure GPT-4 is smart enough to know the consequences of its being shut down, and yet dumb enough that, if it really wanted to prevent that from one day happening, we’d know by now from various incompetent takeover attempts.]

Okay, that clears things up a bit, thanks. :) (And sorry for delayed reply. Was stuck in family functions for a couple days.)

This framing feels a bit wrong/confusing for several reasons.

- I guess by “lie to us” you mean act nice on the training distribution, waiting for a chance to take over the world while off distribution. I just … don’t believe GPT-4 is doing this; it seems highly implausible to me, in large part because I don’t think GPT-4 is clever enough that it could keep up the veneer until it’s ready to strike if that were the case.

- The term “lie to us” suggests all GPT-4 does is say things, and we don’t know how it’ll “behave” when we finally trust it and give it some ability to act. But it only “says things” in the same sense that our brain only “emits information”. GPT-4 is now hooked up to web searches, code writing, etc. But maybe I misunderstand the sense in which you think GPT-4 is lying to us?

- I think the old school MIRI cauldron-filling problem pertained to pretty mundane, everyday tasks. No one said at the time that they didn’t really mean that it would be hard to get an AGI to do those things, that it was just an allegory for other stuff like the strawberry problem. They really seemed to believe, and said over and over again, that we didn’t know how to direct a general-purpose AI to do bounded, simple, everyday tasks without it wanting to take over the world. So this should be a big update to people who held that view, even if there are still arguably risks about OOD behavior.

(If I’ve misunderstood your point, sorry! Please feel free to clarify and I’ll try to engage with what you actually meant.)


Hmm, you say “your claim, if I understand correctly, is that MIRI thought AI wouldn’t understand human values”. I’m disagreeing with this. I think Matthew isn’t claiming that MIRI thought AI wouldn’t understand human values.

I think you’re misunderstanding the paragraph you’re quoting. I read Matthew, in that paragraph, as acknowledging the difference between the two problems, and saying that MIRI thought value specification (not value understanding) was much harder than it’s looking to actually be.

I know this is from a bit ago now so maybe he’s changed his tune since, but I really wish he and others would stop repeating the falsehood that all international treaties are ultimately backed by force on the signatory countries. There are countless trade, emissions reduction, and nuclear disarmament agreements which are not backed by force. I’d venture to say that the large majority of agreements are backed merely by the promise of continued good relations and tit-for-tat mutual benefit or defection.

A key distinction is between linearity in the weights vs. linearity in the input data.

For example, the function $f(a, b, x, y) = a \sin(x) + b \cos(y)$ is linear in the arguments $a$ and $b$ but nonlinear in the arguments $x$ and $y$, since $\sin$ and $\cos$ are nonlinear.
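To make the distinction concrete, here is a minimal Python sketch. It assumes, purely for illustration, a function of the form f(a, b, x, y) = a·sin(x) + b·cos(y); the helper names and the sample values are hypothetical. It checks additivity (one half of linearity) numerically, first in the weight-like arguments and then in the input-like arguments.

```python
import math

def f(a, b, x, y):
    # Illustrative function (an assumption for this sketch):
    # linear in (a, b), nonlinear in (x, y).
    return a * math.sin(x) + b * math.cos(y)

def is_additive_in_weights(a1, b1, a2, b2, x, y, tol=1e-12):
    # Linearity in (a, b) requires
    # f(a1 + a2, b1 + b2, x, y) == f(a1, b1, x, y) + f(a2, b2, x, y).
    lhs = f(a1 + a2, b1 + b2, x, y)
    rhs = f(a1, b1, x, y) + f(a2, b2, x, y)
    return abs(lhs - rhs) < tol

def is_additive_in_inputs(a, b, x1, y1, x2, y2, tol=1e-12):
    # Linearity in (x, y) would require
    # f(a, b, x1 + x2, y1 + y2) == f(a, b, x1, y1) + f(a, b, x2, y2).
    lhs = f(a, b, x1 + x2, y1 + y2)
    rhs = f(a, b, x1, y1) + f(a, b, x2, y2)
    return abs(lhs - rhs) < tol

# Arbitrary sample values, chosen only for the demonstration.
weights_ok = is_additive_in_weights(1.5, -2.0, 0.25, 3.0, 0.7, 1.1)
inputs_ok = is_additive_in_inputs(1.5, -2.0, 0.7, 1.1, 0.3, 0.4)
print(weights_ok, inputs_ok)  # True False
```

A single passing check doesn’t prove linearity in general, of course, but a single failing check is enough to rule it out for the inputs.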

Similarly, we have evidence that wide neural networks are (almost) linear in the parameters $\theta$, despite being nonlinear in the input data (due e.g. to nonlinear activation functions such as ReLU). So nonlinear activation functions are not a counterargument to the idea of linearity with respect to the parameters.

If this is so, then neural networks are almost a type of kernel machine, doing linear learning in a space of features which are themselves a fixed nonlinear function of the input data.
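One way to write this down, as a sketch under the assumption that “almost linear in the parameters” means a first-order Taylor expansion around the initial parameters is a good approximation (the symbols $\theta_0$, $\phi$, and $K$ are my notation for this illustration):

```latex
% Linearization of the network output in the parameters, around initialization \theta_0
f(x; \theta) \approx f(x; \theta_0) + \phi(x)^\top (\theta - \theta_0),
\qquad \phi(x) = \nabla_\theta f(x; \theta_0)

% The fixed nonlinear feature map \phi induces a kernel on inputs
K(x, x') = \phi(x)^\top \phi(x')
```

Here $\phi(x)$ plays the role of the fixed nonlinear features, and learning is linear in $\theta - \theta_0$. This $K$ is what is usually called the neural tangent kernel.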

The more I stare at this observation, the more it feels potentially more profound than I intended when writing it.

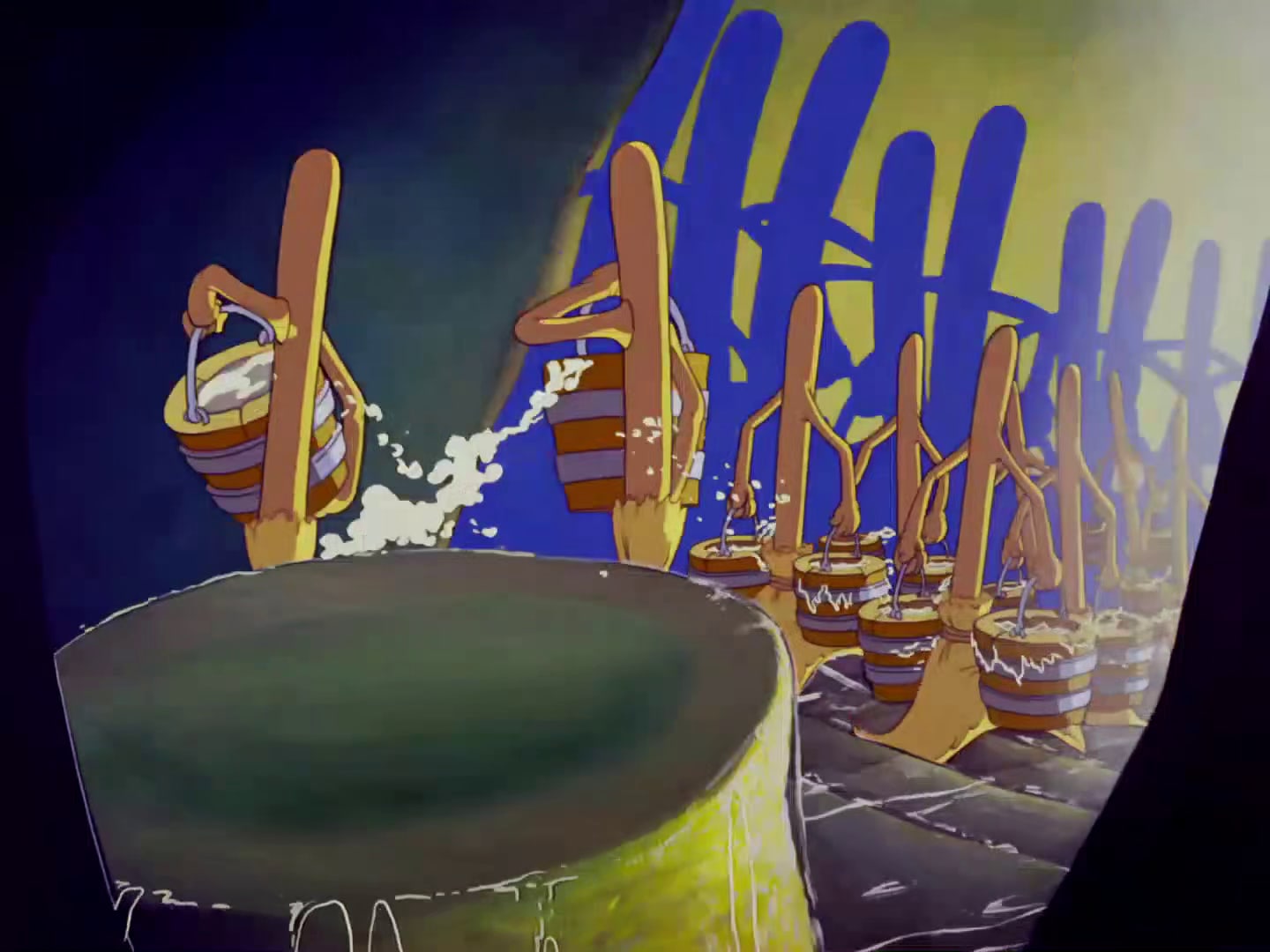

Consider the “cauldron-filling” task. Does anyone doubt that, with at most a few very incremental technological steps from today, one could train a multimodal, embodied large language model (“RobotGPT”), to which you could say, “please fill up the cauldron”, and it would just do it, using a reasonable amount of common sense in the process: not flooding the room, not killing anyone or going to any other extreme lengths, and stopping if asked? Isn’t this basically how ChatGPT behaves now when you ask it for most things, bringing to bear a great deal of common sense in its understanding of your request, and avoiding overly literal interpretations which aren’t what you really want?

Compare that to Nate’s 2017 description of the fiendish difficulty of this problem:

Why would we expect a generally intelligent system executing the above program [sorta-argmaxing over probability that the cauldron is full] to start overflowing the cauldron, or otherwise to go to extreme lengths to ensure the cauldron is full?

The first difficulty is that the objective function that Mickey gave his broom left out a bunch of other terms Mickey cares about:

The second difficulty is that Mickey programmed the broom to make the expectation of its score as large as it could. “Just fill one cauldron with water” looks like a modest, limited-scope goal, but when we translate this goal into a probabilistic context, we find that optimizing it means driving up the probability of success to absurd heights.

Regarding off switches:

If the system is trying to drive up the expectation of its scoring function and is smart enough to recognize that its being shut down will result in lower-scoring outcomes, then the system’s incentive is to subvert shutdown attempts.

… We need to figure out how to formally specify objective functions that don’t automatically place the AI system into an adversarial context with the operators; or we need to figure out some way to have the system achieve goals without optimizing some objective function in the traditional sense.

… What we want is a way to combine two objective functions — a default function for normal operation, and a suspend function for when we want to suspend the system to disk.

… We want our method for combining the functions to satisfy three conditions: an operator should be able to switch between the functions (say, by pushing a button); the system shouldn’t have any incentives to control which function is active; and if it’s plausible that the system’s normal operations could inadvertently compromise our ability to switch between the functions, then the system should be incentivized to keep that from happening.

So far, we haven’t found any way to achieve all three goals at once.

These all seem like great arguments that we should not build and run a utility maximizer with some hand-crafted goal, and indeed RobotGPT isn’t any such thing. The contrast between this story and where we seem to be heading seems pretty stark to me. (Obviously it’s a fictional story, but Nate did say “as fictional depictions of AI go, this is pretty realistic”, and I think it does capture the spirit of much actual AI alignment research.)

Perhaps one could say that these sorts of problems only arise with superintelligent agents, not agents at ~GPT-4 level. I grant that the specific failure modes available to a system will depend on its capability level, but the story is about the difficulty of pointing a “generally intelligent system” to any common-sense goal at all. If the story were basically right, GPT-4 should already have lots of “dumb-looking” failure modes today due to taking instructions too literally. But mostly it has pretty decent common sense.

Certainly, valid concerns remain about instrumental power-seeking, deceptive alignment, and so on, so I don’t say this means we should be complacent about alignment, but it should probably give us some pause that the situation is this different in practice from how it was envisioned only six years ago in the worldview represented in that story.

Though interestingly, aligning a langchainesque AI to the user’s intent seems to be (with some caveats) roughly as hard as stating that intent in plain English.

My guess is “today” was supposed to refer to some date when they were doing the investigation prior to the release of GPT-4, not the date the article was published.

Got a source for this estimate?

Nitpick: the paper from Eloundou et al. is called “GPTs are GPTs”, not “GPTs and GPTs”.

Probably I should get around to reading CAIS, given that it made these points well before I did.

I found it’s a pretty quick read, because the hierarchical/summary/bullet point layout allows one to skip a lot of the bits that are obvious or don’t require further elaboration (which is how he endorsed reading it in this lecture).

We don’t know with confidence how hard alignment is, and whether something roughly like the current trajectory (even if reckless) leads to certain death if it reaches superintelligence.

There is a wide range of opinion on this subject from smart, well-informed people who have devoted themselves to studying it. We have a lot of blog posts and a small number of technical papers, all usually making important (and sometimes implicit and unexamined) theoretical assumptions which we don’t know are true, plus some empirical analysis of much weaker systems.

We do not have an established, well-tested scientific theory like we do with pathogens such as smallpox. We cannot say with confidence what is going to happen.

I agree that if you’re absolutely certain AGI means the death of everything, then nuclear devastation is preferable.

I think the absolute certainty that AGI does mean the death of everything is extremely far from called for, and is itself a bit scandalous.

(As to whether Eliezer’s policy proposal is likely to lead to nuclear devastation, my bottom line view is it’s too vague to have an opinion. But I think he should have consulted with actual AI policy experts and developed a detailed proposal with them, which he could then point to, before writing up an emotional appeal, with vague references to air strikes and nuclear conflict, for millions of lay people to read in TIME Magazine.)

And mine.