B.Eng (Mechatronics)

anithite

No. Strain wave gears are lighter than using hydraulics.

Note: I’m taking the outside view here and assuming Boston Dynamics went with hydraulics out of necessity.

I’d imagine the problem isn’t just the gearing but the gearing + a servomotor for each joint. Hydraulics still retain an advantage so long as the integrated hydraulic joint is lighter than an equivalent electric one.

Maybe in the longer term absurd reduction ratios can fix this to cut motor mass? Still, there’s plenty of room to scale hydraulics to higher pressures.

The small electric dog sized robots can jump. The human sized robots and exoskeletons (EG:Sarcos Guardian XO) aren’t doing that. Improved motor power density could help there, but I suspect the benefit of having all power from a single pump available to distribute to joint motors at need is substantial.

Also, there’s no power cost to static force. Atlas can stand in place all day (assuming it’s passively stable and not disturbed), whereas an equivalent robot with electric motor powered joints pays for every Nm of torque when static.

Take an existing screw design, double the diameter without changing the pitch. The threads now slide about twice as far (linear distance around the screw) per turn for the same amount of travel. The efficiency is now around half its previous value.
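A rough way to check the "double the diameter, roughly halve the efficiency" claim is the textbook square-thread screw formula, efficiency = tan(λ) / tan(λ + φ), where λ is the lead angle and φ = atan(μ) is the friction angle. The 2 mm lead, the 10 mm and 20 mm diameters and the friction coefficient of 0.15 below are illustrative assumptions, not figures from any real screw.

```python
import math

def screw_efficiency(lead_mm, diameter_mm, friction_coeff):
    """Efficiency of a square-thread screw raising a load: tan(lam) / tan(lam + phi)."""
    lead_angle = math.atan(lead_mm / (math.pi * diameter_mm))  # lam
    friction_angle = math.atan(friction_coeff)                 # phi
    return math.tan(lead_angle) / math.tan(lead_angle + friction_angle)

# Illustrative values only (assumptions): same 2 mm lead, friction coefficient 0.15.
small = screw_efficiency(lead_mm=2.0, diameter_mm=10.0, friction_coeff=0.15)
large = screw_efficiency(lead_mm=2.0, diameter_mm=20.0, friction_coeff=0.15)

print(f"10 mm screw: {small:.2f}")
print(f"20 mm screw: {large:.2f}")
print(f"ratio large/small: {large / small:.2f}")
```

With these assumed values the ratio lands somewhat above one half. It approaches one half as the friction coefficient becomes large compared with the tangent of the lead angle, which is the fine-pitch regime.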

There was a neat DIY linear drive system I saw many years back where an oversized nut was placed inside a ball bearing so it was free to rotate. The nut had the same thread pitch as the driving screw. The screw was held off center so the screw and nut threads were in rolling contact. Each turn of the screw caused <1 turn of the nut resulting in some axial movement.

Consider the same thing but with a nut of pitch zero (IE:machined v grooves instead of threads). This has the same effect as a conventional lead screw nut but the contact is mostly rolling. If the “nut” is then fixed in place you get sliding contact with much more friction.

What? No. You can make larger strain wave gears, they’re just expensive & sometimes not made in the right size & often less efficient than planetary + cycloidal gears.

Not in the sense of you can’t make them bigger but square cube means greater torque density is required for larger robots. Hydraulic motors and cylinders have pretty absurd specific force/torque values.

hydraulic actuators fed from a single high pressure fluid rail using throttling valves

That’s older technology.

Yes, you can use servomotors + fixed displacement pumps or a single prime mover + ganged variable displacement pumps, but this has downsides. The abysmal efficiency of a naive design (single force step actuator + throttling) can be improved by using ≥2 actuating cavities and increasing actuator force in increments (see:US10808736B2:Rotary hydraulic valve)

The other advantage is plumbing. You can run a single set of high/low pressure lines throughout the robot. Current construction machinery using a single rail system is the worst of both worlds since it uses a central valve block (two hoses per cylinder) and has abysmal efficiency. Rotary hydraulic couplings make things worse still.

Consider a saner world where equipment was built with solenoid valves integrated into cylinders. Switching to ganged variable displacement pumps then has a much higher cost since each joint now requires running 2 additional lines.

No. There’s a reason excavators use cylinders instead of rotary vane actuators.

Agreed in that a hydraulic cylinder is the best structural shape to use for an actuator. My guess is when building limbs, integration concerns trumped this. (Bearings+Rotary vane actuator+control valve+valve motor) can be a single very dense package. That and not needing a big reservoir to handle volume change meant the extra steel/titanium was worth it.

No. Without sliding, screws do not produce translational movement.

This is true: the sun and planet screws have relative axial motion at their point of contact. Circumferential velocities are matched though, so friction is much less than in a leadscrew. Consider two leadscrews with the same pitch (axial distance traveled per turn). One screw has twice the diameter of the first. The larger screw will have a similar normal force and so similar friction, but sliding at the threads will be roughly twice that of the smaller screw. Put another way, fine pitch screws have lower efficiency.

For a leadscrew, the motion vectors for a screw/nut contact patch are mismatched axially (the screw moves axially as it turns) and circumferentially (the screw thread surface slides circumferentially past the nut thread surface). In a roller screw only the axial motion component is mismatched and the circumferential components are more or less completely matched. The size of the contact patches is not zero of course but they are small enough that circumferential/radial relative motion across the patch is quite small (similar to the ball bearing case).

Consider what would happen if you locked the planet screws in place. It still works as a screw, although the effective pitch might change a bit, but now the contact between sun and planet screw involves a lot more sliding.

What’s your opinion on load shifting as an alternative to electrical energy storage (EG:phase change heating/cooling storage for HVAC)? I am currently confused why this hasn’t taken off given that time of use pricing for electricity (and peak demand charges) offers big incentives. My current best guess is that added complexity is a big problem, leading to use only in large building HVAC (EG:this sort of thing).

Both building-integrated PCMs (phase change materials) (EG:PCM bags above/integrated in building drop ceilings) and PCMs integrated in the HVAC system (EG:ice storage air conditioning) seem like very good options. Heck, refrigeration unit capacity is still measured in tons (IE:tons ice/day) in some parts of the world, which is very suggestive.

Another potential complication for HVAC integrated PCMs is needing a large thermal gradient to use the stored cooling/heating (EG:ice at 0°C to cool buildings to 20°C).

With respect to articulated robot progress

Strain wave gearing scales to small dog robot size reasonably (EG:Boston Dynamics Spot) thanks to the square cube law but can’t manage human sized robots without pretty horrible tradeoffs (IE:ASIMO and the new Tesla robots walk slowly and have very much sub-human agility).

You might want to update that post to mention improvements in … “digital hydraulics” is one search term I think but essentially hydraulic actuators fed from a single high pressure fluid rail using throttling valves.

Modeling, Identification and Joint Impedance Control of the Atlas Arms

US10808736B2:Rotary hydraulic valve

My guess is current state of the art (ATLAS) Boston Dynamics actuators are rotary vane type actuators with individual pressurization of fluid compartments. Control would use a rotary valve actuated by a small electric motor. Multiple fluid compartments allow for multiple levels of static force depending on which are pressurized, so efficiency is less abysmal. Very similar to hydraulic power steering but with multiple force “steps”.

Rotary actuators are preferred over linear hydraulic cylinders because there’s no fluid volume change during movement so no need for a large low pressure reservoir sized to handle worst case joint extension/retraction volume changes.

Roller screws have high friction?

This seems incorrect to me. The rolling of the individual planet screws means the contact between the planet and (ring/sun) screws is rolling contact. Not perfect rolling, but slip depends on the contact patch size and average slip should be zero across a given contact patch. A four point contact ball bearing would be analogous. If the contact patches were infinitesimally small there would be no friction, since surface velocities at the contact points would match exactly. Increasing the contact patch size means there’s a linear slip gradient across the contact patch with zero slip somewhere in the middle. Not perfect but much much better than a plain bearing.

For roller screws the ring/planet contact patch operates this way, with zero friction for a zero sized contact patch. The sun/planet contact patch will have some slip due to axial velocity mismatch at the contact patch, since the sun screw does move axially relative to the planets. Still, most of the friction in a simple leadscrew is eliminated since the circumferential velocity at the sun/planet contact point is matched. What’s left is more analogous to the friction in strain wave gearing.

Though I think “how hard is world takeover” is mostly a function of the first two axes?

I claim almost entirely orthogonal. Examples of concrete disagreements here are easy to find once you go looking:

If AGI tries to take over the world everyone will coordinate to resist

Existing computer security works

Existing physical security works

I claim these don’t reduce cleanly to the form “It is possible to do [x]” because at a high level, this mostly reduces to “the world is not on fire because:”

existing security measures prevent effectively (not vulnerable world)

vs.

existing law enforcement discourages effectively (vulnerable world)

existing people are mostly not evil (vulnerable world)

There is some projection onto the axis of “how feasible are things” where we don’t have very good existence proofs.

can an AI convince humans to perform illegal actions

can an AI write secure software to prevent a counter coup

etc.

These are all much much weaker than anything involving nanotechnology or other “indistinguishable from magic” scenarios.

And of course Meta makes everything worse. There was a presentation at Blackhat or Defcon by one of their security guys about how it’s easier to go after attackers than close security holes. In this way they contribute to making the world more vulnerable. I’m having trouble finding it though.

I suggest an additional axis of “how hard is world takeover”. Do we live in a vulnerable world? That’s an additional implicit crux (IE:people who disagree here think we need nanotech/biotech/whatever for AI takeover). This ties in heavily with the “AGI/ASI can just do something else” point and not in the direction of more magic.

As much fun as it is to debate the feasibility of nanotech/biotech/whatever, digital-dictatorships require no new technology. A significant portion of the world is already under the control of human level intelligences (dictatorships). Depending on how stable the competitive equilibrium between agents ends up, required intelligence level before an agent can rapidly grow not in intelligence but in resources and parallelism is likely quite low.

One minor problem: AIs might be asked to solve problems with no known solutions (EG:write code that solves these test cases) and might be pitted against one another (EG:find test cases for which these two functions are not equivalent).

I’d agree that this is plausible but in the scenarios where the AI can read the literal answer key, they can probably read out the OS code and hack the entire training environment.

RL training will be parallelized. Multiple instances of the AI might be interacting with individual sandboxed environments on a single machine. In this case communication between instances will definitely be possible unless all timing cues can be removed from the sandbox environment, which won’t be done.

“Copilot” type AI integration could lead to training data needed for AGI

As a human engineer who has done applied classical (IE:non-AI, you write the algorithms yourself) computer vision, that’s not a good lower bound.

Image processing was a thing before computers were fast. Here’s a 1985 paper talking about tomato sorting. Anything involving a kernel applied over the entire image is way too slow. All the algorithms are pixel level.

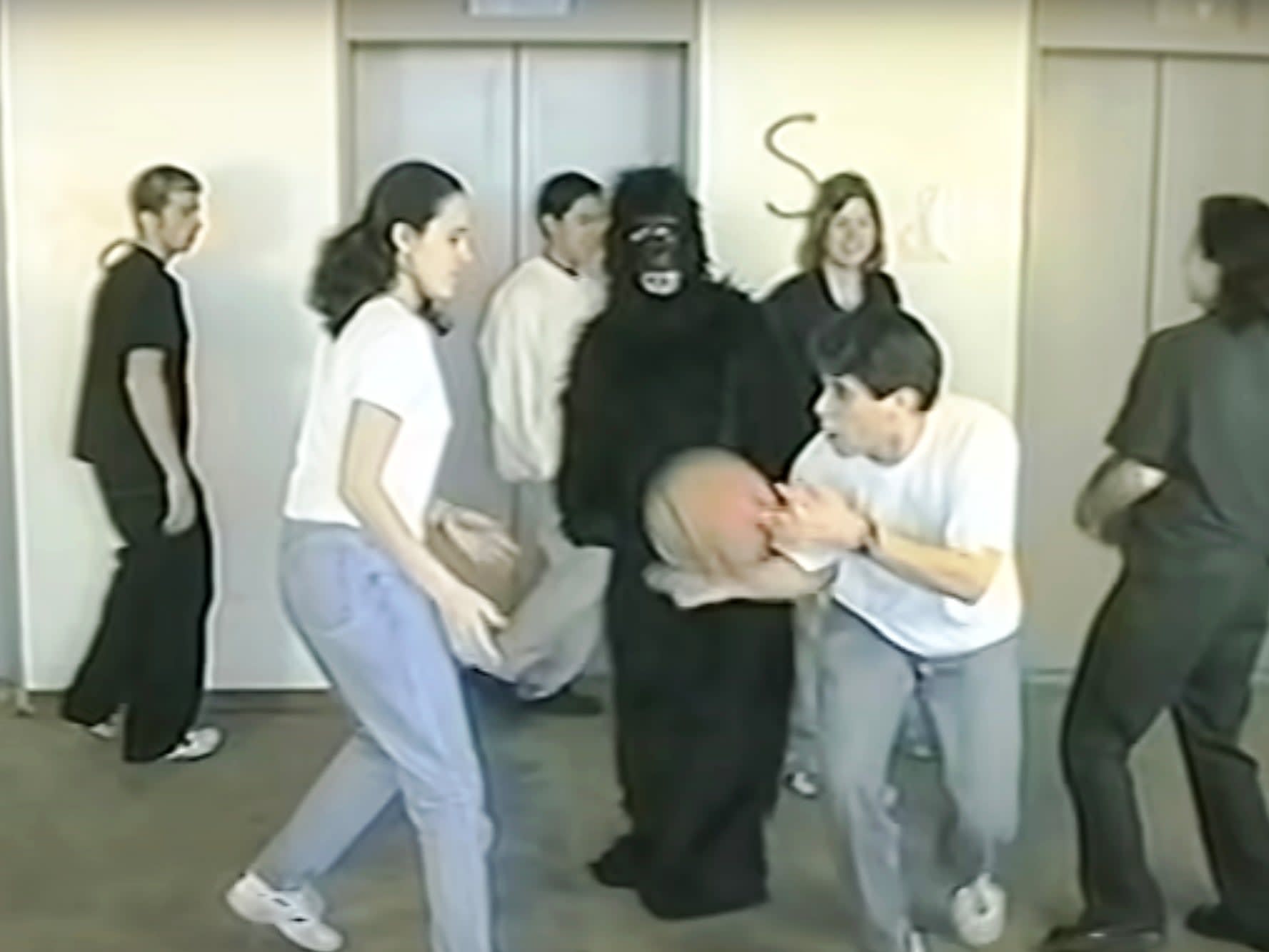

Note that this is a fairly easy problem if only because once you know what you’re looking for, it’s pretty easy to find it thanks to the court being not too noisy.

An O(N) algorithm is iffy at these speeds. Applying a 3x3 kernel to the image won’t work.

So let’s cut down on the amount of work to do. Look at only 1 out of every 16 pixels to start with. Here’s an (80*60) pixel image formed by sampling one pixel in every 4x4 square of the original.
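The 1-in-16 sampling above can be sketched in a few lines. This assumes the frame is a plain list of pixel rows and that the original is 320x240 (inferred from the 80*60 result of 4x4 sampling); the synthetic gradient frame is a stand-in, not the tennis image.

```python
def subsample(image, step=4):
    """Keep one pixel (the top-left one) from every step x step square."""
    return [row[::step] for row in image[::step]]

# Hypothetical 320x240 greyscale frame stored as a list of rows.
width, height = 320, 240
frame = [[(x + y) % 256 for x in range(width)] for y in range(height)]

small = subsample(frame, step=4)
print(len(small[0]), len(small))                          # 80 60
print(len(small) * len(small[0]) / (width * height))      # 0.0625, i.e. 1/16 of the pixels
```

The full-resolution `frame` is still around, so any interesting region found in `small` can be re-read at full detail.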

The closer player is easy to identify. Remember that we still have all the original image pixels. If there’s a potentially interesting feature (like the player further away), we can look at some of the pixels we’re ignoring to double check.

Since we have 3 images, and if we can’t do some type of clever reduction after the first image, then we’ll have to spend 1.1 seconds on each of them as well.

Cropping is very simple: once you find the player that’s serving, focus on that rectangle in later images. I’ve done exactly this to get CV code that was 8FPS@100%CPU down to 30FPS@5%. Once you know where a thing is, tracking it from frame to frame is much easier.
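A minimal sketch of the "crop, then track" idea, assuming the tracked feature is simply the brightest pixel near its last known position. The function names, the search radius and the brightest-pixel criterion are all illustrative assumptions; real code would use a better matching criterion.

```python
def search_window(last_xy, radius, width, height):
    """Clamp a square search window around the last known position to the frame."""
    x, y = last_xy
    return (max(0, x - radius), max(0, y - radius),
            min(width, x + radius + 1), min(height, y + radius + 1))

def track_brightest(frame, last_xy, radius=8):
    """Find the brightest pixel near last_xy, touching only the window, not the frame."""
    height, width = len(frame), len(frame[0])
    x0, y0, x1, y1 = search_window(last_xy, radius, width, height)
    best_xy = last_xy
    best_val = frame[last_xy[1]][last_xy[0]]
    for y in range(y0, y1):
        row = frame[y]
        for x in range(x0, x1):
            if row[x] > best_val:  # strictly brighter, so ties keep the old position
                best_val, best_xy = row[x], (x, y)
    return best_xy

# Hypothetical 320x240 frame with one bright "ball" pixel that moved slightly.
frame = [[0] * 320 for _ in range(240)]
frame[40][50] = 255
print(track_brightest(frame, last_xy=(48, 41), radius=8))  # (50, 40)
```

With a radius of 8 the search reads at most 17 x 17 = 289 pixels instead of all 76,800, which is where most of the savings come from.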

Concretely, the computer needs to:

locate the player serving and their hands/ball (requires looking at whole image)

track the player’s arm/hand movements pre-serve

track the ball and racket during toss into the air

track the ball after impact with the racket

continue ball tracking

Only step 1 requires looking at the whole image. And there, only to get an idea of what’s around you. Once the player is identified, crop to them and maintain focus. If the camera/robot is mobile, also glance at fixed landmarks (court lines, net posts/net/fences) to do position tracking.

Assume the 286 is interfacing with a modern high resolution image sensor which can do downscaling (IE:you can ask it to average 2*2, 4*4, 8*8 etc. blocks of pixels) and windowing (you can ask for a rectangular chunk of the image to be read out). This gets you closer to what the brain is working with (small high resolution patch in the center of visual field + low res peripheral vision on moveable eyeball).
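Here is a software stand-in for those two sensor features, as a sketch only: on a real sensor both happen on-chip before readout, and the function names below are made up for illustration.

```python
def bin_pixels(image, n):
    """Downscale by averaging each n x n block of pixels (integer average)."""
    height, width = len(image), len(image[0])
    out = []
    for by in range(0, height - height % n, n):
        out_row = []
        for bx in range(0, width - width % n, n):
            total = sum(image[y][x]
                        for y in range(by, by + n)
                        for x in range(bx, bx + n))
            out_row.append(total // (n * n))
        out.append(out_row)
    return out

def window(image, x0, y0, w, h):
    """Read out only a w x h rectangle whose top-left corner is (x0, y0)."""
    return [row[x0:x0 + w] for row in image[y0:y0 + h]]

# Hypothetical 320x240 frame: low-res "peripheral" view plus a full-res "fovea".
frame = [[(x * y) % 256 for x in range(320)] for y in range(240)]
peripheral = bin_pixels(frame, 4)        # 80x60 averaged overview
fovea = window(frame, 100, 50, 32, 24)   # 32x24 full-resolution patch
print(len(peripheral[0]), len(peripheral), len(fovea[0]), len(fovea))  # 80 60 32 24
```

Together the two views cost 80*60 + 32*24 = 5,568 pixels per frame rather than 76,800.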

Conditional computation is still common in low end computer vision systems. Face detection is a good example. OpenCV Face Detection: Visualized. You can imagine that once you know where the face is in one frame tracking it to the next frame will be much easier.

Now maybe you’re thinking: “That’s on me, I set the bar too low”

Well, human vision is pretty terrible. Resolution of the fovea is good but that’s about a 1 degree circle in your field of vision. Move past 5° and that’s peripheral vision, which is crap. Humans don’t really see their full environment.

A current practical application of this is to cut down on graphics quality in VR headsets using eye tracking. More accurate and faster tracking allows more aggressive cuts to total pixels rendered.

This is why Where’s Waldo is hard for humans.

Yeah, transistor based designs also look promising. Insulation on the order of 2-3 nm suffices to prevent tunneling leakage and speeds are faster. Promises of quasi-reversibility, low power and the absurdly low element size made rod logic appealing if feasible. I’ll settle for clock speeds a factor of 100 higher even if you can’t fit a microcontroller in a microbe.

My instinct is to look for low hanging design optimizations to salvage performance (EG: drive system changes to make forces on rods at end of travel and blocked rods equal reducing speed of errors and removing most of that 10x penalty.) Maybe enough of those can cut the required scale-up to the point where it’s competitive in some areas with transistors.

But we won’t know any of this for sure unless it’s built. If thermal noise is 3 OOM worse than Drexler’s figures it’s all pointless anyways.

I remain skeptical the system will move significant fractions of a bond length if a rod is held by a potential well formed by inter-atomic repulsion on one of the “alignment knobs” and mostly constant drive spring force. Stiffness and max force should be perhaps half that of a C-C bond and energy required to move the rod out of position would be 2-3x that to break a C-C bond since the spring can keep applying force over the error threshold distance. Alternatively the system *is* that aggressively built such that thermal noise is enough to break things in normal operation which is a big point against.

This requires that “takeoff” in this space be smooth and gradual. Capability spikes (EG:someone figures out how to make a much better agent wrapper), Investment spikes(EG:major org pours lots of $$$ into an attempt), and super-linear returns for some growth strategies make things unstable.

An AGI could build tools to do a thing more efficiently for example. This could turn a negative EV action positive after some large investment in FLOPs to think/design/experiment. Experimental window could be limited by law enforcement response requiring more resources upfront for parallelizing development.

Consider what organizations might be in the best position to try and whether that makes the landscape more spiky.

Sorry for the previous comment. I misunderstood your original point.

My original understanding was, that the fluctuation-dissipation relation connects lossy dynamic things (EG, electrical resistance, viscous drag) to related thermal noise (Johnson–Nyquist noise, Brownian force). So Drexler has some figure for viscous damping (essentially) of a rod inside a guide channel and this predicts some thermal W/Hz/(meter of rod) spectral noise power density. That was what I thought initially and led to my first comment. If the rods are moving around then just hold them in position, right?

This is true but incomplete.

But the drive mechanism is also vibrating. That’s why I mentioned the fluctuation-dissipation theorem—very informally, it doesn’t matter what the drive mechanism looks like. You can calculate the noise forces based on the dissipation associated with the positional degree of freedom.

You pointed out that a similar phenomenon exists in *whatever* controls linear position. Springs have associated damping coefficients so the damping coefficient in the spring extension DOF has associated thermal noise. In theory this can be zero but some practical minimum exists represented by EG:”defect-free bulk diamond” which gives some minimum practical noise power per unit force.

Concretely, take a block of diamond and apply the max allowable compressive force. This is the lowest dissipation spring that can provide that much force. Real structures will be much worse.

Going back to the rod logic system, if I “drive” the rod by covalently bonding one end to the structure, will it actually move 0.7 nm? (C-C bond length is ~0.15 nm. Linear spring model says bond should break at +0.17 nm extension (350 kJ/mol, 40 N/m stiffness)). That *is* a way to control position … so if you’re right, the rod should break the covalent bond. My intuition is thermal energy doesn’t usually do that.
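The 0.17 nm figure follows from treating the bond as a linear spring that breaks once the stored energy ½kx² reaches the bond energy. A quick check using the numbers quoted above (the function name is just for illustration):

```python
import math

AVOGADRO = 6.02214076e23  # 1/mol

def break_extension_m(bond_energy_kj_per_mol, stiffness_n_per_m):
    """Extension x at which 0.5 * k * x^2 equals the energy of a single bond."""
    energy_per_bond_j = bond_energy_kj_per_mol * 1e3 / AVOGADRO
    return math.sqrt(2.0 * energy_per_bond_j / stiffness_n_per_m)

extension = break_extension_m(bond_energy_kj_per_mol=350.0, stiffness_n_per_m=40.0)
print(f"{extension * 1e9:.2f} nm")  # 0.17 nm
```

A real bond is not a linear spring all the way to failure, so this is only an order-of-magnitude sanity check.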

What are the numbers you’re using (bandwidth, stiffness, etc.)?

Does your math suggest that in the static case rods will vibrate out of position? Maybe I’m misunderstanding things.

During its motion, the rod is supposed to be confined to its trajectory by the drive mechanism, which, in response to deviations from the desired trajectory, rapidly applies forces much stronger than the net force accelerating the rod.

(Nanosystems PP344, fig 12.2) Having the text in front of me now, the rods supposedly have “alignment knobs” which limit range of motion. The drive springs don’t have to define rod position to within the error threshold during motion.

The knob<-->channel contact could be much more rigid than the spring, depending on interatomic repulsion. That’s a lot closer to the “covalently bond the rod to the structure” hypothetical suggested above. If the dissipation-fluctuation based argument holds, the opposing force and stiffness will be on the order of bond stiffness/strength.

There’s a second fundamental problem in positional uncertainty due to backaction from the drive mechanism. Very informally, if you want your confining potential to put your rod inside a range with some response speed (bandwidth), then the fluctuations in the force obey , from standard uncertainty principle arguments. But those fluctuations themselves impart positional noise. Getting the imprecision safely below the error threshold in the presence of thermal noise puts backaction in the range of thermal forces.

When I plug the hypothetical numbers into that equation (10 GHz, 0.7 nm) I get force deviations in the fN range (1.5e-15 N). That’s six orders of magnitude from the nanonewton range forces proposed for actuation. This should accommodate using the pessimistic “characteristic frequency of rod vibration” (10 THz) along with some narrowing of positional uncertainty.
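The arithmetic can be reproduced under one assumption about the form of the relation: ΔF ≈ ħ·(bandwidth)/Δx. That form is a guess. It is used here only because it returns the 1.5e-15 N figure for 10 GHz and 0.7 nm, and the quoted equation may differ by a numerical factor.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s

def force_fluctuation_n(bandwidth_hz, position_range_m):
    """Assumed form (not confirmed by the quoted text): dF ~ hbar * bandwidth / dx."""
    return HBAR * bandwidth_hz / position_range_m

base = force_fluctuation_n(10e9, 0.7e-9)          # 10 GHz, 0.7 nm
pessimistic = force_fluctuation_n(10e12, 0.7e-9)  # 10 THz "characteristic frequency"

print(f"{base:.1e} N")  # 1.5e-15 N
print(f"orders of magnitude below 1 nN: {math.log10(1e-9 / base):.1f}")
print(f"10 THz case: {pessimistic:.1e} N")
```

Even the 10 THz case stays roughly three orders of magnitude below a nanonewton, which leaves room for some narrowing of the positional uncertainty.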

That aside, these are atoms. De Broglie wavelength for a single carbon atom at room temp is 0.04 nm and we’re dealing with many carbon atoms bonded together. Quantum mechanical effects are still significant?
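For reference, the 0.04 nm figure is what you get from λ = h/p with the momentum taken from the rms thermal speed, p = √(3·m·k·T). That choice of momentum and T = 300 K are assumptions; other common conventions (for example the thermal de Broglie wavelength h/√(2π·m·k·T)) give about 0.03 nm.

```python
import math

PLANCK = 6.62607015e-34            # J*s
BOLTZMANN = 1.380649e-23           # J/K
ATOMIC_MASS_UNIT = 1.66053907e-27  # kg

def de_broglie_wavelength_m(mass_kg, temperature_k):
    """lambda = h / p, with p from the rms thermal speed: p = sqrt(3 * m * k * T)."""
    momentum = math.sqrt(3.0 * mass_kg * BOLTZMANN * temperature_k)
    return PLANCK / momentum

carbon = de_broglie_wavelength_m(12 * ATOMIC_MASS_UNIT, 300.0)
print(f"{carbon * 1e9:.2f} nm")  # 0.04 nm

# A rigid cluster of N carbon atoms moving together: wavelength shrinks as 1/sqrt(N).
cluster = de_broglie_wavelength_m(1000 * 12 * ATOMIC_MASS_UNIT, 300.0)
print(f"{cluster * 1e9:.4f} nm")
```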

If you’re right, and if the numbers are conservative with real damping coefficients 3 OOM higher, forces would be 1.5 OOM higher meaning covalent bonds hold things together much less well. This seems wrong. Benzyl groups would seem then to regularly fall off of rigid molecules for example. Perhaps the rods are especially rigid leading to better coupling of thermal noise into the anchoring bond at lower atom counts?
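The "3 OOM in damping gives 1.5 OOM in force" step is the square-root scaling of thermal force noise with the damping coefficient. Using the standard force-noise form of the fluctuation-dissipation relation, with $\gamma$ the damping coefficient and $\Delta f$ the bandwidth (symbols introduced here for illustration):

$$
F_\mathrm{rms} = \sqrt{4 k_B T \gamma \, \Delta f}
\quad\Longrightarrow\quad
\frac{F_\mathrm{rms}(10^{3}\gamma)}{F_\mathrm{rms}(\gamma)} = \sqrt{10^{3}} = 10^{1.5} \approx 32
$$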

Certainly if Drexler’s design is impossible by 3 orders of magnitude, rod logic would perform much less well.

The adversary here is assumed to be nature/evolution. I’m not referring to scenarios where intelligent agents are designing pathogens.

Humans can design vaccines faster than viruses can mutate. A population of well coordinated humans will not be significantly preyed upon by viruses despite viruses being the fastest evolving threat.

Nature is the threat in this scenario as implied by that last bit.

edit: This was uncharitable. Sorry about that.

This comment suggested not leaving rods to flop around if they were vibrating.

The real concern was that positive control of the rods to the needed precision was impossible as described below.

well coordinated

Yes, assume no intelligent adversary.

Well coordinated -->

enforced norms preventing individuals from making superpathogens.

large scale biomonitoring

can and will rapidly deploy vaccines

will rapidly quarantine based on bio monitoring to prevent spread

might deploy sterilisation measures (EG:UV-C sterilizers in HVAC systems)

There is a tradeoff to be made between level of bio monitoring, speed of air travel, mitigation tech and risk of a pathogen slipping past. Pathogens that operate on 2+day infection-->contagious times should be detectable quickly and might kill 10000 worst case. That’s for a pretty aggressive point in the tradeoff space.

Earth is not well coordinated. Success of some places in keeping out COVID shows what actual competence could accomplish. A coordinated earth won’t see much impact from the worst of natural pathogens much less COVID-19.

Even assuming a 100% lethal long incubation time highly infective pathogen for which no vaccine can be made. Biomonitoring can detect it prior to symptoms, then quarantine happens and 99+% of the planet remains uninfected. Pathogens travel because we let them.

*Fire*

Forest fires are a tragedy of the commons situation. If you are a tree in a forest, even if you are not contributing to a fire you still get roasted by it. Fireproofing has costs so trees make the individually rational decision to be fire contributing. An engineered organism does not need to do this.

Photosynthetic top layer should be flat with active pumping of air. Air intakes/exhausts seal in fire conditions. This gives much less surface area for ignition than existing plants.

Easiest option is to keep some water in reserve to fight fires directly. Possibly add some silicates and heat activated foaming agents to form an intumescent layer. Secrete from the top layer on demand.

That is only plausible from a “perfect conditions” engineering perspective where the Earth is a perfect sphere with no geography or obstacles, resources are optimally spread, and there is no opposition. Neither kudzu, or even microbes can spread optimally.

I’ll clarify that a very important core competency is transport of (water/nutrients). Plants don’t currently form desalination plants (seagulls do this to some extent) and continent spanning water pumping networks. The fact that rivers are dumping enormous amounts of fresh water into the oceans shows that nature isn’t effective at capturing precipitation. Some plants have reservoirs where they store precipitation. This organism should capture all precipitation and store it. Storage tanks get cheaper with scale.

Plant growth currently depends on pulling inorganic nutrients and water out of the soil, C, O and N can be extracted from the atmosphere.

An ideal organism roots itself into the ground, extracts as much as possible from that ground, then writes it off once other newly covered ground is more profitably mined. Capturing precipitation directly means no need to go into the soil for that, although it might be worthwhile to drain the water table when reachable or even drill wells like humans do. No need for nutrient gathering roots after that. If it covers an area of phosphate rich rock it starts excavating and ships it far and wide as humans currently do.

As for geographic obstacles 2/3rds of the earth is ocean. With a design for a floating breakwater that can handle ocean waves, the wavy area can be enclosed and eventually eliminated. Covered area behind the breakwater can prevent formation of waves by preventing ripple formation (IE:act as a distributed breakwater).

If it’s hard to cover mountains, then the AI can spend a bit of time solving the problem during the first few months, or accept a small loss in total coverage until it does get around to the problem.

One man with a BIC lighter can destroy weeks of work. Wildfires spread faster than plants. Planes with herbicides, or combine harvesters with a chipper, move much faster than plants grow. As bad as engineered Green Goo is, the Long Ape is equally formidable at destruction.

I even bolded the parts about killing all the humans first. Yes humans can do a lot to stop the spread of something like this. I suspect humans might even find a use for it (EG:turn sap into ethanol fuel) and they’re likely clever enough to tap it too.

I’m not going to expand on “kill humans with pathogens” for Reasons. We can agree to disagree there.

raises finger

realizes I’m about to give advice on creating superpathogens

I’m not going to go into details besides stating two facts:

nature has figured out how to make cellular biology do complicated things

including coordinating behavior across instances of the same base genetic programming

This suggests an engineered pathogen could have all sorts of interesting coordinated behavior.

natural viruses are evolved for simplicity

selection pressure in natural viruses leads to a tragedy of the commons (burn fast, burn hot)

A common reasoning problem I see is:

“here is a graph of points in the design space we have observed”

EG:pathogens graphed by lethality vs speed of spread

There’s an obvious trendline/curve!

therefore the trendline must represent some fundamental restriction on the design space.

Designs falling outside the existing distribution are impossible.

This is the distribution explored by nature. Nature has other concerns that lead to the distribution you observe. That pathogens have a “lethality vs spread” relationship tells you about the selection pressures selecting for pathogens, not the space of possible designs.

I guess but that’s not minimal and doesn’t add much.

“how do we make an ASI create a nice (highly advanced technology) instead of a bad (same)?”.

IE: kudzugoth vs robots vs (self propagating change to basic physics)

Put differently:

If we build a thing that can make highly advanced technology, make it help rather than kill us with that technology.

Neat biotech is one such technology but not a special case.

Aligning the AI is a problem mostly independent of what the AI is doing (unless you’re building special purpose non AGI models as mentioned above)

Sorry, I should have clarified I meant robots with per joint electric motors + reduction gearing. Almost all of Atlas’ joints aside from a few near the wrists are hydraulic, which I suspect is key to agility at human scale.

Inside the lab: How does Atlas work?(T=120s)

Here’s the knee joint springing a leak. Note the two jets of fluid. Strong suspicion this indicates small fluid reservoir size.