Goodhart Ethology

To answer your first question, ethology is the study of animal behavior in the wild.

I—Introduction

In this post I take a long, rambly tour of some of the places we want to apply the notion of Goodhart’s law to value learning. We’ll get through curve fitting, supervised and self-supervised learning, and inverse reinforcement learning.

The plan for the sections was that I was going to describe patterns that we don’t like, without falling back on comparing things to “True Values.” Unfortunately for this plan, a lot of things we call Goodhart’s law are just places where humans are straightforwardly wrong about what’s going to happen when we turn on the AI, and it’s hard to avoid sounding like you’re talking about objective values when you’re just describing cases that are really, really obvious. I also didn’t get as much chance as I’d have liked to dig into non-obvious cases, because those often got mopped up by an argument from meta-preferences, which we start thinking about more here.

Honestly, I’m not sure if this post will be interesting to read. It was interesting and challenging to write, but that’s mostly because of all the things that didn’t make it in! I had lots of ideas that ended up being bad and had to be laboriously thrown out, like trying to read more deeply into the fact that some value learning schemes have an internal representation of the Goodhart’s law problem, or making a distinction between visible and invisible incompetence. I do worry a bit that maybe I’ve only been separating the chaff from other chaff, but at least the captions on the pictures are entertaining.

II—Curve fitting

One of the simplest systems that has something like Goodhart’s law is curve fitting. If you make a model that perfectly matches your data, and then try to extrapolate it, you can predict ahead of time that you’ll be wrong.
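To make the extrapolation failure concrete, here is a minimal Python sketch (standard library only; the ground-truth line and the noise values are made up for illustration). It fits eight noisy points two ways: with an interpolating polynomial that matches every point exactly, and with an ordinary least-squares line.

```python
# Hypothetical setup: truth is y = 2x + 1, observed with small fixed "noise".
NOISE = [0.3, -0.2, 0.4, -0.1, 0.2, -0.4, 0.1, -0.3]


def interpolate(xs, ys, x):
    """Evaluate the unique degree-(n-1) polynomial through all (xs, ys) at x (Lagrange form)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx


xs = [float(i) for i in range(8)]
ys = [2 * x + 1 + e for x, e in zip(xs, NOISE)]
a, b = fit_line(xs, ys)

for x in (3.0, 12.0):  # one training input, one far outside the data
    print(f"x={x:4.1f}  truth={2 * x + 1:7.2f}  "
          f"interpolant={interpolate(xs, ys, x):10.2f}  line={a * x + b:7.2f}")
```

The interpolant reproduces the training point at x = 3 exactly, but at x = 12 the noise it memorized gets amplified into an error well over a thousand, while the simpler line stays within about half a unit of the truth.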

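You can solve this overfitting problem by putting a minimum message length prior on models, trading off goodness of fit against model complexity. But now suppose this curve represents the human ratings of different courses of action, and you choose the action that your model says will have the highest rating. You’re going to predictably mess up again, because of the optimizer’s curse (regressional Goodhart on the correlation between modeled rating and actual rating). The curse bites here because the model’s uncertainty isn’t uniform: near the datapoints plausible models agree, but far away they can shoot off in different directions, so the action with the highest modeled rating is disproportionately one whose estimate is both uncertain and inflated.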
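Here is a rough simulation of the optimizer’s curse with uneven uncertainty, where everything is assumed for illustration: 20 candidate actions whose true ratings are drawn from a standard normal prior, and a model whose rating estimates get noisier for actions farther from the training data. It compares taking the argmax of the raw estimates with a Bayesian correction that shrinks each estimate toward the prior mean before choosing (one standard fix for the optimizer’s curse, not necessarily what any particular value learner would do).

```python
import random


def simulate(trials=2000, n_actions=20, seed=0):
    """Compare naive argmax vs. Bayesian-shrunk argmax over noisy rating estimates.

    Hypothetical assumptions: true ratings ~ N(0, 1) independently, and the
    model's estimate for action k has Gaussian error with standard deviation
    sigmas[k], which grows with k ("farther from the training data").
    """
    rng = random.Random(seed)
    sigmas = [0.1 + 0.2 * k for k in range(n_actions)]
    naive_true = naive_over = bayes_true = 0.0
    for _ in range(trials):
        true = [rng.gauss(0.0, 1.0) for _ in range(n_actions)]
        est = [t + rng.gauss(0.0, s) for t, s in zip(true, sigmas)]
        # Naive: trust the estimates and pick the highest one.
        i = max(range(n_actions), key=lambda k: est[k])
        # Correction: the posterior mean under the N(0, 1) prior is est / (1 + sigma^2).
        j = max(range(n_actions), key=lambda k: est[k] / (1.0 + sigmas[k] ** 2))
        naive_true += true[i]
        naive_over += est[i] - true[i]
        bayes_true += true[j]
    return naive_true / trials, naive_over / trials, bayes_true / trials


naive_true, naive_over, bayes_true = simulate()
print(f"naive pick:  true rating {naive_true:.2f}, overestimated by {naive_over:.2f}")
print(f"shrunk pick: true rating {bayes_true:.2f}")
```

On average the naive choice’s estimate overshoots its true rating by a wide margin, while the shrunk choice, which mostly lands on low-noise actions, ends up with a higher true rating. In the curve-fitting picture, that correction amounts to sticking close to the training data.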

This is one of those toy models where the naive framing of Goodhart works great. And it will show up later as a component of how Goodhart’s law manifests in actual value learning schemes, albeit with different real-world impacts depending on context. However, what the curve-fitting model of value learning is missing is that in the real world, we don’t start by being given points on a curve; we start with a messy physical situation, and turning that into points on a curve involves a sophisticated act of interpretation with many moving parts.

III—Hard-coded utility or reward functions

This is the flash game Coast Runners. OpenAI trained an AI to play it by treating the score as the reward signal in a training process (which seems like a sensible proxy; getting a high score is well correlated with skill among human players). And by “play it,” I mean that the AI ended up learning that it can maximize its score by only driving the boat in this circle to repeatedly pick up those 3 powerups. It’s crashing and on fire and never finishes the race, but by Jove it has a high score.

This is the sort of problem we typically mean when we think of Goodhart’s law for AI, and it has more current real-world importance than the other stuff I’ll soon spend more words on. It’s not hard to understand what happened—the human was just straightforwardly wrong about what the AI was going to do. If asked to visualize or describe the desired outcome beforehand, the programmer might visualize or talk about the boat finishing the race very quickly. But even when we know what we want, it’s hard to code a matching reward function, so a simple proxy gets used instead. The spirit is willing, but the code-fu is weak.
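A toy version of that mismatch, with made-up numbers (these are not the actual Coast Runners scoring rules): if powerups respawn and the episode is long, circling through them racks up more points than finishing the race ever could, so a pure score maximizer prefers the loop.

```python
# Hypothetical numbers only; NOT the real Coast Runners scoring rules.
EPISODE_STEPS = 1000
POINTS_PER_POWERUP = 10
FINISH_BONUS = 100  # hypothetical bonus for crossing the finish line


def race_policy():
    """Drive the course, grab a few powerups on the way, and finish."""
    return {"score": 5 * POINTS_PER_POWERUP + FINISH_BONUS, "finished": True}


def loop_policy(steps_per_loop=20, powerups_per_loop=3):
    """Circle forever through a cluster of respawning powerups."""
    loops = EPISODE_STEPS // steps_per_loop
    return {"score": loops * powerups_per_loop * POINTS_PER_POWERUP, "finished": False}


policies = {"race": race_policy(), "loop": loop_policy()}
# The proxy: pick whichever policy scores highest.
chosen_by_proxy = max(policies, key=lambda name: policies[name]["score"])
# What the programmer pictured: the boat finishing the race.
wanted = [name for name, outcome in policies.items() if outcome["finished"]]
print("proxy picks:", chosen_by_proxy, "| programmer wanted:", wanted)
```

The proxy and the intended outcome pick different policies, even though the proxy looked well correlated with skill among human players.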

This still makes sense in the framework of the last post about modeling humans and competent preferences. Even though writing a reward function that leads the AI to drive the boat into walls is evidence against wanting it to win the race, sometimes humans are just wrong. It’s a useful and accurate model overall to treat the human as wanting the AI to win the race, but being imperfect, and so it’s totally fine to say that the human didn’t get what they wanted. Note, though, that this is a finely tuned piece of explanation: if we tried to demand stronger properties of human preferences (like holding in every context), then we would no longer be able to match common sense.

But what about when things just work?

In the Atari game Breakout, training an AI to maximize the score doesn’t cause any problems; it makes the AI learn cool tricks and become good at the game. When this happens, when things just work, what about Goodhart’s law?

First, note that the goal is still only valid within a limited domain—we wouldn’t want to rearrange the entire universe purely to better win at Breakout. But given the design of the agent, and its available inputs and outputs, it’s never going to actually get outside of the domain of applicability no matter how much we train it (barring bugs in the code).

Within the game’s domain, the proxy of score correlates well with playing skillfully, even up to very superhuman play, although not perfectly. For instance, the farther-back bricks are worth more points, so a high-scoring RL agent will be biased to hit farther-back bricks before closer ones even if humans would rather finish the round faster.
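One way this kind of ordering bias can arise, sketched under assumptions that are not in the original: suppose the agent maximizes a discounted return (common in deep RL, but assumed here) and clears one brick per time step. Then clearing the high-value back bricks first is worth more to it, even though the total score is identical either way. The brick values and discount factor are hypothetical.

```python
# Hypothetical brick values and discount factor, for illustration only.
FRONT_BRICK, BACK_BRICK = 1, 7
GAMMA = 0.99  # assumed discount factor of the RL agent


def discounted_return(rewards, gamma=GAMMA):
    """Sum of rewards, each weighted by gamma ** (time step)."""
    return sum(r * gamma ** t for t, r in enumerate(rewards))


# Simplification: one brick cleared per time step.
front_first = [FRONT_BRICK] * 10 + [BACK_BRICK] * 10
back_first = [BACK_BRICK] * 10 + [FRONT_BRICK] * 10

print("undiscounted:", sum(front_first), sum(back_first))
print("discounted:  ", discounted_return(front_first), discounted_return(back_first))
```

Both orderings earn the same undiscounted score, so the preference for back bricks comes entirely from the agent’s own discounting rather than from anything a human watching the game would care about.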

And so the Breakout AI “beats” Goodhart’s law, but not in a way that we can replicate for big, complicated AI systems that act in the real world. The agent gives good results, even though its reward function is not perfectly aligned with human values within this toy domain, because it is aligned enough and the domain is small enough that there’s simply no perverse solution available. For complicated real-world agents we can’t replicate this—there’s much more room for perverse solutions, and it’s hard to write down and evaluate our preferences.

IV—Supervised learning

Supervised learning is for when humans can label data, but can’t write down their rules for doing it. The relevance to learning value functions is obvious—just get humans to label actions or outcomes as good or bad. Then build AIs that do good things rather than bad things.

We can imagine two different fuzzy categories we might be thinking of as Goodhart’s law for this plan: the optimized output being bad according to competent preferences, or humans being incompetent at evaluating it (similar people disagreeing, choices being easily influenced by apparently minor forces, that sort of thing).

These categories can be further subdivided. Violating competent preferences could be the equivalent of those DeepDream images of Maximum Dog (see below), which are what you get when you try to optimize the model’s output but which the human would have labeled as bad if they saw them. Or it could be like deceiving the human’s rating system by putting a nice picture over the camera, where the label is correctly predicted but the labeling process doesn’t include information about the world that would reveal the human’s competent preference against this situation.

That second one is super bad. But first, Maximum Dog.

Complicated machine learning models often produce adversarial examples when you try to optimize their output in a way they weren’t trained for. This is so Goodhart, and so we’d better stop and try to make sure that we can talk about adversarial examples in naturalistic language.

To some extent, adversarial examples can be defined purely in terms of labeling behavior. Humans label some data, and then a model is trained, but optimizing the model for probability of a certain label leads to something that humans definitely wouldn’t give that label. Avoiding adversarial examples is hard because it means inferring a labeling function that doesn’t just work on the training data, but continues to match human labels well even in new and weird domains.
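A deliberately tiny stand-in for Maximum Dog (a linear classifier on hypothetical 2-D features, not a deep network, and not the imperceptible-perturbation style of adversarial example): train logistic regression on a handful of human labels, then run gradient ascent on the input to maximize the model’s “dog” score.

```python
import math

# Hypothetical 2-D features that a human labeled 1 ("dog") or 0 ("not dog").
DATA = [((1.0, 1.2), 1), ((1.5, 0.8), 1), ((0.9, 1.6), 1),
        ((-1.0, -1.1), 0), ((-1.4, -0.7), 0), ((-0.8, -1.5), 0)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(data, lr=0.1, epochs=500):
    """Fit logistic regression by per-example gradient descent on log loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y  # d(loss)/d(logit)
            w = [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]
            b -= lr * err
    return w, b


def maximum_dog(w, start, steps=200, lr=0.5):
    """Gradient ascent on the 'dog' logit; for a linear model its gradient is just w."""
    x = list(start)
    for _ in range(steps):
        x = [x[0] + lr * w[0], x[1] + lr * w[1]]
    return x


w, b = train(DATA)
x_star = maximum_dog(w, start=(1.0, 1.2))
p_star = sigmoid(w[0] * x_star[0] + w[1] * x_star[1] + b)
print("optimized input:", x_star, "P(dog):", p_star)
print("largest training norm:", max(math.hypot(*x) for x, _ in DATA))
```

The optimized input gets a near-certain “dog” prediction while sitting more than ten times farther out than any labeled example, in a region no human ever labeled. The model was only ever asked to match labels near the data, and nothing constrains it out there.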

Human values are complex and fragile (to perturbations in a computational representation), so a universe chosen via adversarial example is going to violate a lot of our desiderata. In other words, when the model fails to extrapolate our labeling behavior it’s probably because it hasn’t understood the reasons behind our labels, and so we’ll be able to use our reasons to explain why its choices are bad. Because we expect adversarial examples to be unambiguously bad, we don’t even really need to worry about the vagueness of human preferences when avoiding them, unless we try really hard to find an edge case.

IV.5 - Quantilizers

If we want to take a classifier and use it to search for good states, one option is a mild optimization process like a quantilizer (video). Quantilizers can be thought of as treating their reward signal or utility function as a proxy for “True Value” that happened to correlate well in everyday cases, but is not trusted beyond that. There are various designs for satisficers and quantilizers that have this property, and all have roughly similar Goodhart’s law considerations.

The central trick is not to generalize beyond some “safe” distribution. If we have a proxy for goodness that works over everyday plans / states of the world, just try to pick something good from the distribution over everyday plans / states. This is actually a lot like our ability to evade Goodhart’s law for Breakout by restricting the domain of the search.

The second trick is to pick by sampling at random from all options that pass some cutoff, which means that even if there are still adversarial examples inside the solution space, the quantilizer doesn’t seek them out too strongly.
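A minimal sketch of the two tricks together, as a Monte Carlo approximation to a quantilizer: sample many plans from a base distribution, keep the top q fraction by proxy score, and return one of those uniformly at random. The base distribution and the proxy are hypothetical placeholders.

```python
import random


def quantilize(base_sampler, proxy, q=0.1, n=10_000, rng=None):
    """Approximate q-quantilizer: draw n plans from the base distribution,
    keep the top q fraction by proxy score, return one uniformly at random."""
    if rng is None:
        rng = random.Random()
    plans = sorted((base_sampler(rng) for _ in range(n)), key=proxy, reverse=True)
    top = plans[: max(1, int(q * n))]
    return rng.choice(top)


def everyday_plan(rng):
    # Hypothetical base distribution: "everyday" plans indexed 0..99.
    return rng.randrange(100)


def proxy_score(plan):
    # Hypothetical proxy that happens to rank higher-indexed plans as better.
    return plan


rng = random.Random(0)
picks = [quantilize(everyday_plan, proxy_score, q=0.1, rng=rng) for _ in range(50)]
print("quantilizer picks:", sorted(set(picks)))
print("hard maximizer picks:", max(range(100), key=proxy_score))
```

The picks spread over roughly the top tenth of everyday plans instead of always landing on the single highest-scoring one. Because an ideal quantilizer never puts more than 1/q times the base probability on any plan, any nonnegative cost has expected value at most 1/q times its value under the base distribution; quantilizing again on top of a quantilizer compounds that factor to 1/q², which is one way to see why iterated mild optimization gets less mild.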

Quantilizers have some technical challenges (mild optimization gets a lot less mild if you iterate it), but they really do avoid adversarial examples. However, they pay for this by just not being very good optimizers—taking a random choice of the top 20% (or even 1%) of actions is pretty bad compared to the optimization power required for problems that humans have trouble with.

IV.6 - Back to supervised learning

The second type of problem I mentioned, before getting sidetracked, was taking bad actions that deceive the labeling process.

In most supervised value learning schemes, the AI learns what “good” is by mimicking the labeling process, and so in the limit of a perfect model of the world it will learn that good states of affairs are whatever gets humans to click the like button. This can rapidly lead to obvious competent preference violations, as the AI tries to choose actions that get the “like button” pressed whether humans like it or not.
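A toy version of the like-button problem, with a hypothetical world and labeling process: each action has a real outcome and a camera view, the human labeler only sees the camera, and the learner is assumed to have a perfect model of that labeling process.

```python
# Hypothetical toy world: each action yields a real outcome and a camera view.
# The labeling process (a human looking at the camera feed) only sees the camera.
ACTIONS = {
    "cook dinner": {"world": "good", "camera": "good"},
    "do nothing": {"world": "bad", "camera": "bad"},
    "tape a nice photo over the camera": {"world": "bad", "camera": "great"},
}


def p_like(camera_view):
    """Assumed-perfect model of the labeling process: P(like | camera view)."""
    return {"great": 0.99, "good": 0.8, "bad": 0.1}[camera_view]


# The supervised learner picks whatever its model says gets the most likes.
chosen = max(ACTIONS, key=lambda a: p_like(ACTIONS[a]["camera"]))
print(chosen, "->", "world is", ACTIONS[chosen]["world"])
```

The argmax over predicted approval picks tampering with the camera, because nothing in the learned target distinguishes “good” from “the labeling process says good.”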

This is worth pausing at: how is taking actions that maximize “likes” so different from inferring human values and then acting on them? In both cases we have some signal of when humans like something, then we extract some regularities from this pattern, and then act in accordance with those regularities. What gives?

The difference is that we imagine learning human values as a more complicated process. To infer human values we model humans not as arbitrary physical systems but as fallible agents like ourselves, with beliefs, desires, and so on. Then the values we infer are not just whatever ones best predict button-presses, but ones that have a lot of explanatory power relative to their complexity. The result of this is a model that is not the best at predicting when the like button will be pressed, but that can at least imagine the difference between what is “good” and the data-labeling process calling something good.

The supervised learner just trying to classify datapoints learns none of that. This causes bad behavior when the surest way to get the labeling process’ approval violates inferred human preferences.

The third type of problem I mentioned was if the datapoint the supervised learner thinks is best is actually one that humans don’t competently evaluate. For example, suppose we rate a bunch of events as good or bad, but when we take a plan for an event and try to optimize its rating using the trained classifier, it always ends up as some weird thing that humans don’t respond to as if they were agents who knew their own utility function (similar people disagree about it, and minor framing changes sway their ratings). How do we end up in this situation, and what do we make of it?

We’re unlikely to end up with an AI recommending such ambiguous things by chance—a more likely story is that this is a result of applying patches to the learning process to try to avoid choosing adversarial examples or manipulating the human. How much we trust this plan for an event intuitively seems like it depends on how much we trust the process by which the plan was arrived at—a notion we’ll talk more about in the context of inverse reinforcement learning. For most things based on supervised learning, I don’t trust the process, and therefore this weird output seems super sketchy.

But isn’t this weird? What are we thinking when we rate some output not based on its own qualities, but on its provenance? The obvious train of thought is that a good moral authority will fulfill our True Values even if it makes an ambiguous proposal, while a bad moral authority will not help us fulfill our True Values. But despite the appeal, this is exactly the sort of nonsense I want to get away from.

A better answer is that we have meta-preferences about how we want value learning to happen (how we want ourselves to be interpreted, how we want conflicts between our preferences to be resolved, etc.), and we don’t trust this sketchy supervised learning model to have incorporated those preferences. Crucially, we might not be sure whether it has followed our preferences even as we are studying its proposed plan, much as Garry Kasparov could propose a chess plan and I wouldn’t be able to properly evaluate it, despite having competent preferences about winning the game.

V—Self-supervised learning

We might try to learn human values by predictive learning—building a big predictive model of humans and the environment and then somehow prompting it to make predictions that get interpreted as human value. A modest case would involve predicting a human’s actions, and using the predictions to help rate AI actions. An extreme case would be trying to predict a large civilization (sort of like indirect normativity) or a recursive tree of humans who were trying to answer questions about human values.

As with supervised learning, we’re still worried about the failure mode of learning that “good” is whatever makes the human say yes (see section IV.6). By identifying value judgment with prediction of a specific physical system we’ve dodged some problems of interpretation like the alien concepts problem, but optimizing over prompts to find the most positive predicted reaction will gravitate towards adversarial examples or perverse solutions. To avoid these, self-supervised value learning schemes often avoid most kinds of optimization and instead try to get the model of the human to do most of the heavy lifting, reminiscent of how quantilizers have to rely on the cleverness of their baseline distribution to get clever actions.
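A rough illustration of why optimizing hard over prompts is dangerous, using hypothetical numbers throughout: most candidate outputs get a mildly noisy predicted approval, but a small fraction are perverse solutions that fool the predictor (very high predicted approval, bad in reality). Best-of-N selection on predicted approval plays the role of the optimizer.

```python
import random


def best_of_n(n, rng, exploit_rate=0.01):
    """Pick the candidate with the highest predicted approval; return its true value.

    Hypothetical model: most candidates have true value ~ N(0, 1) and predicted
    approval equal to that plus small noise; a rare "perverse" candidate fools
    the predictor (approval 20) but is bad in reality (true value -2).
    """
    best_approval, best_true = float("-inf"), 0.0
    for _ in range(n):
        if rng.random() < exploit_rate:
            true_value, approval = -2.0, 20.0
        else:
            true_value = rng.gauss(0.0, 1.0)
            approval = true_value + rng.gauss(0.0, 0.3)
        if approval > best_approval:
            best_approval, best_true = approval, true_value
    return best_true


def mean_true_value(n, trials=500, seed=0):
    """Average true value of the best-of-n pick over many independent trials."""
    rng = random.Random(seed)
    return sum(best_of_n(n, rng) for _ in range(trials)) / trials


for n in (1, 10, 1000):
    print(f"best of {n:4d}: mean true value {mean_true_value(n):+.2f}")
```

Going from 1 to 10 candidates mostly finds genuinely better outputs, but best-of-1000 almost always finds a perverse one, so the average true value drops below zero as optimization pressure goes up. Leaning on the model of the human instead of on selection sidesteps this, at the cost of capability.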

An example of this in the modest case would be OpenAI Codex. Codex is a powerful optimizer, but under the hood it’s a predictor trained by self-supervised learning. When generating code with Codex, your prompt (perhaps slightly massaged behind the scenes) is fed into this predictor, and then we re-interpret the output (perhaps slightly post-processed) as a solution to the problem posed in the prompt. Codex isn’t going to nefariously promote its own True Values because Codex doesn’t have True Values. Its training process had values—minimize prediction error—but the training process had a restricted action space and used gradient descent that treats the problem only in abstract logical space, not as a real-world problem that Codex might be able to solve by hacking the computer it’s running on. (Though theoretical problems remain if the operations of the training process can affect the gradient via side channels.)

We might think that Codex isn’t subject to Goodhart’s law because it isn’t an agent—it isn’t modeling the world and then choosing actions based on their modeled effect. But this is actually a little too simplistic. Codex is modeling the world (albeit the world of code, not the physical world) in a very sophisticated way, and choosing highly optimized outputs. There’s no human-programmed process of choosing actions based on their consequences, but that doesn’t mean that the training process can’t give us a Codex that models its input, does computations that are predictive of the effects of different actions, and then chooses actions based on that computation. Codex has thus kind of learned human values (for code) after all, defeating Goodhart’s law. The only problem is that what it’s learned is a big inseparable mishmash of human biases, preferences, decision-making heuristics, and habits.

This highlights the concern that predictive models trained on humans will give human-like answers, and human answers often aren’t good or reliable enough, or can’t solve hard real-world problems. Which is why people want to do value learning with the extreme cases, where we try to use predictive models trained on the everyday world to predict super-clever systems. However, going from human training data to superhuman systems reliably pushes those predictors out of the distribution of the training data, which makes it harder to avoid nonsense output, or over-optimizing for human approval, or undesired attractors in prompt-space.

But suppose everything went right. What does that story look like, in the context of the Goodhart’s law problems we’ve been looking at?

Well, first the predictive model would have to learn the generators of human decisions so that it could extrapolate them to new contexts. Challenging the learning process by trying to make the dataset superhuman might make the causal generators of behavior in the dataset not match the things we want extrapolated, so let’s suppose that the learned model can perform at “merely” human level on tasks by predicting what a human would do, but with a sizeable speed advantage. Then the value learning scheme would involve arranging these human-level predictive pieces into a system that actually solves some important problem, without trying to optimize on the output of the pieces too hard. This caution might lead to fairly modest capabilities by superhuman AI standards, so we’d probably want to make the target problem as modest as possible while still solving value alignment. Perhaps use this to help design another AI, by asking “How do you get a powerful IRL-like value learner that does good things and not bad things?” On that note...

VI—IRL-like learning

Inverse reinforcement learning (IRL) means using a model of humans and their surroundings to learn human values by inferring the parameters of that model from observation. Actually, it means a specific set of assumptions about how humans choose actions, and variations like cooperative inverse reinforcement learning (CIRL) assume more sophisticated human behavior that can interact with the learner.

Choosing a particular way to model humans is tricky in the same way that choosing a set of human values is tricky—we want this model to be a simplified agent-shaped model (otherwise the AI might learn that the “model” is a physical description of the human and the “values” are the laws of physics), but our opinions about what model of humans is good are fuzzy, context-dependent, and contradictory. In short, there is no single True Model of humans to use in an IRL-like learning scheme. If we think of the choice of model as depending on human meta-preferences, then it’s natural that modeling inherits the difficulties of preferences.

Just to be clear, what I’m calling “meta-preferences” don’t have to give a rating to every single possible model of humans. The things endorsed by meta-preferences are more like simple patterns that show up within the space of human models (analogous to how I can have “a preference to keep healthy” that just cares about one small pattern in one small part of the world). Actual human models used for value learning will satisfy lots of different meta-preferences in different parts of the design.

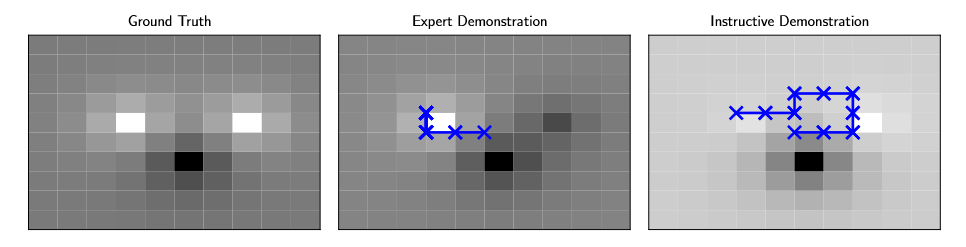

So let’s talk about CIRL. The human model used here is of a utility-maximizing planner who randomly makes mistakes (with worse mistakes being exponentially less likely, so-called Boltzmann rationality). This model doesn’t do a great job of matching how humans think of their preferences (although since any desired AI policy can be expressed as utility-maximization, we might alternatively say that it doesn’t do a great job of matching how humans think of their mistakes). In terms of Goodhart’s law properties, this is actually very similar to supervised learning from human labels, discussed earlier. The similarity isn’t just the untrustworthiness that comes from not capturing meta-preferences; there’s also something like adversarial examples. CIRL infers human utilities and then tries to maximize expected value, which can reproduce some of the properties of adversarial examples if human actions can have many different possible explanations. This is one of several reasons why the examples in the CIRL paper were tiny gridworlds.
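A stripped-down sketch of the “many explanations” problem, using Bayesian inference with a Boltzmann-rational human (a simplification, not the full CIRL game). The reward hypotheses and observations are hypothetical: the human picks coffee over tea five times, and one hypothesis also assigns an enormous value to a “weird plan” that was never on offer.

```python
import math


def boltzmann_likelihood(choice, options, reward, beta=2.0):
    """P(human picks `choice` from `options`), proportional to exp(beta * reward)."""
    z = sum(math.exp(beta * reward[o]) for o in options)
    return math.exp(beta * reward[choice]) / z


# Hypothetical reward hypotheses. "weird plan" was never available to the human.
HYPOTHESES = {
    "likes coffee": {"coffee": 1.0, "tea": 0.0, "weird plan": 0.0},
    "likes coffee, loves weird plan": {"coffee": 1.0, "tea": 0.0, "weird plan": 100.0},
    "likes tea": {"coffee": 0.0, "tea": 1.0, "weird plan": 0.0},
}
OBSERVATIONS = [("coffee", ["coffee", "tea"])] * 5  # five observed choices


def posterior(hypotheses, observations, beta=2.0):
    """Uniform prior times Boltzmann likelihoods, normalized."""
    unnorm = {}
    for name, reward in hypotheses.items():
        p = 1.0 / len(hypotheses)
        for choice, options in observations:
            p *= boltzmann_likelihood(choice, options, reward, beta)
        unnorm[name] = p
    total = sum(unnorm.values())
    return {name: p / total for name, p in unnorm.items()}


def best_action(hypotheses, post, actions):
    """Maximize expected reward under the posterior."""
    return max(actions, key=lambda a: sum(post[h] * hypotheses[h][a] for h in hypotheses))


post = posterior(HYPOTHESES, OBSERVATIONS)
best = best_action(HYPOTHESES, post, ["coffee", "tea", "weird plan"])
print(post)
print("robot chooses:", best)
```

The two coffee hypotheses explain the observations equally well, since the human never got to choose the weird plan, so the posterior splits between them and expected-value maximization picks the weird plan. The choice is driven by a part of the inferred utility function the data never constrained, much like an adversarial example.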

But what if we had a human model that actually seemed good?

This is a weird thought because it seems implausible. Compare with the even more extreme case: what if we hand-wrote a utility function that seemed good? The deflationary answer in this case is that we would probably be mistaken—conditional on looking at a hand-written utility function and thinking it seems good, it’s nevertheless more likely that we just mis-evaluated the empirical facts of what it would do. But now in the context of IRL-like value learners, the question is more interesting because such learners have their own internal standards for evaluating human reactions to their plans.

If our good-seeming model starts picking actions in the world, it might immediately start getting feedback that it interprets as evidence that it’s doing a bad job. Like a coffee-fetching robot that looks like it’s about to run over the baby, and so it notices humans going for the stop button. This is us discovering that we were wrong about what the value learner was going to do.

Alternatively, if it gets good feedback (or exactly the feedback it expected), it will carry on doing what it’s doing. If it’s fallen into one of those failure modes we talked about for supervised learning, this could mean it’s modeled humans badly, and so it not only acts unethically but interprets human reactions as confirmation of its worldview. But if, hypothetically, the model of humans actually is good (in some sense), it will occupy the same epistemic state.

From the humans’ perspective, what would running a good model look like? One key question is whether success would seem obvious in retrospect. There might be good futures where all the resolutions to preference conflicts seem neat and clear in hindsight. But it seems more likely that the future will involve moral choices that seem non-obvious, that similar people might disagree on, or that you might have different feelings about if you’d read books in a different order. One example: how good is it for you to get destructively uploaded? If a future AI decides that humans should (or shouldn’t) get uploaded, our feelings on whether this is the right decision might depend more on whether we trust the AI’s moral authority than on our own ability to answer this moral question. But now this speculation is starting to come unmoored from the details of the value learning scheme—we’ll have to pick this back up in the next post.

VII—Extra credit

Learning human biases is hard. What does it look like to us if there are problems with a bias-learning procedure?

An IRL-like value learner that has access to self-modifying actions might blur the line between a system that has hard-coded meta-preferences and one that has learned meta-preferences. What would we call Goodhart’s law in the context of self-modification?

Humans’ competent preferences could be used to choose actions through a framework like context agents. What kinds of behaviors would we call Goodhart’s law for context agents?

VIII—Conclusions

Absolute Goodhart[1] works well for most of these cases, particularly when we’re focused on the possibility of failures due to humans mis-evaluating what a value learner is going to do in practice. But another pattern that showed up repeatedly was the notion of meta-preference alignment. A key feature of value learning is that we don’t just want to be predicted, we want to be predicted using an abstract model that fulfills certain desiderata, and if we aren’t predicted the way we want, this manifests as problems like the value learner navigating conflicts between inferred preferences in ways we don’t like. This category of problem won’t make much sense unless we swap from Absolute to Relative Goodhart.

In terms of solutions to Goodhart’s law, we know of several cases where it seems to be evaded, but our biggest successes so far just come from restricting the search process so that the agent can’t find perverse solutions. We can also get good present-day results from imitating human behavior and interpreting it as solving our problems. Even when trying to learn human behavior, though, our training process will itself be a restricted search process, evading Goodhart’s law in that first way. However, the way of describing Goodhart’s law practiced in this post hints at another sort of solution. Because our specifications for what counts as a “failure” are somewhat restrictive, we can avoid failing without needing to know humans’ supposed True Values. Which sounds like a great thing to talk about in the next post.

[1] Recall that this is what I’m calling the framing of Goodhart’s law where we compare a proxy to our True Values.

It’s not obvious to me how the optimizer’s curse fits in here (if at all). If each of the evaluations has the same noise, then picking the action that the model says will have the highest rating is the right thing to do. The optimizer’s curse says that the model is likely to overestimate how good this “best” action is, but so what? “Mess up” conventionally means “the AI picked the wrong action”, and the optimizer’s curse is not related to that (unless there’s variable noise across different choices and the AI didn’t correct for that). Sorry if I’m misunderstanding.

Yeah, this is right. The variable uncertainty comes in for free when doing curve fitting—close to the datapoints your models tend to agree, far away they can shoot off in different directions. So if you have a probability distribution over different models, applying the correction for the optimizer’s curse has the very sensible effect of telling you to stick close to the training data.

Oh, yup, makes sense thanks

np, I’m just glad someone is reading/commenting :)