EIS VI: Critiques of Mechanistic Interpretability Work in AI Safety

Part 6 of 12 in the Engineer’s Interpretability Sequence.

Thanks to Chris Olah and Neel Nanda for discussions and comments. In particular, I am thankful to Neel Nanda for correcting a mistake I made in understanding the arguments in Olsson et al. (2022) in an earlier draft of this post.

TAISIC = “the AI safety interpretability community”

MI = “mechanistic interpretability”

What kind of work this post focused on

TAISIC prioritizes a relatively small set of problems in interpretability relative to the research community at large. This work is not homogenous, but a dominant theme is a focus on mechanistic, circuits-style interpretability with the end goals of model verification and/or detecting deceptive alignment.

There is a specific line of work that this post focuses on. Key papers from it include:

A Mathematical Framework for Transformer Circuits (Elhage et al., 2021)

In-context Learning and Induction Heads (Olsson et al., 2022)

Progress measures for grokking via mechanistic interpretability (Nanda et al., 2023)

…etc.

And the points in this post will also apply somewhat to the current research agendas of Anthropic, Redwood Research, ARC, and Conjecture. This includes Causal Scrubbing (Chan et al., 2022) and mechanistic anomaly detection (Christiano, 2022).

Most (all?) of the above work is either from Distill or inspired in part by Distill’s interpretability work in the late 2010s.

To be clear, I believe this research is valuable, and it has been foundational to my own thinking about interpretability. But there seem to be some troubles with this space that might be keeping it from being as productive as it can be. Now may be a good time to make some adjustments to TAISIC’s focus on MI. This may be especially important given how much recent interest there has been in interpretability work and how there are large recent efforts focused on getting a large number of junior researchers working on it.

Four issues

This section discusses four major critiques of the works above. Not all of these critiques apply to all of the above, but for every paper mentioned above, at least one of the critiques below apply to it. Some but not all of these examples of papers exhibiting these problems will be covered.

Cherrypicking results

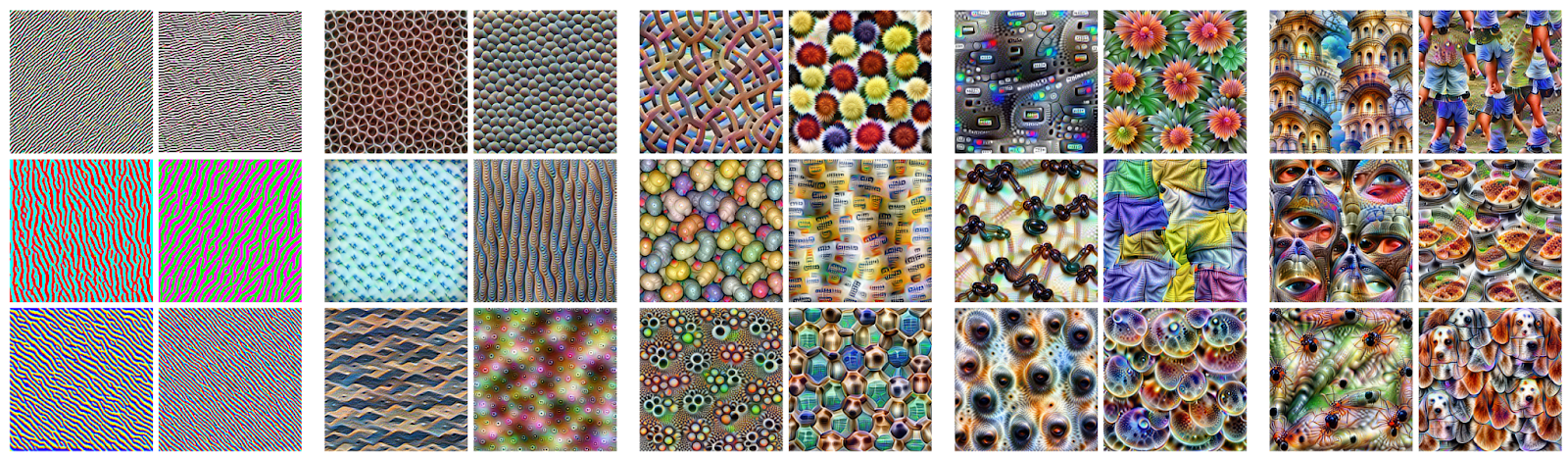

As discussed in EIS III and the Toward Transparent AI survey (Räuker et al., 2022), cherrypicking is common in the interpretability literature, but it manifests in some specific ways in MI work. It is very valuable for papers to include illustrative examples to build intuition, but when a paper makes such examples a central focus, cherrypicking can make results look better than they are. The feature visualization (Olah et al., 2017) and zoom in (Olah et al., 2020) papers have examples of this. Have a look at the cover photo for (Olah et al., 2017).

From Olah et al., (2017)

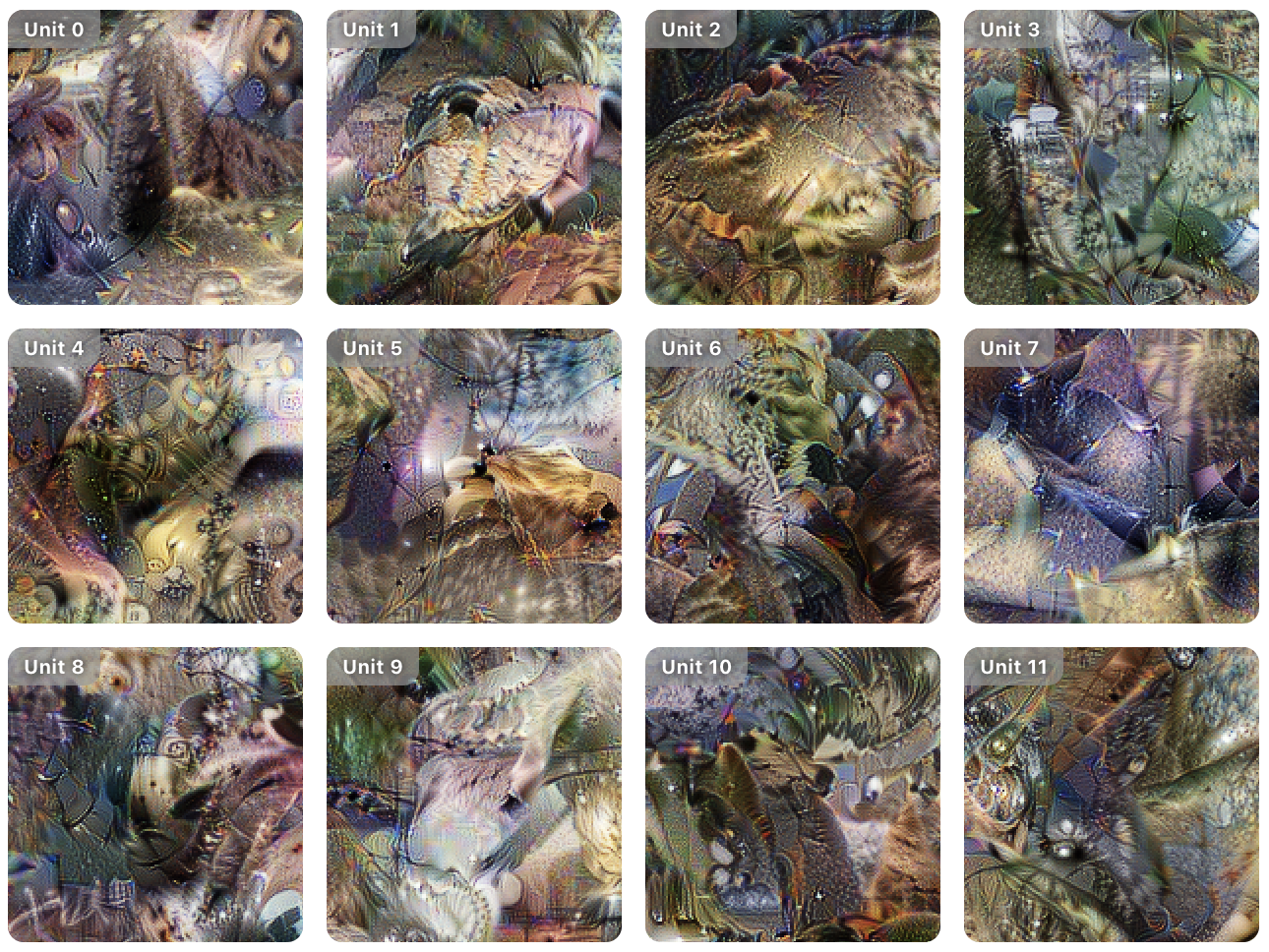

These images seem easy to describe and form hypotheses from. But instead of these, try going to OpenAI microscope and looking at some random visualizations. For example, here are some from a deep layer in an Inception-v4.

From this link.

As someone who often works with feature visualizations, I can confirm that these visualizations from OpenAI microscope are quite typical. But notice how they seem quite a bit less ‘lucid’ than the ones in the cover photo from Olah et al., (2017).

Of course, many papers present the rosiest possible examples with their Figure 1 – I am guilty of this myself. And there is an argument to be made for this because it can help a reader gain quick intuition. But the main danger is when a paper structures its arguments around cherrypicked example after example without rigorous evaluation or multiple hypothesis testing corrections.

From Olah et al., (2017)

Consider this figure in which Olah et al., (2017) selects a single neuron, presents some visualizations, and argues that the feature visualizations show cats, car hoods (looks more like pool tables to me though), and foxes.

In this same example, these interpretations are then validated by using a different interpretability tool – test set exemplars. This begs the question of why we shouldn’t just use test set exemplars instead. And when Borowski et al, (2020) tested exemplars versus feature visualizations on a neural response prediction task using humans, the exemplars did better! Remember the gorilla parable from EIS II – it’s important not to grade different types of methods on a curve.

In this particular case, it is a great hypothesis that the neuron of interest is polysemantic for these four types of features. But the evidence provided does not show that this is a complete or robust understanding of the neuron. It could just be an interpretability illusion (Bolukbasi et al., 2021). And regardless, cherrypicked results are not enough to show that this understanding of the neuron is practical. And cherrypicking might be directly harmful via Goodharting. As discussed in EIS III, normalized cherrypicking will tend to guide progress toward methods that perform well in the best case – not the average or worst case.

Networks are usually big and contain many neurons and weights. These include many frivolous neurons (Casper et al., 2020) and weights (Frankle et al., 2018). And all of these neurons and weights mean that there are many different subnetworks to study. Olah et al. (2020) pick a number of these examples, do not do any correction for testing many hypotheses, present select ones as coherent circuits, and pontificate about how they work. We see examples like this.

From Olah et al. (2020)

Here, the neurons look like they may wire together in a way that’s important for curve detection, and the weights connecting the circuit make this seem plausible. And this is a great hypothesis. But picking neurons and weights that wire together and then constructing a story of what is happening is a fraught way to draw conclusions. We know of examples in which this type of methodology fails to produce explanations that generalize (Hoffmann et al. (2021), Bolukbasi et al., (2021)), a point that Olah et al. (2020) acknowledge.

At the risk of being overly blunt, I hope to be clear in expressing that this is not an acceptable standard for work that is touted for the purpose of the safety of real people in the real world.

The point here is to highlight some problems with cherrypicking but not to claim that the methods from papers like (Olah et al., 2017) and (Olah et al., 2020) are incapable of being useful. In fact, these papers and subsequent ones gave a thorough case that their methods were useful for something. However, that something was the very simple task of characterizing curve detection. And this leads to the next point.

Toy models and tasks

The key reason we need good interpretability tools is for spotting issues with AI systems in high-stakes settings. Given how daunting this task is, it seems incongruous how the mechanistic interpretability literature consistently avoids applying tools to problems of engineering relevance and usually opts instead for studying toy (sub)networks trained on toy tasks.

Cammarata et al. (2020) studied curve detection. The circuits that they concluded were responsible for detecting curves did so by detecting edges from pixels and curves from edges in exactly the way you would expect.

Elhage et al. (2021) and Olsson et al. (2022) studied attention heads in transformers. In particular, they focus on “induction heads” which aid in in-context learning by retrieving information from previous mentions of the current token. Abstractly, induction heads perform a task that can be understood by a 2-state finite state machine that either matches a token and returns the next or fails to match a token and moves to the previous one. They study induction heads quite directly in small models but much more indirectly in large ones, using mostly correlational evidence and analogy plus some interventional ones which they described as offering “weak” evidence.

Wang et al. (2022) studied the task of indirect object identification. They analyzed GPT2-small which is notably not a toy model. But the task was cherrypicked. Consider a next-token-predicton task such as “When Mary and John went to the store, John gave a bottle of milk to ___”. The hypothesis from Wang et al. (2022) about how the network mechanistically identifies indirect objects was described as “1. Identify all previous names in the sentence (Mary, John, John). 2. Remove all names that are duplicated (in the example above: John). 3. Output the remaining name.” But this is not sufficient for indirect object identification in English. For example, consider “Alice reminded Bob that it was Charlie’s birthday and that Charlie wanted roller skates. So Bob gave a gift of roller skates to ___.” The correct completion is “Charlie,” not “Alice.” And I checked that GPT2-small indeed does get this completion right. So the real task studied here is simpler than general IOI. It is IOI on a restricted set of sentences that they used, and it leaves the model’s IOI capabilities outside of this set unexplained. Maybe their circuit was a somewhat frivolous one...(Ramanujan et al., 2020)

Nanda et al. (2023) studied a small one-layer transformer trained to perform modular addition.

One concern with these works is the process by which these tasks were selected. None of the tasks seem uniquely compelling for AI safety. And this prompts the question – why were these particular tasks selected for study? The papers above did not provide details on task selection or argue that the chosen task was particularly relevant to alignment. This suggests that some of the above results could have been effectively p-hacked. Moving forward, MI researchers should provide details on task selection to avoid this problem.

Further, the problems being studied in these works have trivial solutions which give MI researchers an unrealistic advantage in finding mechanistic explanations. In these cases, it was trivial for a human to generate mechanistic hypotheses. But any practical problem where this can be done is not a deep learning problem (Rudin, 2019). The reason why we use neural networks is precisely that they are good at learning complex solutions to nontrivial problems that we cannot devise programmatic solutions for. So we can expect that failures in advanced AI systems in high-stakes settings will not be due to trivial mechanisms. So long as MI remains focused on explaining how networks handle toy tasks, there will be a fundamental gap between what MI research is accomplishing and what we need it to accomplish.

A lack of scalability

There is a dual issue to how MI research tends to focus on toy tasks and models. The norm is not to just solve very simple, convenient problems, but to do so in one of the least scalable ways possible – through large amounts of effort from human experts.

One of the reasons for this approach was discussed in EIS V. The hard part of MI is generating good mechanistic hypotheses, and this requires a form of program synthesis, induction, or language translation which are challenging. But by only focusing on toy problems and having humans generate mechanistic hypotheses, MI research has avoided confronting this problem. This work has failed to scale to challenging problems, and might always fail to scale because of this dependency on hypothesis generation.

There may be a way forward. It might be possible to develop good ways of training intrinsically interpretable networks and translating them into programs in certain domain-specific languages. This could be extremely valuable. However, automated MI has been discussed for years within TAISIC, and we haven’t seen significant work in this direction yet. But we should expect this to be hard for the same reason that programming language translation is hard (Qiu, 1999).

But given that good, automated mechanistic hypothesis generation seems to be the only hope for scalable MI, it may be time for TAISIC to work on this in earnest. Because of this, I would argue that automating the generation of mechanistic hypotheses is the only type of MI work TAISIC should prioritize at this point in time. See the previous post, EIS V, for a discussion of some existing non-TAISIC work in neurosymbolic AI related to this. Some of these works have made progress on automated hypothesis generation and may be making more progress on TAISIC’s MI goals than TAISIC. And later, EIS XI will argue that one of the best subproblems for this to work on may involve intrinsic interpretability tools.

Failing to do useful things

The status quo

Recall that the key goal for interpretability tools is to help us find problems in AI systems – especially insidious ones. This is a subset of all engineering-relevant things that practitioners might want to do with AI systems. Given how central MI work has been to AI safety, it is a little bit disappointing that it has failed to produce competitive tools that engineers can use to solve real world problems. If MI is a good way to tackle the alignment problem, shouldn’t we be having success with applications? One of the reasons that not much MI research is applied to non-toy problems may be that current approaches to MI may just be ill-equipped to produce competitive techniques.

EIS III discussed how most work in interpretability relies on intuition-based or weak ad-hoc evaluation. And MI is no exception. Cammarata et al. (2020), Elhage et al. (2021), Olsson et al. (2022), Wang et al. (2022), and Nanda et al. (2023) all do some amount of pontificating about what a network is doing while demonstrating fairly little engineering value to the interpretations. It is worth highlighting in particular one method of evaluation from Elhage et al. (2022). They claimed to have trained a more interpretable network based on an experiment in which they measured how easily human subjects could come up with mechanistic hypotheses from looking at dataset exemplars using their network versus a control. This is a textbook example of the sort of evaluation by intuition discussed in EIS III.

Relatedly, when doing MI work, we should be very cautious when researchers claim to identify a subnetwork that seems to be performing a specific task. In general, it’s easy to find circuits in networks that do arbitrary things without doing anything more impressive than just a form of performance-guided network compression. Ramanujan et al. (2020) demonstrate how networks can be “trained” on tasks just by getting rid of all the parameters that don’t help task performance and then keeping the circuit that remains.

It is not ideal to paint with a broad brush when discussing the MI literature. It is important to be just as clear about the great things that have been done as the flawed ones. For example, Wang et al. (2022) adopted a useful hierarchical approach paired with good ablation tests while Cammarata et al. (2020) and Nanda et al. (2023) were particularly thorough to the point of leaving little room for plausible doubt for how their (sub)networks worked. And all of the works discussed in this post do indeed provide fairly compelling cases for their mechanistic hypotheses. In fact, these works should have gone further than they did. For example, these papers could have done more work on debugging or implanting backdoors. Wang et al. (2022) come closest to doing this in a competitive way. They found that ablating their circuit for indirect object identification harmed task performance but still left some to be explained. This type of thing should continue, ideally with non-toy problems.

We need rigorous evaluation

As discussed in EIS III, we need benchmarks to measure how competitively useful interpretability tools are for helping humans or automated processes (1) make novel predictions about how the system will handle certain inputs, (2) control what a system does by guiding manipulations to its parameters or (3) abandon a system performing a nontrivial task and replace it with a simpler reverse-engineered alternative. EIS III discusses a recent paper on benchmarking (Casper et al., 2023)and the next post in this sequence will propose a challenge for mechanists.

Exploratory work is good and should continue to be prioritized in TAISIC. But it alone cannot solve real problems. Benchmarking seems to be a solution for making interpretability research more engineering-relevant because it concretizes research goals, gives indications of what approaches are the most useful, and spurs community efforts. For further discussion on the importance of benchmarking for progress in AI, see EIS III and Hendrycks and Woodside (2022).

Finally, recall the gorilla parable from EIS II about not grading techniques on curves...

Imagine that you heard news tomorrow that MI researchers from TAISIC meticulously studied circuits in a way that allowed them to…

Reverse engineer and repurpose a GAN for controllable image generation.

Done by Bau et al. (2018).

Identify and successfully ablate neurons responsible for biases and adversarial vulnerabilities.

Done by Ghorbani et al. (2020).

Identify and label semantic categories of inputs that networks tend to fail on.

Done by Wiles et al. (2022).

Debug a model well enough to greatly reduce its rate of misclassification in a high-stakes type of setting.

Done by Ziegler et al. (2022)

Identify hundreds of interpretable copy/paste attacks.

Done by Casper et al. (2022)

Rediscover interpretable trojans in a network

Done by several of the non-mechanistic techniques benchmarked by Casper et al. (2023)

How shocked and impressed would you be?

This underscores the importance of not grading different techniques on different curves. Interpretability tools are just means to an end. To the extent that we see more rigorous benchmarking of interpretability tools in the coming years, we might find that MI approaches don’t compete well. And as will be discussed in EIS VIII, EIS IX, and EIS XI, MI might not be the most promising way to make progress on the problems we care about. But I would welcome being proven wrong on this!

Re: “We just need to find out what the hell is going on.”

Hell yeah we do. We absolutely need to do exploratory work, play with toy models, experiment with new concepts, etc. Despite how critical this post is on the works that do this, it should be clear that this is not a criticism of exploratory work – engineers do this kind of thing all the time. It is worth clarifying the nuance behind what this post is arguing.

First, exploratory work, toy problems, etc. should not constitute the lion’s share of MI research effort as it does today. To date, the MI research community has not produced many useful new regularizers, diagnostic techniques, debugging techniques, editing techniques, architectures, etc. It seems good to work much more on this.

Second, when we do exploratory work, we should be honest that we are doing them, and we should be clear about the difference between this work and the type of engineering work that will be directly relevant to safety. When doing exploratory work, one should always be able to explain how it could plausibly lead to methods of practical value, and if such a story hinges on things that are not being worked on, maybe they should be worked on.

Re: “We need interpretability for verification.”

Some MI researchers describe part of their vision for MI as helping with verification. Certainly, it would be nice for AI safety if we could make good mechanistic arguments about how a model would always/never do certain things. But in practice, it seems hard to do something like this reliably with rigor or precision. There is already a lot of work that tries to make formal guarantees about neural networks. Despite being from 2018, Huang et al. (2018) offer an overview of these methods that I think is helpful for understanding key approaches. And while these methods have potential, they too have not made it into any mainstream engineering toolbox.

A key thing to remember when trying to make forall statements about neural networks via MI is that these systems regularly behave in completely unexpected ways as the result of small, often imperceptible adversarial perturbations. So it seems unlikely that interpretability work will get us very far with verification.

Questions

Do you agree or disagree that automating mechanistic hypothesis generation is the only hope for mechanistic interpretability to scale? Why?

Do you agree or disagree that we should work to benchmark mechanistic interpretability tools? Why?

What lessons do you think the mechanistic interpretability community should learn from Ramanujan et al. (2020)? For example, how concerning is this for the causal scrubbing agenda? Could this have explained the outcome of the IOI paper?

Are you or others that you know working on solving any of the problems discussed here?

What is your steelman for current mechanistic interpretability research?

Anyway, the last 4 sequence posts have been critical. But the rest of the sequence will be constructive :)

- Shallow review of live agendas in alignment & safety by (27 Nov 2023 11:10 UTC; 335 points)

- Against Almost Every Theory of Impact of Interpretability by (17 Aug 2023 18:44 UTC; 328 points)

- Shallow review of technical AI safety, 2024 by (29 Dec 2024 12:01 UTC; 180 points)

- Takeaways from the Mechanistic Interpretability Challenges by (8 Jun 2023 18:56 UTC; 94 points)

- Shallow review of live agendas in alignment & safety by (EA Forum; 27 Nov 2023 11:33 UTC; 76 points)

- The Engineer’s Interpretability Sequence (EIS) I: Intro by (9 Feb 2023 16:28 UTC; 46 points)

- Takeaways from a Mechanistic Interpretability project on “Forbidden Facts” by (15 Dec 2023 11:05 UTC; 33 points)

- EIS XII: Summary by (23 Feb 2023 17:45 UTC; 18 points)

- 's comment on Takeaways from the Mechanistic Interpretability Challenges by (10 Jun 2023 18:50 UTC; 5 points)

Mechanistic Interpretability exacerbates AI capabilities development — possibly significantly.

My uninformed guess is that a lot of the Mechanistic Interpretability community (Olah, Nanda, Anthropic, etc) should (on the margin) be less public about their work. But this isn’t something I’m certain about or have put much thought into.

Does anyone know what their disclosure policy looks like?

Doesn’t Olah et al (2017) answer this in the “Why visualize by optimization” section, where they show a bunch of cases where neurons fire on similar test set exemplars, but visualization by optimization appears to reveal that the neurons are actually ‘looking for’ specific aspects of those images?

I buy this value—FV can augment examplars. And I have never heard anyone ever say that FV is just better than examplars. Instead, I have heard the point that FV should be used alongside exemplars. I think these two things make a good case for their value. But I still believe that more rigorous task-based evaluation and less intuition would have made for a much stronger approach than what happened.

“Automating” seems like a slightly too high bar here, given how useful human thoughts are for things. IMO, a better frame is that we have various techniques for combining human labour and algorithmic computation to generate hypotheses about networks of different sizes, and we want the amount of human labour required to be sub-polynomial in network size (e.g. constant or log(n)).

I think that the best case for full automation is you get the best iteration speeds, and iteration matters more than virtually anything else for making progress.

I have been wondering if neural networks (or more specifically, transformers) will become the ultimate form of AGI. If not, will the existing research on Interpretability, become obsolete?

I do not worry a lot about this. It would be a problem. But some methods are model-agnostic and would transfer fine. Some other methods have close analogs for other architectures. For example, ROME is specific to transformers, but causal tracing and rank one editing are more general principles that are not.

It’s good to see some informed critical reflection on MI as there hasn’t been much AFAIK. It would be good to see reactions from people who are more optimistic about MI!

All the critiques focus on MI not being effective enough at its ultimate purpose—namely, interpretability, and secondarily, finding adversaries (I guess), and maybe something else?

Did you seriously think through whether interpretability, and/or finding adversaries, or some specific aspects or kinds of either interoperability or finding adversaries could be net negative for safety overall? Such as what was contemplated in “AGI-Automated Interpretability is Suicide”, “AI interpretability could be harmful?”, and “Why and When Interpretability Work is Dangerous”. However, I think that none of the authors of these three posts is an expert in interpretability or adversaries, so it would be really interesting to see your thinking on this topic.