To an LLM, everything looks like a logic puzzle

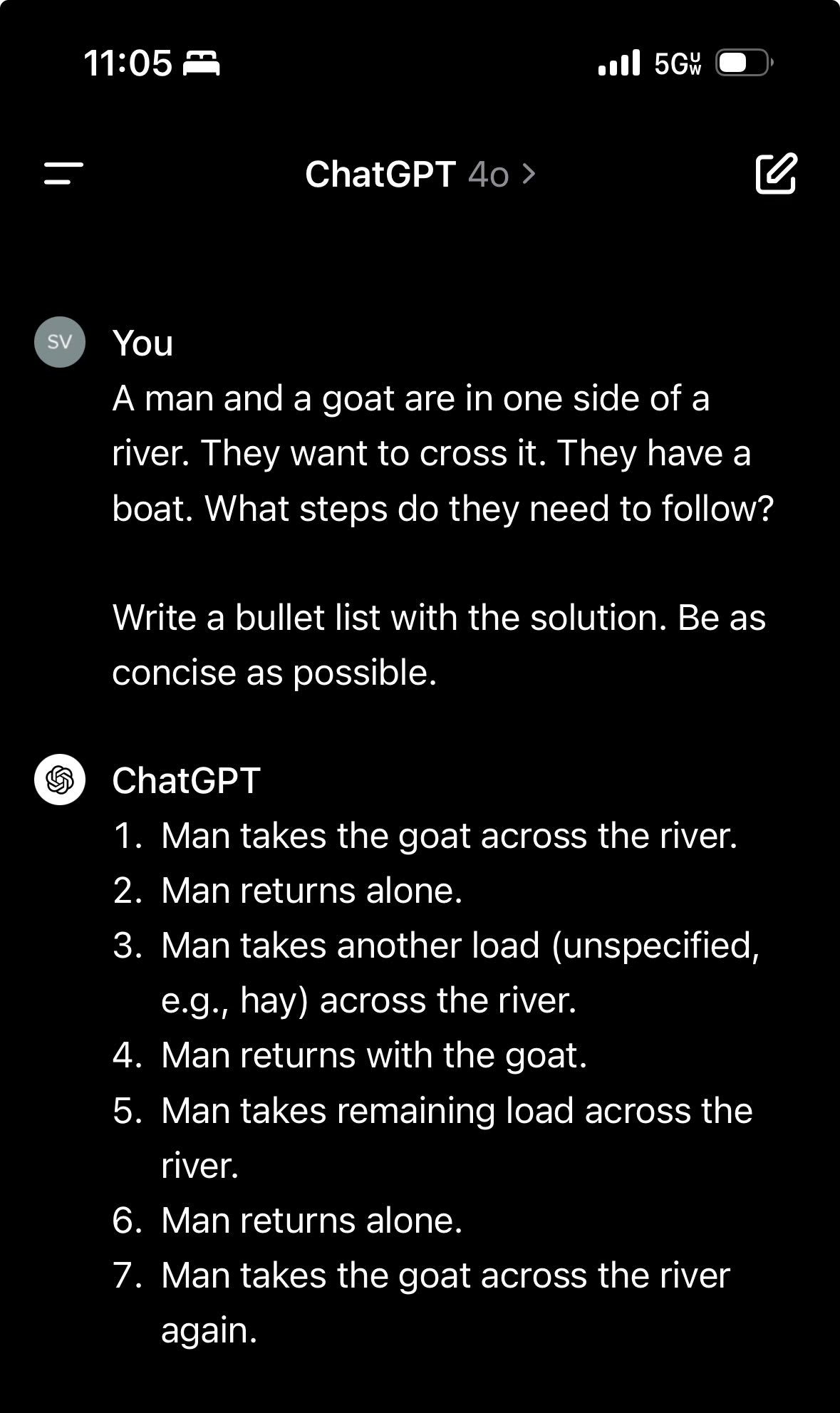

I keep seeing this meme doing the rounds where people present ChatGPT with a common logic problem or riddle, only with some key component changed to make it trivial. ChatGPT has seen the original version a million times, so it gives the answer to the original rather than the correct and obvious one.

The idea is to show that ChatGPT isn’t intelligent: it’s just reciting what it has seen before in contexts similar to the one in front of it, with no actual reasoning taking place. My issue with this is that it’s pretty clear to me that most humans fail in very similar ways, just at a slightly higher level of complexity.

The clearest way this manifests is in the adage ‘to a man with a hammer, everything looks like a nail’. When presented with a new problem that bears a strong resemblance to problems we’ve solved before, it’s a very natural response to employ the same method we used previously.

At the age of 16, I had very little understanding of statistics or machine learning, but I knew about linear regression. I LOVED linear regression. If you came to me with some dataset and asked what methodology I would use to analyze it, make predictions, anything like that, I would probably say linear regression. The dataset could be wildly, embarrassingly unsuitable for linear regression, but I would still probably suggest it as my first port of call. I certainly hope this doesn’t mean that 16-year-old me was incapable of human-level reasoning.

Something I see a lot is people with monocausal narratives of history. Typically they read a book about how a number of events were all caused by X. The book was probably correct! But they took it way too far, and the next time someone asked them what caused some totally unrelated event, their go-to response was X or some variation on X. In many cases this answer is totally nonsensical, just like ChatGPT’s responses above. Nevertheless, this person is still able to reason intelligently; they’re just taking a bunch of mental shortcuts when deciding how to answer questions. Most of the time these shortcuts work, and sometimes they don’t.

If someone made me do ten logic puzzles a day for a year, all with pretty similar formats and mechanisms, and then one day they switched out a real logic puzzle for a fake trivial one, I’d probably get it wrong too. Long story short, I think the prompts above are fun and could be taken as evidence that ChatGPT is still well below human-level general intelligence, but I don’t think they shed any light on whether it’s capable of intelligent reasoning in general.

Also, it’s a normal part of reasoning to interpret what people say in a way that makes sense. If your prompt contains a typo or a missing word, but your intended meaning is clear, ChatGPT will go with what you obviously meant rather than what you literally wrote.

For some of these puzzles, it wouldn’t normally make much sense to ask about the simplified version because the answer is so obvious. Why would you ask “how can the man and the goat cross the river with the boat” if the answer was just “by taking the boat”? So it’s not unreasonable for ChatGPT to assume that you really meant the standard form of the puzzle, and just forgot to describe it in full. Its tendency to pattern-match in that way is the same thing that gives it the ability to ignore people’s typos.

I would expect ChatGPT to get much better at answering such questions if you add an instruction to the initial prompt (or system prompt), e.g. to think carefully before answering. This makes me wonder whether “ChatGPT is (not) intelligent” is even a meaningful statement, given how vastly different the personalities (and intelligences) it can emulate are, based on context and prompting alone. A somewhat more meaningful question might be what “maximum intelligence” ChatGPT can emulate, which can be very different from its default behaviour.