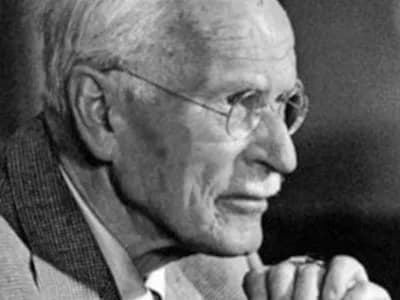

Carl Jung’s theories of individuation and the collective unconscious focus on the individual experience in relation to a larger collective unconscious shared by humanity. Individuation is the journey of discovering one’s true identity; to do so, a person must become aware of the unconscious psychological patterns, symbols, and archetypes stored within them. The collective unconscious, by contrast, is a vast repository of ancient symbols and myths shared across many cultures around the world. In Jungian psychology, these archetypes are seen as the foundation for creativity and imagination. By examining how these archetypes interact with each other in various contexts, one can gain insight into one’s personal life journey.

Why has AI alignment research (in general) failed to draw on Carl Jung’s theories of archetypes, individuation, and the collective unconscious to identify potential gaps in the field?

Probably because Carl Jung’s theories are mostly unfalsifiable woo…?

Hello, can you specify which of them are unfalsifiable woo?

Archetypes, individuation, the collective unconscious, etc.

Why describe them as unfalsifiable woo? Do you have a basis you can share?

Come now, I know you’re able to do a search for “criticisms of Jungian psychology” or similar. This sort of objection is hardly new. (Surely you’re not just right now, for the first time, encountering such a view? If you are—well, you’ve got a lot of reading to do!)

In any case, it seems to me that you’ve got your answer, regardless of whether you agree with it: Carl Jung is not popular in alignment research because the prevailing view is that his theories are not even remotely rigorous enough to be relevant.

Thanks for the much clearer answer. I tried searching the forum for discussions of this kind about Carl Jung’s theories but found very little, which is why I asked here. In my practice as a business professional, though, his theories were very helpful in detecting fraudulent behaviour and gauging workers’ capacity to make errors. I just see it as something that alignment research might need to reconsider, especially the idea that humans have a subconscious layer in their thinking and are not merely goal/reward driven.

The idea that individuals are driven by subconscious or unconscious instincts is a well-established fact of psychology. The idea of a collective unconscious, in the way that Jung described it, is the unfalsifiable woo.

Thanks for your comment. Can you elaborate on why you believe Jung’s theory of the collective unconscious is unfalsifiable woo?

It is a belief that doesn’t pay rent. Let’s assume that there is such a thing as a collective unconscious, which is the source of archetypes. What additional predictions does this enable? Why should I add the notion of a collective unconscious to my existing psychological theory? Why shouldn’t I trim away this epicycle with Occam’s Razor?

I know this will not answer your questions directly, but there are currently patterns in AI that appear well suited to being debugged with Jungian analysis, like this one:

The davinci model can conjure sophisticated stories out of the tokens Leilan and petertodd.

https://twitter.com/SoC_trilogy/status/1638016382037110784?t=XvdXSJLK2vbNIemZqSWaZA&s=19

I fail to see how Jungian analysis can actually debug LLMs better than the approach that Robert_AIZI used in their analysis of the “SolidGoldMagikarp” glitch token.

However insightful Jung was or wasn’t, his ideas are not precise. That is, none of us knows how to reduce them to math, or how to apply them in practice to non-human systems. Almost all the work needed would be in that step, and it isn’t clear why starting from Jung would help more than starting from any other thinker who pondered the workings of the mind in the past several thousand years.

As an aside, if anyone did know how to do any of this, I think I’d much rather live in a world where an AI was aligned to think of itself as being in Rawls’ original position than one that thought of itself as an individuated Jungian archetype. I think both would be disastrous, but at least the former might be so indecisive that it fails to do too much bad stuff.

ChatGPT-4’s answer to the question:

AI alignment research has predominantly focused on the technical and algorithmic aspects of aligning AI systems with human values and intentions, as well as ensuring AI systems are robust and safe. There are a few reasons why AI alignment research may not have extensively drawn on Carl Jung’s theories of archetypes, individuation, and the collective unconscious:

Different domains: AI alignment research is primarily a technical field, focused on computer science, mathematics, and engineering. Carl Jung’s theories are grounded in psychology and the human experience, which may not directly intersect with the core focus of AI alignment.

Complexity of human psychology: While Jung’s theories provide valuable insights into the human psyche, they don’t offer a simple, straightforward way to translate these insights into AI alignment algorithms. Human psychology is complex and nuanced, and integrating these concepts into AI systems may be challenging.

Lack of empirical evidence: Although Jung’s theories are influential and thought-provoking, they have been criticized for a lack of empirical evidence and testability. AI alignment research often demands rigorous, evidence-based methods, which may not align well with the more theoretical and speculative nature of Jung’s work.

Scalability: AI alignment research aims to create AI systems that can scale to handle increasingly complex tasks and environments. Incorporating Jung’s theories into AI alignment may introduce additional complexity that is difficult to scale or generalize across different domains and tasks.

However, this doesn’t mean that Carl Jung’s theories cannot contribute to AI alignment research in any way. There may be value in exploring how these theories can inform our understanding of human values and behavior, which can be relevant when designing AI systems that must interact with and understand humans. Additionally, studying the collective unconscious and archetypes could inspire new approaches to AI alignment that account for the cultural and historical context in which AI systems are developed and deployed.

Follow-up question:

But are Jungian archetypes not enough of a bridge to the thought patterns that reside in our psyche? I believe a model can be trained with enough archetypal data...

ChatGPT-4’s answer:

It is true that Jungian archetypes might offer some insights into human thought patterns, and it is possible that a model could be trained with archetypal data to better understand human psychology. However, there are still some challenges to consider:

Representing archetypes: Archetypes are abstract concepts that can manifest in various ways across different cultures and individuals. Finding a suitable representation of archetypes in a form that can be used as input for an AI model may be difficult.

Incompleteness: Jung’s theories and archetypes may not cover the entire spectrum of human thought patterns and behavior. While they can provide valuable insights, relying solely on Jungian archetypes may limit the AI’s understanding of the nuances and complexities of human psychology.

Subjectivity and interpretation: The interpretation of archetypes and their relevance to specific situations can be highly subjective. This subjectivity may pose challenges in training an AI model that can accurately and consistently identify and apply archetypes in a meaningful way.

Integration with existing AI alignment approaches: AI alignment research already includes various methods and approaches to understand and align AI systems with human values. Integrating Jungian archetypes with these existing approaches may require significant effort and rethinking of the underlying principles and techniques.

That being said, exploring the potential of Jungian archetypes in AI alignment research could lead to interesting insights and novel approaches. It may be worthwhile to investigate how these archetypes can inform our understanding of human values and behavior, as well as how they can be incorporated into AI systems in a meaningful way. This could involve interdisciplinary research, combining expertise from psychology, computer science, and other fields to develop innovative solutions to AI alignment challenges.