If a technology may introduce catastrophic risks, how do you develop it?

It occurred to me that the Wright Brothers’ approach to inventing the airplane might make a good case study.

The catastrophic risk for them, of course, was dying in a crash. This is exactly what happened to one of the Wrights’ predecessors, Otto Lilienthal, who attempted to fly using a kind of glider. He had many successful experiments, but one day he lost control, fell, and broke his neck.

Believe it or not, the news of Lilienthal’s death motivated the Wrights to take up the challenge of flying. Someone had to carry on the work! But they weren’t reckless. They wanted to avoid Lilienthal’s fate. So what was their approach?

First, they decided that the key problem to be solved was one of control. Before they even put a motor in a flying machine, they experimented for years with gliders, trying to solve the control problem. As Wilbur Wright wrote in a letter:

When once a machine is under proper control under all conditions, the motor problem will be quickly solved. A failure of a motor will then mean simply a slow descent and safe landing instead of a disastrous fall.

When actually experimenting with the machine, the Wrights would sometimes stand on the ground and fly the glider like a kite, which minimized the damage any crash could do:

When they did go up in the machine themselves, they flew relatively low. And they did all their experimentation on the beach at Kitty Hawk, so they had soft sand to land on.

All of this was a deliberate, conscious strategy. Here is how David McCullough describes it in his biography of the Wrights:

Well aware of how his father worried about his safety, Wilbur stressed that he did not intend to rise many feet from the ground, and on the chance that he were “upset,” there was nothing but soft sand on which to land. He was there to learn, not to take chances for thrills. “The man who wishes to keep at the problem long enough to really learn anything positively must not take dangerous risks. Carelessness and overconfidence are usually more dangerous than deliberately accepted risks.”

As time would show, caution and close attention to all advance preparations were to be the rule for the brothers. They would take risks when necessary, but they were no daredevils out to perform stunts and they never would be.

Solving the control problem required new inventions, including “wing warping” (later replaced by ailerons) and a tail designed for stability. They had to discover and learn to avoid pitfalls such as the tail spin. Once they had solved this, they added a motor and took flight.

Inventors who put power ahead of control failed. They launched planes hoping they could be steered once in the air. Most well-known is Samuel Langley, who had a head start on the Wrights and more funding. His final experiment crashed into the lake. (At least they were cautious enough to fly it over water rather than land.)

The Wrights invented the airplane using an empirical, trial-and-error approach. They had to learn from experience. They couldn’t have solved the control problem without actually building and testing a plane. There was no theory sufficient to guide them, and what theory did exist was often wrong. (In fact, the Wrights had to throw out the published tables of aerodynamic data, and make their own measurements, for which they designed and built their own wind tunnel.)

Nor could they create perfect safety. Orville Wright crashed a plane in one of their early demonstrations, severely injuring himself and killing the passenger, Army Lt. Thomas Selfridge. The excellent safety record of commercial aviation was only achieved incrementally, iteratively, over decades.

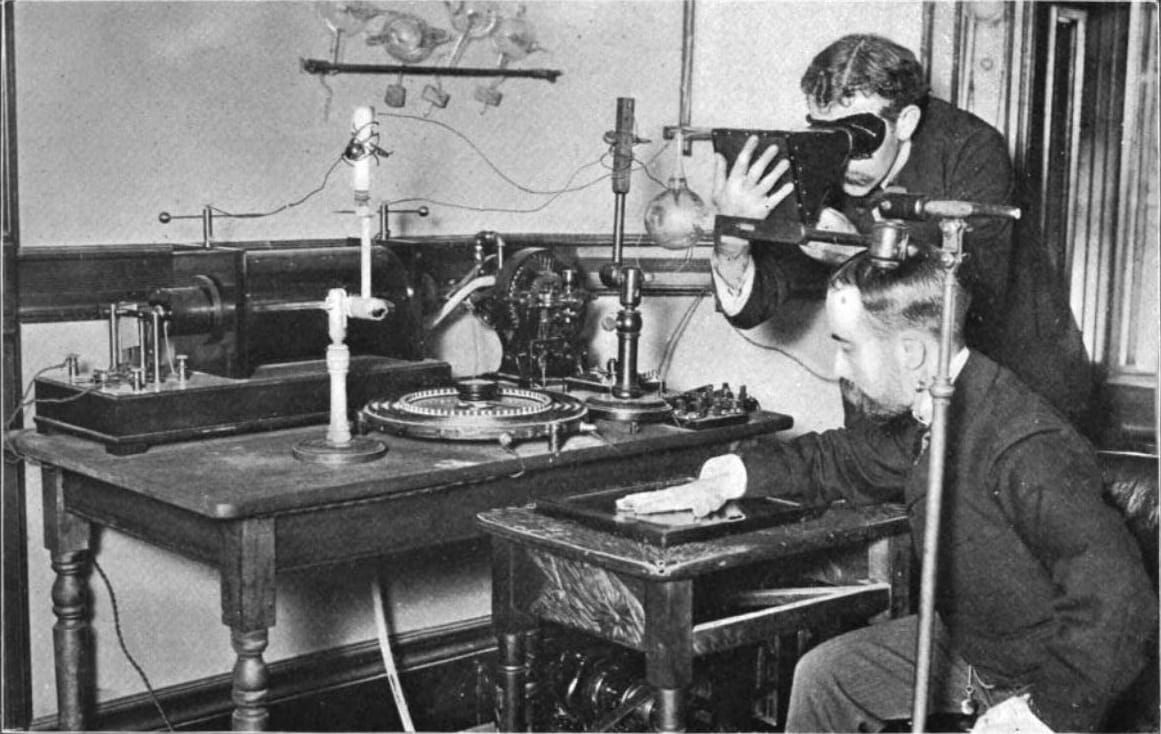

And of course the Wrights were lucky in one sense: the dangers of flight were obvious. Early X-ray technicians, in contrast, had no idea that they were dealing with a health hazard. They used bare hands to calibrate the machine, and many of them eventually had to have their hands amputated.

But even after the dangers of radiation were well known, not everyone was careful. Louis Slotin, physicist at Los Alamos, killed himself and sent others to the hospital in a reckless demonstration in which a screwdriver held in the hand was the only thing stopping a plutonium core from going critical.

Exactly how careful to be—and what that means in practice—is a domain-specific judgment call that must be made by experts in the field, the technologists on the frontier of progress. Safety always has to be traded off against speed and cost. So I wouldn’t claim that this exact pattern can be directly transferred to any other field—such as AI.

But the Wrights can serve as one role model for how to integrate risk management into a development program. Be like them (and not like Slotin).

Corrections: the Slotin incident involved a plutonium core, not uranium as previously stated here. Thanks to Andrew Layman for pointing this out.

This part in particular is where I think there’s a whole bunch of useful lessons for alignment to draw from the Wright brothers.

First things first: “They couldn’t have solved the control problem without actually building and testing a plane” is… kinda technically true, but misleading. What makes the Wright brothers such an interesting case study is that they had to solve the large majority of the problem (i.e. “get the large majority of the bits of optimization/information”) without building an airplane, precisely because it was very dangerous to test a plane without the ability to control it. Furthermore, they had to do it without reliable theory. And the Wright brothers are an excellent real-world case study in creating a successful design mostly without relying on either robust theory or trial-and-error on the airplane itself.

Instead of just iterating on an airplane, the Wright brothers relied on all sorts of models. They built kites. They studied birds. They built a wind tunnel. They tested pieces in isolation—e.g. collecting their own aerodynamic data. All that allowed them to figure out how to control an airplane, while needing relatively-few dangerous attempts to directly control the airplane. That’s where there’s lots of potentially-useful analogies to mine for AI. What would be the equivalent of a wind tunnel, for AI control? Or the equivalent of a kite? How did the Wright brothers get their bits of information other than direct tests of airplanes, and what would analogies of those methods look like?

The big difference between AI and these technologies is that we’re worried about adversarial behavior by the AI.

A more direct analogy would be if Wright & co had been worried that airplanes might “decide” to fly safely until humanity had invented jet engines, then “decide” to crash them all at once. Nuclear bombs do have a direct analogy—a Dr. Strangelove-type scenario in which, after developing an armamentarium in a ostensibly carefully-controlled manner, some madman (or a defect in an automated launch system) triggers an all-out nuclear attack and ends the world.

This is the difficulty, I think. Tech developers naturally want to think in terms of a non-adversarial relationship with their technology. Maybe this is more familiar to biologists like myself than to people working in computer science. We’re often working with living things that can multiply, mutate and spread, and which we know don’t have our best interests in mind. If we achieve AGI, it will be a living in silico organism, and we don’t have a good ability to predict what it’s capable of because it will be unprecedented on the earth.

This is, to a certain extent, the drawing out of my true crux of a lot of a lot of my disagreements with the doomer view, especially on LW. I fundamentally suspect that the trajectory of AI has fundamentally been a story of a normal technology, and in particular I see 1 very important adversarial assumption both fail to materialize, which is evidence against it, given that absence of evidence is evidence of absence, and the assumption didn’t even buy us that much evidence, as it still isn’t predictive enough to be used for AI doom.

I’m talking about, of course, the essentially unbounded instrumental goals/powerseeking assumptions.

Indeed, it’s a pretty large crux, in that if I fundamentally believed that the adversarial framework was a useful model for AI safety, I’d be a lot more worried about AI doom today.

To put it another, this was hitting at one of my true rejections of a lot of the frameworks used often by doomers/decelerationists.

I love stories like this. It’s not immediately obvious to me how to translate them to AI—like what is the equivalent of what the Wright brother’s did for AI?—but I think hearing these are helpful to developing the mindset that will create the kinds of precautions necessary to work with AI safely.