Interpreting and Steering Features in Images

You can see the feature library here. We also have an intervention playground you can try.

Key Results

We can extract interpretable features from CLIP image embeddings.

We observe a diverse set of features, e.g. golden retrievers, there being two of something, image borders, nudity, and stylistic effects.

Editing features allows for conceptual and semantic changes while maintaining generation quality and coherency.

We devise a way to preview the causal impact of a feature, and show that many features have an explanation that is consistent with what they activate for and what they cause.

Many feature edits can be stacked to perform task-relevant operations, like transferring a subject, mixing in a specific property of a style, or removing something.

Interactive demo

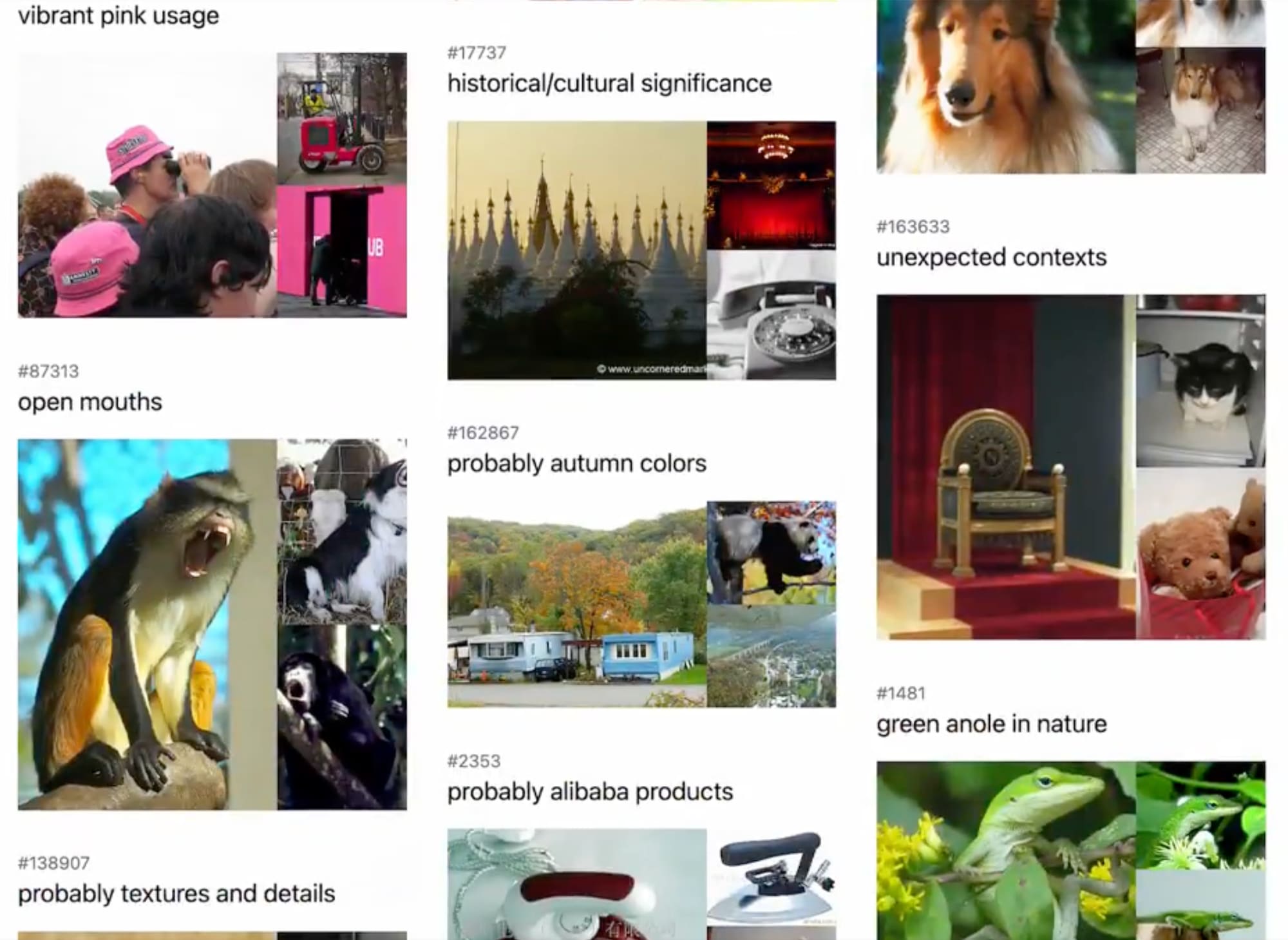

Visit the feature library of over ~50k features to explore the features we find.

Our main result, the intervention playground, is now available for public use.

The weights are open source—here’s a notebook to try an intervention.

Introduction

We trained a sparse autoencoder on 1.4 million image embeddings to find monosemantic features. In our run, we found 35% (58k) of the total of 163k features were alive, which is that they have a non-zero activation for any of the images in our dataset.

We found that many features map to human interpretable concepts, like dog breeds, times of day, and emotions. Some express quantities, human relationships, and political activity. Others express more sophisticated relationships like organizations, groups of people, and pairs.

Some features were also safety relevant.We found features for nudity, kink, and sickness and injury, which we won’t link here.

Steering Features

Previous work found similarly interpretable features, e.g. in CLIP-ViT. We expand upon their work by training an SAE in a domain that allows for easily testing interventions.

To test an explanation derived from describing the top activating images for a particular feature, we can intervene on an embedding and see if the generation (the decoded image) matches our hypothesis. We do this by steering the features of the image embedding and re-adding the reconstruction loss. We then use an open source diffusion model, Kadinsky 2.2, to diffuse an image back out conditional on this embedding.

Even though an image typically has many active features that appear to encode a similar concept, intervening on one feature with a much higher activation value still works and yields an output without noticeable degradation of quality.

We built an intervention playground where users could adjust the features of an image to test hypotheses, and later found that the steering worked so well that users could perform many meaningful tasks while maintaining an output that is comparably as coherent and high quality as the original.

For instance, the subject of one photo could be transferred to another. We could adjust the time of day, and the quantity of the subject. We could add entirely new features to images to sculpt and finely control them. We could pick two photos that had a semantic difference, and precisely transfer over the difference by transferring the features. We could also stack hundreds of edits together.

Qualitative tests with users showed that even relatively untrained users could learn to manipulate image features in meaningful directions. This was an exciting result, because it could suggest that feature space edits could be useful for setting inference time rules (e.g. banning some feature that the underlying model learned) or as user interface affordances (e.g. tweaking feature expression in output).

Discovering and Interpreting Features

In order to learn what each feature actually controls, we developed a feature encyclopedia where we see the top activations, coactivating features, and features similar in direction.

To quickly compare features, we also found it useful to apply the feature to a standard template to generate a reference photo for human comparison. We call this the feature expression icon, or just the icon for short. We include it as part of the human-interpretable reference to this feature.

From left to right: the template, and then the feature expressions of — being held, the subject being the American Robin, and there being three of something.

The icon helps clarify and isolate the feature intervention direction. We tested 200 randomly sampled icons, and found that 88% of the samples were rated by a human as clearly displaying the intervention in question. But there are some limitations to technique. For instance, the activation value we used (7.5x the max activation in the embeddings) did not express some features, which remained very close to the default template. These features did not tend to be meaningful to intervene on, and represented concepts like text or fine compression artifacts.

Above, we’ve shown the dimension reduced map of the top 1000 features that we found, and how they cluster into meaningful semantic categories. Many of the top activating features were about image borders, compression artifacts, and minute differences in light or shadow. Further down the list we see more semantically meaningful features, like dogs, objects, background scenes, relationships between objects, and styles. We haven’t yet begun to explore the full depth of the 58k learned features, and so have released the feature library publically to encourage more interpretation.

Autointerpretation Labels

We created auto-interpreted labels by using Gemini to come up with a hypothesis given (a) some top activating samples, and (b) the standard template and the feature expression icon. The feature expression icon helped clarify otherwise confusing or ambiguous features. Of these labels, the human reviewer rated 63% (in a random sample of 200) as a good or great explanation.[1]

There is some low hanging fruit to improve this metric. We plan to improve label precision by sampling from features that have a similar direction and asking the model to discriminate between them. We can also perform simple accuracy tests by using a LLM to predict which images conform to the label, and measuring the rate of false positives.

Training Details

We used the open-source image encoder, ViT-bigG-14-laion2B-39B-b160k, to embed 1.4 million images from the ImageNet dataset. The resulting embeddings were 1280-dimensional.

We trained the SAE without using any neuron resampling and with untied decoder and encoder weights. We selected a SAE dictionary size of 163,840, which is a 128x expansion factor on the CLIP embedding dimension. However, our run resulted in approximately 105k dead features, suggesting that the model might be undertrained on the given number of image embeddings. We plan to conduct a hyperparameter search in future work to address this issue.

The SAE reconstruction explained 88% of the variance in the embeddings. We observed that the reconstruction process often led to a loss of some aesthetic information, resulting in decreased generation quality. To mitigate this, we found the residual information lost during reconstruction by finding the difference between the reconstructed and original embedding, and added this residual back in when performing an intervention.

For image generation (decoding), we utilized Kadinsky 2.2[2], a pretrained open-source diffusion model.

Future Work

We’re planning to continue this work on interpreting CLIP by:

Performing a hyperparameter sweep to discover the upper limits of reconstruction on image embeddings

Trying various strategies to improve the autointerpretation, e.g. by adding a verification step to label generation, and finding improvements to feature expression icons.

Train on significantly more data, including from LAION to extract more features about style and beauty.

Additional work on user interface and steerability would be helpful to see if we can gain more knowledge about how to help users steer generations in ways meaningful to them. Logit lens approaches on CLIP[3] could help localize why a feature is firing and might suggest better ways to modify an image to, e.g. remove or change something.

You can replicate an image steering intervention in this notebook.

Thanks to David M, Matt S, Linus L for great conversations on the research direction, and Max K, Mehran J, and many others, for design feedback and support.

Please cite this post as “Daujotas, G. (2024, June 20). Interpreting and Steering Features in Images. https://www.lesswrong.com/posts/Quqekpvx8BGMMcaem/interpreting-and-steering-features-in-images″

- ^

We didn’t see a large difference between various LLM providers, and so chose Gemini because it had greater uptime.

- ^

We chose Kadinsky as it was reasonably popular and open source. We expect this to transfer over to other CLIP guided diffusion models. https://github.com/ai-forever/Kandinsky-2

- ^

See ViT-Prisma by Sonia Joseph. https://github.com/soniajoseph/ViT-Prisma

Really cool work! Some general thoughts from training SAEs on LLMs that might not carry over:

L0 vs reconstruction

Your variance explained metric only makes sense for a given sparsity (ie L0). For example, you can get near perfect variance explained if you set your sparsity term to 0, but then the SAE is like an identity function & the features aren’t meaningful.

In my initial experiments, we swept over various L0s & checked which ones looked most monosemantic (ie had the same meaning across all activations), when sampling 30 features. We found 20-100 being a good L0 for LLMs of d_model=512. I’m curious how this translates to text.

Dead Features

I believe you can do Leo Gao’s topk w/ tied-initialization scheme to (1) tightly control your L0 & (2) have less dead features w/o doing ghost grads. Gao et al notice that this tied-init (ie setting encoder to decoder transposed initially) led to little dead features for small models, which your d_model of 1k is kind of small.

Nora has an implementation here (though you’d need to integrate w/ your vision models)

Icon Explanation

I didn’t really understand your icon image… Oh I get it. The template is the far left & the other three are three different features that you clamp to a large value generating from that template. Cool idea! (maybe separate the 3 features from the template or do a 1x3 matrix-table for clarity?)

Other Interp Ideas

Feature ablation—Take the top-activating images for a feature, ablate the feature ( by reconstructing w/o that feature & do the same residual add-in thing you found useful), and see the resulting generation.

Relevant Inputs—What pixels or something are causally responsible for activating this feature? There’s gotta be some literature on input-causal attribution on the output class in image models, then you’re just applying that to your features instead.

This really sounds amazing. Did you patch the features from one image to another specifically? Details on how you transferred a subject from one to the other would be appreciated.

These are all great ideas, thanks Logan! Investigating different values of L0 seems especially promising.

I’ve been told by Gabriel Goh that the CLIP neurons are apparently surprisingly sparse, so the neuron baseline might be stronger relative to SAEs than in e.g LLMs. (This is also why the Multimodal Neurons work was possible without SAEs)

Pretty fun! The feature browsing ui did slightly irritate after a while, but hey, it’s a research project. I think this is about 15 to 20 features, each of which I added at high magnitude to test, then turned down to background levels. Was hoping for something significantly more surreal, but eventually got bored of trying to browse features. Would be cool to have a range of features of similarity ranging from high to low in the browse view.

As is typical for current work, the automatic feature labeling and previewing seems to leave something to be desired in terms of covering the variety of meanings of a feature, and Gemini’s views on some of the features were… well, I found one feature that seemed to be casual photos of trans ladies in nice dresses, and Gemini had labeled it “Harmful stereotypes”. Thanks google, sigh. Anyway, I might suggest Claude next time; I’ve been pretty happy with Claude’s understanding of sensitive issues, as well as general skill. Though of course none of these AIs really are properly there yet in terms of not being kind of dorks about this stuff.

If this were steering, eg, a diffusion planning model controlling a high strength robot, it wouldn’t be there yet in terms of getting enough nines of safety; but being able to control the features directly, bypassing words, is a pretty fun capability, definitely fun to see it for images. The biggest issue I notice is that trying to control with features has a tendency to rapidly push you way out of distribution and break the model’s skill. Of course I also have my usual reservations about anything being improved without being part of a backchained plan. I wouldn’t call this mutation-robust alignment or ambitious value learning or anything, but it’s pretty fun to play with.

Thanks for trying it out!

A section I was writing but then removed due to time constraints involved setting inference time rules. I found that they can actually work pretty well and you could ban features entirely or ban features conditionally and some other feature being present. For instance, to not show natural disasters when some subjects are in the image. But I thought this was pretty obvious, so I got bored of it.

Definitely right on the Gemini point!

Awesome work! I couldn’t find anywhere that specified how sparse your SAE is (eg your l0). I would be interested to hear what l0 you got!