I’m trying out “asteroid mindset”

This is a personal note, and I'm not advocating that anyone do the same. I'm honestly not really sure why I'm writing it. I think I just want to talk about it in a place where other people might feel similarly or have useful things to say.

Like many others, I've felt that the past few months of AI advancement (and, more generally, the period since GPT-3) mark something of a turning point. I have always been sold on the arguments for AI x-risk, but my timelines were always very wide. It always seemed plausible to me that we were one algorithm away from doom, and it also seemed entirely plausible that I would die of old age before AGI happened.

My timelines are no longer wide.

I should make it clear to any readers that I am under no illusion that I am a particularly notable or impactful person. This is emphatically not a post telling you that you haven't worked hard enough. I am the last person who should chide anyone for their impact.

My biggest problem has always been productivity/focus/motivation et cetera. I have a pretty unusual psychology, and likely a pretty unrelatable one. I have always been “pathologically content”, happy and satisfied by default. This has its advantages, but a disadvantage is that I’m rarely motivated to change the world around me.

But in the course of paying attention to my own drives, I have repeatedly observed that I am reliably motivated at the pointy part of hyperbolic discounting. My favorite example is spilling a glass of water. I absolutely never react with *sigh*, I guess I should go clean that up… Instead, I'm just up, getting paper towels. There's no hesitation to even overcome. Similarly, when I've been in a theatre production, or when I've worked at a busy cafe, there's no attempt to save energy or savor the moment: I just do the thing. Again, I'm not saying that I'm any good at the job; otherwise I'd be doing ops right now. But the drive is reliable. I always finish my taxes on time. I always find a job before my money runs out.

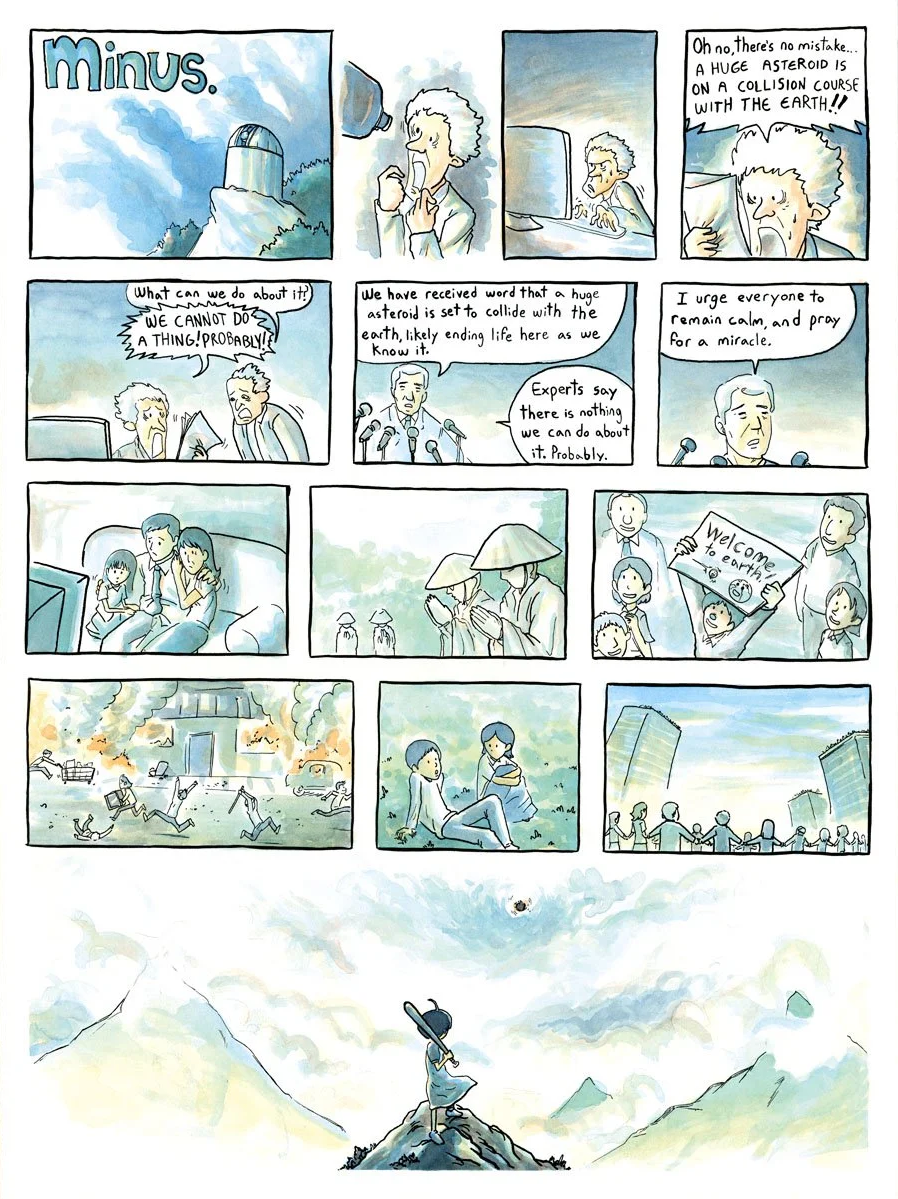

A couple of years ago I picked up the book Seveneves. (This isn't really a spoiler, because it's the premise of the book.) In it, the moon has broken into pieces, and the debris will eventually rain onto the Earth, raising the atmospheric temperature to hundreds of degrees and rendering the entire planet profoundly uninhabitable. The characters calculate that there are two years remaining. This is, of course, a variation on the classic scenario of an asteroid headed toward Earth.

My reaction to this was—well, to put it politely, something like, “Christ almighty! What an awful scenario! What a despondent and heart-rending situation to describe! How can anyone bear to read this story?” This isn’t the first time I’ve contemplated the destruction of earth, but reading a hundred pages of fiction on it makes it feel more real.

I feel sure that if I were in that world, the hyperbolic discounting would kick into overdrive, and I would burn everything I had to do something about the situation. I don't know that I would be very useful, or how long I would last before burning out, but it feels extremely clear that the thing to do is to clean up that spill, to put that fire out. It doesn't feel like there are other options. The only purpose of considering different actions is to figure out which one best fixes the crisis.

As a reader of LessWrong you might be thinking, aren’t we in that world already? Hasn’t that been evident since the original arguments for existential risk? And like, yeah, kinda, except for the part about hyperbolic discounting. It needs to be really in my face before it’s like Seveneves, and there has always been way too much fog obscuring the probabilities and the timelines.

And, well, it feels like that fog has substantially lifted. It feels like I have access to this state of mind now. Not that I regularly embody it, or that I fully believe it to be accurate, but that I can “dip” into it with some amount of effort (whereas before, it was always a hypothetical).

It reminds me of a time when I noticed that my right lymph node was quite swollen, but my left one wasn't at all. I did a quick Google search, and it seemed pretty unusual for them to be asymmetrical, and one of the possible causes was cancer. And yes, I know, it's a common joke that people will check their unremarkable symptoms on WebMD and then suddenly feel terrified that they have some terminal illness that's listed there. I didn't confidently believe I had cancer. I didn't even 2% believe that I had cancer. After a few minutes I remembered that I had recently gotten my ears pierced, and the right piercing was swelling more than the left one. But something about the way that went gave me access to this state of mind. It made my mind slip into recognizing that, yes, it is possible: you could really, truly die, and there's nihil supernum to save you, and this is what that would feel like.

Whether or not you will die is in the territory. But the feeling of believing you will die is in the map, as is the feeling of not believing you are going to die. You may feel fine when you are in real danger, and you may feel mortified when you are perfectly safe.

For whatever reason, it occurred to me the other day that we might just be in the asteroid scenario now. If an asteroid were really coming, we would probably see it a ways out, and there would probably be a notable period of time where we weren't sure exactly how big it was or what its trajectory was. I'm not extremely sure when AGI will come, or how hard it will be to make it go well. But any subjective uncertainty that I do currently have should map pretty well onto a possible asteroid scenario. There is definitely an asteroid coming. It seems really, really likely to hit Earth. It's not obviously so big that it's going to melt the Earth, but it is entirely possible, and it's also entirely possible that it's a size that will kill us if we don't divert it, but still small enough to divert.

So, what would I actually do, right now, if that asteroid were coming?

If people thought AGI was likely to eliminate them but leave most others unharmed, there would be a massive outcry for more research funding.

Nice post! I’m curious, what are you planning to do?

I’m trying out independent AI alignment research.

Awesome!

Our ancient ancestors dipped in and out of this state all the time, particularly in warrior cultures. I imagine berserkers (in fact a pan-Indo-European phenomenon, not just a Viking one) used various techniques to intensify this feeling into a blind rage to help them fight. You also see it in Bushido, where it is suggested that the samurai meditate on his own death every day until he can live like a man already dead. Having tried it, I find it creates a certain focus, but it doesn't provide any decision-making aid, and that's what I primarily lack, so the focus is just stressful for me without giving me any real value.