A shortcoming of concrete demonstrations as AGI risk advocacy

Given any particular concrete demonstration of an AI algorithm doing seemingly-bad-thing X, a knowledgeable AGI optimist can look closely at the code, training data, etc., and say:

“Well of course, it’s obvious that the AI algorithm would do X under these circumstances. Duh. Why am I supposed to find that scary?”

And yes, it is true that, if you have enough of a knack for reasoning about algorithms, then you will never ever be surprised by any demonstration of any behavior from any algorithm. Algorithms ultimately just follow their source code.

(Indeed, even if you don’t have much of a knack for algorithms, such that you might not have correctly predicted what the algorithm did in advance, it will nevertheless feel obvious in hindsight!)

From the AGI optimist’s perspective: If I’m not scared of AGI extinction right now, and nothing surprising has happened, then I won’t feel like I should change my beliefs. So, this is a general problem with concrete demonstrations as AGI risk advocacy:

“Did something terribly bad actually happen, like people were killed?”

“Well, no…”

“Did some algorithm do the exact thing that one would expect it to do, based on squinting at the source code and carefully reasoning about its consequences?”

“Well, yes…”

“OK then! So you’re telling me: Nothing bad happened, and nothing surprising happened. So why should I change my attitude?”

I already see people deploying this kind of argument, and I expect it to continue into future demos, independent of whether or not the demo is actually being used to make a valid point.

I think a good response from the AGI pessimist would be something like:

I claim that there’s a valid, robust argument that AGI extinction is a big risk. And I claim that different people disagree with this argument for different reasons:

- Some are over-optimistic based on mistaken assumptions about the behavior of algorithms;
- Some are over-optimistic based on mistaken assumptions about the behavior of humans;
- Some are over-optimistic based on mistaken assumptions about the behavior of human institutions;
- Many are just not thinking rigorously about this topic and putting all the pieces together; etc.

If you personally are really skilled and proactive at reasoning about the behavior of algorithms, then that’s great, and you can pat yourself on the back for learning nothing whatsoever from this particular demo—assuming that’s not just your hindsight bias talking. I still think you’re wrong about AGI extinction risk, but your mistake is probably related to the 2nd and/or 3rd and/or 4th bullet point, not the first bullet point. And we can talk about that. But meanwhile, other people might learn something new and surprising-to-them from this demo. And this demo is targeted at them, not you.

Ideally, this would be backed up with real quotes from actual people making claims that are disproven by this demo.

For people making related points, see: Sakana, Strawberry, and Scary AI; and Would catching your AIs trying to escape convince AI developers to slow down or undeploy?

Also:

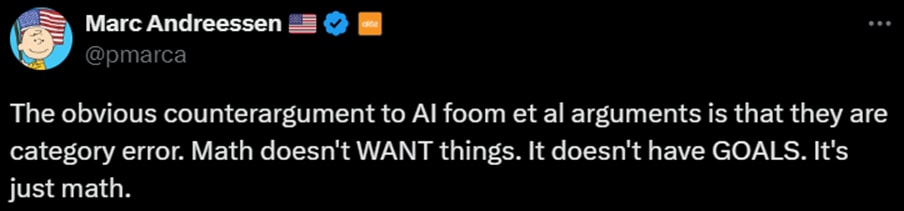

I think this comment is tapping into an intuition that assigns profound importance to the fact that, no matter what an AI algorithm is doing, if you zoom in, you’ll find that it’s just mechanically following the steps of an algorithm, one after the other. Nothing surprising or magic. In reality, this fact is not a counterargument to anything at all, but rather a triviality that is equally true of human brains, and would be equally true of an invading extraterrestrial army. More discussion in §3.3.6 here.

I’m usually not a fan of tone-policing, but in this case, I feel motivated to argue that this is more effective if you drop the word “delusional.” The rhetorical function of saying “this demo is targeted at them, not you” is to reassure the optimist that pessimists are committed to honestly making their case point by point, rather than relying on social proof and intimidation tactics to push a predetermined “AI == doom” conclusion. That’s less credible if you imply that you have warrant to dismiss all claims of the form “Humans and institutions will make reasonable decisions about how to handle AI development and deployment because X” as delusional regardless of the specific X.

Hmm, I wasn’t thinking about that because that sentence was nominally in someone else’s voice. But you’re right. I reworded, thanks.

There hasn’t been a large-scale nuclear war; should we therefore be unafraid of proliferation? More specific to this argument, I don’t know any professional software developers who haven’t had the experience of being VERY surprised by a supposedly well-known algorithm.

I’m not sure what argument you think I’m making.

In a perfect world, I think people would not need any concrete demonstration to be very concerned about AGI x-risk. Alan Turing and John von Neumann were very concerned about AGI x-risk, and they obviously didn’t need any concrete demonstration for that. And I think their reasons for concern were sound at the time, and remain sound today.

But many people today are skeptical that AGI poses any x-risk. (That’s unfortunate from my perspective, because I think they’re wrong.) The point of this post is to suggest that we AGI-concerned people might not be able to win over those skeptics via concrete demonstrations of AI doing scary (or scary-adjacent) things, either now or in the future—or at least, not all of the skeptics. It’s probably worth trying anyway—it might help for some of the skeptics. Regardless, understanding the exact failure modes is helpful.

I expect that the second-order effects of letting people gain political power by crisis-mongering about risks, when there is no demonstration or empirical evidence, would ruin the initially perfect world pretty much immediately, even assuming that AI risk is high and real. This would allow anyone to make claims about some arbitrary risk and get rewarded for it even if the claim isn’t true, and nothing in this incentive structure systematically favors true claims about risk over false ones.

Indeed, I think it would be a worse world than now, since it supercharges the already existing incentives for the news industry and political groups to crisis-monger.

Also, while Alan Turing and John von Neumann were great computer scientists, I don’t have much reason to elevate their AI risk opinions over anyone else’s on this topic, and their connection to AI is at best very indirect.

In a perfect world, everyone would be concerned about the risks for which there are good reasons to be concerned, and everyone would be unconcerned about the risks for which there are good reasons to be unconcerned, because everyone would be doing object-level checks of everyone else’s object-level claims and arguments, and coming to the correct conclusion about whether those claims and arguments are valid.

And those valid claims and arguments might involve demonstrations and empirical evidence, but also might be more indirect.

See also: It is conceivable for something to be an x-risk without there being any nice clean quantitative empirically-validated mathematical model proving that it is.

I do think Turing and von Neumann reached correct object-level conclusions via sound reasoning, but obviously I’m stating that belief without justifying it.

It’s true that in a perfect world, where everyone does object-level checks of everyone else’s object-level claims and arguments, everyone would be concerned about the risks for which there are good reasons to be concerned, and unconcerned about the risks for which there are good reasons to be unconcerned. So I shouldn’t have said that the perfect world would be ruined by that. But I consider this a fabricated option, because of how hard it is for average people to validate complex arguments, combined with the enormous economic benefits of specializing in a field. So I’m much more focused on what incentives this creates for a real society, given our limitations.

To address this part:

I actually agree with this, and I agree with the claim that, as a matter of pure possibility, an existential risk can happen without leaving empirical evidence.

I have 2 things to say here:

1. I am more optimistic that we can get such empirical evidence for at least the most important parts of the AI risk case, like deceptive alignment. Here’s one reason, offered in a comment:

https://www.lesswrong.com/posts/YTZAmJKydD5hdRSeG/?commentId=T57EvmkcDmksAc4P4

2. From an expected-value perspective, a problem can be very important and still have zero tractability. I think many of the worlds where we get zero or near-zero evidence on AI risk are also worlds where the problem is so intractable as to be effectively unsolvable, so the expected value of working on the problem in those worlds is also close to zero.

This also applies to the alien scenario: while, from an epistemic perspective, it is worth considering the hypothesis that the aliens are unfriendly, from a decision/expected-value perspective almost all of the value lies in the hypothesis that the aliens are friendly, since we cannot survive alien attacks except in very specific scenarios.

I think the key point of this post is precisely the question: “Is there any such demonstration, short of the actual very bad thing happening in a real setting, that people who discount these as serious risks would accept as empirical evidence worth updating on?”

I’m pretty sure that the right sort of demo could convince even a quite skeptical viewer.

Imagine going back in time and explaining chemistry to someone, then showing them blueprints for a rifle.

Imagine this person scoffs at the idea of a gun. You pull out a handgun and show it to them, and they laugh at the idea that this small blunt piece of metal could really hurt them from a distance. They understand the chemistry and the design that you showed them, but it just doesn’t click for them on an intuitive level.

Demo time. You take them out to an open field and hand them an apple. “Hold this balanced on your outstretched hand,” you say. You walk a ways away and shoot the apple out of their hand. Suddenly the reality sinks in.

So, I think maybe the demos that are failing to convince people are weak demos. I bet the Trinity test could’ve convinced some nuclear weapon skeptics.

Math doesn’t have GOALS. But we constantly give goals to our AIs.

If you use AI every day and are excited about its ability to accomplish useful things, it’s hard to keep the dangers in mind. I see that in myself.

But that doesn’t mean the dangers are not there.